Multi-User Drone Flight Training in Mixed Reality

Abstract

1. Introduction

- We propose a remote training system for first-person view (FPV) drone flying. The system comprises three user roles: the drone pilot, the remote expert, and the projector user. We define each role and describe how the roles are configured.

- We introduce a virtual environment configured specifically for drone flight, together with a user interface designed around the role of the remote trainer.

- We present user interaction and remote communication methods suited to remote FPV drone flight training, describe their implementation, and discuss the results.

- We present a method for configuring the virtual flight environment using an ultra-wideband (UWB) wireless communication sensor. Because the drone pilot is physically present in the actual flight environment, this method lets the pilot configure the environment as intended. Configuration therefore becomes two-way, rather than one-way by the remote expert alone.
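The UWB-based configuration in the last contribution depends on knowing where a sensor sits in the flight space. The following is a minimal sketch of one common way to obtain such a position, not the authors' method: it assumes fixed anchors at known 2D floor coordinates and a tag whose distance to each anchor has been measured. The function name `estimate_tag_position`, the anchor layout, and the linearized least-squares estimator are all illustrative assumptions.

```python
import math


def estimate_tag_position(anchors, ranges):
    """Estimate a 2D tag position from ranges to three or more fixed anchors.

    Each range defines a circle around its anchor. Subtracting the first
    circle equation from the others gives linear equations in (x, y), which
    are solved in the least-squares sense through the 2x2 normal equations.
    """
    if len(anchors) < 3 or len(anchors) != len(ranges):
        raise ValueError("need at least three anchors and one range per anchor")

    (x0, y0), d0 = anchors[0], ranges[0]
    s_xx = s_xy = s_yy = t_x = t_y = 0.0
    for (xi, yi), di in zip(anchors[1:], ranges[1:]):
        # Row of the linear system: ax * x + ay * y = b
        ax = 2.0 * (xi - x0)
        ay = 2.0 * (yi - y0)
        b = d0**2 - di**2 + xi**2 - x0**2 + yi**2 - y0**2
        s_xx += ax * ax
        s_xy += ax * ay
        s_yy += ay * ay
        t_x += ax * b
        t_y += ay * b

    det = s_xx * s_yy - s_xy * s_xy
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is not unique")

    # Cramer's rule on the normal equations.
    x = (t_x * s_yy - t_y * s_xy) / det
    y = (s_xx * t_y - s_xy * t_x) / det
    return x, y


# Hypothetical layout: four anchors at the corners of a 4 m x 3 m area.
anchors = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0), (4.0, 3.0)]
true_position = (1.0, 2.0)
ranges = [math.dist(true_position, a) for a in anchors]
estimate = estimate_tag_position(anchors, ranges)
```

With noise-free ranges the estimate coincides with the true position up to floating-point error. Real UWB ranges are noisy, so a practical system would typically filter the measurements or refine the estimate with an iterative optimizer.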

2. Related Work

2.1. Drones and Virtual/Mixed Reality

2.2. Remote Collaboration and Communication between Multiple Users in Virtual/Mixed Reality

2.3. User Interface in Virtual/Mixed Reality

2.4. Ultra-Wideband Wireless Communication Sensor and Position Estimation Method

3. Remote Training System for Drone Flight in Mixed Reality

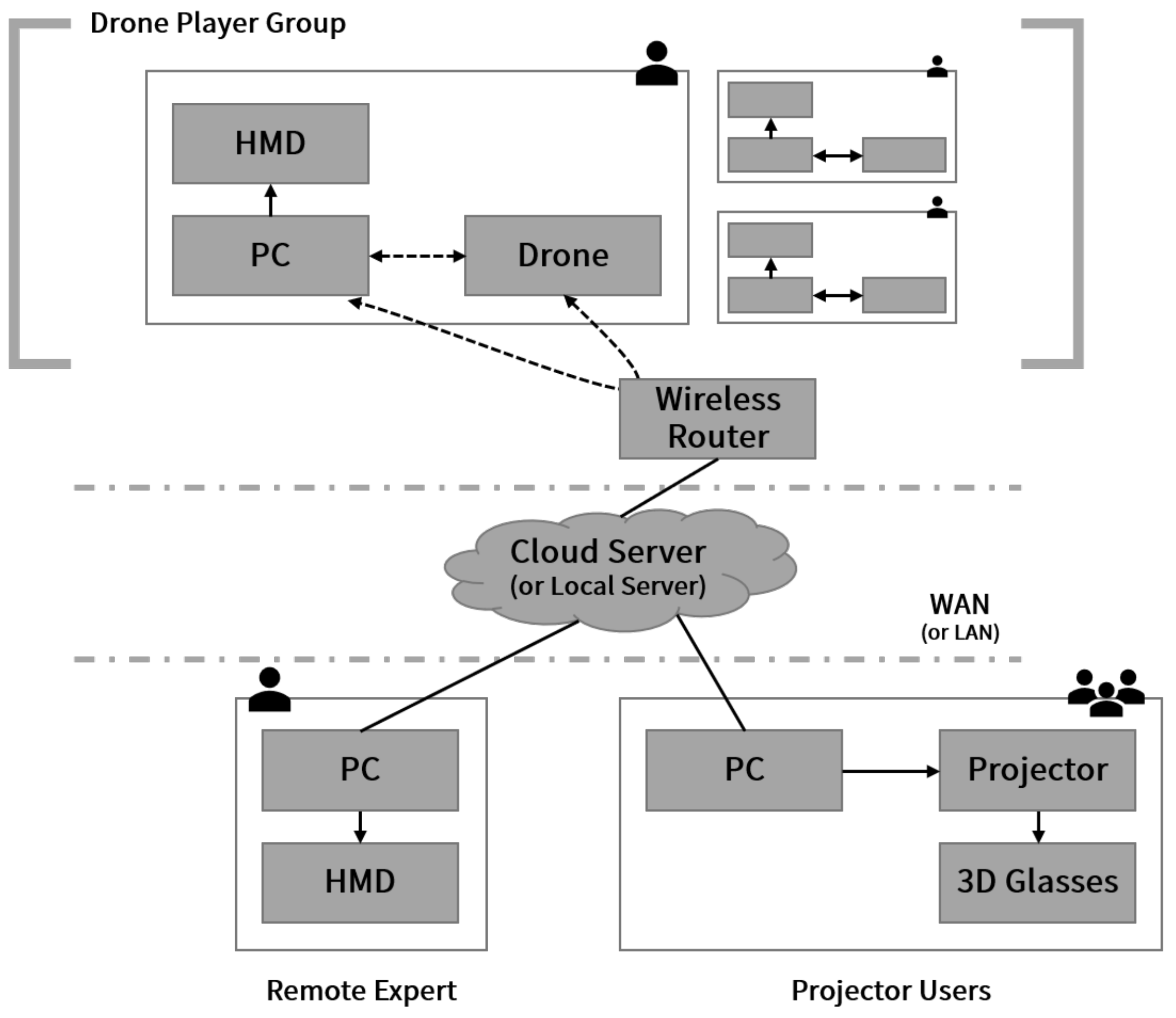

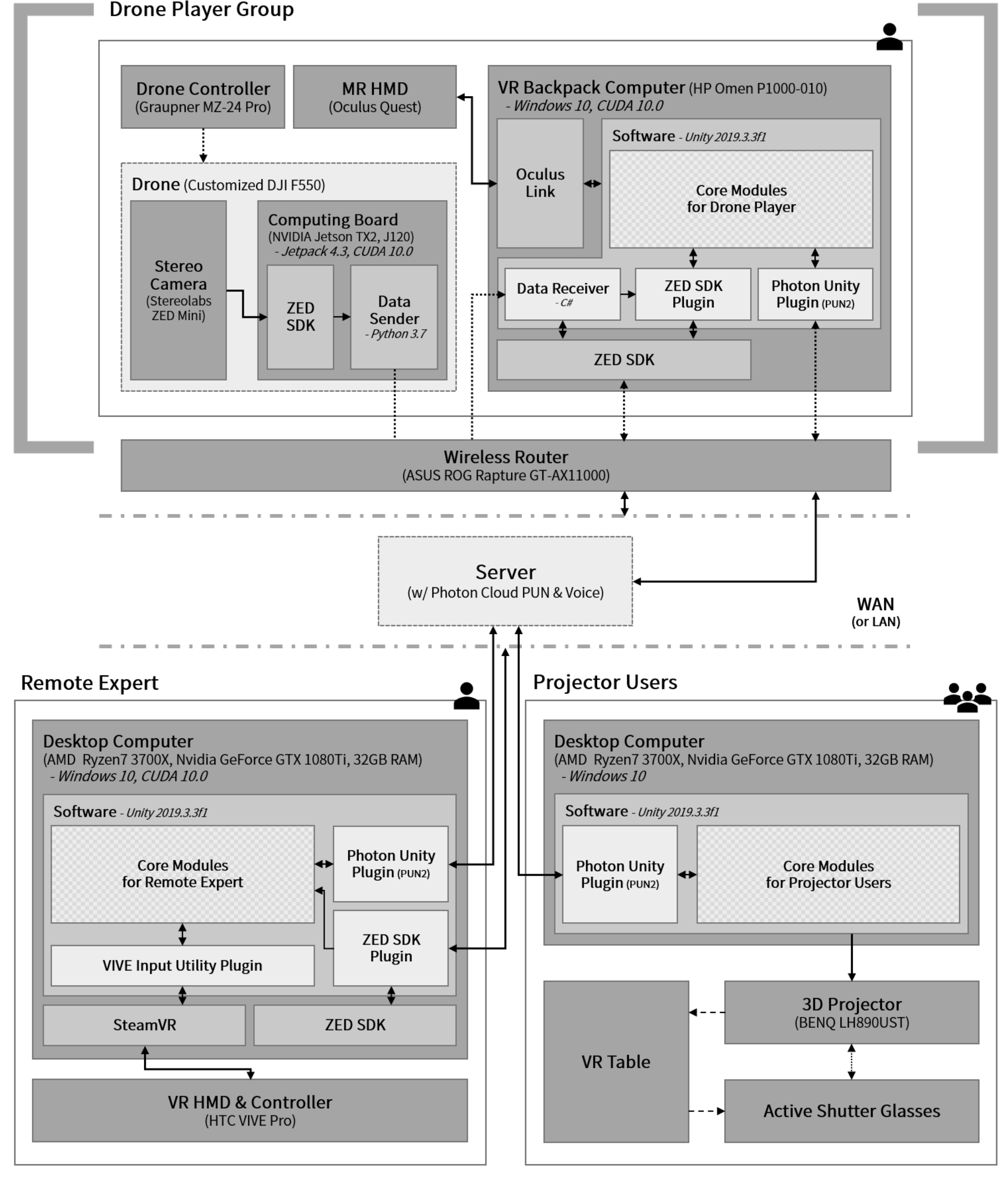

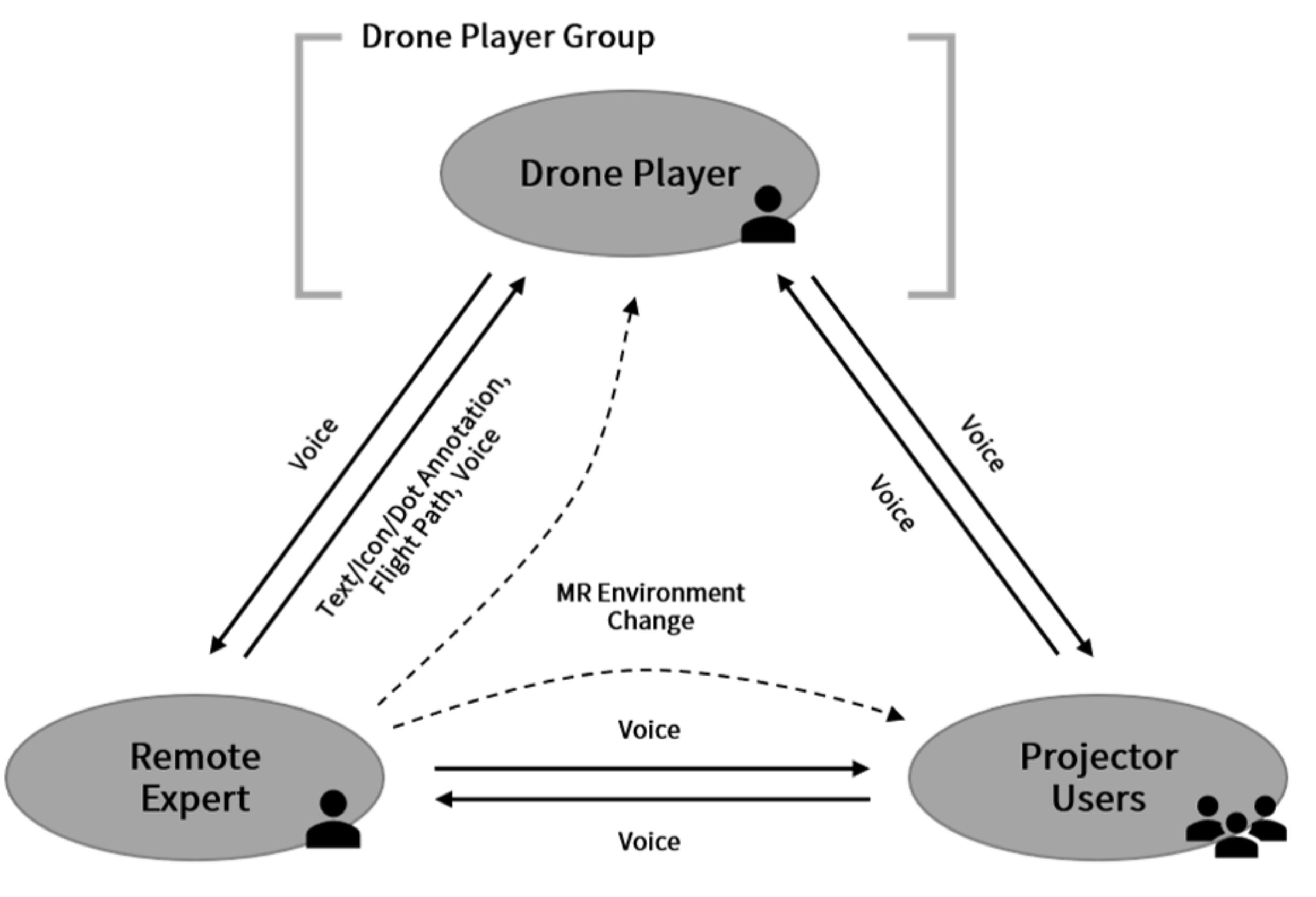

3.1. System Structure and Components

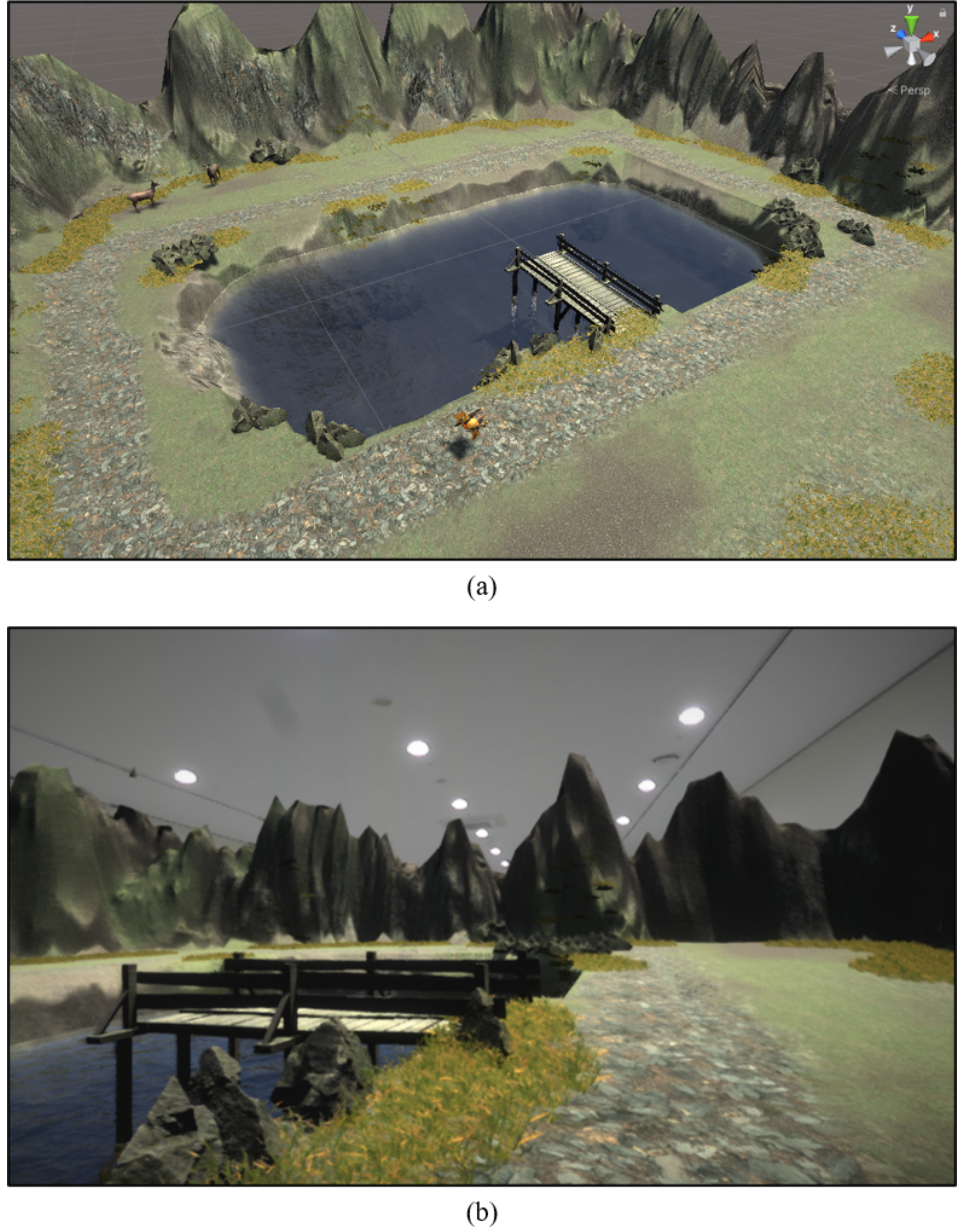

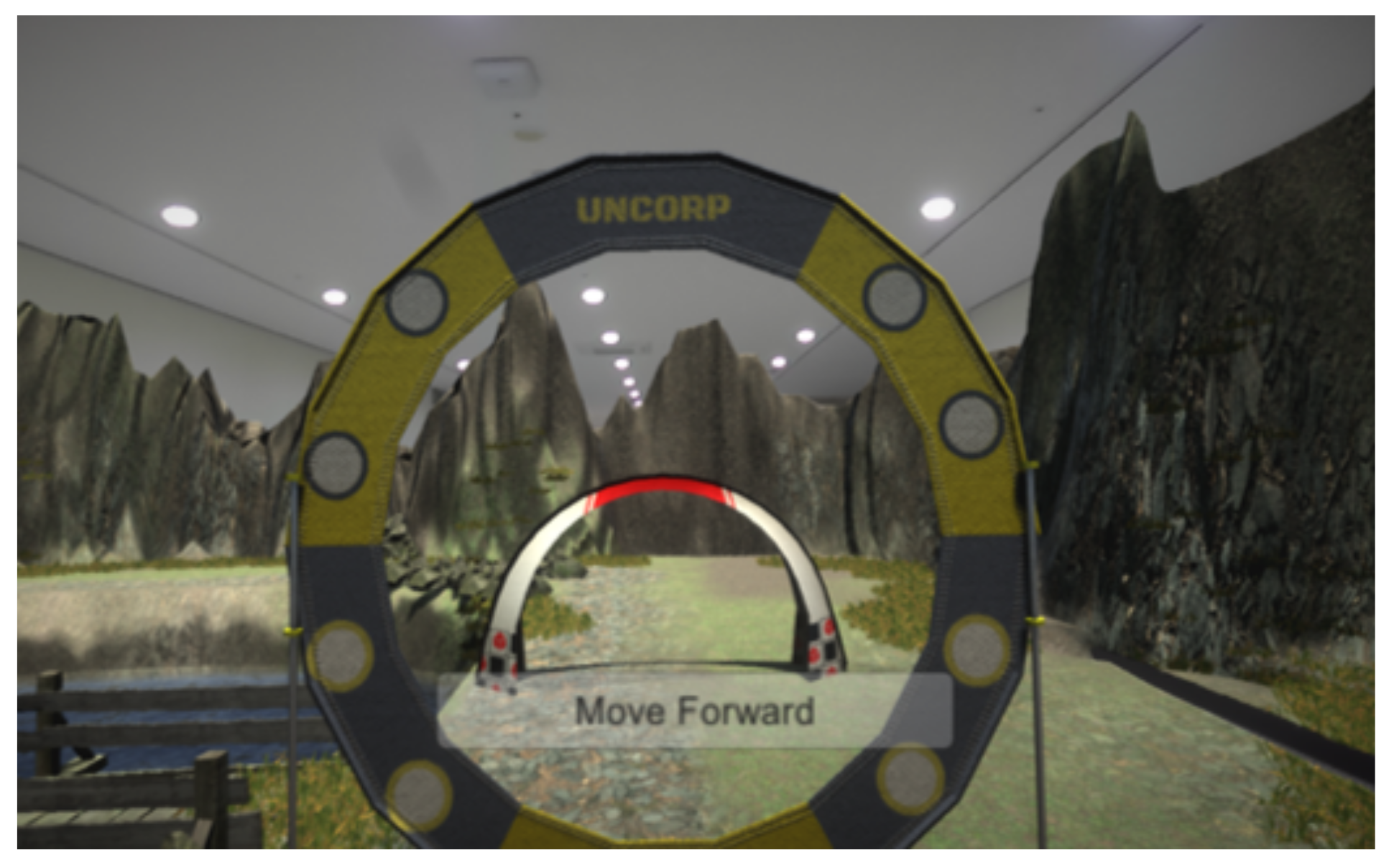

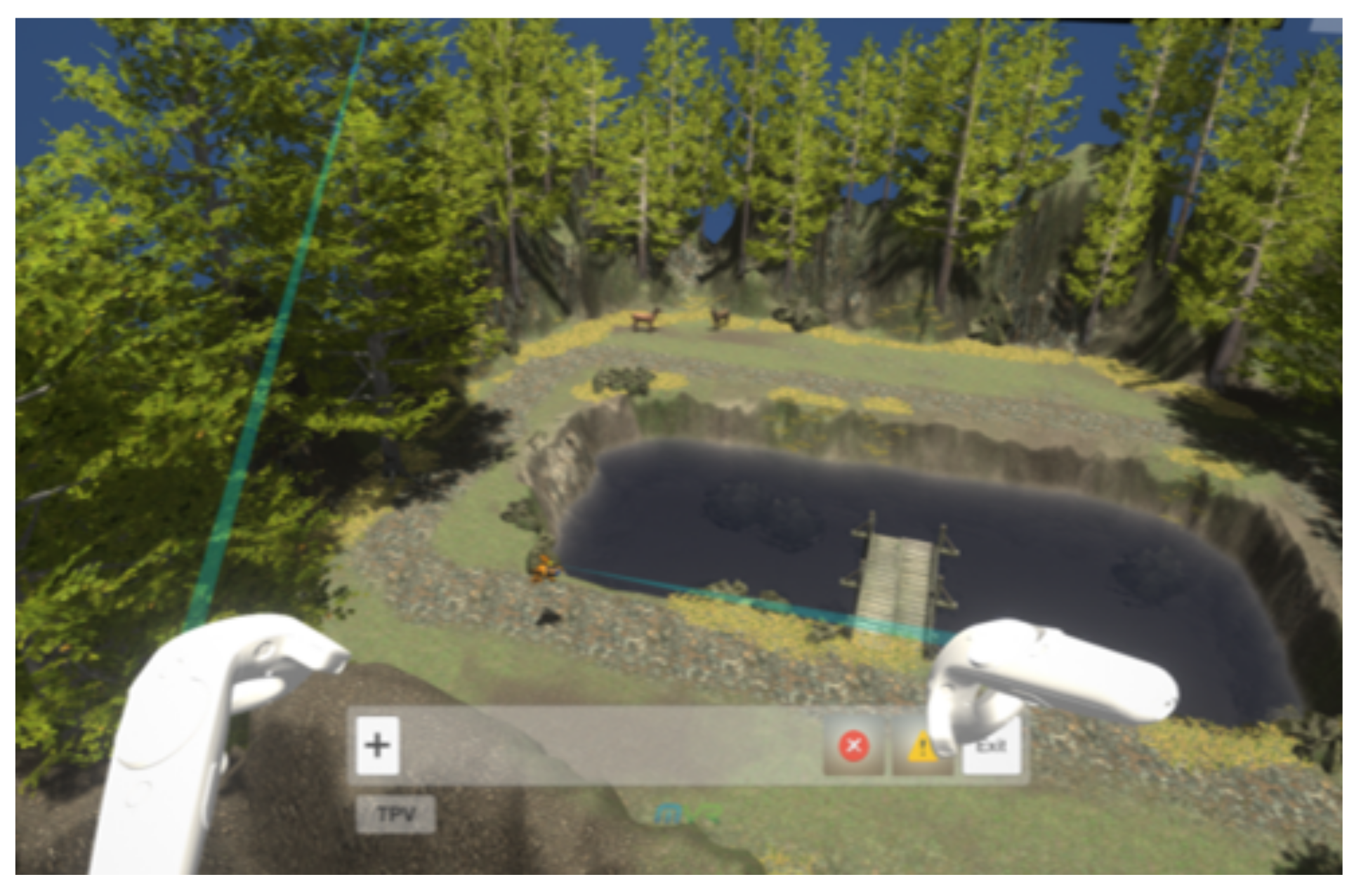

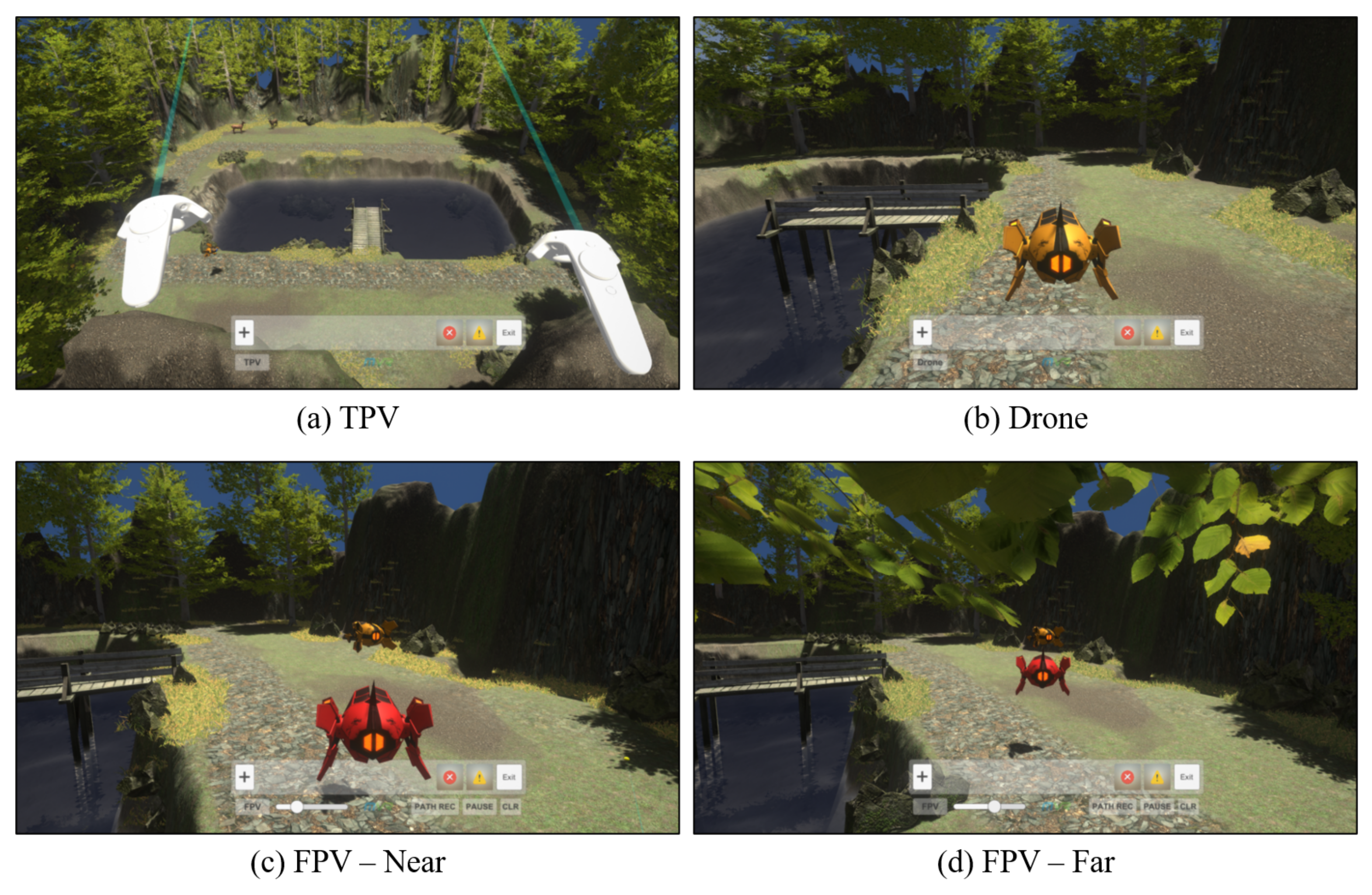

3.2. Design of the Virtual Environment Suitable for Remote Drone Flight Training

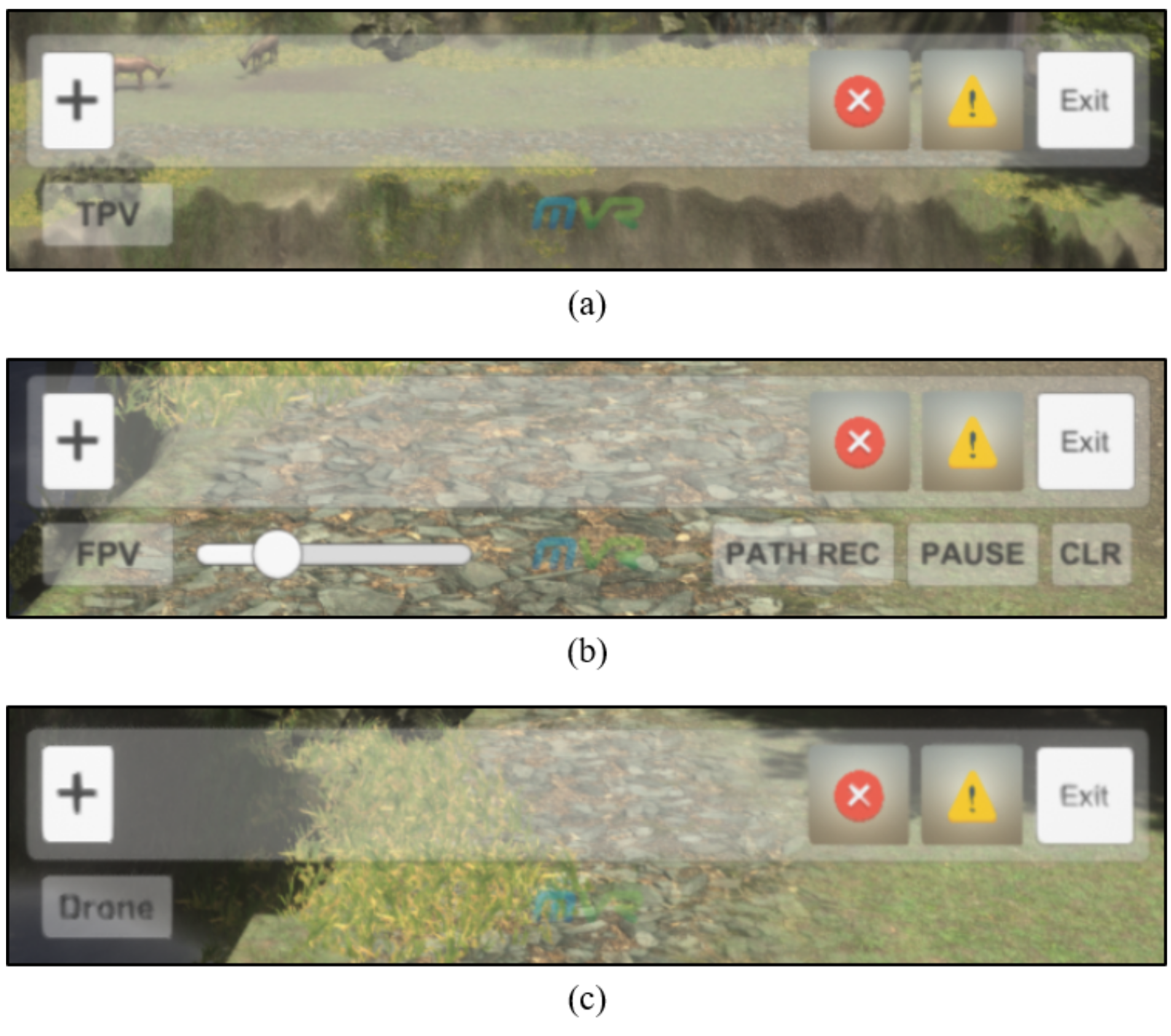

3.3. User Interface Configuration

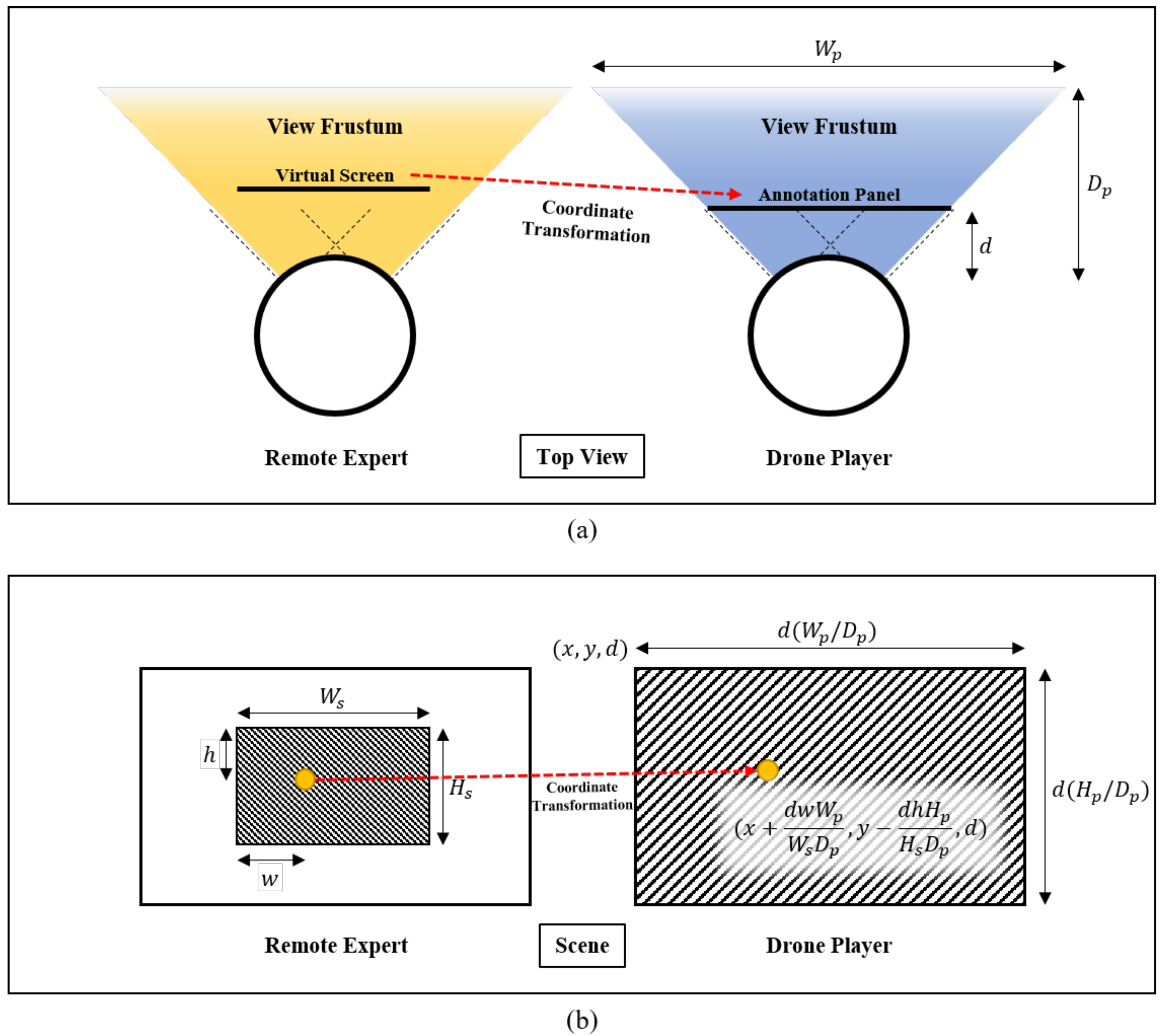

3.4. User Interactions and Remote Communication

| Step | Algorithm 1 Training scenario configuration using extension tools |
|---|---|
| 1: | { } ▹ scenario list |
| 2: | for each frame do |
| 3: | , getUserInput() |
| 4: | if input then |
| 5: | insert(, ) |
| 6: | if obstacles then |
| 7: | initializeObstacle(e) |
| 8: | setMapping(e, o) |
| 9: | syncObstacle(o) |
| 10: | else if getLength() = 1 then |
| 11: | sendAnnotation(e) |
| 12: | end if |
| 13: | end if |
| 14: | if input then |
| 15: | if obstacles then |
| 16: | getMapping() |
| 17: | destroyObstacle(o) |
| 18: | end if |
| 19: | remove(, ) |
| 20: | if getLength() > 0 then |
| 21: | getFirstElem() |
| 22: | if obstacles then |
| 23: | sendAnnotation(e) |
| 24: | end if |
| 25: | else |
| 26: | clear() |
| 27: | end if |
| 28: | end if |
| 29: | end for |
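Several symbols in Algorithm 1 did not survive extraction, so the following Python sketch is one possible reading of it rather than the authors' implementation. It assumes that the two `if input` branches correspond to "add" and "remove" actions, that `e` is an element placed with an extension tool and `o` is the obstacle object mapped to it, and that the second annotation check applies to non-obstacle elements. The `Element` and `ScenarioConfigurator` names are hypothetical, and network calls are replaced by a `sent` log.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Element:
    """One scenario element placed with an extension tool (hypothetical type)."""
    name: str
    is_obstacle: bool


@dataclass
class ScenarioConfigurator:
    scenario: list = field(default_factory=list)  # the scenario list
    mapping: dict = field(default_factory=dict)   # element -> obstacle object
    sent: list = field(default_factory=list)      # stand-in for remote messages

    def on_input(self, element, action):
        """Handle one user input; 'add' and 'remove' are assumed action names."""
        if action == "add":
            self.scenario.append(element)                    # insert
            if element.is_obstacle:
                obstacle = f"obstacle:{element.name}"        # initializeObstacle
                self.mapping[element] = obstacle             # setMapping
                self.sent.append(("sync", obstacle))         # syncObstacle
            elif len(self.scenario) == 1:
                self.sent.append(("annotation", element.name))  # sendAnnotation
        elif action == "remove" and element in self.scenario:
            # The membership guard is an addition so unknown elements are ignored.
            if element.is_obstacle:
                obstacle = self.mapping.pop(element)         # getMapping
                self.sent.append(("destroy", obstacle))      # destroyObstacle
            self.scenario.remove(element)                    # remove
            if self.scenario:
                first = self.scenario[0]                     # getFirstElem
                if not first.is_obstacle:                    # assumed negation
                    self.sent.append(("annotation", first.name))
            else:
                self.sent.append(("clear",))                 # clear


# Usage: add an annotation and an obstacle, then remove both.
configurator = ScenarioConfigurator()
note = Element("turn left", is_obstacle=False)
ring = Element("ring", is_obstacle=True)
for element, action in [(note, "add"), (ring, "add"), (ring, "remove"), (note, "remove")]:
    configurator.on_input(element, action)
```

In this reading, obstacles are synchronized as soon as they are added, while an annotation is sent only when it is at the front of the scenario list, so the pilot sees one instruction at a time.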

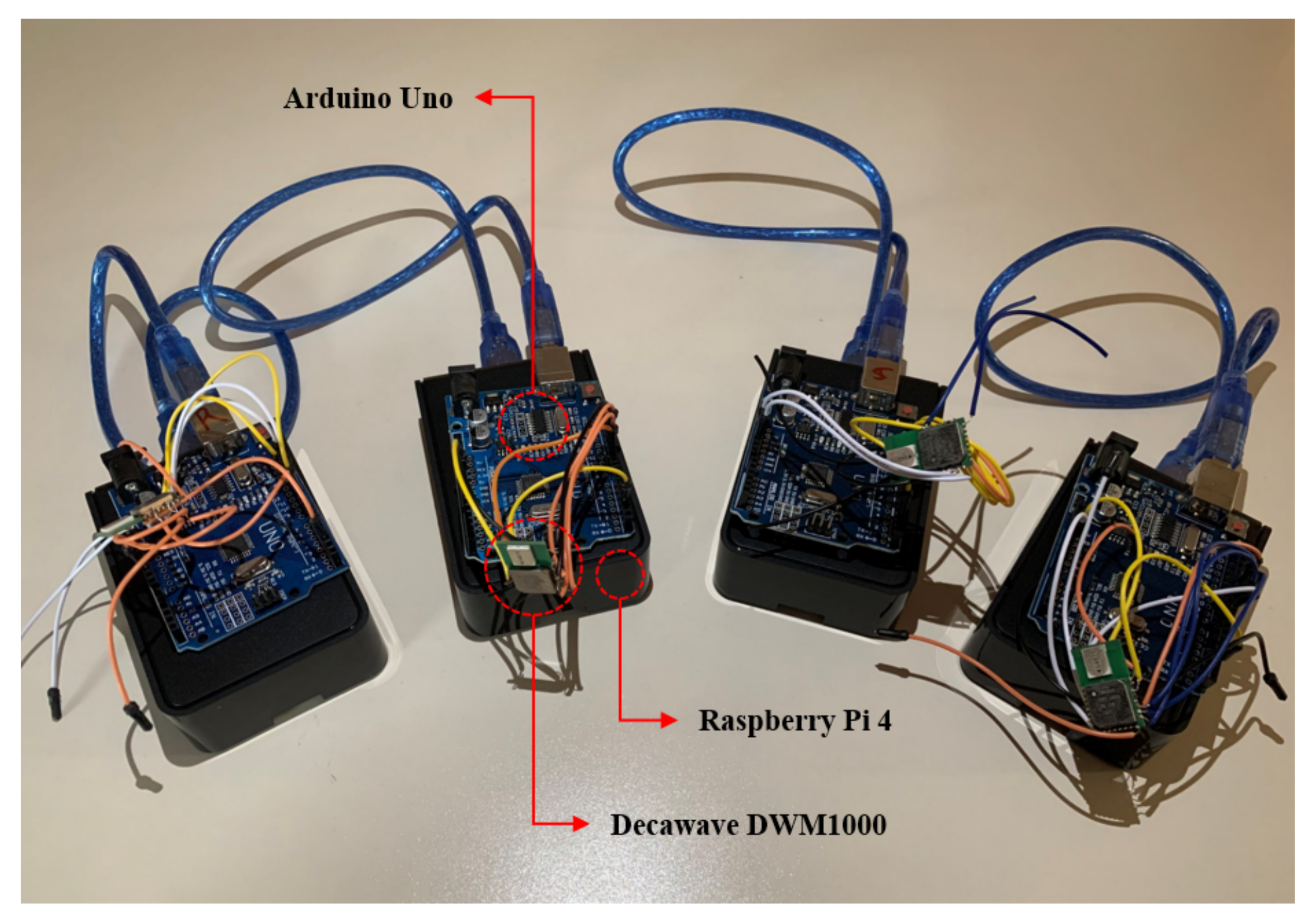

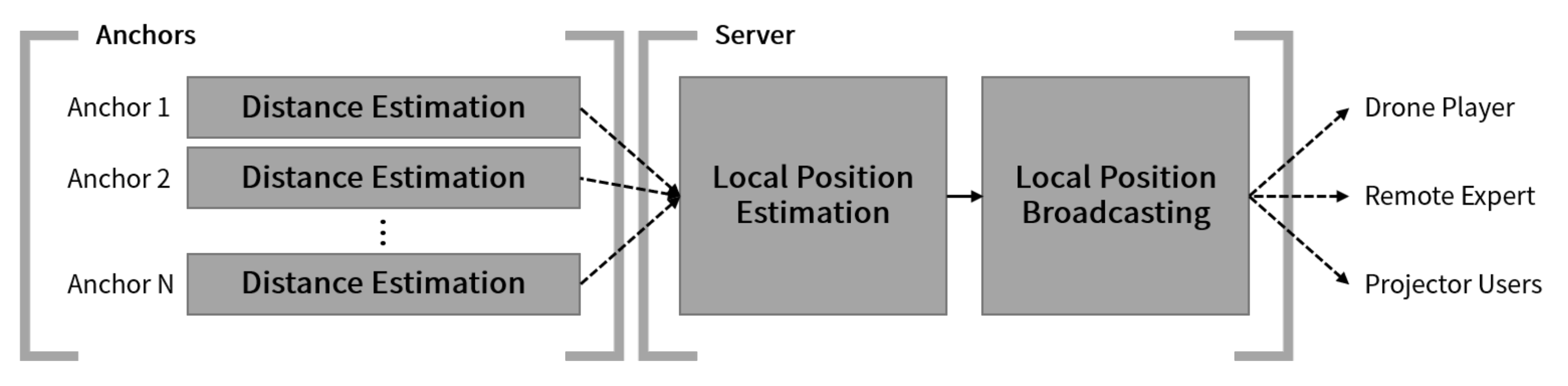

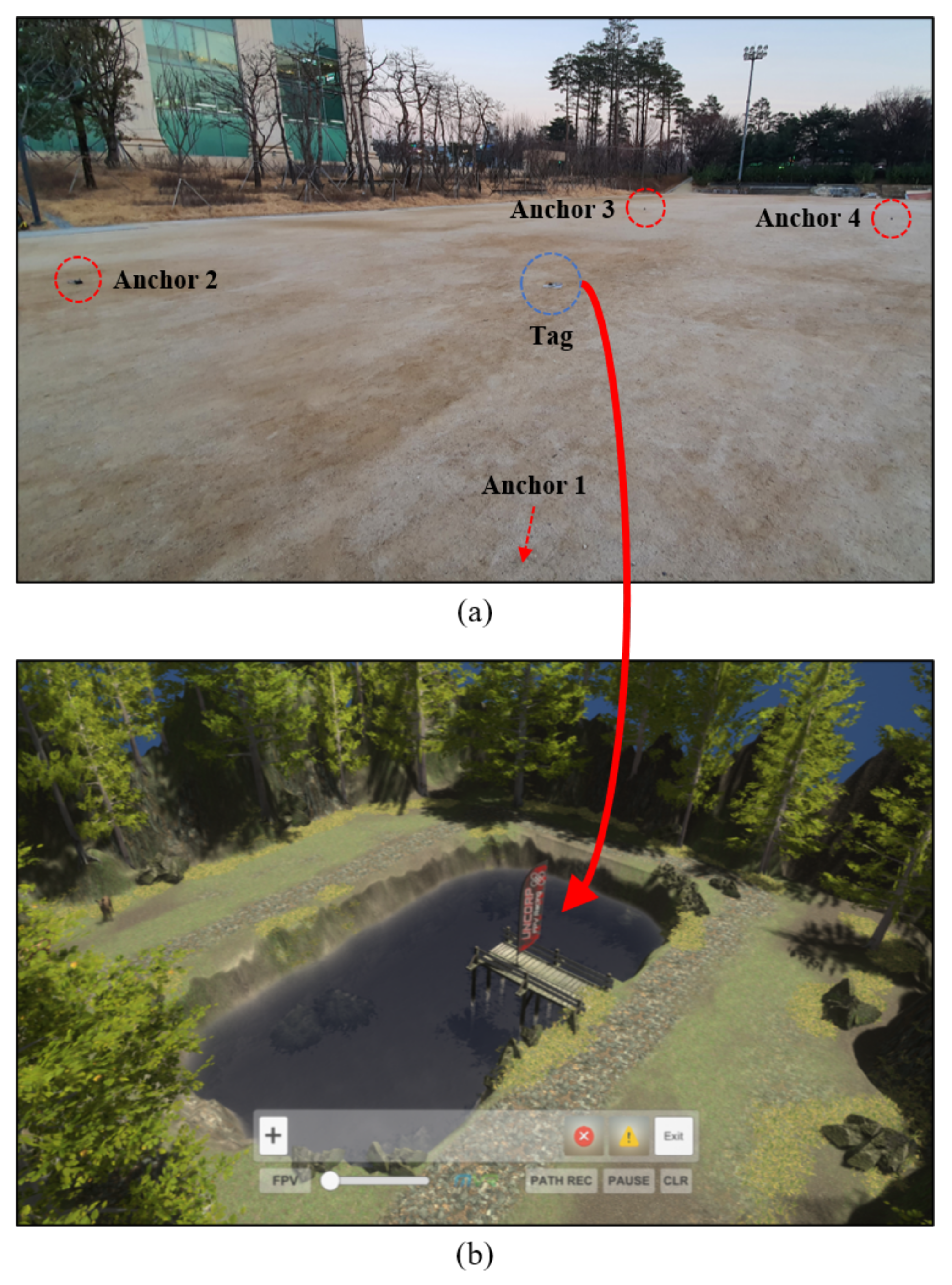

3.5. Configuration of Bidirectional Virtual Environments using Ultra-Wideband Wireless Communication Sensors

4. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

| Viewpoint | Characteristic |
|---|---|
| Third-person view (TPV) | Viewing the entire virtual flight environment |
| First-person view (FPV) | View from a virtual drone operated by an expert |
| Drone view (Drone) | Close-up view of the actual drone controlled by the pilot |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Go, Y.-G.; Kang, H.-S.; Lee, J.-W.; Yu, M.-S.; Choi, S.-M. Multi-User Drone Flight Training in Mixed Reality. Electronics 2021, 10, 2521. https://doi.org/10.3390/electronics10202521
