Design and Implementation of Deep Learning Based Contactless Authentication System Using Hand Gestures

Abstract

1. Introduction

2. Materials and Methods

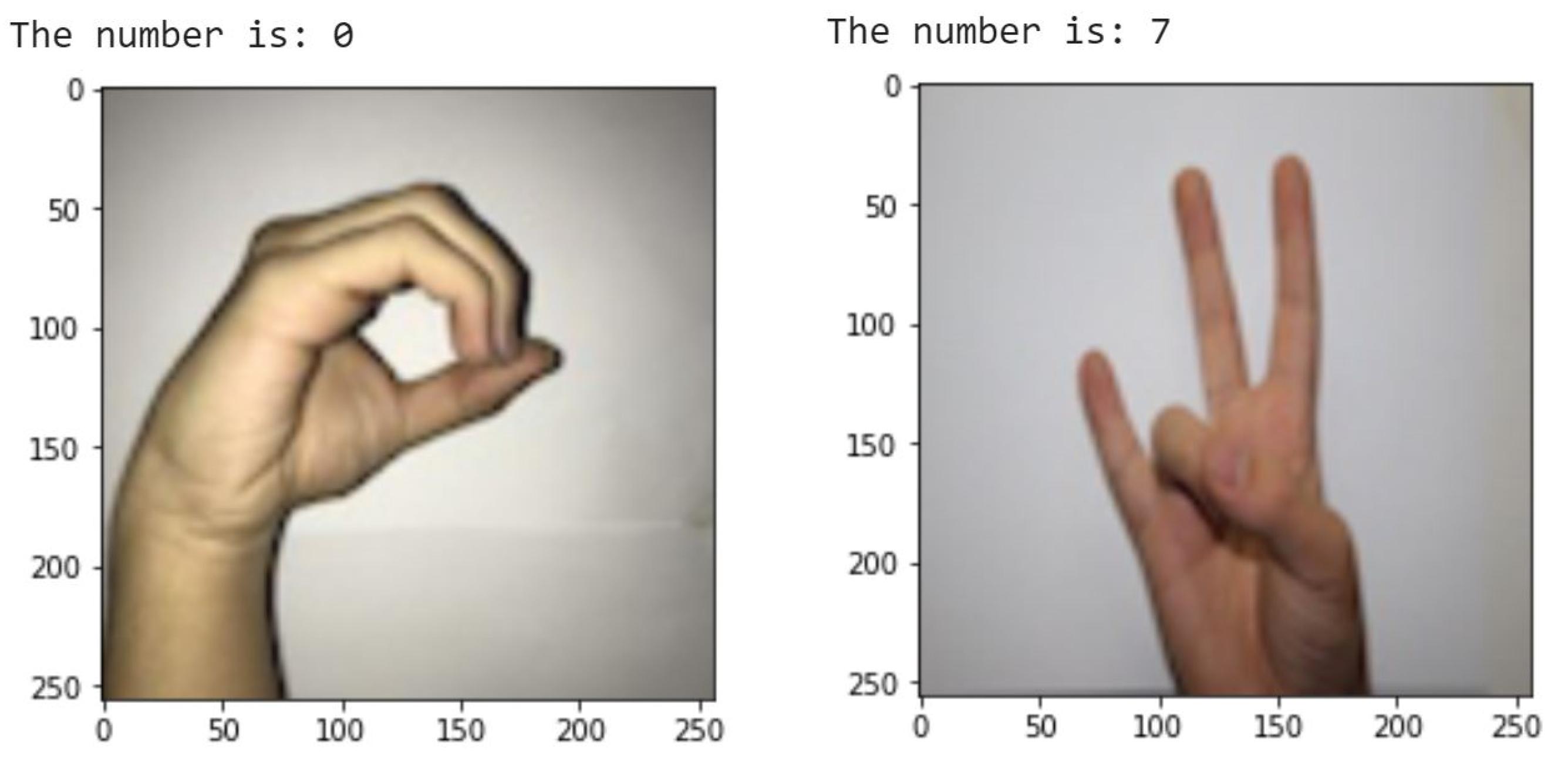

2.1. Dataset

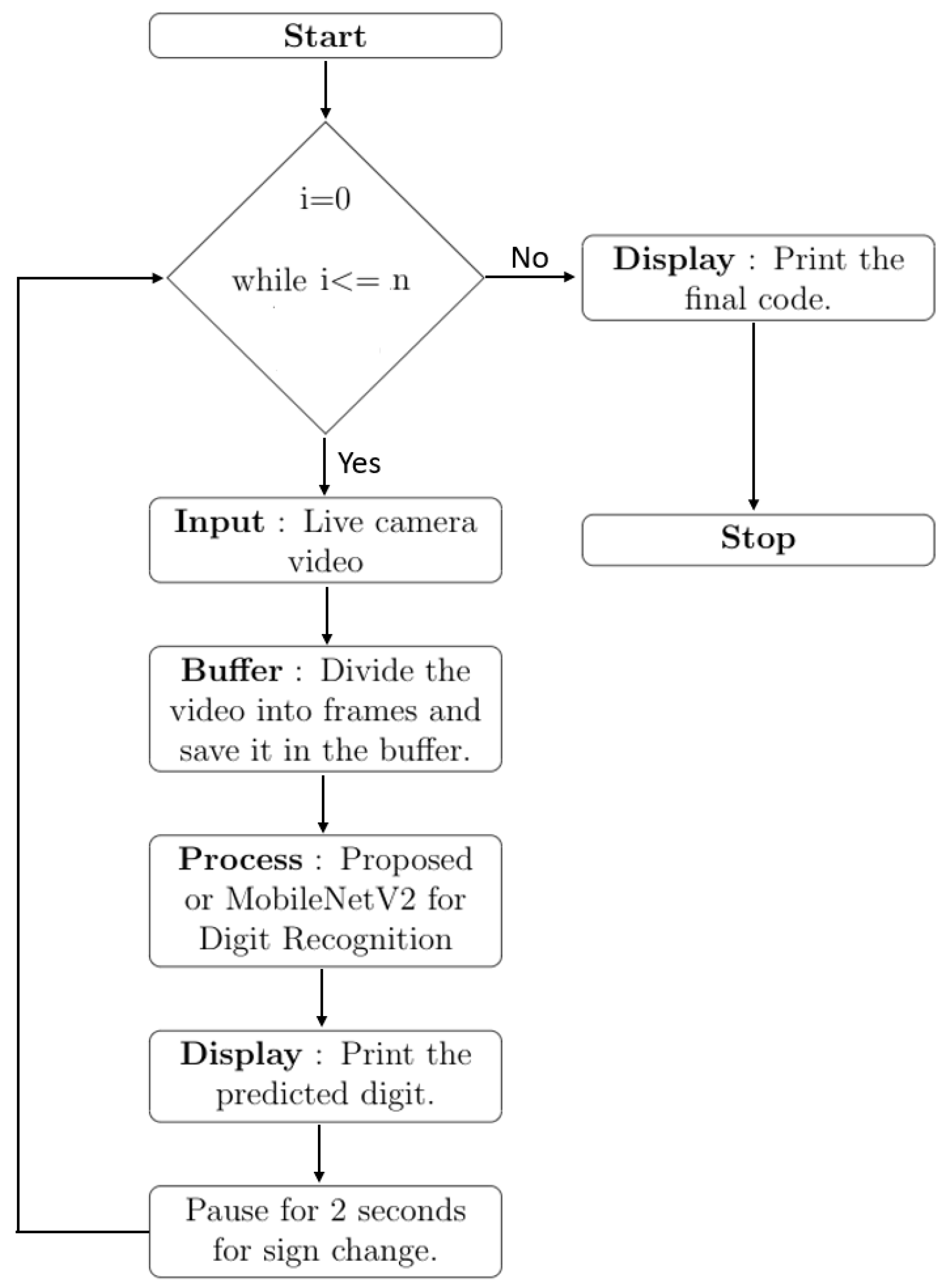

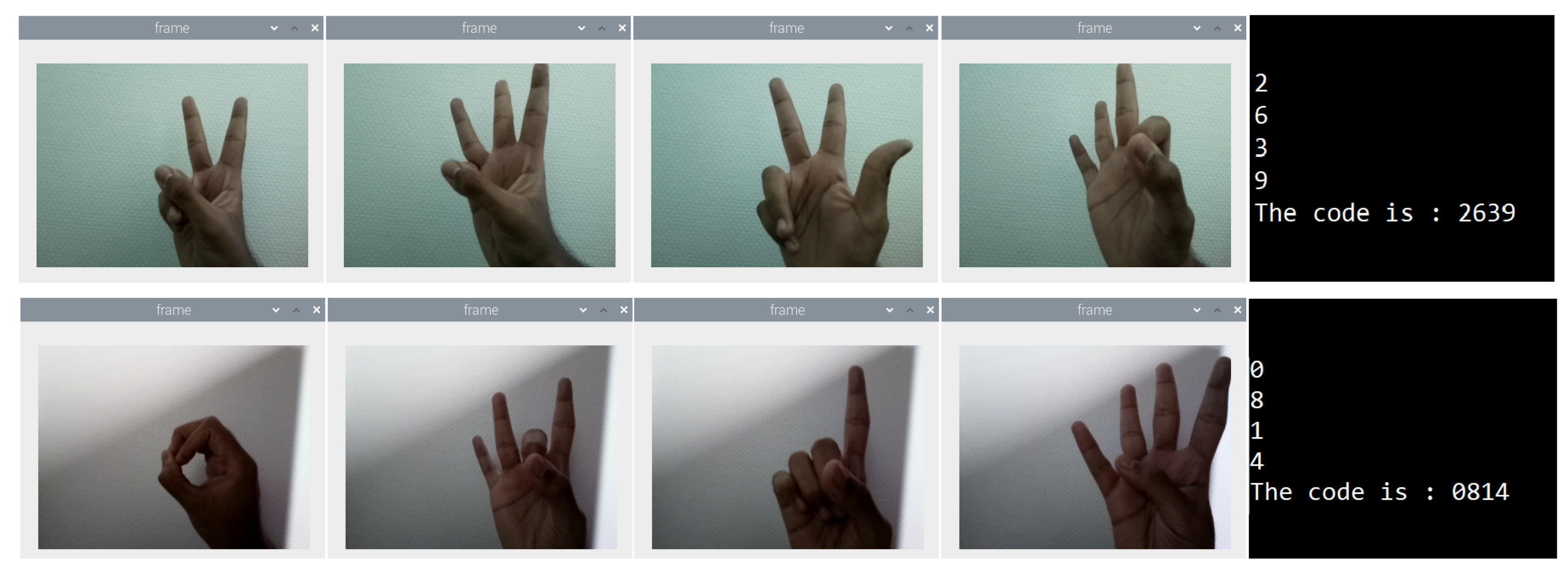

2.2. Proposed End to End System for Contactless Authentication

2.3. Lightweight Deep Learning Models for Hand Gestures Recognition

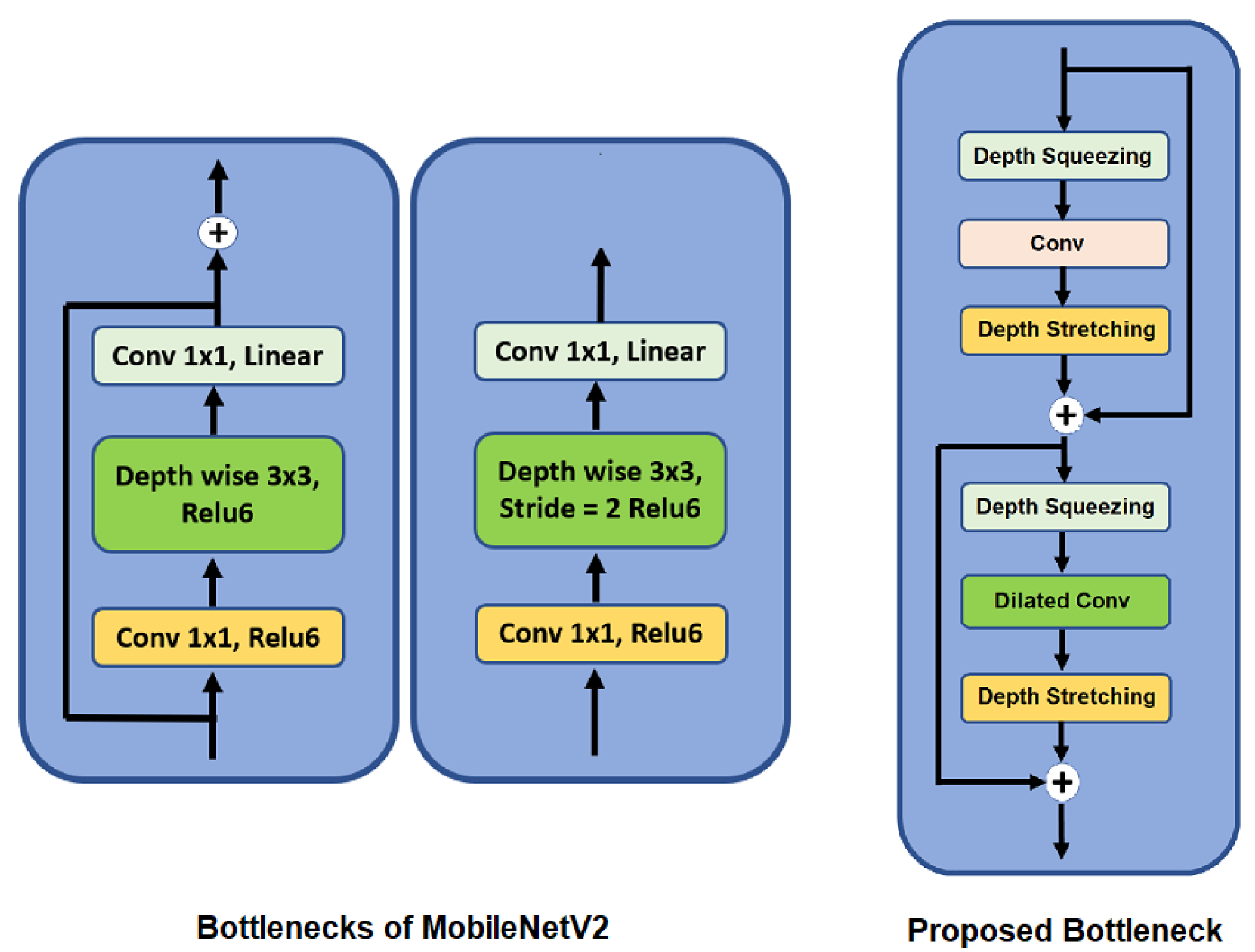

2.3.1. MobileNetV2

- Feature extraction: In this method, the MobileNetV2 model pre-trained on the ImageNet dataset serves as a feature extractor. We exclude its final dense (classification) layer because the number of classes in the ImageNet dataset differs from that of the ‘Sign Language Digit Dataset’ we use. The base model therefore consists of 154 pre-trained MobileNetV2 layers. Its output, the learned features, is a 4-dimensional tensor of size [None, 8, 8, 1280]. We reduce this output to a 2-dimensional matrix of size [None, 1280] using the ‘Global Average Pooling 2D’ function [39], and then add a dense layer that acts as the classification layer for our dataset. In this method the base model (MobileNetV2 without its final layer) is not trained. It only extracts features from each input sample, and these features are fed to the added dense layer, which is the only layer trained on our dataset. The hyper-parameters used to train the model with this method are the ‘RMSProp’ optimizer [40] with a learning rate of 0.0001, a batch size of 32, and 10 epochs. The architecture and the total number of parameters (model weights) for this method are shown in Table 2 and Table 3, respectively.

- Fine-tuning: This method uses the same architecture as the feature extraction method: the MobileNetV2 model pre-trained on the ImageNet dataset (excluding its classification layer) as the base model, followed by an added dense layer that serves as the classification layer for our dataset. The only difference is that some layers of the base model are trained together with the added dense layer on the ‘Sign Language Digit Dataset’. Specifically, we freeze the first 99 layers of the base model and train layers 100 to 154 along with the added dense layer. This method therefore has more trainable parameters than the feature extraction method. The hyper-parameters used to train the model with this method are the ‘RMSProp’ optimizer with a learning rate of 0.00001, a batch size of 32, and 25 epochs. The architecture and the total number of parameters for this method are shown in Table 2 and Table 3, respectively.
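The two transfer-learning settings described above can be sketched with the Keras API roughly as follows. This is a minimal illustration, not the authors' code: the input size of 256 × 256 × 3 is inferred from the [None, 8, 8, 1280] base output, and the softmax activation, the sparse categorical cross-entropy loss, keeping batch normalization in inference mode, and all function names are assumptions introduced here.

```python
import tensorflow as tf

NUM_CLASSES = 10             # digits 0-9
INPUT_SHAPE = (256, 256, 3)  # assumption: inferred from the 8 x 8 x 1280 base output


def build_model(weights="imagenet"):
    """Return (base, model): MobileNetV2 without its classifier, plus a new head."""
    base = tf.keras.applications.MobileNetV2(
        input_shape=INPUT_SHAPE, include_top=False, weights=weights
    )
    inputs = tf.keras.Input(shape=INPUT_SHAPE)
    # Assumption: batch-norm layers of the base run in inference mode.
    x = base(inputs, training=False)                  # (None, 8, 8, 1280)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)   # (None, 1280)
    # Assumption: softmax output over the 10 classes.
    outputs = tf.keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)
    return base, tf.keras.Model(inputs, outputs)


def compile_model(model, learning_rate):
    """Compile with RMSProp; the loss is an assumption (integer class labels)."""
    model.compile(
        optimizer=tf.keras.optimizers.RMSprop(learning_rate=learning_rate),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )


def configure_feature_extraction(base, model):
    """Freeze the whole base model so only the dense head is trained."""
    base.trainable = False
    compile_model(model, learning_rate=1e-4)


def configure_fine_tuning(base, model, first_trainable_layer=100):
    """Freeze the first 99 base layers; train layer 100 onward plus the head."""
    base.trainable = True
    for layer in base.layers[: first_trainable_layer - 1]:
        layer.trainable = False
    compile_model(model, learning_rate=1e-5)
```

With a batched training set in hand, the feature extraction run would then call `model.fit` for 10 epochs and the fine-tuning run for 25 epochs, each with a batch size of 32. The model has to be recompiled after changing `trainable` flags, which is why both configuration helpers end with a compile step.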

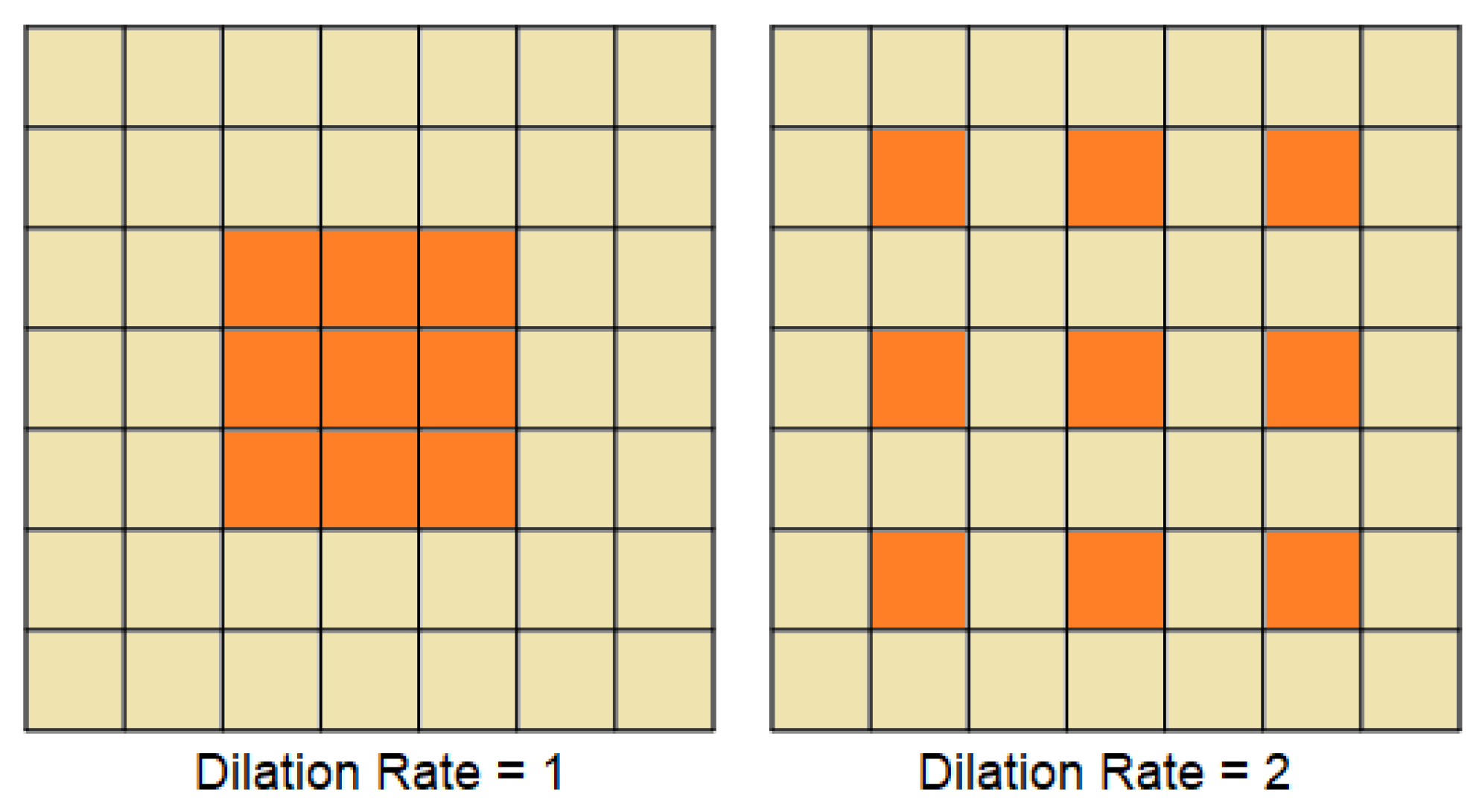

2.3.2. Proposed Model

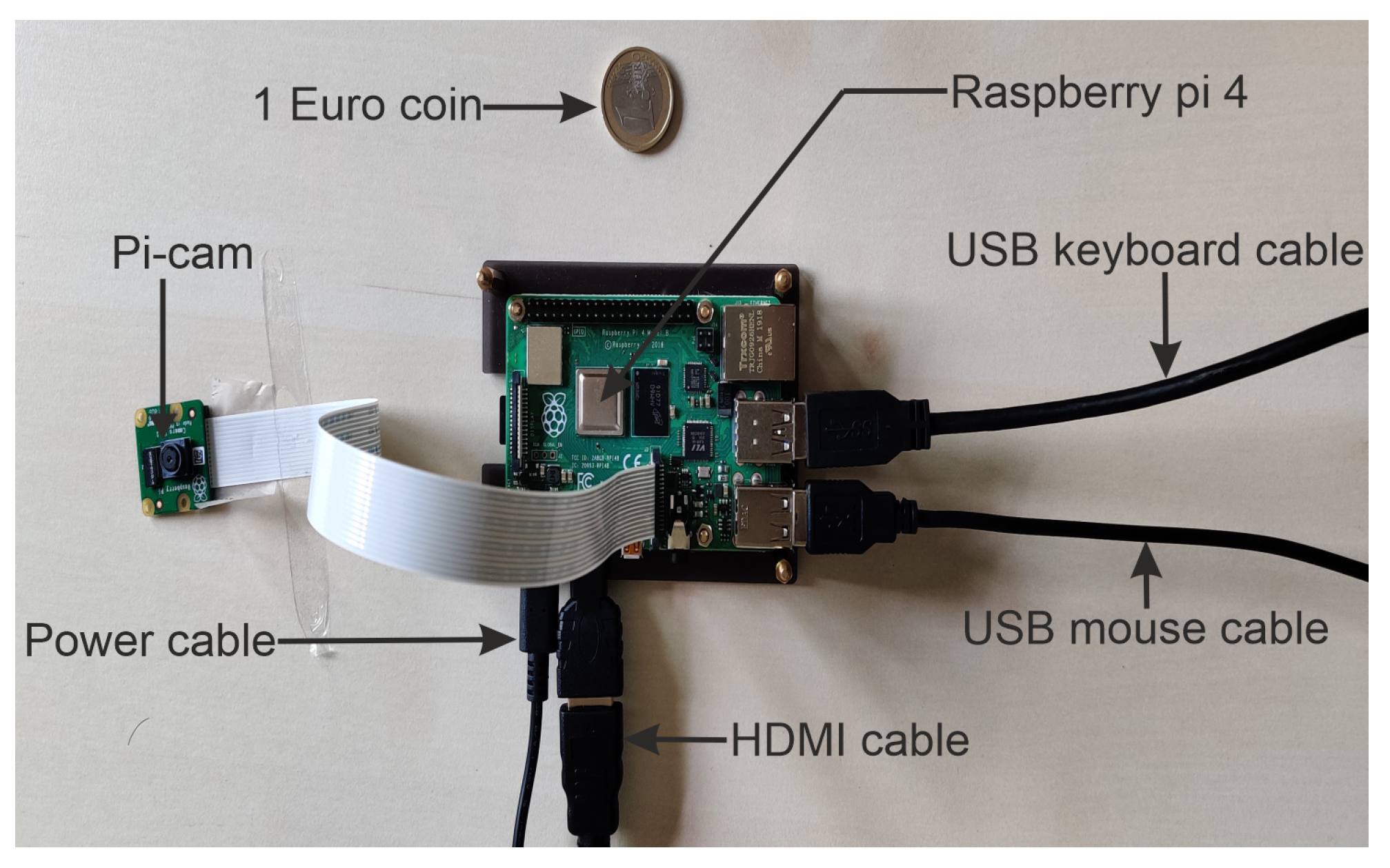

2.4. Deployment on Edge Computing Device

2.5. Performance Evaluation Metrics

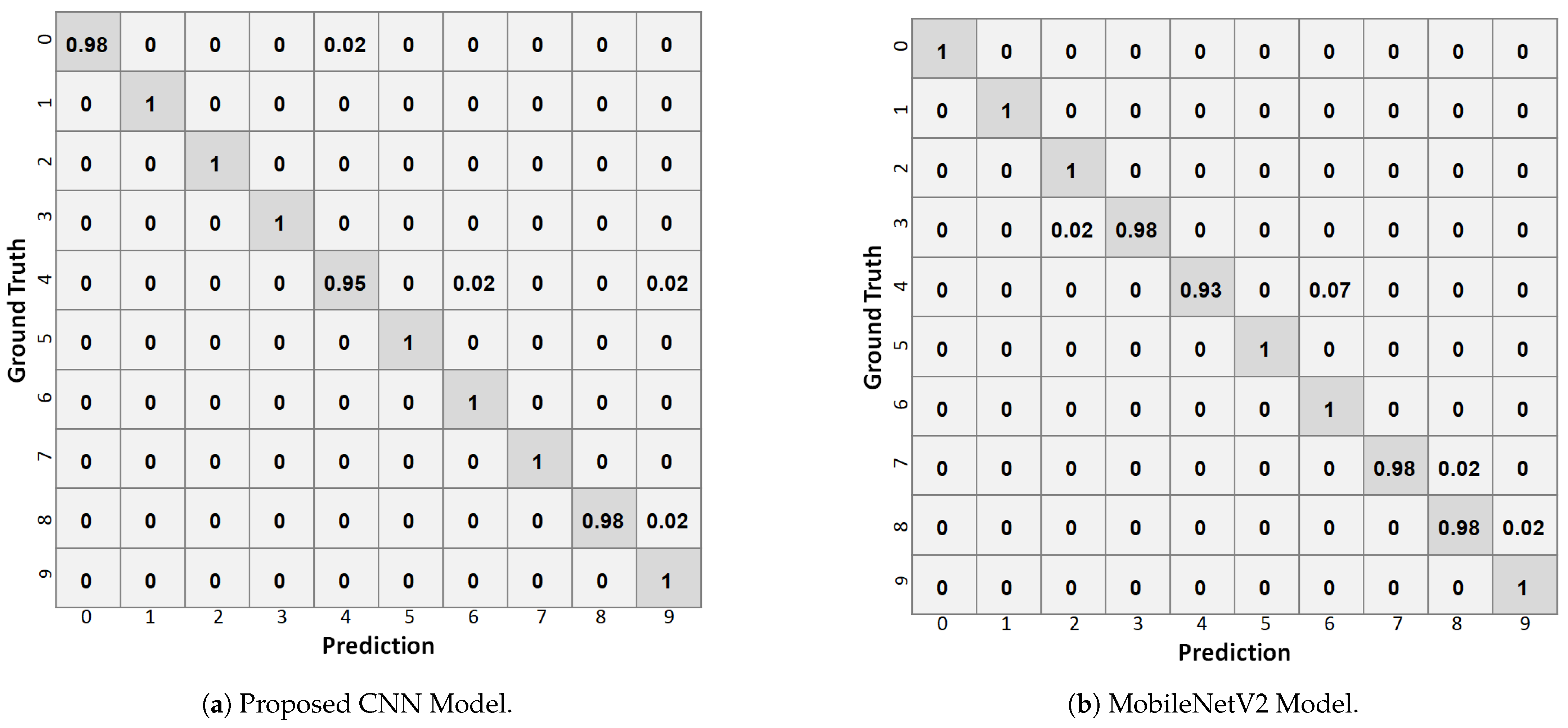

2.5.1. Confusion Matrix

2.5.2. True Positive

2.5.3. True Negative

2.5.4. False Positive

2.5.5. False Negative

2.5.6. Accuracy

2.5.7. Recall

2.5.8. Precision

2.5.9. F1-Score

3. Results

4. Discussion

5. Limitations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Jain, A.K.; Ross, A.; Prabhakar, S. An introduction to biometric recognition. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 4–20.

2. Yahya, F.; Nasir, H.; Kadir, K.; Safie, S.; Khan, S.; Gunawan, T. Fingerprint Biometric Systems. Trends Bioinform. 2016, 9, 52–58.

3. Zhao, W.; Chellappa, R.; Phillips, P.J.; Rosenfeld, A. Face Recognition: A Literature Survey. ACM Comput. Surv. 2003, 35, 399–458.

4. Zhang, D.; Lu, G.; Kong, A.W.K.; Wong, M. Palmprint Authentication System for Civil Applications. In Biometric Authentication; Maltoni, D., Jain, A.K., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 217–228.

5. Mazumdar, J. Retina Based Biometric Authentication System: A Review. Int. J. Adv. Res. Comput. Sci. 2018, 9, 711–718.

6. Chowdhury, R.; Ghosh, D.; Agarwal, P.; Samir, K.; Bandyopadhyay, S. Ear Based Biometric Authentication System. World J. Eng. Res. Technol. 2018, 2, 224–233. Available online: https://www.researchgate.net/publication/328610283_EAR_BASED_BIOMETRIC_AUTHENTICATION_SYSTEM (accessed on 12 December 2020).

7. Olatinwo, S.; Shoewu, O.; Omitola, O. Iris Recognition Technology: Implementation, Application, and Security Consideration. Pac. J. Sci. Technol. 2013, 14, 228–233.

8. Ali, M.; Tappert, C.; Qiu, M.; Monaco, V. Keystroke Biometric Systems for User Authentication. J. Signal Process. Syst. 2017, 86.

9. Jabin, S.; Zareen, F. Biometric signature verification. Int. J. Biom. 2015, 7, 97.

10. Gafurov, D. A Survey of Biometric Gait Recognition: Approaches, Security and Challenges. In Proceedings of the Norsk Informatikkonferanse; Oslo, Norway, 2007; Available online: https://www.researchgate.net/profile/Davrondzhon_Gafurov/publication/228577046_A_survey_of_biometric_gait_recognition_Approaches_security_and_challenges/links/00b49528e834aa68eb000000.pdf (accessed on 12 December 2020).

11. Iannizzotto, G.; Rosa, F. A SIFT-Based Fingerprint Verification System Using Cellular Neural Networks. In Pattern Recognition Techniques, Technology and Applications; I-Tech: Vienna, Austria, 2008.

12. Naidu, S.; Chemudu, S.; Satyanarayana, V.; Pillem, R.; Hanuma, K.; Naresh, B.; CH.Himabin, d. New Palm Print Authentication System by Using Wavelet Based Method. Signal Image Process. 2011, 2.

13. Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

14. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324.

15. Sze, V.; Chen, Y.H.; Yang, T.J.; Emer, J. Efficient Processing of Deep Neural Networks: A Tutorial and Survey. Proc. IEEE 2017, 105.

16. Zulfiqar, M.; Syed, F.; Khan, M.; Khurshid, K. Deep Face Recognition for Biometric Authentication. In Proceedings of the 2019 International Conference on Electrical, Communication, and Computer Engineering (ICECCE), Swat, Pakistan, 24–25 July 2019.

17. Praseetha, V.; Bayezeed, S.; Vadivel, S. Secure Fingerprint Authentication Using Deep Learning and Minutiae Verification. J. Intell. Syst. 2019, 29.

18. Shao, H.; Zhong, D. Few-shot palmprint recognition via graph neural networks. Electron. Lett. 2019, 55, 890–892.

19. Aizat, K.; Mohamed, O.; Orken, M.; Ainur, A.; Zhumazhanov, B. Identification and authentication of user voice using DNN features and i-vector. Cogent Eng. 2020, 7, 1751557.

20. Terrier, P. Gait Recognition via Deep Learning of the Center-of-Pressure Trajectory. Appl. Sci. 2020, 10, 774.

21. Poddar, J.; Parikh, V.; Bharti, D. Offline Signature Recognition and Forgery Detection using Deep Learning. Procedia Comput. Sci. 2020, 170, 610–617.

22. Minaee, S.; Abdolrashidi, A.; Su, H.; Bennamoun, M.; Zhang, D. Biometric Recognition Using Deep Learning: A Survey. arXiv 2019, arXiv:cs:CV/1912.00271.

23. Wu, K.; Chen, Z.; Li, W. A Novel Intrusion Detection Model for a Massive Network Using Convolutional Neural Networks. IEEE Access 2018, 6, 50850–50859.

24. Kim, K.H.; Hong, S.; Roh, B.; Cheon, Y.; Park, M. Pvanet: Deep but lightweight neural networks for real-time object detection. arXiv 2016, arXiv:1608.08021.

25. Deng, B.L.; Li, G.; Han, S.; Shi, L.; Xie, Y. Model Compression and Hardware Acceleration for Neural Networks: A Comprehensive Survey. Proc. IEEE 2020, 108, 485–532.

26. Dey, N.; Mishra, G.; Kar, J.; Chakraborty, S.; Nath, S. A Survey of Image Classification Methods and Techniques. In Proceedings of the International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT), Kanyakumari, India, 10–11 July 2014.

27. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. (IJCV) 2015, 115, 211–252.

28. Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images. Master’s Thesis, Department of Computer Science, University of Toronto, Toronto, ON, Canada, 2009. Available online: https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf (accessed on 13 December 2020).

29. Parkhi, O.M.; Vedaldi, A.; Zisserman, A.; Jawahar, C.V. Cats and Dogs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012.

30. Nilsback, M.E.; Zisserman, A. A Visual Vocabulary for Flower Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; Volume 2, pp. 1447–1454.

31. LeCun, Y.; Cortes, C. MNIST Handwritten Digit Database. 2010. Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 3 January 2021).

32. Foret, P.; Kleiner, A.; Mobahi, H.; Neyshabur, B. Sharpness-Aware Minimization for Efficiently Improving Generalization. arXiv 2020, arXiv:cs.LG/2010.01412.

33. Wang, X.; Kihara, D.; Luo, J.; Qi, G.J. EnAET: Self-Trained Ensemble AutoEncoding Transformations for Semi-Supervised Learning. arXiv 2019, arXiv:cs.CV/1911.09265.

34. Kolesnikov, A.I.; Beyer, L.; Zhai, X.; Puigcerver, J.; Yung, J.; Gelly, S.; Houlsby, N. Big Transfer (BiT): General Visual Representation Learning. Comput. Vis. Pattern Recognit. 2019, 6, 8.

35. Byerly, A.; Kalganova, T.; Dear, I. A Branching and Merging Convolutional Network with Homogeneous Filter Capsules. arXiv 2020, arXiv:abs/2001.09136.

36. Zeynep Dikle, A.M.; Students, T.A.A.A.H.S. Sign Language Digits Dataset. 2017. Available online: https://github.com/ardamavi/Sign-Language-Digits-Dataset (accessed on 2 June 2020).

37. Gavade, A.; Sane, P. Super Resolution Image Reconstruction By Using Bicubic Interpolation. In Proceedings of the ATEES 2014 National Conference, Belgaum, India, October 2013.

38. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

39. Lin, M.; Chen, Q.; Yan, S. Network in Network. arXiv 2013, arXiv:cs:CV/1312.4400.

40. Tieleman, T.; Hinton, G. Lecture 6.5—RmsProp: Divide the Gradient by a Running Average of Its Recent Magnitude. COURSERA: Neural Networks for Machine Learning, 2012. Available online: http://www.cs.toronto.edu/~hinton/coursera/lecture6/lec6.pdf (accessed on 4 January 2021).

41. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

42. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

43. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

44. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

45. Keras: The Python Deep Learning Library. Available online: http://ascl.net/1806.022 (accessed on 4 January 2021).

46. Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

47. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

48. Ghori, K.M.; Abbasi, R.A.; Awais, M.; Imran, M.; Ullah, A.; Szathmary, L. Performance Analysis of Different Types of Machine Learning Classifiers for Non-Technical Loss Detection. IEEE Access 2020, 8, 16033–16048.

| Class | Number of Samples | Number of Training Samples | Number of Validation Samples | Number of Testing Samples |
|---|---|---|---|---|
| 0 | 205 | 123 | 41 | 41 |
| 1 | 206 | 124 | 41 | 41 |
| 2 | 206 | 124 | 41 | 41 |
| 3 | 206 | 124 | 41 | 41 |
| 4 | 207 | 124 | 42 | 41 |
| 5 | 207 | 124 | 42 | 41 |
| 6 | 207 | 124 | 42 | 41 |
| 7 | 206 | 124 | 41 | 41 |
| 8 | 208 | 125 | 42 | 41 |
| 9 | 204 | 122 | 41 | 41 |
| Total | 2062 | 1238 | 414 | 410 |

| Layer Type | Output Size |
|---|---|
| MobileNetV2 (Base Model) | (None, 8, 8, 1280) |
| Global_Average_Pooling | (None, 1280) |
| Output | (None, 10) |

| Method | Total no. of Parameters | Trainable | Non-Trainable |
|---|---|---|---|
| Feature extraction method | 2,270,794 | 12,810 | 2,257,984 |
| Fine-tuning method | 2,270,794 | 2,236,682 | 34,112 |
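The parameter counts in the table above can be cross-checked with simple arithmetic. The sketch below assumes the added classification layer is a standard fully connected layer with one weight per feature-class pair and one bias per class; the variable names are illustrative only.

```python
FEATURES = 1280           # width of the pooled feature vector
NUM_CLASSES = 10          # output units of the added dense layer
BASE_PARAMS = 2_257_984   # non-trainable count reported for feature extraction

# Dense layer: one weight per (feature, class) pair plus one bias per class.
dense_params = FEATURES * NUM_CLASSES + NUM_CLASSES
total_params = BASE_PARAMS + dense_params

print(dense_params)   # 12810
print(total_params)   # 2270794
```

Both values agree with the reported figures: 12,810 trainable parameters for the feature extraction method and 2,270,794 parameters in total.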

| Layer Type | Operation | Output Size |
|---|---|---|
| Input | - | (None, M, M, Z) |
| 1 × 1 Conv | Depth Squeezing | (None, M, M, Z/4) |
| 3 × 3 Conv | Feature Extraction | (None, M, M, Z/4) |
| 1 × 1 Conv | Depth Stretching | (None, M, M, Z) |
| Add | Addition | (None, M, M, Z) |
| 1 × 1 Conv | Depth Squeezing | (None, M, M, Z/4) |
| 3 × 3 Dilated Conv | Feature Extraction | (None, M, M, Z/4) |
| 1 × 1 Conv | Depth Stretching | (None, M, M, Z) |
| Add | Addition | (None, M, M, Z) |
| Output | - | (None, M, M, Z) |

| Layer Type | Output Size |
|---|---|
| Input | (None, 256, 256, 3) |
| Conv | (None, 127, 127, 64) |
| Conv | (None, 63, 63, 128) |
| Bottleneck | (None, 63, 63, 128) |
| Conv | (None, 31, 31, 256) |
| Bottleneck | (None, 31, 31, 256) |
| Conv | (None, 15, 15, 128) |
| Bottleneck | (None, 15, 15, 128) |
| Conv | (None, 7, 7, 64) |
| Bottleneck | (None, 7, 7, 64) |
| Conv | (None, 3, 3, 16) |
| Flatten | (None, 144) |
| Dense | (None, 10) |
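The spatial sizes listed for the proposed model are consistent with each Conv layer using a 3 × 3 kernel, a stride of 2, and no padding. These settings are an assumption made here for illustration, since the table itself gives only output sizes. Under that assumption, the sequence of sizes can be reproduced as follows.

```python
def conv_output_size(size, kernel=3, stride=2):
    """Output width of an unpadded convolution (kernel and stride are assumed)."""
    return (size - kernel) // stride + 1


sizes = [256]                  # input width and height
for _ in range(6):             # the six Conv layers in the table
    sizes.append(conv_output_size(sizes[-1]))

print(sizes)                   # [256, 127, 63, 31, 15, 7, 3]

# The last Conv layer has 16 channels, so Flatten yields 3 * 3 * 16 values.
flatten_units = sizes[-1] * sizes[-1] * 16
print(flatten_units)           # 144
```

The Bottleneck layers do not appear in the loop because they leave the tensor shape unchanged.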

| Model | MobileNetV2 | Proposed |
|---|---|---|
| Tag | | |
| Parameters | 2.27 Million | 0.97 Million |
| TF Model Size | 11.1 MB | 6 MB |
| TFL Model Size | 8.5 MB | 4 MB |
| TFL Inference time | 212 ms | 280 ms |

| Model | Accuracy |
|---|---|
| Proposed CNN | 99.1% |
| MobileNetV2 | 98.5% |

| Class | Recall (Proposed CNN) | Recall (MobileNetV2) | Precision (Proposed CNN) | Precision (MobileNetV2) | F1-score (Proposed CNN) | F1-score (MobileNetV2) |
|---|---|---|---|---|---|---|
| 0 | 0.98 | 1.0 | 1.0 | 1.0 | 0.99 | 1.0 |
| 1 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |
| 2 | 1.0 | 1.0 | 1.0 | 0.98 | 1.0 | 0.99 |
| 3 | 1.0 | 0.98 | 1.0 | 1.0 | 1.0 | 0.99 |
| 4 | 0.95 | 0.93 | 0.97 | 1.0 | 0.96 | 0.96 |
| 5 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |
| 6 | 1.0 | 1.0 | 0.98 | 0.93 | 0.99 | 0.97 |
| 7 | 1.0 | 0.98 | 1.0 | 1.0 | 1.0 | 0.99 |
| 8 | 0.98 | 0.98 | 1.0 | 0.98 | 0.99 | 0.98 |
| 9 | 1.0 | 1.0 | 0.95 | 0.98 | 0.98 | 0.99 |
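Per-class recall, precision, and F1-score of the kind reported above are commonly derived from a multi-class confusion matrix. The sketch below shows one standard way to compute them. It is illustrative only: the function names and the small 3-class matrix are hypothetical and are not taken from the experiments.

```python
def per_class_metrics(cm):
    """Return (recall, precision, f1) per class for a square confusion matrix.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # true class k, predicted otherwise
        fp = sum(cm[i][k] for i in range(n)) - tp   # predicted k, true class differs
        recall = tp / (tp + fn) if tp + fn else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        results.append((recall, precision, f1))
    return results


def accuracy(cm):
    """Fraction of all samples that lie on the diagonal."""
    correct = sum(cm[k][k] for k in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


# Hypothetical 3-class confusion matrix, for illustration only.
toy_cm = [
    [5, 1, 0],
    [0, 4, 0],
    [1, 0, 4],
]
for label, (r, p, f) in enumerate(per_class_metrics(toy_cm)):
    print(f"class {label}: recall={r:.2f} precision={p:.2f} f1={f:.2f}")
print(f"accuracy={accuracy(toy_cm):.2f}")
```

Because the F1-score is the harmonic mean of precision and recall, recomputing it from the two-decimal values in the table can differ from the reported F1 in the last digit.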

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dayal, A.; Paluru, N.; Cenkeramaddi, L.R.; J., S.; Yalavarthy, P.K. Design and Implementation of Deep Learning Based Contactless Authentication System Using Hand Gestures. Electronics 2021, 10, 182. https://doi.org/10.3390/electronics10020182
