1. Introduction

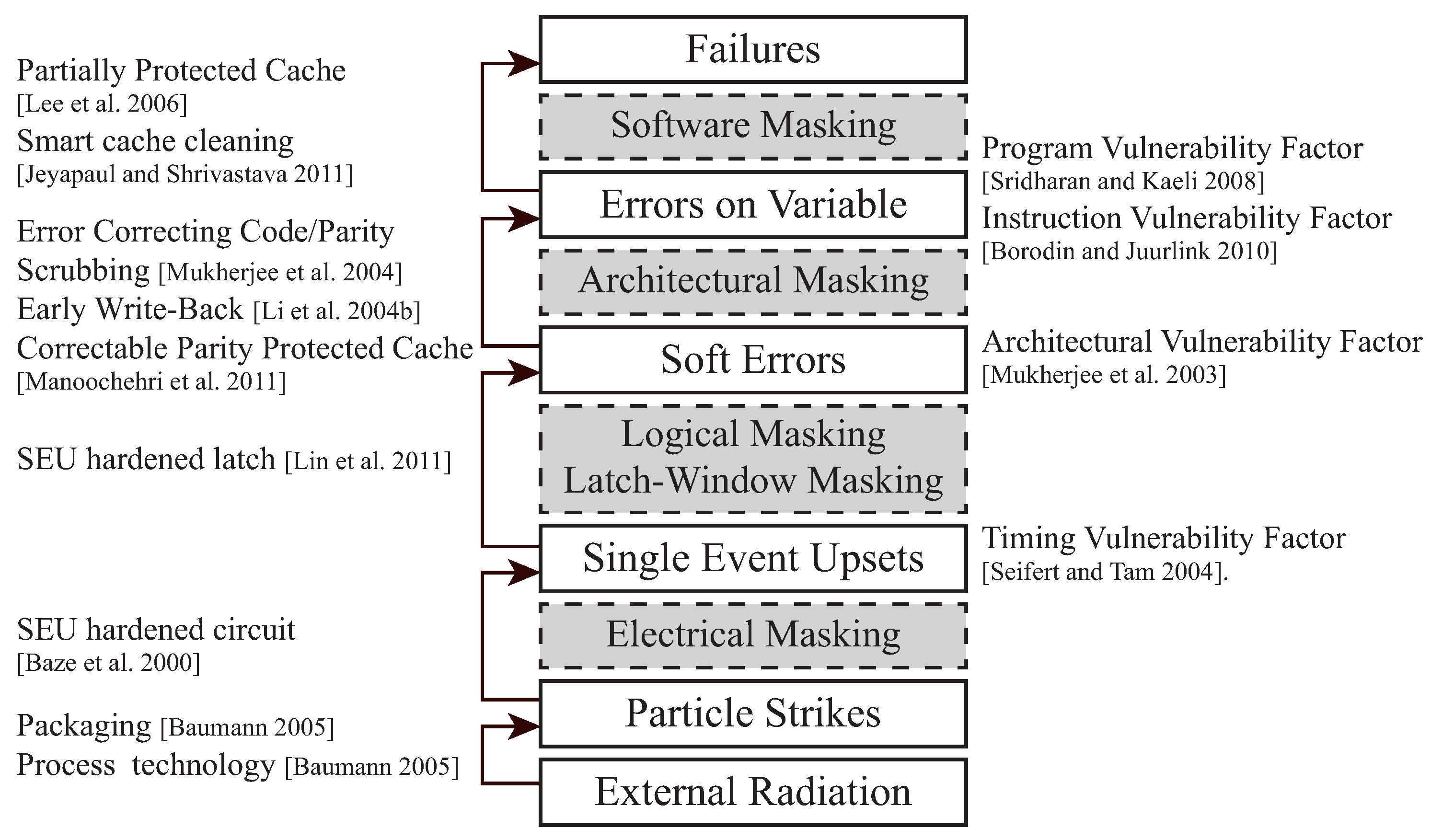

Reliability against soft errors is becoming one of the most crucial design concerns in modern embedded systems [1]. Soft errors are transient faults in semiconductor devices caused by external radiation, such as alpha particles, thermal neutrons, cross-talk, and cosmic rays [2]. The soft error rate is exponentially increasing, mainly because of the reduction in chip size and supply voltage as technology scales [3]. Several techniques have been presented in various layers to protect embedded systems against soft errors. Because most protection techniques are based on redundancy, they cost more than unprotected architectures: larger hardware area, longer execution time, or both. Moreover, soft error protection techniques can be expensive or ineffective and sometimes fail to protect systems [4]. Thus, we need to quantify system reliability against soft errors accurately in order to apply appropriate protection to resource-constrained or hard real-time embedded systems.

To quantify system reliability against soft errors, accelerated radiation beam testing [5] and exhaustive fault injection campaigns [6] have been used. Beam testing and fault injection are the two most accurate methods for measuring system reliability against soft errors. However, they are not only expensive to perform but also challenging to set up correctly. Vulnerability modeling is an alternative method that evaluates reliability against soft errors efficiently [7]. Vulnerability estimation tools based on cycle-accurate simulators [8,9] are much faster than beam testing or fault injection campaigns because they can return vulnerability from a single simulation. However, vulnerability estimation can be inaccurate because it only considers microarchitecture-level behaviors and ignores software-level masking effects. To the best of our knowledge, no prior work has analyzed masking effects at the system level.

In this study, we developed and implemented a system-level framework, based on the cycle-accurate gem5 simulator [10], that characterizes soft error masking effects and analyzes their distributions by considering both microarchitecture-level and software-level masking effects. We injected faults into the register file of an out-of-order processor to capture comprehensive system-level masking effects. Because errors in the register file can be quickly and frequently propagated to other components [11], they can represent fault injection into all the microarchitectural components of the out-of-order processor [12]. Furthermore, the register file is among the most sensitive components in a processor: it is essential to modern processors, and it usually holds data for a long time before the data is used.

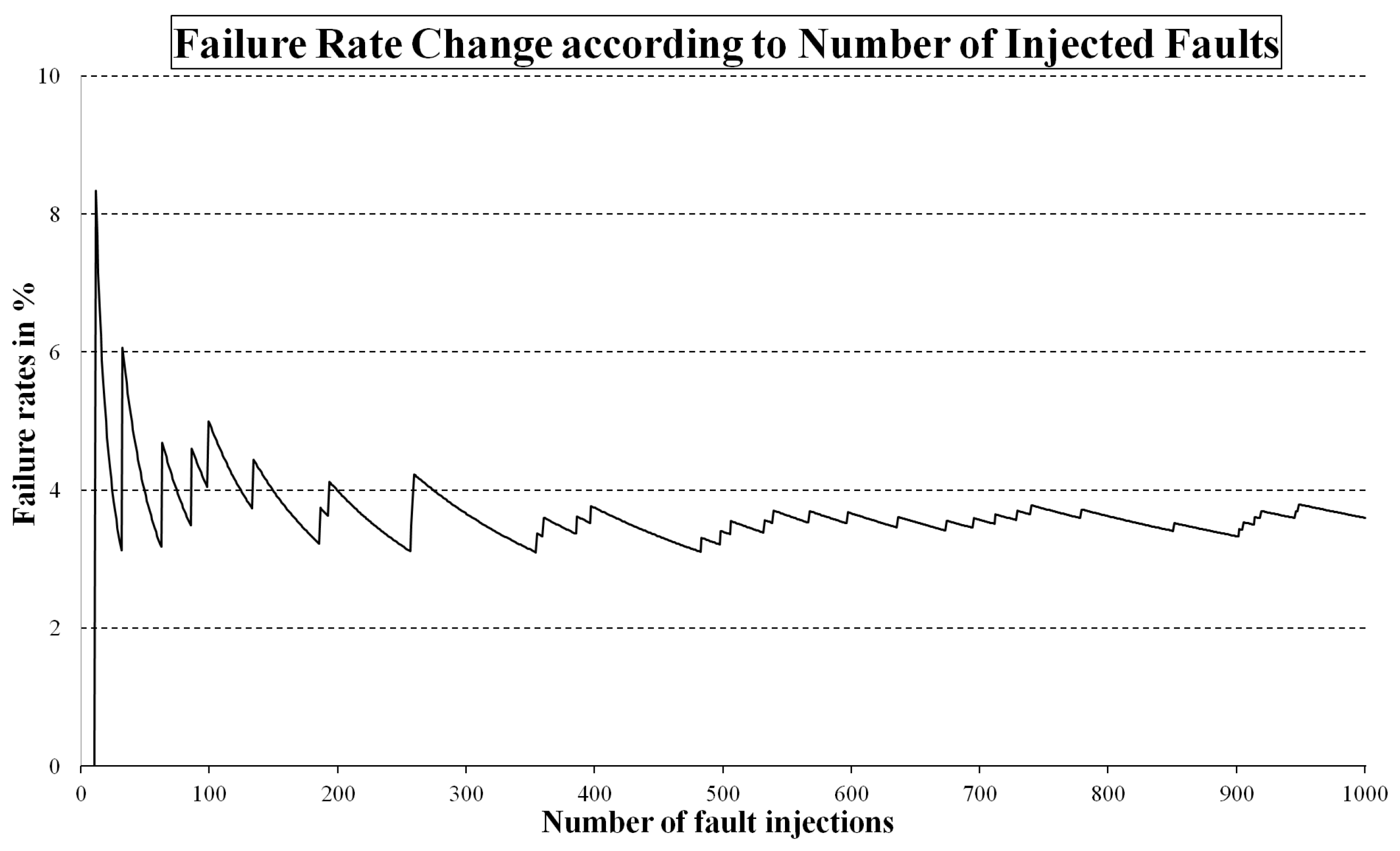

We injected faults (soft errors) into randomly chosen bits in the register file at randomly selected times during execution. Then, our framework determined whether each injected fault induced a system failure. Existing fault injection approaches [13,14] focus on the failure rate and can return the number of injected faults that are eventually masked. In contrast, our framework provides not only the failure rate but also the distribution of the masking effects observed in fault injection campaigns. Furthermore, if an injected fault does not cause a system failure (i.e., the error is masked), our framework analyzes the masking effect at two system levels: (i) the microarchitecture level and (ii) the software level.

First, our framework analyzes the microarchitecture-level masking effects based on the microarchitectural behaviors of the components. For example, if committed instructions do not read the corrupted data due to soft errors in the register file, the corrupted data on the register file do not cause system failure or result in incorrect output. Our framework needs to trace microarchitecture-level behaviors (such as read and write operations) and analyze their masking effects to decide whether committed instructions read corrupted data. For instance, it is not vulnerable if write operations overwrite corrupted data before they are read or used for program execution.

Second, we also need to analyze masking effects at the software level, which arise when committed instructions read corrupted data but the program still produces the correct output. For instance, a compare instruction compares two source operands and sets the flag bits accordingly, but a soft error in one source operand may not change the flag bits or affect the system at all (e.g., a CMP against the immediate 0x10 whose register operand has been corrupted from 0x00 to 0x01). Because the compare instruction reads the corrupted data in the register, the microarchitecture-level analysis classifies this behavior as vulnerable. However, the error does not affect the program, so it is not actually vulnerable. Thus, it is necessary to characterize and analyze such cases at the software level to accurately estimate the system-level susceptibility to soft errors.

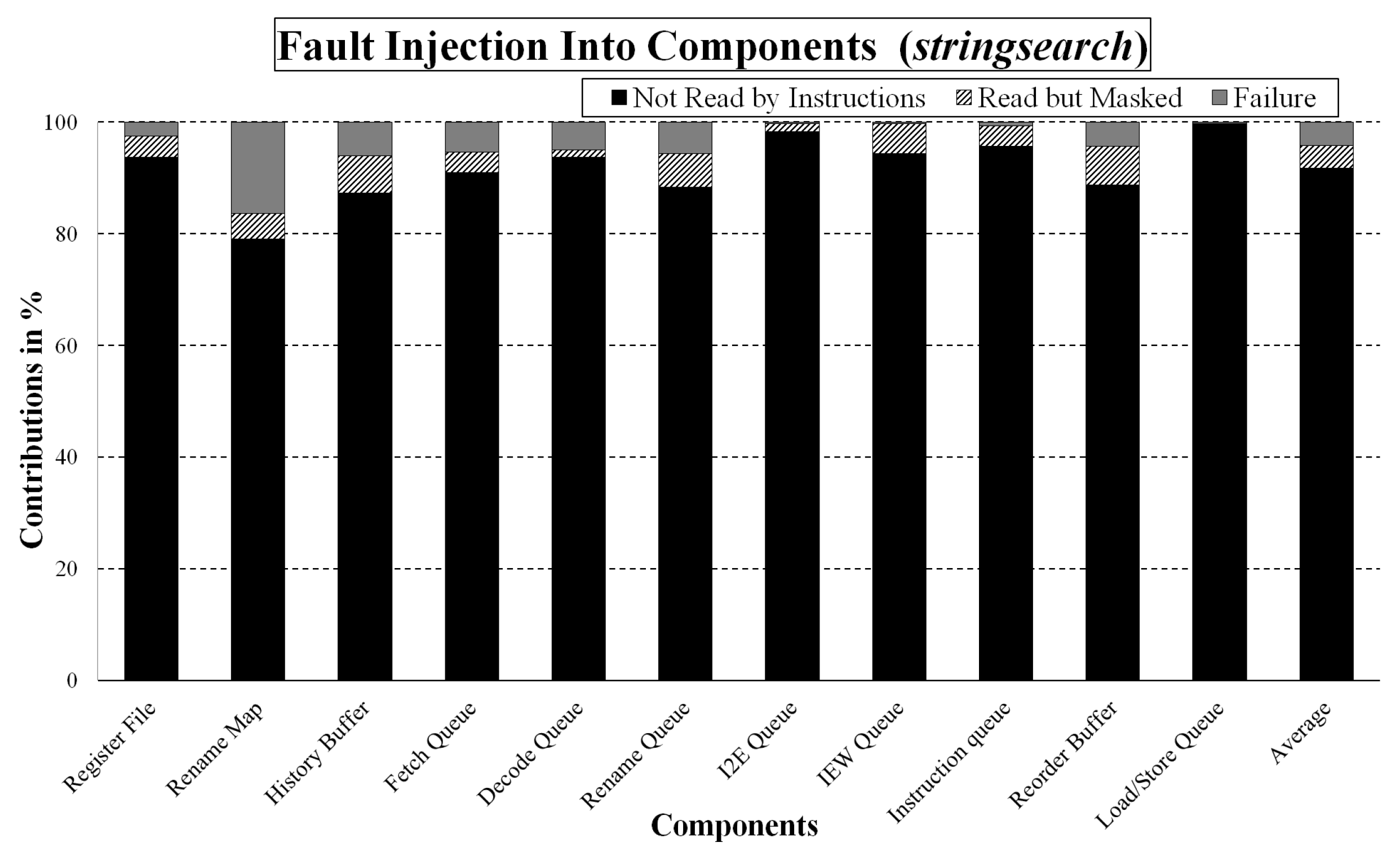

Interestingly, our experimental results revealed that only 5% of the faults injected into the register file of an out-of-order processor cause system failures, such as segmentation faults, infinite loops, or incorrect outputs, across several benchmarks drawn primarily from the MiBench benchmark suite [15]. Thus, we need to analyze system-level masking effects to understand why 95% of the injected faults do not cause system failures. First, our system-level analysis framework traces microarchitectural behaviors, such as reads, writes, and instruction commitments, to determine whether committed instructions read corrupted data. If they do not (e.g., the corrupted data is overwritten or is never used by program execution), the faults are not vulnerable and do not affect the program. Our framework shows that, on average over the benchmarks, 91% of injected faults are masked because committed instructions do not read them.

If program execution reads corrupted data on the register file, we need to analyze the masking effects at the software level, such as assembly/instruction level. Interestingly, our experimental results show that almost 40% of the injected faults do not cause any system failures, even though committed instructions read them. Therefore, our framework compared outputs and execution traces (e.g., instructions, register data, and memory data) between the original execution and corrupted execution with a fault injection campaign to analyze the masking effects at the software level. If their program outputs are identical, execution traces are evaluated to investigate the masking effects at the software level. Furthermore, we traced the propagation of soft errors to other registers and memory components, and our framework analyzes the masking effects from propagated errors.

Our experimental results on several benchmarks reveal several interesting findings from the software-level masking effect analysis. First, more than half of the software-level masking effects come from dynamically dead instructions, whose results are never used and do not affect system behavior. Second, when injected faults were masked at the software level, approximately 17% and 12% of soft errors induced corrupted operands in comparison and logical instructions, respectively, yet the results of these instructions were identical to those of the original execution without soft errors. Finally, in approximately 16% of software-level masking cases the program takes an incorrect branch but still produces the correct output, with a slightly affected execution time (at most 2%).

The remainder of this paper is structured as follows. In Section 2, we introduce related research on masking effects and reliability quantification at various layers. Next, the theoretical background of system-level masking effects is described in Section 3, and we explain our masking effect analysis framework in Section 4. Then, our experimental observations based on our framework are described in Section 5. Finally, Section 6 concludes this paper and indicates directions for future research.

3. Classification When Soft Errors Occur

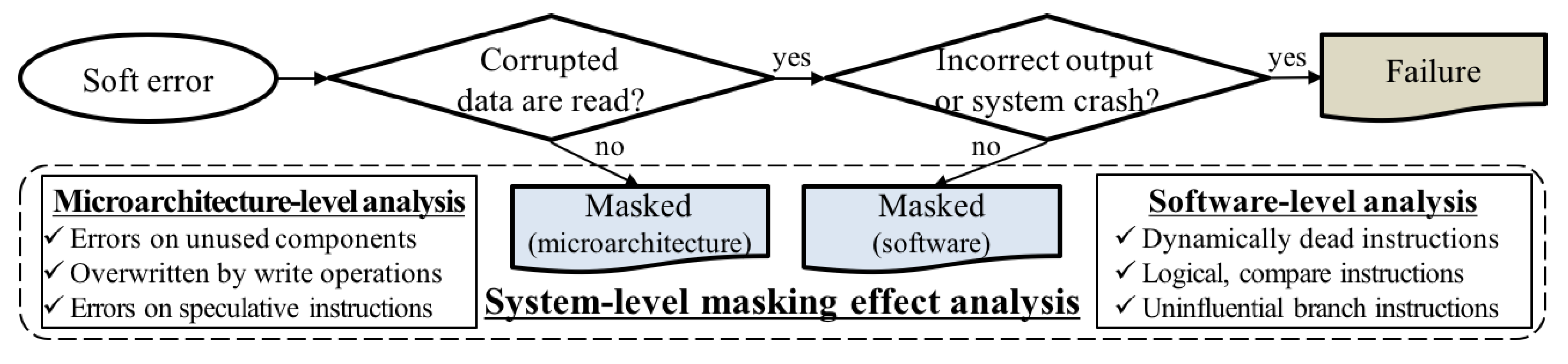

Figure 2 shows the classification model when a soft error corrupts the data in the microarchitectural components. Existing fault injection schemes [13,14] only consider whether soft errors induce failures. In contrast, our framework analyzes system-level masking effects at both the microarchitecture level and the software level because not all faults in microarchitectural components cause system failures. The microarchitecture-level analysis determines whether corrupted data is used or read for program execution. The software-level analysis traces software-level behaviors to analyze masking effects when corrupted data is read but the program still produces the correct output.

As shown in Figure 2, corrupted data in microarchitectural components can be masked at the microarchitectural level if committed instructions do not read corrupted data. Furthermore, soft errors do not affect the program execution when corrupted data is not used, overwritten, or used by mispredicted instructions (i.e., uncommitted/squashed instructions) in the out-of-order execution. These masking effects can be analyzed by tracing microarchitectural behaviors, such as reads and writes of each microarchitectural component and instruction commitment.
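The microarchitecture-level decision described above can be sketched as a scan over the accesses to the faulty register. This is a minimal illustration, not the framework's implementation: the `RegEvent` record, its field names, and the rule that any write after the fault counts as an overwrite are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class RegEvent:
    """One access to the faulty register (hypothetical trace record)."""
    cycle: int       # simulation cycle of the access
    kind: str        # "read" or "write"
    committed: bool  # False for squashed (mispredicted) instructions


def masked_at_microarchitecture(events, fault_cycle):
    """Return True if no committed instruction reads the corrupted value."""
    for ev in sorted(events, key=lambda e: e.cycle):
        if ev.cycle < fault_cycle:
            continue  # access happened before the fault was injected
        if ev.kind == "read" and ev.committed:
            return False  # corrupted data is consumed by a committed instruction
        if ev.kind == "write":
            return True  # corrupted data is overwritten before any committed read
    return True  # the register is never read again by a committed instruction
```

A read by a squashed instruction is skipped rather than treated as a use, which mirrors the statement that mispredicted instructions do not affect program execution.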

If committed instructions read corrupted data, it is necessary to check whether they cause a system crash. If the system halts (i.e., segmentation faults or system crashes) or program loops forever (i.e., infinite loop), we define these instances as system failures. If the program returns output without system failures, we must compare the output with the original one. If their outputs are different, they are also defined as failures (i.e., silent data corruption). Even though committed instructions read corrupted data, they can still produce the correct output because of several software-level masking effects.

First, soft errors do not induce failures if the corrupted data is read only by dynamically dead instructions. A dynamically dead instruction is an instruction whose result is never used and does not affect system behavior [32]. Statically dead instructions can be excluded from the assembly code at compile time, but dynamically dead instructions cannot be determined before execution. For instance, instructions in a loop can be executed many times, and only some of those dynamic instances may be dead, depending on the context. Thus, we need to trace whether other instructions use the corrupted results of instructions by analyzing the data flow of a program.

In Figure 3, instruction A (a STORE to address 0x100) writes the data contained in its source register to memory address 0x100. If a soft error changes the data in that register from 0x10 to 0x11, memory 0x100 will also contain the corrupted data (0x11). However, the corrupted register is overwritten by instruction B (a MOV into the same register), and the corrupted memory location is overwritten by instruction C (another STORE to 0x100) before it is read or used for program execution. Thus, a soft error that corrupts the source register of instruction A does not affect the program, because the result of instruction A is never used (it is overwritten).
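The store-overwrite pattern in this example can be checked mechanically on a dynamic trace. The sketch below is illustrative only and makes several assumptions: each trace entry is a hypothetical `(name, reads, writes)` tuple, the register names `r1` and `r2` are placeholders, and only first-order deadness is detected (a result that is overwritten before any later instruction reads it). Results that are still live at the end of the trace are conservatively treated as used.

```python
def dynamically_dead(trace):
    """Return names of instructions whose results are overwritten before being read.

    trace: list of (name, reads, writes), where reads and writes are sets of
    location labels such as "r1" or "mem[0x100]" (hypothetical format).
    """
    dead = []
    for i, (name, _reads, writes) in enumerate(trace):
        pending = set(writes)  # results not yet overwritten
        if not pending:
            continue
        consumed = False
        for _later, reads, later_writes in trace[i + 1:]:
            if pending & set(reads):
                consumed = True  # a later instruction uses the result
                break
            pending -= set(later_writes)
            if not pending:
                break  # every result was overwritten without being read
        if not consumed and not pending:
            dead.append(name)
    return dead


# The three-instruction pattern of the example, with placeholder register names.
EXAMPLE_TRACE = [
    ("A", {"r1"}, {"mem[0x100]"}),  # STORE r1 -> 0x100
    ("B", {"r2"}, {"r1"}),          # MOV r1 <- r2
    ("C", {"r1"}, {"mem[0x100]"}),  # STORE r1 -> 0x100
]
```

On this trace only instruction A is reported, since its stored value is replaced by instruction C before any read.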

Second, instructions can return correct results even when they read corrupted data. These software-level masking effects can occur in logical instructions (such as logical AND and OR instructions) and compare instructions. If one of the source bits of a logical AND instruction is zero, the result bit is always zero, regardless of the other source bit. Similarly, a logical OR instruction always returns one if either source bit is one. The result of a compare instruction can also be correct even if one of the source registers is corrupted. Assume that an instruction compares the data in two registers that hold different values. Even if the data in one register is corrupted by a soft error, the values in the two registers can still be different. Therefore, if a source register of an instruction is corrupted, we need to trace whether the result of the instruction differs from that of the original execution.

We captured and simplified a masking effect at a logical AND instruction from our preliminary experiments. In Figure 4a, a logical AND instruction stores the result of the logical AND of its two source registers into its destination register. The two source registers hold 0x00 and 0x01, respectively. Assume that the data in the first source register is corrupted and changes from 0x00 to 0x10. The fault is not propagated to the destination register because the result of the corrupted AND instruction (0x10 AND 0x01) and that of the original one (0x00 AND 0x01) are identical (0x00). As noted above, a zero source bit forces the AND result bit to zero regardless of the other source bit, and a one source bit forces the OR result bit to one.

The next software-level masking effect occurs at compare instructions, as shown in Figure 4b. Although a compare instruction reads corrupted data, its result can still be the same. We found a set of instructions that shows this masking effect in our preliminary experiments. In this example, a compare instruction (CMP against the immediate 0x00) compares the data in its source register with zero. Assume that the register originally contains 0x01 and that a soft error changes it to 0x11. In the original execution, the register data (0x01) is greater than zero (0x00). Even though the soft error changes the register data from 0x01 to 0x11, the data (0x11) is still greater than zero (0x00). Thus, the result of the compare instruction (the flag bits) is the same as in the original execution, and the soft error does not affect the application at all.
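The AND and compare examples can be reproduced with a few lines of arithmetic. The sketch below is an illustration under stated assumptions: `cmp_flags` is a simplified stand-in for the processor's condition flags (it records only equality and unsigned greater-than), and `result_is_masked` is a hypothetical helper name, not part of the framework.

```python
import operator


def cmp_flags(a, b):
    """Simplified compare outcome: (a == b, a > b). Real flag bits are richer."""
    return (a == b, a > b)


OPS = {"AND": operator.and_, "OR": operator.or_, "CMP": cmp_flags}


def result_is_masked(op, original, corrupted, other):
    """True if the instruction result is unchanged by the corrupted operand."""
    f = OPS[op]
    return f(original, other) == f(corrupted, other)


# AND example: a source changes from 0x00 to 0x10 while the other source is 0x01.
and_masked = result_is_masked("AND", 0x00, 0x10, 0x01)
# CMP example: the register changes from 0x01 to 0x11 and is compared with 0x00.
cmp_masked = result_is_masked("CMP", 0x01, 0x11, 0x00)
```

Both calls evaluate to `True`, matching the two examples; an OR whose corrupted source drops from 0x10 to 0x00 with the other source at 0x01 would not be masked, because 0x11 differs from 0x01.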

Lastly, incorrectly taken branches due to soft errors can still result in the correct output [33]. Wang et al. reported that approximately 40% of dynamic branches are outcome tolerant, which means that a program can return the correct outputs even though it may require additional instructions due to the incorrect branch. The execution time can be slightly increased or decreased compared to the original execution because control flow violations execute different instructions. Thus, we need to consider two factors from the control flow of a program for software-level masking effects: (i) whether the control flow is correct compared to the original execution, and (ii) if an incorrect branch is taken, whether it affects the final program outputs.

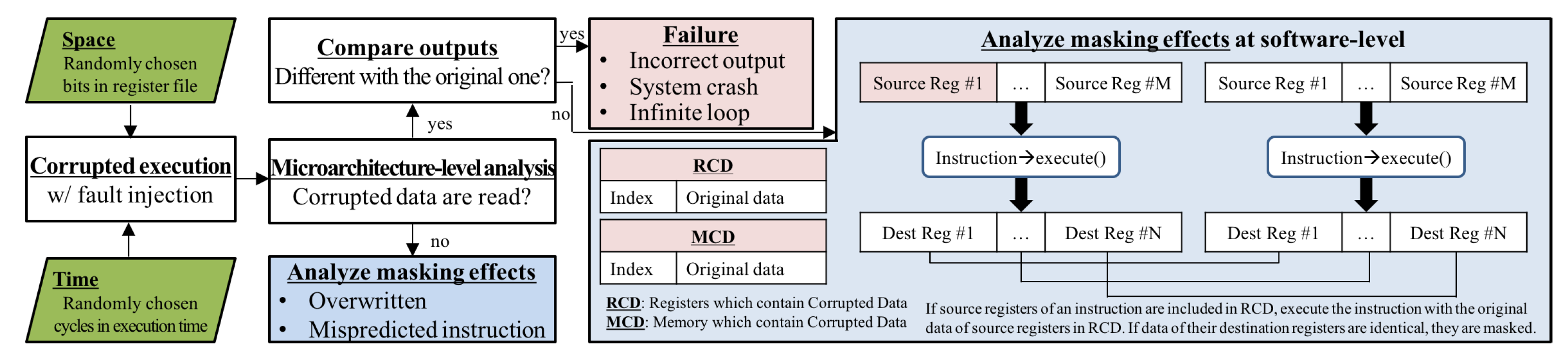

4. Proposed Masking Effects Analysis Framework

4.1. Fault and Failure Model

To characterize system-level masking effects and analyze their distributions, we implemented an automated framework based on fault injection campaigns, as shown in Figure 5. We performed fault injection campaigns to mimic soft errors in the register file on the ARM architecture using the gem5 [10] system-call emulation mode. In this work, we used a single-bit flip model rather than multiple-bit soft errors for simplicity. Although the rate of multiple-bit transient faults is increasing with aggressive technology scaling, it is still almost negligible compared with that of single-bit errors. For example, single-bit flips are 100× more frequent than multiple-bit soft errors [34].

Note that we used the system-level gem5 simulator because it lets us track the detailed behavior of microarchitectural components and software instructions, and therefore the propagation of transient faults from both hardware and software perspectives. Capturing such detailed hardware and software behavior on real hardware is challenging. The spatial and temporal locations of each injected fault are chosen randomly from the entire physical integer register file and the entire execution time, respectively.
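The random choice of fault location and the single-bit flip model can be illustrated as follows. This is a sketch under assumptions: the function names and parameters are hypothetical, uniform sampling is assumed, and the sizes used in the usage note are placeholders rather than values from this work.

```python
import random


def sample_fault(num_regs, bits_per_reg, total_cycles, rng):
    """Pick a (register, bit, cycle) fault site uniformly at random."""
    reg = rng.randrange(num_regs)        # spatial domain: which physical register
    bit = rng.randrange(bits_per_reg)    # spatial domain: which bit of that register
    cycle = rng.randrange(total_cycles)  # temporal domain: when the flip happens
    return reg, bit, cycle


def flip_bit(value, bit):
    """Single-bit flip model: invert exactly one bit of the stored value."""
    return value ^ (1 << bit)
```

For instance, `sample_fault(128, 32, 1_000_000, random.Random(1))` draws one site for a hypothetical 128-entry, 32-bit register file, and `flip_bit(0x00, 4)` yields 0x10, the kind of single-bit corruption used in the AND example.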

After the randomly selected fault is injected into the register file, our framework traces the microarchitectural behaviors of the microarchitectural components. If committed instructions do not read the corrupted data, the fault does not affect the program because the data is not used for program execution, as shown in Figure 2. If committed instructions read the corrupted data, we need to trace whether a system crash occurs. If a system crash occurs (e.g., a segmentation fault or a page table fault), the run is defined as a failure (system crash). The run is also defined as a failure if the execution time of the fault-injected execution is more than double the original execution time (i.e., an infinite loop). If the program returns its output without a system crash, our framework compares the output with the original one. If the outputs are different, the run is also defined as a failure (silent data corruption). If the output is identical to the original one and is produced within the deadline, we need to analyze the masking effects at the software level.
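The decision sequence of this failure model can be summarized in a short classifier. The sketch below is illustrative: the `Outcome` names and the `classify` signature are assumptions for this example, while the order of checks and the 2× execution-time threshold follow the description above.

```python
from enum import Enum


class Outcome(Enum):
    MASKED_MICROARCH = "masked: corrupted data never read by committed instructions"
    CRASH = "failure: system crash"
    TIMEOUT = "failure: infinite loop"
    SDC = "failure: silent data corruption"
    MASKED_SOFTWARE = "masked: needs software-level analysis"


def classify(read_by_committed, crashed, exec_time, golden_time, output, golden_output):
    """Classify one fault-injected run against the fault-free (golden) run."""
    if not read_by_committed:
        return Outcome.MASKED_MICROARCH
    if crashed:
        return Outcome.CRASH
    if exec_time > 2 * golden_time:  # more than double the original execution time
        return Outcome.TIMEOUT
    if output != golden_output:
        return Outcome.SDC
    return Outcome.MASKED_SOFTWARE
```

Only runs that reach `MASKED_SOFTWARE` are passed on to the software-level analysis.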

4.2. Error Propagation Model

Two data structures, RCD (registers that contain corrupted data) and MCD (memory that contains corrupted data), are used to analyze masking effects at the software level, as shown in Figure 5. We trace register data with RCD and memory data with MCD because corrupted data in a register can be propagated to memory. RCD and MCD store the indexes of the corrupted locations (register number for RCD, memory address for MCD) together with their original data. Each entry is written as (index, data), where data is the original, uncorrupted value. The following error propagation model updates the RCD and MCD during corrupted execution.

We modeled error propagation according to instruction type. First, we analyzed the error propagation of non-memory instructions, such as data movement between registers (MOV) and ALU instructions. If a non-memory instruction reads corrupted data, the error can be propagated to the destination register. Assume an addition instruction (ADD) whose two source registers contain 0x01 and 0x02, respectively. Without soft errors, the destination register should become 0x03 as a result of the addition. If a soft error changes the first source register from 0x01 to 0x00, the entry (first source register, 0x01) is stored in RCD. After the ADD instruction executes, the destination register holds 0x02 instead of 0x03 because of error propagation. In this example, RCD is updated to [(first source register, 0x01), (ADD destination register, 0x03)].

We also modeled the error propagation of memory instructions, such as store and load. Consider a store instruction (STORE) that writes the first source register of the previous example, which originally contained 0x01, to memory address 0x100. Memory address 0x100 should store 0x01 if there are no soft errors. Because RCD contains an entry for this register, the register holds corrupted data. After the store instruction executes, memory 0x100 also holds the corrupted data (0x00), and RCD and MCD store [(first source register, 0x01), (ADD destination register, 0x03)] and [(0x100, 0x01)], respectively. Thus, the MCD is updated by store instructions when they read corrupted data.

If a load instruction reads corrupted memory data, the error can be propagated to its destination register. A load instruction (LOAD) from memory 0x100 sets its destination register to 0x01 without soft errors. However, memory 0x100 holds corrupted data (0x00), as recorded by the MCD entry (0x100, 0x01). The load destination register therefore becomes 0x00 because of the corrupted data in memory 0x100. After the load instruction executes, RCD and MCD become [(first source register, 0x01), (load destination register, 0x01), (ADD destination register, 0x03)] and [(0x100, 0x01)], respectively. After these three instructions, the error in the first source register has propagated to two other registers (the ADD and load destination registers) and to memory (0x100).
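The ADD, STORE, and LOAD walk-through above can be replayed with two dictionaries standing in for RCD and MCD. This is a minimal sketch, not the framework's code: the tuple instruction format, the helper names, and the register names `r1` to `r4` are hypothetical, and RCD/MCD are modeled as maps from index to original value.

```python
def golden(table, state, index):
    """Original (fault-free) value: from RCD/MCD if the location is corrupted."""
    return table.get(index, state[index])


def mark(table, index, tainted, original):
    """Record a corrupted location, or clear it when clean data overwrites it."""
    if tainted:
        table[index] = original
    else:
        table.pop(index, None)


def propagate(instr, regs, mem, rcd, mcd):
    """Execute one instruction on corrupted state and update RCD/MCD."""
    op = instr[0]
    if op == "ADD":                      # ("ADD", dst, src1, src2)
        _, dst, a, b = instr
        tainted = a in rcd or b in rcd
        original = golden(rcd, regs, a) + golden(rcd, regs, b)
        regs[dst] = regs[a] + regs[b]
        mark(rcd, dst, tainted, original)
    elif op == "STORE":                  # ("STORE", src, addr)
        _, src, addr = instr
        tainted = src in rcd
        original = golden(rcd, regs, src)
        mem[addr] = regs[src]
        mark(mcd, addr, tainted, original)
    elif op == "LOAD":                   # ("LOAD", dst, addr)
        _, dst, addr = instr
        tainted = addr in mcd
        original = golden(mcd, mem, addr)
        regs[dst] = mem[addr]
        mark(rcd, dst, tainted, original)
    else:
        raise ValueError(f"unsupported instruction: {op}")


def run_example():
    """Replay the walk-through: r1 is flipped from 0x01 to 0x00 before the ADD."""
    regs = {"r1": 0x00, "r2": 0x02, "r3": 0x00, "r4": 0x00}
    mem, rcd, mcd = {}, {"r1": 0x01}, {}
    for instr in [("ADD", "r3", "r1", "r2"), ("STORE", "r1", 0x100), ("LOAD", "r4", 0x100)]:
        propagate(instr, regs, mem, rcd, mcd)
    return regs, mem, rcd, mcd
```

After `run_example()`, the RCD holds the original values 0x01, 0x03, and 0x01 for the three corrupted registers and the MCD holds 0x01 for address 0x100, matching the final state of the walk-through.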

To analyze the masking effects at the software level, if an instruction has source registers that are included in the RCD (shaded in Figure 5), we execute the instruction again with the original data and compare the two results. For instance, a logical OR instruction stores the result of the logical OR of its two source registers into its destination register. Assume that the data in the first source register is corrupted and changed from the original data 0x10 to 0x11, so that RCD stores [(first source register, 0x10)]. The other source register stores 0x01. Our error propagation model considers that the error in the first source register can be propagated to the destination register because the logical OR instruction (a non-memory instruction) reads the corrupted data.

Because a source register of the instruction is included in the RCD, we execute the instruction again with the original data from the RCD. In the corrupted execution, the destination register becomes 0x11 (0x11 OR 0x01) because the two source registers store 0x11 and 0x01, respectively. Our framework then restores the first source register to 0x10 by loading the original data from the RCD entry (first source register, 0x10), and the destination register becomes 0x11 (0x10 OR 0x01) for the original execution. Our framework compares the results of the two versions (corrupted and original) of the execution. In this example, the results are exactly the same (0x11). Thus, the destination register of the instruction is not added to the RCD, and our software-level analysis determines that the fault is masked by the logical OR instruction even though the instruction reads corrupted data from its source register. Note that logical instructions do not always mask errors. Assume instead that the data in the first source register was changed from 0x10 to 0x00. In this case, the destination register becomes 0x01 (0x00 OR 0x01) in the corrupted execution. Because the result of the corrupted execution (0x01) is different from that of the original execution (0x11), our framework updates RCD to [(first source register, 0x10), (destination register, 0x11)].
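The re-execution check for the OR example can be sketched as below. This is an illustration under assumptions: `reexecute`, the `ALU` table, and the register names `r1` to `r3` are hypothetical, and RCD is modeled as a map from register name to original value.

```python
import operator

ALU = {"OR": operator.or_, "AND": operator.and_, "ADD": operator.add}


def reexecute(op, dst, srcs, regs, rcd):
    """Run a two-operand instruction on corrupted and original sources.

    Returns True if the results match (the error is masked at this instruction).
    Otherwise the destination is recorded in RCD with its original result.
    """
    f = ALU[op]
    corrupted = f(*(regs[s] for s in srcs))
    original = f(*(rcd.get(s, regs[s]) for s in srcs))  # sources restored from RCD
    regs[dst] = corrupted
    if corrupted != original:
        rcd[dst] = original
        return False
    rcd.pop(dst, None)  # destination holds a correct value
    return True


# Masked case: r1 corrupted from 0x10 to 0x11, r2 holds 0x01.
regs_a = {"r1": 0x11, "r2": 0x01, "r3": 0x00}
rcd_a = {"r1": 0x10}
masked_a = reexecute("OR", "r3", ("r1", "r2"), regs_a, rcd_a)

# Unmasked case: r1 corrupted from 0x10 to 0x00.
regs_b = {"r1": 0x00, "r2": 0x01, "r3": 0x00}
rcd_b = {"r1": 0x10}
masked_b = reexecute("OR", "r3", ("r1", "r2"), regs_b, rcd_b)
```

In the first case the RCD is left with only the source entry; in the second it gains an entry for the destination with original value 0x11, as in the text.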

Further, our data structure can also determine whether corrupted data is read only by dynamically dead instructions. In the previous example, RCD holds [(first source register, 0x10), (destination register, 0x11)], which means that both registers contain incorrect values compared to the original execution. Assume that the next instruction to access the OR destination register is a data transfer instruction (MOV) that writes it from an uncorrupted register. In this example, the corrupted value in the destination register is overwritten because the MOV source does not contain corrupted data. This means that the error is masked by a dynamically dead instruction, because the result of the previous OR instruction is overwritten by another instruction before being used.

6. Conclusions

Embedded systems suffer from soft errors owing to their tiny feature size and aggressive dynamic voltage and frequency scaling. Several techniques have been proposed to protect embedded processors against soft errors. However, protection techniques against soft errors are not always practical or robust. Thus, we need to quantify the reliability of the system in finding and choosing appropriate protections. Because exhaustive fault injection campaigns and radiation beam testing are too expensive to perform, vulnerability estimation techniques based on cycle-accurate simulators have been proposed. However, vulnerability modeling can be inaccurate owing to several system-level masking effects. Thus, we have characterized the system-level masking effect and have implemented an automated framework to analyze their distribution by exhaustive fault injection campaigns on the register file. In addition, we have examined the microarchitectural behaviors of the register file and found that almost 93% of errors are masked because corrupted data is not read. To analyze software-level masking effects when corrupted data is read by program execution, we also investigated the masking effects at the software level. Based on the software-level analysis, we found that 80% of instances do not change the program at all because faults are injected into dynamically dead, compare, and logical instructions. Approximately 20% of software-level masking effects take the incorrect branch but still produce the correct output by varying the execution time slightly.

In future work, we will perform the masking analysis on real hardware devices by exploiting beam testing. Even though the soft error rate is increasing exponentially, a soft error is still a rare event. For example, even accelerated testing with high-energy neutrons needs more than 500 h to represent 57,000 years of normal execution [35]. Further, even when radiation-induced faults are injected into hardware devices, it is hard to analyze masking effects because we cannot determine the type of each soft error, such as the number of affected bits and the locality of the errors. Our future work will also include system-level masking effect analysis for all microarchitectural components in a processor. Thus, our framework will support comprehensive masking analysis based on various platforms.