A Hardware Platform for Ensuring OS Kernel Integrity on RISC-V †

Abstract

1. Introduction

2. Background

3. Threat Model

4. Design and Implementation

4.1. Design Principles

- P1. Compatible with existing architecture and software: Our design should be compatible with the existing architecture and the software running on it. Otherwise, porting it to that architecture and software would require a tremendous amount of effort.

- P2. Comprehensive integrity verification mechanism: As described in Section 3, we assume that attackers can attempt to compromise the integrity of the kernel in various ways. Therefore, for a complete integrity verification mechanism, RiskiM has to perform comprehensive checks on various kernel events to detect all of these attacks.

- P3. Non-bypassable monitoring mechanism: In our approach, RiskiM verifies the kernel integrity based on the information delivered by PEMI. Thus, if the extracted information could be forged, an attacker could bypass RiskiM and successfully carry out the attack. Therefore, our approach should provide a non-bypassable mechanism for monitoring kernel behavior.

- P4. Low hardware and performance overhead: To make our approach applicable even on resource-constrained systems, we designed PEMI and RiskiM to minimize the performance and hardware overhead they incur.

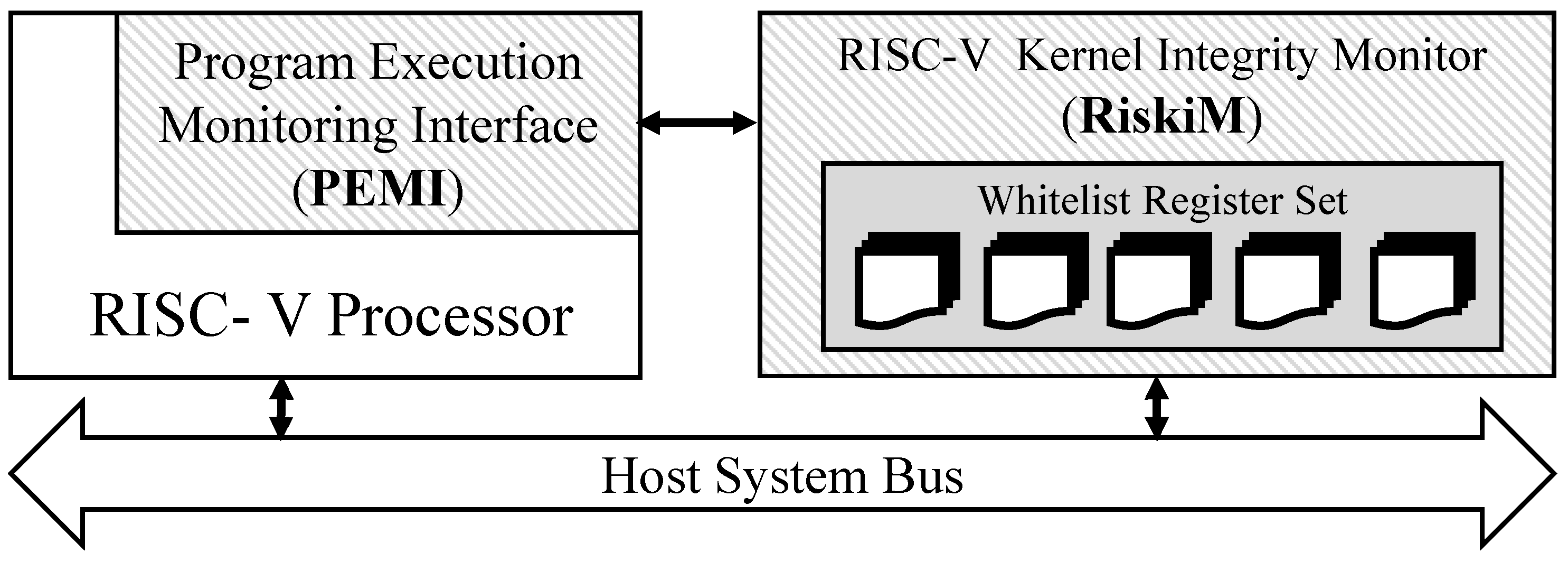

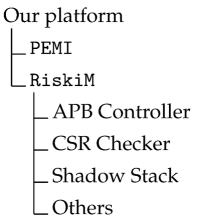

4.2. Architecture Overview

4.3. Program Execution Monitor Interface

- Memory write dataset = {Instruction address, Data address, Data value}

- CSR update dataset = {CSR number, CSR update type, CSR data value}

- Control transfer dataset = {Instruction address, Target address, Transfer type}
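The three dataset types listed above can be modeled as plain records. The following is only a minimal sketch to make the field layout concrete; the class names, the Python types, and the string encoding of the CSR update type are assumptions for illustration and do not describe the actual PEMI signal format.

```python
from dataclasses import dataclass
from enum import Enum


class TransferType(Enum):
    """Transfer types named in the text; the enum itself is illustrative."""
    CALL = "CALL"
    RET = "RET"
    BRANCH = "BRANCH"


@dataclass(frozen=True)
class MemoryWrite:
    """Memory write dataset = {Instruction address, Data address, Data value}."""
    instruction_address: int
    data_address: int
    data_value: int


@dataclass(frozen=True)
class CsrUpdate:
    """CSR update dataset = {CSR number, CSR update type, CSR data value}."""
    csr_number: int
    update_type: str  # assumed encoding, e.g. "write", "set", "clear"
    data_value: int


@dataclass(frozen=True)
class ControlTransfer:
    """Control transfer dataset = {Instruction address, Target address, Transfer type}."""
    instruction_address: int
    target_address: int
    transfer_type: TransferType


# Example records with made-up addresses; 0x100 is the sstatus CSR number.
example_write = MemoryWrite(0x8000_1000, 0x8020_0000, 0x1)
example_csr = CsrUpdate(0x100, "write", 0x0)
example_call = ControlTransfer(0x8000_1000, 0x8000_2000, TransferType.CALL)
```

Making the records immutable mirrors the idea that a dataset is a snapshot of one processor event and is not modified after extraction.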

4.4. RISC-V Kernel Integrity Monitor

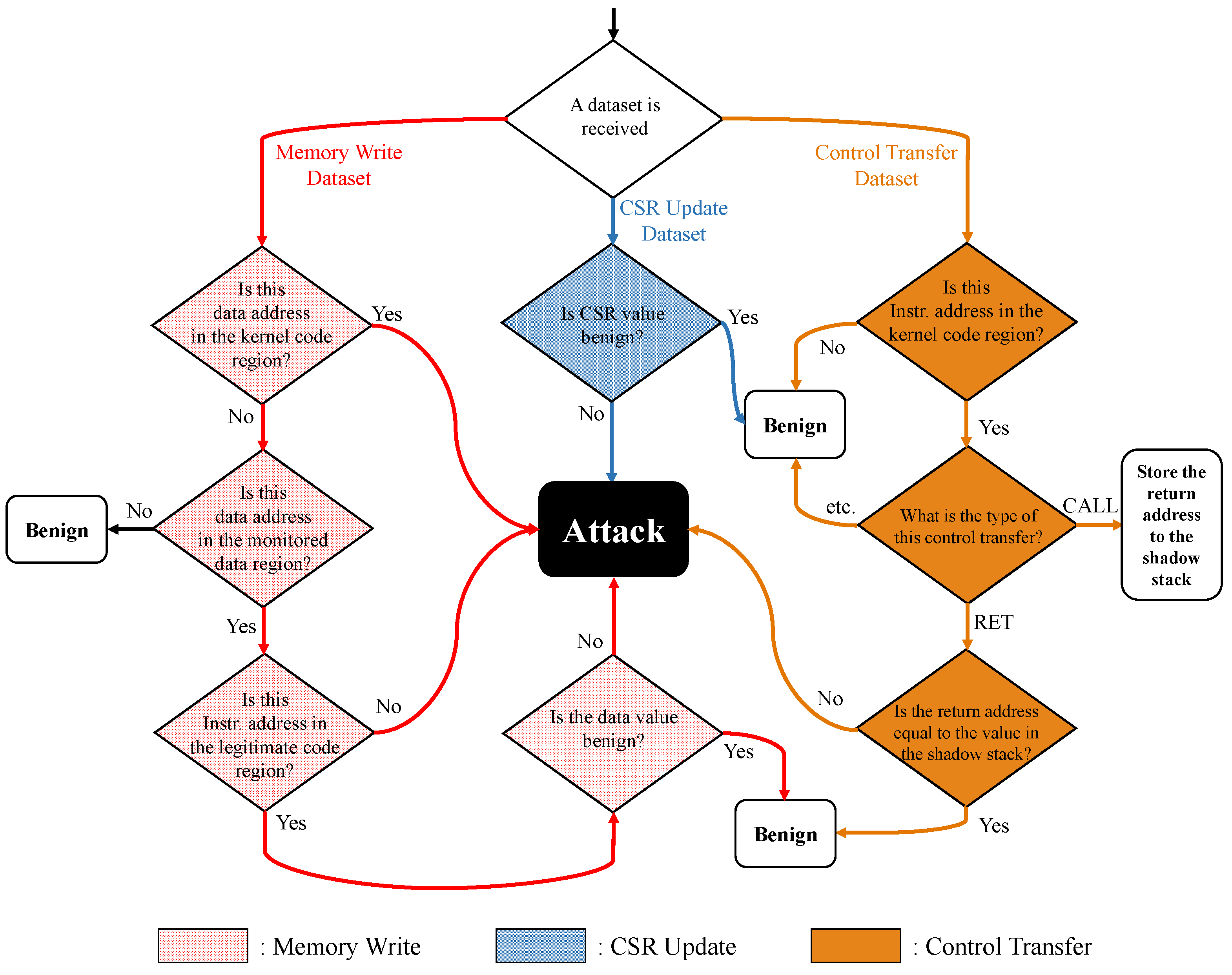

4.4.1. Kernel Integrity Verification Mechanism

1. Check for the memory write datasets. For a memory write dataset, we first check the data address. If the data address is within a kernel immutable region, such as kernel code or read-only data, we identify the write as an attack (e.g., a code manipulation attack), because kernel immutable regions should not change during normal kernel execution. This protection is also essential for preventing attacks from bypassing our monitoring mechanism (P3). For example, suppose an attacker manipulates sensitive kernel code that originally uses the monitored data so that it uses attacker-controlled data instead. In that case, the attacker could take control of the sensitive kernel code without tampering with the monitored data. Second, we check whether the data address is within the monitored data regions. If not, the dataset is not subject to verification and the check routine terminates. If so, we proceed to further integrity verification of the instruction address and the data value. Specifically, we compare the data value and the instruction address with the corresponding whitelists, which define the monitored data’s allowed values and the ranges of the legitimate code regions, respectively. If there is no matching entry in a whitelist, the write is considered an attack and RiskiM propagates the attack signal to PEMI. When the data value does not match, we judge that the monitored data has been manipulated with a malicious value. When the instruction address is not valid, the monitored data has been modified by unauthorized code, such as a vulnerable kernel driver. Note that no existing external hardware-based work verifies the instruction address, because such monitors cannot observe the data value and the instruction address synchronously. In our approach, thanks to PEMI, RiskiM can verify that the monitored data is accessed only by legitimate code.

2. Check for the CSR update datasets. As described in Section 2, CSRs play an important role in the system, for example in memory management and privilege mode control. Thus, if these registers are maliciously modified, the integrity of the OS kernel could be compromised. For example, a developer may want to verify that the sstatus register holds a valid value, because the MPRV bit in sstatus provides the ability to change the privilege level for a memory load/store, which allows an attacker to gain unauthorized memory access. In addition, it is important to ensure the integrity of sptbr, which defines the physical base address of the root page table. The verification method for sptbr is described in Section 5. Consequently, RiskiM checks whether the CSR data value is equal to the legal value of the corresponding CSR. If not, RiskiM recognizes the update as an attack and propagates the attack signal to PEMI; otherwise, the verification process terminates normally.

- 3.

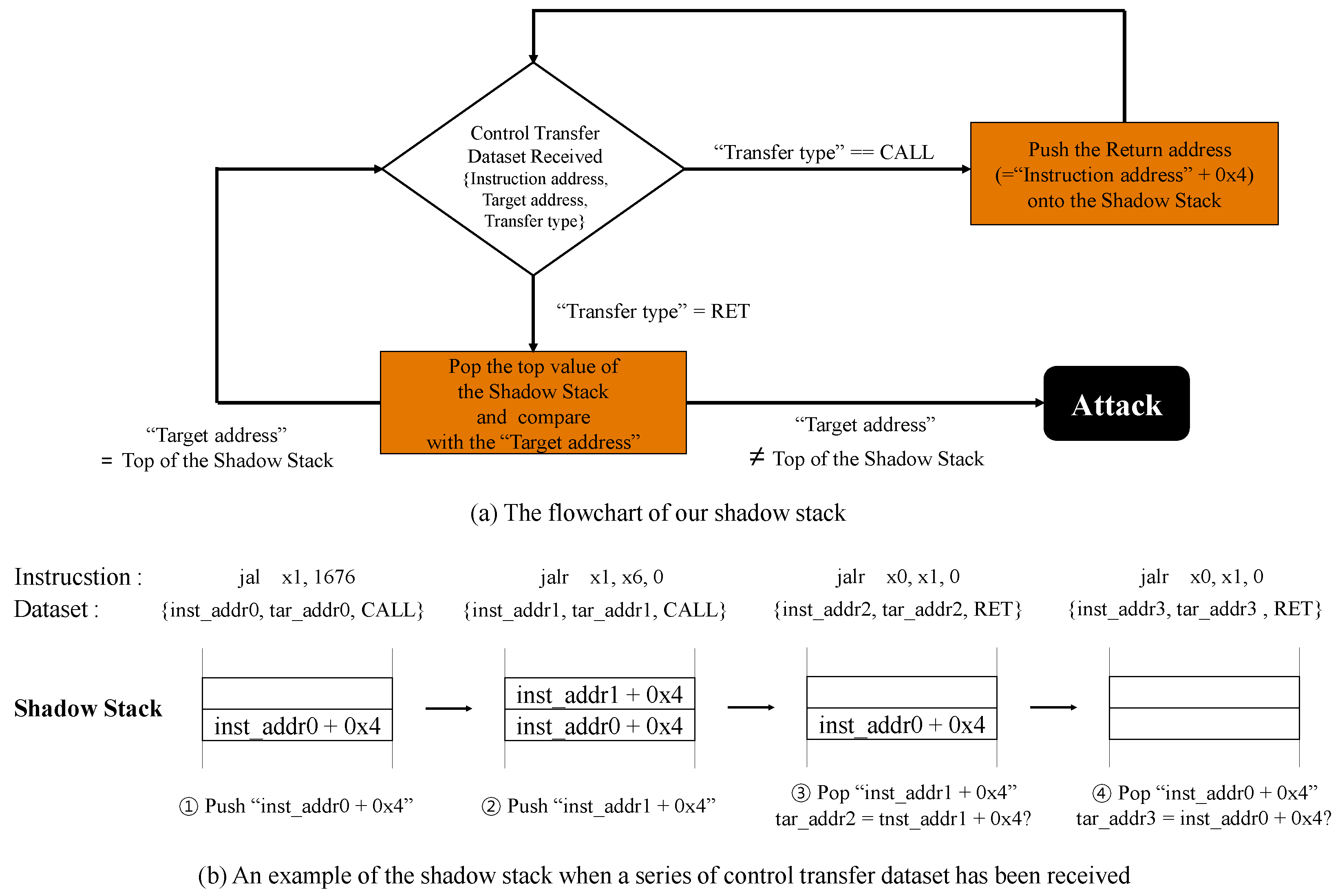

- Check for the control transfer datasets. Along with the sensitive data structures and CSRs, the control data, such as return address in the stack, should be protected. Otherwise, the attacker can take control of the kernel. For example, if an attacker can change the control flow of the kernel, she can launch a code reuse attack (e.g., return-oriented programming (ROP) attack). To prevent this, we implemented shadow stack [24], a well-known protection mechanism for attacks to return address. The shadow stack itself refers to a separate stack that is designed to store return addresses only. At the function prologue, the return address is stored in both call stack and shadow stack. Then, at the function epilogue, two return addresses are popped from both stacks and compared to detect whether the return address in the call stack is tampered by an attacker or not. RiskiM implements the shadow stack using the control transfer datasets received from PEMI. First, RiskiM identifies whether the dataset represents the behavior of the OS kernel. In other words, RiskiM checks whether the instruction address in the dataset is in the kernel code region. If yes, RiskiM performs the operations for the shadow stack (Figure 4a). If the transfer type is CALL type, the return address (i.e., Instruction address + 4) is stored in the shadow stack. In the current implementation, the shadow stack in RiskiM can hold one thousand entries. Then, if the transfer type is RET type, the latest return address stored in the shadow stack is popped and compared with the target address. Thus when the two addresses do not match, RiskiM signals PEMI to notify the occurrence of the attack. Figure 4b shows the change of the shadow stack when RiskiM receives a series of control transfer datasets (① CALL- ② CALL- ③ RET- ④ RET). Note that the control transfer dataset includes information about branches other than call and return. 
Therefore, RiskiM can be configured to defend against attacks that use indirect branch (e.g., jump-oriented programming).
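The shadow stack behavior described for the control transfer datasets can be sketched in software as follows. This is an illustrative model only, not the RiskiM hardware logic: the kernel code range is a made-up value, and the handling of a full stack or of a RET on an empty stack is an assumption, since the text does not describe those cases.

```python
from typing import List, Tuple

KERNEL_CODE: Tuple[int, int] = (0x8000_0000, 0x8010_0000)  # hypothetical range
SHADOW_STACK_DEPTH = 1000  # the text states one thousand entries


class ShadowStack:
    """Software model of the CALL/RET handling described in the text."""

    def __init__(self, kernel_code: Tuple[int, int] = KERNEL_CODE,
                 depth: int = SHADOW_STACK_DEPTH) -> None:
        self.kernel_code = kernel_code
        self.depth = depth
        self.entries: List[int] = []

    def observe(self, instruction_address: int, target_address: int,
                transfer_type: str) -> bool:
        """Process one control transfer dataset; return True on an attack signal."""
        low, high = self.kernel_code
        if not (low <= instruction_address < high):
            return False  # not kernel behavior, so the dataset is ignored
        if transfer_type == "CALL":
            if len(self.entries) >= self.depth:
                # Assumption: overflow handling is not specified in the text.
                raise OverflowError("shadow stack is full")
            self.entries.append(instruction_address + 4)  # return address
            return False
        if transfer_type == "RET":
            if not self.entries:
                return True  # assumption: an unmatched RET is treated as an attack
            expected = self.entries.pop()
            return expected != target_address
        return False  # other branch types are not checked in this sketch


# Sequence from the text: CALL, CALL, RET, RET with matching return addresses.
stack = ShadowStack()
signals = [
    stack.observe(0x8000_0100, 0x8000_2000, "CALL"),
    stack.observe(0x8000_2010, 0x8000_3000, "CALL"),
    stack.observe(0x8000_3020, 0x8000_2014, "RET"),
    stack.observe(0x8000_2030, 0x8000_0104, "RET"),
]
```

In this model a tampered return address shows up as a RET whose target differs from the popped entry, which is the condition under which RiskiM signals PEMI.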

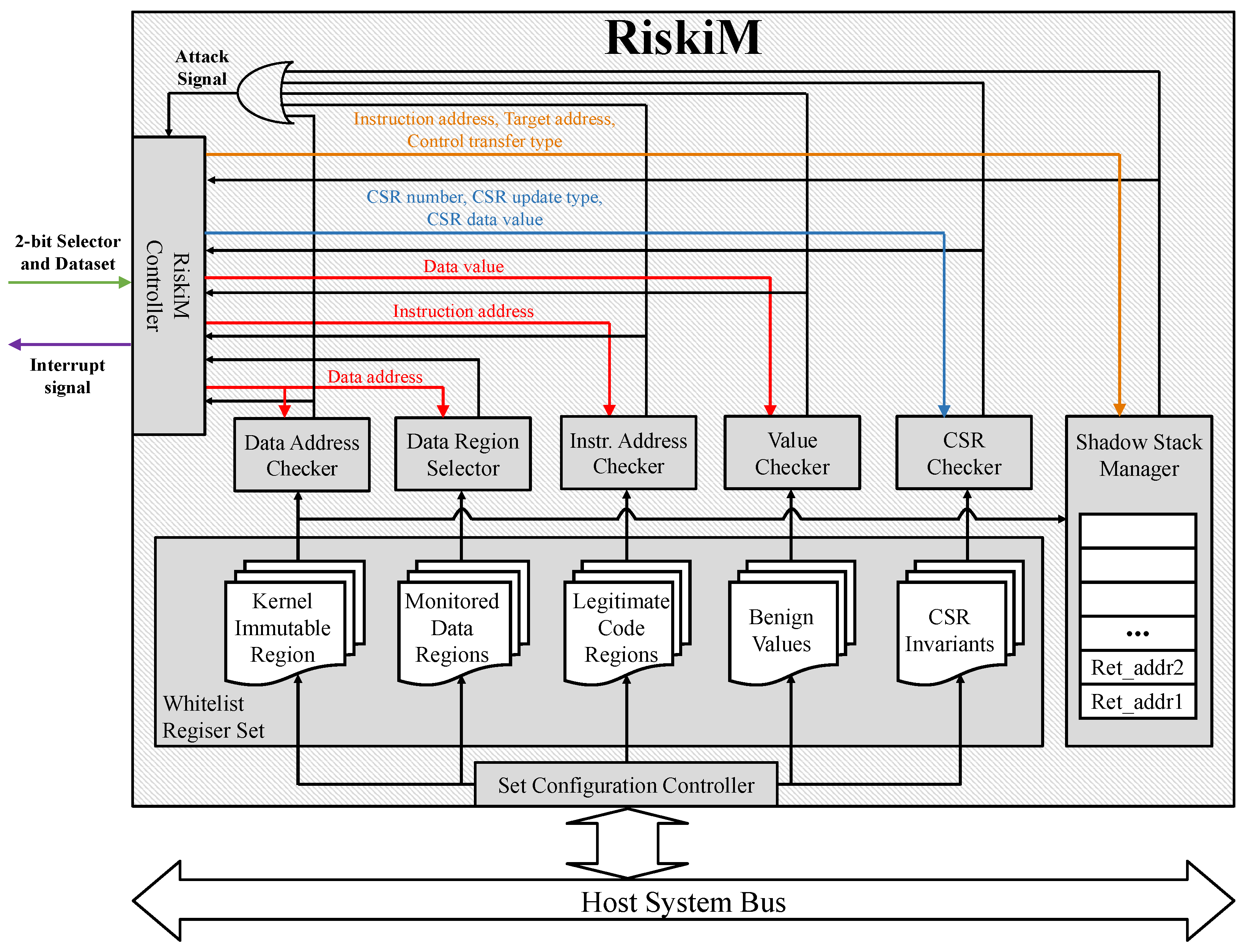
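The memory write and CSR update checks of Section 4.4.1 can be summarized in a short software sketch. It follows the order of the checks given in the text, but the region layout, the whitelist representation, and all addresses are hypothetical, and a set of legal CSR values is used here as a generalization of the single legal value the text mentions.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple

Range = Tuple[int, int]  # half-open address range [low, high)


def in_any(address: int, ranges: List[Range]) -> bool:
    return any(low <= address < high for low, high in ranges)


@dataclass
class MonitoredData:
    region: Range              # where the monitored data lives
    allowed_values: Set[int]   # whitelist of legitimate data values
    allowed_code: List[Range]  # whitelist of legitimate writer code regions


def check_memory_write(instruction_address: int, data_address: int,
                       data_value: int, immutable: List[Range],
                       monitored: List[MonitoredData]) -> str:
    # Step 1: any write into an immutable kernel region is an attack.
    if in_any(data_address, immutable):
        return "attack: write to immutable region"
    # Step 2: writes outside the monitored data regions are not verified.
    for entry in monitored:
        if in_any(data_address, [entry.region]):
            if data_value not in entry.allowed_values:
                return "attack: illegal data value"
            if not in_any(instruction_address, entry.allowed_code):
                return "attack: write from unauthorized code"
            return "ok"
    return "ok"


def check_csr_update(csr_number: int, data_value: int,
                     legal_values: Dict[int, Set[int]]) -> str:
    legal = legal_values.get(csr_number)
    if legal is None:
        return "ok"  # assumption: unconfigured CSRs are not checked
    return "ok" if data_value in legal else "attack: illegal CSR value"


# Hypothetical configuration used only to exercise the checks.
IMMUTABLE = [(0x8000_0000, 0x8010_0000)]
MONITORED = [MonitoredData((0x8020_0000, 0x8020_1000), {0x0, 0x1},
                           [(0x8000_4000, 0x8000_5000)])]
```

With this configuration, a write of an allowed value from inside the whitelisted code region passes, while the same write issued from any other instruction address is reported.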

4.4.2. RiskiM Hardware Components

4.5. Security Analysis

5. Evaluation

5.1. Security Case Study

5.1.1. Data Attacks to Kernel Page Table

- Manipulation by a malicious data value. In this attack, we modified a page table entry to a malicious value using valid kernel code. Specifically, we tampered with a page table entry so that the corresponding memory page has read + write + execute (RWX) permission. RiskiM successfully detected this attack by confirming that the value is not legitimate under the W⊕X policy. To detect it, we configured RiskiM as follows. We set the monitored data region of RiskiM to the memory region containing the kernel page table, and we set the benign data values to the legitimate page table entry values, i.e., those to which the W⊕X policy is applied. We confirmed that RiskiM under this setting can detect the attack. Precisely, when the memory store instruction that modifies the page table entry is executed in the processor, the corresponding internal information of the processor is transmitted to RiskiM as a memory write dataset. RiskiM then first checks whether the data address in the dataset is included in the monitored data region, and it detects the attack by comparing the data value with the legitimate page table entry values.

- Manipulation with unintended code. We also designed an attack that modifies the page table using unintended kernel code, e.g., a buggy device driver. Note that, even if the modified value is benign, this attack can be considered a data-only attack in which an attacker modifies sensitive data through a kernel vulnerability [28]. For example, through this attack, attackers can make a payload appear to be normal kernel code by changing the page table entry of the payload page from read + write (RW) to read + execute (RX) permission. Notably, since both RW and RX permissions are legitimate under the W⊕X policy, verifying the value is not enough to detect such an attack. Thus, we configured RiskiM to monitor the memory region containing the kernel page table, and we registered the legitimate kernel APIs as the legitimate code region in RiskiM. As a result, we confirmed that RiskiM can detect this attack because the instruction address in the memory write dataset is not included in the legitimate code region.
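The two page table attacks above can be illustrated with a small sketch of the value check and the writer check. It assumes the page table entry permission bits of the ratified RISC-V privileged specification (V, R, W, X at bits 0 to 3), which may differ from the entry format of the specification version used in the evaluation; the code region and the instruction addresses are hypothetical.

```python
from typing import List, Tuple

# Assumed PTE permission bits (ratified privileged specification layout).
PTE_V = 1 << 0
PTE_R = 1 << 1
PTE_W = 1 << 2
PTE_X = 1 << 3

# Hypothetical region holding the legitimate page table update APIs.
LEGITIMATE_CODE: List[Tuple[int, int]] = [(0x8000_4000, 0x8000_5000)]


def satisfies_w_xor_x(pte: int) -> bool:
    """A page may be writable or executable, but not both."""
    return not (pte & PTE_W and pte & PTE_X)


def check_page_table_write(instruction_address: int, new_pte: int) -> str:
    # Value check: catches the RWX manipulation of the first attack.
    if not satisfies_w_xor_x(new_pte):
        return "attack: value violates W xor X"
    # Writer check: catches a benign-looking value written by unintended code.
    if not any(low <= instruction_address < high
               for low, high in LEGITIMATE_CODE):
        return "attack: write from unintended code"
    return "ok"


RWX = PTE_V | PTE_R | PTE_W | PTE_X
RX = PTE_V | PTE_R | PTE_X

first_attack = check_page_table_write(0x8000_4010, RWX)   # valid code, bad value
second_attack = check_page_table_write(0x8009_0000, RX)   # good value, bad writer
benign_update = check_page_table_write(0x8000_4010, RX)
```

The second case shows why the value whitelist alone is insufficient: the RX entry passes the W⊕X test and is rejected only because of the instruction address.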

5.1.2. Control Flow Hijacking Attacks

5.2. Hardware Area and Power Analysis

5.3. Performance Evaluation

6. Related Work

6.1. Software-Based Kernel Integrity Monitor

6.2. Internal Hardware-Based Kernel Integrity Monitor

6.3. External Hardware-Based Kernel Integrity Monitor

6.4. Architectural Supports for Security Solutions

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. CVE. Linux Kernel: Vulnerability Statistics. 2018. Available online: https://www.cvedetails.com/product/47/Linux-Kernel.html?vendor (accessed on 26 August 2021).

2. Shevchenko, A. Rootkit Evolution. 2018. Available online: http://www.securelist.com/en/analysis (accessed on 10 December 2014).

3. Song, C.; Lee, B.; Lu, K.; Harris, W.; Kim, T.; Lee, W. Enforcing Kernel Security Invariants with Data Flow Integrity. In Proceedings of the Network and Distributed System Security Symposium (NDSS), San Diego, CA, USA, 21–24 February 2016.

4. Chen, Q.; Azab, A.M.; Ganesh, G.; Ning, P. PrivWatcher: Non-bypassable Monitoring and Protection of Process Credentials from Memory Corruption Attacks. In Proceedings of the 2017 ACM on Asia Conference on Computer and Communications Security, Abu Dhabi, United Arab Emirates, 2–6 April 2017; pp. 167–178.

5. Kwon, D.; Yi, H.; Cho, Y.; Paek, Y. Safe and efficient implementation of a security system on ARM using intra-level privilege separation. ACM Trans. Priv. Secur. (TOPS) 2019, 22, 1–30.

6. Dautenhahn, N.; Kasampalis, T.; Dietz, W.; Criswell, J.; Adve, V. Nested kernel: An operating system architecture for intra-kernel privilege separation. In Proceedings of the ACM SIGPLAN Notices, Istanbul, Turkey, 14–18 March 2015; Volume 50, pp. 191–206.

7. Wang, X.; Qi, Y.; Wang, Z.; Chen, Y.; Zhou, Y. Design and Implementation of SecPod, A Framework for Virtualization-based Security Systems. IEEE Trans. Dependable Secur. Comput. 2017, 16, 44–57.

8. Vasudevan, A.; Chaki, S.; Jia, L.; McCune, J.; Newsome, J.; Datta, A. Design, implementation and verification of an extensible and modular hypervisor framework. In Proceedings of the 2013 IEEE Symposium on Security and Privacy, Berkeley, CA, USA, 19–22 May 2013; pp. 430–444.

9. Lee, H.; Moon, H.; Heo, I.; Jang, D.; Jang, J.; Kim, K.; Paek, Y.; Kang, B. KI-Mon ARM: A hardware-assisted event-triggered monitoring platform for mutable kernel object. IEEE Trans. Dependable Secur. Comput. 2017, 16, 287–300.

10. Moon, H.; Lee, J.; Hwang, D.; Jung, S.; Seo, J.; Paek, Y. Architectural supports to protect OS kernels from code-injection attacks. In Proceedings of the Hardware and Architectural Support for Security and Privacy 2016, Seoul, Korea, 18 June 2016; p. 5.

11. Koromilas, L.; Vasiliadis, G.; Athanasopoulos, E.; Ioannidis, S. GRIM: Leveraging GPUs for kernel integrity monitoring. In Lecture Notes in Computer Science, Proceedings of the International Symposium on Research in Attacks, Intrusions, and Defenses, Evry, France, 19–21 September 2016; Springer: Cham, Switzerland, 2016.

12. Kwon, D.; Oh, K.; Park, J.; Yang, S.; Cho, Y.; Kang, B.B.; Paek, Y. Hypernel: A hardware-assisted framework for kernel protection without nested paging. In Proceedings of the 55th Annual Design Automation Conference, San Francisco, CA, USA, 24–29 June 2018; p. 34.

13. AMD. AMD Secure Processor. 2021. Available online: https://www.amd.com/en/technologies/pro-security (accessed on 26 August 2021).

14. Apple. Apple Platform Security. 2021. Available online: https://support.apple.com/guide/security/welcome/web (accessed on 26 August 2021).

15. Roemer, R.; Buchanan, E.; Shacham, H.; Savage, S. Return-oriented programming: Systems, languages, and applications. ACM Trans. Inf. Syst. Secur. (TISSEC) 2012, 15, 2.

16. Hwang, D.; Yang, M.; Jeon, S.; Lee, Y.; Kwon, D.; Paek, Y. Riskim: Toward complete kernel protection with hardware support. In Proceedings of the 2019 Design, Automation & Test in Europe Conference & Exhibition (DATE), Florence, Italy, 25–29 March 2019; pp. 740–745.

17. Lee, H.; Moon, H.; Jang, D.; Kim, K.; Lee, J.; Paek, Y.; Kang, B.B. KI-Mon: A Hardware-assisted Event-triggered Monitoring Platform for Mutable Kernel Object. In Proceedings of the USENIX Security Symposium, Washington, DC, USA, 14–16 August 2013; pp. 511–526.

18. Williams, M. ARMV8 debug and trace architectures. In Proceedings of the System, Software, SoC and Silicon Debug Conference (S4D), Vienna, Austria, 19–20 September 2012; pp. 1–6.

19. Waterman, A.; Asanović, K. The RISC-V Instruction Set Manual, Volume II: Privileged Architecture, Document Version 20190608-Priv-MSU-Ratified; Technical Report; RISC-V Foundation; University of California: Berkeley, CA, USA, 2019.

20. Waterman, A.; Lee, Y.; Avizienis, R.; Patterson, D.A.; Asanović, K. The RISC-V Instruction Set Manual Volume 2: Privileged Architecture Version 1.7; Technical Report; University of California at Berkeley: Berkeley, CA, USA, 2015.

21. Suh, G.E.; Clarke, D.; Gassend, B.; Van Dijk, M.; Devadas, S. AEGIS: Architecture for tamper-evident and tamper-resistant processing. In Proceedings of the 17th Annual International Conference on Supercomputing, San Francisco, CA, USA, 23–26 June 2003; pp. 357–368.

22. UEFI Forum. Unified Extensible Firmware Interface Specification. 2021. Available online: https://uefi.org/specifications (accessed on 26 August 2021).

23. Guide, P. Intel® 64 and IA-32 Architectures Software Developer’s Manual. Syst. Program. Guide Part 2018, 3, 335592.

24. Burow, N.; Zhang, X.; Payer, M. SoK: Shining light on shadow stacks. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019; pp. 985–999.

25. Akritidis, P.; Cadar, C.; Raiciu, C.; Costa, M.; Castro, M. Preventing memory error exploits with WIT. In Proceedings of the 2008 IEEE Symposium on Security and Privacy (SP 2008), Oakland, CA, USA, 18–22 May 2008; pp. 263–277.

26. Xilinx. Zynq-7000 All Programmable SoC ZC706 Evaluation Kit. 2013. Available online: https://www.xilinx.com/products/boards-and-kits/ek-z7-zc706-g.html (accessed on 26 August 2021).

27. Wang, X.; Chen, Y.; Wang, Z.; Qi, Y.; Zhou, Y. Secpod: A framework for virtualization-based security systems. In Proceedings of the 2015 USENIX Annual Technical Conference (USENIX ATC 15), Santa Clara, CA, USA, 8–10 July 2015; pp. 347–360.

28. Davi, L.; Gens, D.; Liebchen, C.; Sadeghi, A.R. PT-Rand: Practical Mitigation of Data-only Attacks against Page Tables. In Proceedings of the Network and Distributed System Security Symposium (NDSS), San Diego, CA, USA, 26 February–1 March 2017.

29. Synopsys Inc. Synopsys Design Compiler User Guide. 2016. Available online: https://www.synopsys.com/support/training/rtl-synthesis/design-compiler-rtl-synthesis.html (accessed on 17 April 2018).

30. Moon, H.; Lee, J.; Hwang, D.; Jung, S.; Seo, J.; Paek, Y. Architectural Supports to Protect OS Kernels from Code-Injection Attacks and Their Applications. ACM Trans. Des. Autom. Electron. Syst. (TODAES) 2017, 23, 10.

31. Smith, B.; Grehan, R.; Yager, T.; Niemi, D. Byte-Unixbench: A Unix Benchmark Suite. 2021. Available online: https://code.google.com/archive/p/byte-unixbench/ (accessed on 26 August 2021).

32. Dang, T.H.; Maniatis, P.; Wagner, D. The performance cost of shadow stacks and stack canaries. In Proceedings of the 10th ACM Symposium on Information, Computer and Communications Security, Singapore, 14–17 April 2015; pp. 555–566.

33. Hofmann, O.S.; Dunn, A.M.; Kim, S.; Roy, I.; Witchel, E. Ensuring operating system kernel integrity with OSck. In Proceedings of the ACM SIGARCH Computer Architecture News, New York, NY, USA, 5–11 March 2011; Volume 39, pp. 279–290.

34. Petroni, N.L., Jr.; Hicks, M. Automated detection of persistent kernel control-flow attacks. In Proceedings of the 14th ACM Conference on Computer and Communications Security, Alexandria, VA, USA, 31 October–2 November 2007; pp. 103–115.

35. Srivastava, A.; Giffin, J. Efficient protection of kernel data structures via object partitioning. In Proceedings of the 28th Annual Computer Security Applications Conference, Orlando, FL, USA, 3–7 December 2012; pp. 429–438.

36. Azab, A.M.; Ning, P.; Shah, J.; Chen, Q.; Bhutkar, R.; Ganesh, G.; Ma, J.; Shen, W. Hypervision across worlds: Real-time kernel protection from the ARM TrustZone secure world. In Proceedings of the 2014 ACM SIGSAC Conference on Computer and Communications Security, Scottsdale, AZ, USA, 3–7 November 2014; pp. 90–102.

37. Azab, A.M.; Swidowski, K.; Bhutkar, R.; Ma, J.; Shen, W.; Wang, R.; Ning, P. SKEE: A lightweight Secure Kernel-level Execution Environment for ARM. In Proceedings of the Network and Distributed System Security Symposium (NDSS), San Diego, CA, USA, 21–24 February 2016.

38. Song, C.; Moon, H.; Alam, M.; Yun, I.; Lee, B.; Kim, T.; Lee, W.; Paek, Y. HDFI: Hardware-assisted data-flow isolation. In Proceedings of the 2016 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2016; pp. 1–17.

39. Woodruff, J.; Watson, R.N.; Chisnall, D.; Moore, S.W.; Anderson, J.; Davis, B.; Laurie, B.; Neumann, P.G.; Norton, R.; Roe, M. The CHERI capability model: Revisiting RISC in an age of risk. In Proceedings of the ACM SIGARCH Computer Architecture News, Minneapolis, MN, USA, 14–18 June 2014; Volume 42, pp. 457–468.

40. Menon, A.; Murugan, S.; Rebeiro, C.; Gala, N.; Veezhinathan, K. Shakti-T: A RISC-V Processor with Light Weight Security Extensions. In Proceedings of the Hardware and Architectural Support for Security and Privacy, Toronto, ON, Canada, 25 June 2017; p. 2.

41. Das, S.; Unnithan, R.H.; Menon, A.; Rebeiro, C.; Veezhinathan, K. SHAKTI-MS: A RISC-V processor for memory safety in C. In Proceedings of the 20th ACM SIGPLAN/SIGBED International Conference on Languages, Compilers, and Tools for Embedded Systems, Phoenix, AZ, USA, 23 June 2019; pp. 19–32.

42. Delshadtehrani, L.; Eldridge, S.; Canakci, S.; Egele, M.; Joshi, A. Nile: A Programmable Monitoring Coprocessor. IEEE Comput. Archit. Lett. 2018, 17, 92–95.

43. Delshadtehrani, L.; Canakci, S.; Zhou, B.; Eldridge, S.; Joshi, A.; Egele, M. Phmon: A programmable hardware monitor and its security use cases. In Proceedings of the 29th USENIX Security Symposium (USENIX Security 20), 12 August 2020 (online).

44. Petroni, N.L., Jr.; Fraser, T.; Molina, J.; Arbaugh, W.A. Copilot-a Coprocessor-based Kernel Runtime Integrity Monitor. In Proceedings of the USENIX Security Symposium, San Diego, CA, USA, 9–13 August 2004; pp. 179–194.

45. Moon, H.; Lee, H.; Lee, J.; Kim, K.; Paek, Y.; Kang, B.B. Vigilare: Toward snoop-based kernel integrity monitor. In Proceedings of the 2012 ACM Conference on Computer and Communications Security, Raleigh, NC, USA, 16–18 October 2012; pp. 28–37.

46. Jang, D.; Lee, H.; Kim, M.; Kim, D.; Kim, D.; Kang, B.B. Atra: Address translation redirection attack against hardware-based external monitors. In Proceedings of the 2014 ACM SIGSAC Conference on Computer and Communications Security, Scottsdale, AZ, USA, 3–7 November 2014; pp. 167–178.

47. Das, S.; Werner, J.; Antonakakis, M.; Polychronakis, M.; Monrose, F. SoK: The challenges, pitfalls, and perils of using hardware performance counters for security. In Proceedings of the 40th IEEE Symposium on Security and Privacy (S&P’19), San Francisco, CA, USA, 19–23 May 2019.

48. Pappas, V.; Polychronakis, M.; Keromytis, A.D. Transparent ROP Exploit Mitigation Using Indirect Branch Tracing. In Proceedings of the 22nd USENIX Security Symposium (USENIX Security 13), Washington, DC, USA, 14–16 August 2013; pp. 447–462.

49. Cheng, Y.; Zhou, Z.; Miao, Y.; Ding, X.; Deng, R.H. ROPecker: A Generic and Practical Approach for Defending against ROP Attack. In Proceedings of the Network and Distributed System Security Symposium (NDSS), San Diego, CA, USA, 23–26 February 2014.

50. Xia, Y.; Liu, Y.; Chen, H.; Zang, B. CFIMon: Detecting violation of control flow integrity using performance counters. In Proceedings of the IEEE/IFIP International Conference on Dependable Systems and Networks (DSN 2012), Boston, MA, USA, 25–28 June 2012; pp. 1–12.

51. Gu, Y.; Zhao, Q.; Zhang, Y.; Lin, Z. PT-CFI: Transparent backward-edge control flow violation detection using Intel Processor Trace. In Proceedings of the Seventh ACM on Conference on Data and Application Security and Privacy, Scottsdale, AZ, USA, 22–24 March 2017; pp. 173–184.

52. Ge, X.; Cui, W.; Jaeger, T. Griffin: Guarding control flows using Intel Processor Trace. In Proceedings of the ACM SIGPLAN Notices, New York, NY, USA, 8–12 April 2017; Volume 52, pp. 585–598.

53. Ding, R.; Qian, C.; Song, C.; Harris, B.; Kim, T.; Lee, W. Efficient protection of path-sensitive control security. In Proceedings of the 26th USENIX Security Symposium (USENIX Security 17), Vancouver, BC, Canada, 16–18 August 2017; pp. 131–148.

54. Asanovic, K.; Avizienis, R.; Bachrach, J.; Beamer, S.; Biancolin, D.; Celio, C.; Cook, H.; Dabbelt, D.; Hauser, J.; Izraelevitz, A.; et al. The Rocket Chip Generator; Technical Report UCB/EECS-2016-17; EECS Department, University of California, Berkeley: Berkeley, CA, USA, 2016.

| Transfer Type | Instruction | Operands |
|---|---|---|
| CALL | jal | x1, offset |
| CALL | jalr | x1, rs, 0 |
| RET | jalr | x0, x1, 0 |
| BRANCH | beq | rs, x0, offset |
| BRANCH | blt | rt, rs, offset |
| BRANCH | jal | x0, offset |
| BRANCH | jalr | x0, rs, 0 |
| etc. | | |
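The mapping in the table above can be expressed as a small decoder over 32-bit RISC-V instruction words. This is a sketch for illustration, not PEMI's extraction logic: it uses the standard base ISA opcodes and register fields, ignores the immediate, and treats every jump or conditional branch that is neither a call nor a return as BRANCH, which is a simplification of the "etc." row.

```python
from typing import Optional

# Standard RV32I/RV64I major opcodes (low 7 bits of the instruction word).
OPCODE_BRANCH = 0x63  # beq, blt, and the other conditional branches
OPCODE_JALR = 0x67
OPCODE_JAL = 0x6F

X0 = 0  # hardwired zero register
X1 = 1  # link register (ra)


def classify_transfer(instruction: int) -> Optional[str]:
    """Return "CALL", "RET", "BRANCH", or None for non-control-transfer words."""
    opcode = instruction & 0x7F
    rd = (instruction >> 7) & 0x1F
    rs1 = (instruction >> 15) & 0x1F
    if opcode == OPCODE_JAL:
        # jal x1, offset is a call; other forms are treated as plain jumps.
        return "CALL" if rd == X1 else "BRANCH"
    if opcode == OPCODE_JALR:
        if rd == X1:
            return "CALL"    # jalr x1, rs, 0
        if rd == X0 and rs1 == X1:
            return "RET"     # jalr x0, x1, 0
        return "BRANCH"      # e.g. jalr x0, rs, 0
    if opcode == OPCODE_BRANCH:
        return "BRANCH"
    return None


# Hand-assembled example words (all with a zero immediate).
RET_WORD = 0x0000_8067       # jalr x0, x1, 0
CALL_WORD = 0x0000_00EF      # jal x1, 0
INDIRECT_CALL = 0x0002_80E7  # jalr x1, x5, 0
NOP_WORD = 0x0000_0013       # addi x0, x0, 0
```

For instance, `classify_transfer(RET_WORD)` yields `"RET"` and `classify_transfer(NOP_WORD)` yields `None`, so only control transfers produce a dataset in this model.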

| Components | LUTs | Regs | BRAMs | F7 Muxes | F8 Muxes | DSPs |
|---|---|---|---|---|---|---|
| | 3160 | 6939 | 0 | 822 | 327 | 0 |
| | 14 | 165 | 0 | 0 | 0 | 0 |
| | 3146 | 6774 | 0 | 822 | 327 | 0 |
| | 2536 | 6212 | 0 | 768 | 306 | 0 |
| | 12 | 5 | 0 | 0 | 0 | 0 |
| | 591 | 359 | 0 | 54 | 21 | 0 |
| | 7 | 198 | 0 | 0 | 0 | 0 |

| Test | Baseline | Our Approach | SW-Only |
|---|---|---|---|
| syscall null | 0.60 us | 0.60 us (0.00%) | 0.60 us (0.00%) |
| syscall open | 10.11 us | 10.24 us (1.29%) | 10.39 us (2.77%) |
| syscall stat | 4.58 us | 4.63 us (1.09%) | 5.30 us (15.72%) |
| signal install | 1.03 us | 1.04 us (0.97%) | 1.06 us (2.91%) |
| signal catch | 5.52 us | 5.52 us (0.00%) | 5.57 us (0.91%) |
| pipe | 18.38 us | 18.46 us (0.44%) | 18.65 us (1.47%) |
| fork | 641.07 us | 650.13 us (1.41%) | 721.63 us (12.57%) |
| exec | 704.71 us | 706.92 us (0.31%) | 797.05 us (13.10%) |
| page fault | 1.13 us | 1.15 us (1.77%) | 1.47 us (30.09%) |
| mmap | 241.00 us | 241.00 us (0.00%) | 263.67 us (9.41%) |
| GEOMEAN | | 0.73% | 8.55% |
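The per-test percentages and the GEOMEAN row in the table above can be recomputed from the listed latencies. The sketch below assumes that the reported overhead is the relative slowdown against the baseline and that GEOMEAN is the geometric mean of the per-test latency ratios minus one; this interpretation is an assumption, although it lands close to the reported 0.73% and 8.55%.

```python
import math
from typing import Dict, Tuple

# Latencies in microseconds, copied from the table:
# (baseline, our approach, software-only).
LATENCY_US: Dict[str, Tuple[float, float, float]] = {
    "syscall null": (0.60, 0.60, 0.60),
    "syscall open": (10.11, 10.24, 10.39),
    "syscall stat": (4.58, 4.63, 5.30),
    "signal install": (1.03, 1.04, 1.06),
    "signal catch": (5.52, 5.52, 5.57),
    "pipe": (18.38, 18.46, 18.65),
    "fork": (641.07, 650.13, 721.63),
    "exec": (704.71, 706.92, 797.05),
    "page fault": (1.13, 1.15, 1.47),
    "mmap": (241.00, 241.00, 263.67),
}


def overhead_percent(baseline: float, measured: float) -> float:
    """Relative slowdown of one measurement against its baseline."""
    return (measured / baseline - 1.0) * 100.0


def geomean_overhead_percent(column: int) -> float:
    """Geometric mean of the latency ratios for one column (1 or 2), as a percentage."""
    logs = [math.log(row[column] / row[0]) for row in LATENCY_US.values()]
    return (math.exp(sum(logs) / len(logs)) - 1.0) * 100.0


hardware_geomean = geomean_overhead_percent(1)  # "Our Approach" column
software_geomean = geomean_overhead_percent(2)  # "SW-Only" column
```

Averaging the logarithms of the ratios rather than the raw percentages keeps a single slow test, such as the page fault case in the software-only column, from dominating the summary.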

| Benchmark | Spec. | Dhry. | Whet. | Hack. | Iozone | Tar |
|---|---|---|---|---|---|---|
| Overhead | 0.50% | 0.55% | 0.05% | 0.01% | 0.45% | 0.38% |

| | Software | Internal Hardware | External Hardware | Proposed Approach |
|---|---|---|---|---|
| Software compatibility | highly compatible | not compatible | compatible | compatible |
| Performance overhead on host system | slow | fast | fast | fast |
| Hardware cost | none | low | high | low |
| Security of SEE | may be vulnerable | secure | secure | secure |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kwon, D.; Hwang, D.; Paek, Y. A Hardware Platform for Ensuring OS Kernel Integrity on RISC-V. Electronics 2021, 10, 2068. https://doi.org/10.3390/electronics10172068
