1. Introduction

Machine learning algorithms for analyzing and manipulating large data sets have become an integral part of today’s world. Much of the rapid progress made in this area over the past decade can be attributed to the availability of large data sets for machine learning algorithms to train on, and advances in hardware, such as the graphics processing unit, which accelerate the training process. The last decade has also witnessed the emergence of prototype quantum computers which have been implemented using various qubit technologies, including superconducting circuits, trapped ions, neutral atoms, and solid state devices [

1,

2,

3,

4]. The intersection between the emergent machine learning and quantum computing fields has produced many new algorithms which promise further advances in data processing capabilities.

Quantum algorithms such as HHL for solving linear systems [

5] and Grover’s algorithm for database search [

6] are known to achieve exponential and quadratic speedups over their classical counterparts, respectively. However, many quantum algorithms for machine learning, including HHL and Grover search, also assume the use of an input data model (e.g., quantum RAM [

7]) which allows them to easily load classical data onto the quantum processor. This model is currently unrealistic [

8]. Without access to quantum RAM, which presents the state

on demand, we resort to using a quantum circuit to generate the desired state

. Unfortunately, as the size of classical data sets grows to millions or billions of data points, the time and space requirements necessary to load the data may erase any potential quantum speedup.

Recent work by Harrow [

9] introduced a new paradigm of hybrid quantum-classical computing to address this issue. The main idea is to take a large classical data set

and use a classical computer, potentially aided by a small quantum processor, to construct a coreset: a smaller data set

combined with a weight function

which sufficiently summarizes the original data. If the coreset is small enough (but still a faithful representation of

), one could hope to optimize under the coreset with a small quantum computer. Prior work has focused on finding coreset construction algorithms that allow machine learning models to train on the coreset while remaining competitive with models that are trained on the entire data set [

10,

11,

12].

In [

9], Harrow proposes three new hybrid algorithms which cover a range of machine learning tasks including maximum a posteriori estimation, inference, and optimization. We evaluate the first of Harrow’s new algorithms and adapt it to noisy quantum computers. The general version of this algorithm takes a data set

and cost function

f as input, uses a classical computer to construct a coreset

, and then uses a quantum optimization algorithm to perform maximum a posteriori estimation ([

9], Algorithm 1). A specific instance of the algorithm is also outlined which solves the

k-means clustering problem ([

9], Algorithm 1.1). The specific case of

k-means is the focus of this paper. At a high level, this algorithm solves

k-means clustering on a data set

by first constructing a coreset

, and then optimally clustering

with Grover search.

However, Grover search is unlikely to be tenable on noisy, near-term devices [

13]. As proposed in [

9], we reformulated the coreset clustering problem as a quantum approximate optimization algorithm (QAOA) [

14] instance. QAOA is variationally optimized and is able to tolerate some noise when coupled with a robust classical optimizer. For simplicity, we restricted our study to 2-means clustering problems, and Algorithm 1 summarizes our approach.

| Algorithm 1: 2-means clustering via coresets+QAOA. |

Input : A data set

Output : Cluster centers and which approximately minimize

Algorithm:

1. Construct a coreset of size m.

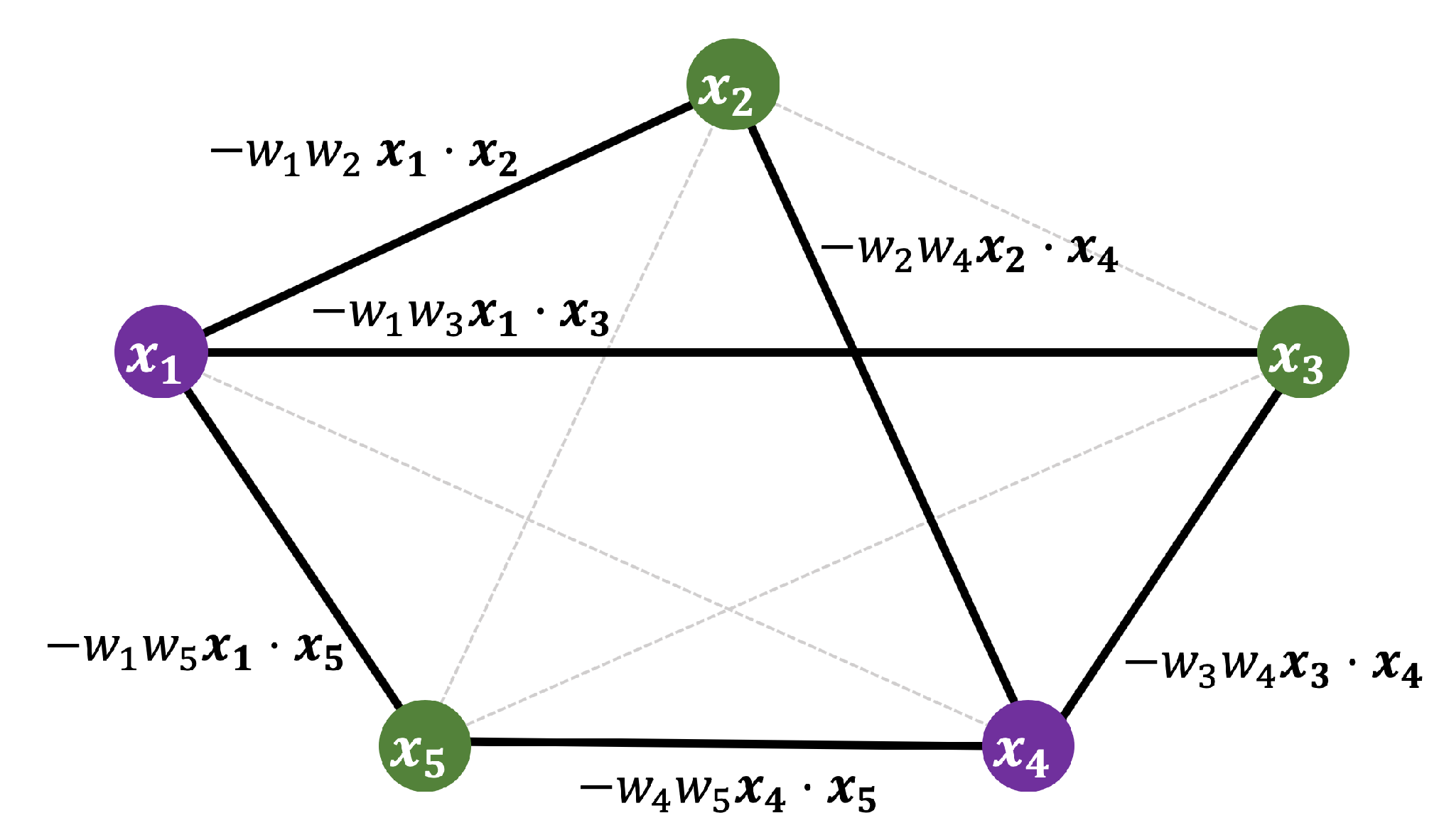

2. Construct a jth order m-qubit Hamiltonian for the coreset.

3. Use QAOA to variationally approximate an energy-maximizing eigenstate of

the Hamiltonian.

4. Treat the 0/1 assignment of the eigenstate as the clustering.

|

Our contributions are as follows:

We implemented algorithms for coresets and evaluated their performance on real data sets.

We cast coreset clustering to a Hamiltonian optimization problem that can be solved with QAOA, and herein we demonstrate how to break past the assumption of equal cluster weights.

We benchmarked the performance of Algorithm 1 across six different data sets, including real and synthetic data, comparing the 2-means clusterings found by quantum and classical means. We found that some data sets are better suited to coreset summarization than others, which can play a large role in the quality of the clustering solutions.

In our evaluations, the sizes of the coresets constructed in step 1 of Algorithm 1 are limited by the size of the quantum computer used in step 3. For some data sets, this restriction on coreset size negatively impacts the performance of clustering on the coresets when compared to k-means on the entire data set. Nonetheless, we were able to find cases where QAOA-based clustering on the coresets is competitive with the standard 2-means algorithms on the same coresets. This suggests that the performance of Algorithm 1 will improve as quantum processors add additional high-quality qubits, thereby allowing them to utilize larger coresets. However, our evaluations also suggest that either large m (i.e., many qubits) or a high order QAOA implementation (i.e., many gates) will be needed for a possible quantum advantage on typical data sets.

The rest of the paper is organized as follows. In

Section 2 we give an overview of the

k-means clustering problem.

Section 3 discusses coresets for

k-means.

Section 4 describes the reduction from

k-means to QAOA. Finally, we present and discuss our results in

Section 5 and

Section 6.

2. -Means Clustering

The

k-means clustering problem takes an input data set

and aims to identify cluster centers

that are near the input data. Typically,

; for simplicity we focus on

. Foreshadowing quantum notation, we will prefer to denote our cluster centers as

and

. Then, the objective of this 2-means problem is to find the partitioning of

into two sets

and

that minimizes the squared-distances from the closest cluster centers:

While the cluster centers

and

appear to be free variables, it can be shown [

15] that they are uniquely determined by the

and

partitionings that minimize Equation (

1). In particular, these cluster centers are the centroids:

Thus, in principle the objective function in Equation (

1) can be minimized by evaluating all

possible partitionings of

into

and

. However, this brute force exponential scaling is impractical, even for modest

n. Instead,

k-means is typically approached with heuristics such as Lloyd’s algorithm [

16], which does not guarantee optimal solutions in polynomial time, but performs well in practice. Moreover, relatively simple improvements to the initialization step in Lloyd’s algorithm leads to performance guarantees. Notably, the

k-means++ initialization procedure guarantees

-competitive solutions in the worst case [

17].

For many data sets, Lloyd’s algorithm augmented with

k-means++ initialization rapidly converges to close-to-optimal (often optimal) solutions. However, in general, finding the optimal cluster centers is a computationally hard problem. Even for

, the problem is

NP-hard [

18].

3. Coresets for -Means

An

-coreset for

k-means is a set of

m (typically

) weighted points such that the optimal

k-means clustering on the coreset,

, is within

of the optimal clustering on the entire data set of

n points,

. In other words

. A coreset data reduction is appealing because we would prefer to solve a problem with

points. The size

m needed depends on

k, the target error

, the data set dimension

d, and the probability of success

. We implemented two coreset procedures. The first, BLK17 ([

10], Algorithm 2), gives a coreset size of

. The second, BFL16 ([

19], Algorithm 2), gives a coreset size of

.

One might hope to pick a target and then pick m accordingly. However, the exact expressions—including constants—for the scaling of m are not readily available. Regardless, our goal was simply to probe the limits of small current-generation quantum computers, which have at most a few dozen qubits. Therefore, we approached coreset construction in the reverse direction by first choosing m and then evaluating the performance of the resulting coreset. As discussed in the next section, m will equal the number of qubits we need. Therefore, we chose for our evaluations.

For implementations of the BLK17 and BFL16 coreset algorithms, an

bicriterion approximation is required. We used

sampling, which is the initialization step for

k-means++ [

17], as our bicriterion approximation. We chose

, which corresponds to picking

centroids in the bicriterion approximation. For each data set, we selected the best (lowest cost) approximation from 10 repeated trials, as is also done by Scikit-learn’s default implementation of

k-means.

During our evaluations, we did not find significant differences between the performance of BLK17 and BFL16. In fact, we did not observe a significant improvement over random sampling either, except for a synthetic data set with a few rare and distant clusters.

5. Results

5.1. Data Sets

Table 1 describes the six data sets used in our evaluations. The Epilepsy, Pulsars, and Yeast data sets are parts of the UCI Machine Learning Repository [

22]. For Common Objects in Context (COCO), the image pixels were preprocessed with the img2vec [

23] library. This library translates the pixels of each image into a 512-dimensional feature vector using a Resnet-18 model [

24] pretrained on the ImageNet data set [

25].

5.2. Evaluation Methodology

We evaluated 2-means on each of these data sets using four different approaches. The first three evaluation modes use classical

k-means to find a clustering over the entire data set, a random coreset, and a coreset generated with BFL16. The final evaluation mode finds a clustering via QAOA (Algorithm 1) over the same BFL16 coreset. For classical clustering, we used Scikit-learn’s [

26] default implementation of

k-means, which initializes clusters with the best of 10

k-means++ [

17] and terminates either after 300 iterations or upon stabilizing within

relative tolerance. This default implementation is an aspirational, though realistic, target against which to compare QAOA. The cost function we compute is the “sum of squared distances to nearest cluster” objective function in Equation (

3), also referred to as inertia [

27].

In all of our evaluations the lowest clustering cost was found by evaluating k-means over the entire data set. We used this fact to rescale the coreset clustering costs, dividing their scores by the lower bound achieved with k-means over the full data set. This rescaling lets us better visualize the differences in performance between the different coresets and clustering methods.

On each data set, we computed m = 5, 10, 15, and 20 uniformly random samples, and m-coresets using BFL16. Then, we ran the 2-means clustering implementation on each coreset, and evaluated the cost of the output solution against the entire data set. For each data set, we ran this process 10 times. For five of the six data sets, we report the best of 10 results, since in practice one would indeed choose the best result. For the synthetic data set, we report average, best, and worst costs, to emphasize that the coreset algorithm is consistently better than random sampling.

Table 1.

Data sets evaluated.

Table 1.

Data sets evaluated.

| Data Set | Description |

|---|

| CIFAR-10 | 10,000 images (32 × 32 pixels) from CIFAR-10 data set [28]. 1000 images per category. |

| COCO | 5000 images from Common Objects in Context validation data set [29]. Images translated into feature vectors of dimension 512. |

| Epilepsy | Epileptic seizure recognition data set from [30]. 11,500 vectors of dimension 179. |

| Pulsars | Pulsar candidates from HTRU2 data set [31]. 1600/17,900 of 9-dimensional feature vectors are pulsars. |

| Yeast | Localization sites of proteins [32]. 1500 8-dimensional vectors. |

| Synthetic | 40,000 512-dimensional points drawn from 11 random Gaussian clusters. Ten clusters contribute 5 points each, last cluster has majority. |

In addition to these classical results, we took the best

and

BFL16 coresets and constructed Hamiltonians for them, as described in

Section 4. For

, we constructed Hamiltonians with zeroth, first, second, and infinite order Taylor expansions. For

, we only constructed the zeroth order Hamiltonian (i.e., assuming equal cluster weights as in

Section 4.2), because this is a realistic experimental target for current devices (see the evaluation in

Section 5.4).

For each Hamiltonian, we found its highest-energy eigenstate by brute force search over the basis states; for larger instances where brute force searching is impossible, one would approximately optimize with QAOA. This is the solution one would expect to find with Grover’s search algorithm, and it can also be interpreted as a bound on the best possible QAOA performance. The highest eigenstate is the weighted Max-Cut solution, or equivalently, the best cluster assignment on the coreset. For the infinite order Hamiltonian, this highest eigenstate is truly the optimal clustering of the coreset. However, note that the optimal clustering on a coreset does not necessarily correspond to the optimal clustering on the whole data set. This is because the coreset is only an approximation of the underlying data set.

5.3. Coreset and QAOA Bound Results

Figure 3 shows the results of using the methodology above on our six data sets. The green and orange bars, which are entirely classical, correspond to 2-means on the random and BFL16 coresets, respectively (note that all of the costs are scaled with respect to

k-means over the full data set). Interestingly, for the majority of our benchmarked data sets, the BFL16 and random coresets show similar performances. The only data set whereon BFL16 consistently outperformed the random coresets was the synthetic data set, which has 39,950 points in a localized Gaussian cluster, along with 50 points in ten distant clusters. On this data set, random sampling did not perform well, because the random samples were unlikely to capture points outside of the big cluster. This suggests we may see gains from using coresets on data sets oriented towards anomaly-detection.

The blue bars in

Figure 3 correspond to the energy-maximizing eigenstate of each Hamiltonian, which was constructed from a BFL16 coreset of

m elements and a given Taylor expansion order (indicated by the tick labels). QAOA attempts to find this energy-maximizing eigenstate, but it is only an approximate solver. Therefore, the blue bars can be interpreted as a lower bound on the cost of a QAOA-based solution. However, the approximate nature of QAOA could serve as a benefit to the clustering algorithm overall. Recall that the final clustering cost is evaluated with respect to the whole data set, and the coreset is only an approximation of this data set. Therefore, a suboptimal eigenstate of the Hamiltonian (i.e., a suboptimal clustering of the coreset) can actually outperform the solution obtained via the Hamiltonian’s optimal eigenstate because the cost is evaluated over the full data set.

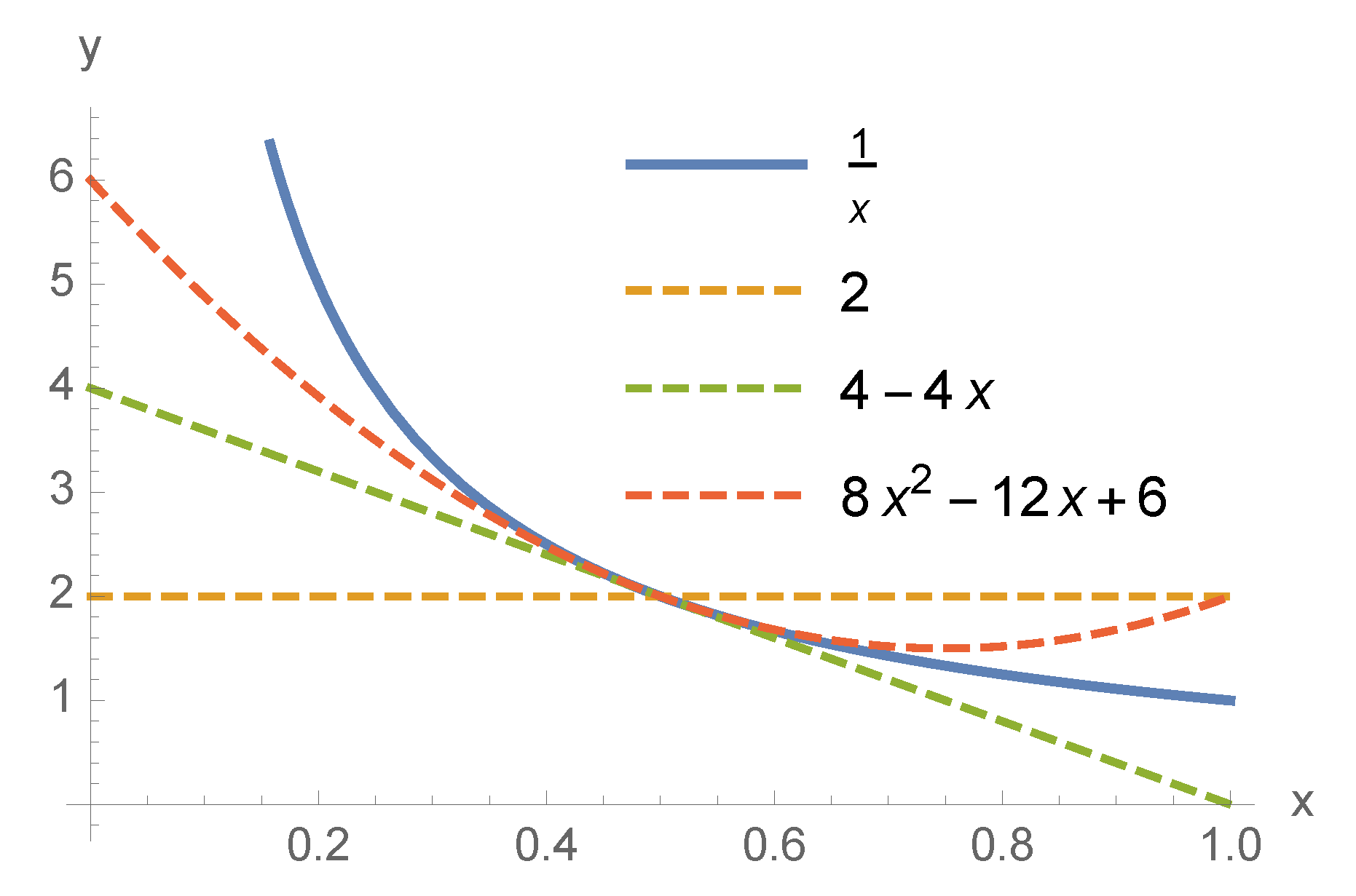

We observed this behavior in a few data sets. Focusing on the blue bars only, the cost was almost always lower (or the same) when we increased the order of the Hamiltonian (see

Figure 2). However, for the CIFAR-10 and Pulsars data sets, there were lower-order Taylor expansions (i.e. more approximate Hamiltonians) of the

coresets, which had lower costs than the higher-order expansions. We also found cases where a coarser approximation to the data set provides better clustering than a low-order expansion of a larger coreset. In the Pulsars experiment, the optimal clustering of the

coreset found by the

∞-order QAOA bound and the classical 2-means (

) does outperform the coarser

coreset clustering. However, the zeroth order QAOA bound on this coarse

coreset (

) is able to outperform the zeroth, first, and second order approximations of the

coreset. These examples highlight the interplay between the two levels of approximation within this algorithm: the coreset approximation of the data set and the QAOA approximation of the clustering solution. It may indicate potential tradeoffs that can be made between the classical (coreset) and quantum (QAOA) aspects of this algorithm when one or the other may be resource constrained.

On all six data sets studied, the lowest cost was achieved by running 2-means on the whole data set. The main barrier to a possible “quantum advantage” seems to be that for small m, the coreset approximation is too coarse. However, we were able to find QAOA bounds that outperform the corresponding classical results on the same coreset. This occurred on the CIFAR-10, Epilepsy, Yeast, and synthetic data sets, where the QAOA bound outperformed 2-means on the same BFL16 coreset. It is also encouraging that the zeroth and first order QAOA bounds, which have only quadratic gate count, are generally similar to the higher order bounds. The exceptions, where unequal cluster sizes are favored, appear in the synthetic (unbalanced by construction) and Pulsars (only a fraction of the data vectors are truly pulsars) data sets. The broader pattern appears to be that if a data set is amenable to coresets, it does not work well for low-order QAOA approximation, and vice versa.

5.4. Experimental QAOA Results

For each of the six data sets examined above, we also tested the performance of QAOA for the

case on the 5-qubit

ibmq_rome processor (IBM, Yorktown Heights, NY, USA).

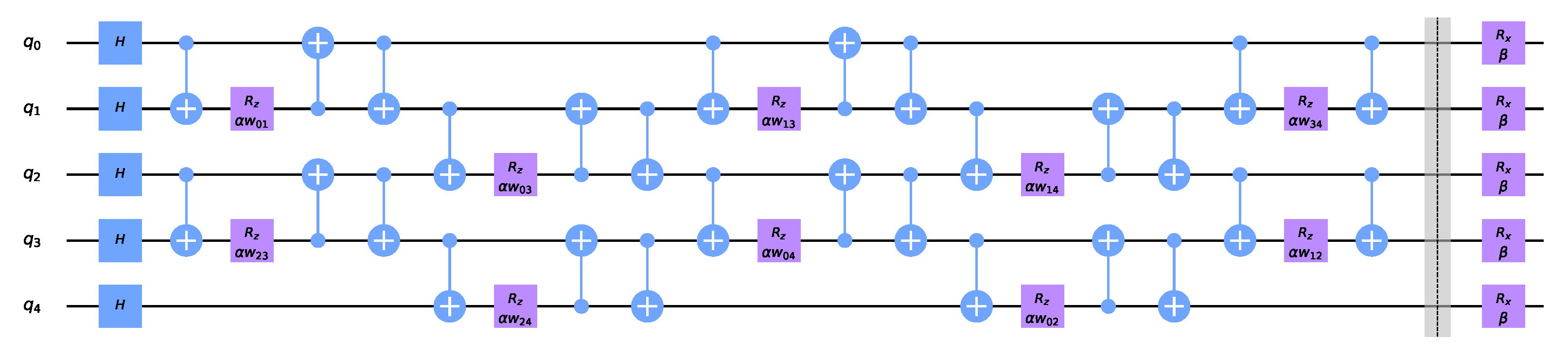

Figure 4 shows the QAOA circuit. Solving

k-means via QAOA on the zeroth or first order Hamiltonian is equivalent to finding the weighted Max-Cut on a complete graph, which requires an interaction between every pair of qubits during each step of QAOA. This would seem to imply that the quantum computer must support a high degree of connectivity between qubits or else incur a large number of SWAP operations which would deteriorate performance. However, using the swap networks proposed in [

33,

34], we can achieve the required all-to-all interactions in depth which scales linearly with the number of qubits

m, while only requiring nearest-neighbor interactions. This is critical for the

ibmq_rome processor which has a linear communication topology. Swap networks were also implemented for Max-Cut QAOA in [

35] where the total number of controlled-NOT gates (CNOTs) required for

p layers of QAOA on

m qubits was found to scale as

. We note that this CNOT count can be slightly reduced by forgoing the last layer of the SWAP network and reordering the bits in a classical post-processing step so that the overall number of CNOTs scales as

.

Figure 5 shows the results of running

k-means QAOA for the Epilepsy

coreset on

ibmq_rome with and without the swap network. Before running on the quantum hardware, we found optimal values for the variational parameters using noisy circuit simulation in conjunction with a Nelder–Mead optimizer. In

Figure 5 the best partitionings found by the noiseless simulation are the symmetric 01100 and 10011 states. We used the cluster centers given by the coreset partitioning to evaluate the cost function on the entire data set; both the 01100 and 10011 partitions had a cost of

. Dividing this value by the cost achieved with

k-means over the full data set (

) gives a rescaled cost of

, which approaches the bound of

and outperforms the other

and 10 BFL16 coresets for Epilepsy shown in

Figure 3. There is a significant amount of noise present in the hardware executions compared to the noiseless simulation, but when the swap network was utilized, the 01100 solution state was still the most likely state to be measured. Without the swap network, the highest probability state would result in a suboptimal partition.

6. Discussion and Conclusions

In this work we investigated the performance of

k-means clustering via QAOA, using offline coresets to effectively reduce the size of the target data sets. Indeed, there do exist data sets where coresets seem to work well in practice, such as the synthetic data set we analyzed in

Section 5.3. Furthermore, as our Hamiltonian construction of the problem is all-to-all connected, our QAOA instance circumvents the light cone oriented issues [

36] associated with running constant

p-depth QAOA on sparse bounded-degree graphs.

However, in practice, coresets constructed via BFL16 and random sampling had similar performances on the standard classification data sets we benchmarked. This may have been due to the small m we restricted our coresets to, with the motivation of fitting the problem instance to today’s quantum computers. Alternatively, it may have been due to the fact that these “natural” data sets have near equally sized optimal clusters. Indeed, the synthetic data set where coresets performed well had artificially rare clusters that naive random sampling would miss—however, this worked to the detriment of our Hamiltonian construction of the problem, which involves Taylor expanding the optimization problem around near equal optimal cluster sizes. As standard k-means already performs remarkably well, it seems that one would need a high-degree Hamiltonian expansion for a method such as QAOA to compete, or otherwise a more clever Hamiltonian construction of the problem. Methods such as Grover’s algorithm, however, would not necessarily have this limitation. We leave for future work refining this intuition and perhaps finding instances where both coresets and QAOA work well in conjunction.