Viewing Direction Based LSB Data Hiding in 360° Videos

Abstract

1. Introduction

1.1. Related Work

1.2. Motivation and Contributions

- A viewing direction based LSB data hiding method for 360° videos is proposed and pseudo code to assist the implementation of the method is provided.

- Normalized relative viewing direction frequencies with respect to latitude, longitude, and both latitude and longitude are derived using the respective GMMs.

- Viewing direction based data hiding weight functions with respect to latitude, longitude, and both latitude and longitude are defined.

- Analytical expressions for the capacities offered by viewing direction based LSB data hiding in the latitude, longitude, and both latitude and longitude are derived. Numerical results for these capacities are also provided.

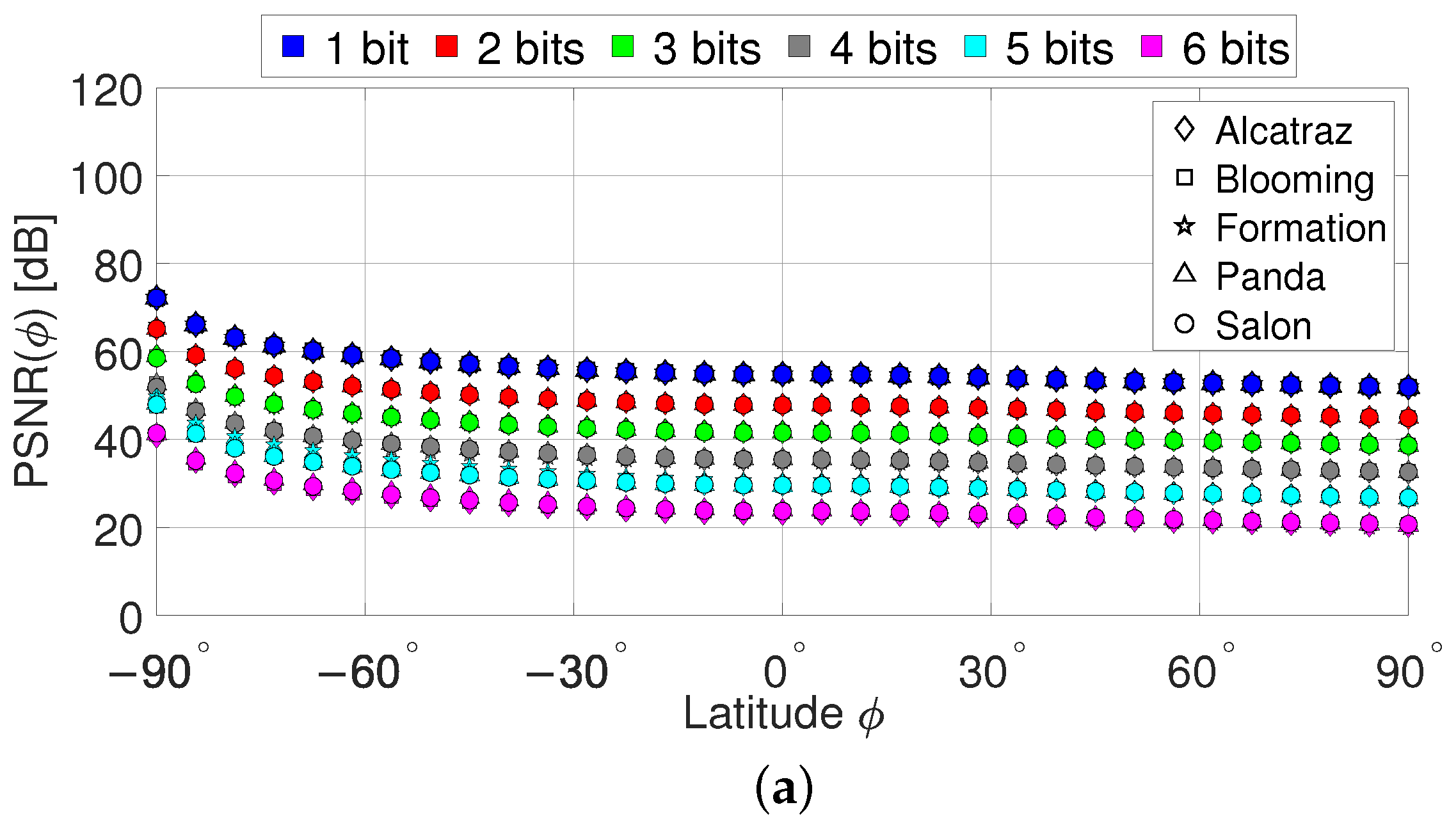

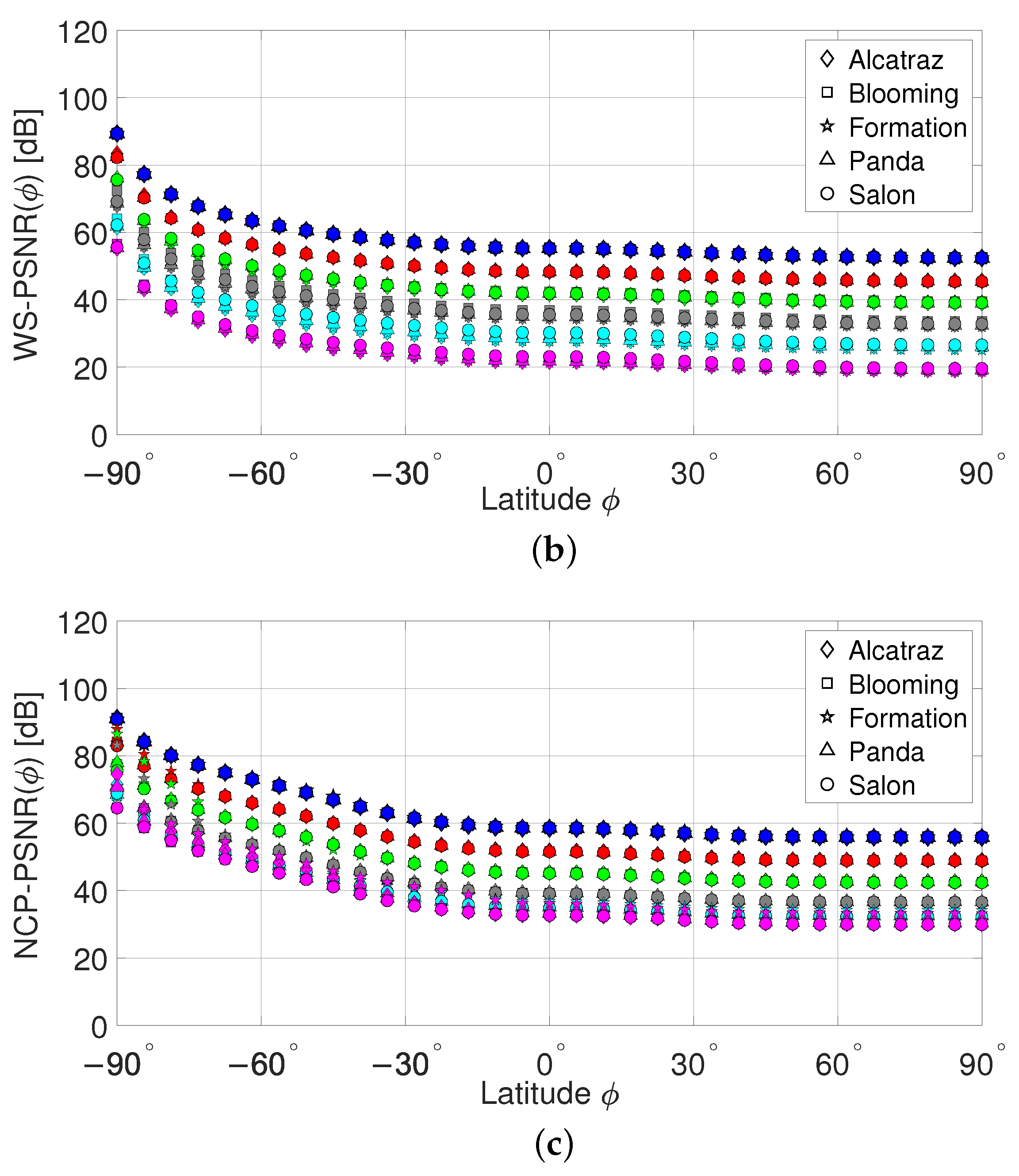

- The fidelity of viewing direction based LSB data hiding is assessed in terms of the peak signal-to-noise ratio (PSNR), weighted-to-spherical-uniform PSNR (WS-PSNR), and non-content-based perceptual PSNR (NCP-PSNR).

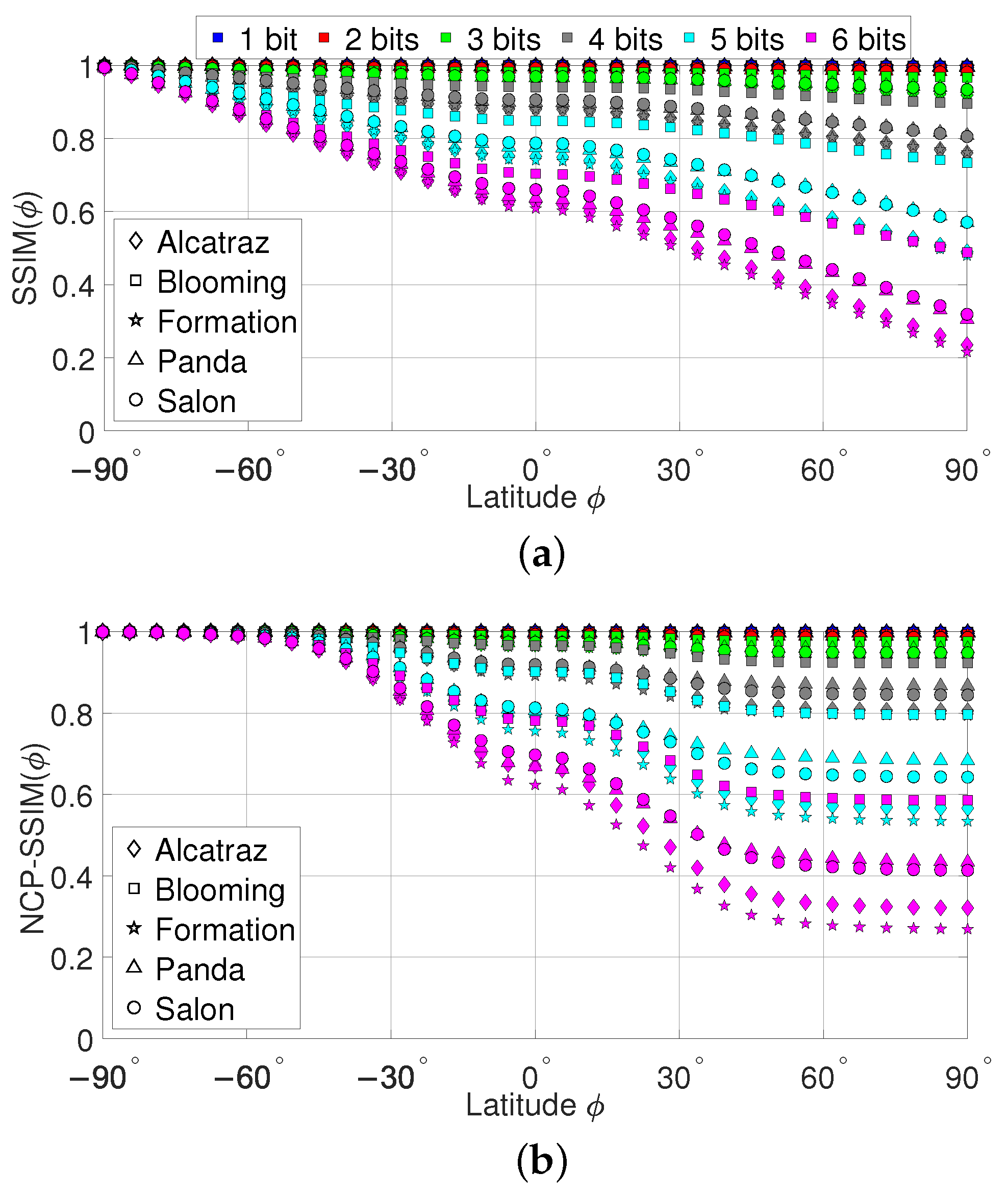

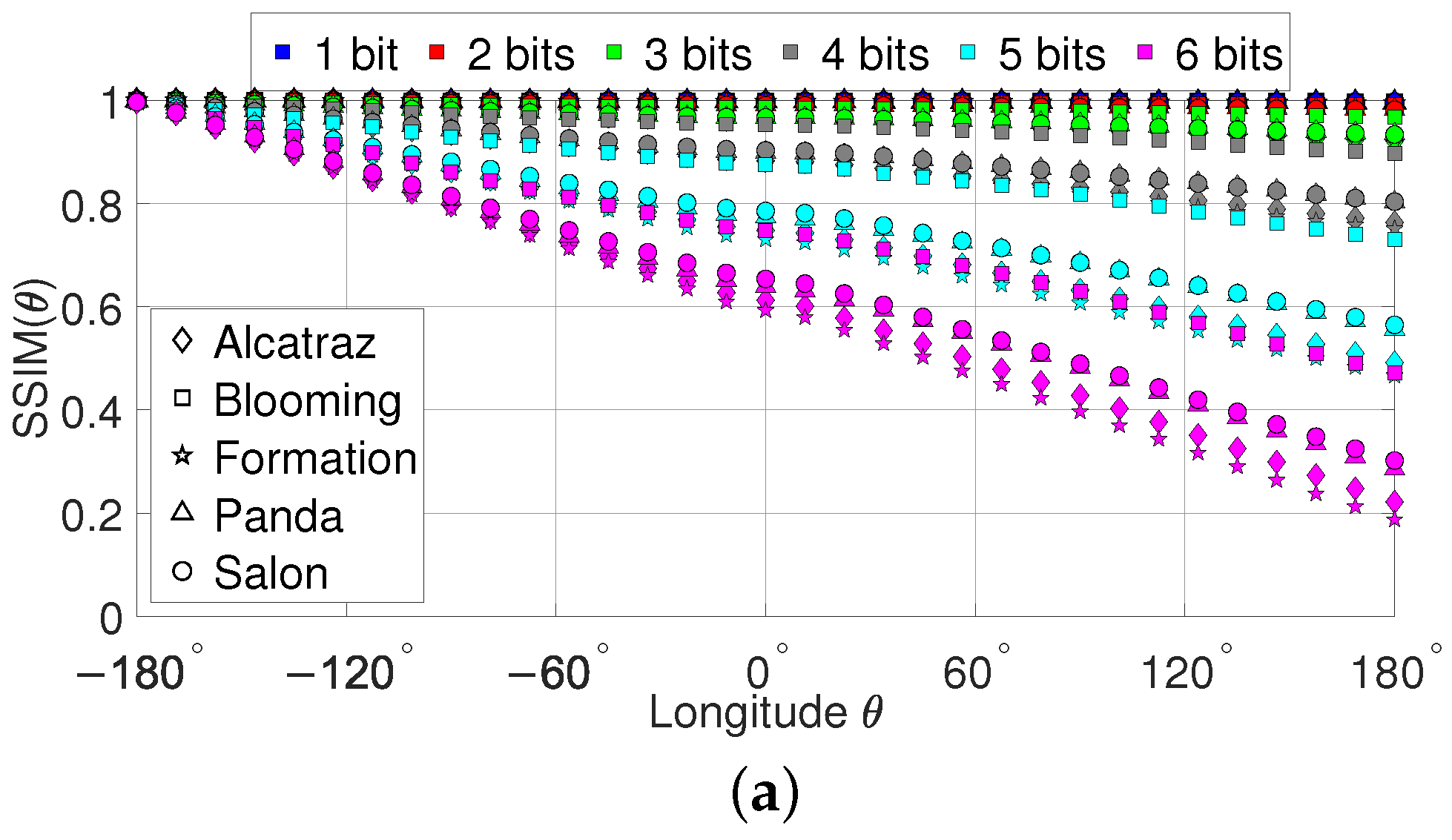

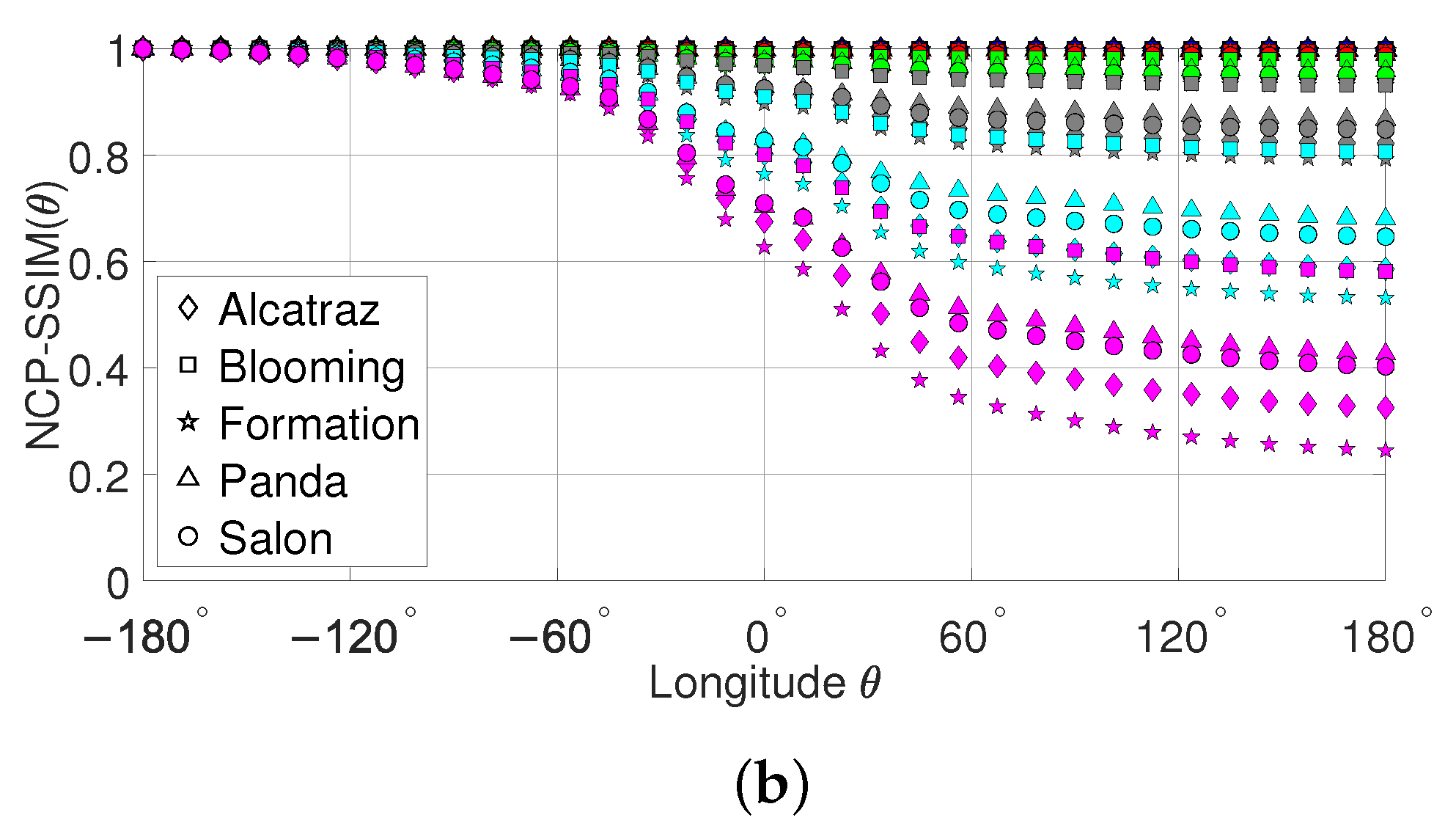

- The visual quality of viewing direction based LSB data hiding is assessed in terms of the structural similarity (SSIM) index and non-content-based perceptual SSIM (NCP-SSIM) index.

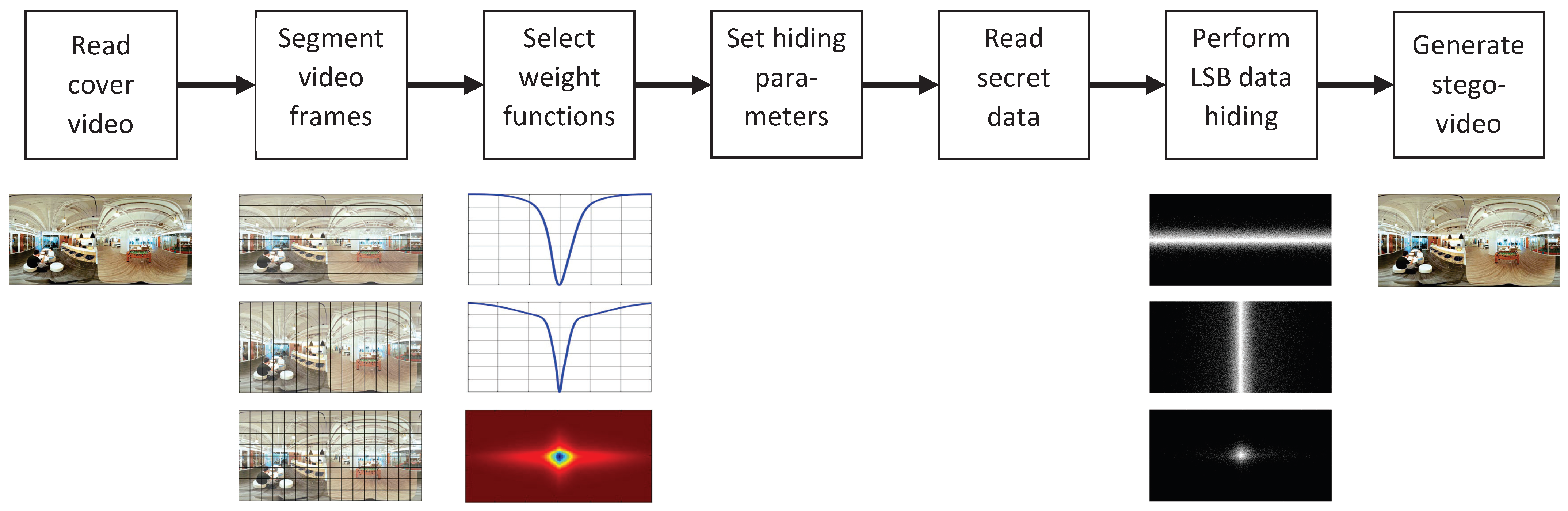

2. Viewing Direction Based LSB Data Hiding

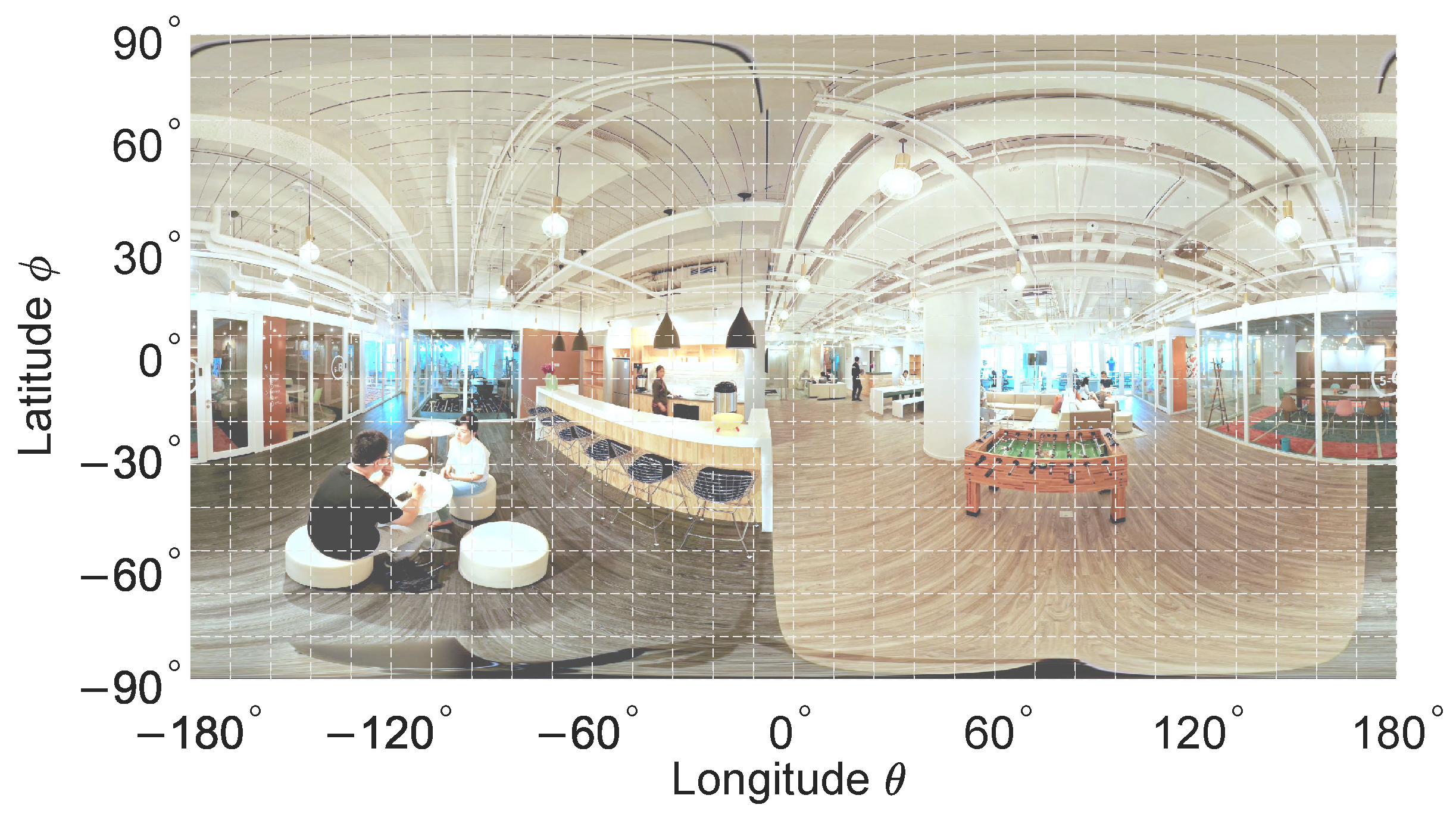

2.1. Equirectangular Projection

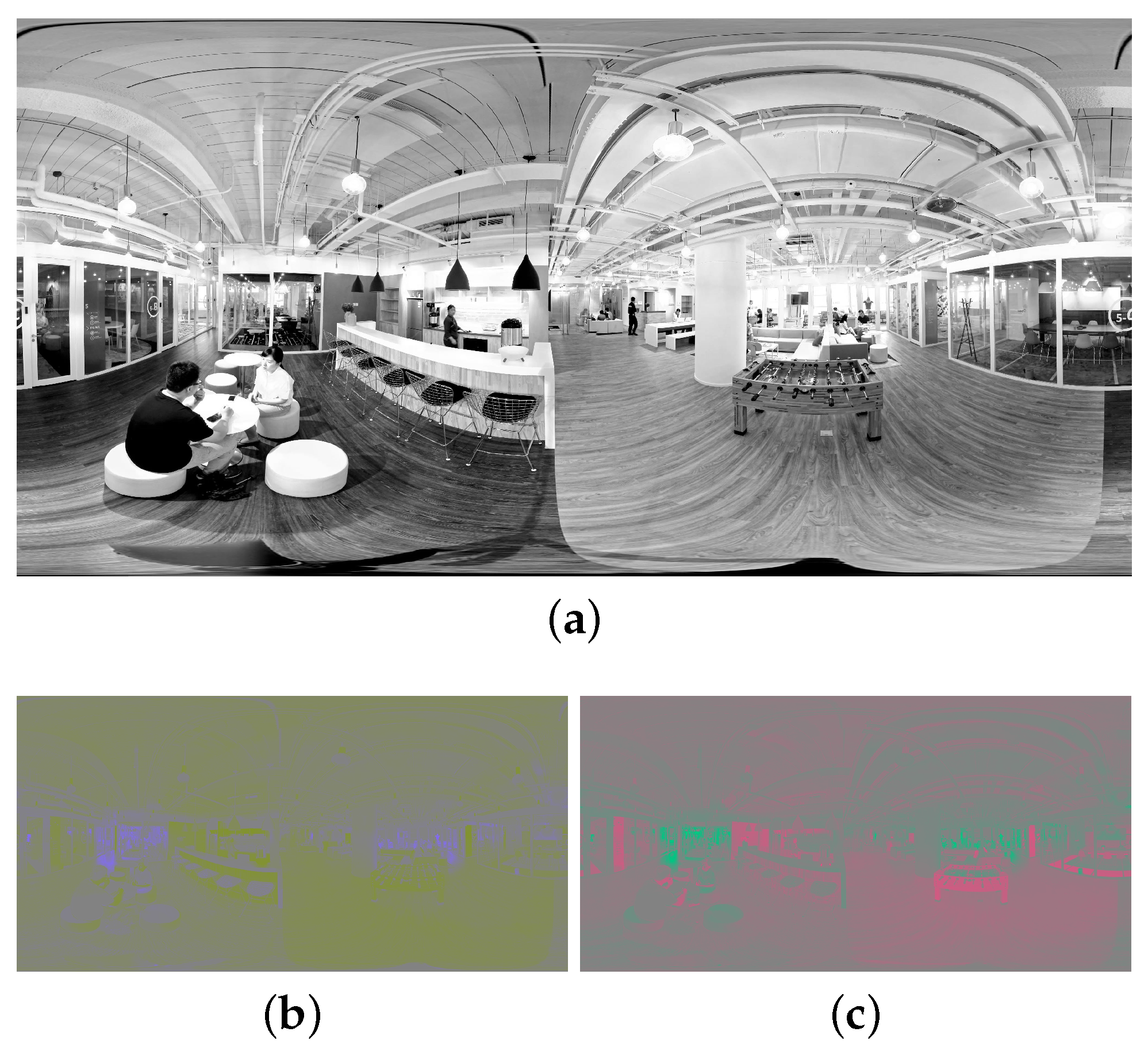
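As a hedged illustration of the equirectangular mapping, the following sketch converts a pixel position in a W × H ERP frame to a latitude ϕ and longitude θ in degrees. The function name, the half-pixel offset, and the sign conventions (ϕ = +90° at the top row, θ = −180° at the left edge, front direction at θ = 0°) are assumptions made for this example and may differ from the conventions used in the paper.

```python
def erp_pixel_to_angles(row, col, width, height):
    """Map an ERP pixel (row, col) to (latitude, longitude) in degrees.

    Assumed convention (not taken from the paper): pixel centres sit at
    half-integer offsets, latitude runs from +90 (top) to -90 (bottom),
    and longitude runs from -180 (left edge) to +180 (right edge).
    """
    latitude = 90.0 - (row + 0.5) * 180.0 / height
    longitude = (col + 0.5) * 360.0 / width - 180.0
    return latitude, longitude


# A pixel next to the frame centre of a 3840 x 1920 frame lies close to
# the equator and, under the assumed convention, close to longitude 0.
lat, lon = erp_pixel_to_angles(959, 1919, 3840, 1920)
```

Under these assumptions, every row of the frame shares one latitude and every column shares one longitude, which is what allows weight functions to be defined per latitude, per longitude, or per pixel.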

2.2. YUV Color Encoding

2.3. LSB Data Hiding Approach

- w1(ϕ):

- Weight function accounting only for the viewing direction frequency along the latitude.

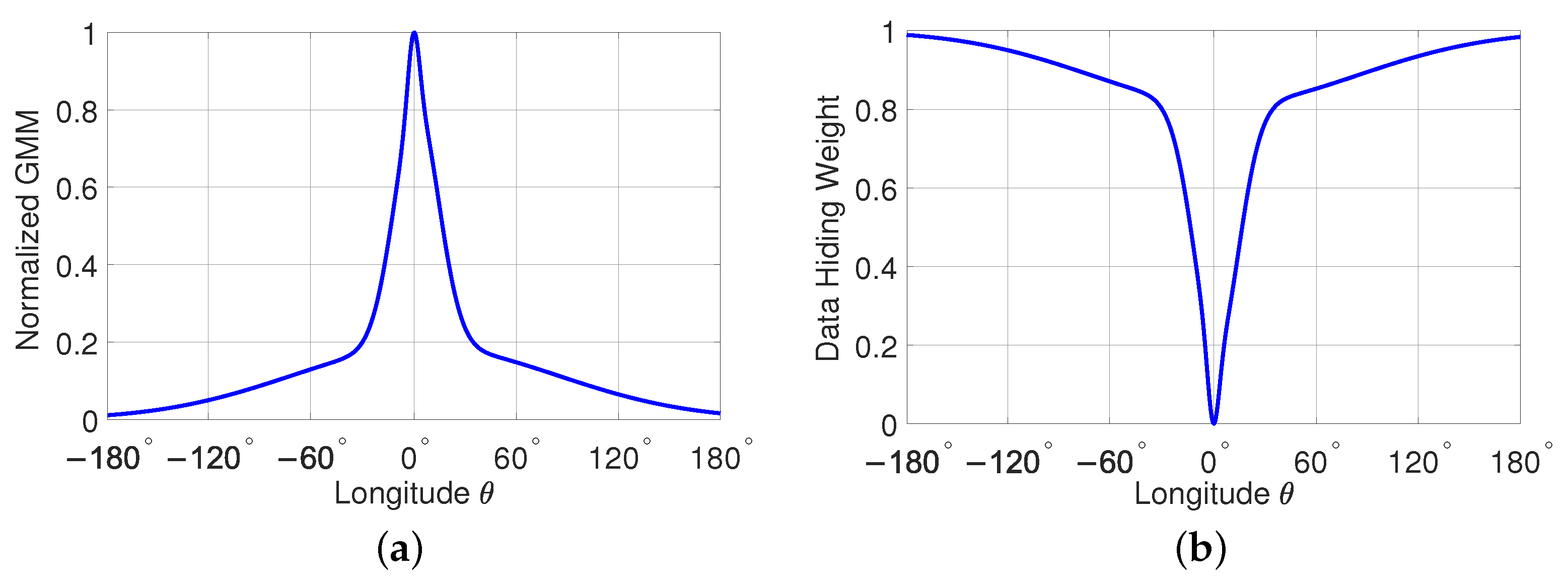

- w2(θ):

- Weight function accounting only for the viewing direction frequency along the longitude.

- w3(ϕ, θ):

- Weight function accounting for the viewing direction frequency along both the latitude and longitude.
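The basic LSB operation that these weight functions control can be sketched as follows. This is a minimal illustration for a single 8-bit sample, not the paper's Algorithm 1: the helper `planes_for_weight`, which scales the number of bit planes by a weight in [0, 1], is a hypothetical rule introduced only to show how a weight could steer the embedding, and all function names are assumptions.

```python
import math


def planes_for_weight(weight, max_planes):
    """Hypothetical rule: scale the number of LSB planes by a weight in [0, 1]."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return math.floor(weight * max_planes)


def embed_lsb(sample, payload_bits, num_planes):
    """Overwrite the num_planes least significant bits of an 8-bit sample."""
    mask = (1 << num_planes) - 1
    return (sample & ~mask & 0xFF) | (payload_bits & mask)


def extract_lsb(sample, num_planes):
    """Read back the num_planes least significant bits of a sample."""
    mask = (1 << num_planes) - 1
    return sample & mask


# Example: a weight of 0.5 with at most 6 bit planes selects 3 planes.
planes = planes_for_weight(0.5, 6)
stego = embed_lsb(0b10110111, 0b010, planes)
recovered = extract_lsb(stego, planes)
```

With `num_planes` equal to zero the sample is returned unchanged, so a weight of zero leaves the corresponding pixel untouched under this assumed rule.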

2.4. Pseudo Code of Viewing Direction Based LSB Data Hiding

Algorithm 1. Viewing Direction Based LSB Data Hiding in 360° Videos

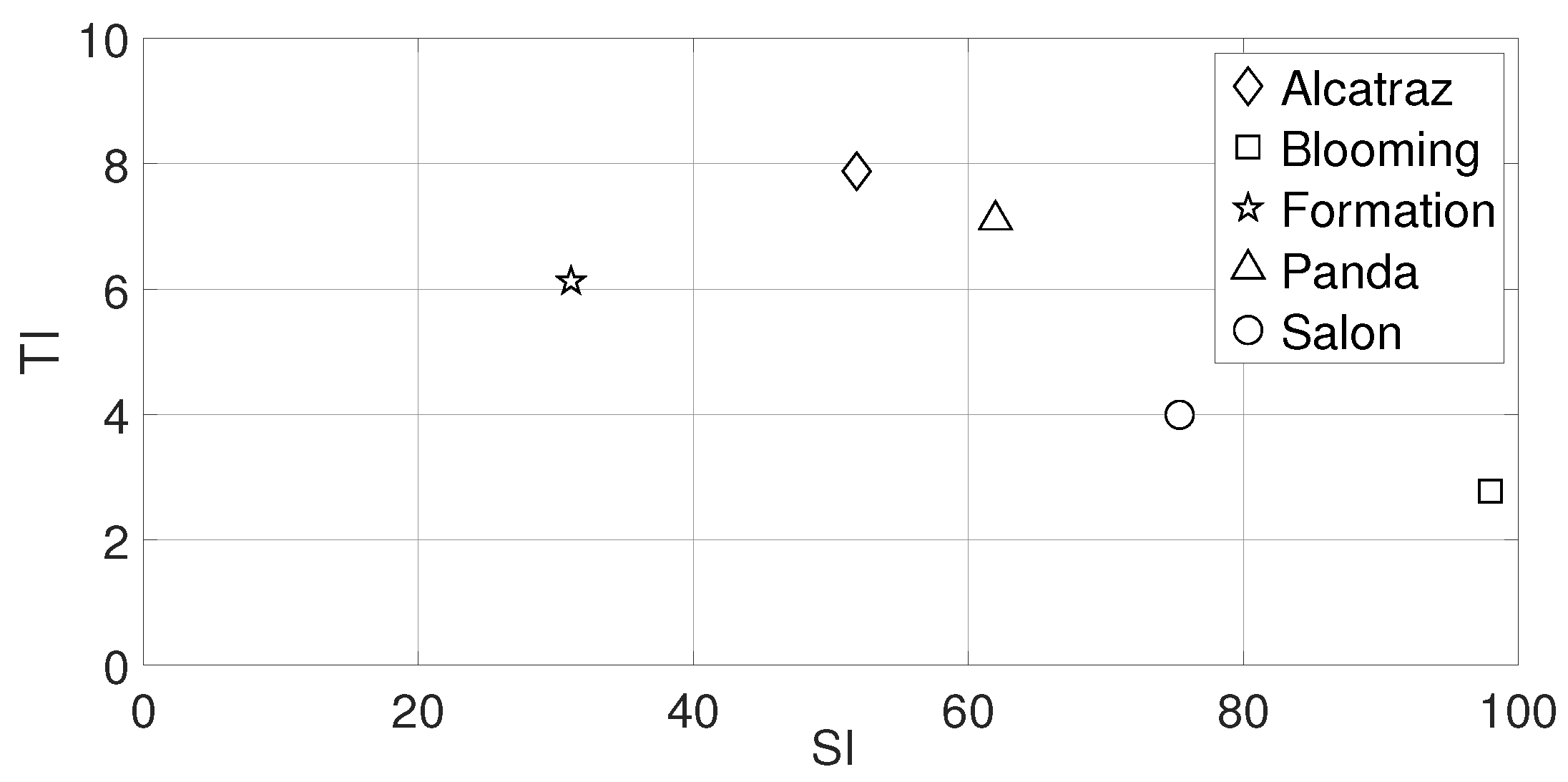

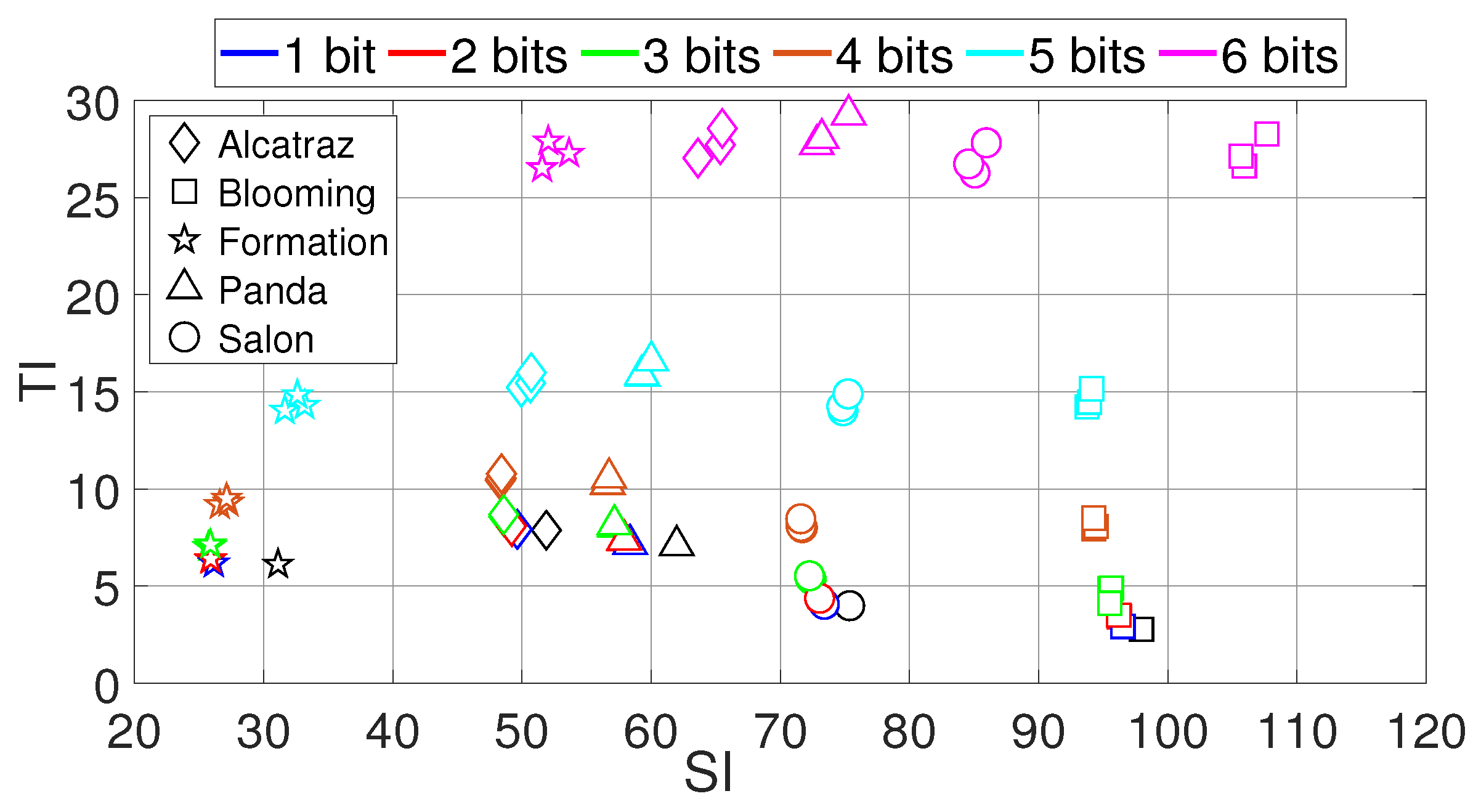

3. Cover Videos and Stego-Videos

3.1. Cover Videos Used for Viewing Direction Based LSB Data Hiding

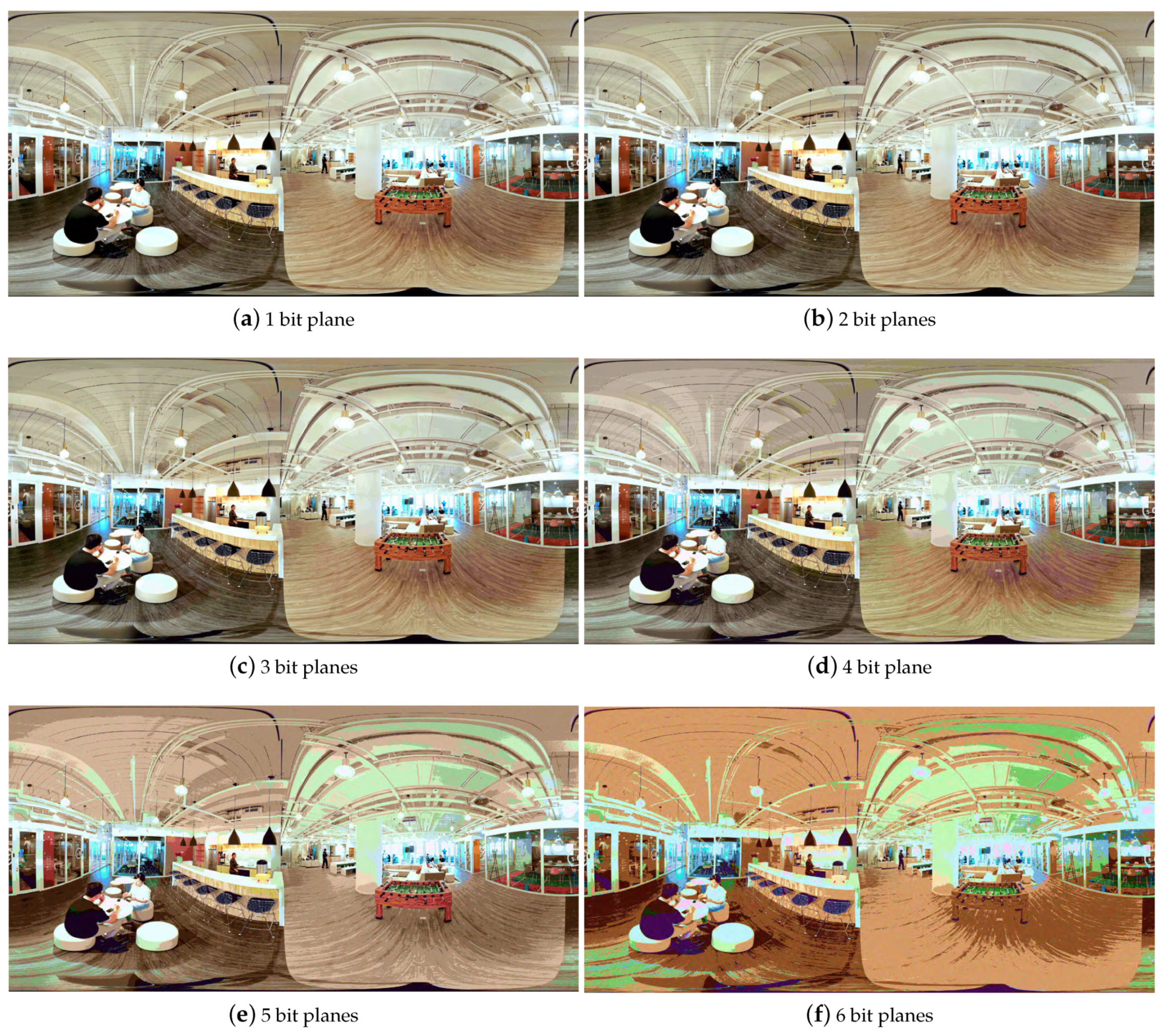

3.2. Examples of Stego-Videos

4. Models for Viewing Direction Based LSB Data Hiding

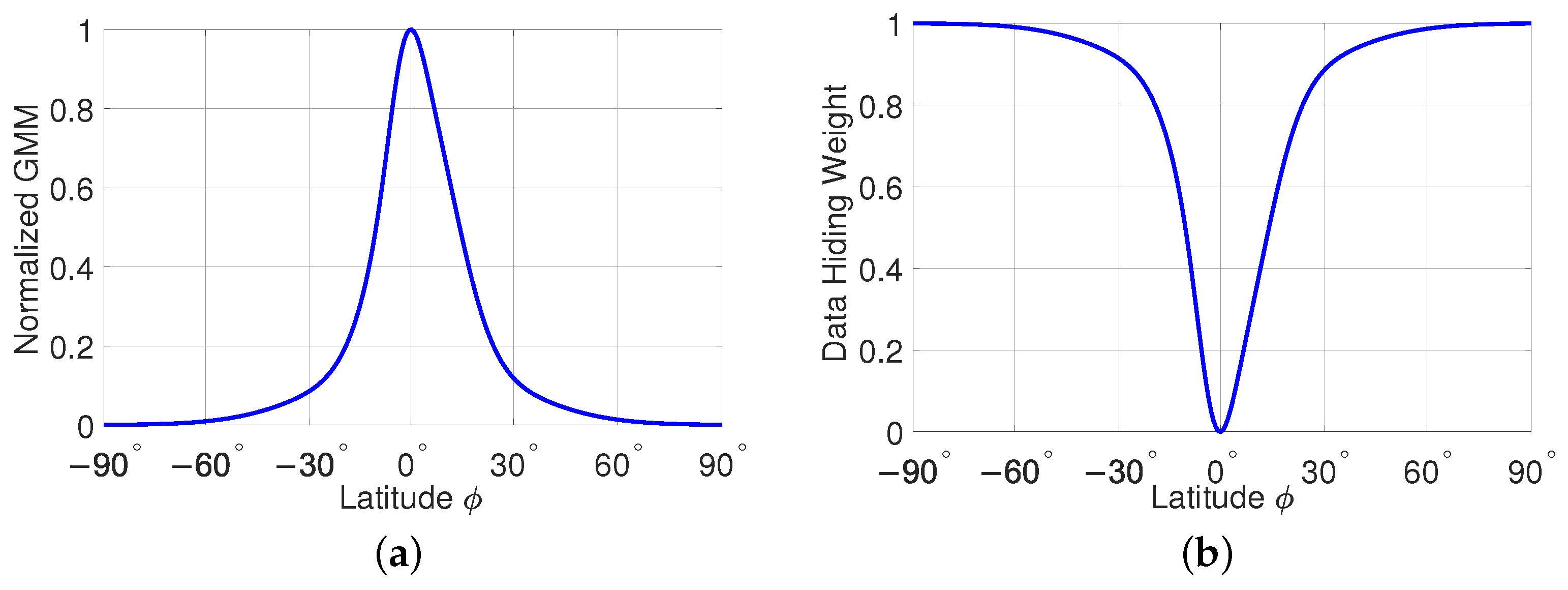

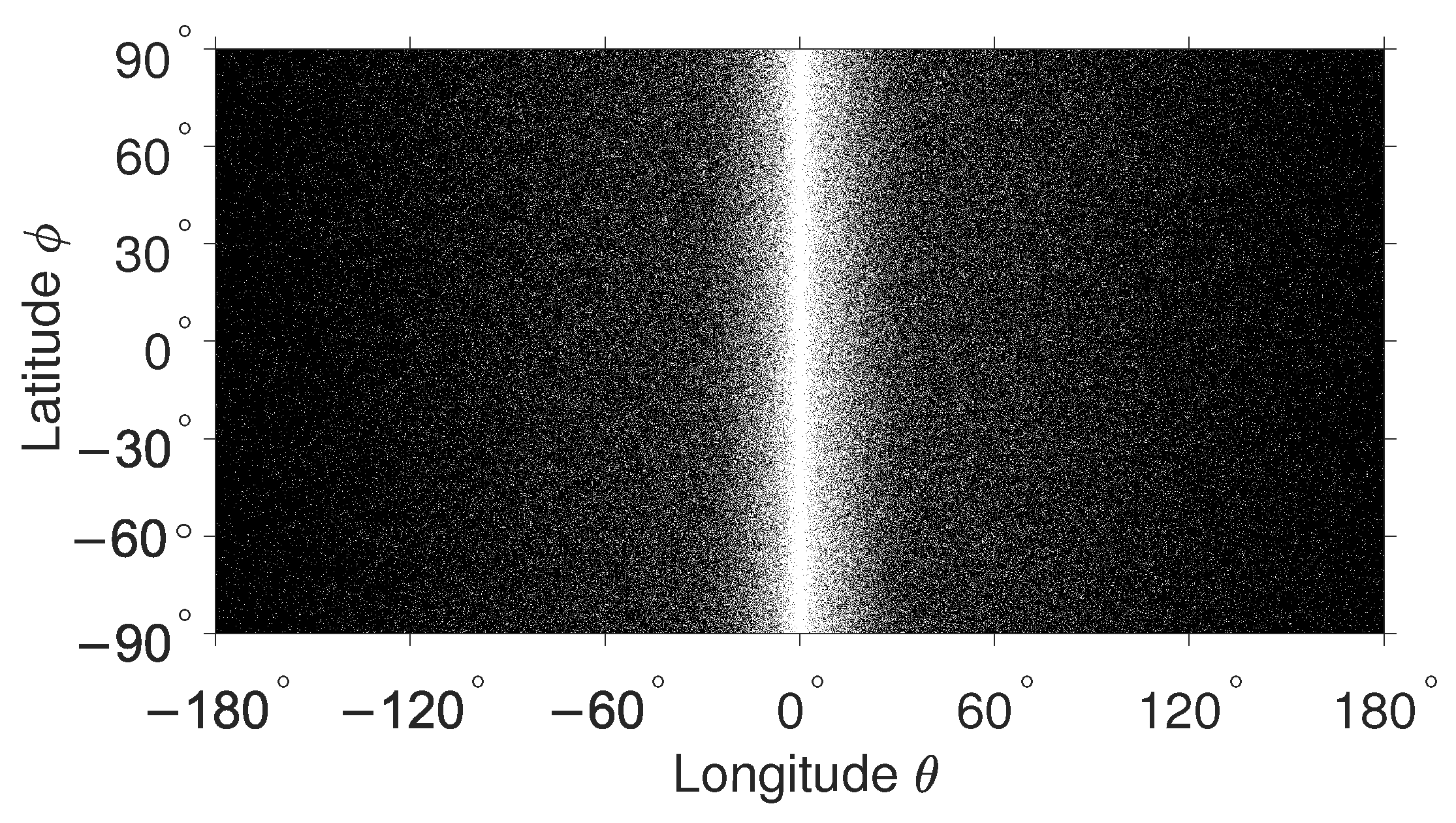

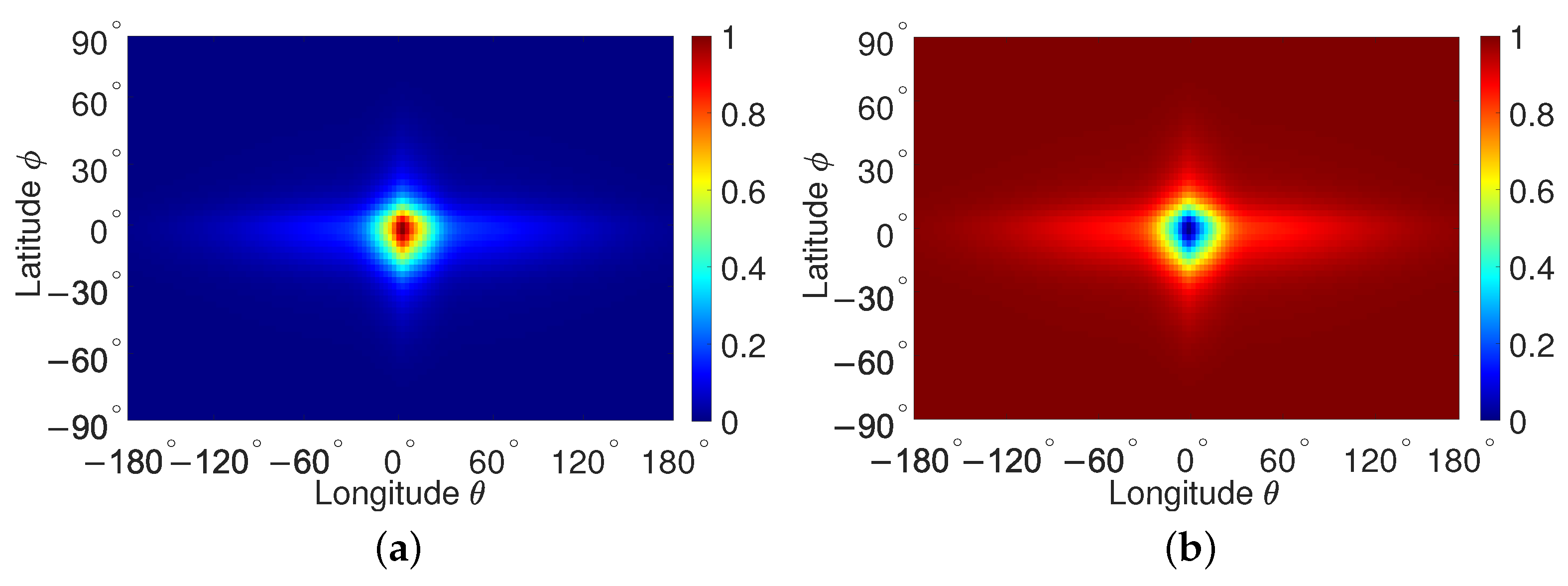

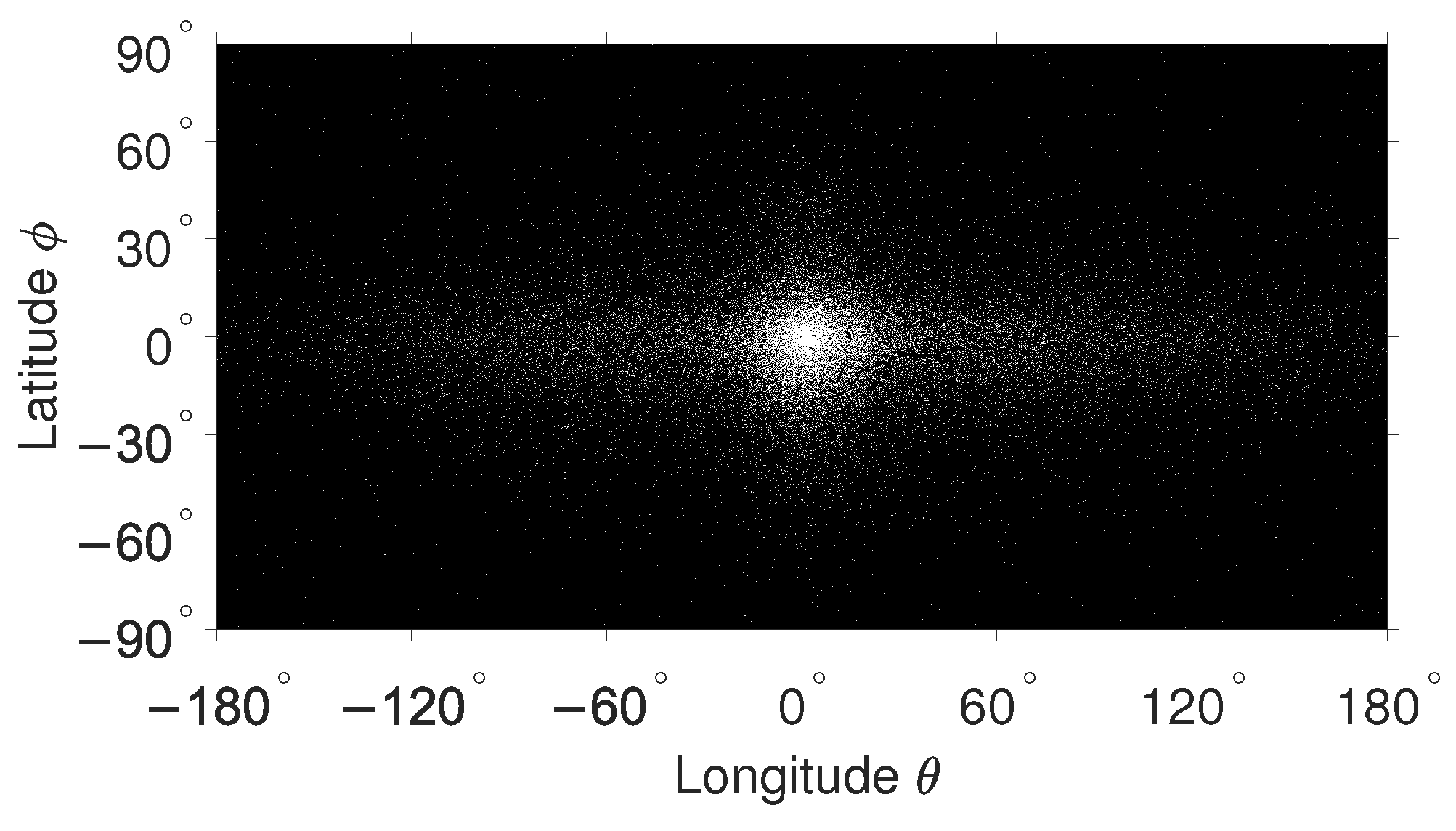

4.1. Normalized Relative Viewing Direction Frequency and Data Hiding Weight Functions

4.1.1. GMM for the Latitude

4.1.2. GMM for the Longitude

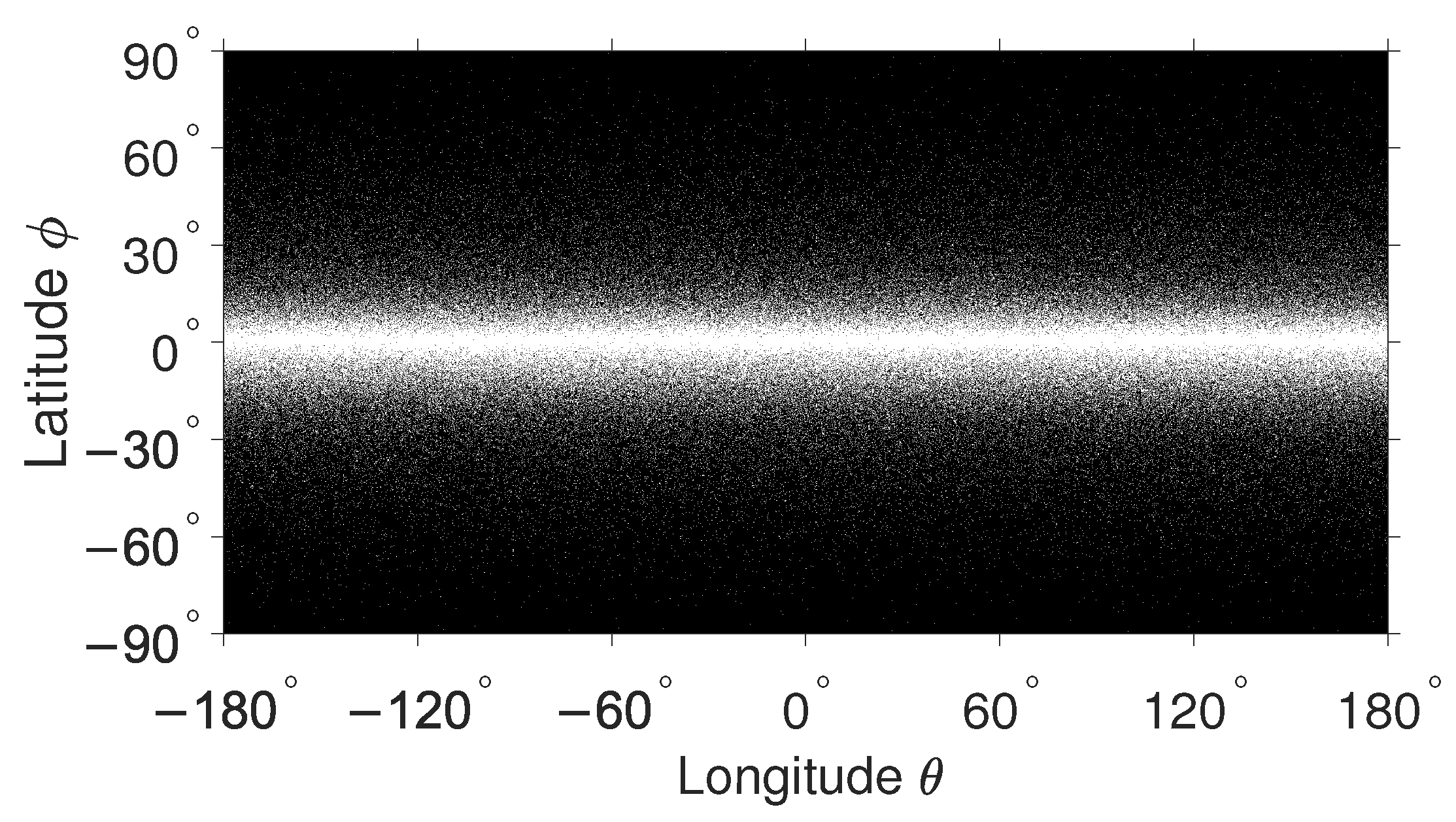

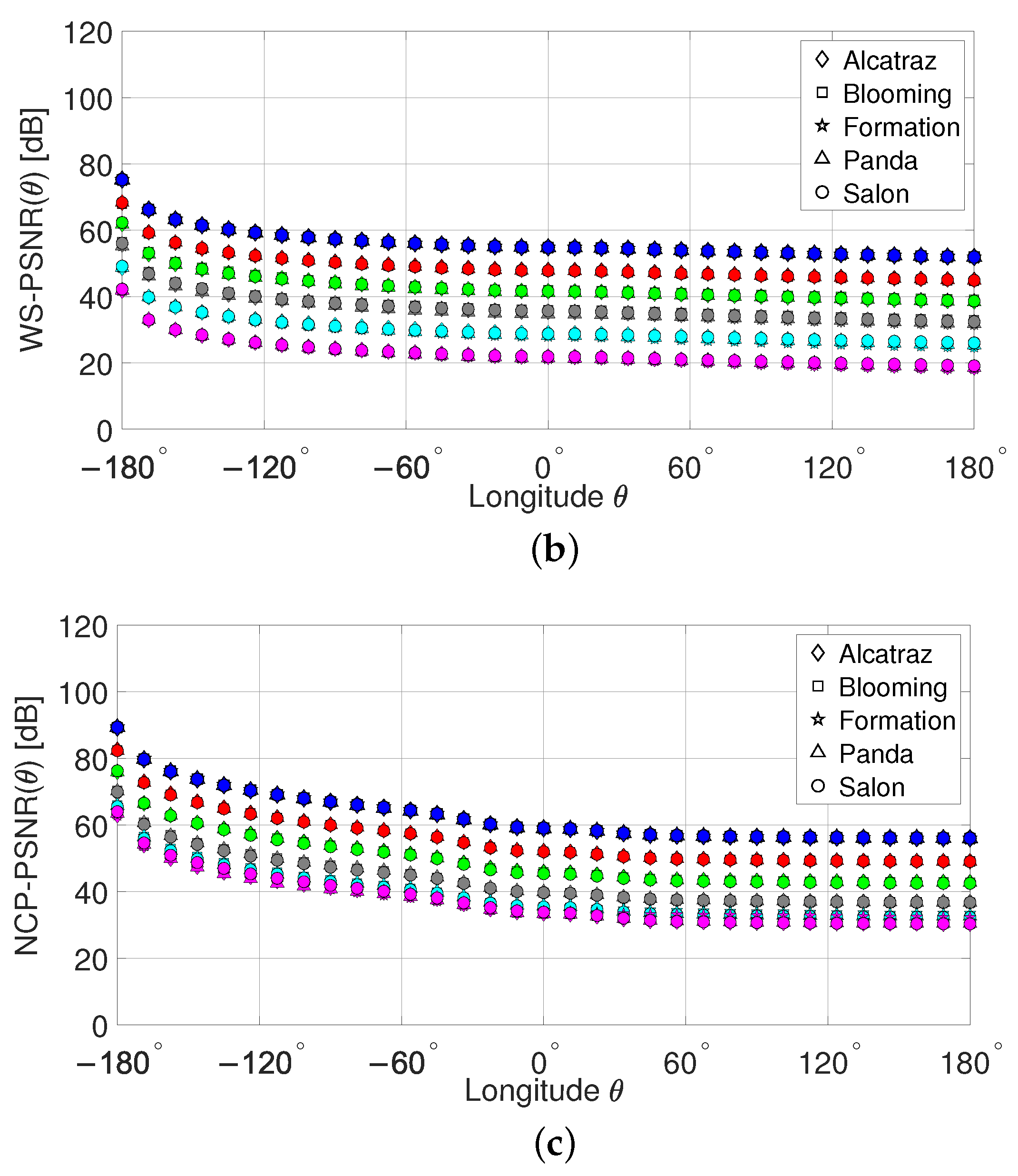

4.1.3. GMM for Latitude and Longitude

5. Capacity

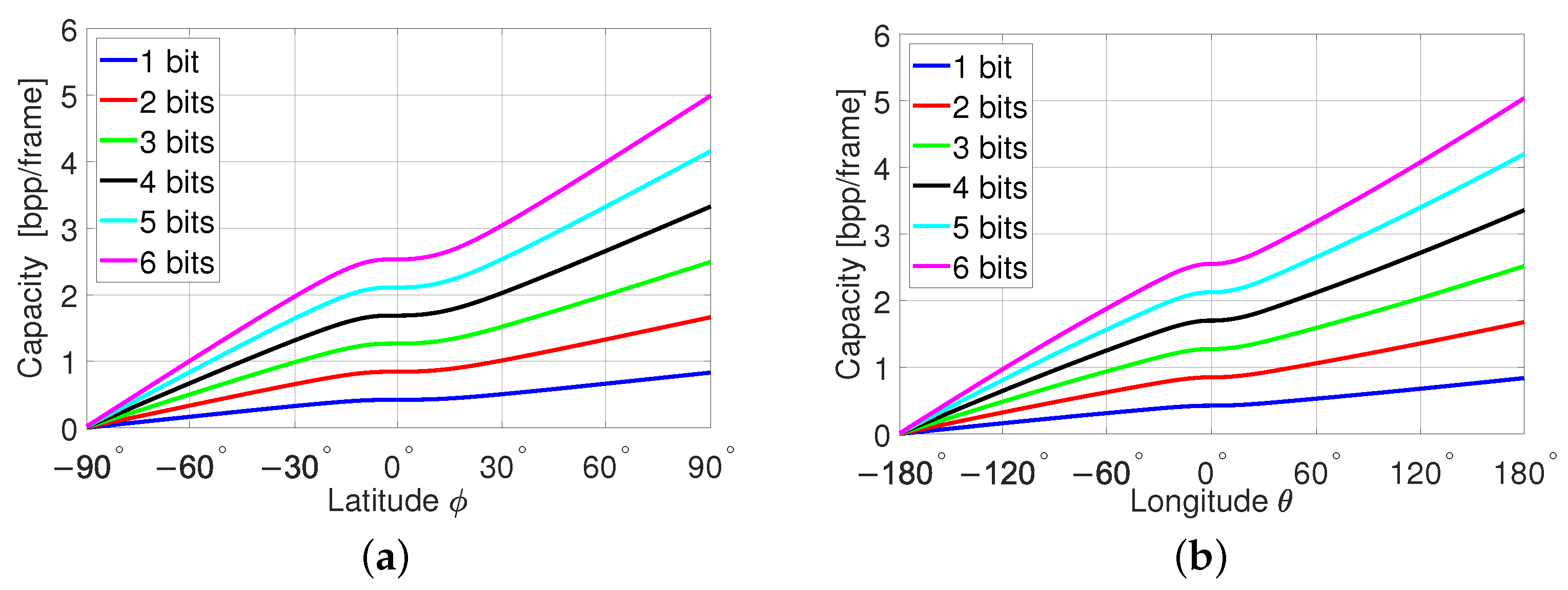

5.1. Capacity for Data Hiding in the Latitude

5.2. Capacity for Data Hiding in the Longitude

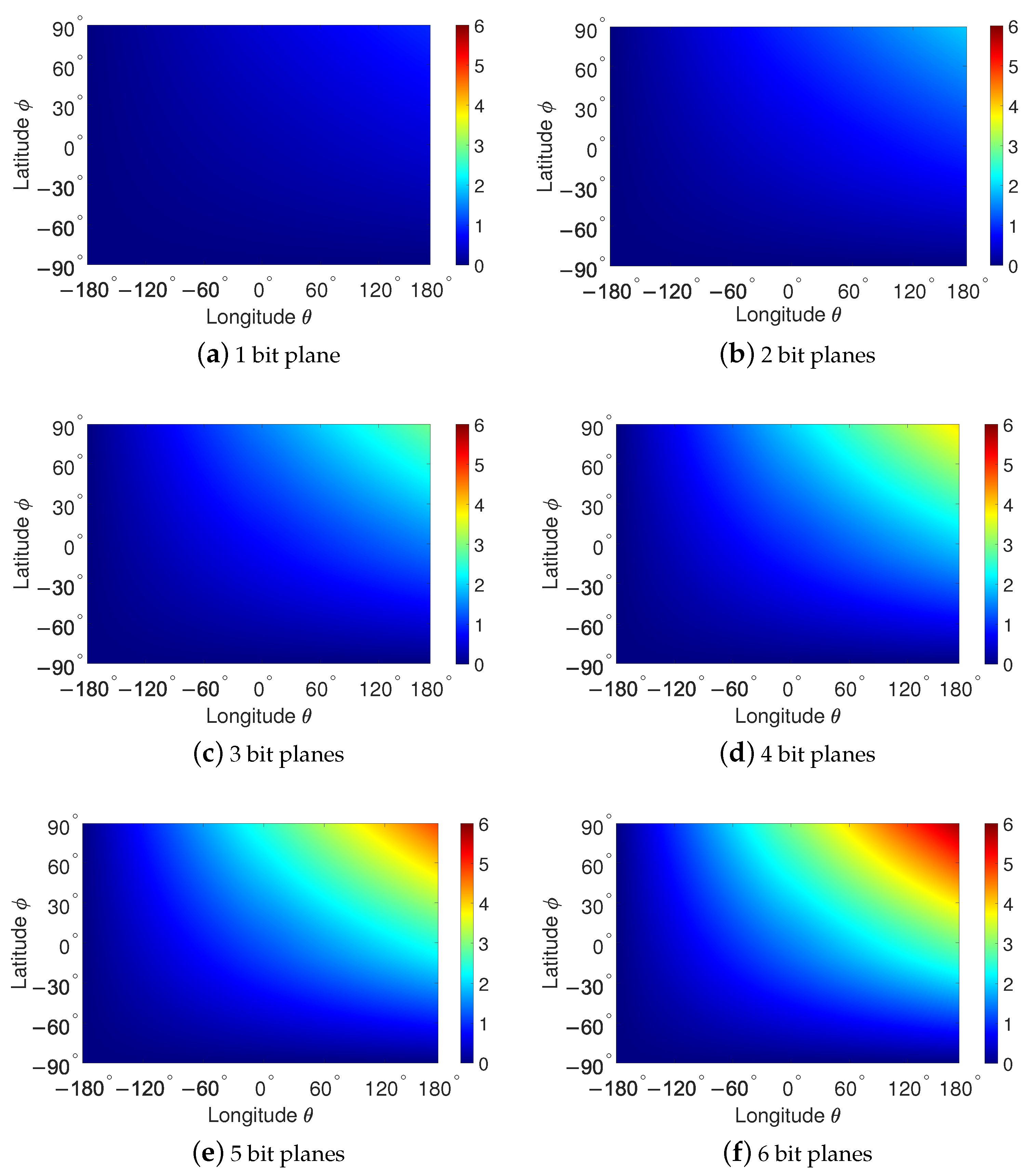

5.3. Capacity for Data Hiding in Latitude and Longitude

5.4. Numerical Results for Capacity

6. Fidelity Assessment of Viewing Direction Based LSB Data Hiding

6.1. Fidelity Metrics

6.1.1. Peak-Signal-to-Noise Ratio

6.1.2. Weighted-to-Spherical-Uniform PSNR

6.1.3. Non-Content-Based Perceptual PSNR

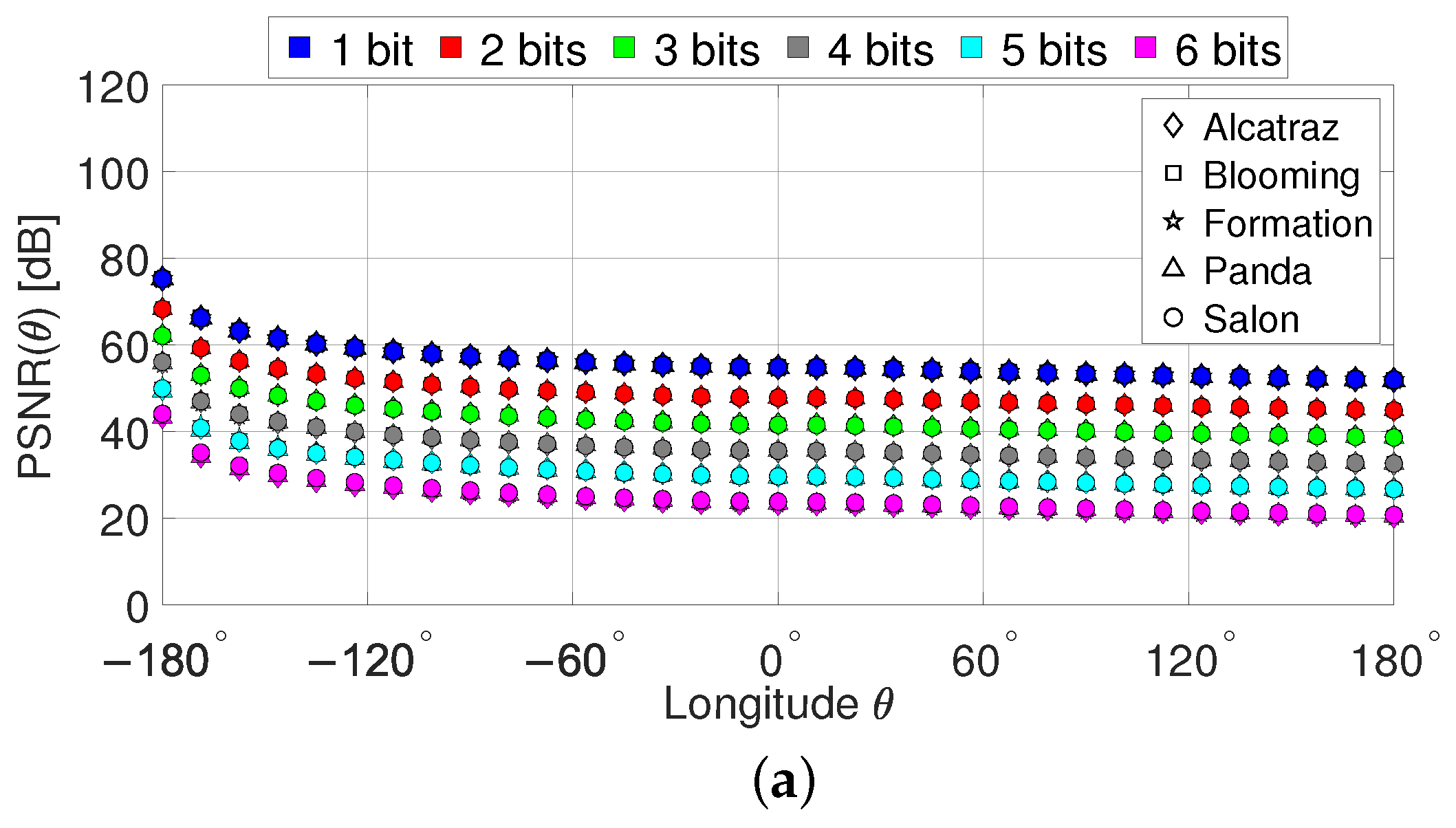

6.2. Experimental Results for Fidelity

6.2.1. Data Hiding in the Latitude

6.2.2. Data Hiding in the Longitude

6.2.3. Data Hiding in the Latitude and Longitude

7. Visual Quality Assessment of Viewing Direction Based LSB Data Hiding

7.1. Visual Quality Metrics

7.1.1. Structural Similarity Index
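For orientation, the SSIM index of [24] can be sketched for a single window as below. The standard metric averages this quantity over local sliding windows; the single-window simplification, the function name, and the use of plain lists of luma samples are assumptions made to keep the example short. The constants follow the common choice C1 = (0.01 L)² and C2 = (0.03 L)² with L = 255.

```python
def ssim_global(x, y, peak=255.0):
    """Single-window SSIM between two equally long lists of luma samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have equal length of at least 2")
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    # Unbiased sample variances and covariance.
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    c1 = (0.01 * peak) ** 2
    c2 = (0.03 * peak) ** 2
    numerator = (2.0 * mu_x * mu_y + c1) * (2.0 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Illustrative samples only: the second list differs from the first by
# at most one grey level per sample, as single-plane LSB hiding would.
cover = [52, 55, 61, 59, 79, 61, 76, 61]
stego = [53, 54, 60, 58, 78, 60, 77, 60]
score = ssim_global(cover, stego)
```

Identical inputs give a score of 1, and any change to the samples lowers it, which is the behaviour a visual quality assessment of stego-frames relies on.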

7.1.2. Non-Content-Based Perceptual Structural Similarity Index

7.2. Experimental Results for Visual Quality

7.2.1. Data Hiding in the Latitude

7.2.2. Data Hiding in the Longitude

7.2.3. Data Hiding in the Latitude and Longitude

8. Summary and Conclusions

- Depending on the number of bit planes used for viewing direction based LSB data hiding and the selected resolution, the total capacity may range from 1.74 to 172.04 Mbits per 360° cover video frame.

- As data hiding degrades the visual quality of the 360° stego-video frames, the wide range of total capacities allows for trading off capacity versus quality such that sufficient data can be hidden in each video frame while keeping visual quality at a satisfactory level.

- The fidelity assessment shows that NCP-PSNR yields higher fidelity values than PSNR and WS-PSNR because it assigns lower weights to the distortion caused by LSB data hiding outside the front regions near the equator.

- The visual quality assessment reveals that both SSIM-based metrics are able to account for the spatial perceptual information of different scenes while the PSNR-based fidelity metrics cannot exploit this information.

- Furthermore, NCP-SSIM reflects the impact of the proposed viewing direction based LSB data hiding method on visual quality with respect to viewing directions much better than SSIM does.

- Overall, NCP-SSIM turned out to be the most effective and realistic of the considered metrics for assessing the visual quality of the proposed viewing direction based LSB data hiding method, because it incorporates both the distribution of viewing direction frequencies and spatial perceptual information into the visual quality assessment.

- It is recalled that NCP-SSIM was developed in [11] based on extensive subjective experiments and is consistent with recommendation [10] in terms of seating arrangements and number of participants. As such, NCP-SSIM is well suited to the assessment of the viewing direction based LSB data hiding method proposed in this paper.

- Given that an HMD provides the users only with a limited viewport rather than the entire sphere at a given time, more advanced visual attention models beyond viewing direction distributions and adaptive LSB data hiding with respect to the viewport dynamics may be considered.

- On this basis, content based fidelity and visual quality metrics may be developed that are able to predict the impact of LSB data hiding methods or other data hiding methods on the fidelity as well as the visual quality as perceived by humans.

- Projection techniques other than the ERP can be used, such as cube map projection, octahedron projection, and segmented sphere projection. These techniques project the pixel intensities on the sphere to the plane in different ways, which may lead to different hiding positions and a different impact on the visual quality of the obtained 360° videos.

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| 6G | Sixth generation |
| AR | Augmented reality |
| ERP | Equirectangular projection |
| GMM | Gaussian mixture model |
| HMD | Head-mounted display |
| LSB | Least significant bit |
| MSE | Mean square error |
| NCP-PSNR | Non-content-based perceptual PSNR |
| NCP-SSIM | Non-content-based perceptual SSIM |
| PSNR | Peak signal-to-noise ratio |
| RGB | Red, green, blue |
| SSIM | Structural similarity |
| VQA | Video quality assessment |
| VR | Virtual reality |
| WMSE | Weighted mean square error |
| WS-PSNR | Weighted-to-spherical-uniform PSNR |

Appendix A. Peak Signal-to-Noise Ratio Based Metrics

Appendix A.1. Peak Signal-to-Noise Ratio
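As a brief sketch of the conventional definition, PSNR follows from the mean square error (MSE) between a cover frame and a stego-frame as 10 log10(L² / MSE), with L = 255 for 8-bit samples. The function name and the representation of frames as lists of rows are assumptions of this example.

```python
import math


def psnr(reference, distorted, peak=255.0):
    """PSNR in dB between two equally sized frames given as lists of rows."""
    squared_error = 0.0
    count = 0
    for ref_row, dis_row in zip(reference, distorted):
        for r, d in zip(ref_row, dis_row):
            squared_error += (r - d) ** 2
            count += 1
    if squared_error == 0.0:
        return math.inf  # identical frames
    mse = squared_error / count
    return 10.0 * math.log10(peak * peak / mse)


# Changing every sample by one grey level gives MSE = 1.
cover = [[10, 20], [30, 40]]
stego = [[11, 19], [31, 41]]
value = psnr(cover, stego)
```

Every sample contributes equally here, regardless of where it lies on the sphere.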

Appendix A.2. Weighted-to-Spherically Uniform PSNR
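The weighting idea behind WS-PSNR [22] can be sketched as follows for an ERP frame of height H: each row i receives the weight cos((i + 0.5 − H/2) π / H), the weighted mean square error (WMSE) is the weight-normalized sum of squared differences, and WS-PSNR is 10 log10(L² / WMSE). The function name and the list-of-rows frame representation are assumptions of this example, and it processes a single component only.

```python
import math


def ws_psnr(reference, distorted, peak=255.0):
    """WS-PSNR in dB for one component of an ERP frame (lists of rows)."""
    height = len(reference)
    weighted_error = 0.0
    weight_sum = 0.0
    for i, (ref_row, dis_row) in enumerate(zip(reference, distorted)):
        # ERP row weight: largest near the equator, smallest near the poles.
        w = math.cos((i + 0.5 - height / 2.0) * math.pi / height)
        for r, d in zip(ref_row, dis_row):
            weighted_error += w * (r - d) ** 2
            weight_sum += w
    if weighted_error == 0.0:
        return math.inf  # identical frames
    wmse = weighted_error / weight_sum
    return 10.0 * math.log10(peak * peak / wmse)


# The same distortion placed near a pole or near the equator.
cover = [[100, 100], [100, 100], [100, 100], [100, 100]]
near_pole = [[101, 101], [100, 100], [100, 100], [100, 100]]
near_equator = [[100, 100], [101, 101], [100, 100], [100, 100]]
```

In this sketch the distortion in the top row is penalized less than the same distortion in a row next to the equator, so `ws_psnr(cover, near_pole)` exceeds `ws_psnr(cover, near_equator)`.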

References

1. Huawei iLab. VR Big Data Report; Huawei Technologies Co., Ltd.: Shenzhen, China, 2017.

2. Huawei iLab. Cloud VR Network Solution White Paper; Huawei Technologies Co., Ltd.: Shenzhen, China, 2018.

3. Yrjölä, S.; Ahokangas, P.; Matinmikko-Blue, M. (Eds.) White Paper on Business of 6G. (6G Research Visions, No. 3); University of Oulu: Oulu, Finland, 2020.

4. Djebbar, F.; Ayad, B.; Meraim, K.A.; Hamam, H. Comparative Study of Digital Audio Steganography Techniques. EURASIP J. Audio Speech Music Process. 2012, 2012, 1–16.

5. Cheddad, A.; Condell, J.; Curran, K.; Kevitt, P.M. Digital Image Steganography: Survey and Analysis of Current Methods. Signal Process. 2010, 90, 727–752.

6. Kadhim, I.J.; Premaratne, P.; Vial, P.J.; Halloran, B. Comprehensive Survey of Image Steganography: Techniques, Evaluations, and Trends in Future Research. Neurocomputing 2019, 335, 299–326.

7. Liu, Y.; Liu, S.; Wang, Y.; Zhao, H.; Liu, S. Video Steganography: A Review. Neurocomputing 2019, 335, 238–250.

8. Tran, D.N. On LSB Data Hiding in New Digital Media; Licentiate Dissertation, Blekinge Institute of Technology: Karlskrona, Sweden, 2020.

9. Yu, M.; Lakshman, H.; Girod, B. Framework to Evaluate Omnidirectional Video Coding Schemes. In Proceedings of the International Symposium on Mixed and Augmented Reality, Fukuoka, Japan, 29 September–3 October 2015; pp. 31–36.

10. Recommendation ITU-T P.919. Subjective Test Methodologies for 360 Degree Video on HMD; International Telecommunication Union—Telecommunication Standardization Sector: Geneva, Switzerland, 2020.

11. Xu, M.; Li, C.; Chen, Z.; Wang, Z.; Guan, Z. Assessing Visual Quality of Omnidirectional Videos. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 3516–3530.

12. Tran, D.N.; Zepernick, H.J. Spherical Light-Weight Data Hiding in 360-Degree Videos With Equirectangular Projection. In Proceedings of the 2019 International Conference on Advanced Technologies for Communications (ATC), Hanoi, Vietnam, 17–19 October 2019; pp. 56–62.

13. Tran, D.N.; Zepernick, H.J. Spherical LSB Data Hiding in 360° Videos Using Morphological Operations. In Proceedings of the 2019 13th International Conference on Signal Processing and Communication Systems (ICSPCS), Gold Coast, Australia, 16–18 December 2019; pp. 573–582.

14. Tran, D.N.; Zepernick, H.J.; Chu, T.M.C. Visual Attention Based LSB Data Hiding in 360° Videos. In Proceedings of the 2020 14th International Conference on Signal Processing and Communication Systems (ICSPCS), Adelaide, Australia, 14–16 December 2020; pp. 1–8.

15. Bjarne. YUV Pixel Formats. Available online: https://www.fourcc.org/yuv.php (accessed on 8 May 2021).

16. Beihang University, School of Electronic and Information Engineering, Beijing, China. VQA-ODV. Available online: https://github.com/Archer-Tatsu/VQA-ODV (accessed on 1 June 2020).

17. Li, C.; Xu, M.; Wang, Z. Bridge the Gap Between VQA and Human Behavior on Omnidirectional Video: A Large-Scale Dataset and a Deep Learning Model. In Proceedings of the ACM International Conference on Multimedia, Seoul, Korea, 15 October 2018; pp. 932–940.

18. Recommendation ITU-T P.910. Subjective Video Quality Assessment Methods for Multimedia Applications; International Telecommunication Union—Telecommunication Standardization Sector: Geneva, Switzerland, 2008.

19. Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables, 10th ed.; Mathematics Series; National Bureau of Standards: Washington, DC, USA, 1972; Volume 55.

20. Huynh-Thu, Q.; Ghanbari, M. Scope of Validity of PSNR in Image/Video Quality Assessment. Electron. Lett. 2008, 44, 800–801.

21. Huynh-Thu, Q.; Ghanbari, M. The Accuracy of PSNR in Predicting Video Quality for Different Video Scenes and Frame Rates. Telecommun. Syst. 2012, 49, 35–48.

22. Sun, Y.; Lu, A.; Yu, L. Weighted-to-Spherically-Uniform Quality Evaluation for Omnidirectional Video. IEEE Signal Process. Lett. 2017, 24, 1408–1412.

23. Wu, H.R.; Rao, K.R. Digital Video Image Quality and Perceptual Coding; CRC Press: Boca Raton, FL, USA, 2006.

24. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

25. Samsung, Republic of Korea. 360tools: Projection and Quality Evaluation Tools for VR Video Compression Exploration Experiments. Available online: https://github.com/Samsung/360tools (accessed on 23 May 2019).

| k | | | |
|---|---|---|---|
| 1 | 0.2272 | −2.3738 | 6.6437 |
| 2 | 0.6333 | 1.8260 | 14.8171 |
| 3 | 0.1727 | 1.4618 | 36.1311 |
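To show how a normalized relative viewing direction frequency could be obtained from a three-component GMM, the sketch below evaluates a one-dimensional mixture on a grid of angles and scales it so that its peak equals 1. Several points are assumptions rather than statements from the paper: the column headers of the table above did not survive extraction, so its columns are read here as mixture weight, mean, and standard deviation; the table is taken to describe the latitude in degrees; and peak normalization is only one possible way to normalize.

```python
import math


def gmm_pdf(x, components):
    """Evaluate a 1-D Gaussian mixture at x.

    components is a list of (weight, mean, std) tuples.
    """
    total = 0.0
    for weight, mean, std in components:
        z = (x - mean) / std
        total += weight * math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))
    return total


def normalized_frequency(grid, components):
    """Scale the mixture so that its largest value on the grid equals 1."""
    values = [gmm_pdf(x, components) for x in grid]
    peak = max(values)
    return [v / peak for v in values]


# Rows k = 1, 2, 3 of the table, read as (weight, mean, std).
# This reading of the columns is an assumption.
COMPONENTS_K = [
    (0.2272, -2.3738, 6.6437),
    (0.6333, 1.8260, 14.8171),
    (0.1727, 1.4618, 36.1311),
]

grid = list(range(-90, 91))  # assumed latitude grid in degrees
freq = normalized_frequency(grid, COMPONENTS_K)
```

Under this reading, the frequency is highest close to 0° and falls off towards ±90°.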

| l | | | |
|---|---|---|---|
| 1 | 0.1988 | −0.1549 | 4.6740 |
| 2 | 0.6198 | 1.5140 | 18.51 |
| 3 | 0.1871 | 6.3670 | 110.5 |

| b | | | | | | | |
|---|---|---|---|---|---|---|---|
| 1 | | | | | | | |
| 1 | | | | | | | |
| 1 | | | | | | | |
| 1 | | | | | | | |
| 1 | | | | | | | |
| 3 | | | | | | | |
| 3 | | | | | | | |
| 3 | | | | | | | |
| 3 | | | | | | | |
| 3 | | | | | | | |
| 6 | | | | | | | |
| 6 | | | | | | | |
| 6 | | | | | | | |
| 6 | | | | | | | |
| 6 | | | | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tran, D.N.; Zepernick, H.-J.; Chu, T.M.C. Viewing Direction Based LSB Data Hiding in 360° Videos. Electronics 2021, 10, 1527. https://doi.org/10.3390/electronics10131527
