1. Introduction

Until recently, watermark embedding for most 2D images has been performed algorithmically, and watermark extraction methods have been derived from the embedding process or from a modification of it [1,2,3,4,5,6,7]. In general, watermarking is subject to non-malicious and malicious attacks. Deliberately damaging or removing watermark information is called a malicious attack, and image processing performed to store or distribute content is called a non-malicious attack. Watermark embedding can be performed algorithmically or deterministically, but watermark extraction cannot. An image signal that has already been attacked may not be the signal that was anticipated when the algorithmic extraction method was devised. Therefore, it may not be possible to extract the embedded watermark with a deterministic extraction method. To overcome such difficulties, watermarking techniques using machine learning have been studied [8,9,10,11,12,13,14]. These methods also separate the embedding process from the extraction process and implement each as a network.

Research on watermarking techniques using deep learning is still at an early stage. Although not many studies exist, their number has been increasing in recent years. Previous studies mainly use the convolutional neural network (CNN), the autoencoder (AE), and the generative adversarial network (GAN), which are widely used in deep learning. We look at the features of the previous studies in detail in the next section [8,9,10,11,12,13,14,15]. Much research has also been conducted on implementing watermarking algorithms in hardware for various purposes [16,17,18,19,20,21,22,23,24]. This work mainly targets field programmable gate arrays (FPGAs), and recently, studies based on graphics processing units (GPUs) have also been conducted. Among previous studies, it is difficult to find a case in which a deep learning-based watermarking algorithm is implemented in hardware. Considering the recent trend of embedding neural processing units (NPUs) in various systems on chip (SoCs), the hardware implementation of deep learning-based watermarking algorithms can be expected to expand.

In this study, we design and implement a watermark embedder as an ASIC that embeds a user-selected watermark at high speed. To this end, we propose an optimization methodology for the arithmetic and the bus after applying a fixed-point simulation for the hardware implementation. We propose a new hardware architecture for the efficient operation of watermarking and implement it in hardware. We verify that the implemented hardware can operate at high speed by analyzing the watermarking processor.

This paper is organized as follows. Section 2 introduces related work on deep learning-based watermarking and on the hardware implementation of watermarking. Section 3 explains the deep learning-based watermarking algorithm previously proposed by our team. In Section 4, we propose an optimization methodology for developing hardware and propose a hardware structure. In Section 5, we show the experimental results, and we conclude the paper in Section 6.

2. Related Work

In this section, we analyze previous studies in the two fields that underlie our research. First, we review watermarking techniques using deep learning [8,9,10,11,12,13,14,15], including our previously published study, which provides the deep learning-based watermarking algorithm used in this paper [15]. Next, we review studies that implemented watermarking algorithms in hardware. An analysis of these studies confirms that there is no case of implementing a deep learning-based watermarking algorithm in hardware [16,17,18,19,20,21,22,23,24].

J. Zhu et al. proposed a steganalysis network and added an adversarial loss between the host image and the watermarked image to the loss function of the watermarking network [9]. M. Ahmadi et al. proposed a watermark embedding and extraction method that operates in the frequency domain using DCT and inverse DCT layers with pre-trained, fixed weights [10]. S. M. Mun et al. used an AE consisting of residual blocks for watermarking [11]. X. Zhong et al. used an invariance layer to remove the effect of attacks and proposed a new modeling method for attack simulation using the Frobenius norm in the loss function [12]. Bingyang et al. used the same network as J. Zhu et al. but replaced the fixed attack simulation with an adaptive attack simulation [13]. Y. Liu et al. proposed a new two-stage training method that first trains the whole network without attack simulation and then re-trains the extractor with attack simulation added [14].

Most machine learning-based methods are blind watermarking methods [9,10,11,12,13,14], use spatial data rather than frequency data [8,9,11,12,13,14], and use both host and watermark data only in a limited way [9,10,11,12,13,14,15]. When a specified watermark is used as training data, new training is inevitable whenever a new watermark is used. These methods have the problem that the host image size is fixed by the use of a fully-connected layer [9,13,14], or that universality is not established because no experiments were run at various resolutions [10,11,12]. Among them, some methods did not address the trade-off between invisibility and robustness [8,9,12,13]. Our research team proposed a new universal and practical watermarking algorithm that embeds a watermark without additional training. Various experiments demonstrated its high invisibility and its robustness against various pixel-value and geometric attacks [15].

Although it might be easier to implement a watermarking algorithm on a software platform, there is strong motivation to move toward hardware implementation. A hardware implementation offers several distinct advantages over a software implementation in terms of low power consumption, small area, and reliability. It features real-time capability and compact implementation [16]. In consumer electronic devices, a hardware watermarking solution is often more economical because adding the watermark component takes up only a small dedicated area of silicon. Several hardware-implemented watermarking techniques have been proposed in various domains, involving the use of very large scale integration (VLSI), hash functions, or FPGAs. Mohanty et al. presented an FPGA-based implementation of an invisible spatial-domain watermarking encoder [17]. Another work by Mohanty et al. provides a VLSI implementation of invisible digital watermarking algorithms toward the development of a secure JPEG encoder [18]. Several attempts have been made to develop digital cameras with watermarking capabilities. Friedman et al. carried out important research on algorithms for watermarking and encryption with the aim of using them in digital cameras [19]. Adamo et al. used a VLSI architecture along with an FPGA prototype of a digital camera for image security and authentication [20]. Adamo et al. also proposed a chip for a secure digital camera using a DCT-based visible watermarking algorithm [21]. Mathai et al. proposed a real-time video watermarking scheme called Just another Watermarking System (JAWS) [22]. Mohanty et al. [23] proposed a new generation of GPU architecture with a watermarking co-processor that enables the GPU to watermark multimedia elements, such as images, efficiently. A hierarchical watermarking scheme that uses IC design to independently process the multiple abstraction levels present in a design has also been proposed [24]. Among the results published so far, there is still no case of implementing watermarking hardware based on deep learning. We intend to optimize the deep learning-based watermarking algorithm we recently developed [15] and implement it in hardware. We therefore aim to present the first case of watermarking hardware based on deep learning.

3. Deep Learning-Based Watermarking

We studied an algorithm that effectively performs watermarking using deep learning. Based on this algorithm, we propose a new dedicated processor for watermarking. This section introduces the deep learning-based watermarking algorithm [15]; the optimization methodology is proposed in the next section.

3.1. Network Structure

The structure of the digital watermarking network is shown in Figure 1. It consists of four sub-networks: the host image pre-processing, watermark pre-processing, embedding, and extraction networks. The attack simulation is also part of the network, but it is a signal processing step rather than a neural network. The host image pre-processing network consists of a convolution layer with 64 filters and a stride of 1, which maintains the original resolution of the host image.

3.2. Network Operation

The watermark pre-processing network is configured to gradually increase the resolution to match the resolution of the host image pre-processing network. This increases the watermark invisibility. Our experiments confirmed that maintaining the resolution of the host image during watermark embedding gives higher watermark invisibility than reducing the host resolution to that of the watermark and then increasing it again to output the watermarked image. This network includes four blocks: the first three consist of a convolution layer (CL), batch normalization (BN), an activation function (AF), and average pooling (AP), whereas the last block consists of only a CL and AP. All CLs have a stride of 0.5 for up-sampling. The numbers of filters are 512, 256, 128, and 1, respectively. The AF is the rectified linear unit (ReLU), and the AP uses a 2 × 2 filter with a stride of 1. The output of the watermark pre-processing network is multiplied by a strength scaling factor to control the invisibility and robustness of the watermark (WM).
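As a rough illustration of how the four blocks bring the watermark up to the host resolution, the sketch below traces the feature-map shape through the network. It assumes that a stride of 0.5 doubles the height and width in each block and that the 2 × 2 average pooling with a stride of 1 is padded so that it leaves the resolution unchanged; the 8 × 8 watermark size and the function name are hypothetical and are not taken from the paper.

```python
def trace_watermark_preprocessing(wm_height, wm_width):
    """Return the (height, width, channels) shape after each of the four blocks."""
    filters_per_block = [512, 256, 128, 1]  # filter counts stated in the text
    shapes = []
    height, width = wm_height, wm_width
    for filters in filters_per_block:
        # Assumption: a stride of 0.5 up-samples by 2x in each dimension,
        # and the stride-1 average pooling keeps the resolution (padded).
        height, width = height * 2, width * 2
        shapes.append((height, width, filters))
    return shapes


# Hypothetical 8 x 8 watermark, used only for illustration.
shapes = trace_watermark_preprocessing(8, 8)
for block, shape in enumerate(shapes, start=1):
    print(f"block {block}: {shape}")
```

Under these assumptions the four blocks enlarge the watermark by a factor of 16 in each dimension, so the final single-channel map can be concatenated with the 64-channel host features.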

The watermark embedding network concatenates the 64 channels of pre-processed host information with the one channel of pre-processed watermark information and uses the result as its input to produce the watermarked image. The network consists of CL-BN-AF (ReLU) for the first four blocks and CL-AF (tanh) for the last block. The tanh activation preserves both positive and negative values so that the output matches the [−1, 1] data range of the input host information. Because we aim for invisible watermarking, we use the mean square error (MSE) between the watermarked image and the host image as the loss function of the pre-processing networks and the embedding network. This is shown in Equation (1), where M × N is the resolution of the host image [15].
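For reference, the standard MSE over an M × N image can be written as follows. The symbols $I_{wmd}$ (watermarked image), $I_{host}$ (host image), and $L_1$ (loss) are placeholder names assumed here and may differ from the notation of [15].

```latex
L_1 = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N}
      \bigl( I_{wmd}(i,j) - I_{host}(i,j) \bigr)^{2}
```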

The extraction network consists of three CL-BN-AF (ReLU) blocks followed by a final CL-AF (tanh) block. We set the stride of all CLs to 2 for down-sampling. The numbers of filters used in the CLs are 128, 256, 512, and 1, respectively. This network uses the mean absolute error (MAE) between the extracted WM and the original WM as its loss function, as shown in Equation (2), where X × Y is the resolution of the watermark [15].
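For reference, the standard MAE over an X × Y watermark can be written as follows. The symbols $WM_{ext}$ (extracted watermark), $WM_{o}$ (original watermark), and $L_2$ (loss) are placeholder names assumed here and may differ from the notation of [15].

```latex
L_2 = \frac{1}{XY} \sum_{i=1}^{X} \sum_{j=1}^{Y}
      \bigl| WM_{ext}(i,j) - WM_{o}(i,j) \bigr|
```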

The loss function for the watermark embedding combines the loss of the embedding network with the loss of the extraction network, as given in Equation (3). On the other hand, the loss function for the extraction consists only of the extraction loss, as given in Equation (4).

In Equations (3) and (4), the three coefficients are hyper-parameters of the neural network that control both invisibility and robustness.
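A small worked example may make the interplay of the two losses concrete. The sketch below assumes that the embedding loss is a weighted sum of the image loss (MSE) and the watermark loss (MAE), and that the extraction loss is a weighted watermark loss. The weighted-sum form, the names `lambda1`, `lambda2`, and `lambda3`, and all numeric values are assumptions for illustration, not values from the paper.

```python
def mean_square_error(a, b):
    """MSE between two equally sized 2D arrays given as lists of rows."""
    count = len(a) * len(a[0])
    return sum((p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)) / count


def mean_absolute_error(a, b):
    """MAE between two equally sized 2D arrays given as lists of rows."""
    count = len(a) * len(a[0])
    return sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb)) / count


def embedding_loss(image_loss, watermark_loss, lambda1, lambda2):
    """Assumed form: weighted sum of the invisibility and robustness terms."""
    return lambda1 * image_loss + lambda2 * watermark_loss


def extraction_loss(watermark_loss, lambda3):
    """Assumed form: the extractor is trained on the watermark loss only."""
    return lambda3 * watermark_loss


# Toy data in the [-1, 1] range; sizes and values are illustrative only.
host = [[0.0, 0.5], [-0.5, 1.0]]
watermarked = [[0.25, 0.5], [-0.5, 0.75]]
original_wm = [[1.0, -1.0]]
extracted_wm = [[0.5, -1.0]]

l1 = mean_square_error(watermarked, host)
l2 = mean_absolute_error(extracted_wm, original_wm)
print(embedding_loss(l1, l2, lambda1=1.0, lambda2=0.5))
print(extraction_loss(l2, lambda3=2.0))
```

Raising the weight on the image loss favors invisibility, while raising the weight on the watermark loss favors robustness, which is the trade-off the hyper-parameters are described as controlling.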

For high robustness, the watermarked image is intentionally subjected to preset attacks in the attack simulation. Table 1 shows the types, strengths, and ratio of each attack used in one mini-batch during training [9,10].

In this paper, we use the deep learning-based watermarking algorithm proposed in our previous study [15]. The robustness of this watermarking algorithm is summarized in Table 2 [15]. The results in Table 2 correspond to a watermark-embedded image quality of 40.58 dB (BER:0.7015, VIF [25]: 0.7350). This image quality was chosen for comparison with the latest similar study, ReDMark [10]. Compared with ReDMark, it showed excellent attack robustness in most cases. Table 2 shows the quality of the watermark extracted from the watermark-embedded image in terms of BER (bit error rate) and VIF (visual information fidelity).

4. Watermarking Processor

In this section, we develop hardware for digital watermarking based on deep learning. The purpose of a hardware implementation is high-speed operation or high performance. Since extraction does not require high-speed operation, only the embedding is implemented in hardware.

We propose an optimization method to implement a deep learning-based watermarking algorithm in hardware. The optimizations that we propose include computational optimization for batch normalization and memory optimization using shared memory. That is, the optimization step corresponds to the process of reconfiguring the S/W-based algorithm for hardware implementation. When a modified algorithm is obtained through this process, the hardware is designed using this algorithm. These two optimization techniques are at the core of what we are proposing.

We consider the operation information of the hardware and modify the operation method of the algorithm for the software to be suitable for the operation of the hardware. We define this process as an optimization process. Since we use a watermarking algorithm based on deep learning, we optimize the operation method of the neural network for deep learning according to the hardware implementation and operation. Since the deep neural network for our watermarking algorithm is a CNN-based network, we propose an optimization technique for hardware implementation by analyzing the calculation and operation of CNN. CNN operation requires a lot of memory resources and the number of memory accesses. This is not a big problem when it is a software operation, but when implemented in hardware, it greatly affects the operation of the hardware due to a memory bottleneck. Therefore, we propose a memory access optimization technique to alleviate the memory access bottleneck.

4.1. Optimization Methodology

To improve hardware performance, we propose an optimization for deep learning that minimizes the amount of calculation and the number of memory accesses. The convolution arithmetic multiplies the input feature map (IFM) by the weight and adds the bias B to the accumulated products. The convolution is defined in Equation (5), where A × B is the resolution of the weight.

The batch normalization has four kinds of parameters: the data average, the standard deviation, and two trained parameters. The formula is defined in Equation (6), which gives the batch-normalized result of its input.

Since batch normalization requires a large number of parameters, it incurs many memory accesses and a high calculation cost due to division. To optimize the batch normalization, we analyze the arithmetic of the convolution and the batch normalization together. Equation (7) is obtained by combining Equations (5) and (6) and rearranging the result. Equation (7) shows that both the convolution and the batch normalization can be calculated with a single convolution, without any separate calculation for the batch normalization. This is an efficient optimization because it greatly reduces the number of memory accesses and the calculation cost. Equation (7) uses a modified weight and a modified bias.
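The combination can be sketched as the following derivation. The notation is assumed here for illustration and may differ from the paper's: $x_{a,b}$ is the IFM window, $w_{a,b}$ the weight, $b_c$ the convolution bias, $\mu$ and $\sigma$ the data average and standard deviation, and $\gamma$ and $\beta$ the trained parameters. Any small stabilizing constant is taken to be already absorbed into $\sigma$.

```latex
\begin{aligned}
y &= \sum_{a=1}^{A} \sum_{b=1}^{B} x_{a,b}\, w_{a,b} + b_c
  && \text{(convolution)} \\
\hat{y} &= \gamma\, \frac{y - \mu}{\sigma} + \beta
  && \text{(batch normalization)} \\
\hat{y} &= \sum_{a=1}^{A} \sum_{b=1}^{B} x_{a,b}\,
  \underbrace{\frac{\gamma}{\sigma}\, w_{a,b}}_{w'_{a,b}}
  + \underbrace{\frac{\gamma\,(b_c - \mu)}{\sigma} + \beta}_{b'}
  && \text{(folded form)}
\end{aligned}
```

Because $w'_{a,b}$ and $b'$ depend only on trained parameters, they can be computed once offline, so no division remains at run time.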

Table 3 shows the comparison result before and after optimization. After optimization, the 2-input adder is reduced to about 30%, and the divider is no longer required.
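The equivalence behind this optimization can be checked numerically. The sketch below folds the batch normalization parameters into the convolution weight and bias and compares the result with the two-step calculation for one 3 × 3 window. The function names and all numeric values are hypothetical, and the standard deviation is assumed to already include any stabilizing constant.

```python
def conv_at(patch, weights, bias):
    """One convolution output: multiply-accumulate over a flattened window, plus bias."""
    return sum(x * w for x, w in zip(patch, weights)) + bias


def batch_norm(value, mean, std, gamma, beta):
    """Batch normalization of a single value; this step needs a division."""
    return gamma * (value - mean) / std + beta


def fold_batch_norm(weights, bias, mean, std, gamma, beta):
    """Precompute the modified weight and bias so no run-time division is needed."""
    scale = gamma / std  # computed once, offline
    folded_weights = [w * scale for w in weights]
    folded_bias = (bias - mean) * scale + beta
    return folded_weights, folded_bias


# Hypothetical 3 x 3 window and parameters, flattened row by row.
patch = [0.5, -1.0, 2.0, 0.25, 0.0, -0.75, 1.5, 1.0, -0.5]
weights = [0.1, -0.2, 0.3, 0.05, 0.4, -0.1, 0.2, -0.3, 0.15]
bias, mean, std, gamma, beta = 0.2, 0.1, 1.7, 0.9, -0.05

two_step = batch_norm(conv_at(patch, weights, bias), mean, std, gamma, beta)
folded_weights, folded_bias = fold_batch_norm(weights, bias, mean, std, gamma, beta)
one_step = conv_at(patch, folded_weights, folded_bias)
print(abs(two_step - one_step) < 1e-12)
```

The folded path uses the same multiply-accumulate structure as a plain convolution, which is why the separate subtraction, division, and scaling disappear from the datapath.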

After optimizing the number of arithmetic operations, we optimize the amount of memory access. We propose a method to reduce the number of memory accesses for repeatedly used weights. Rather than being fetched from external memory each time they are used, the weights are stored in shared internal memory and reused. We also increase the reuse rate and reduce the number of memory accesses by reusing the input feature map for each row of the image. The amount of memory access resulting from Equation (7) is compared in Table 4. The memory access decreases by a factor of about 21 after the algorithm optimization and by a factor of about 2492 after the memory optimization.
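To give a feel for why weight reuse matters, the sketch below counts external weight reads for one convolution layer under a deliberately simple model: without reuse, every output pixel re-fetches all of its filter taps, and with reuse, each weight is loaded once into on-chip memory. The layer dimensions and function names are hypothetical, and the model is not meant to reproduce the figures in Table 4.

```python
def weight_fetches_without_reuse(height, width, in_channels, out_channels, kernel=3):
    """External weight reads when every output pixel re-fetches its filter taps."""
    return height * width * in_channels * out_channels * kernel * kernel


def weight_fetches_with_reuse(in_channels, out_channels, kernel=3):
    """External weight reads when each weight is loaded once into shared memory."""
    return in_channels * out_channels * kernel * kernel


# Hypothetical layer size, chosen only for illustration.
H, W, C_IN, C_OUT = 128, 128, 64, 64
before = weight_fetches_without_reuse(H, W, C_IN, C_OUT)
after = weight_fetches_with_reuse(C_IN, C_OUT)
print(before, after, before // after)
```

In this simplified model the reduction factor equals the number of output pixels, which suggests why keeping weights on chip pays off more as the image resolution grows.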

In addition, the maximum and minimum values of IFM before optimization changed from 41.95 and −57.22 to 22.54 and −21.58 after optimization. This fact makes it possible to use less hardware resources in the process of analyzing the number system, which will be explained in the next section.

4.2. Fixed-Point Number System

Unlike other signal processing techniques, some variables to be processed in an equation have very different value ranges such that a variable has almost no fractional part and a large integer part. Still, another variable has almost no integer part and only has many digits of a fractional part. Such calculation dramatically increases the size of the computing element and decreases the calculation efficiency. Therefore, before implementing the hardware, the number range and precision for the intermediate calculations must be analyzed, and the bit-width (size of the bus) adequately adjusted.

For a software implementation, calculation errors seldom occur, or are trivial if they do, because the calculation is usually carried out with high precision. However, a hardware implementation must have precision limits because of the limited hardware resources. Let us consider a case in which two operands, each with an integer part and a fractional part, are multiplied, but we only need the fractional part of the result to calculate the argument of the cosine function in the CGH calculation. An example of a fixed-point simulation for such a case is shown in Figure 2, in which three partial products are generated. The desired result can be obtained by adding all these partial products. In the fixed-point simulation, the result is checked while the number of bits for the integer and fractional parts is increased. Note that each bit added to the integer part doubles the representable range, and each bit added to the fractional part doubles the precision. In our experiment, the number of bits was increased until the results of the fixed-point simulation and the software execution were the same.
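The bit-width search described above can be sketched as a small fixed-point simulation. The code below quantizes sample values to a signed fixed-point format with saturation and increases the number of fractional bits until the error against the floating-point reference falls within a tolerance. The function names, the tolerance, and the choice of six integer bits are assumptions for illustration; the two extreme sample values are the post-optimization IFM maximum and minimum reported in Section 4.1.

```python
def to_fixed_point(value, int_bits, frac_bits):
    """Quantize to signed fixed point (int_bits includes the sign bit), saturating."""
    scale = 1 << frac_bits
    lowest = -(1 << (int_bits - 1 + frac_bits))
    highest = (1 << (int_bits - 1 + frac_bits)) - 1
    code = round(value * scale)
    code = max(lowest, min(highest, code))  # saturate instead of wrapping around
    return code / scale


def max_error(samples, int_bits, frac_bits):
    """Largest absolute difference between the samples and their quantized values."""
    return max(abs(s - to_fixed_point(s, int_bits, frac_bits)) for s in samples)


def smallest_frac_bits(samples, int_bits, tolerance, limit=32):
    """Increase the fractional bits until the quantization error is acceptable."""
    for frac_bits in range(limit + 1):
        if max_error(samples, int_bits, frac_bits) <= tolerance:
            return frac_bits
    return None  # no format within the limit met the tolerance


# 22.54 and -21.58 are the IFM extremes after optimization; the rest are made up.
samples = [22.54, -21.58, 0.3, -0.007]
INT_BITS = 6        # assumed: covers [-32, 32), enough for the range above
TOLERANCE = 1e-3    # hypothetical acceptance threshold
frac_bits = smallest_frac_bits(samples, INT_BITS, TOLERANCE)
print(INT_BITS, frac_bits)
```

A narrower value range needs fewer integer bits, which is one way to see why the reduced IFM range after optimization saves hardware resources.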

To check whether the two results are the same, we used both numerical analysis and visual analysis for both the calculated digital hologram and the restored object. The peak signal-to-noise ratio (PSNR) and the error rate are used for the numerical analysis. We divide the data into four types: weight, IFM, partial sum, and tanh.

4.3. Hardware Structure

Figure 3 shows the top structure, including the host and watermark image pre-processing network and the embedding network. The structure is divided into the datapath part and the control part. The datapath part includes the memory buffer, the input interface, the convolution block (CONV), the post-processing block (POST), the output buffer, and the SRAM. The memory buffer receives and sends the data for calculation from and to the external memory. The input interface inputs the data to the internal blocks such as the convolution and the post-processing blocks. The post-processing block calculates the activation function. The control part includes the SRAM controller for the SRAM and the main controller for controlling the operation of the datapath part.

In Figure 3, the solid line represents the flow of the control signal, and the dotted line represents the data flow. The signal from the main controller is an input to all blocks except the SRAM, which means that all blocks operate under the control of the main controller. Data from the external memory are transferred to the memory buffer under the control of the main controller. The input interface transfers data to registers in the convolution block and the post-processing block according to the timing of the operation. In the convolution and post-processing operations, the input feature map and the filter data are repeatedly reused. The main controller prioritizes the reuse of the filter data over that of the input feature map.

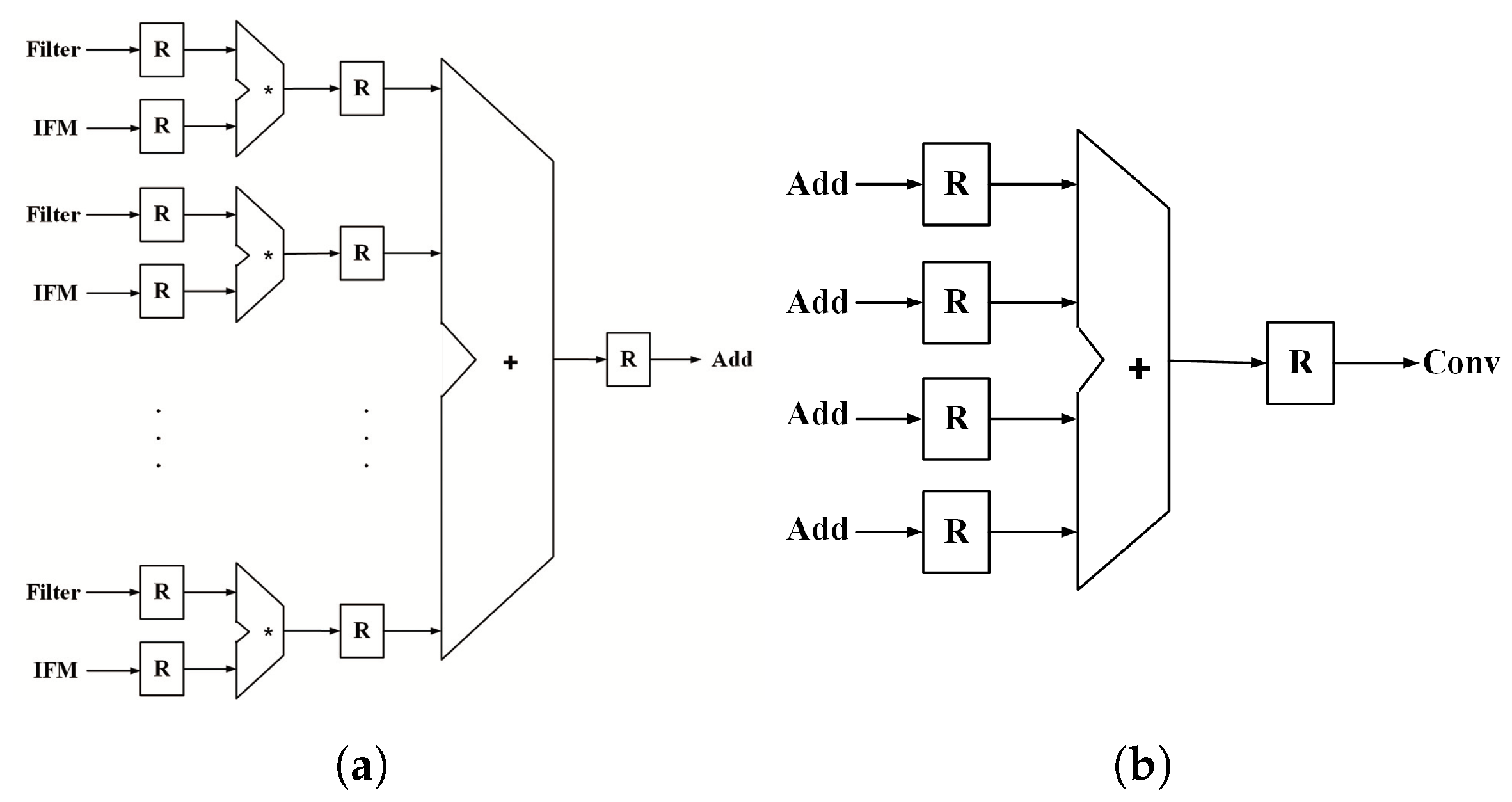

The convolution block is shown in detail in Figure 4. All convolution layers use a 3 × 3 filter, and the number of channels varies according to the convolution block. Considering the amount of hardware, we design the hardware for 4-channel operation, so that the channels of each block are divided into groups of four and processed group by group. The block consists of four single-channel convolution operators (Figure 4a), each of which performs a 3 × 3 multiplication and accumulation, followed by an adder (Figure 4b) that adds the four outputs (Add). The main controller uses information about each block to control the number of convolution operations. The result of the convolution block (Conv) is either stored in the SRAM, to be added to the result of a later channel group, or added to the data read from the SRAM. Data for which all convolution operations have been completed are transferred to the post-processing block.
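The channel-grouped operation can be modeled in software as follows. The sketch computes one output pixel of one filter by running four single-channel 3 × 3 multiply-accumulate operators per pass, summing their outputs, and accumulating the result in a variable that stands in for the SRAM entry. The function names and test data are hypothetical, and the model ignores timing and bit-widths.

```python
def mac_3x3(window, kernel):
    """Single-channel operator: 3 x 3 multiplication and accumulation."""
    return sum(window[r][c] * kernel[r][c] for r in range(3) for c in range(3))


def conv_pixel(windows, kernels, lanes=4):
    """One output pixel of one filter, processing `lanes` input channels per pass.

    windows[ch] and kernels[ch] are 3 x 3 lists for input channel ch.
    """
    partial_sum = 0  # stands in for the partial sum held in the SRAM
    for start in range(0, len(windows), lanes):
        group = range(start, min(start + lanes, len(windows)))
        lane_outputs = [mac_3x3(windows[ch], kernels[ch]) for ch in group]
        # Add the lane outputs, then accumulate with the stored partial sum.
        partial_sum += sum(lane_outputs)
    return partial_sum


# Hypothetical 8-channel input: two passes through the 4-lane block.
windows = [[[ch + r + c for c in range(3)] for r in range(3)] for ch in range(8)]
kernels = [[[1 if r == c else -1 for c in range(3)] for r in range(3)] for ch in range(8)]
reference = sum(mac_3x3(w, k) for w, k in zip(windows, kernels))
result = conv_pixel(windows, kernels)
print(result == reference)
```

With 64 input channels, this model would take 16 passes per output pixel, each adding its four-lane sum to the stored partial sum before the final value moves on to post-processing.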

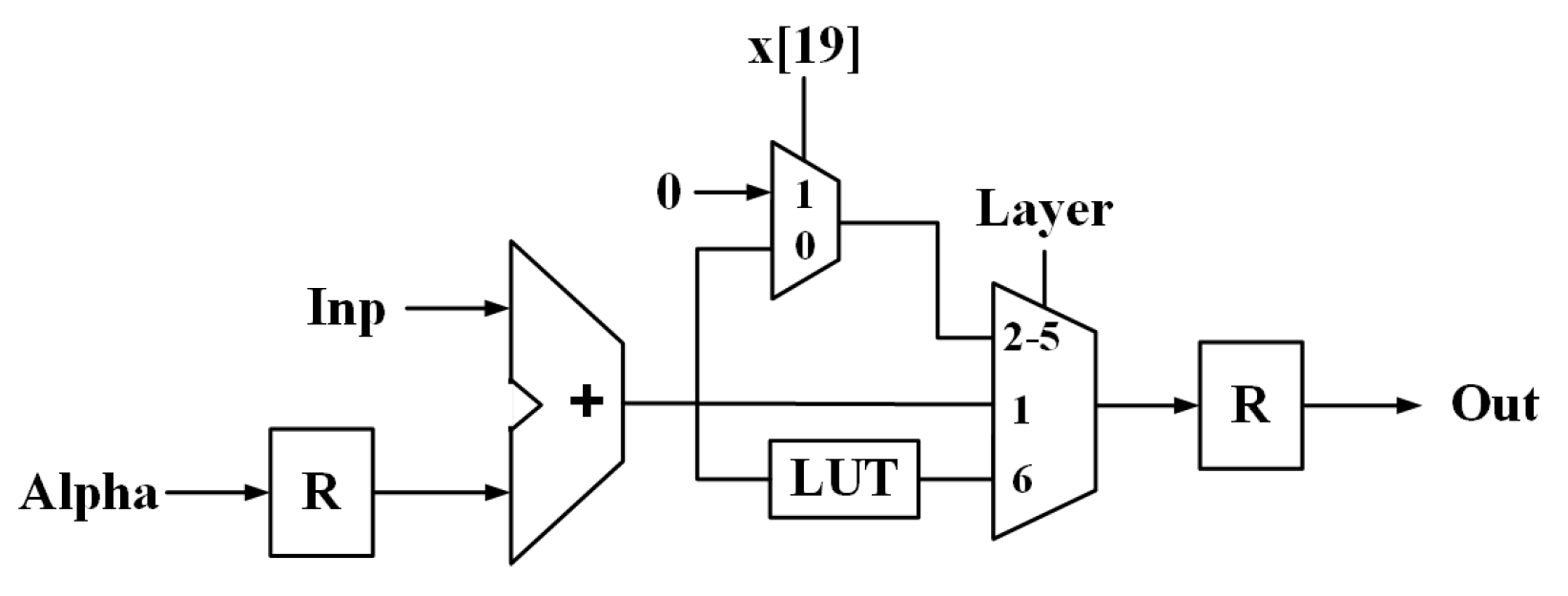

The structure of the post-processing block is shown in Figure 5. The input of this block is added to the modified bias stored in the alpha register. The result of the addition is passed through the activation function (MUX) to the output buffer. The activation function differs according to the layer being processed. The first layer does not use an activation function, so the input is output directly without any calculation. The second to fifth layers use the ReLU as the activation function, and the ReLU is designed as a MUX. If the most significant bit (the sign bit) is zero, the MUX outputs the input. Otherwise, the MUX outputs zero. The sixth layer uses the tanh function as its activation. The other calculations are covered by multiplication and addition, but the tanh activation function requires a complicated exponential function and a division operation. Implementing them in hardware requires large hardware resources and a large amount of calculation. Therefore, we replace this complex and large logic with a look-up table (LUT). The LUT has 256 entries because the bit-width is eight, including the sign bit.
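The two activation paths can be sketched in software as follows. The code assumes two's-complement words, in which a set most significant bit marks a negative value, so the ReLU passes the input only when that bit is clear. The 16-bit word width, the five fractional bits of the LUT address, and the floating-point LUT contents are hypothetical choices for illustration; only the 256-entry size follows from the 8-bit width stated in the text.

```python
import math

WORD_BITS = 16  # assumed width of the word entering the ReLU MUX
LUT_BITS = 8    # from the text: 8-bit tanh input, including the sign bit
FRAC_BITS = 5   # hypothetical number of fractional bits in the LUT address


def relu_mux(word, width=WORD_BITS):
    """ReLU as a 2-to-1 MUX selected by the sign bit of a two's-complement word."""
    sign_bit = (word >> (width - 1)) & 1
    return 0 if sign_bit else word


def build_tanh_lut(bits=LUT_BITS, frac_bits=FRAC_BITS):
    """Precompute tanh for every possible address; 2**bits entries in total."""
    lut = []
    for address in range(1 << bits):
        # Interpret the address as a signed two's-complement fixed-point value.
        signed = address - (1 << bits) if address >= (1 << (bits - 1)) else address
        lut.append(math.tanh(signed / (1 << frac_bits)))
    return lut


TANH_LUT = build_tanh_lut()


def tanh_lookup(code):
    """Replace the exponential and division with a single table read."""
    return TANH_LUT[code & ((1 << LUT_BITS) - 1)]


print(len(TANH_LUT))
print(relu_mux(0x7FFF), relu_mux(0x8001))
print(tanh_lookup(0x20), tanh_lookup(0xE0))
```

In hardware the table would hold quantized outputs rather than floating-point values, but the addressing scheme is the same: the 8-bit input selects one of 256 precomputed results.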

6. Conclusions

In this paper, we proposed dedicated watermarking hardware that can embed watermarks in digital images and videos at high speed, and implemented it as an ASIC. The deep learning-based watermarking algorithm we previously proposed was used as the base platform, and algorithm optimization and memory optimization were applied to the software algorithm. As a result, the computational amount of the algorithm was reduced by a factor of 21, and the number of memory accesses decreased by a factor of about 2492. Through the number system analysis, the 64-bit precision of the software was reduced to 16, 16, 20, and 8 bits for the weight, the IFM, the partial sum, and the tanh, respectively. Finally, the processor implemented as an ASIC used a silicon area of 1,076,147.3750, and watermarking at 1.05 frames per second is possible at 75 MHz. If the operating frequency is increased, real-time watermarking will be possible.