ARINC653 Channel Robustness Verification Using LeonViP-MC, a LEON4 Multicore Virtual Platform

Abstract

1. Introduction

1.1. Motivation

1.2. Related Works

1.2.1. Multicore Simulation Techniques

1.2.2. LLVM as a Simulation Support Tool

1.3. Paper Contribution

2. Multicore Virtual Platform

2.1. Base Single-Core Virtual Platform

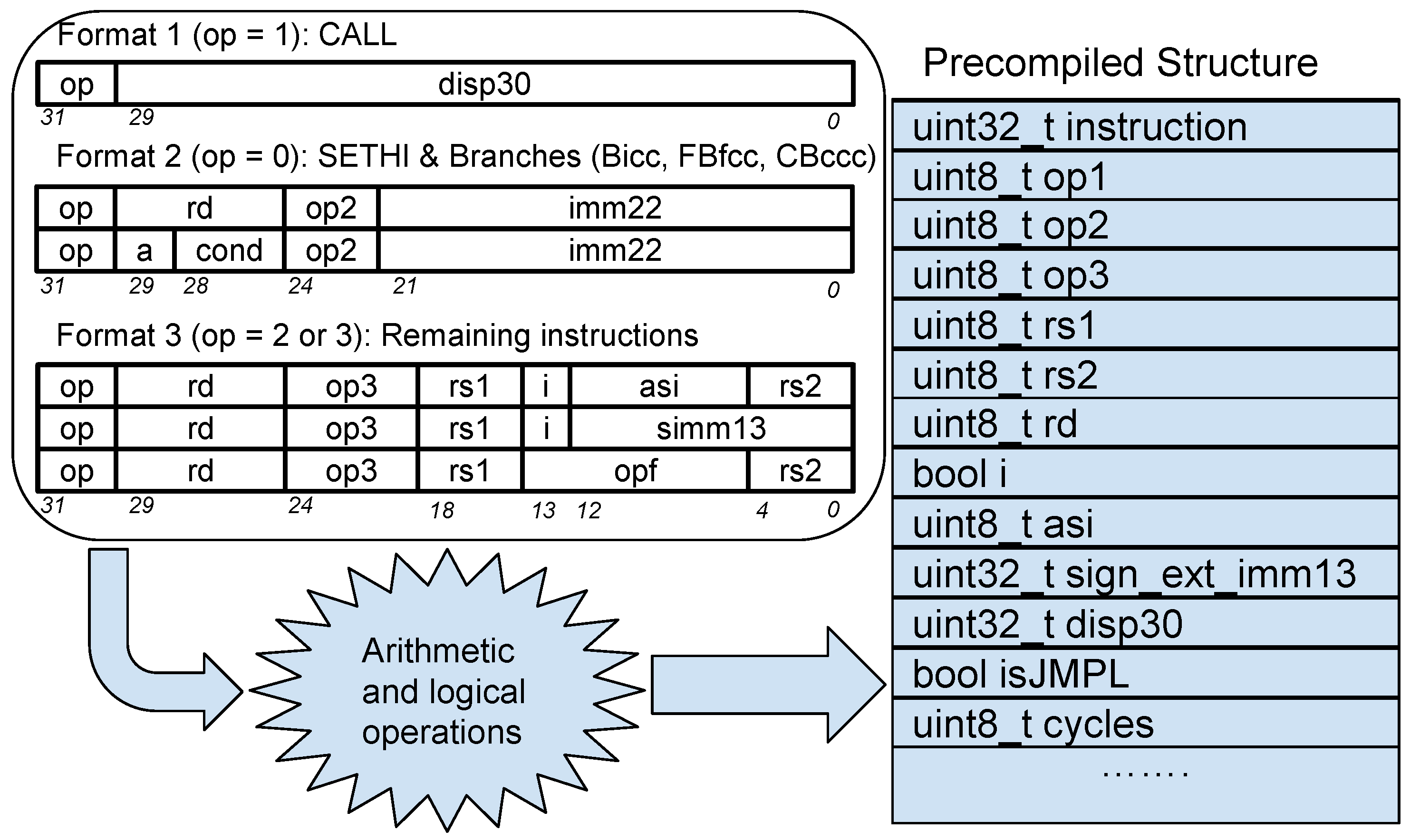

Cycle Accuracy

- Static cycle counting: For each instruction, the simulator assigns the nominal number of cycles it consumes according to the LEON4 processor specification.

- Dynamic cycle counting: The LEON4 specification details the cases in which executing two specific instructions back to back causes a delay, either because of a data hazard or because of the intrinsic nature of the instructions. During instruction execution, the simulator temporarily stores the data needed to compute this delay.

- Peripheral access cycle counting: When an instruction accesses data or other resources beyond the processor cache, the access to the AHB bus may delay execution. The delay may stem from the intrinsic speed of the peripheral, from another core using the bus in the multicore scenario, or both. Since this delay is pseudo-random on real hardware, we estimate the average number of cycles per access from empirical tests performed on the real GR740 platform.
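
The three counting levels listed above can be combined into a single per-instruction accounting step. The following is only a minimal sketch of that idea, not the LeonViP-MC implementation: the class and field names are hypothetical, and every cycle value (`STATIC_CYCLES`, `LOAD_USE_PENALTY`, `AVG_BUS_CYCLES`) is an illustrative placeholder rather than a figure from the LEON4 specification or from GR740 measurements.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# All cycle values below are hypothetical placeholders, not LEON4 figures.
STATIC_CYCLES = {"alu": 1, "load": 1, "store": 2}  # nominal cost per class
LOAD_USE_PENALTY = 1  # assumed delay when a load result is used immediately
AVG_BUS_CYCLES = 8    # assumed average cost of one access beyond the cache


@dataclass
class Instr:
    kind: str                   # key into STATIC_CYCLES
    dest: Optional[str] = None  # register written, if any
    srcs: Tuple[str, ...] = ()  # registers read
    bus_access: bool = False    # True if the access goes beyond the cache


class CycleCounter:
    """Accumulates static, dynamic, and peripheral access cycles."""

    def __init__(self) -> None:
        self.cycles = 0
        self._prev: Optional[Instr] = None  # data kept for the dynamic step

    def account(self, instr: Instr) -> int:
        # Static counting: nominal cost of the instruction.
        cost = STATIC_CYCLES[instr.kind]
        # Dynamic counting: delay caused by the previous instruction
        # (here, only a load followed by a consumer of its result).
        prev = self._prev
        if prev is not None and prev.kind == "load" and prev.dest in instr.srcs:
            cost += LOAD_USE_PENALTY
        # Peripheral access counting: averaged bus delay.
        if instr.bus_access:
            cost += AVG_BUS_CYCLES
        self.cycles += cost
        self._prev = instr
        return cost


program = [
    Instr("load", dest="r1", bus_access=True),
    Instr("alu", dest="r2", srcs=("r1",)),
    Instr("alu", dest="r3", srcs=("r2",)),
]
counter = CycleCounter()
per_instruction = [counter.account(i) for i in program]
print(per_instruction, counter.cycles)  # [9, 2, 1] 12
```

Keeping only the previous instruction as state mirrors the description of temporarily stored data for the dynamic step; a real model would need the full set of instruction pairs listed in the processor specification.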

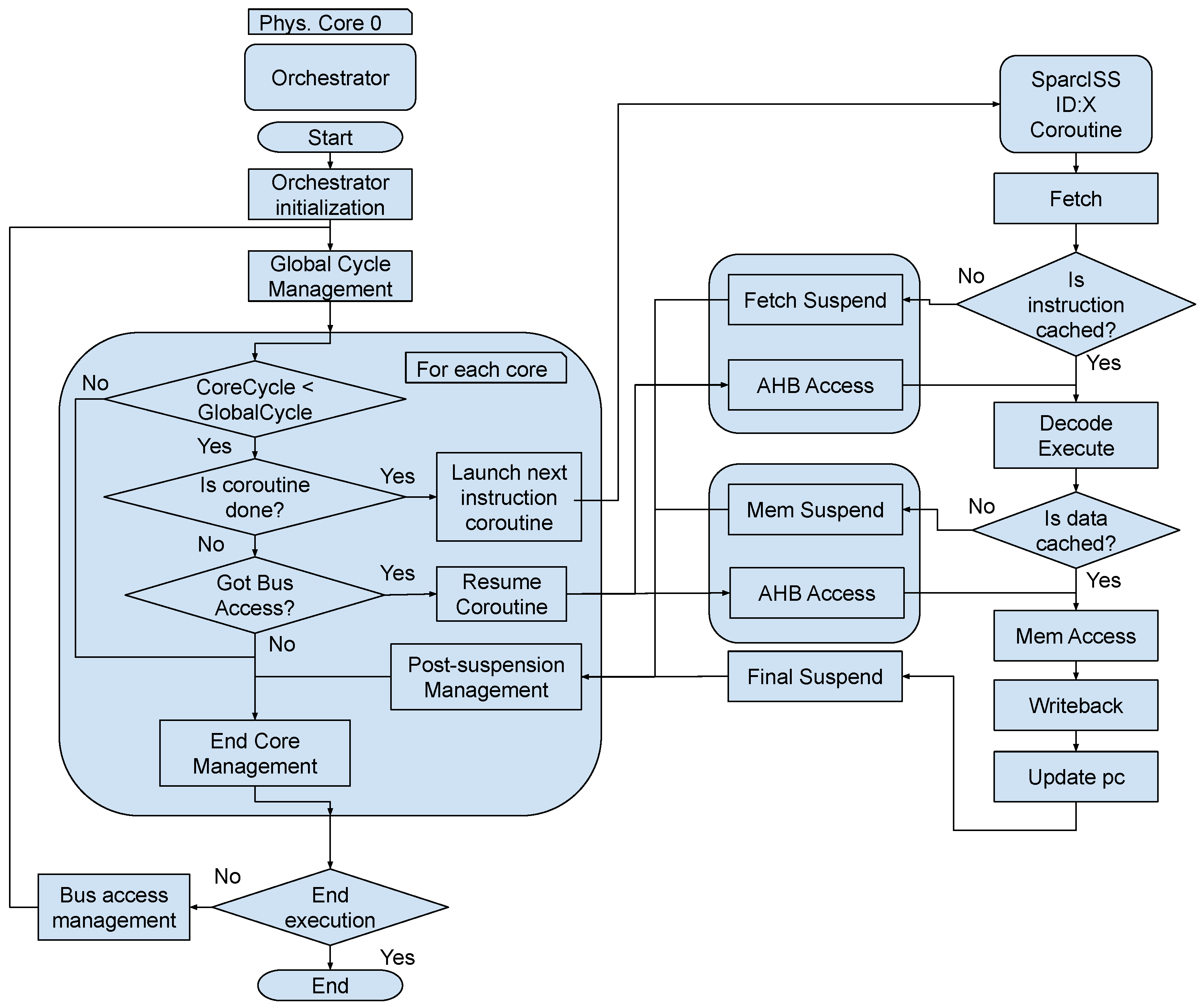

2.2. Multicore Simulation

2.2.1. Multicore Simulation with Threads

2.2.2. Multicore Simulation with LLVM and Coroutines

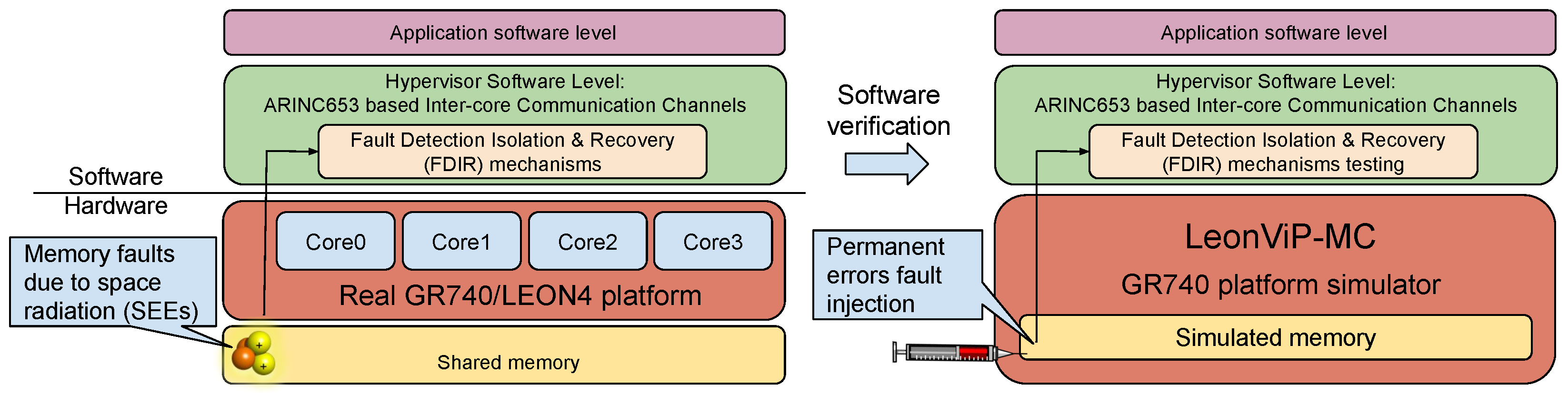

2.3. Fault-Tolerance Campaign Testing Mechanisms

3. Use Case: ARINC653 Message Channel Robustness Verification

- Verifying the boot process carried out by the boot software (BSW) requires considering both the corruption of application binaries stored in the EEPROM and permanent faults in the SDRAM application deployment areas. First, injecting permanent faults into real hardware is not technically achievable in a non-intrusive manner. Second, an exhaustive verification requires corrupting each memory location of the EEPROM and SDRAM areas individually and testing the BSW behavior each time. This leads to a very large number of BSW runs. Although each run takes only a few seconds, completing the entire test would take months on a single machine. Virtual platforms allow the injection of permanent errors and can significantly reduce the verification time, since several instances of the virtual platform can run in parallel on different real machines [30].

- Although the BSW is not deployed in the SDRAM but executes directly from the PROM to increase its reliability, it still uses the SDRAM for the program stack and global variables. To prevent permanent SDRAM faults from causing the BSW itself to malfunction, the stack and runtime variables must not be statically allocated. Instead, during system startup, an error-free memory area is searched for and used for the nominal boot, as described in [32].

- The BSW is criticality category B software that cannot be replaced during the mission, so its testing must cover 100% of the source code statements and decisions. Coverage is a major concern for dependability because code that is never executed during a test workload cannot be properly verified. Efficient coverage of the exception-handling code is therefore essential. The framework developed for the BSW and the resulting figures are described in [7].
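
The effect of running several virtual platform instances in parallel, mentioned in the first item above, can be illustrated with a short sketch. It splits an exhaustive campaign (one BSW run per corrupted memory location) into contiguous chunks, one per machine, and estimates the wall-clock time from the longest chunk. The function names, the memory size, and the time per run are hypothetical values chosen for illustration only; they are not figures from the campaign described in [30].

```python
from typing import List

SECONDS_PER_DAY = 86400


def split_campaign(num_locations: int, num_machines: int) -> List[range]:
    """Split fault locations [0, num_locations) into near-equal chunks."""
    base, extra = divmod(num_locations, num_machines)
    chunks = []
    start = 0
    for machine in range(num_machines):
        # The first `extra` machines take one additional location.
        size = base + (1 if machine < extra else 0)
        chunks.append(range(start, start + size))
        start += size
    return chunks


def campaign_days(num_locations: int, seconds_per_run: float,
                  num_machines: int = 1) -> float:
    """Wall-clock days, bounded by the machine with the longest chunk."""
    chunks = split_campaign(num_locations, num_machines)
    longest = max(len(chunk) for chunk in chunks)
    return longest * seconds_per_run / SECONDS_PER_DAY


# Hypothetical campaign: 1 MiB of memory, one run per byte, 5 s per run.
LOCATIONS = 1024 * 1024
single_machine_days = campaign_days(LOCATIONS, 5.0, num_machines=1)
parallel_days = campaign_days(LOCATIONS, 5.0, num_machines=16)
print(f"{single_machine_days:.1f} days vs {parallel_days:.1f} days")
```

Under these assumed numbers, a campaign that takes roughly two months on one machine drops to under four days on sixteen, because the runs are independent of each other.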

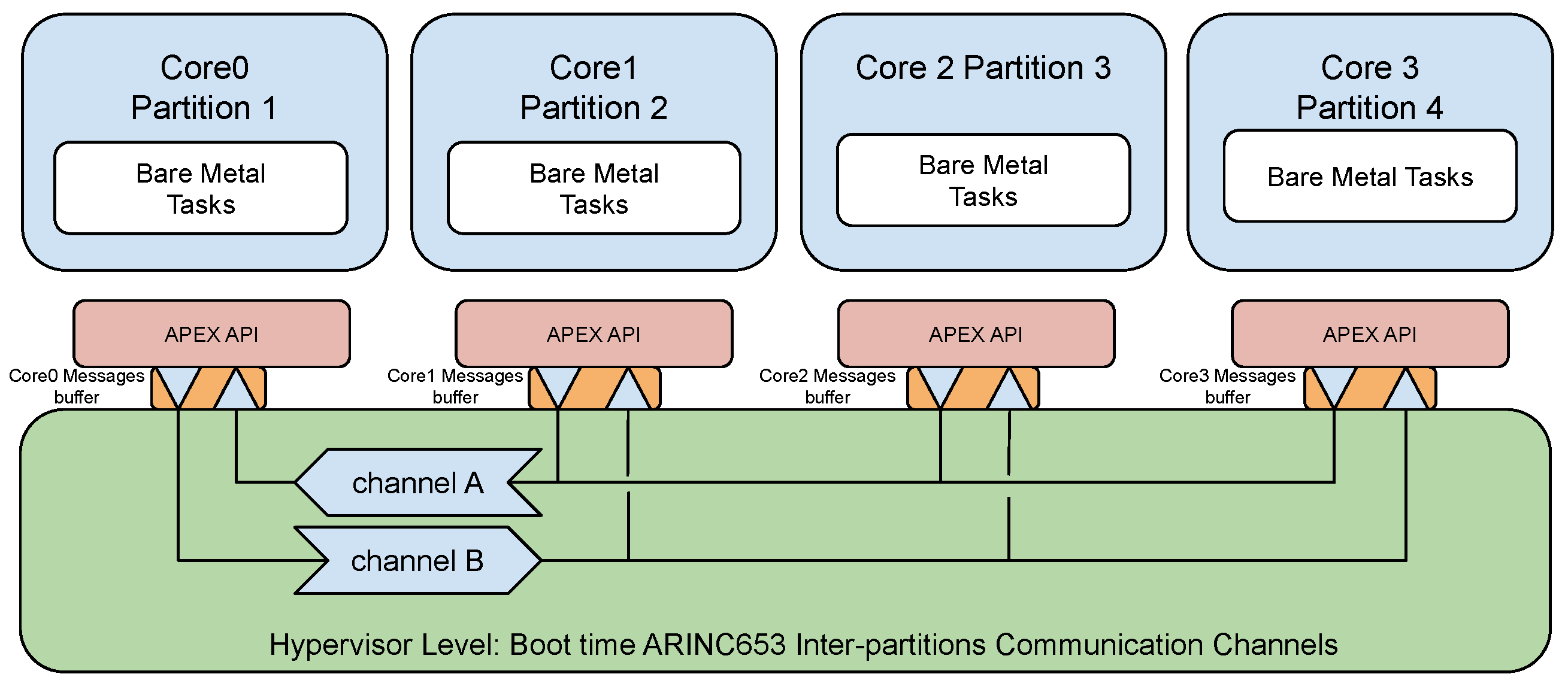

3.1. System under Test

3.2. Channel Buffer Provisioning Approach

3.3. Fault Injection Campaign

3.4. Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Chan, W.J.; Kahng, A.B.; Nath, S.; Yamamoto, I. The ITRS MPU and SOC system drivers: Calibration and implications for design-based equivalent scaling in the roadmap. In Proceedings of the 2014 IEEE 32nd International Conference on Computer Design (ICCD), Seoul, Korea, 19–22 October 2014; pp. 153–160.

2. Zuepke, A.; Bommert, M.; Lohmann, D. AUTOBEST: A united AUTOSAR-OS and ARINC 653 kernel. In Proceedings of the 21st IEEE Real-Time and Embedded Technology and Applications Symposium, Seattle, WA, USA, 13–16 April 2015; pp. 133–144.

3. Rocha, F.; Ost, L.; Reis, R. Soft Error Reliability Using Virtual Platforms; Springer: Berlin/Heidelberg, Germany, 2020.

4. Akram, A.; Sawalha, L. A Survey of Computer Architecture Simulation Techniques and Tools. IEEE Access 2019, 7, 78120–78145.

5. Muñoz-Quijada, M.; Sanz, L.; Guzman-Miranda, H. A Virtual Device for Simulation-Based Fault Injection. Electronics 2020, 9, 1989.

6. Engblom, J. Virtual to the (near) end: Using virtual platforms for continuous integration. In Proceedings of the 2015 52nd ACM/EDAC/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 8–12 June 2015; Volume 2015.

7. Parra, P.; da Silva, A.; Óscar, R.P.; Sánchez, S. Agile Deployment and Code Coverage Testing Metrics of the Boot Software on-board Solar Orbiter’s Energetic Particle Detector. Acta Astronaut. 2018, 143, 203–211.

8. Rodríguez-Pacheco, J.; Wimmer-Schweingruber, R.F.; Mason, G.M.; Ho, G.C.; Sánchez-Prieto, S.; Prieto, M.; Martín, C.; Seifert, H.; Andrews, G.B.; Kulkarni, S.R.; et al. The Energetic Particle Detector: Energetic particle instrument suite for the Solar Orbiter mission. A&A 2020, 642, A7.

9. da Silva, A.; Sánchez, S. LEON3 ViP: A Virtual Platform with Fault Injection Capabilities. In Proceedings of the 2010 13th Euromicro Conference on Digital System Design: Architectures, Methods and Tools, Lille, France, 1–3 September 2010; pp. 813–816.

10. George, A.D.; Wilson, C.M. Onboard Processing With Hybrid and Reconfigurable Computing on Small Satellites. Proc. IEEE 2018, 106, 458–470.

11. COBHAM. GR740 Quad Core LEON4 SPARC V8 Processor 2020 Data Sheet and User’s Manual. 2020. Available online: https://www.gaisler.com/doc/gr740/GR740-UM-DS-2-4.pdf (accessed on 3 May 2021).

12. ESA. GR740: The ESA Next Generation Microprocessor (NGMP). 2020. Available online: http://microelectronics.esa.int/gr740/index.html (accessed on 3 May 2021).

13. Krutwig, A.; Huber, S. RTEMS SMP Final Report; Technical Report, Embedded Brains GmbH and ESA; ESA: Paris, France, 2017.

14. ESA. Leading Up to LEON: ESA’s First Microprocessors. 2013. Available online: http://www.esa.int/Enabling_Support/Space_Engineering_Technology/Leading_up_to_LEON_ESA_s_first_microprocessors (accessed on 3 May 2021).

15. Gaisler, J. Leon-1 processor-first evaluation results. In Proceedings of the European Space Components Conference: ESCCON 2000, Noordwijk, The Netherlands, 21–23 March 2000; Volume 439.

16. ESA. LEON: The Space Chip that Europe Built. Available online: https://www.esa.int/Enabling_Support/Space_Engineering_Technology/LEON_the_space_chip_that_Europe_built (accessed on 3 May 2021).

17. ESA. Chang’e-4 Lander. 2019. Available online: http://www.esa.int/ESA_Multimedia/Images/2019/07/Chang_e-4_lander (accessed on 3 May 2021).

18. ESA. LEON’s First Flights. 2013. Available online: https://www.esa.int/Enabling_Support/Space_Engineering_Technology/Onboard_Computers_and_Data_Handling/Microprocessors (accessed on 3 May 2021).

19. LLVM Foundation. The LLVM Compiler Infrastructure Project. 2021. Available online: https://llvm.org/ (accessed on 3 May 2021).

20. Reshadi, M.; Mishra, P. Hybrid-compiled simulation: An efficient technique for instruction-set architecture simulation. ACM Trans. Embed. Comput. Syst. 2009, 8.

21. Bellard, F. QEMU, a Fast and Portable Dynamic Translator. In Proceedings of the Annual Conference on USENIX Annual Technical Conference, ATEC ’05, Anaheim, CA, USA, 10–15 April 2005; USENIX Association: Anaheim, CA, USA, 2005; p. 41.

22. Lowe-Power, J.; Ahmad, A.M.; Akram, A.; Alian, M.; Amslinger, R.; Andreozzi, M.; Armejach, A.; Asmussen, N.; Beckmann, B.; Bharadwaj, S.; et al. The gem5 Simulator: Version 20.0+(2020). arXiv 2020, arXiv:2007.03152.

23. Carvalho, H.; Nelissen, G.; Zaykov, P. mcQEMU: Time-Accurate Simulation of Multi-core platforms using QEMU. In Proceedings of the 2020 23rd Euromicro Conference on Digital System Design (DSD), Kranj, Slovenia, 26–28 August 2020; pp. 81–88.

24. Guo, X.; Mullins, R. Accelerate Cycle-Level Full-System Simulation of Multi-Core RISC-V Systems with Binary Translation. arXiv 2020, arXiv:2005.11357v1.

25. Joloboff, V.; Zhou, X.; Helmstetter, C.; Gao, X. Fast Instruction Set Simulation Using LLVM-based Dynamic Translation. In Proceedings of the International MultiConference of Engineers and Computer Scientists 2011, Hong Kong, China, 16–18 March 2011; Lecture Notes in Engineering and Computer Science; IAENG. Springer: Hong Kong, China, 2011; Volume 2188, pp. 212–216.

26. Böhm, I.; Franke, B.; Topham, N. Cycle-accurate performance modelling in an ultra-fast just-in-time dynamic binary translation instruction set simulator. In Proceedings of the 2010 International Conference on Embedded Computer Systems: Architectures, Modeling and Simulation, Samos, Greece, 19–22 July 2010; pp. 1–10.

27. Brandner, F.; Fellnhofer, A.; Krall, A.; Riegler, D. Fast and Accurate Simulation using the LLVM Compiler Framework. In Proceedings of the RAPIDO’09: 1st Workshop on Rapid Simulation and Performance Evaluation: Methods and Tools, Paphos, Cyprus, 25 January 2009.

28. Alexander, B.; Donnellan, S.; Jeffries, A.; Olds, T.; Sizer, N. Boosting Instruction Set Simulator Performance with Parallel Block Optimisation and Replacement. In Proceedings of the Thirty-Fifth Australasian Computer Science Conference (ACSC 2012), Melbourne, Australia, 30 January–2 February 2012.

29. Wagstaff, H.; Gould, M.; Franke, B.; Topham, N. Early partial evaluation in a JIT-compiled, retargetable instruction set simulator generated from a high-level architecture description. In Proceedings of the 2013 50th ACM/EDAC/IEEE Design Automation Conference (DAC), Austin, TX, USA, 29 May–7 June 2013; pp. 1–6.

30. da Silva, A.; Sánchez, S.; Óscar, R.P.; Parra, P. Injecting Faults to Succeed. Verification of the Boot Software on-board Solar Orbiter’s Energetic Particle Detector. Acta Astronaut. 2014, 95, 198–209.

31. Reshadi, M.; Mishra, P.; Dutt, N. Instruction set compiled simulation: A technique for fast and flexible instruction set simulation. In Proceedings of the 2003 Design Automation Conference (IEEE Cat. No.03CH37451), Anaheim, CA, USA, 2–6 June 2003; pp. 758–763.

32. Óscar, R.P.; Sánchez, J.; da Silva, A.; Parra, P.; Hellín, A.M.; Carrasco, A.; Sánchez, S. Reliability-oriented design of on-board satellite boot software against single event effects. J. Syst. Archit. 2020, 101920.

33. Petersen, E. Single Event Effects in Aerospace; John Wiley & Sons: Hoboken, NJ, USA, 2011.

34. Taylor, D.J.; Morgan, D.E.; Black, J.P. Redundancy in Data Structures: Improving Software Fault Tolerance. IEEE Trans. Softw. Eng. 1980, SE-6, 585–594.

| Workload | Thread Approach | LLVM First Execution | LLVM with Cached Coroutines |
|---|---|---|---|
| smpschededf01 (single-core) | 0.57 MIPS | 2.745 MIPS | 5.052 MIPS |
| smpmigration01 (dual-core) | 0.791 MIPS | 4.261 MIPS | 4.862 MIPS |
| smpatomic01 (quad-core) | 0.804 MIPS | 6.263 MIPS | 6.691 MIPS |
| smpaffinity01 (quad-core) | 1.303 MIPS | 1.179 MIPS | 9.457 MIPS |
| ARINC653 use case (quad-core) | 0.576 MIPS | 0.062 MIPS | 4.31 MIPS |
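
The throughput figures in the table above are easier to compare as ratios against the thread approach. The short script below derives those ratios from the tabulated MIPS values only; the dictionary layout and function name are illustrative choices and no additional measurements are introduced.

```python
# MIPS values copied from the table: (thread, LLVM first run, LLVM cached).
MIPS = {
    "smpschededf01 (single-core)": (0.57, 2.745, 5.052),
    "smpmigration01 (dual-core)": (0.791, 4.261, 4.862),
    "smpatomic01 (quad-core)": (0.804, 6.263, 6.691),
    "smpaffinity01 (quad-core)": (1.303, 1.179, 9.457),
    "ARINC653 use case (quad-core)": (0.576, 0.062, 4.31),
}


def speedups(mips):
    """Return {workload: (first_run / thread, cached / thread)}."""
    return {
        name: (first / thread, cached / thread)
        for name, (thread, first, cached) in mips.items()
    }


ratios = speedups(MIPS)
for name, (first_ratio, cached_ratio) in ratios.items():
    # A first-run ratio below 1 means the initial LLVM execution was
    # slower than the thread approach for that workload.
    note = " (first run slower than threads)" if first_ratio < 1 else ""
    print(f"{name}: first x{first_ratio:.2f}, cached x{cached_ratio:.2f}{note}")
```

With these values, the cached-coroutine configuration is between roughly 6 and 9 times faster than the thread approach on every workload, while the first LLVM execution is slower than the thread approach for smpaffinity01 and for the ARINC653 use case.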

| k | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Faulty configurations | 12 | 66 | 220 | 495 | 792 | 924 | 792 | 495 | 220 | 66 | 12 | 1 | 4095 |
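
The counts in the table above coincide with the binomial coefficients C(12, k), and their total with 2^12 − 1. The check below reproduces them under the assumption that k is the number of simultaneously faulty elements chosen out of 12; the table itself does not state what those 12 elements are, so the constant name is a neutral placeholder.

```python
from math import comb

ELEMENTS = 12  # assumed number of elements that can be faulty (k = 1..12)

# Number of ways to choose exactly k faulty elements out of ELEMENTS.
configurations = {k: comb(ELEMENTS, k) for k in range(1, ELEMENTS + 1)}
total = sum(configurations.values())

print(list(configurations.values()))
# [12, 66, 220, 495, 792, 924, 792, 495, 220, 66, 12, 1]
print(total)  # 4095, i.e. 2**12 - 1 (every non-empty subset)
```

The total excludes only the fault-free case (k = 0), which is why it is one less than 2^12.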

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sánchez, J.; da Silva, A.; Parra, P.; R. Polo, Ó.; Martínez Hellín, A.; Sánchez, S. ARINC653 Channel Robustness Verification Using LeonViP-MC, a LEON4 Multicore Virtual Platform. Electronics 2021, 10, 1179. https://doi.org/10.3390/electronics10101179
