Multi-Input Deep Learning Based FMCW Radar Signal Classification

Abstract

1. Introduction

- We propose a radar-based classification system that uses data collected with a frequency-modulated continuous wave (FMCW) radar.

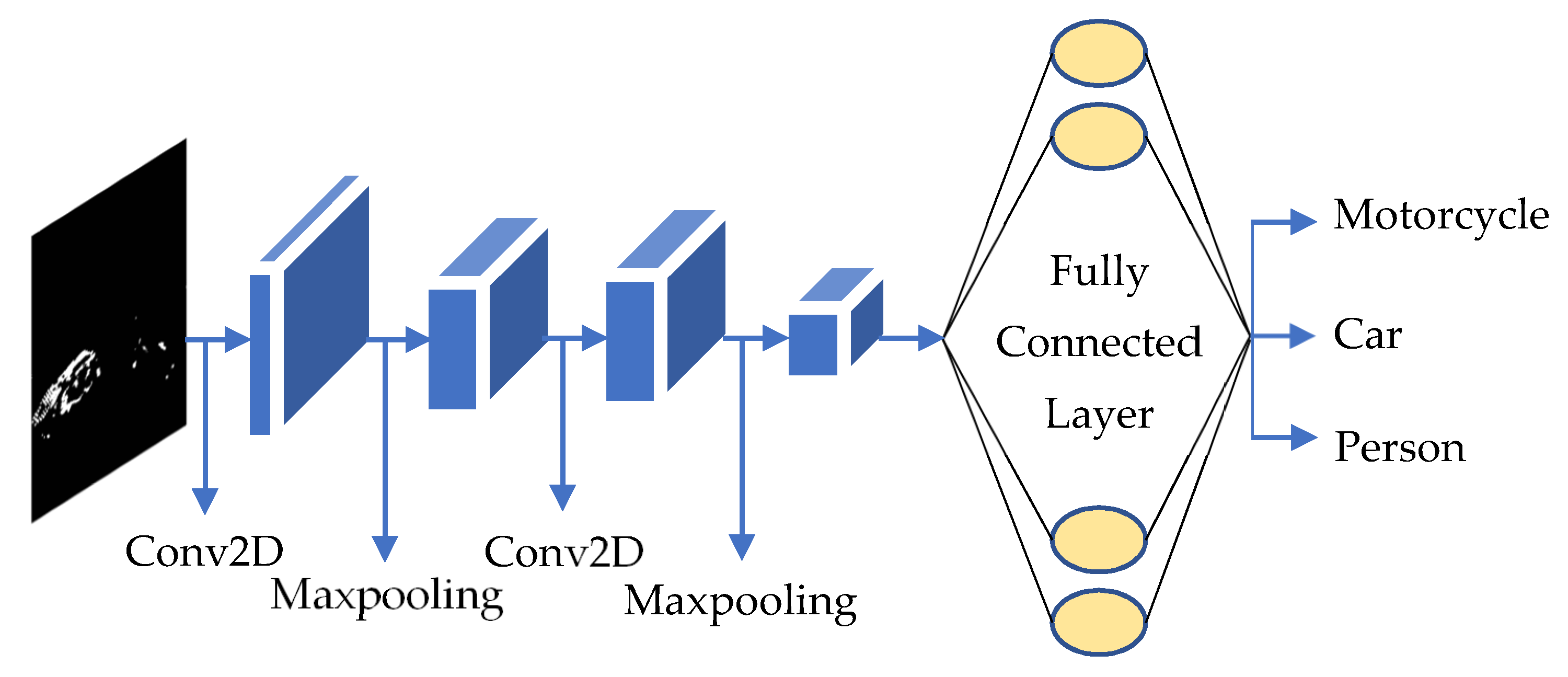

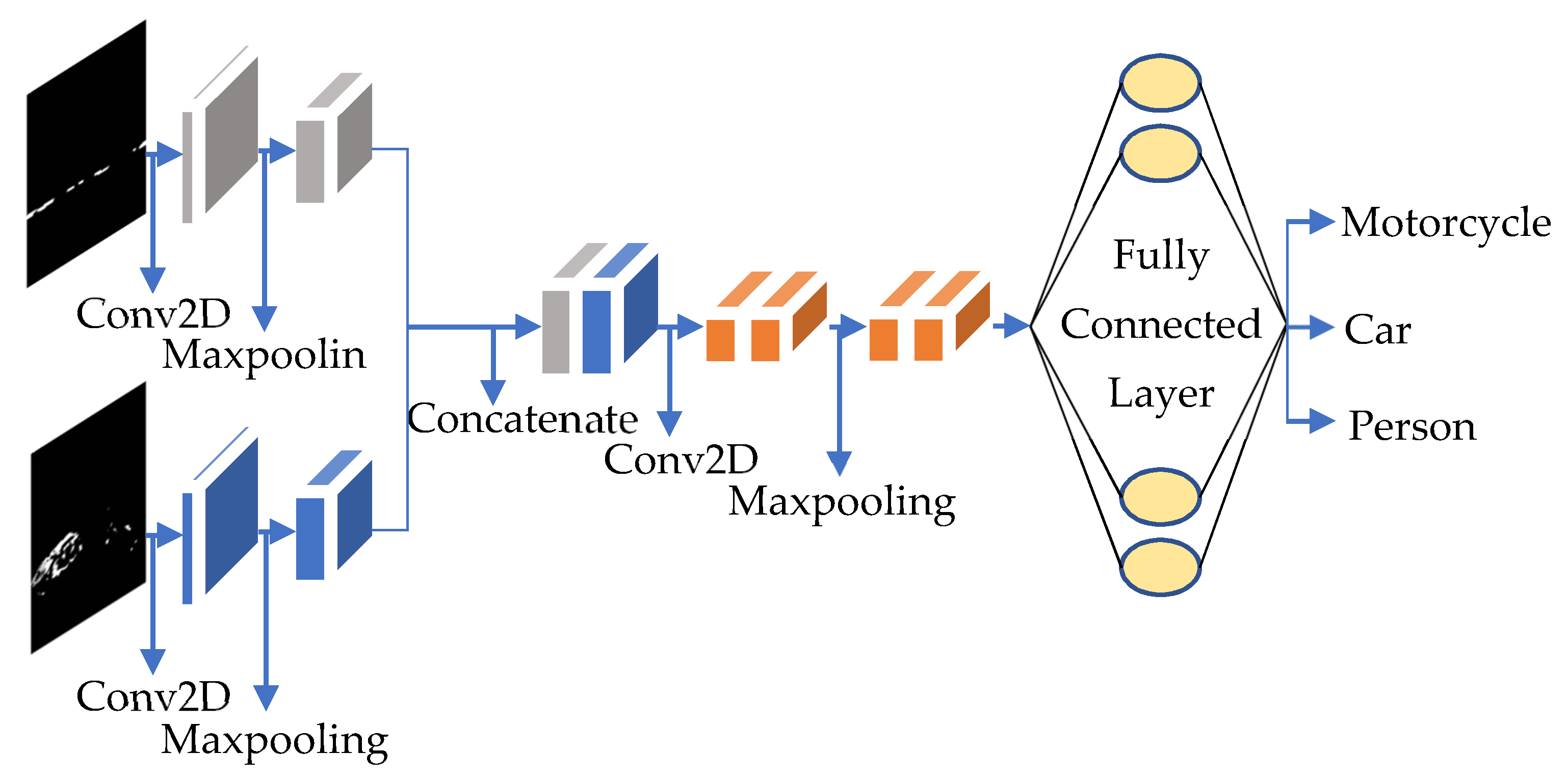

- The range–Doppler map changes greatly depending on the angle at which the object faces the radar. Therefore, we propose a convolutional neural network (CNN)-based multi-input deep learning model that takes both the range–Doppler map and the point cloud map as inputs to improve classification accuracy.
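
The multi-input idea can be illustrated with a minimal, dependency-free sketch: each input map (the range–Doppler, i.e. distance–Doppler, map and the point cloud map) passes through its own small convolutional branch, the branch features are concatenated, and a single fully connected layer produces class probabilities. The map size (16×16), number of kernels, number of classes, and random untrained weights are all assumptions chosen for illustration; this is not the authors' architecture or training code.

```python
import math
import random


def conv2d_relu(image, kernel):
    """Valid 2-D cross-correlation (as used in CNN layers) followed by ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = sum(image[r + i][c + j] * kernel[i][j]
                      for i in range(kh) for j in range(kw))
            row.append(max(0.0, acc))
        out.append(row)
    return out


def global_avg_pool(fmap):
    """Collapse one feature map to a single scalar feature."""
    return sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))


def branch_features(image, kernels):
    """One input branch: conv + ReLU + global average pooling per kernel."""
    return [global_avg_pool(conv2d_relu(image, k)) for k in kernels]


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def multi_input_forward(rd_map, pc_map, params):
    """Forward pass: two separate branches, feature concatenation, one dense layer."""
    rd_feat = branch_features(rd_map, params["rd_kernels"])
    pc_feat = branch_features(pc_map, params["pc_kernels"])
    fused = rd_feat + pc_feat  # list concatenation fuses the two branches
    logits = [sum(w * x for w, x in zip(ws, fused)) + b
              for ws, b in zip(params["fc_w"], params["fc_b"])]
    return softmax(logits)


def random_params(rng, n_kernels=4, ksize=3, n_classes=3):
    """Hypothetical untrained weights; all sizes are illustrative assumptions."""
    def kernel():
        return [[rng.uniform(-1, 1) for _ in range(ksize)] for _ in range(ksize)]
    return {
        "rd_kernels": [kernel() for _ in range(n_kernels)],
        "pc_kernels": [kernel() for _ in range(n_kernels)],
        "fc_w": [[rng.uniform(-1, 1) for _ in range(2 * n_kernels)]
                 for _ in range(n_classes)],
        "fc_b": [0.0] * n_classes,
    }


rng = random.Random(0)
# Placeholder 16x16 maps standing in for real radar data
rd_map = [[rng.random() for _ in range(16)] for _ in range(16)]
pc_map = [[rng.random() for _ in range(16)] for _ in range(16)]
params = random_params(rng)
probs = multi_input_forward(rd_map, pc_map, params)
print(probs)  # one probability per (assumed) class
```

Keeping the two branches separate until the concatenation step lets each branch learn filters suited to its own representation. In practice such a model would be built and trained with a deep learning framework rather than plain Python lists.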

2. Related Work

3. Proposed Multi-Input Based CNN Classifier

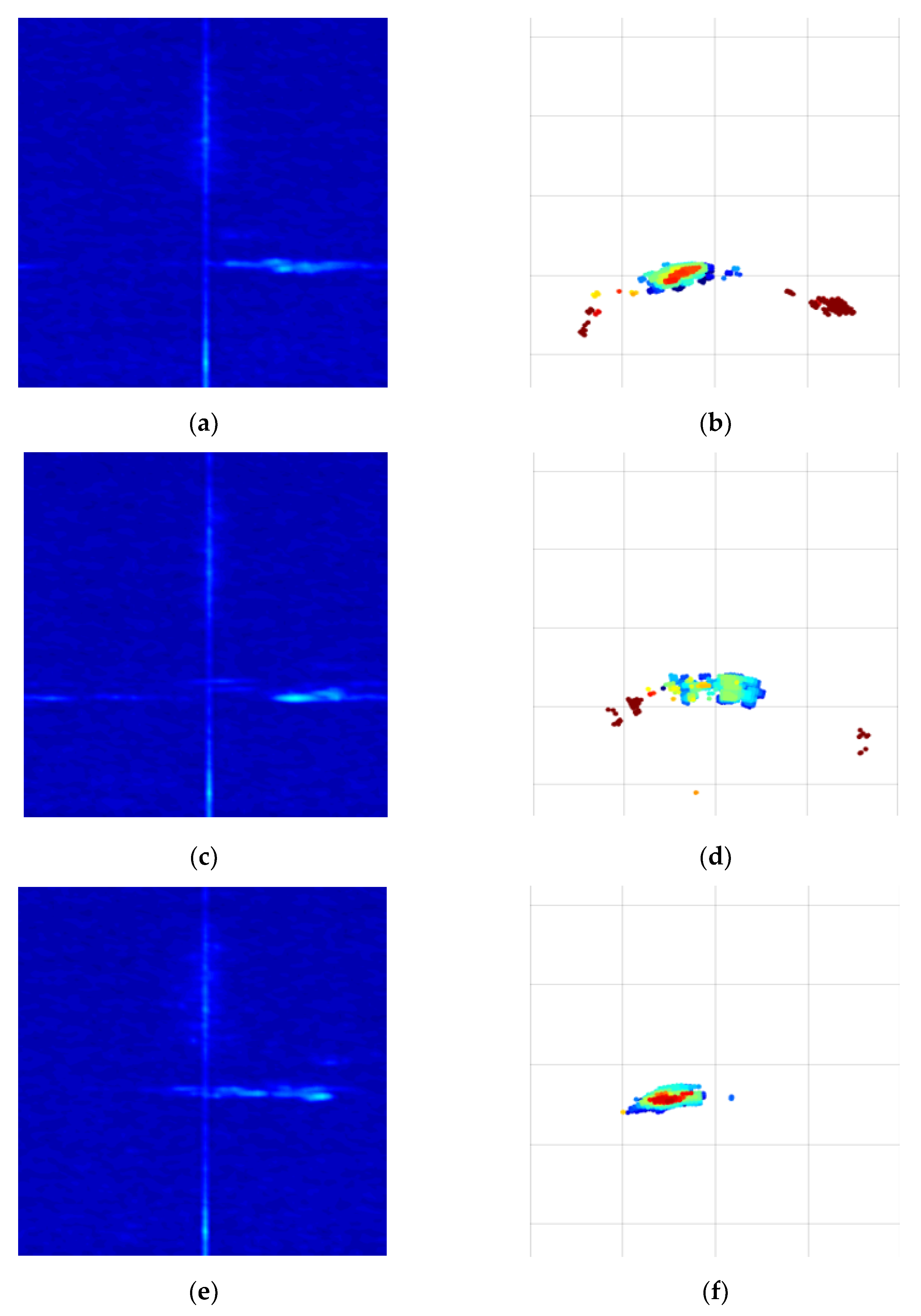

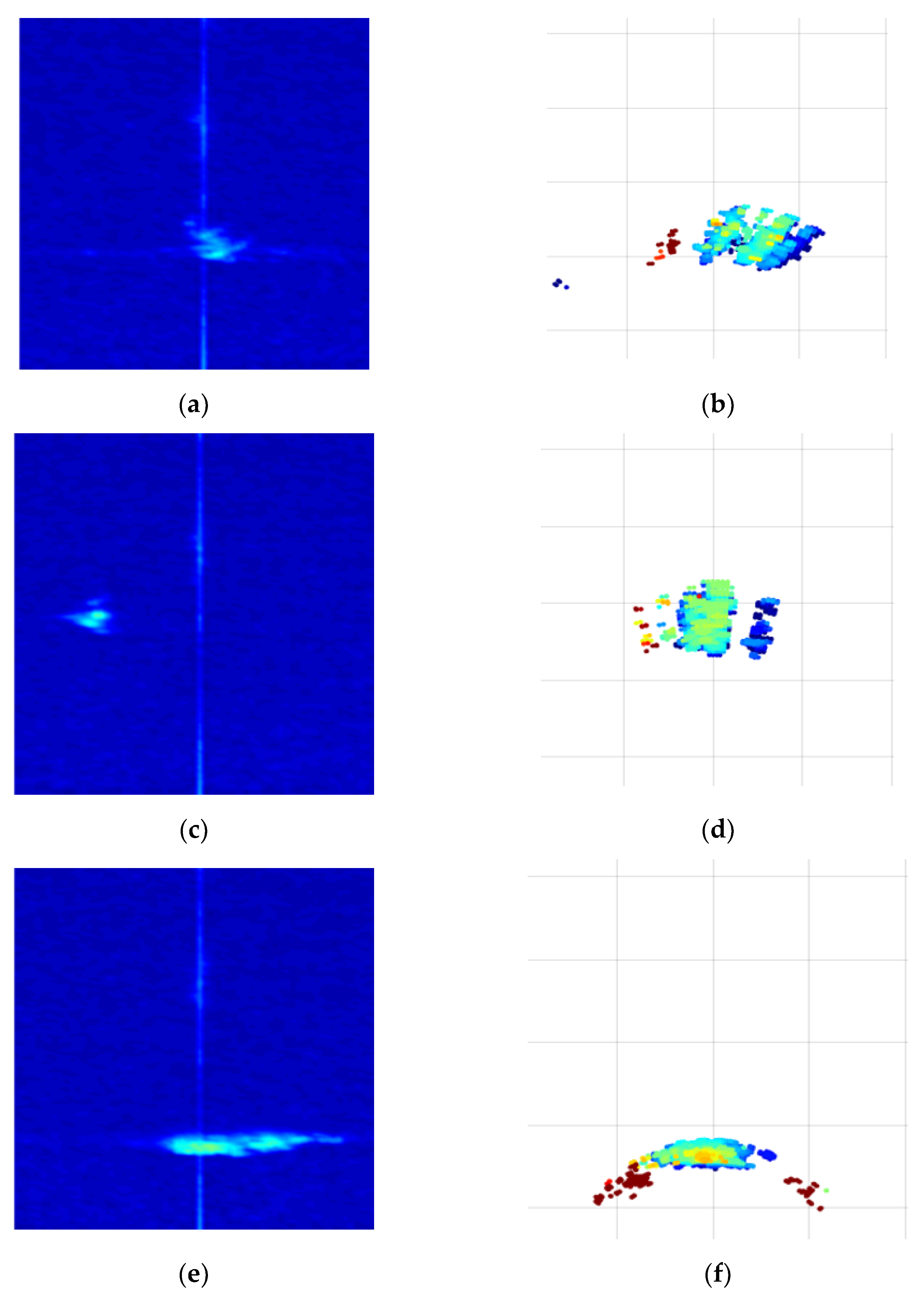

4. Experiment Setup and Data Analysis

5. Experimental Result

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Brännström, M.; Coelingh, E.; Sjöberg, J. Model-based threat assessment for avoiding arbitrary vehicle collisions. IEEE Trans. Intell. Transp. Syst. 2010, 11, 658–669.

- Vahidi, A.; Eskandarian, A. Research advances in intelligent collision avoidance and adaptive cruise control. IEEE Trans. Intell. Transp. Syst. 2003, 4, 143–153.

- Han, S.J.; Choi, J. Parking space recognition for autonomous valet parking using height and salient-line probability maps. ETRI J. 2015, 37, 1220–1230.

- Leonard, J.; How, J.; Teller, S.; Berger, M.; Campbell, S.; Fiore, G.; Fletcher, L.; Frazzoli, E.; Huang, A.; Karaman, S.; et al. A perception-driven autonomous urban vehicle. J. Field Robot. 2008, 25, 727–774.

- Yeong, D.J.; Velasco-Hernandez, G.; Barry, J.; Walsh, J. Sensor and sensor fusion technology in autonomous vehicles: A review. Sensors 2021, 21, 2140.

- Matsunami, I.; Nakamura, R.; Kajiwara, A. RCS measurements for vehicles and pedestrian at 26 and 79 GHz. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 2016, E99A, 204–206.

- Prophet, R.; Hoffmann, M.; Vossiek, M.; Strum, C.; Ossowska, A.; Malik, W.; Lubbert, U. Pedestrian classification with a 79 GHz automotive radar sensor. In Proceedings of the 19th International Radar Symposium (IRS), Bonn, Germany, 20–22 June 2018; pp. 1–6.

- Patel, K.; Rambach, K.; Visentin, T.; Rusev, D.; Pfeiffer, M.; Yang, B. Deep learning-based object classification on automotive radar spectra. In Proceedings of the IEEE Radar Conference, Boston, MA, USA, 22–26 April 2019; pp. 1–6.

- Du, L.; Li, L.; Wang, B.; Xiao, J. Micro-Doppler feature extraction based on time-frequency spectrogram for ground moving targets classification with low-resolution radar. IEEE Sens. J. 2016, 16, 3756–3763.

- Lees, W.M.; Wunderlich, A.; Jeavons, P.J.; Hale, P.D.; Souryal, M.R. Deep learning classification of 3.5-GHz band spectrograms with applications to spectrum sensing. IEEE Trans. Cogn. Commun. Netw. 2019, 5, 224–236.

- Lee, H.R.; Park, J.; Suh, Y.-J. Improving classification accuracy of hand gesture recognition based on 60 GHz FMCW radar with deep learning domain adaptation. Electronics 2020, 9, 2140.

- Wu, Q.; Gao, T.; Lai, Z.; Li, D. Hybrid SVM-CNN classification technique for human–vehicle targets in an automotive LFMCW radar. Sensors 2020, 20, 3504.

- Peter, T. Introduction to Radar Target Recognition; The Institution of Engineering and Technology: London, UK, 2005.

- Lim, S.; Yoon, Y.-J.; Lee, J.-E.; Kim, S.-C. Phase-based target classification using neural network in automotive radar systems. In Proceedings of the IEEE Radar Conference (RadarConf), Boston, MA, USA, 22–26 April 2019; pp. 1–6.

- Villeval, S.; Bilik, I.; Gurbuz, S.Z. Application of a 24 GHz FMCW automotive radar for urban target classification. In Proceedings of the IEEE Radar Conference, Cincinnati, OH, USA, 19–23 May 2014.

- Rytel-Andrianik, R.; Samczynski, P.; Gromek, D.; Weilgo, J.; Drozdowicz, J.; Malanowski, M. Micro-range, micro-Doppler joint analysis of pedestrian radar echo. In Proceedings of the IEEE Signal Processing Symposium (SPSympo), Debe, Poland, 10–12 June 2015; pp. 1–4.

- Kim, S.; Lee, S.; Doo, S.; Shim, B. Moving target classification in automotive radar systems using convolutional recurrent neural networks. In Proceedings of the 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 3–7 September 2018; pp. 1482–1486.

- Vaishnav, P.; Santra, A. Continuous human activity classification with unscented Kalman filter tracking using FMCW radar. IEEE Sens. Lett. 2020, 4, 1–4.

- Anishchenko, L.; Zhuravlev, A.; Chizh, M. Fall detection using multiple bioradars and convolutional neural networks. Sensors 2019, 19, 5569.

- Skaria, S.; Al-Hourani, A.; Lech, M.; Evans, R.J. Hand-gesture recognition using two-antenna Doppler radar with deep convolutional neural networks. IEEE Sens. J. 2019, 19, 3041–3048.

- Kim, Y.; Moon, T. Human detection and activity classification based on micro-Doppler signatures using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2015, 13, 8–12.

- Angelov, A.; Robertson, A.; Murray-Smith, R.; Fioranelli, F. Practical classification of different moving targets using automotive radar and deep neural networks. IET Radar Sonar Navig. 2018, 12, 1082–1089.

- Lee, S.; Seo, I.; Seok, I.; Seog Han, D. Active sonar target classification with power-normalized cepstral coefficients and convolutional neural network. Appl. Sci. 2020, 10, 8450.

- Daher, A.W.; Rizik, A.; Randazzo, A.; Tavanti, E.; Chible, H.; Muselli, M.; Caviglia, D.D. Pedestrian and multi-class vehicle classification in radar systems using Rulex software on the Raspberry Pi. Appl. Sci. 2020, 10, 9113.

- Muselli, M. Extracting knowledge from biomedical data through Logic Learning Machines and Rulex. EMBnet. J. 2012, 18, 56–58.

- Kim, W.; Cho, H.; Kim, J.; Kim, B.; Lee, S. YOLO-based simultaneous target detection and classification in automotive FMCW radar systems. Sensors 2020, 20, 2897.

- LeCun, Y.; Boser, B.; Denker, J.; Henderson, D.; Howard, R.; Hubbard, W.; Jackel, L. Handwritten digit recognition with a backpropagation network. In Advances in Neural Information Processing Systems; Touretzky, D., Ed.; Morgan-Kaufmann: San Francisco, CA, USA, 1990; Volume 2, pp. 396–404.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Zhu, J.; Chen, H.; Ye, W. A hybrid CNN–LSTM network for the classification of human activities based on micro-Doppler radar. IEEE Access 2020, 8, 24713–24720.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In ICML’10: Proceedings of the 27th International Conference on Machine Learning; Omnipress: Madison, WI, USA, 2010; pp. 807–814.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. arXiv 2016, arXiv:1603.04467.

| Parameter | Value |
|---|---|
| Center frequency | 79 GHz |
| Bandwidth | 2 GHz |
| Resolution | Vertical and horizontal |
| Field of view | Vertical and horizontal |
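
As a quick worked example based on the table values, the snippet below computes two quantities that follow directly from the listed center frequency and bandwidth: the carrier wavelength, λ = c / f, and the theoretical range resolution of an FMCW radar, ΔR = c / (2B). The range resolution figure assumes each chirp sweeps the full 2 GHz bandwidth, which the source does not state; it is an idealized bound, not a reported specification of the radar used.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution(bandwidth_hz):
    """Theoretical FMCW range resolution, c / (2B), in meters."""
    return C / (2.0 * bandwidth_hz)


def wavelength(center_frequency_hz):
    """Carrier wavelength, c / f, in meters."""
    return C / center_frequency_hz


bandwidth_hz = 2e9   # 2 GHz, from the parameter table
center_hz = 79e9     # 79 GHz, from the parameter table

print(f"range resolution: {range_resolution(bandwidth_hz) * 100:.2f} cm")  # 7.49 cm
print(f"wavelength: {wavelength(center_hz) * 1000:.2f} mm")                # 3.79 mm
```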

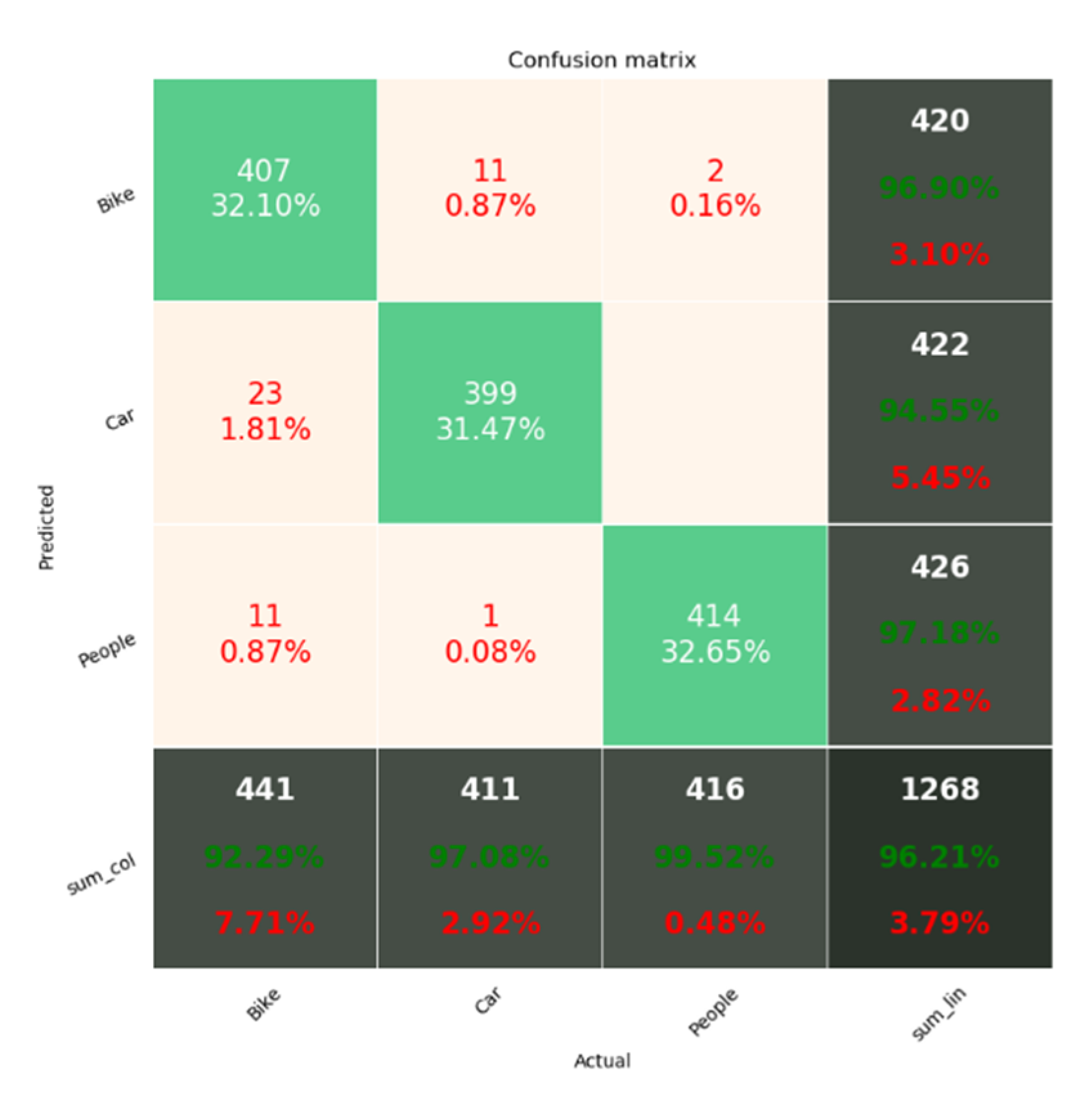

| Approach | Number of Input Layers | Classification Performance | Number of Parameters | Training Time |
|---|---|---|---|---|
| Range–Doppler map [16] | 1 | 82.26% | 16,207 | 47 s |
| Point cloud map | 1 | 91.32% | 16,207 | 48 s |
| Range–Doppler and point cloud maps | 1 | 92.82% | 16,243 | 54 s |
| Range–Doppler and point cloud maps (3 fully connected layers) | 2 | 95.98% | 62,699 | 63 s |
| Range–Doppler and point cloud maps (1 fully connected layer) | 2 | 96.21% | 16,207 | 56 s |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cha, D.; Jeong, S.; Yoo, M.; Oh, J.; Han, D. Multi-Input Deep Learning Based FMCW Radar Signal Classification. Electronics 2021, 10, 1144. https://doi.org/10.3390/electronics10101144
