Study of Process-Focused Assessment Using an Algorithm for Facial Expression Recognition Based on a Deep Neural Network Model

Abstract

1. Introduction

- A student's ability to understand the materials can be observed in real time.

- Based on the learned expression data, teachers can determine the difficulty level of the next problem (or study materials).

- Teachers can prepare teaching materials more precisely, so that the materials reflect the learning ability of each student.
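The second point can be illustrated with a minimal sketch. It assumes, hypothetically, that a classifier labels a student's expression as `easy`, `neutral`, or `hard` (the three cases considered in Section 3) and that problems are ranked on an integer difficulty scale. The function name `next_difficulty`, the scale bounds, and the step of one level are illustrative assumptions and are not taken from the paper.

```python
# Hypothetical rule (an assumption for illustration, not the authors' method):
# an "easy" expression raises the next problem's level, "hard" lowers it,
# and "neutral" keeps it unchanged.
STEP = {"easy": +1, "neutral": 0, "hard": -1}


def next_difficulty(current_level, predicted_label, min_level=1, max_level=5):
    """Return the next difficulty level, clamped to [min_level, max_level]."""
    if predicted_label not in STEP:
        raise ValueError(f"unknown label: {predicted_label!r}")
    proposed = current_level + STEP[predicted_label]
    return max(min_level, min(max_level, proposed))


if __name__ == "__main__":
    for label in ("easy", "neutral", "hard"):
        print(label, "->", next_difficulty(3, label))
```

A real system would likely smooth the decision over several observations rather than react to a single prediction; the one-step rule is kept here only to make the idea concrete.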

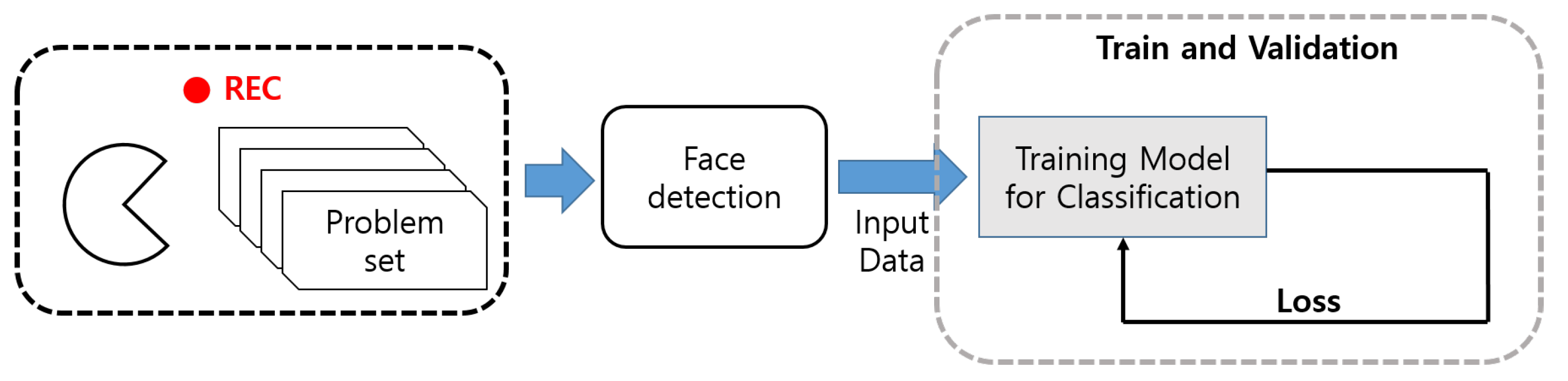

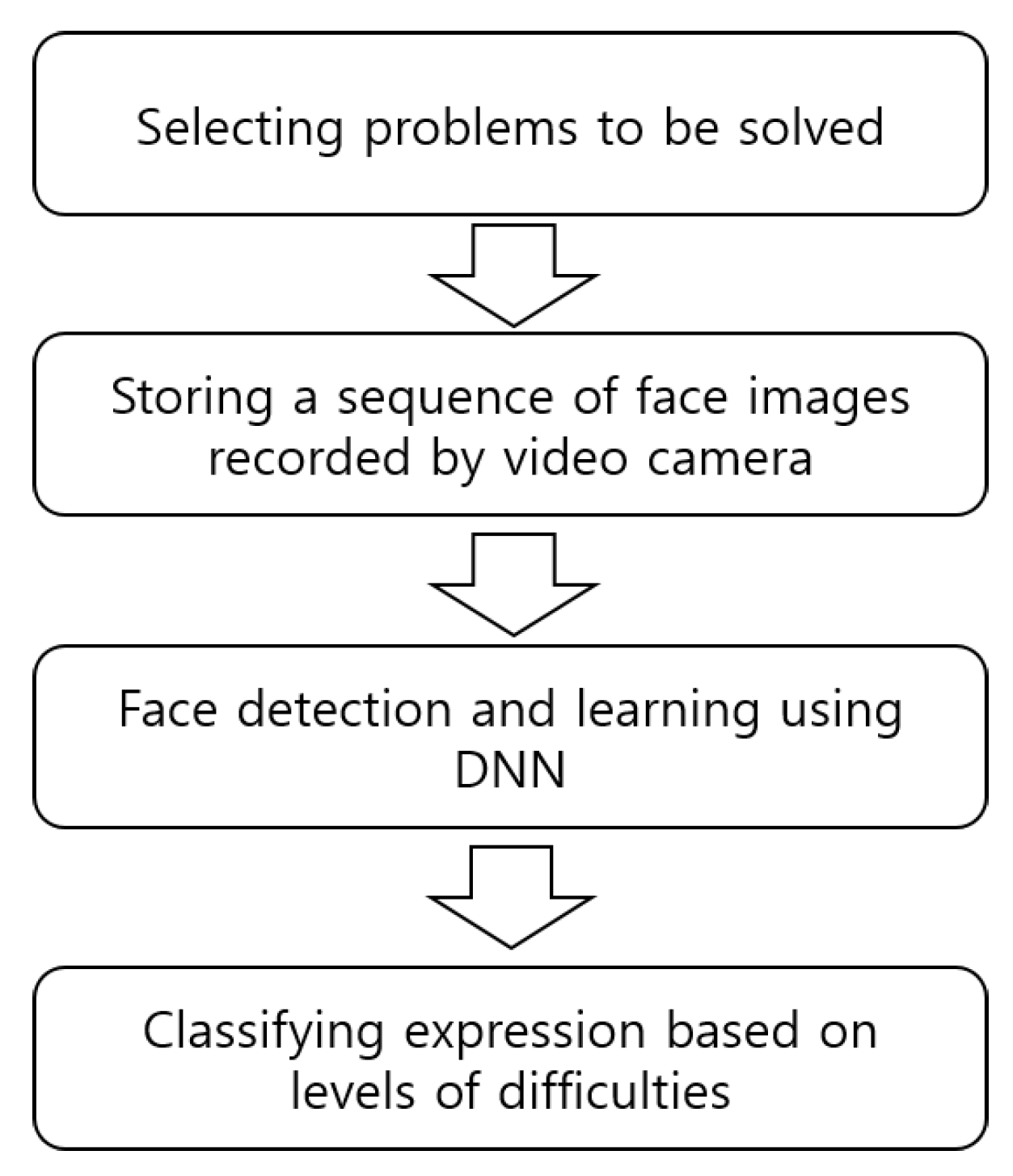

2. Overall Architecture

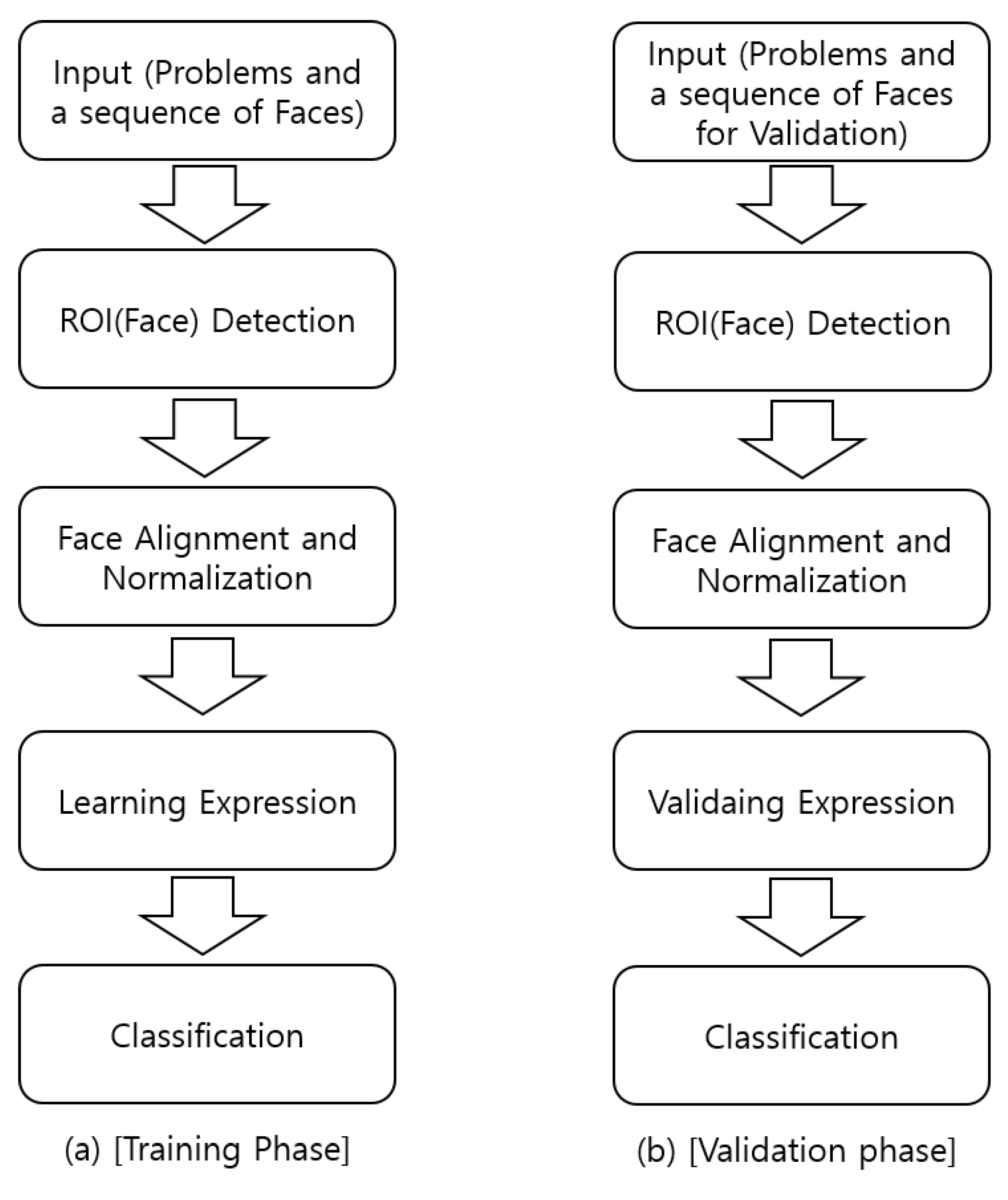

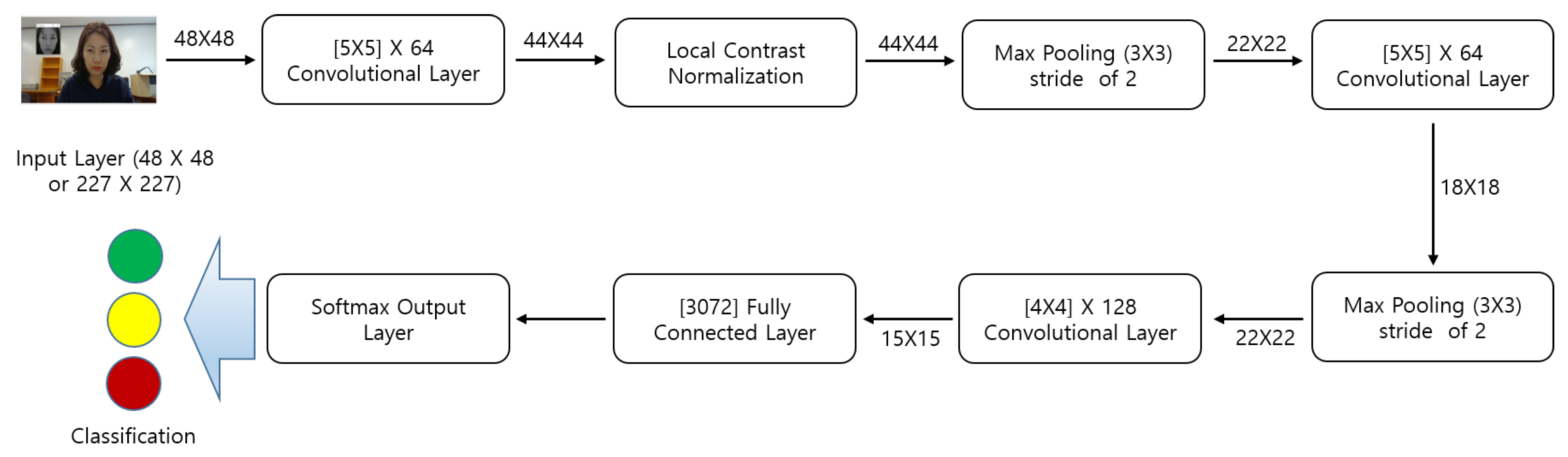

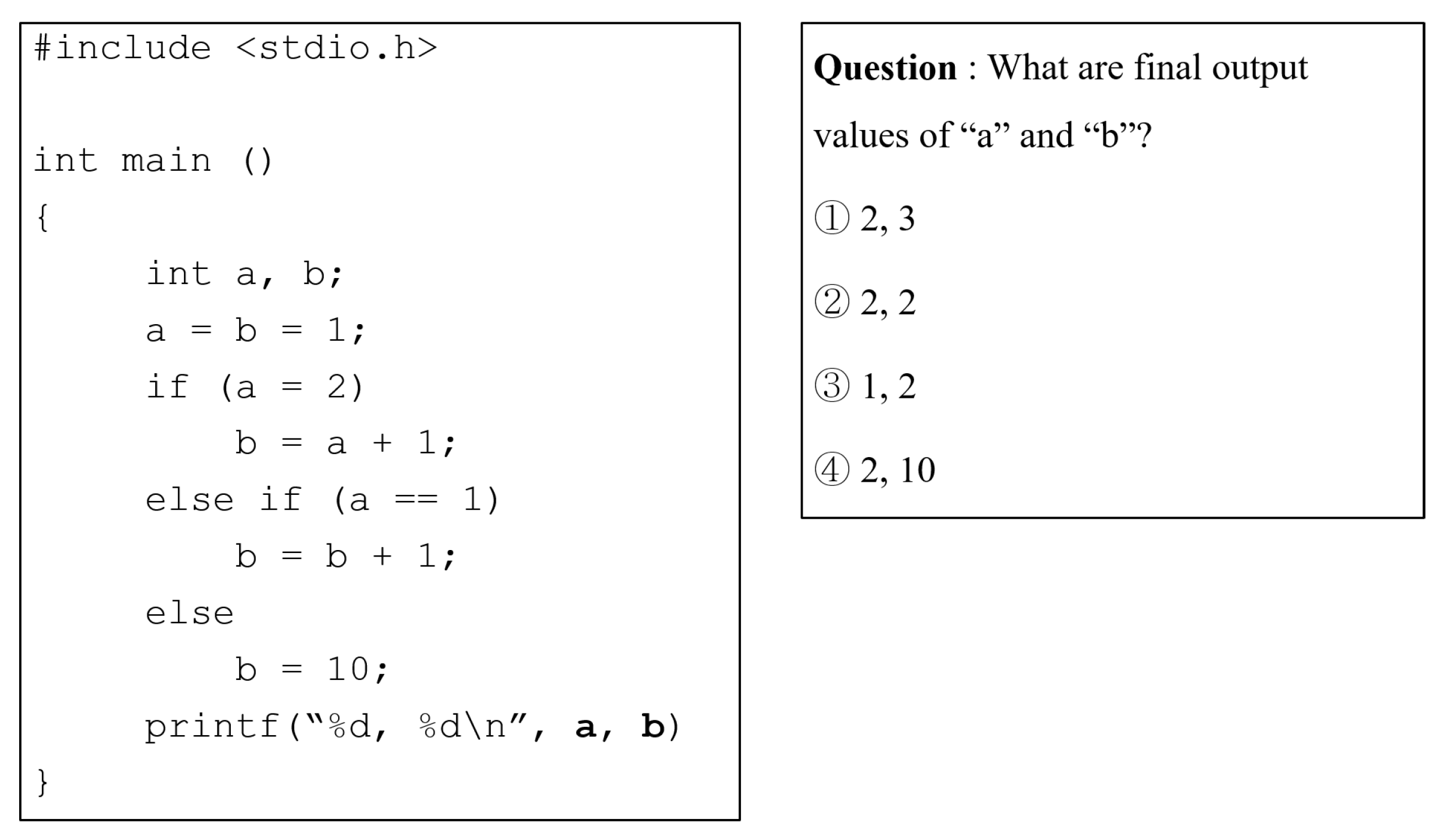

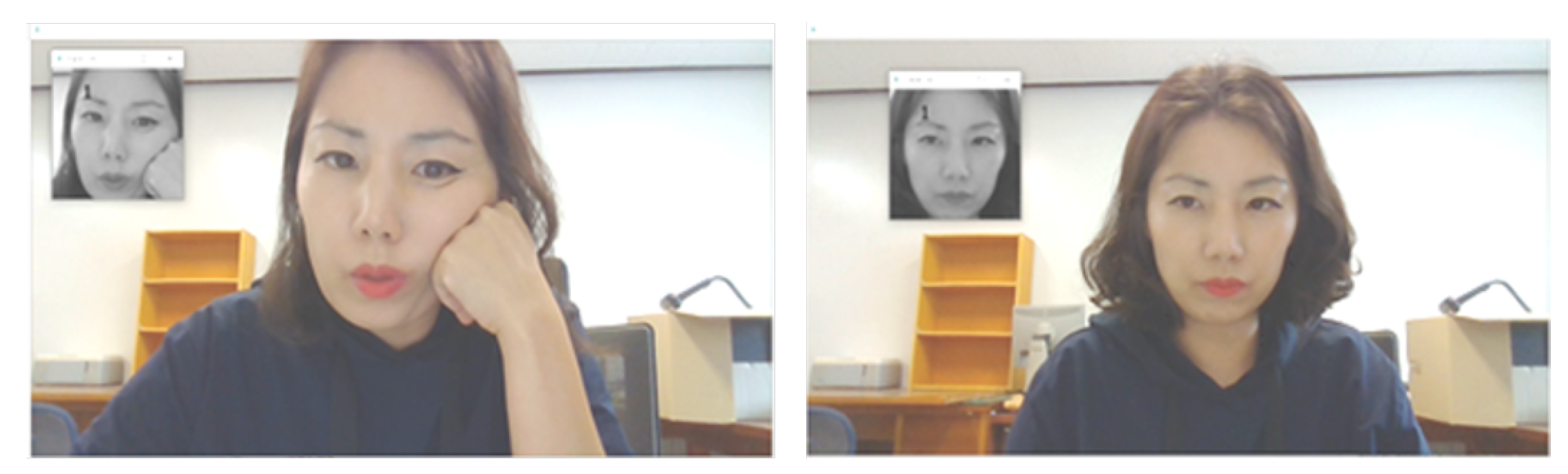

3. Detection and Classification of Facial Expression

- Case 1: A participant is confronting and solving an easy problem.

- Case 2: A participant is confronting and solving a neutral problem.

- Case 3: A participant is confronting and solving a difficult problem.
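As a rough sketch of how the three cases could be used downstream, the snippet below summarizes a sequence of per-frame labels recorded while a participant works on one problem. The per-frame labelling, the function name `summarize_frames`, and the majority-vote rule with its tie-breaking order are assumptions made for illustration; the source does not state how predictions are aggregated.

```python
from collections import Counter

# The three cases listed above, used here as class labels.
LABELS = ("easy", "neutral", "hard")


def summarize_frames(frame_labels):
    """Return (majority_label, shares) for a list of per-frame labels.

    Ties are broken by the order of LABELS, an arbitrary illustrative choice.
    """
    counts = Counter(frame_labels)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no frames to summarize")
    shares = {label: counts[label] / total for label in LABELS}
    majority = max(LABELS, key=lambda label: counts[label])
    return majority, shares


if __name__ == "__main__":
    label, shares = summarize_frames(["hard", "hard", "easy", "neutral"])
    print(label, shares)
```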

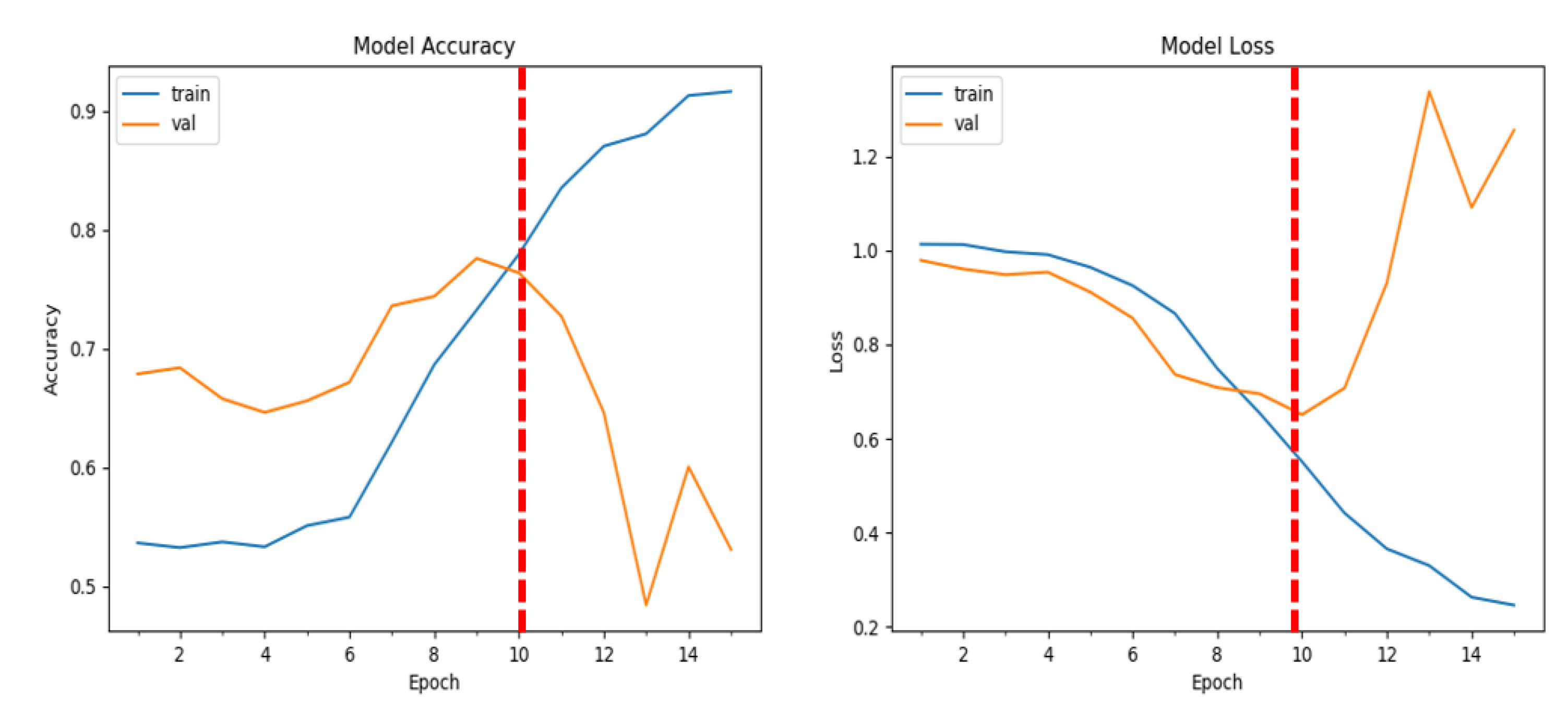

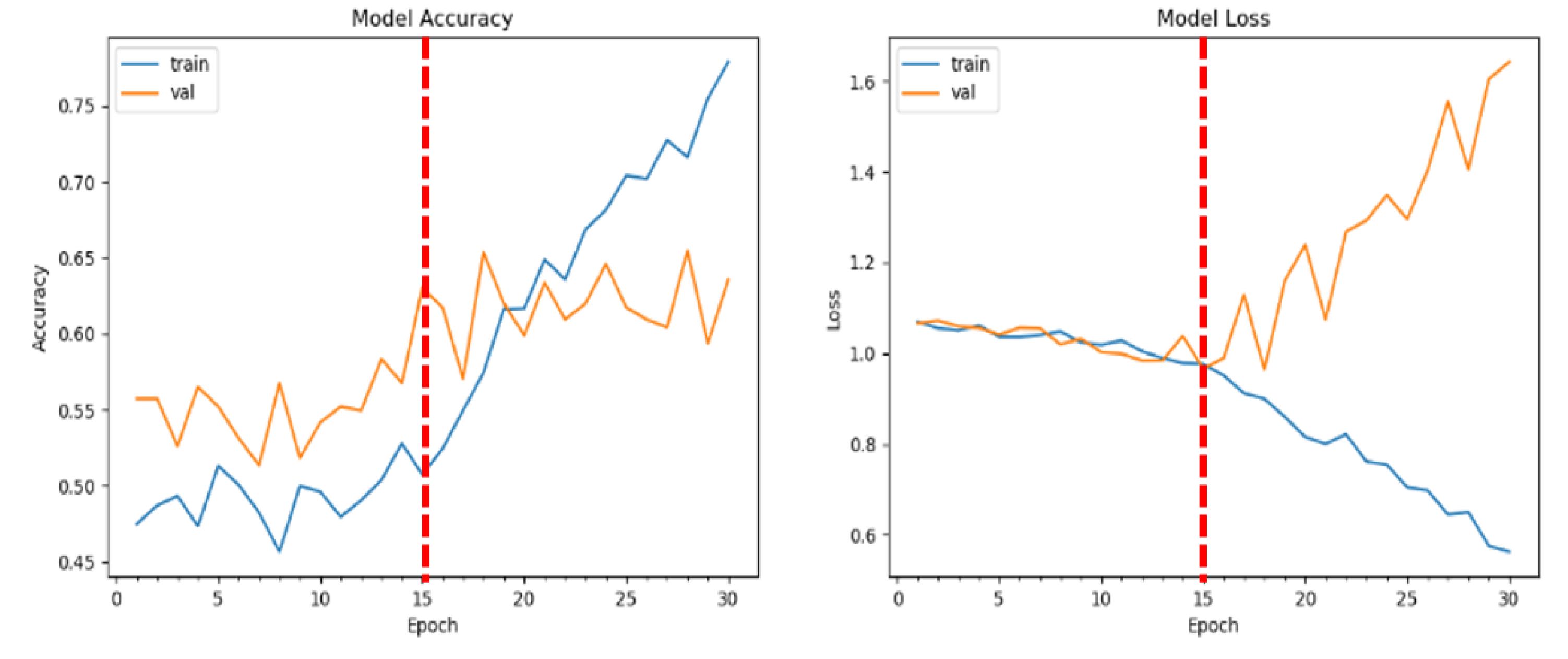

4. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Loncomilla, P.; Ruiz-del-Solar, J.; Martinez, L. Object recognition using local invariant features for robotic applications: A survey. Pattern Recognit. 2016, 60, 499–514.

- Serban, A.; Poll, E.; Visser, J. Adversarial examples on object recognition: A comprehensive survey. ACM Comput. Surv. 2020, 53, 1–38.

- Bucak, S.; Jin, R.; Jain, A. Multiple kernel learning for visual object recognition: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1354–1369.

- Jain, A.; Duin, R.P.W.; Mao, J. Statistical pattern recognition: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 4–37.

- Zhao, W.; Chellappa, R.; Phillips, P.J.; Rosenfeld, A. Face recognition: A literature survey. ACM Comput. Surv. 2003, 35, 1–61.

- Jafri, R.; Arabnia, H. A survey of face recognition techniques. J. Inf. Process. Syst. 2009, 5, 41–68.

- Adjabi, I.; Ouahabi, A.; Benzaoui, A.; Taleb-Ahmed, A. Past, present, and future of face recognition: A review. Electronics 2020, 9, 1188.

- Pantic, M.; Patras, I. Dynamics of facial expression: Recognition of facial actions and their temporal segments from face profile image sequences. IEEE Trans. Syst. Man Cybern. Part B 2006, 36, 433–449.

- Fasel, B.; Luettin, J. Automatic facial expression analysis: A survey. Pattern Recognit. 2003, 36, 259–275.

- Hadsell, R.; Chopra, S.; LeCun, Y. Dimensionality Reduction by Learning an Invariant Mapping. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; pp. 1735–1742.

- Delac, K.; Grgic, M.; Grgic, S. Independent comparative study of PCA, ICA, and LDA on the FERET data set. Int. J. Imaging Syst. Technol. 2006, 15, 252–260.

- Seow, M.-J.; Tompkins, R.C.; Asari, V.K. A New Nonlinear Dimensionality Reduction Technique for Pose and Lighting Invariant Face Recognition. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05) - Workshops, San Diego, CA, USA, 21–23 September 2005.

- Huang, W.; Yin, H. On nonlinear dimensionality reduction for face recognition. Image Vis. Comput. 2012, 30, 355–366.

- Wismüller, A.; Verleysen, M.; Aupetit, M.; Lee, J.A. Recent Advances in Nonlinear Dimensionality Reduction, Manifold and Topological Learning. In Proceedings of the ESANN 2010 Proceedings, European Symposium on Artificial Neural Networks - Computational Intelligence and Machine Learning, Bruges, Belgium, 28–30 April 2010; pp. 71–80.

- Cao, L.J.; Chua, K.S.; Chong, W.K.; Lee, H.P.; Gu, Q.M. A comparison of PCA, KPCA and ICA for dimensionality reduction in support vector machine. Neurocomputing 2003, 55, 321–336.

- Geng, X.; Zhan, D.-C.; Zhou, Z.-H. Supervised nonlinear dimensionality reduction for visualization and classification. IEEE Trans. Syst. Man Cybern. Part B 2005, 35, 1098–1107.

- Lee, D.; Krim, H. 3D face recognition in the Fourier domain using deformed circular curves. Multidimens. Syst. Signal Process. 2017, 28, 105–127.

- Drira, H.; Amor, B.; Srivastava, A.; Daoudi, M.; Slama, R. 3D face recognition under expressions, occlusions, and pose variations. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2270–2283.

- Kuanar, S.; Athitsos, V.; Pradhan, N.; Mishra, A.; Rao, K.R. Cognitive Analysis of Working Memory Load from EEG, by a Deep Recurrent Neural Network. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 2576–2580.

- Wu, D.; Zheng, S.-J.; Zhang, X.-P.; Yuan, C.-A.; Cheng, F.; Zhao, Y.; Lin, Y.-J.; Zhao, Z.-Q.; Jiang, Y.-L.; Huang, D.-S. Deep learning-based methods for person re-identification: A comprehensive review. Neurocomputing 2019, 337, 354–371.

- Kuanar, S.; Rao, K.R.; Bilas, M.; Bredow, J. Adaptive CU mode selection in HEVC intra prediction: A deep learning approach. Circuits Syst. Signal Process. 2019, 38, 5081–5102.

- Wu, Y.; Ji, Q. Facial landmark detection: A literature survey. Int. J. Comput. Vis. 2019, 127, 115–142.

- Ko, B.C. A brief review of facial emotion recognition based on visual information. Sensors 2018, 18, 401.

- Kumari, J.; Rajesh, R.; Pooja, K.M. Facial expression recognition: A survey. Procedia Comput. Sci. Second Int. Symp. Comput. Vis. Internet 2015, 58, 486–491.

- Lee, K.-H.; Kang, H.; Ko, E.-S.; Lee, D.-H.; Shin, B.; Lee, H.; Kim, S. Exploration of the direction for the practice of process-focused assessment. J. Educ. Res. Math. 2016, 26, 819–834.

- Krithika, L.B.; Lakshmi, P.G.G. Student emotion recognition system (SERS) for e-learning improvement based on learner concentration metric. Procedia Comput. Sci. 2016, 85, 767–776.

- Rao, K.; Chandra, M.; Rao, S. Assessment of students’ comprehension using multimodal emotion recognition in e-learning environments. J. Adv. Res. Dyn. Control Syst. 2018, 10, 767–773.

- Olivetti, E.C.; Violante, M.G.; Vezzetti, E.; Marcolin, F.; Eynard, B. Engagement evaluation in a virtual learning environment via facial expression recognition and self-reports: A preliminary approach. Appl. Sci. 2020, 10, 314.

- Tarnowski, P.; Kolodziej, M.; Majkowski, A.; Rak, R. Emotion recognition using facial expressions. Procedia Comput. Sci. 2017, 108, 1175–1184.

- Ma, S.; Bai, L. A Face Detection Algorithm Based on AdaBoost and New Haar-Like Feature. In Proceedings of the 7th IEEE International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 26–28 August 2016; pp. 651–654.

- Correa, E.; Jonker, A.; Ozo, M.; Stolk, R. Emotion Recognition using Deep Convolutional Neural Network; Tech. Report IN4015; TU Delft: Delft, The Netherlands, 2016; pp. 1–12.

- Kwak, J.-H.; Woen, I.-Y.; Lee, C.-H. Learning algorithm for multiple distribution data using Haar-like features and decision tree. KIPS Trans. Softw. Data Eng. 2013, 2, 43–48.

- Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.-H.; et al. Challenges in representation learning: A report on three machine learning contests. Neural Netw. 2015, 64, 59–63.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015.

| Category | Version |
|---|---|
| Operating system | Windows 10 |
| CPU | Intel(R) Core(TM) i5-8250U CPU @1.80 GHz 1.60 GHz |
| System type | 64-bit |
| Memory (RAM) | 8.0 GB |
| Simulation environment | Anaconda 4.7.5 |

| Classification | Training Set | Validation Set | Total |
|---|---|---|---|
| Easy | 7001 | 1780 | 8781 |
| Hard | 9922 | 2520 | 12,442 |
| Neutral | 4322 | 1565 | 5887 |
| Total | 21,245 | 5865 | 27,110 |
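The split in the table above can be checked with a few lines of arithmetic. The sketch below recomputes the per-class totals and the validation fraction of each class, and additionally derives inverse-frequency class weights from the training counts. The weighting formula (`n_samples / (n_classes * n_class_samples)`) is a common heuristic added here for illustration; the source does not say whether any class weighting was used.

```python
# Training and validation counts copied from the table above.
COUNTS = {
    "Easy": (7001, 1780),
    "Hard": (9922, 2520),
    "Neutral": (4322, 1565),
}


def split_summary(counts):
    """Per-class total, validation fraction, and an illustrative class weight."""
    total_train = sum(train for train, _ in counts.values())
    n_classes = len(counts)
    summary = {}
    for name, (train, val) in counts.items():
        total = train + val
        summary[name] = {
            "total": total,
            "val_fraction": val / total,
            # Inverse-frequency weight: an assumption, not from the paper.
            "class_weight": total_train / (n_classes * train),
        }
    return summary


if __name__ == "__main__":
    for name, row in split_summary(COUNTS).items():
        print(f"{name:8s} total={row['total']:6d} "
              f"val={row['val_fraction']:.3f} weight={row['class_weight']:.3f}")
```

Under these numbers, roughly 20% of the Easy and Hard images and roughly 27% of the Neutral images fall in the validation set, and Neutral is the smallest class in the training set.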

| Classification | Training Set | Validation Set | Total |
|---|---|---|---|
| Easy | 5220 | 1308 | 6537 |
| Hard | 7229 | 1810 | 9039 |
| Neutral | 2217 | 554 | 2711 |
| Total | 14,666 | 3672 | 18,338 |

| Experiments Setup | Size (Resolution) | Training Set | Validation Set | Total |
|---|---|---|---|---|
| Setup I | | 2250 | 460 | 2710 |
| Setup II | | 14,602 | 3488 | 18,090 |
| Setup III | | 5871 | 1250 | 7121 |

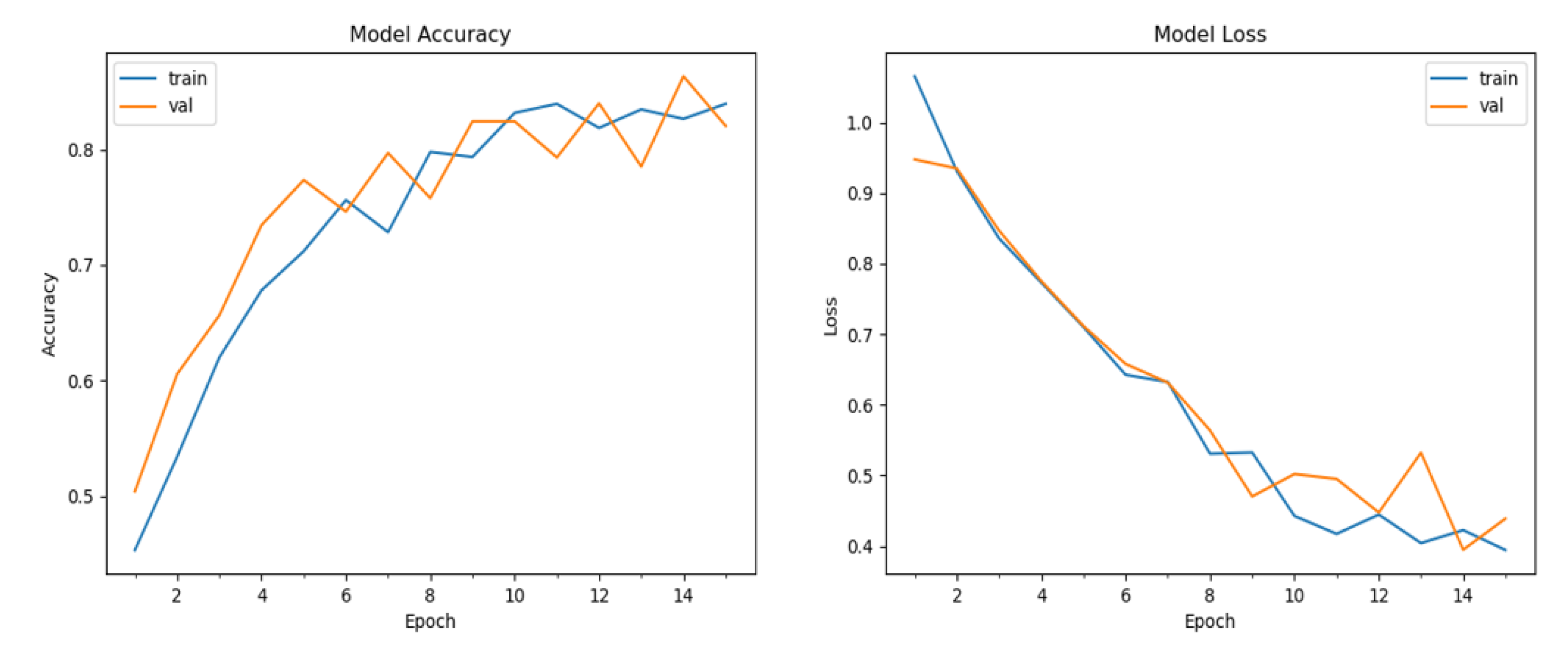

| Experiments Setup | Size (Resolution) | Number of Samples | Training Accuracy (%) | Validation Accuracy (%) |
|---|---|---|---|---|
| Goodfellow et al. [33] | | 35,887 | – | 64.24 |
| Setup I | | 2710 | 70 | 75 |
| Setup II | | 18,090 | 52 | 65 |
| Setup III | | 7121 | 83.9 | 82 |
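To make the comparison in the table above easier to read, the following sketch computes, for each setup, the difference in validation accuracy relative to the Goodfellow et al. [33] row and the gap between training and validation accuracy. It reads the three numeric cells of each row as number of samples, training accuracy, and validation accuracy. Because the rows differ in the number of samples, the differences are only arithmetic on the reported figures and not a controlled comparison; the function name `compare` is illustrative.

```python
# Figures copied from the table above; None marks the unreported entry.
RESULTS = {
    "Goodfellow et al. [33]": {"samples": 35887, "train": None, "val": 64.24},
    "Setup I": {"samples": 2710, "train": 70.0, "val": 75.0},
    "Setup II": {"samples": 18090, "train": 52.0, "val": 65.0},
    "Setup III": {"samples": 7121, "train": 83.9, "val": 82.0},
}
BASELINE = "Goodfellow et al. [33]"


def compare(results, baseline=BASELINE):
    """Return accuracy differences (percentage points) for each non-baseline row."""
    base_val = results[baseline]["val"]
    out = {}
    for name, row in results.items():
        if name == baseline:
            continue
        out[name] = {
            "val_minus_baseline": round(row["val"] - base_val, 2),
            "train_minus_val": round(row["train"] - row["val"], 2),
        }
    return out


if __name__ == "__main__":
    for name, diff in compare(RESULTS).items():
        print(name, diff)
```

On the reported figures, Setup III shows the largest validation margin over the baseline row (17.76 percentage points), while in Setups I and II the validation accuracy exceeds the training accuracy by 5 and 13 points, respectively.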

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, H.-J.; Lee, D. Study of Process-Focused Assessment Using an Algorithm for Facial Expression Recognition Based on a Deep Neural Network Model. Electronics 2021, 10, 54. https://doi.org/10.3390/electronics10010054
