2.3.1. Generating Scenarios

Scenario development demands that participants be highly competent in the topic being investigated, with knowledge of current strategies, an in-depth understanding of the workforce and processes, and an awareness of potential antagonists [26]. We propose describing the scenario in terms of the nature of a wargame [27].

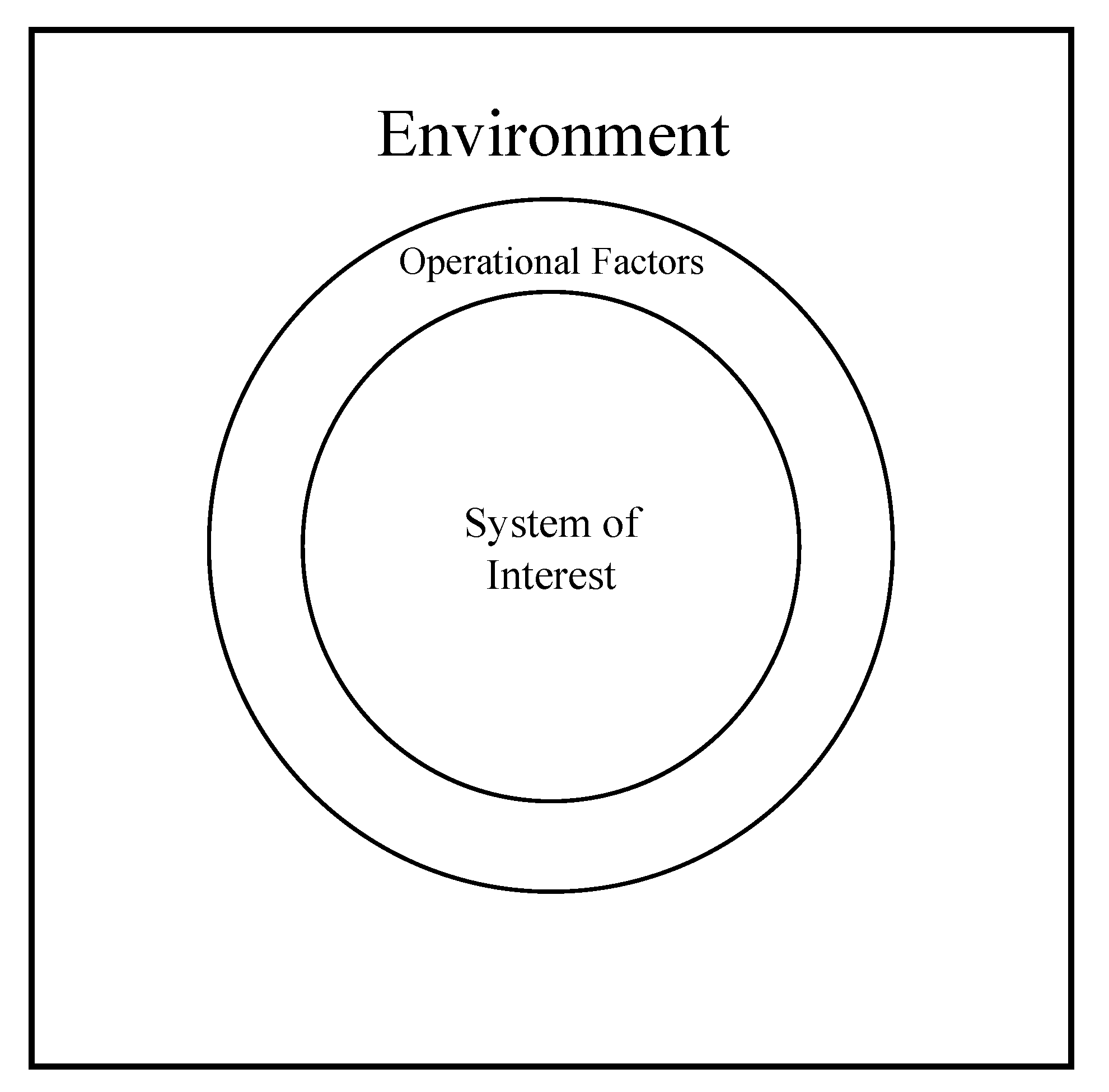

The conditions of a scenario are either controllable by humans, such as technologies and organizational structures, or uncontrollable, such as the weather, terrain, and temperature of the operational landscape. To illustrate the ideas presented in this paper, we specifically list uncontrollable, non-numeric scenario factors for military studies. For instance, participants knowledgeable about military maneuver would specify different terrain types.

2.3.2. Ranking the Non-Numeric Values of Scenario Factors

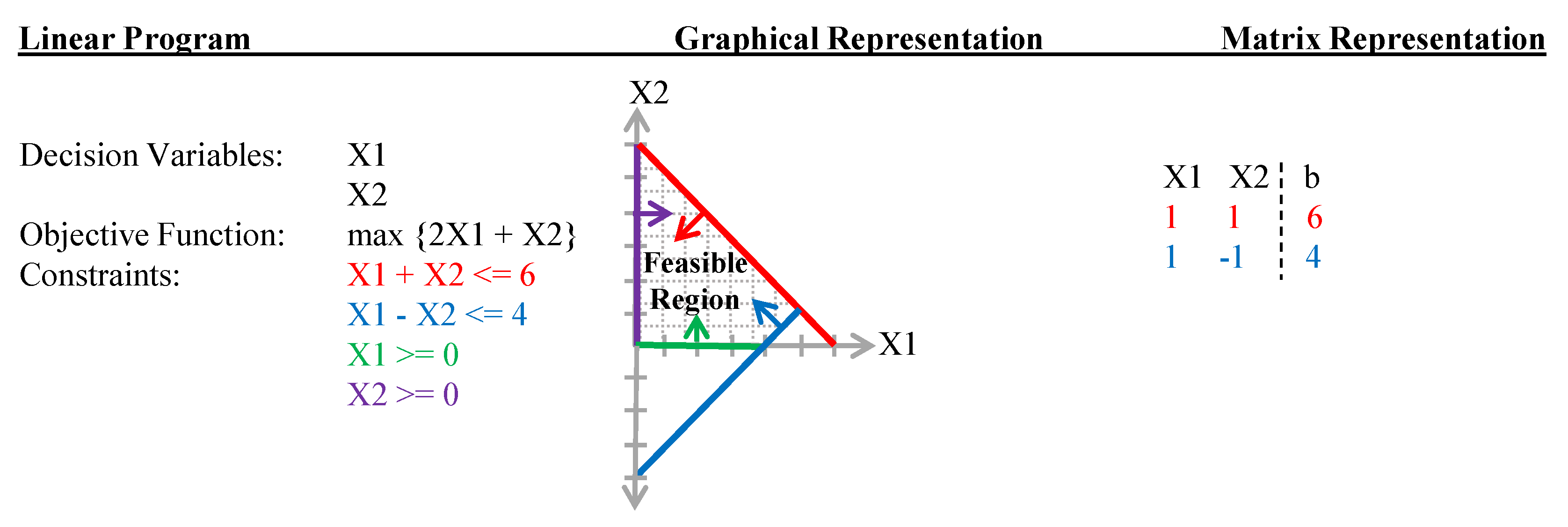

Non-numeric, unordered values greatly diminish the advantage of applying efficient DOE: the value combinations would be arbitrary and would offer no meaningful basis for selecting a subset of combinations that will mathematically support a global optimum [28]. At times, subject matter experts (SMEs) assign a rank order based on direct elicitation, which simply places each factor value on a line from 1 to 100. However, direct elicitation is replete with flaws for capturing the actual order of importance [29]. We select two techniques because of their applicability to different study conditions: (1) the Analytic Hierarchy Process and (2) Failure Mode, Effects, and Criticality Analysis.

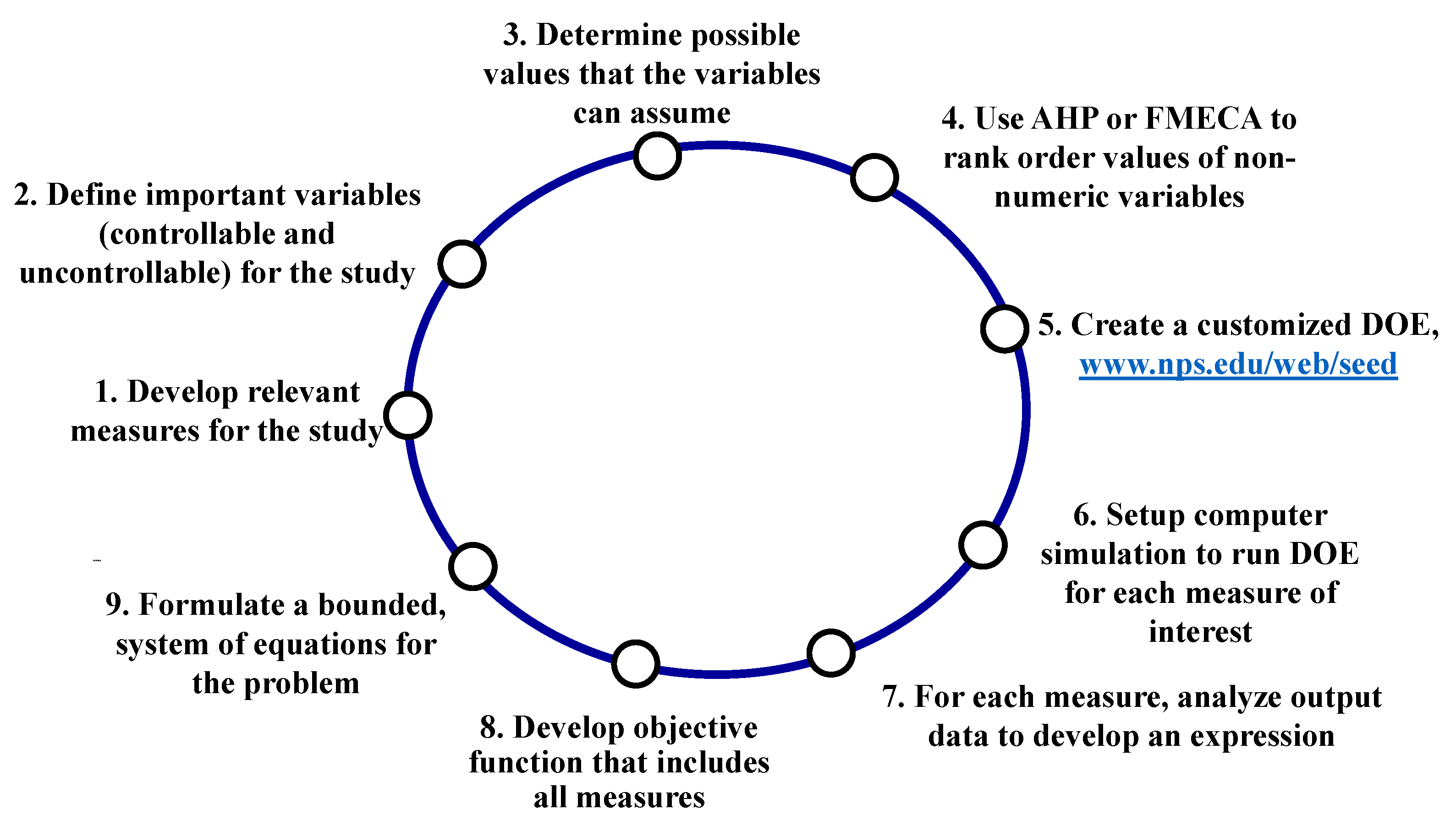

The Analytic Hierarchy Process (AHP) develops a hierarchy for a set of elements [30]. The first step identifies the values that the non-numeric factor can assume. The second step calls on the expertise of the participants to assign an AHP score for each pairwise comparison of values within each factor [31]. Each paired comparison between two values results in exactly one of several conclusions from Table 2, which is adapted from Taherdoost’s original work [31].

Expert participants determine the conclusion for each pairwise comparison of values. For instance, a score of “5” in comparing B with D means that B is “Strongly Important” over D. Reciprocally, a comparison of D with B results in a score of “1/5” with an inverse meaning to a score of “5.” The complete set of pairwise comparisons is designated as matrix A.
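The reciprocal structure of matrix A can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used in the study: the function name `build_comparison_matrix`, the value labels, and every judgment except the B-versus-D score of 5 are hypothetical.

```python
from fractions import Fraction

def build_comparison_matrix(values, judgments):
    """Build a reciprocal pairwise comparison matrix.

    judgments maps (row_value, col_value) -> score, meaning that row_value
    is `score` times as important as col_value. Only one direction per pair
    is supplied; the reciprocal entry is filled in automatically.
    """
    index = {v: i for i, v in enumerate(values)}
    n = len(values)
    # Diagonal entries are 1: each value is equally important to itself.
    A = [[Fraction(1)] * n for _ in range(n)]
    for (row, col), score in judgments.items():
        i, j = index[row], index[col]
        A[i][j] = Fraction(score)
        A[j][i] = 1 / Fraction(score)  # reciprocal judgment
    return A

values = ["A", "B", "C", "D"]
# Hypothetical judgments; only ("B", "D") = 5 is taken from the text.
judgments = {
    ("B", "D"): 5,
    ("A", "B"): 3,
    ("A", "C"): 1,
    ("A", "D"): 7,
    ("B", "C"): Fraction(1, 3),
    ("C", "D"): 5,
}
A = build_comparison_matrix(values, judgments)
print(A[1][3], A[3][1])  # B vs. D and D vs. B
```

Exact fractions are used here only so that the reciprocal property (each entry times its mirror entry equals 1) can be checked without rounding error.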

A consistency check of the resultant pairwise comparison matrix is important to validate the prioritization. Consistency refers to the experts’ logical assignment of the scores, i.e., if Mountain is preferred over Desert and Jungle is preferred over Mountain, then Jungle should be preferred over Desert. Eigenvalue computations determine the consistency of matrix A [30,32]. The consistency index is $CI = (\lambda_{\max} - n)/(n - 1)$, where $\lambda_{\max}$ is the maximum eigenvalue of matrix A and $n$ is the number of values compared. The consistency ratio is $CR = CI/RI$, where the random index (RI) is obtained from Saaty’s [30] tables. If $CR$ is lower than 0.10, then the resulting rankings are reasonable [30].
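The consistency check can be sketched as follows, using Saaty's standard definitions: the consistency index is (λmax − n)/(n − 1), and the consistency ratio divides that index by the random index. The sketch is an assumption-laden illustration rather than the computation used for Table 3: the function names are hypothetical, the maximum eigenvalue is estimated by power iteration instead of a library eigen-solver, the random index values are the commonly tabulated ones, and both example matrices are invented.

```python
# Random index (RI) values commonly tabulated for Saaty's AHP, keyed by n.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}

def principal_eigenvalue(A, iterations=200):
    """Estimate the maximum eigenvalue of a positive matrix by power iteration."""
    n = len(A)
    w = [1.0 / n] * n  # start from a uniform weight vector that sums to 1
    lam = float(n)
    for _ in range(iterations):
        Aw = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
        lam = sum(Aw)              # w sums to 1, so sum(A w) estimates lambda_max
        w = [x / lam for x in Aw]  # renormalize so the weights sum to 1 again
    return lam

def consistency_ratio(A):
    """Return CR = CI / RI with CI = (lambda_max - n) / (n - 1)."""
    n = len(A)
    ci = (principal_eigenvalue(A) - n) / (n - 1)
    return ci / RANDOM_INDEX[n]

# Hypothetical, perfectly consistent matrix built from weights 1, 2, 4, 8.
weights = [1.0, 2.0, 4.0, 8.0]
consistent = [[wi / wj for wj in weights] for wi in weights]

# Hypothetical circular judgments (X over Y, Y over Z, Z over X).
circular = [[1, 5, 1 / 5], [1 / 5, 1, 5], [5, 1 / 5, 1]]

cr_consistent = consistency_ratio(consistent)
cr_circular = consistency_ratio(circular)
print(cr_consistent < 0.10, cr_circular < 0.10)
```

The circular matrix mirrors the Mountain, Desert, and Jungle illustration: each preference is individually plausible, but together they contradict one another, and the ratio lands well above the 0.10 threshold.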

We illustrate the AHP with an example that considers a scenario to test a new, individually carried radio system. SMEs develop a comparison matrix, A (bordered, shaded area), for the values that the scenario factor, operational terrain, can assume (Table 3). The eigenvalue computations are left to the reader [32]. The computed consistency ratio from matrix A in this example is <0.10, so the matrix is logically consistent [30]. The sum score of each column determines how to rank each factor value, as shown in the last row of Table 3. The final rankings (high is best or most important) suggest that urban is the most important terrain type for testing an individually carried radio system.

AHP is useful for rank ordering a relatively small number (fewer than 10) of non-numeric factor values; pairwise comparisons of 10 or more elements become impractical. The following approach accommodates larger sets of factor values.

Failure mode, effects, and criticality analysis (FMECA) is a second approach to rank order non-numeric factor values based on their criticality to stressing the system. This approach is adapted from system reliability analysis. FMECA is a design technique to study how subsystem failures may affect the rest of the system [33]. Determining the criticality of any specific subsystem failure requires three scores: severity, frequency, and detection probability.

Severity is the seriousness of the failure’s impact on the system.

Frequency is how often the failure occurs, regardless of manner.

Detection probability is the likelihood that the failure will be detected before it has catastrophic effects on the system. For the purposes of this work, the detection score is not applicable and is therefore shaded in gray. Each element of an FMECA score ranges from 1 to 10 (Table 4). A failure’s criticality score is the product of all three scores. In this paper, only Severity and Frequency are multiplied. We submit that the criticality score of a factor value for achieving the goals of a study is a credible means to rank order values.

We again consider the factor values of the operational terrain: desert, mountain, jungle, or urban. The expert participants review the objectives for testing an individually carried radio and score each factor value on its ability to stress the radio. For instance, the experts may consider that “urban terrain” has a highly negative impact (stress) on the radio transmission, thereby assigning a score of 8 for severity. If the concept of operations for the radio indicates that the radio will routinely be used in an urban environment, then the experts may rate frequency a 10. Therefore, the criticality score for urban is 8 × 10 = 80. Criticality scores for the remaining terrain values may result in the rankings shown in Table 5.
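The scoring arithmetic above can be sketched in Python. Only the urban scores (severity 8, frequency 10) come from the text; the scores for the other terrain values are hypothetical placeholders and do not reproduce Table 5. The function name `criticality` and the choice to give the highest rank to the most critical value are likewise assumptions made for illustration.

```python
def criticality(severity, frequency):
    """Criticality as used in this paper: severity x frequency (no detection term)."""
    for score in (severity, frequency):
        if not 1 <= score <= 10:
            raise ValueError("FMECA scores range from 1 to 10")
    return severity * frequency

# (severity, frequency) per terrain value.
scores = {
    "urban": (8, 10),    # from the text
    "jungle": (7, 4),    # hypothetical
    "mountain": (6, 5),  # hypothetical
    "desert": (3, 6),    # hypothetical
}

crit = {terrain: criticality(s, f) for terrain, (s, f) in scores.items()}

# Assumed convention: rank 1 is the least critical value, the highest rank the most.
ordered = sorted(crit, key=crit.get)
ranks = {terrain: rank for rank, terrain in enumerate(ordered, start=1)}

for terrain in ordered:
    print(ranks[terrain], terrain, crit[terrain])
```

Because each value is scored independently rather than pairwise, the effort grows linearly with the number of values.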

Rank ordering the factor values enables the use of DOE. After ranking the factor values with either AHP or FMECA, expert participants may treat the factor as numeric and frame the problem space into unique combinations of the factor values. FMECA can rank order roughly an order of magnitude more factor values than AHP. Consequently, the number of future scenarios also increases, and the role of an efficient DOE becomes clear.

2.3.3. Identifying an Efficient Set of Scenario Designs

The scenarios that can be imagined range from those that the social sciences community would create to those formulated by the math and engineering sciences. This combinatorial issue is the same challenge that many experimenters face [18]. In recent decades, there have been great advances in improving DOE to efficiently examine complex problems. Efficiency in DOE refers to minimizing the number of design points (scenarios) to explore while gaining the most information [34]. Foremost among these new experimental designs are nearly orthogonal Latin hypercubes (NOLH).

As Hernandez, Lucas, and Carlyle [35] explain, when using lattice sampling [36], a DOE with $k$ factors and $n$ values for each factor results in a Latin hypercube that is $n \times k$ in size. The factor values in the lattice are 1 to $n$ and basically act as ordered ranks. The number of possible scenarios is $(n!)^{k-1}$. However, the new methods for constructing experimental designs can specify a much smaller subset of design points that will gain the most information from the problem space. For instance, Cioppa and Lucas [28] show that an NOLH design can efficiently examine seven factors, each assuming 17 different values.
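To give a sense of scale, the counts in this paragraph can be computed directly. The sketch below is illustrative only: the function names are hypothetical, the (n!)^(k−1) expression is read here as the number of distinct Latin hypercube arrangements, and the full factorial count n^k is added as a familiar point of comparison that the text does not itself quote.

```python
import math

def latin_hypercube_count(n, k):
    """The (n!)^(k-1) count quoted above for k factors with n values each."""
    return math.factorial(n) ** (k - 1)

def full_factorial_count(n, k):
    """Every combination of n values across k factors (added for comparison)."""
    return n ** k

n, k = 17, 7  # seven factors, each assuming 17 values
design_points = n  # an n x k Latin hypercube has n rows, i.e., n scenarios

print("design points in one Latin hypercube:", design_points)
print("full factorial combinations:", full_factorial_count(n, k))
print("possible Latin hypercubes:", latin_hypercube_count(n, k))
```

For seven factors at 17 values each, a single hypercube needs only 17 scenarios, whereas exhaustive enumeration would need more than 400 million.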

Consider three critical scenario factors: terrain, friendly organizational makeup, and enemy rules of engagement. Notionally, the scenarios are being developed to test a new motorized vehicle. Table 6 is an NOLH design to examine three factors, each able to assume eight different values [35]. Each row is a design combination for a new scenario. In viewing the DOE structure, we can see the relationship with Zwicky’s morphological box [13,14,17]. Experimenters replace the rank shown in the design with the appropriate factor value to complete the design matrix for the simulation runs. For instance, if $k_1$ is Terrain, subject matter experts may rank the factor values from most passable, rank #1, to least passable, rank #8. The assigned ranks could be: 1 = Open, 2 = Oasis, 3 = Desert, 4 = Steppe, 5 = Tundra, 6 = Forest, 7 = Hill, and 8 = Swamp. Thus, creating a scenario within a “Swamp” will likely stress the motorized vehicle the most.
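The rank-to-value substitution can be sketched as follows. Two assumptions are worth stating plainly. First, the design generated here is a plain random Latin hypercube used as a stand-in; it is not the NOLH design of Table 6 and carries no near-orthogonality guarantee. Second, only the terrain ranking comes from the text; the labels for the other two factors are hypothetical placeholders, as are the function names.

```python
import random

# Terrain ranking from the text: 1 = most passable, 8 = least passable.
TERRAIN = {1: "Open", 2: "Oasis", 3: "Desert", 4: "Steppe",
           5: "Tundra", 6: "Forest", 7: "Hill", 8: "Swamp"}
# Hypothetical placeholder labels for the remaining two factors.
ORGANIZATION = {r: f"org-{r}" for r in range(1, 9)}
RULES_OF_ENGAGEMENT = {r: f"roe-{r}" for r in range(1, 9)}

def random_latin_hypercube(n, k, seed=0):
    """Return n rows of k ranks; each column is a permutation of 1..n.

    Stand-in for a published NOLH table, not an NOLH construction.
    """
    rng = random.Random(seed)
    columns = []
    for _ in range(k):
        column = list(range(1, n + 1))
        rng.shuffle(column)
        columns.append(column)
    return [tuple(column[row] for column in columns) for row in range(n)]

def to_scenarios(design, factor_maps):
    """Replace each rank in the design with its factor value."""
    return [tuple(m[rank] for m, rank in zip(factor_maps, row)) for row in design]

design = random_latin_hypercube(n=8, k=3)
scenarios = to_scenarios(design, [TERRAIN, ORGANIZATION, RULES_OF_ENGAGEMENT])

for ranks, scenario in zip(design, scenarios):
    print(ranks, "->", scenario)
```

Each printed row corresponds to one scenario for the simulation runs; substituting the published design table for `design` would leave the mapping step unchanged.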