Abstract

This study applied authentic Virtual Reality (VR) to replicate the real-world complex system scenarios of a large retail supply chain. The proposed VR scenarios were developed based on an established systems thinking instrument that consists of seven dimensions: level of complexity, independence, interaction, change, uncertainty, systems’ worldview, and flexibility. However, in this study, we only developed the VR scenarios for the first dimension, level of complexity, to assess an individual’s Systems Thinking Skills (STS) when he or she engages in a turbulent virtual environment. The main objective of this study was to compare a student’s STS when using the traditional ST instrument versus VR scenarios for the complexity dimension. The secondary aim was to investigate the efficacy of the VR scenarios using three measurements: the Simulation Sickness Questionnaire (SSQ), the System Usability Scale (SUS), and the Presence Questionnaire (PQ). In addition to the three measures, a NASA TLX assessment was performed to assess the perceived workload of performing the tasks in the VR scenarios. The results show that students’ preferences in the VR scenarios are not significantly different from their responses obtained using the traditional systems skills instrument. The efficacy measures confirmed that the developed VR scenarios are user friendly and lie in an acceptable region for users. Finally, the overall NASA TLX score suggests that users require 36% perceived work effort to perform the activities in VR scenarios.

1. Introduction

The intense competition in today’s global economy stimulates the advancement of technologies, producing new requirements for future jobs. “The Future of Jobs,” published by the World Economic Forum (WEF) in 2016, identified the critical workforce skills needed in the future complex workplace environment. The report indicated that complex problem solving and critical/systems thinking (ST) skills are the most important skills for the next five years, outpacing the need for other skills such as people management, emotional intelligence, negotiation, and cognitive flexibility. In other words, because the skills required are beyond the narrow focus of traditional engineering disciplines, greater emphasis on holistic thinking modes is necessary in the training of a future workforce [1,2]. The need for these skills is growing because the complexity and uncertainty associated with modern systems are increasing remarkably [3,4]. A modern complex system encompasses characteristics that can confound an individual’s ability to visualize the complexity of a situation with all its uncertainty, ambiguity, interaction, integration, evolutionary development, complexity, and emergence [5,6,7,8]. These characteristics impose challenges for individuals and practitioners when addressing and solving complex system problems. For example, with the increasing level of uncertainty, it is challenging to support actions and strategic goals within a complex system [9,10]. Practitioners are left with no choice but to deal with these escalating characteristics and challenges. Thus, dealing with the challenges inherent in modern systems necessitates the adoption of a more ‘systemic’ approach to better discern and govern problems [11,12,13,14,15,16,17,18]. One systemic approach is the use of systems thinking and a systems theory paradigm. Therefore, in this study, we emphasized the need to prepare qualified individuals capable of dealing with socio-technical system problems, since failures in complex systems can be triggered by both technical elements and human errors.

Systems Thinking (ST), as defined by Checkland [19], is a thought process that develops the ability to think and speak in a new holistic language. Similarly, Cabrera et al. [20] described systems thinking as a cognitive science that is an “awareness of norms and the aspirations to think beyond norms, while also taking them into account” [20] (p. 35). Checkland and other prominent researchers in the field emphasized the concept of wholeness to navigate modern system problems [15,19,21,22], and the extant literature is replete with studies that focus on the systems thinking skill set. One theme in the literature focuses on the concept and theory of systems thinking, including systems theory and laws; a second focuses on the generalizability and applicability of systems thinking across different domains. A third theme focuses on the tools and methods of systems thinking across different domains. Although the studies related to this third theme indicate that different tools and methods of systems thinking have been developed, few are purposefully developed to deal with complex system problems. For instance, the Beer Game [23], Littlefield Labs [24], and Capstone games [25] were developed to measure players’ decision-making, analytical, and thinking skills.

The main contribution of this study is to measure an individual’s systems skills through an immersive real-world case scenario involving a supply chain system. The gaming scenarios built within the VR environment are based on a thorough systems skills instrument established by Jaradat [15] and are inspired by the popular and vetted Beer Game. For validation, the systems skills results obtained from the VR scenarios were analyzed and compared to the survey (ST skills instrument) answered by each individual.

In Section 2, which follows, we will present the history of VR along with systems skills and its instruments with an emphasis on the systems skills instrument used in this study. Section 3 addresses the research design and methodology. Section 4 presents the results and the analysis, and Section 5 includes the conclusions, limitations, and future work.

2. Related Work

The purpose of this section is five-fold: first, to present the existing literature pertinent to the application of systems thinking across different fields; second, to summarize the existing tools and techniques that assess systems thinking skills; third, to provide a brief history of VR and how the different applications of VR were introduced in different fields over time; fourth, to summarize existing games that assess decision-making skills and thinking ability; and last, to demonstrate efficacy measures of VR simulations.

2.1. Systems Thinking: Overview and Application

A complex system usually involves high levels of change, uncertainty, and inter-relations among the subsystems. Thus, its behavior cannot be deduced from the study of its elements independently, since the complexity of a system is determined by the volume of information needed to understand the behavior of the system as a whole and the degree of detail necessary to describe it [26]. Maani and Maharaj [6] support the same notion, arguing that reductionist methods are insufficient for interpreting systems involving high levels of complexity. The literature and practice indicate that systems thinking can help deal with the increasing complexity of businesses in particular [27,28] and the world in general [6,29]. There is a growing emphasis on systems thinking in almost all fields, including education [30,31,32], engineering [33,34], military [35,36], agriculture [37,38,39], weather [40,41], and public health [42,43,44].

The existing literature is replete with studies, both theoretical and observational, concerning systems thinking and management. Senge [45] stated the benefits of systems thinking in helping to determine the fundamental management goals needed to build an adaptive-management approach in an organization. A case study by Senge [46] validated the relationship and relevance of systems thinking in all levels of leadership. Jacobson [26] explained how systems thinking approaches aid the integration of management systems in an organization and presented a list of procedures for determining the most appropriate way to implement the management systems model. In another study, Leischow et al. [42] discussed the importance of systems thinking in marketing by implementing a systemic approach to marketing management. The systems thinking approach was also adopted in risk management [47,48,49], medicine management [50,51,52], project management [53,54,55], quality management [56,57], and many other domains of management.

2.2. Systems Thinking Skills and Assessment

With the popularity and advancement of systems theories and methods, the identification and assessment of systems thinking skills become more important, and more emphasis is placed on the development of tools and techniques that can effectively determine and measure systems thinking capabilities. For example, Cabrera and Cabrera [22] described systems thinking as a “set of skills to help us engage with a systemic world more effectively and prosocially” [22] (p. 14). Over time, researchers attempted to develop tools and techniques to measure an individual’s skillset both qualitatively and quantitatively. For example, Dorani and co-authors [58] developed a set of questions to assess systems thinking skills by combining the concepts of the System Thinking Hierarchical (STH) model [59] and the System Thinking Continuum (STC) [60] with Richmond’s seven thinking tracks model [61]. This scenario-based assessment process consists of six questions, each measuring an important systems thinking skill, i.e., dynamic thinking, cause–effect thinking, system-as-cause thinking, forest thinking (holistic thinking), closed-loop thinking, and stock-and-flow thinking. Cabrera et al. [22] summarized the evolution of systems thinking skills into four waves and embraced the methodological plurality and universality of systems framework in the third and fourth waves. By emphasizing the broader plurality and universality of systems methods, Cabrera and colleagues introduced DSRP (distinctions, systems, relationships, and perspectives) theory [62,63,64], which offers a comprehensive framework of systems thinking. Later, Cabrera et al. [65] introduced an edumetric test called the Systems Thinking Metacognition Inventory (STMI) to measure three important aspects of a systems thinker, i.e., systems thinking skills, confidence in each skill, and metacognition. Many other researchers developed different guidelines and assessment tools to assess systems thinking skills by embedding systems theories and principles [6,66,67,68,69].

2.3. Systems Thinking and Technology

Virtual-Reality (VR) technology dates back to the mid-1940s with the advancement of computer science [70]. In 1965, Ivan Sutherland first proposed the idea of VR when he stated: “make that (virtual) world in the window look real, sound real, feel real, and respond realistically to the viewer’s actions” [71] (p. 3). In the 1980s, some schools started adopting personal computers and digital technology, and multiple studies shifted focus towards VR technologies, its applications, and its implications [72]. At the beginning of the 1990s, the area of virtual reality experienced an enormous advancement. The primary purpose of VR technologies was to generate a sense of presence for the users and enable the user to experience an immersive virtual environment generated by a computer as if the user was there [73].

Although the ideas around virtual reality emerged during the 1960s, the actual application of VR has since expanded to almost all fields of science and technology. The growth over the last two decades demonstrates the increasing popularity of this technology and its potential for future research in sectors such as engineering, military, education, medicine, and business. In academia, for example, VR technologies have proved notably useful, and numerous studies have encouraged the use of VR in education. In a study of 59 students, Bricken and Byrne [73] reported results that favored VR technologies in enhancing student learning. Along the same line, Pantelidis [74] demonstrated the potential benefit of VR technology in classroom pedagogy. Another study involving 51 students was conducted by Crosier et al. [75] to assess the capacity of VR technologies to convey the concept of radioactivity. The results of the study showed that students gained more knowledge in the VR environment compared to traditional methods. Mikropoulos [76] also showed that VR’s advanced imagery features and manipulative capabilities, provided by multisensory channels and three-dimensional spatial representations, had a positive impact on students’ learning process.

Similarly, Dickey [77] stated that VR technologies generate realistic environments that enable students to enhance their competencies, waive the need for pricey equipment, and avoid hazardous settings sometimes necessary for learning. Echoing Dickey’s findings from 2005, Dawley and Dede [78] demonstrated that VR helps in developing students’ cognitive capabilities by simulating real-world settings that make the users feel as if they were there. In other words, with VR, students no longer need to “imagine” a situation but are able to be there in real time and interact with different objects and scenarios related to the subject studied using simple gestures. A similar study by Hamilton et al. [79] was performed to examine how VR technology helps students grasp queuing theory concepts in industrial engineering in an immersive environment. The results showed that the virtual queuing theory module is a feasible option to learn queuing theory concepts. Similarly, Byrne and Furness [80] and Winn [81] highlighted the efficacy and usability of VR technology in modern pedagogy. The literature review revealed that the integration of VR technology in education has significant benefits for students.

For a detailed investigation into the application of VR technology across different disciplinary domains, readers are referred to such works as McMahan et al. [82] (entertainment industry), Opdyke et al. [83], Ende et al. [84], Triantafyllou et al. [85] (healthcare), and Durlach and Mavor [86] (military application). On the other hand, the skills of systems thinking come with practice and are applied across different fields, including virtual reality, which is one of the effective ways to practice and learn Systems Thinking. The Systems Thinking Skills recognized in the academic literature include the ability to visualize the system as a whole, develop a mental map of a system, and think in dynamic terms to understand behavioral patterns. In VR games, the participants engage in various experiences and use their systems thinking skills to respond to various complex systems problems based on the real-world scenario.

The review of the literature also shows that several systems thinking tools and techniques have evolved over the decades to address complex system problems. Some tools could assess only one or two ST skills [87,88,89] and only to a certain extent. Many of the current tools are purposefully designed for a specific domain, such as education, to measure the students’ ST skills [59,90,91,92]. However, none of the standalone tools could capture the overall systems thinking skills of an individual. These tools and techniques might satisfy a specific need, but they do not facilitate solutions against the backdrop of complex system domains. Moreover, many of the current ST tools neither published their claims nor demonstrated the accompanying evidence of validity and reliability. Reinforcing this criticism, Camelia and Ferris [90] stated that “there are over 200 instruments designed to measure any of a variety of attitudes toward science education, but most have been used only once, and only a few shows satisfactory statistical reliability or validity” [90] (p. 3).

2.4. Gamification

Games are a combination of many fundamental conditions without which a game cannot be constituted. A game is considered incomplete if one of these conditions is not met; hence, it cannot be carried on [93,94]. Taking that into consideration, games can be developed to help gamers grasp concepts related to any field. Gamification has attracted, and is still attracting, attention from both industry and academia [93,95,96]. Although a considerable number of games have been invented for gaming purposes, not as many were built to help understand particular concepts in scientific, academic, or business fields [97,98,99]. An example of such games is the Beer Game, an online game for teaching operations. It allows students to experience real, traditional supply chains in which coordination, sharing, and collaboration are missing. This non-coordinated game/system shows the problems that result from the absence of systemic thinking (website: https://beergame.org (accessed on 21 May 2020)) [23]. While the Beer Game was built to help students gain insight and conceptual background into supply chains, Littlefield Labs was developed to help its users acquire certain skills. Littlefield is an online analytics game simulating a queuing network where students compete to maximize their revenue [100]. Students can see their performance history to examine the impact of their previous decisions and to manage future decisions. Capstone games are more involved than analytics games because they provide more complex instructions and a wider area for decision making. Capstone simulations are an example of capstone games. Capstone is an online business simulation developed to explain marketing, finance, operations, and other topics. A taxonomy of online games was developed to classify these games based on their pedagogical objective [100]. Table 1 below shows the taxonomy.

Table 1.

A taxonomy of online games (table adapted from [100]) (p. 3).

In addition to the taxonomy table, Table 2 below presents a general description of the three games. The table discusses the uniqueness of the games compared to each other, describes their process, and presents the different parameters these games have.

Table 2.

A taxonomy of online games (table adapted from [100]) (p. 3).

Since the focus of this study was on the tools and methods of systems thinking (the third theme), we surveyed the literature to study the tools, techniques, and games used to measure systems thinking. Based on the review, we found that (1) several of these tools are survey-based instruments; (2) few tools, such as the systems thinking assessment by Grohs et al. [101], were developed to measure ST (however, the validity and reliability of these tools are questionable since no validity evidence has been reported for them); and (3) new technologies such as virtual reality and mixed reality have not been used in the domain of systems thinking. The motivation of this study was to measure individuals’ ST skills using real-case scenarios in which individuals make decisions in uncertain, complex environments while managing different entities in the system. To develop a more valid, real-case scenario, we used the Beer Game as inspiration.

2.5. Efficacy Measures of the VR Scenarios

When referring to VR scenarios, the efficacy measures generally indicate the quality of the environment or the ability to produce the intended outcome [72,102]. An extensive literature review showed that many different qualitative questionnaires exist from past research efforts [103,104]; however, many of them lack flexibility and are not conducive to general application across VR studies. As a result of this heuristic search, three assessments were chosen to collect information of interest from the users: the Simulation Sickness Questionnaire (SSQ), the System Usability Scale (SUS), and the Presence Questionnaire (PQ).

Kennedy et al. [105] prepared a simulation sickness questionnaire by including 21 symptoms that can result from virtual environment exposure. These 21 symptoms are grouped into three categories: nausea, oculomotor disturbance, and disorientation. The questionnaire gauges simulation sickness by asking users to rate the severity of each symptom from 0 to 3. The overall score is obtained by summing the weighted score of each category and then multiplying the result by 3.74. The category weights for nausea, oculomotor disturbance, and disorientation are 9.54, 7.58, and 13.92, respectively. This final score reflects the severity of the symptoms experienced. Table 3 below shows the score categorization of the final SSQ score.

Table 3.

SSQ score categorization (table adapted from [72]) (p. 3).
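The SSQ arithmetic described above can be sketched in a few lines of Python. This is an illustrative sketch rather than the scoring code used in the study: the function name and the input format are hypothetical, and it assumes the commonly cited Kennedy et al. convention in which each category score is the raw category sum times its weight, while the total applies the 3.74 factor to the unweighted category sums. The wording in the text leaves this last point open, so it should be checked against [105] before reuse.

```python
# Weights and total factor as quoted in the text (Kennedy et al. [105]).
SSQ_WEIGHTS = {"nausea": 9.54, "oculomotor": 7.58, "disorientation": 13.92}
SSQ_TOTAL_FACTOR = 3.74


def ssq_scores(raw_sums):
    """Return (category_scores, total_score).

    raw_sums maps each category name to the unweighted sum of the 0-3
    ratings of the symptoms in that category (hypothetical input format).
    ASSUMPTION: the total multiplies the unweighted sums by 3.74.
    """
    for name in SSQ_WEIGHTS:
        if raw_sums[name] < 0:
            raise ValueError(f"negative raw sum for {name}")
    category_scores = {
        name: raw_sums[name] * weight for name, weight in SSQ_WEIGHTS.items()
    }
    total = sum(raw_sums[name] for name in SSQ_WEIGHTS) * SSQ_TOTAL_FACTOR
    return category_scores, total


if __name__ == "__main__":
    scores, total = ssq_scores(
        {"nausea": 2, "oculomotor": 3, "disorientation": 1}
    )
    print(scores, total)
```

Keeping the category scores and the total separate makes it possible to report each symptom group alongside the overall severity.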

The second efficacy measure, the System Usability Scale (SUS), is based primarily on a tool developed by Brooke [106]. This tool consists of 10 questions using a 5-point Likert scale to measure the user’s expectation of the virtual system. These questions can be worded positively or negatively and can be modified to be more specific to the environment under question. The final SUS score is obtained by summing all the items’ scores and then multiplying the result by 2.5.
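As a worked illustration of the SUS arithmetic, the sketch below follows the standard convention from Brooke's scale: a positively worded item contributes its rating minus 1, a negatively worded item contributes 5 minus its rating, and the sum of the ten contributions is multiplied by 2.5 to give a score from 0 to 100. The function name and the `positive_items` parameter are hypothetical, and the default alternating wording pattern is an assumption, since the text notes that items can be reworded for a specific environment.

```python
def sus_score(responses, positive_items=None):
    """Compute a SUS score (0-100) from ten ratings on a 1-5 scale.

    positive_items is a list of ten booleans marking positively worded
    items. ASSUMPTION: if omitted, items 1, 3, 5, 7, 9 are positive and
    items 2, 4, 6, 8, 10 are negative (the standard SUS layout).
    """
    if len(responses) != 10:
        raise ValueError("SUS expects exactly 10 responses")
    if positive_items is None:
        positive_items = [index % 2 == 0 for index in range(10)]
    if len(positive_items) != 10:
        raise ValueError("positive_items must have 10 entries")

    total = 0
    for rating, is_positive in zip(responses, positive_items):
        if not 1 <= rating <= 5:
            raise ValueError("each rating must be between 1 and 5")
        # Positive items: higher rating is better; negative items: lower is better.
        total += (rating - 1) if is_positive else (5 - rating)
    return total * 2.5


if __name__ == "__main__":
    print(sus_score([4, 2, 5, 1, 4, 2, 4, 2, 5, 1]))
```

Passing an explicit `positive_items` list keeps the scoring correct when a study rewords some items, which the default pattern would otherwise misread.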

The presence questionnaire, which is the third measure, is an indicator of the user’s feelings about the virtual system. This survey, which includes 22 questions, was introduced by Witmer and Singer [107] and utilizes a 0–6 scale. Similar to the two previous questionnaires, the answers are summed to obtain an overall score for user presence.

These three efficacy measures address the lack of generalized, qualitative questionnaires for the evaluation of VR scenarios in the literature. The non-specific nature of the surveys allows for their continued use in future VR studies while still capturing the necessary research information.

Although much has been written in the existing literature about the application of VR across different fields, including education, there is an apparent lack of empirical investigations that measure students’ ST skills using immersive VR complex system scenarios. The rationale of this research was to address this gap in the literature. To the best of our knowledge, this is the first attempt to appraise the ST skills of students through immersive VR technology. The research contributes to the field by:

- Developing a set of VR gaming scenarios to measure the ST skills of the students based on the systems skills instrument by Jaradat [15]. In this study, the proposed VR scenarios were developed to measure only the first dimension of the instrument, level of complexity—simplicity vs. complexity (see Table 2). Six binary questions were used to determine the complexity dimension level.

- Investigating whether or not the proposed VR scenarios can be an appropriate environment to authentically measure students’ level of ST skills.

- Conducting different types of statistical analysis, such as ANOVA and post hoc tests, to provide better insights concerning the findings of the research.

- Demonstrating the efficacy and extensibility of VR technology in the engineering education domain.

3. Research Design and Methodology

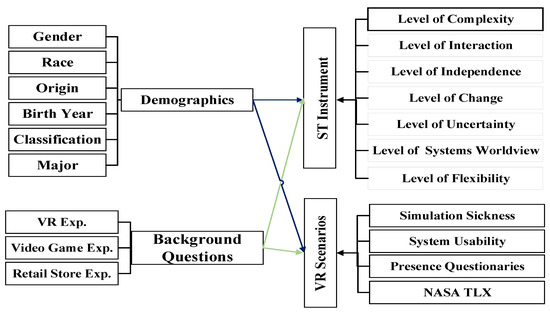

This section has four parts. First, the systems thinking instrument used in the experiment is demonstrated. In the second part, the developed VR scenarios and the environment design are presented. The third part presents the design of the experiment to illustrate the experiment’s flow. The research design and methodology section ends with the mitigation techniques used in the study. The theoretical model of this study is illustrated in Figure 1 and details are provided in the following subsections.

Figure 1.

Theoretical model of the study (Exp.–Experience).

3.1. Systems Thinking Instrument—An Overview

The systems thinking instrument comprised 39 questions with binary responses [15]. The responses of the participants were recorded in the score sheet. The score sheet had seven letters, each one indicating an individual’s level of inclination toward systems thinking when dealing with system problems. The instrument was composed of seven scales identifying 14 major preferences that determine an individual’s capacity to deal with complex systems. The seven scales that constituted the instrument are presented below and shown in Table 4. For more details about the instrument, including its validity, readers can refer to [15] (p. 55).

Table 4.

Systems thinking preferences dimensions.

Level of Complexity: The level of complexity refers to the level of interconnection spawned from systems and their components. In other words, it stands for the level at which the forces acting on a set of processes find a balance. It also indicates which strategy an individual adopts while facing an issue: simple strategy or complex.

Level of Independence: The level of independence stands for the level of integration or autonomy an individual will adopt while dealing with a complex system. The individual tends toward a dependent decision and global performance level (integration) or an independent decision and local performance level (autonomy).

Level of Interaction: The level of interaction stands for the individual’s preference with regard to the manner in which he/she interacts with systems.

Level of Change: The level of change indicates the degree of tolerance with which an individual accepts changes.

Level of Uncertainty: Uncertainty refers to the situations where information is unknown or incomplete. The level of uncertainty illustrates how the individual makes decisions when he/she is uncertain about the situation. This level ranges from stability, which means uncomfortable with uncertainty, to emergence, which is the case when dealing with uncertainty without any pre-plan.

Level of Systems Worldview: The systems worldview depicts how the individual sees the systems’ structure: as a whole or as a combination of separate parts. There are two main levels: holism and reductionism. Holism refers to focusing on the whole and the big picture of the system. Reductionism, on the other hand, holds that the whole is simply the sum of its parts and that its properties are the sum of the properties of those parts; therefore, the whole must be broken into elementary parts to be analyzed.

Level of Flexibility: Flexibility characterizes the capability and willingness to react when there are unanticipated changes in circumstances. The level of flexibility of individuals ranges from flexible to rigid. For some individuals, the idea of flexibility produces considerable anxiety, especially when they have already formulated a plan; for others, the option for flexibility is vital to determine their plan.

3.2. VR Scenario Case and Environment Design

The experiment was conducted using three VR-compatible computers for one week. Before engaging with the virtual scenarios, participants were asked to complete a demographic questionnaire and a questionnaire detailing any simulation sickness they may have experienced in the past. They were also asked to describe their familiarity with virtual reality, video game-playing experience, and retail store experience using a Likert scale. After filling out the two questionnaires, participants were asked to answer the six questions of the systems skills instrument that address the complexity dimension. These questions assess the participants’ ability to deal with complexity and illustrate their preferences. After answering the instrument questions, students were assigned to computers and began the VR scenarios. Following the completion of the VR scenarios, three questionnaires were used to evaluate the user experience (post-simulation sickness, system usability, and presence). For each participant, the surveys and VR scenarios took approximately 30 min to complete.

3.2.1. VR Supply Chain Case Scenario

The VR case scenario was developed based on real-life situations in which participants have to make decisions and choose between several options. Their answers/preferences indicate how they think in complex situations, which determines their systems thinking skills when dealing with complex system problems. The simulated scenarios were set up using the Unity3D game engine (Unity Technologies, San Francisco, CA, USA). The Oculus Rift VR headset (Oculus VR, Facebook Inc., Menlo Park, CA, USA) was used to immerse participants in the VR environment. The VR scenario was composed of five complex scenes in a marketplace and is described in the next section.

The complex system scenario is a decision-making VR game in which participants experience immersive real-life situations in a large retail grocery chain where uncertainty, ambiguity, and complexity exist. This supply chain could represent any non-coordinated system where problems arise due to a lack of systemic thinking. Although these scenarios were developed based on the well-known Beer Game, their aim and scope were different: they were purposely designed to measure a participant’s skill set in addressing the grocery chain’s problems.

3.2.2. The Design of the VR Scenarios

A VR grocery chain was chosen because a majority of the study participants would be familiar with a grocery store and easily grasp concepts such as stocking shelves and displaying merchandise. Furthermore, this type of environment would also allow for multiple scenes and stories to be developed to guide the user through all 39 questions. In each scene, the user assumed the role of the grocery store manager. As the users began each prompted task, they were asked to make the decisions that they thought were best for the store. Each prompted decision corresponded to a question in the instrument, and the user’s choice was recorded. Each decision-making event was presented in a non-biased, binary way that allowed the users to choose their personal preference and react genuinely without feeling that a wrong decision was made because, within ST practice, there are no “bad” or “good” decisions.

Before starting the VR experience, an ID identifier and a computer were assigned to each participant so that the information from the systems thinking questionnaire would be matched to the data from the VR scenario. Each student was assisted by a member of the research team to ensure that the experiment was conducted properly. All subjects gave their informed consent for inclusion before they participated in the study. The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Institutional Review Board of Mississippi State University (IRB-18-379).
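The ID-based matching of questionnaire and VR data described above can be illustrated with a small sketch. Everything here is hypothetical: the participant IDs, the dictionary layout, and the per-participant agreement measure are assumptions for illustration only and are not the statistical analysis reported in the study.

```python
def agreement_by_participant(survey, vr):
    """Match survey and VR answers by participant ID.

    survey and vr each map a participant ID to a list of binary answers
    (0 or 1), one per complexity-dimension question. Only IDs present in
    both sources are compared. Returns ID -> fraction of matching answers.
    """
    results = {}
    for participant_id in sorted(survey.keys() & vr.keys()):
        survey_answers = survey[participant_id]
        vr_answers = vr[participant_id]
        if len(survey_answers) != len(vr_answers):
            raise ValueError(f"answer count mismatch for {participant_id}")
        matches = sum(a == b for a, b in zip(survey_answers, vr_answers))
        results[participant_id] = matches / len(survey_answers)
    return results


if __name__ == "__main__":
    # Hypothetical data: six binary answers per participant.
    survey_data = {"P01": [1, 0, 1, 1, 0, 0], "P02": [0, 0, 0, 1, 1, 1]}
    vr_data = {"P01": [1, 0, 0, 1, 0, 0], "P03": [1, 1, 1, 1, 1, 1]}
    print(agreement_by_participant(survey_data, vr_data))
```

Restricting the comparison to IDs found in both sources avoids silently pairing a participant's survey with someone else's VR session.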

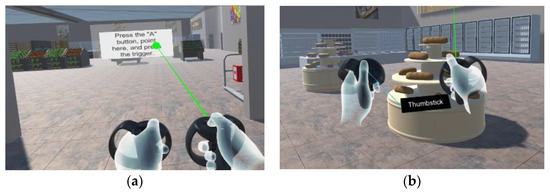

The VR scenarios started with an identification window where the assistant entered the participant ID and selected a mitigation type. To begin the VR scenarios, the student was asked to “Click the A button to start” by pointing the laser at the caption, as illustrated in Figure 2a. Oral instructions that were provided to facilitate and direct the participants’ interactions could be activated/deactivated during the simulation. The first audio recording began after pressing the caption and indicated how to move and interact in the scenes using the buttons and triggers on the touch controllers (see Figure 2b).

Figure 2.

(a) Start-off UI in the main store I scene. (b) Instructions for Oculus controllers.

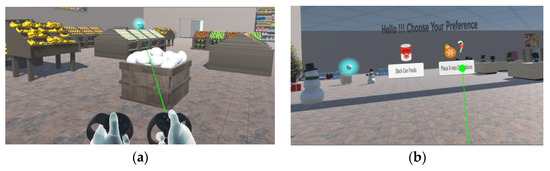

To start the first VR scene, main store I, the participant was required to target and press a blue orb (see Figure 3a). Once the orb was selected, an audio recording explained what the participant was supposed to do in the virtual supply chain store. The VR scenarios reformulated the items of the systems thinking instrument as real-life events, which ensured a better output since the participants would respond to the situations based on their understanding (see Figure 3).

Figure 3.

(a) Blue orb to continue the scene. (b) User preference UI in the main store I scene.

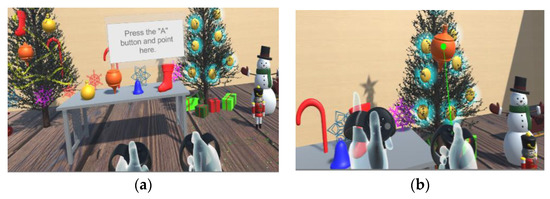

Depending on the user’s preference, the following scene could be either the can stacking or the Christmas decoration scene. This revealed one trait of the individual’s systems skills. The can-stacking scene consisted of three shelves with three different types of cans on each. The user was asked to place the rest of the cans on the shelves; he/she would either place the cans the same way they were given or as he/she pleased. Based on the way the user performed, another trait of their ST skills was reflected. Similarly, the Christmas scene was developed to identify individuals’ systems skills/preferences. In this scene, there were two trees: one decorated and one undecorated. The participant was asked to decorate the undecorated tree using the same ornaments used in the first tree. Figure 4 and Figure 5 demonstrate both Christmas and can-stacking scenes, respectively.

Figure 4.

(a) First UI to start the Christmas decoration scene. (b) Placing ornaments on the Christmas tree.

Figure 5.

(a) Picking cans from the table. (b) Placing cans onto shelves.

At the end of the second or third scene, the user was required to click on the appearing blue orb, which took him/her back to the main store scene II. In this scene, he/she responded to further questions and was then transferred to the final scene, the Christmas inventory scene, where the user interacted with three animated characters, as shown in Figure 6. These characters were employees working in the retail grocery supply chain system.

Figure 6.

(a) User interaction with employees. (b) Employees’ feedback to the user.

Table 5 provides a glimpse of the existing scenes. These developed scenes acted as a baseline in measuring the systems thinking skills.

Table 5.

Systems thinking preferences’ dimensions.

3.3. Experimental Design Steps and Study Population

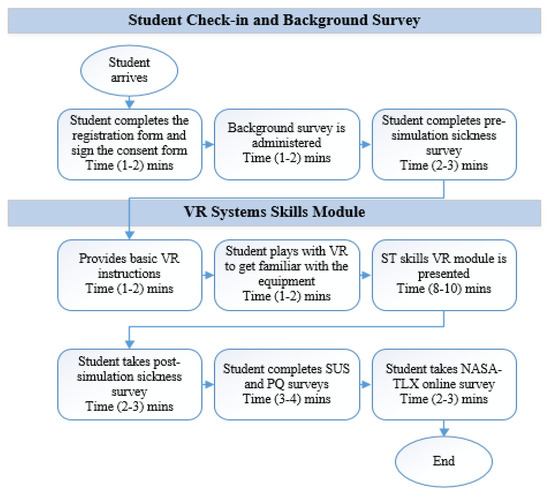

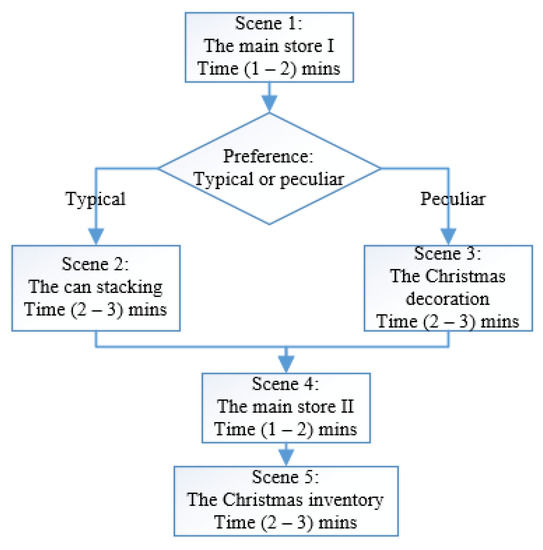

A total of 30 participants took part in this study of immersive VR scenarios for assessing systems thinking skills. Before beginning the assessment, the users were allowed to wear the headset until they felt comfortable in the virtual environment. The first scene (the main store I) began with audio and closed-captioned instructions for the user, and the user was shown how to select the “Begin” button to start the assessment. This method of selecting objects and progressing forward in the assessment was repeated throughout the VR scenarios. Figure 7 below shows the data collection flow of the entire study and Figure 8 depicts the flow of the VR scenarios.

Figure 7.

Data collection flow for STS study.

Figure 8.

Flow diagram of VR scenarios.

After the participants completed the VR scenarios, they were asked to complete three questionnaires (post-simulation sickness, system usability, presence questionnaire) and the NASA TLX [108] survey to evaluate their perceived workload of the environment. Six demographic questions (Gender, Origin, Race, Birth Year, Classification, and Major), three background questions (Virtual reality experience, Video game playing experience, and Retail store experience), and two dependent variables (The Level of Complexity scores in the ST skills instrument and the Level of Complexity scores in the ST skills VR gaming scenario) were collected from the participants.

Male students made up 63% of the sample, and the majority (63%) of students were domestic. Half of the participants were born between 1995 and 2000. Over 60% of the participants were pursuing bachelor’s degrees and 70% reported as Industrial Engineering students. For the background questions, students were asked to respond on a 5-point Likert scale (0–4) to describe their previous experiences. For the Video Game Experience, the scale was formed as: 0 = Never Played, 1 = Once or Twice, 2 = Sometimes, 3 = Often, and 4 = Game Stop Second Home. The description for the other two background questions was: 0 = None, 1 = Basic, 2 = Average, 3 = Above Average, and 4 = Expert. Around 36% of the participants reported average experience with virtual reality and none of them described themselves as an expert. Regarding the video game-playing experience, 50% of the students rated themselves as occasional players and 16% as expert players. The majority of the participants (53%) declared that they had average knowledge regarding the retail store experience.

3.4. Simulation Sickness Mitigation Techniques

In previous VR studies, Hamilton et al. [79] and Ma et al. [72] identified some users who were unfamiliar with video games, VR technology, and other immersive environments. To improve the VR experience and minimize simulation sickness among participants, three mitigation options were employed with this study’s immersive VR scenarios. The first option was the regular Unity field of view, and for the second option the researchers designed an increasing reticle. The increasing reticle was designed so that up to three glowing rings appeared in the center of the field of view, depending on the velocity of the user’s rotation in the VR simulation. Slight movements produced one small ring, and higher speeds produced three glowing rings. The reason for implementing the increasing reticle was to reduce simulation sickness by keeping the user’s focus on the center of the field of view. In the third option, the user’s peripheral view was restricted during intense motion. To implement it, the researchers used the VR Tunnelling Pro asset from the Unity Asset Store. The asset comes with multiple tunneling modes (3D cage, windows, cage, etc.) that work by fading out users’ peripheral view without significant information loss. This tunneling technique is capable of reducing simulation sickness when users engage in intense thumbstick movements in VR simulations.
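The mapping from rotation speed to the number of reticle rings can be sketched in a few lines. This is only an illustration: the study's module was built in Unity and does not report its thresholds, so the threshold values, the unit (degrees per second), the zero-ring resting state, and the function name below are all hypothetical.

```python
from bisect import bisect_right

# Hypothetical angular-speed thresholds in degrees per second. The study does
# not report the values it used; these are placeholders for illustration only.
RING_THRESHOLDS_DEG_PER_S = (5.0, 45.0, 90.0)


def rings_to_show(angular_speed_deg_per_s: float) -> int:
    """Return how many reticle rings (0 to 3) to draw for a rotation speed."""
    speed = abs(angular_speed_deg_per_s)  # direction of rotation is ignored
    # bisect_right counts how many thresholds the speed has reached.
    return bisect_right(RING_THRESHOLDS_DEG_PER_S, speed)


if __name__ == "__main__":
    for speed in (0.0, 10.0, 60.0, 120.0):
        print(f"{speed:6.1f} deg/s -> {rings_to_show(speed)} ring(s)")
```

Keeping the thresholds in a sorted tuple means further ring levels could be added without changing the lookup logic.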

4. Results

In this section, students’ systems thinking skills are assessed, along with the results of three efficacy measures: simulation sickness, system usability, and user experience. In addition to the efficacy measures, the NASA TLX assessment was used to measure users’ perceived work effort to finish the VR scenarios.

4.1. The Assessment of Participants’ Systems Thinking Skills

The study used two ST skills score sheets. The first one was prepared from the student’s preferences in the ST skills instrument and the second one was prepared from the student’s decisions in the VR scenarios. The primary focus of preparing score sheets was to investigate the student’s responses toward a high-systematic approach to evaluate their level of systems skills. All responses were captured from binary-coded questions, and the responses for high-systematic skills were referred to as irregular patterns, unique approach, large systems, many people, a working solution, and adjusted system performance in the scenes.
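As a minimal sketch of how such a score sheet could be tallied, the function below counts the high-systematic choices among the six binary-coded responses, giving a score from 0 to 6. The 0/1 coding and the function name are assumptions made for illustration; the source does not describe how the responses were stored.

```python
from typing import Sequence


def high_systematic_score(responses: Sequence[int]) -> int:
    """Count the high-systematic choices among six binary-coded responses.

    Assumed (hypothetical) coding: 1 means the participant chose the
    high-systematic option for that question, 0 means the other option.
    """
    if len(responses) != 6:
        raise ValueError("expected six binary responses")
    if any(r not in (0, 1) for r in responses):
        raise ValueError("responses must be coded 0 or 1")
    return sum(responses)


if __name__ == "__main__":
    # One hypothetical participant: three high-systematic choices out of six.
    print(high_systematic_score([1, 0, 1, 1, 0, 0]))
```

Applying the same function to the instrument responses and to the VR decisions gives the two paired scores per participant that the comparison relies on.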

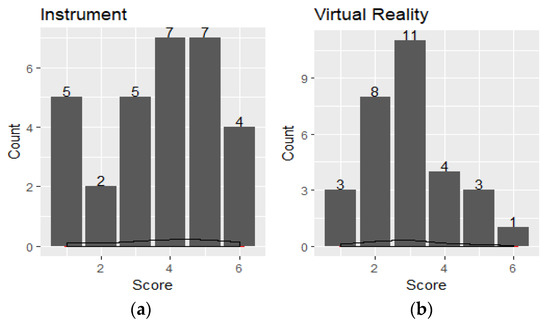

The score of the high-systematic skills for each participant ranged from 0 to 6, and the distribution of scores is shown in Figure 9. The distributions of the ST skills instrument scores and the ST skills VR scenario scores were both approximately normal. The average score on the ST skills instrument (Mean (M) = 3.57, Standard Deviation (SD) = 1.736) was higher than the participants’ average score in the VR scenarios (M = 2.97, SD = 1.245).

Figure 9.

(a) Score distribution of students’ ST skills via instrument. (b) Score distribution of students’ ST skills via VR scenarios.

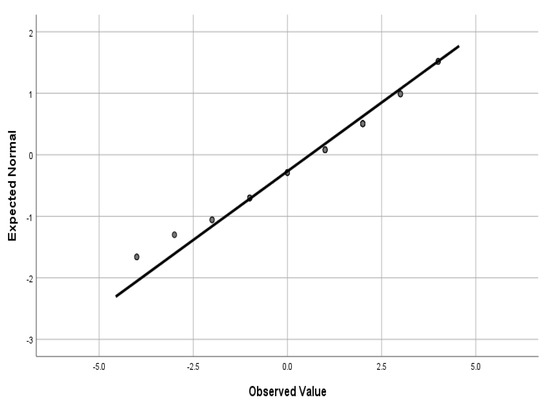

To investigate the mean score differences between the ST skills instrument and the VR scenarios, a paired sample t-test was performed under the normality assumption. First, the Shapiro–Wilk normality test confirmed the normality of score differences for the matched pairs in both scoring sheets (W = 0.954, df = 30, p = 0.213). Additionally, the Q–Q plot was plotted to confirm the normality of the score differences, as shown in Figure 10. Both the plot and the normality tests confirmed that the distribution of data was not significantly different from a normal distribution.

Figure 10.

Normal Q–Q plot of score differences.

With the confirmed normality, a paired samples t-test was carried out and the test confirmed that students’ ST skills scores in VR scenarios were not significantly different from their ST skills scores via the ST skills instrument (t (29) = −1.469, p = 0.153). The results verified the construct validity of VR scenarios used to measure the students’ ST skills in the study. These results also confirmed the validity and usability of the ST skills instrument, where the same binary questions were presented via VR scenarios in a different setting with no significantly different results. Furthermore, a one-way Analysis of Variance (ANOVA) was carried out to investigate the impact of demographic and knowledge variables on VR ST skills scores. Again, the Shapiro–Wilk normality test confirmed the normality of the distribution of VR scores (W = 0.921, p = 0.059). All independent variables had no statistically significant influence on students’ ST skills except knowledge in VR (F (3) = 3.041, p = 0.047). The post hoc Scheffe test revealed that the level of average knowledge in VR was significantly different from the level of above-average knowledge in VR and the latter group showed a higher average systematic score (M = 3.71, SD = 1.380) than the level of average knowledge in VR (M = 2.18, SD = 0.982).
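The paired comparison described above rests on the per-participant score differences. The sketch below computes the paired t statistic and its degrees of freedom with the Python standard library only. The function name and the sample data are hypothetical, and the p-value is deliberately left out; in practice it would come from a statistics package (for example `scipy.stats.ttest_rel`, with `scipy.stats.shapiro` for the normality check).

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t_statistic(first: Sequence[float],
                       second: Sequence[float]) -> Tuple[float, int]:
    """Return (t, degrees of freedom) for a paired-samples t-test."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    # Work on the per-participant differences between the two measurements.
    diffs = [a - b for a, b in zip(first, second)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD, n - 1 in the denominator
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    standard_error = sd_diff / math.sqrt(len(diffs))
    return mean_diff / standard_error, len(diffs) - 1


if __name__ == "__main__":
    # Hypothetical scores for four participants (not the study's data).
    vr_scores = [3, 4, 5, 2]
    instrument_scores = [2, 4, 3, 1]
    t, df = paired_t_statistic(vr_scores, instrument_scores)
    print(f"t({df}) = {t:.3f}")
```

The sign of t depends only on the order in which the two score sheets are passed in.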

4.2. Efficacy Investigation of the VR Scenarios

4.2.1. Simulation Sickness Assessment

Simulation sickness is a type of motion sickness that can occur during a VR simulation and results in symptoms such as sweating and dizziness. The main reason for such discomfort in virtual environments is the user’s inability to reconcile visual motion with the vestibular system. To reduce simulation sickness in this study, students were permitted to play with VR headsets and Unity before engaging with the actual study. As demonstrated in the data collection flow, participants marked their prior experience with simulation-related activities on a pre-simulation sickness questionnaire and responded to the post-questionnaire with the new experience at the end of the study. The questionnaire captured 16 probable symptoms that can be placed into three general groups through factor analysis: Nausea, Oculomotor, and Disorientation [105]. For each symptom, a four-point Likert scale was used to capture the degree of user discomfort (0 = none, 1 = slight, 2 = moderate, 3 = severe).

The scores for nausea, oculomotor, and disorientation in the pre-questionnaire were 7.63, 12.38, and 12.99, respectively, and the overall SSQ score was 12.59. This indicated that users had experienced significant symptoms in previous simulation-related activities. The scores for the three subscales in the post-simulation SSQ were 13.67, 17.43, and 22.27, respectively. The overall SSQ score was 19.95, which indicated that the VR module was in an acceptable range and that no immediate modifications were needed. However, the score also pointed to the need for design modifications to ensure a smooth simulation in future studies. The paired sample t-test confirmed that post-simulation scores were not significantly different from the prior simulation sickness scores at the 0.05 significance level. Table 6 presents the SSQ scores with respect to the independent variables. The ANOVA results revealed that none of the demographic or knowledge-based questions significantly impacted the SSQ score at the 0.05 significance level. This means that gender, field of study, age, and previous knowledge of similar technology/content made no difference to simulation sickness in this study. Furthermore, the three mitigation options were not significantly different from each other; however, the mean for the option with no added mitigation (the regular Unity field of view) indicated fewer simulation sickness symptoms than the other two techniques.
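The source does not spell out how the subscale and overall SSQ scores were obtained from the raw symptom ratings. The sketch below assumes the conventional SSQ scoring from Kennedy et al., in which each raw subscale sum is multiplied by a fixed weight (9.54, 7.58, 13.92) and the total is the sum of the three raw subscale sums multiplied by 3.74. The function name and the example inputs are hypothetical.

```python
from typing import Dict

# Conventional SSQ weights (Kennedy et al.); assumed here, not stated in the text.
NAUSEA_WEIGHT = 9.54
OCULOMOTOR_WEIGHT = 7.58
DISORIENTATION_WEIGHT = 13.92
TOTAL_WEIGHT = 3.74


def ssq_scores(nausea_raw: float,
               oculomotor_raw: float,
               disorientation_raw: float) -> Dict[str, float]:
    """Convert raw SSQ subscale sums into weighted subscale and total scores.

    Each raw value is the sum of the 0-3 ratings of the symptoms that load on
    that subscale (or the sample mean of those sums).
    """
    raw_total = nausea_raw + oculomotor_raw + disorientation_raw
    return {
        "nausea": nausea_raw * NAUSEA_WEIGHT,
        "oculomotor": oculomotor_raw * OCULOMOTOR_WEIGHT,
        "disorientation": disorientation_raw * DISORIENTATION_WEIGHT,
        "total": raw_total * TOTAL_WEIGHT,
    }


if __name__ == "__main__":
    # Hypothetical participant with a raw sum of 1 on every subscale.
    for name, value in ssq_scores(1, 1, 1).items():
        print(f"{name}: {value:.2f}")
```

Because the total is computed from the raw sums rather than from the weighted subscale scores, it is not the average of the three subscale values.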

Table 6.

ANOVA results of SSQ score.

4.2.2. System Usability Assessment

System usability is a measure of how easy a given system is for its users to use. The SUS questionnaire, which is widely used to capture user responses toward the usability of a system, covers four important factors: efficiency, satisfaction, ease, and intuitiveness [106]. In this study, the SUS questionnaire was prepared with 10 items, including six positively worded items (1, 2, 3, 5, 7, 9) and four negatively worded items (4, 6, 8, 10). A five-point Likert scale was initially used and then converted to a scale ranging from 0 to 4. For the positively worded items, the adjusted score was obtained by subtracting one from the user response, and for the negatively worded items it was obtained by subtracting the user response from five. The final SUS score was calculated by multiplying the sum of the adjusted scores by 2.5. The new scale ranged from 0 to 100, and a score above 68 was considered above-average user agreement, while any score below 68 was deemed below-average user agreement [109].
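The scoring rule described above can be written as a short function. This is a sketch under the item keying reported in the text (items 1, 2, 3, 5, 7, and 9 positively worded, the rest negatively worded); the function and constant names are hypothetical.

```python
from typing import Sequence

# Positively worded item numbers as reported in the text; all others are
# treated as negatively worded.
POSITIVE_ITEMS = (1, 2, 3, 5, 7, 9)


def sus_score(responses: Sequence[int],
              positive_items: Sequence[int] = POSITIVE_ITEMS) -> float:
    """Compute a 0-100 SUS score from ten responses on a 1-5 Likert scale."""
    if len(responses) != 10:
        raise ValueError("SUS expects exactly 10 responses")
    adjusted_total = 0
    for item_number, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("responses must be between 1 and 5")
        if item_number in positive_items:
            adjusted_total += response - 1   # positive item: response minus one
        else:
            adjusted_total += 5 - response   # negative item: five minus response
    return adjusted_total * 2.5              # rescale the 0-40 sum to 0-100


if __name__ == "__main__":
    # A hypothetical neutral respondent answering 3 on every item scores 50.
    print(sus_score([3] * 10))
```

Passing a different `positive_items` tuple adapts the same function to questionnaires with another keying.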

As shown in Table 7, all items scored above 2, indicating at least average user agreement on the usability of the developed VR scenarios. The total SUS score of 74.25, which falls in the above-average range, confirmed that users considered the VR scenarios effective and easy to use. Table 8 presents the SUS score with respect to the independent variables. As with the SSQ, the independent variables did not significantly affect SUS scores at the 0.05 significance level. Interestingly, neither prior experience in VR nor the mitigation technique affected the usability of the developed VR scenarios.

Table 7.

System usability scale results.

Table 8.

ANOVA results of SUS scores.

4.2.3. User Presence Experience Assessment

User presence can be defined as the sense of ‘being there’ in a computer-simulated environment. Similarly, Witmer and Singer [107] (p. 225) described presence as “experiencing the computer-generated environment rather than the actual physical locale.” The PQ consists of 22 six-point Likert scale questions that capture user agreement across five subscales: involvement, immersion, visual fidelity, interface quality, and sound. The first 19 questions, which exclude the sound items, were used to calculate the total PQ score, which ranged from 0 to 114.

Table 9 shows the average score for the five PQ subscales; all subscales had “above average” (>4) user agreement except interface quality. The below-average score for interface quality emphasized the need, in future VR modules, for better visual display quality that does not interfere with performing tasks. The average PQ score for the 19 items was 78.7, indicating that the user experience of the developed VR scenarios was in an acceptable range. The impact of the independent variables on PQ is shown in Table 10. The ANOVA results showed that only nationality and age had a significant impact on the PQ score at the 0.05 significance level. The post hoc Scheffe test revealed that international students perceived a higher user experience than domestic students. The post hoc test also showed that students born in 1986–1990 differed significantly from students born in 1996–2000 at the 0.05 significance level. The mean PQ values suggested that older participants perceived a greater user experience than younger students in the VR simulation.

Table 9.

Presence questionnaire results.

Table 10.

ANOVA results of PQ scores.

4.3. NASA Task Load Index (NASA TLX) Assessment

NASA TLX is a multi-dimensional scale that assesses a user’s perceived workload for a given task [108]. Six subscales were used to calculate the overall workload estimates regarding different aspects of user experiences: mental demand, physical demand, temporal demand, performance, effort, and frustration. These subscales were designed to represent the user workload after a thorough analysis conducted on different types of workers performing various activities [110]. The overall score varied between 0–100, and higher scores indicated greater perceived workload for the given task.
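The source reports weighted subscale scores but does not give the computation. The sketch below assumes the standard weighted NASA TLX procedure, in which each subscale is rated from 0 to 100, each subscale weight is the number of times it was chosen across the 15 pairwise comparisons (so the weights sum to 15), and the overall score is the weighted sum divided by 15. The names and the example values are hypothetical.

```python
from typing import Mapping

SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def weighted_tlx(ratings: Mapping[str, float],
                 weights: Mapping[str, int]) -> float:
    """Return the overall weighted NASA TLX score on a 0-100 scale."""
    if sum(weights[s] for s in SUBSCALES) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    for s in SUBSCALES:
        if not 0 <= ratings[s] <= 100:
            raise ValueError(f"rating for {s} must be between 0 and 100")
    # Weighted sum of the subscale ratings, normalised by the 15 comparisons.
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15


if __name__ == "__main__":
    # Hypothetical participant (not the study's data).
    ratings = {"mental_demand": 60, "physical_demand": 10, "temporal_demand": 30,
               "performance": 70, "effort": 40, "frustration": 20}
    weights = {"mental_demand": 3, "physical_demand": 0, "temporal_demand": 2,
               "performance": 5, "effort": 4, "frustration": 1}
    print(f"overall workload: {weighted_tlx(ratings, weights):.1f}")
```

Each product `ratings[s] * weights[s] / 15` is that subscale's contribution to the overall index, which is the kind of quantity a per-subscale weighted-score chart would show.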

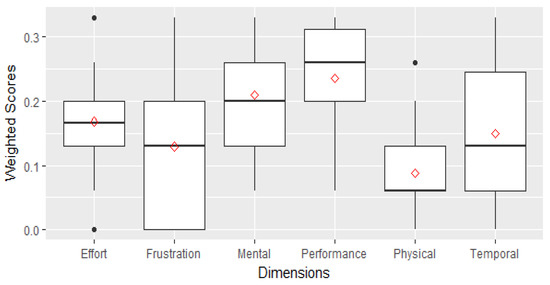

The mean and median of the overall NASA TLX scores suggested that participants required approximately 36% work effort to perform the activities in the developed VR scenarios. The interquartile range showed that the middle 50% of users’ overall scores fell between 26% and 47%. Figure 11 displays the weighted scores for the six subscales based on user responses. The performance dimension made the highest contribution to the overall index scores. Mental demand was the second-highest contributor, and physical demand made the lowest contribution to the perceived workload of performing the tasks in the VR scenarios.

Figure 11.

Weighted NASA TLX aspects of performance.

For further investigation, the impact of the independent variables on the overall index score was analyzed. The normality of the distribution of the overall index score was tested with the Shapiro–Wilk normality test, and the p-value indicated that the scores did not deviate significantly from a normal distribution (W = 0.9650, p = 0.4137). A one-way ANOVA was used to evaluate the differences in the overall scores across the levels of each independent variable. As shown in Table 11 below, the results indicated that none of the independent variables significantly impacted the overall index score.

Table 11.

ANOVA results of overall index score.

5. Conclusions and Future Directions

The main focus of this study was to assess a student’s systems thinking skills through developed VR scenarios. Researchers used a valid systems thinking skills tool developed by Jaradat [15] to construct VR scenarios representing complex, real-world problems. A VR retail store combined with realistic scenarios was used to evaluate students on the level of complexity dimension using six binary questions. Two scoring sheets were prepared to record a student’s high-systematic approach, and the results showed that the way students reacted to the VR scenarios was not significantly different from their responses obtained from the traditional systems skills instrument. This confirmed the construct validity of the ST skills instrument and the reliability of using VR scenarios to measure students’ high systematic skills. A student’s prior knowledge of VR significantly affected his/her systematic skills: students with above-average prior VR exposure showed higher systematic skills in the study.

The study showed that gender does not affect the students’ systems thinking skills. This result is consistent with other studies in the literature. For example, Stirgus et al. [111] showed that both male and female engineering students demonstrated a similar level of systems thinking skills in the domain of complex system problems. Cox et al. [112] also showed that gender had no effect on students’ systems thinking ability in a study of the systems thinking level of students in the last or penultimate year of secondary school (aged 16–18 years) in Belgium. The literature shows that the level of education is considered a significant factor in assessing individuals’ systems thinking skills. For example, Hossain et al. [113] and Nagahi et al. [114] explained that individuals with higher education backgrounds tend to be more holistic thinkers. The findings of this study are consistent with previous results with regard to the individuals’ simple average ST scores.

The efficacy results revealed that the developed VR scenarios are an efficient mechanism that meets user expectations. The post-simulation sickness results indicated that the VR scenarios are in an acceptable range for users, with no immediate modifications needed. The simulation sickness associated with the developed VR scenarios did not differ significantly from participants’ previous simulation-related experiences. The regular Unity field of view showed lower simulation sickness symptoms than the two new mitigation techniques (increasing reticle and peripheral view) employed in the VR simulation. The participants indicated ‘above-average’ user agreement for the usability of the VR scenarios, which also implies that the VR scenarios are user friendly and easy to use. Furthermore, users experienced the virtual environment with no technical interference apart from lower interface quality. The PQ results also showed that age positively influenced the user experience in the study.

5.1. Managerial Implications

Knowing an individual’s systems thinking skills is vital for many organizational personnel, including recruitment managers and decision makers. A thorough review of the literature showed that practitioners use limited tools and techniques to measure an individual’s systems thinking skills for making decisions in their organizations. In this study, researchers used an advanced, multi-dimensional tool to capture users’ systems thinking characteristics in a complex supply chain store. The developed VR scenarios reflect the effective usage of advanced technologies to measure individual systems thinking skills. Unlike traditional paper-based evaluations, VR technology provides an opportunity for users to interact with scenario-based, real-world, complex problems and respond accordingly. Some of the potential research implications can be categorized as follows.

- There are many related theories, concepts, perspectives, and tools that have been developed in the systems thinking field. Still, this study serves as the first-attempt research that bridges the ST theories and latest technology to measure an individual’s ST skills by simulating real-world settings.

- This research used VR to replicate the real-world, complex system scenarios of a large retail supply chain; however, researchers/practitioners can apply the same concept to other areas such as military, healthcare, and construction by developing and validating different scenarios relevant to their field.

- The findings of this research confirmed that modern technology is a safe and effective means of measuring an individual’s level of ST skills. These VR scenarios work as a recommender system that can assist practitioners/enterprises in evaluating individuals’/employees’ ST skills.

5.2. Limitations and Directions for Future Studies

The current study measured high systematic skills using only the Level of Complexity dimension in the ST skills instrument. To provide a complete assessment of an individual’s ST skills, all seven dimensions will be modeled into a VR simulation in future studies. The user responses toward the efficacy measures heightened the researchers’ interest and attention toward new features in future studies. In addition, new mitigation techniques will be integrated into future VR modules to investigate ways of lowering simulation sickness. To provide better interface quality for users, advanced graphics will be included in future studies. Moreover, new evaluations will be used to assess the efficiency and effectiveness of the VR modules, along with alternative simulation technologies. Alternative multi-paradigm modeling tools and cross-platform game engines (Simio or Unreal Engine) can be used to evaluate user satisfaction in future studies. For this study, researchers used Oculus Rift S to connect users with the modeling software. Other VR devices such as HTC Vive and PlayStation VR can be compared with Oculus to explore possibilities for a better user experience. Since the sample size of this study is small, more studies are needed to collect additional data and draw firmer conclusions about the proposed methodology.

Author Contributions

Conceptualization, V.L.D., R.J. and M.A.H.; methodology, V.L.D., R.J. and M.A.H.; software, V.L.D., S.K., M.A.H., P.J., E.S.W. and S.E.A.; validation, V.L.D., R.J., M.A.H. and E.S.W.; formal analysis, V.L.D.; investigation, V.L.D., S.K., R.J., P.J., E.S.W. and N.U.I.H.; resources, R.J. and M.A.H.; data curation, V.L.D., R.J. and M.A.H.; writing—original draft preparation, V.L.D., S.K., S.E.A., N.U.I.H. and F.E.; writing—review and editing, R.J. and M.A.H.; visualization, V.L.D., S.K., P.J., S.E.A., N.U.I.H. and F.E.; supervision, R.J. and M.A.H.; project administration, R.J. and M.A.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of Mississippi State University (protocol code: IRB-18-379 and date of approval: 14 January 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Karam, S.; Nagahi, M.; Dayarathna, V.L.; Ma, J.; Jaradat, R.; Hamilton, M. Integrating Systems Thinking Skills with Multi-Criteria Decision-Making Technology to Recruit Employee Candidates. Expert Syst. Appl. 2020, 160, 113585.

- Małachowski, B.; Korytkowski, P. Competence-based performance model of multi-skilled workers. Comput. Ind. Eng. 2016, 91, 165–177. [Google Scholar] [CrossRef]

- Clancy, T.R.; Effken, J.A.; Pesut, D. Applications of complex systems theory in nursing education, research, and practice. Nurs. Outlook 2008, 56, 248–256. [Google Scholar] [CrossRef]

- Jaradat, R.; Stirgus, E.; Goerger, S.R.; Buchanan, R.K.; Ibne Hossain, N.U.; Ma, J.; Burch, R. Assessment of Workforce Systems Preferences/Skills Based on Employment Domain. EMJ Eng. Manag. J. 2019, 32, 1–13. [Google Scholar] [CrossRef]

- Jaradat, R.M.; Keating, C.B.; Bradley, J.M. Individual capacity and organizational competency for systems thinking. IEEE Syst. J. 2017, 12, 1203–1210. [Google Scholar] [CrossRef]

- Maani, K.E.; Maharaj, V. Links between systems thinking and complex decision making. Syst. Dyn. Rev. J. Syst. Dyn. Soc. 2004, 20, 21–48. [Google Scholar] [CrossRef]

- Sterman, J. System Dynamics: Systems thinking and modeling for a complex world. In Proceedings of the ESD Internal Symposium, MIT, Cambridge, MA, USA, 29–30 May 2002. [Google Scholar]

- Yoon, S.A. An evolutionary approach to harnessing complex systems thinking in the science and technology classroom. Int. J. Sci. Educ. 2008, 30, 1–32. [Google Scholar] [CrossRef]

- Khalifehzadeh, S.; Fakhrzad, M.B. A Modified Firefly Algorithm for optimizing a multi stage supply chain network with stochastic demand and fuzzy production capacity. Comput. Ind. Eng. 2019, 133, 42–56. [Google Scholar] [CrossRef]

- Mahnam, M.; Yadollahpour, M.R.; Famil-Dardashti, V.; Hejazi, S.R. Supply chain modeling in uncertain environment with bi-objective approach. Comput. Ind. Eng. 2009, 56, 1535–1544. [Google Scholar] [CrossRef]

- Boardman, J.; Sauser, B. Systems Thinking: Coping with 21st Century Problems; CRC Press: Boca Raton, FL, USA, 2008. [Google Scholar]

- Cook, S.C.; Sproles, N. Synoptic views of defense Systems development. In Proceedings of the SETE 2000, Brisbane, Australia, 15–17 November 2000. [Google Scholar]

- Dayarathna, V.L.; Mismesh, H.; Nagahisarchoghaei, M.; Alhumoud, A. A discrete event simulation (des) based approach to maximize the patient throughput in outpatient clinic. Eng. Sci. Tech. J. 2019, 1, 1–11. [Google Scholar] [CrossRef]

- Hossain, N.U.I. A synthesis of definitions for systems engineering. In Proceedings of the International Annual Conference of the American Society for Engineering Management, Coeur d’Alene, ID, USA, 17–20 October 2018; American Society for Engineering Management (ASEM): Huntsville, AL, USA, 2018; pp. 1–10. [Google Scholar]

- Jaradat, R.M. Complex system governance requires systems thinking-how to find systems thinkers. Int. J. Syst. Syst. Eng. 2015, 6, 53–70. [Google Scholar] [CrossRef]

- Keating, C.B. Emergence in system of systems. In System of Systems Engineering-Innovation for the 21st Century; Jamshidi, M., Ed.; John Wiley & Sons: New York, NY, USA, 2008; pp. 169–190. [Google Scholar]

- Lane, D.C.; Jackson, M.C. Only connect! An annotated bibliography reflecting the breadth and diversity of systems thinking. Syst. Res. 1995, 12, 217–228. [Google Scholar] [CrossRef]

- Maier, M.W. Architecting principles for systems-of-systems. Syst. Eng. 1998, 1, 267–284. [Google Scholar] [CrossRef]

- Checkland, P. Systems thinking. In Rethinking Management Information Systems; Oxford University Press: Oxford, UK, 1999; pp. 45–56. [Google Scholar]

- Cabrera, D.; Cabrera, L.; Cabrera, E. A Literature Review of the Universal and Atomic Elements of Complex Cognition. In The Routledge Handbook of Systems Thinking; Cabrera, D., Cabrera, L., Midgley, G., Eds.; Routledge: London, UK, 2021; Volume 20, pp. 1–55. [Google Scholar]

- Cabrera, D.; Cabrera, L. Systems Thinking Made Simple: New Hope for Solving Wicked Problems, 1st ed.; Odyssean Press: Ithaca, NY, USA, 2015. [Google Scholar]

- Cabrera, D.; Cabrera, L.; Midgley, G. The Four Waves of Systems Thinking. In The Routledge Handbook of Systems Thinking; Cabrera, D., Cabrera, L., Midgley, G., Eds.; Routledge: London, UK, 2021; pp. 1–44. [Google Scholar]

- The Home of the Beergame. Available online: https://beergame.org/ (accessed on 21 May 2020).

- Learning Technologies. Available online: http://responsive.net/littlefield.html (accessed on 21 May 2020).

- Capsim. Available online: https://www.capsim.com/ (accessed on 25 May 2020).

- Jacobson, M.J. Problem solving, cognition, and complex systems: Differences between experts and novices. Complexity 2001, 6, 41–49. [Google Scholar] [CrossRef]

- Bruni, R.; Carrubbo, L.; Cavacece, Y.; Sarno, D. An Overview of the Contribution of Systems Thinking Within Management and Marketing. In Social Dynamics in a Systems Perspective; Springer: Berlin/Heidelberg, Germany, 2018; pp. 241–259. [Google Scholar]

- Loosemore, M.; Cheung, E. Implementing systems thinking to manage risk in public private partnership projects. Int. J. Proj. Manag. 2015, 33, 1325–1334. [Google Scholar] [CrossRef]

- Hossain, N.U.I.; Dayarathna, V.L.; Nagahi, M.; Jaradat, R. Systems Thinking: A Review and Bibliometric Analysis. Systems 2020, 8, 23. [Google Scholar] [CrossRef]

- Cabrera, D.; Cabrera, L. Complexity and systems thinking models in education: Applications for leaders. In Learning, Design, and Technology: An International Compendium of Theory, Research, Practice, and Policy; Spector, M.J., Lockee, B.B., Childress, M.D., Eds.; Springer: Cham, Switzerland, 2019; pp. 1–29. [Google Scholar]

- Tamim, S.R. Analyzing the Complexities of Online Education Systems: A Systems Thinking Perspective. TechTrends 2020, 64, 740–750. [Google Scholar] [CrossRef]

- Gero, A.; Zach, E. High school programme in electro-optics: A case study on interdisciplinary learning and systems thinking. Int. J. Eng. Edu. 2014, 30, 1190–1199. [Google Scholar]

- Carayon, P. Human factors in patient safety as an innovation. Appl. Ergon. 2010, 41, 657–665. [Google Scholar] [CrossRef]

- Gero, A.; Danino, O. High-school course on engineering design: Enhancement of students’ motivation and development of systems thinking skills. Int. J. Eng. Educ. 2016, 32, 100–110. [Google Scholar]

- Wilson, L.; Gahan, M.; Lennard, C.; Robertson, J. A systems approach to biometrics in the military domain. J. Forensic Sci. 2018, 63, 1858–1863. [Google Scholar] [CrossRef]

- Stanton, N.A.; Harvey, C.; Allison, C.K. Systems Theoretic Accident Model and Process (STAMP) applied to a Royal Navy Hawk jet missile simulation exercise. Saf. Sci. 2019, 113, 461–471. [Google Scholar] [CrossRef]

- Bawden, R.J. Systems thinking and practice in agriculture. J. Dairy Sci. 1991, 74, 2362–2373. [Google Scholar] [CrossRef]

- Jagustović, R.; Zougmoré, R.B.; Kessler, A.; Ritsema, C.J.; Keesstra, S.; Reynolds, M. Contribution of systems thinking and complex adaptive system attributes to sustainable food production: Example from a climate-smart village. Agric. Syst. 2019, 171, 65–75. [Google Scholar] [CrossRef]

- Mitchell, J.; Harben, R.; Sposito, G.; Shrestha, A.; Munk, D.; Miyao, G.; Southard, R.; Ferris, H.; Horwath, W.R.; Kueneman, E.; et al. Conservation agriculture: Systems thinking for sustainable farming. Calif. Agric. 2016, 70, 53–56.

- Wilson, T.M.; Stewart, C.; Sword-Daniels, V.; Leonard, G.S.; Johnston, D.M.; Cole, J.W.; Wardman, J.; Wilson, G.; Barnard, S.T. Volcanic ash impacts on critical infrastructure. Phys. Chem. Earth 2012, 45, 5–23.

- Siri, J.G.; Newell, B.; Proust, K.; Capon, A. Urbanization, extreme events, and health: The case for systems approaches in mitigation, management, and response. Asia Pac. J. Public Health 2016, 28, 15S–27S.

- Leischow, S.J.; Best, A.; Trochim, W.M.; Clark, P.I.; Gallagher, R.S.; Marcus, S.E.; Matthews, E. Systems thinking to improve the public’s health. Am. J. Prev. Med. 2008, 35, S196–S203.

- Trochim, W.M.; Cabrera, D.A.; Milstein, B.; Gallagher, R.S.; Leischow, S.J. Practical challenges of systems thinking and modeling in public health. Am. J. Public Health 2006, 96, 538–546.

- Lowe, D.; Oliver, P.; Midgley, G.; Yearworth, M. Evaluating how system health assessment can trigger anticipatory action for resilience. In Disciplinary Convergence in Systems Engineering Research; Madni, A.M., Boehm, B., Ghanem, R., Erwin, D., Wheaton, M.J., Eds.; Springer: Cham, Switzerland, 2018; pp. 765–776.

- Senge, P.M. The fifth discipline, the art and practice of the learning organization. Perform. Instr. 1991, 30, 37.

- Senge, P.M. The Art and Practice of the Learning Organization; Doubleday: New York, NY, USA, 1990; p. 7.

- Cavallo, A.; Ireland, V. Preparing for complex interdependent risks: A system of systems approach to building disaster resilience. Int. J. Disaster Risk Reduct. 2014, 9, 181–193.

- Leveson, N. A systems approach to risk management through leading safety indicators. Reliab. Eng. Syst. Saf. 2015, 136, 17–34.

- Salmon, P.M.; McClure, R.; Stanton, N.A. Road transport in drift? Applying contemporary systems thinking to road safety. Saf. Sci. 2012, 50, 1829–1838.

- Brimble, M.; Jones, A. Using systems thinking in patient safety: A case study on medicine management. Nurs. Manag. 2017, 24, 28–33.

- Jun, G.T.; Canham, A.; Altuna-Palacios, A.; Ward, J.R.; Bhamra, R.; Rogers, S.; Dutt, A.; Shah, P. A participatory systems approach to design for safer integrated medicine management. Ergonomics 2018, 61, 48–68.

- Rusoja, E.; Haynie, D.; Sievers, J.; Mustafee, N.; Nelson, F.; Reynolds, M.; Sarriot, E.; Swanson, R.C.; Williams, B. Thinking about complexity in health: A systematic review of the key systems thinking and complexity ideas in health. J. Eval. Clin. Pract. 2018, 24, 600–606.

- Ahiaga-Dagbui, D.D.; Love, P.E.; Smith, S.D.; Ackermann, F. Toward a systemic view to cost overrun causation in infrastructure projects: A review and implications for research. Proj. Manag. J. 2017, 48, 88–98.

- Cavaleri, S.; Firestone, J.; Reed, F. Managing project problem-solving patterns. Int. J. Manag. Proj. Bus. 2012, 5, 125–145.

- Yap, J.B.H.; Skitmore, M.; Gray, J.; Shavarebi, K. Systemic view to understanding design change causation and exploitation of communications and knowledge. Proj. Manag. J. 2019, 50, 288–305.

- Bashan, A.; Kordova, S. Globalization, quality and systems thinking: Integrating global quality management and a systems view. Heliyon 2021, 7, e06161.

- Conti, T. Systems thinking in quality management. TQM J. 2010, 22, 352–368.

- Dorani, K.; Mortazavi, A.; Dehdarian, A.; Mahmoudi, H.; Khandan, M.; Mashayekhi, A.N. Developing question sets to assess systems thinking skills. In Proceedings of the 33rd International Conference of the System Dynamics Society, Cambridge, MA, USA, 19–23 July 2015.

- Assaraf, O.B.Z.; Orion, N. Development of system thinking skills in the context of earth system education. J. Res. Sci. Teach. Off. J. Natl. Assoc. Res. Sci. Teach. 2005, 42, 518–560.

- Stave, K.; Hopper, M. What constitutes systems thinking? A proposed taxonomy. In Proceedings of the 25th International Conference of the System Dynamics Society, Boston, MA, USA, 29 July–3 August 2007; pp. 1–24.

- Richmond, B. The “Thinking” in Systems Thinking: Seven Essential Skills; Pegasus Communications: Waltham, MA, USA, 2000.

- Cabrera, D.; Colosi, L. Distinctions, systems, relationships, and perspectives (DSRP): A theory of thinking and of things. Eval. Program Plann. 2008, 31, 311–316.

- Cabrera, D.; Cabrera, L. Developing Personal Mastery of Systems Thinking. In The Routledge Handbook of Systems Thinking; Cabrera, D., Cabrera, L., Midgley, G., Eds.; Routledge: London, UK, 2021; pp. 1–40.

- Cabrera, D.; Cabrera, L.; Powers, E. A unifying theory of systems thinking with psychosocial applications. Syst. Res. Behav. Sci. 2015, 32, 534–545.

- Cabrera, L.; Sokolow, J.; Cabrera, D. Developing and Validating a Measurement of Systems Thinking: The Systems Thinking and Metacognitive Inventory (STMI). In The Routledge Handbook of Systems Thinking; Cabrera, D., Cabrera, L., Midgley, G., Eds.; Routledge: London, UK, 2021; pp. 1–42.

- Plate, R. Assessing individuals’ understanding of nonlinear causal structures in complex systems. Syst. Dyn. Rev. 2010, 26, 19–33.

- Moore, S.M.; Komton, V.; Adegbite-Adeniyi, C.; Dolansky, M.A.; Hardin, H.K.; Borawski, E.A. Development of the systems thinking scale for adolescent behavior change. West. J. Nurs. Res. 2018, 40, 375–387.

- Sweeney, L.B.; Sterman, J.D. Bathtub dynamics: Initial results of a systems thinking inventory. Syst. Dyn. Rev. 2000, 16, 249–286.

- Sommer, C.; Lücken, M. System competence: Are elementary students able to deal with a biological system? Nord. Stud. Sci. Educ. 2010, 6, 125–143.

- Molnar, A. Computers in education: A brief history. T.H.E. Journal 1997, 24, 63–68.

- Sutherland, I.E. The ultimate display. In Information Processing 1965, Proceedings of the IFIP Congress, New York, NY, USA, 24–29 May 1965; Macmillan and Co.: London, UK, 1965; pp. 506–508.

- Ma, J.; Jaradat, R.; Ashour, O.; Hamilton, M.; Jones, P.; Dayarathna, V.L. Efficacy Investigation of Virtual Reality Teaching Module in Manufacturing System Design Course. J. Mech. Des. 2019, 141, 1–13.

- Bricken, M.; Byrne, C.M. Summer students in virtual reality: A pilot study on educational applications of virtual reality technology. Virtual Real. 1993, 199–217.

- Pantelidis, V.S. Virtual reality in the classroom. Educ. Technol. 1993, 33, 23–27.

- Crosier, J.K.; Cobb, S.V.; Wilson, J.R. Experimental comparison of virtual reality with traditional teaching methods for teaching radioactivity. Educ. Inform. Tech. 2000, 5, 329–343.

- Mikropoulos, T.A. Presence: A unique characteristic in educational virtual environments. Virtual Real. 2006, 10, 197–206.

- Dickey, M.D. Brave new (interactive) worlds: A review of the design affordances and constraints of two 3D virtual worlds as interactive learning environments. Interact. Learn. Environ. 2005, 13, 121–137.

- Dawley, L.; Dede, C. Situated Learning in Virtual Worlds and Immersive Simulations. In Handbook of Research on Educational Communications and Technology; Springer: New York, NY, USA, 2014; pp. 723–734.

- Hamilton, M.; Jaradat, R.; Jones, P.; Wall, E.; Dayarathna, V.; Ray, D.; Hsu, G. Immersive Virtual Training Environment for Teaching Single and Multi-Queuing Theory: Industrial Engineering Queuing Theory Concepts. In Proceedings of the ASEE Annual Conference and Exposition, Salt Lake City, UT, USA, 24–27 June 2018; pp. 24–27.

- Byrne, C.; Furness, T. Virtual Reality and education. IFIP Trans. A Comput. Sci. Tech. 1994, 58, 181–189.

- Furness, T.A.; Winn, W. The Impact of Three-Dimensional Immersive Virtual Environments on Modern Pedagogy. Available online: http://vcell.ndsu.nodak.edu/~ganesh/seminar/1997_The%20Impact%20of%20Three%20Dimensional%20Immersive%20Virtual%20Environments%20on%20Modern%20Pedagogy.htm (accessed on 25 May 2020).

- McMahan, R.P.; Bowman, D.A.; Zielinski, D.J.; Brady, R.B. Evaluating display fidelity and interaction fidelity in a virtual reality game. IEEE Trans. Vis. Comput. Graph. 2012, 18, 626–633.

- Opdyke, D.; Williford, J.S.; North, M. Effectiveness of computer-generated (virtual reality) graded exposure in the treatment of acrophobia. Am. J. Psychiatry 1995, 152, 626–628.

- Ende, A.; Zopf, Y.; Konturek, P.; Naegel, A.; Hahn, E.G.; Matthes, K.; Maiss, J. Strategies for training in diagnostic upper endoscopy: A prospective, randomized trial. Gastrointest. Endosc. 2012, 75, 254–260.

- Triantafyllou, K.; Lazaridis, L.D.; Dimitriadis, G.D. Virtual reality simulators for gastrointestinal endoscopy training. World J. Gastrointest. Endosc. 2014, 6, 6–12.

- Durlach, N.I.; Mavor, A.S. Virtual Reality Scientific and Technological Challenges; National Academy Press: Washington, DC, USA, 1995.

- Dolansky, M.A.; Moore, S.M. Quality and safety education for nurses (QSEN): The key is systems thinking. Online J. Issues Nurs. 2013, 18, 1–12.

- Hooper, M.; Stave, K.A. Assessing the Effectiveness of Systems Thinking Interventions in the Classroom. In Proceedings of the 26th International Conference of the System Dynamics Society, Athens, Greece, 20–24 July 2008.

- Plate, R. Attracting institutional support through better assessment of systems thinking. Creat. Learn. Exch. Newsl. 2008, 17, 1–8.

- Camelia, F.; Ferris, T.L. Validation studies of a questionnaire developed to measure students’ engagement with systems thinking. IEEE Trans. Syst. Man Cybern. Syst. 2016, 48, 574–585.

- Frank, M. Characteristics of engineering systems thinking: A 3D approach for curriculum content. IEEE Trans. Syst. Man Cybern. Part C 2002, 32, 203–214.

- Frank, M.; Kasser, J. Assessing the Capacity for Engineering Systems Thinking (CEST) and Other Competencies of Systems Engineers. In Systems Engineering: Practice and Theory; Cogan, P.B., Ed.; InTech: Rijeka, Croatia, 2012.

- Deterding, S.; Dixon, D.; Khaled, R.; Nacke, L. From game design elements to gamefulness: Defining gamification. In Proceedings of the 15th International Academic MindTrek Conference: Envisioning Future Media Environments, Tampere, Finland, 28–30 September 2011; pp. 9–15.

- Juul, J. The game, the player, the world: Looking for a heart of gameness. In Proceedings of the DiGRA International Conference, Utrecht, The Netherlands, 4–6 November 2003; pp. 30–45.

- Bogost, I. Persuasive Games; MIT Press: Cambridge, MA, USA, 2007; Volume 5.

- McGonigal, J. Reality Is Broken: Why Games Make Us Better and How They Can Change the World; Penguin Books: New York, NY, USA, 2011.

- Hamari, J.; Lehdonvirta, V. Game design as marketing: How game mechanics create demand for virtual goods. Int. J. Bus. Sci. Appl. Manag. 2010, 5, 14–29.

- Hamari, J.; Järvinen, A. Building customer relationship through game mechanics in social games. In Business, Technological, and Social Dimensions of Computer Games: Multidisciplinary Developments; IGI Global: Hershey, PA, USA, 2011; pp. 348–365.

- Stenros, J.; Sotamaa, O. Commoditization of Helping Players Play: Rise of the Service Paradigm. In Proceedings of the DiGRA: Breaking New Ground: Innovation in Games, Play, Practice and Theory, Brunel University, London, UK, 1–4 September 2009.

- Wood, S.C. Online games to teach operations. INFORMS Trans. Educ. 2007, 8, 3–9.

- Grohs, J.R.; Kirk, G.R.; Soledad, M.M.; Knight, D.B. Assessing systems thinking: A tool to measure complex reasoning through ill-structured problems. Think. Ski. Creat. 2018, 28, 110–130.

- Dayarathna, V.L.; Karam, S.; Jaradat, R.; Hamilton, M.; Nagahi, M.; Joshi, S.; Ma, J.; Driouche, B. Assessment of the Efficacy and Effectiveness of Virtual Reality Teaching Module: A Gender-based Comparison. Int. J. Eng. Educ. 2020, 36, 1938–1955.

- Brooks, B.M.; Rose, F.D.; Attree, E.A.; Elliot-Square, A. An evaluation of the efficacy of training people with learning disabilities in a virtual environment. Disabil. Rehabil. 2002, 24, 622–626.

- Rizzo, A.A.; Buckwalter, J.G. Virtual reality and cognitive assessment and rehabilitation: The state of the art. In Virtual Reality in Neuro-Psycho-Physiology: Cognitive, Clinical and Methodological Issues in Assessment and Rehabilitation; Riva, G., Ed.; IOS Press: Amsterdam, The Netherlands, 1997; Volume 44, pp. 123–146.

- Kennedy, R.S.; Lane, N.E.; Berbaum, K.S.; Lilienthal, M.G. Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. Int. J. Aviat. Psychol. 1993, 3, 203–220.

- Brooke, J. SUS: A quick and dirty usability scale. Usability Eval. Ind. 1996, 189, 4–7.

- Witmer, B.G.; Singer, M.J. Measuring presence in virtual environments: A presence questionnaire. Presence 1998, 7, 225–240.

- Hart, S.G. NASA Task Load Index (TLX). Volume 1.0; Paper and Pencil Package; NASA: Washington, DC, USA, 1986.