1. Introduction

Addressing complex problems in industry, government, and academic sectors often requires coordinating the expertise of multiple human and, more recently, non-human entities [1,2]. Critical aspects of team-level event response and problem solving include the support of distributed expertise through “information alignment” [3], which facilitates efficient and effective coordination. While human factors and social psychology research on team-oriented work offers a wide array of frameworks, methodologies, and applications [4,5], studying information alignment in teams remains a challenge due to constraints around time and resources.

Simulation modeling offers a potential avenue for exploring taskwork and information alignment within teams. More specifically, simulation methods may allow researchers to observe factor interactions and generate new theories or research questions from model outputs. Identified factors may be operationalized and incorporated into mathematical representations of teams performing taskwork. This paper, adapted from [6], investigates the general concept of using simulation to help define and operationalize factors that affect team coordination during task performance. Future research and model development may be able to accommodate experiments and scenario testing of autonomous agent characteristics within a particular (or hypothetical) human team.

3. Materials and Methods

Simulation-based modeling was the main method employed for this research, which was completed to establish feasibility of using this particular method within team research applications. Past research in communication theory has suggested such software simulation techniques, focusing on the development of “transactive memory” in task performance [7] and evolutions of “knowledge networks” [9]; more recent work has begun to address the role of simulation modeling in disaster response planning [37]. The model was constructed in Java by operationalizing theories and constructs from other domains, including group dynamics, management, and psychology. The general process of creating the model included: defining the team and factors of interest, defining the “agent” interactions based on theories, defining the activities or tasks to be performed in the form of equations, choosing a modeling method and language, constructing the model, verifying model performance, and internal validation.

3.1. Defining the Team and Factors of Interest

Ghosh and Caldwell [38] explored the idea of using “player stats” to describe real teams, with the stats representing characteristics of each team member. Onken and Caldwell [39] furthered this idea by constructing a model in Java with abstracted characteristics and measures, and by modeling with real task performance data from NASA Mission Control. The study conducted as part of this paper aimed to operationalize specific characteristics from existing group dynamics and sociotechnical coordination theories, while remaining agnostic of any particular team or environment. Instead, the focus was to connect relevant team factors, such as subject matter expertise, cognitive styles, and task types, across different team combinations (and differing team sizes) within a model, in such a way that treatments could be applied and outcomes easily observed. Teams were artificially constructed using general statistics of the respective factor distributions from the literature. One factor did not have general statistics available: “expertise”, especially when defined in terms of dimensions [40], is not easily measured, but theoretically could be evaluated within an organization. Thus, the authors chose a triangular distribution to represent expertise across an organization, and applied it to a pool of potential team members across a range of dimensions of expertise.
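As an illustration of how such a pool might be populated, the following Java sketch draws expertise values from a triangular distribution by inverse-transform sampling. The class and method names (`ExpertisePool`, `sampleTriangular`, `buildPool`) and any numeric bounds passed to them are hypothetical; the distribution parameters used in the study are not stated in this section.

```java
import java.util.Random;

/** Illustrative sketch only; names and parameter values are assumptions, not the study's code. */
final class ExpertisePool {
    private ExpertisePool() {}

    /** Draws one value from a triangular distribution on [min, max] with the given mode. */
    static double sampleTriangular(Random rng, double min, double mode, double max) {
        if (!(min < max) || mode < min || mode > max) {
            throw new IllegalArgumentException("require min <= mode <= max and min < max");
        }
        double u = rng.nextDouble();              // uniform on [0, 1)
        double cut = (mode - min) / (max - min);  // CDF value at the mode
        if (u < cut) {
            return min + Math.sqrt(u * (max - min) * (mode - min));
        }
        return max - Math.sqrt((1.0 - u) * (max - min) * (max - mode));
    }

    /** Builds a pool with one row per potential team member and one column per expertise dimension. */
    static double[][] buildPool(Random rng, int members, int dimensions,
                                double min, double mode, double max) {
        double[][] pool = new double[members][dimensions];
        for (double[] member : pool) {
            for (int d = 0; d < dimensions; d++) {
                member[d] = sampleTriangular(rng, min, mode, max);
            }
        }
        return pool;
    }
}
```

A call such as `ExpertisePool.buildPool(new Random(42), 200, 3, 0.0, 5.0, 10.0)` would produce a 200-member pool with three expertise dimensions; teams could then be drawn at random from its rows.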

3.2. Defining Agent Interactions

Social interactions can be defined or operationalized using existing theories, constructs, or data. For the purposes of this feasibility study, agents in the examples presented are human agents represented with characteristics of social and organizational styles of information processing and sharing. However, the same approach can also be applied to non-human agents interacting with humans, such as robotic assistants, virtual assistants, or simply program interfaces. Generic examples of these will be described below.

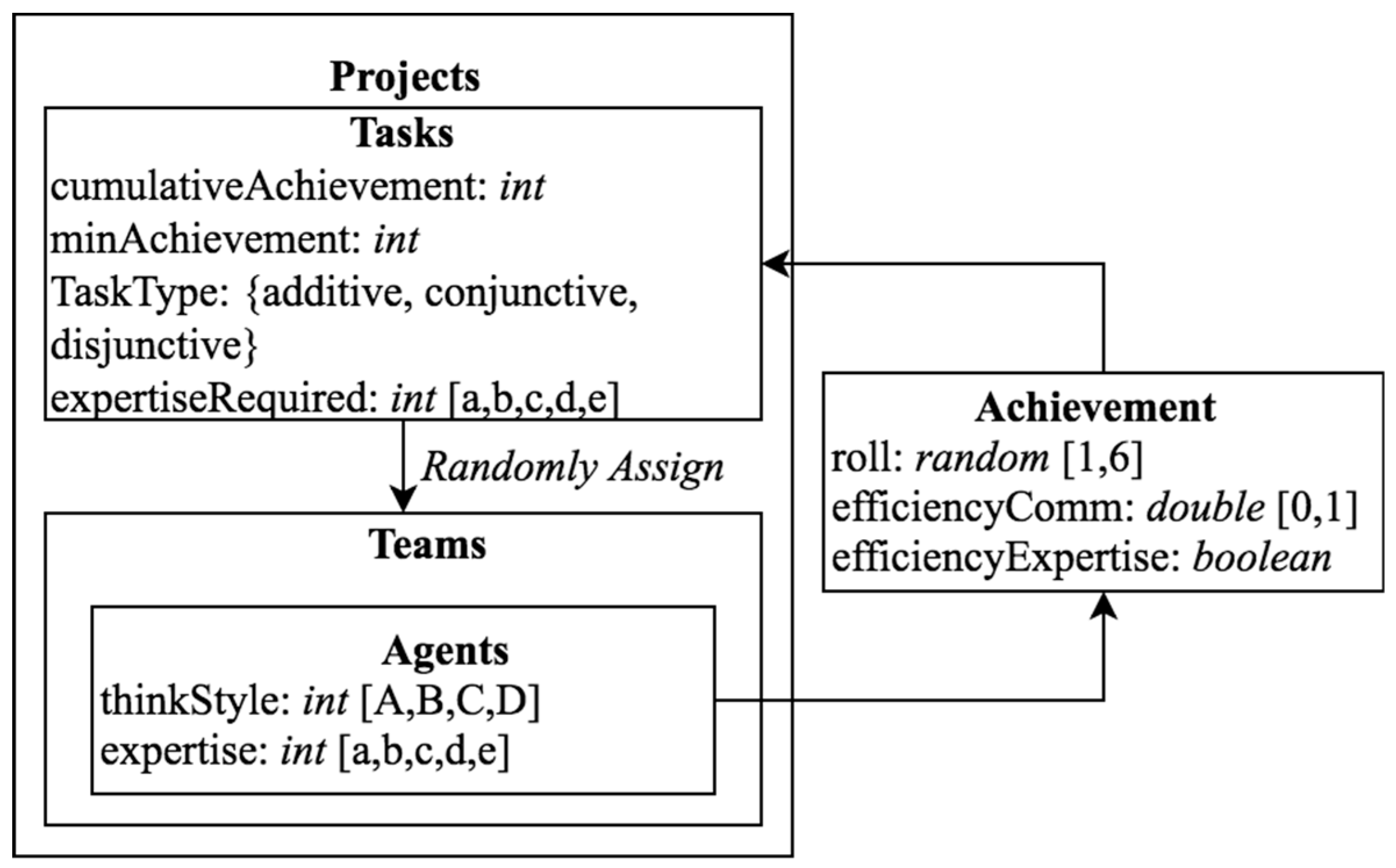

Figure 1 depicts the model construct applied in this study.

Basic interactions were operationalized using simple mathematical representations of task type and cognitive style compatibilities. Group dynamics literature defines three team task types as they relate to team interactions, more specifically regarding how expertise can be applied to the task [28].

Additive tasks are tasks that allow for all expertise in a team to be combined;

disjunctive tasks require that only one member of the team need have the required expertise;

conjunctive tasks require that all team members have the minimum required expertise to perform the task.

In the context of a human interacting with a non-human agent, task types can be represented by accounting for the knowledge or expertise that the non-human assistant might have access to, and its ability to share this with the human (or user) for a particular task in the proper context.
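The three task types can be read as three rules for aggregating expertise along one dimension. The Java sketch below is one minimal interpretation, assuming that additive tasks sum members' expertise, disjunctive tasks take the maximum, and conjunctive tasks take the minimum; the names `TaskRules`, `effectiveExpertise`, and `meetsRequirement` are hypothetical and are not taken from the study's code.

```java
import java.util.Arrays;

/** Illustrative sketch of task-type rules; the aggregation choices are assumptions. */
final class TaskRules {
    enum TaskType { ADDITIVE, DISJUNCTIVE, CONJUNCTIVE }

    private TaskRules() {}

    /** Expertise the team can bring to a task in one dimension, given the task type. */
    static double effectiveExpertise(TaskType type, double[] memberExpertise) {
        if (memberExpertise.length == 0) {
            throw new IllegalArgumentException("team must not be empty");
        }
        switch (type) {
            case ADDITIVE:     // all expertise in the team is combined
                return Arrays.stream(memberExpertise).sum();
            case DISJUNCTIVE:  // the single strongest member decides
                return Arrays.stream(memberExpertise).max().getAsDouble();
            case CONJUNCTIVE:  // the weakest member decides
                return Arrays.stream(memberExpertise).min().getAsDouble();
            default:
                throw new IllegalArgumentException("unknown task type: " + type);
        }
    }

    /** True when the team's effective expertise reaches the task requirement. */
    static boolean meetsRequirement(TaskType type, double[] memberExpertise, double required) {
        return effectiveExpertise(type, memberExpertise) >= required;
    }
}
```

For a team with expertise levels 2, 3, and 6 and a requirement of 5, the additive and disjunctive rules are satisfied while the conjunctive rule is not, which illustrates why conjunctive tasks are the hardest for randomly composed teams to complete.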

Constructs from management and psychology have developed four-factor models of communication and cognitive styles to describe profiles of individuals that can then be put into the context of interactions with others who have different cognitive style profiles. One such construct is the Whole Brain Model [29,41,42]. This model describes four factors of thinking, and uses the Herrmann Brain Dominance Instrument (HBDI) to assess individual cognitive style as a combination of factors (each factor measured with a magnitude), expressed as a four-dimensional point. Many different four-factor models exist, and no attempt was made to operationally test this (or any other) specific model. The HBDI model is presented only as an example of a four-dimensional coordinate measure of overall cognitive style, rather than a single qualitative “type” description (such as might be described with the Myers-Briggs or other personality indicator). Thus, the four-factor model developed here is simply a descriptive example of interactions of compatibility between these factors in a way that could be operationalized. Three different algorithms were explored to calculate distance between cognitive style points [43,44], each resulting in a number between 0 and 1 that could then be used as a coefficient for calculating effectiveness of communication.

3.3. Operationalizing Team Performance Factors

The developed model was able to successfully execute code based on operationalized factors that impact task performance in teams. More specifically, the authors were able to produce three comparable algorithms for calculating communication efficiency as a coefficient, based on an existing four-factor construct of cognitive style and different methods of computing distance. Note that the goal of this model was to demonstrate the operational feasibility of incorporating a four-factor construct, not to test any specific construct (such as HBDI, DISC, Myers-Briggs, or another multi-factor conceptualization of cognitive style). Using the magnitude value for each of the four factors, a profile of cognitive style per individual can be represented as a coordinate point in four-dimensional space. The distance between points represents the amount of figurative distance teams would need to cross in order to gain information alignment during task performance.

The first algorithm computes communication efficiency by determining the Euclidean distance between the centroid of a given team (“team centroid” or TC) with all individuals included, and the centroid of the team without the individual with the most divergent cognitive style profile, or the outlier (TCoutlier). Equations (1)–(3) are general equations used for all algorithms. Equations (4) and (5) are used to determine communication efficiency for Algorithm 1.

The second algorithm computes communication efficiency by determining the angle between the centroid of a given team (TC) and the centroid of the team without the outlier (TCoutlier). Equations (6)–(10) are used to determine communication efficiency for Algorithm 2.

The third and final algorithm computes communication efficiency similarly to the first algorithm. That is, communication efficiency is calculated from a Euclidean distance, but instead of the distance between the team centroids with and without the outlier, it is the distance between the centroid of the team (TC) and the coordinate point of the outlier (TCoutlier). Equations (1), (3), and (5) are the same as for Algorithm 1, and Equation (11) is used to determine the distance for Algorithm 3.
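The geometric quantities behind the three algorithms can be sketched in Java as follows. This is a hedged illustration rather than a reproduction of Equations (1)–(11), which are not shown in this section: it assumes that the outlier is the member farthest from the full-team centroid, that the angle in Algorithm 2 is measured between the two centroids treated as vectors from the origin, and that a distance is mapped to a coefficient in [0, 1] by a simple linear rescaling. All names (`StyleCompatibility`, `toCoefficient`, and so on) and the rescaling are hypothetical.

```java
/** Illustrative sketch; outlier rule and normalization are assumptions, not the paper's equations. */
final class StyleCompatibility {
    private StyleCompatibility() {}

    /** Component-wise mean of the members' cognitive style points. */
    static double[] centroid(double[][] points) {
        double[] c = new double[points[0].length];
        for (double[] p : points) {
            for (int i = 0; i < c.length; i++) c[i] += p[i] / points.length;
        }
        return c;
    }

    static double euclidean(double[] a, double[] b) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) sum += (a[i] - b[i]) * (a[i] - b[i]);
        return Math.sqrt(sum);
    }

    /** Assumption: the outlier is the member farthest from the full-team centroid. */
    static int outlierIndex(double[][] team) {
        double[] tc = centroid(team);
        int best = 0;
        for (int i = 1; i < team.length; i++) {
            if (euclidean(team[i], tc) > euclidean(team[best], tc)) best = i;
        }
        return best;
    }

    /** Centroid of the team with the outlier removed (requires at least two members). */
    static double[] centroidWithoutOutlier(double[][] team) {
        int out = outlierIndex(team);
        double[][] rest = new double[team.length - 1][];
        for (int i = 0, j = 0; i < team.length; i++) {
            if (i != out) rest[j++] = team[i];
        }
        return centroid(rest);
    }

    /** Algorithm 1 quantity: distance between centroids with and without the outlier. */
    static double algorithm1Distance(double[][] team) {
        return euclidean(centroid(team), centroidWithoutOutlier(team));
    }

    /** Algorithm 2 quantity: angle (radians) between the two centroids as vectors. */
    static double algorithm2Angle(double[][] team) {
        double[] a = centroid(team);
        double[] b = centroidWithoutOutlier(team);
        double dot = 0.0, na = 0.0, nb = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            na += a[i] * a[i];
            nb += b[i] * b[i];
        }
        if (na == 0.0 || nb == 0.0) return 0.0;  // angle undefined for a zero vector; treated as aligned
        double cos = dot / Math.sqrt(na * nb);
        return Math.acos(Math.max(-1.0, Math.min(1.0, cos)));
    }

    /** Algorithm 3 quantity: distance between the team centroid and the outlier's own point. */
    static double algorithm3Distance(double[][] team) {
        return euclidean(centroid(team), team[outlierIndex(team)]);
    }

    /** Hypothetical rescaling: 1 means identical styles, 0 means maximal separation. */
    static double toCoefficient(double value, double maxValue) {
        return Math.max(0.0, Math.min(1.0, 1.0 - value / maxValue));
    }
}
```

Under these particular definitions, the Algorithm 1 distance for a team of n members equals the Algorithm 3 distance divided by (n − 1), so with the same rescaling Algorithm 3 always yields the lower coefficient. Whether this relationship holds for the study's actual equations depends on their normalization.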

As explained, each of these algorithms calculates a distance expressed as a coefficient, which indicates the potential difficulty in reaching information alignment within teams performing time-based tasks. That is, a low coefficient reduces overall progress towards a task in a given attempt, and results in more time to complete the task. Conversely, a low distance between points indicates similar cognitive styles amongst team members, resulting in a high coefficient of efficiency and a high amount of productivity towards task completion. The underlying construct discusses this effect in homogeneous teams with respect to time-based indicators [29], and empirical evidence from [22] supports the idea that even perceived dissimilarity has effects on productivity. The authors note that time as a measure of task performance applies only to certain types of tasks in the task circumplex, and that other outcomes and interactions should be measured for tasks such as problem solving and group decision-making.

3.4. Choosing a Model Type and Constructing the Model

There are different forms of computational models and methods for numerical simulation, depending on what needs to be modeled. In this case, the goal was to model human behavior in teams with respect to information alignment. Considering the deterministic nature of the quantification techniques regarding communication effectiveness and task completion, Monte Carlo methods were considered to be a viable option for representing probabilistic distributions of success. Another method explored was agent-based modeling (ABM), which allows individual behaviors to amalgamate into emergent behaviors in the larger organization [45,46]. Since joint team tasks (based on expertise and cognitive style) were the focus of this study, ABM techniques did not produce emergent behavior. However, ABM should be considered in future studies that further expand on individual contributions to larger tasks.

3.5. Model Performance and Internal Validation

The model analyzed in this research randomly selected a given number of agents from a pool (or “virtual organization”) that had triangular distributions of abstracted areas of expertise to represent how actual expertise might be distributed across a real organization. Though expertise is not often measured, and rarely static, it could be measured and adopted into such a model. The pool also had defined distributions of cognitive style based on the HBDI instrument and statistical outputs of Whole Brain Thinking research. The team would be randomly assigned a series of tasks that required a given amount of expertise in different dimensions to be completed; each task was also assigned a type (additive, disjunctive, conjunctive) to determine how expertise of the team would be evaluated against the task requirement. Communication effectiveness was calculated using one of the three different algorithms to determine cognitive style compatibility (mentioned earlier), and affected the overall time to complete a task. The more diverse the team, the longer it would take them to share expertise to complete a task.
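To make the relationship between communication effectiveness and completion time concrete, the following Java sketch counts attempts until a team accumulates enough progress to satisfy a task requirement. It is a deliberately simplified stand-in for the model described above, not the authors' implementation: the progress rule (team expertise times the communication coefficient times a uniform random factor per attempt), the iteration cap, and all names (`TaskAttemptSimulation`, `iterationsToSolution`, `meanIterations`) are assumptions made for illustration.

```java
import java.util.Random;

/** Illustrative sketch; the per-attempt progress rule is an assumption. */
final class TaskAttemptSimulation {
    private TaskAttemptSimulation() {}

    /**
     * Counts attempts until accumulated progress reaches the requirement.
     * Each attempt adds teamExpertise * coefficient * U(0, 1) to the progress.
     * Returns maxIterations if the requirement is not reached within the cap.
     */
    static int iterationsToSolution(Random rng, double required, double teamExpertise,
                                    double coefficient, int maxIterations) {
        double progress = 0.0;
        for (int i = 1; i <= maxIterations; i++) {
            progress += teamExpertise * coefficient * rng.nextDouble();
            if (progress >= required) return i;
        }
        return maxIterations;
    }

    /** Mean iterations to solution over a number of independent runs with a fixed seed. */
    static double meanIterations(long seed, int runs, double required, double teamExpertise,
                                 double coefficient, int maxIterations) {
        Random rng = new Random(seed);
        long total = 0;
        for (int r = 0; r < runs; r++) {
            total += iterationsToSolution(rng, required, teamExpertise, coefficient, maxIterations);
        }
        return (double) total / runs;
    }
}
```

In this sketch a lower coefficient (a more cognitively diverse team) yields a higher mean number of iterations, mirroring the qualitative behavior described in the text.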

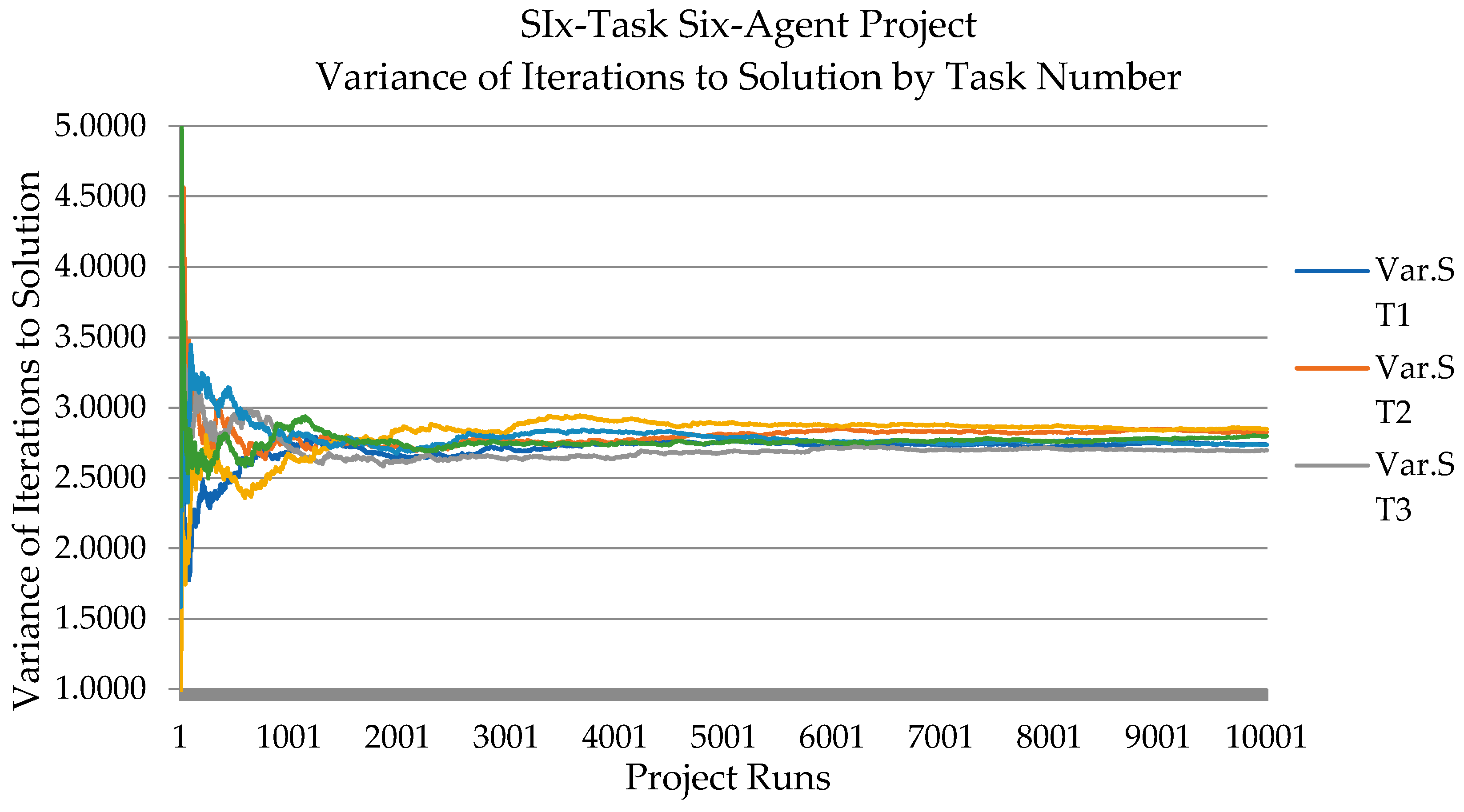

Outputs of the model could be verified based on theoretical statements. However, distributional aspects of the model were evaluated separately by evaluating model convergence, shown in Figure 2. The variance of the model was measured over 10,000 runs until a stable point was reached at approximately 3000 iterations. This point became the minimum number of runs each experimental combination would need to run in order to reduce stochastic variance in the outputs (and, thus, highlight differences in treatment conditions).
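A convergence check of this kind can be sketched in Java by tracking the running sample variance of the model output and locating the run after which it stays close to its final value. The sketch uses Welford's online algorithm for the running variance; the stability criterion (a relative tolerance around the final variance) and the names `ConvergenceCheck`, `runningVariance`, and `firstStableRun` are assumptions, since the study's exact criterion is not given here.

```java
/** Illustrative sketch; the stability criterion is an assumption. */
final class ConvergenceCheck {
    private ConvergenceCheck() {}

    /** Running sample variance after each run, computed with Welford's online algorithm. */
    static double[] runningVariance(double[] outputs) {
        double[] variance = new double[outputs.length];
        double mean = 0.0;
        double m2 = 0.0;  // sum of squared deviations from the running mean
        for (int i = 0; i < outputs.length; i++) {
            double delta = outputs[i] - mean;
            mean += delta / (i + 1);
            m2 += delta * (outputs[i] - mean);
            variance[i] = (i > 0) ? m2 / i : 0.0;  // undefined for one sample; reported as 0
        }
        return variance;
    }

    /**
     * Returns the 1-based run number from which the running variance stays within
     * relTol (relative) of its final value for all remaining runs.
     */
    static int firstStableRun(double[] variance, double relTol) {
        double last = variance[variance.length - 1];
        for (int i = variance.length - 1; i >= 0; i--) {
            if (Math.abs(variance[i] - last) > relTol * Math.abs(last)) {
                return i + 2;  // the run after the last violation
            }
        }
        return 1;
    }
}
```

Applied to the outputs of a single treatment, `firstStableRun(runningVariance(outputs), 0.05)` would give one candidate for the minimum number of runs per treatment; the tolerance of 0.05 is an arbitrary illustrative choice.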

4. Results

The goals of the study were: (a) to determine feasibility of creating a simulation model of teams performing tasks that is capable of producing testable results, and (b) to further simulated team performance research by operationalizing factors related to team performance. The following subsections describe results with respect to each of these items.

4.1. Model Evaluation

As the purpose of the study was to test the feasibility of using simulation methods to study information alignment aspects of taskwork, emphasis of the Results section is on the ability of the model to stabilize over time (convergence of variance) and produce testable outputs. The authors note that the model itself has not been validated for specific hypothesis testing of particular organizational manipulations or any specific theoretical construct of cognitive processing style. Higher levels of validation and verification were not within the scope of this feasibility study, but offer opportunity for further development.

Furthermore, the study did not include measures of uncertainty or robustness, as this example model will not be used for experimentation or to predict outcomes. Future research in model development, with full attention to robustness and validity, is important if models such as this are to be used in experimentation and prediction cases. This model was useful in the sense that it was able to inspire more research questions around the included factors, as well as other potentially influential factors; these could be researched, incorporated, and studied within this virtual environment.

Feasibility was determined through (a) confirmation that the designed model could run in reasonable time, (b) the ability to easily modify important parameters, and (c) confirmation that, when calculating variance reduction and statistical convergence, there is no significant barrier to executing the simulation (i.e., runtime of the model) at different iteration levels (600 runs vs. 3000 runs vs. 10,000 runs). The model was able to run all 72 experimental treatments for 600 runs, with each treatment needing roughly three minutes to execute. The model was then tested for convergence by running one treatment 10,000 times and evaluating at how many runs the model’s variance stabilizes. This was found to be around 3000 runs, which became the minimum number of runs needed per experimental treatment for hypothesis testing. The runtime for 3000 runs was under 10 min, and the runtime for 10,000 runs was around 30 min. Compared to the time and cost associated with current team research methods, these numbers are a notable improvement, and do not indicate significant barriers at different iteration levels. Finally, changing a parameter in the model is as simple as editing a line of code; additional parameters and individual agent variables can be introduced into the model with relative ease when using object-oriented programming. With these three aspects confirmed, the feasibility of using simulation modeling methods for team information alignment research is established.

4.2. Model Experimental Outputs

The measured model output was iterations to solution, which essentially reflects an abstracted amount of time needed to complete a given project. Recalling that the constructs of task performance emphasize time as a measure of performance, iterations to solution was chosen as a comparable measure of performance for the model. An Anderson-Darling test indicated non-normal distributions of task performance (p < 0.001) across independent variables; thus, a Mood’s Median test with a 95% confidence level was used to measure differences between team size, project configuration, and algorithm used to calculate cognitive style compatibility.
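For readers unfamiliar with Mood’s Median test, the Java sketch below computes its chi-square statistic for several groups of iterations-to-solution values: each observation is classified as above or not above the grand median, and the resulting counts are compared with their expected values. This is a generic textbook formulation written for illustration, not the statistical software used in the study; the class name `MoodsMedian` and its methods are hypothetical, and no p-value is computed.

```java
import java.util.Arrays;

/** Illustrative sketch of the Mood's median test statistic (no p-value computed). */
final class MoodsMedian {
    private MoodsMedian() {}

    /** Median of all observations pooled across groups. */
    static double grandMedian(double[][] groups) {
        double[] all = Arrays.stream(groups)
                .flatMapToDouble(row -> Arrays.stream(row))
                .sorted()
                .toArray();
        int n = all.length;
        return (n % 2 == 1) ? all[n / 2] : (all[n / 2 - 1] + all[n / 2]) / 2.0;
    }

    /** Chi-square statistic on the 2 x k table of counts above / not above the grand median. */
    static double chiSquare(double[][] groups) {
        double median = grandMedian(groups);
        int k = groups.length;
        long[] above = new long[k];
        long[] notAbove = new long[k];
        long totalAbove = 0;
        long totalNotAbove = 0;
        for (int g = 0; g < k; g++) {
            for (double v : groups[g]) {
                if (v > median) above[g]++; else notAbove[g]++;
            }
            totalAbove += above[g];
            totalNotAbove += notAbove[g];
        }
        if (totalAbove == 0 || totalNotAbove == 0) return 0.0;  // all values tie with the median
        double n = totalAbove + totalNotAbove;
        double statistic = 0.0;
        for (int g = 0; g < k; g++) {
            double size = above[g] + notAbove[g];
            double expectedAbove = size * totalAbove / n;
            double expectedNotAbove = size * totalNotAbove / n;
            statistic += Math.pow(above[g] - expectedAbove, 2) / expectedAbove
                    + Math.pow(notAbove[g] - expectedNotAbove, 2) / expectedNotAbove;
        }
        return statistic;
    }
}
```

The statistic has k − 1 degrees of freedom for k groups; at the 95% confidence level the critical value is 3.841 for two groups and 5.991 for three, so a larger statistic indicates that the group medians differ.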

These statistical tests were conducted to determine how the team, project, and compatibility variables affected task completion in both the additive and disjunctive task types. The results, shown in Table 1 below, indicate significant differences in the time needed to complete certain types of tasks, depending on model inputs including task type, team composition, and team size. It is important to note that random selection of expertise profiles from a simulated organization led to extremely low probabilities of teams ever completing the conjunctive task.

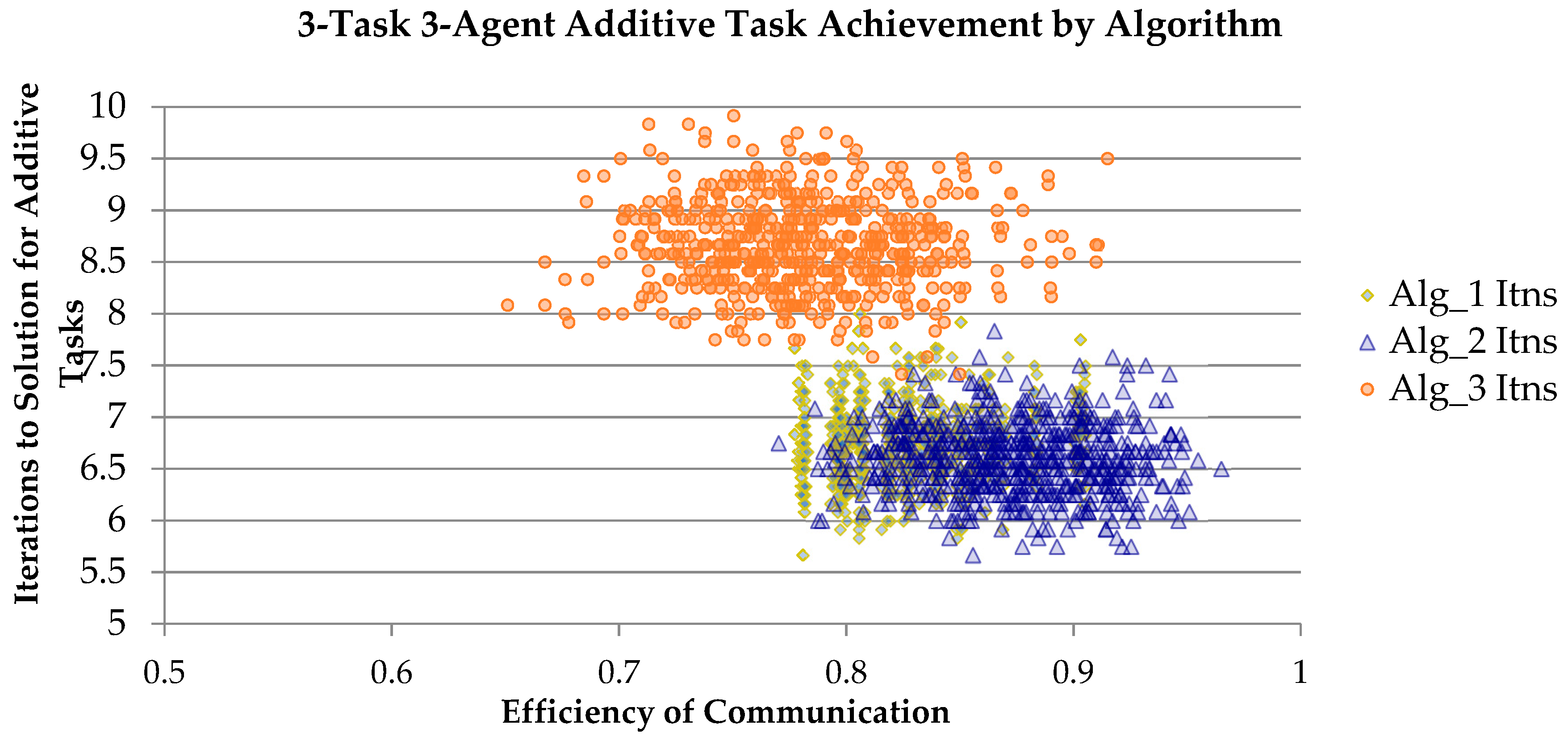

4.3. Comparing Diversity Coordination Algorithms

Recall that three distinct algorithms were proposed to assess the effects of cognitive diversity (differences in information processing style or technique) on information alignment and expertise coordination among members of a heterogeneous team. This section describes how the simulation results helped to test the implications of those algorithms. Each of the three algorithms was examined across task types, to determine the impact of different measures of cognitive diversity on team performance and problem solving. A graphical comparison of the results is shown in Figure 3 below. The results of Algorithms 1 and 2 are similar, and may be evaluated side by side in future studies regarding validation of such algorithms. Algorithm 3 resulted in lower efficiency and higher iterations to solution, which may represent a more conservative approach to calculating implications of cognitive style diversity on time-based task performance in teams. However, there is no a priori or empirical justification at this time to assume the relative accuracy or validity of one cognitive diversity coordination algorithm over another; this is an intriguing and open question for both the simulation and human factors research communities.

Because the cognitive style and communication coordination calculations (and even the population of the virtual organization “pool”) were operationalized in a manner not constrained by any particular cognitive construct, the range of team members modeled is not restricted to the characteristics of any particular organizational environment. This approach thus moderates particular confounds associated with implicit biases regarding gender, ethnicity, or neurodiversity (such as persons on the autistic spectrum) in modern learning and work settings [47,48]. In fact, this approach does not require all team members to be based on naturally occurring human cognitive styles at all. In other words, team members that are software agents can be included in the model, as long as a style of communication for human-agent communication can be estimated. While there have been multiple studies within the field of computer science to create and support such human-agent hybrids, the efforts to model performance and coordination of self-organizing knowledge networks [7,49] incorporating both human and software agent team members are less well developed.

5. Discussion and Conclusions

Revisiting the goal of this research, the results indicate significant promise in using simulation modeling methods to study team performance and group dynamics. Model outputs provided data for hypothesis testing such that the researchers could perform quantitative experiments (versus the qualitative methods common in team environments). The results above helped generate new research questions regarding learning effects and style flexibility. These research outcomes are directed at researchers who can further explore task performance factors for a given task setting by constructing their own model with factors that are relevant to their particular research questions. Further model exploration, development, and validation are needed for such a model to be deployed in real-world settings. However, future iterations of this research may allow managers and leaders to explore hypothetical situations, effectively testing out ideas of team construction and task performance.

While the goal for this feasibility study was not to determine how expertise and cognitive style directly affect performance, the researchers were able to observe the larger system effects of these factors across team size and task type. Results did indicate that homogeneous teams will perform certain activities faster, which is consistent with the literature [29], and that available expertise directly impacts performance of certain task types. The authors note that the measure of desired outputs (throughput, creativity, etc.) may vary across potential tasks, and that cognitive diversity impacts these outputs differently [50]. However, the more important outcome of the results is that model construction can integrate multiple factors across different theory bases and allow for experimentation and advance theory generation.

The results support a new, more accessible avenue for exploring human coordination in complex systems, including the design of intelligent technologies. Humans operating in complex systems must often work with technology and each other in order to complete tasks. As highlighted in some applied literature, and partially addressed in this paper, multi-organizational communication and task coordination for large-scale disaster response and emergency management represent a significant challenge on organizational, situational, and technological dimensions [37,51,52,53]. Traditional information theory and computer science approaches to multi-agent coordination do not incorporate the challenges of social and organizational factors, including cognitive style and distributed dimensions of expertise [40], that both limit and enable effective team performance. By contrast, human factors research focused on empirical data collection is not able to ethically or practically examine the full range of cognitive style factors representing a wide spectrum of human and non-human agent interactions.

The research presented in this paper was conceived in order to address some of the research gaps describing challenges in defining, operationalizing, and evaluating expertise coordination dynamics in the completion of different types of time-based performance tasks in teams. Of particular interest was the development of plausible descriptions of the diversity of cognitive/information processing capabilities and styles among a range of human experts. Rather than attempting confirmation or validation of a particular model (such as HBDI, “Big Five”, or other cognitive style inventories), the computational simulation simply emphasized a computational approach with four distinct types. A computer-based numerical model was developed to simulate the dynamics of information sharing and understanding of simulated actors with varying levels of expertise performing problem-solving tasks where individual or combined expertise was required. The numerical simulation based on these descriptions uses dynamic event stochastic (“Monte Carlo”) simulations of teams of agents working towards common goals, and measures time-based performance based on the teams’ abilities to reconcile communication differences in order to share expertise.

Three distinct types of research results were generated using this simulation method and model. (1) Dynamic event simulations were able to successfully run using various random selections from distributions of expertise in “virtual organization” pools, and demonstrate problem solving behaviors in different task demand configurations. These simulations were able to reach statistical variance stability in a reasonable (~3000) number of runs, allowing for more detailed examinations of differences between task, cognitive diversity, and coordination conditions. (2) Results of problem solving simulations clearly distinguished effects of expertise distributions and coordination constraints affecting task completion dynamics between task types (additive, conjunctive, disjunctive) classically defined in the group dynamics and social psychology research literature [28]. (3) Results of simulations within a single task type distinguished different expertise coordination computational algorithms, using different plausible measures of cognitive diversity and information sharing.

Multiple areas of additional research are enabled and envisioned by these encouraging results. Algorithmic definitions of task and expertise demands for problem solving were able to create face valid distributions of task performance outcomes; differences in these outcomes can be shown to be due to differences between conditions, rather than computational instability of the algorithms themselves. While this research clearly describes ways to consider diversity of expertise and challenges of expertise sharing and information alignment, there are no a priori reasons to select one measure of diversity and misalignment over another. Thus, a new area of human factors research can be developed to explore how, where, and in what ways differences in cognitive or information processing styles affect information sharing and mutual understanding in cognitively diverse teams. Using a multidimensional measure of expertise incorporating both domain knowledge and communication effectiveness, the dynamics of coordination among human-human and human-software agent team members can also be explored.

Using simulation modeling techniques, researchers can apply different experimental treatments, explore or develop new theories relating to teams, and continue to expand upon the operationalized factors discussed in this paper. This can be seen as a cost-effective way of directing relatively expensive human factors research resources to exploring previously unresolved (or undefined) operational measures or critical variables that strongly affect performance outcomes. In addition, this line of inquiry represents a successful advance of previous calls for meaningful study of the complexity of human-systems integration in a variety of advanced complex systems with increasingly capable software agents.