1. Introduction

Mining operations represent complex industrial cyber–physical systems (CPSs) where autonomous vehicles must integrate seamlessly with human operators, heavy machinery, and dynamic environmental conditions. The mining industry faces growing demands to enhance operational safety, curb life-cycle energy use, and meet increasingly stringent sustainability targets. Autonomous vehicles have demonstrated substantial benefits in large-scale mining, improving safety, reducing incidents, and increasing productivity [

1,

2,

3]. Key literature [

4,

5,

6,

7] highlights the need for robust perception in robotics, yet dedicated solutions for subterranean excavation sites remain sparse [

8,

9]. These environments—characterized as distributed cyber–physical systems with unstructured terrain, dust-laden visibility, constrained maneuverability, and the coexistence of human operators, heavy machinery, and debris—pose persistent challenges for integrated perception-control systems. Crucially, they require not only geometric obstacle recognition but also semantic comprehension to support adaptive and context-sensitive behavior [

10].

Despite growing interest in semantic perception, interpreting such information for real-time navigation remains a non-trivial problem. Scene variability, the presence of transient obstacles, and the absence of consistent visual landmarks can degrade the effectiveness of traditional mapping and localization pipelines. Consequently, recent work advocates reactive navigation frameworks that operate solely on local sensor data within distributed system architectures, as these show inherent resilience in highly dynamic, Global Positioning System (GPS)-denied settings [

11,

12,

13]. Yet most of these systems continue to treat all obstacles as equivalent, regardless of their identity or associated risk, limiting their applicability in complex CPSs such as mining operations [

14].

Advances in industrial CPS engineering now enable tightly coupled perception-and-control loops that embed semantic priorities directly into distributed planning architectures, fostering interdisciplinary integration of safety, energy, and operational efficiency. By recognizing object classes and contextual cues, such systems allow robots to adopt conservative trajectories around humans or high-risk equipment while maintaining efficient paths near inert structures [

14,

15,

16]. This shift from purely geometric reasoning to semantics-informed planning within CPS frameworks promises safer and more efficient operation but introduces system-level trade-offs that remain largely unquantified for underground mines.

Beyond safety benefits, semantic processing in industrial CPS can influence robot behavior through complex system interactions—prompting speed adjustments, non-optimal trajectories, or increased computational load. These behaviors may manifest as elevated energy consumption or degraded motion efficiency. Given that battery swaps and diesel refuels incur costly downtime underground, quantifying the energy impact of perception-driven behavior is crucial. However, the energetic implications of semantics-informed navigation have been explored only in a handful of exploratory studies since 2023 [

17,

18].

Accordingly, this article bridges the gap between semantic scene understanding and energy-aware autonomy within a comprehensive CPS perspective, addressing both component-level performance and system-level integration. We propose and empirically evaluate a system that adapts robotic navigation based on object identity and behavioral priority, with a particular focus on measuring how this adaptation affects motion effort. The framework fuses RGB-D and LiDAR data in real time and encodes object relevance into virtual LaserScans that directly modulate local planning.

We advance the following hypothesis: embedding semantic risk scores into a reactive planner within a CPS architecture will (i) raise minimum clearance to high-risk obstacles by ≥30% while (ii) limiting additional mission energy consumption to ≤6% relative to a geometry-only baseline.
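The two acceptance criteria in this hypothesis can be written as a small check. The sketch below is illustrative only: the function name, argument names, and any sample values are hypothetical and are not measurements from this study.

```python
def hypothesis_holds(clear_base, clear_sem, energy_base, energy_sem,
                     min_clear_gain=0.30, max_energy_penalty=0.06):
    """Return True if both criteria of the hypothesis are met.

    clear_*  : minimum clearance to high-risk obstacles (m)
    energy_* : mission energy or effort (any consistent unit)
    *_base   : geometry-only baseline, *_sem : semantics enabled
    """
    # Relative clearance gain of the semantic mode over the baseline.
    clear_gain = (clear_sem - clear_base) / clear_base
    # Relative extra energy spent by the semantic mode.
    energy_penalty = (energy_sem - energy_base) / energy_base
    return clear_gain >= min_clear_gain and energy_penalty <= max_energy_penalty
```

Expressing both criteria as relative changes against the geometry-only baseline keeps the check independent of the units used for clearance and energy.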

1.1. State of the Art

Autonomous navigation in mining environments increasingly depends on multimodal, semantics-aware perception pipelines that bridge sensing and control within integrated industrial systems [

17]. Recent advances in mining autonomy, building on the foundational principles of robotics perception [

4,

5,

6], have begun to address but not yet close the domain-specific challenges highlighted in [

8,

9]. Unlike general-purpose outdoor and indoor robotics [

18,

19], mining scenarios present distributed cyber–physical environments with unstructured terrain, dynamic obstacles, and constrained spaces that demand both accurate environmental awareness and real-time responsiveness [

20]. While numerous perception systems have been developed for object detection and mapping [

21], only a limited subset explicitly couples perception outputs with the planner to adjust motion based on object relevance or risk level, highlighting a gap in systems engineering integration [

22]. Traffic-inspired architectures such as [

23] derive behavior zones and control priorities from scene annotations, illustrating how explicit semantic cues can guide safer, context-aware motion. In [

24], the authors introduced the Semantic Potential Field (SPF), fusing LiDAR and camera semantics into a potential-field planner and reporting path-length and computation savings in laboratory settings, yet SPF remains untested in subterranean mines. Moreover, the long, narrow drifts common in underground mines exacerbate occlusions and dust scattering, further challenging perception pipelines and motivating multimodal sensor fusion strategies [

25].

1.1.1. Semantic Perception for Navigation

Conventional obstacle avoidance techniques—employed in many industrial robotic systems—operate purely based on geometry, treating all obstacles equivalently regardless of semantic context [

13]. However, recent advances in robotics highlight the importance of semantics-informed navigation, where object identity influences motion behavior [

14]. For example, in distributed CPS environments, the authors of [

16] introduce SEEK, a framework combining semantic scene graphs and relational object priors to guide object-goal navigation, enabling robots to preferentially explore areas likely to contain target objects. Similarly, Ref. [

22] proposes a system that combines instance segmentation with depth maps to classify obstacles and adapt avoidance behavior based on object identity, allowing the robot to pass closer to low-risk objects while behaving more cautiously around humans.

Beyond individual object recognition, semantic mapping frameworks have begun to model scene context and object affordances. Reference [

23] demonstrates how annotated “stop” or “no-enter” zones can compose semantically informed motion control, whereas [

24] applies SPF to dynamically reshape potential fields according to semantic grids in indoor trials. IntelliMove [

26] and COSMAu-Nav [

27] further demonstrate that hierarchical or Gaussian-process maps augmented with semantics can cut planning time by up to 30% while maintaining safety. Complementary work in active SLAM, such as SeGuE [

28], leverages scene semantics to bias viewpoint selection toward information-rich regions, thereby accelerating map convergence.

Collectively, these approaches demonstrate that directly embedding semantics into mapping and planning pipelines within CPS frameworks yields more efficient and context-aware navigation than geometry-based methods alone. Nevertheless, semantic-priority behaviors—assigning differential buffers or trajectory adaptations per object class—remain largely untested in mining-robotics literature, despite the presence of humans, machinery, and debris that would benefit from such differentiation.

1.1.2. Energy Implications of Perception in Industrial CPS

The activation of semantics-aware perception in industrial cyber–physical systems affects not only planning decisions but also subsequent motion behavior and, consequently, system-wide energy consumption. While semantics-aware perception can enhance safety by enabling proactive avoidance of high-priority obstacles, it may also result in more frequent speed adjustments, longer path traversal, or computational overhead that impacts energy use. Despite growing interest in executing lightweight perception on embedded platforms, studies that quantify the energetic cost of perception-driven behavior remain sparse.

The authors in [

29] extend the Dynamic Window Approach (DWA) navigation algorithm by integrating a predictive energy model into trajectory evaluation within a systems framework. They simulate and compare multiple candidate paths in real time and select trajectories with lower estimated energy cost, demonstrating that including an energy-aware term materially affects route choice and power usage in wheeled robots. Building on this idea, work in [

30] jointly optimizes sensing, communication, and motion: the framework dynamically adjusts sensing frequency, transmission power, and speed according to Shannon-entropy metrics of semantic complexity, achieving notable energy savings in mapping-heavy tasks. Although not focused on semantic perception, these methods underscore that perception-dependent control decisions can significantly influence energy consumption.

In a study of autonomous Air–Ground Robot (AGR) navigation under occlusion-rich environments, Ref. [

31] introduces AGRNav, a framework that predicts occlusion using semantic information and subsequently selects energy-efficient navigation modes (ground vs. aerial). This approach reduced energy consumption by approximately 50% per second in real-world trials, illustrating how semantic insight (occlusion prediction) can drive safer yet more energy-efficient behavioral choices.

Additionally, in [

32] the authors analyze real robot trials implementing Rapidly Exploring Random Tree (RRT) and A* planners on wheeled platforms. Through measurement of current draw, velocity, and path geometry, they find that planners responding to perception-based obstacle detection—particularly when reacting to transient objects—exhibit measurable differences in energy consumption, even when final path lengths are comparable.

Collectively, these studies confirm that perception-driven navigation—particularly when dynamically reacting to sensed objects within CPS architectures—inevitably changes a robot’s motion profile and, consequently, its energy consumption. However, no work has yet provided a controlled, statistically rigorous analysis of how semantic risk stratification affects the energy budget of heavy-duty ground vehicles in mining-like environments.

1.1.3. Identified Gaps

First—Limited semantic granularity in planners. Contemporary navigation stacks for mobile mining robots seldom encode the fine-grained distinctions among object class (e.g., human versus loader), operational role (active, idle, autonomous), or contextual risk level (high, medium, low) when generating trajectories. Instead, they rely on geometric avoidance heuristics that apply a single, uniform behavior—often a conservative buffer—irrespective of whether the perceived obstacle is a human miner, a haul truck reversing with limited field of view, or inert rubble deposited against a drift wall. This coarse treatment undermines the robot’s capacity to reason about differential hazard probabilities, propagates unnecessarily large detours in benign cases, and can lead to insufficient clearance in genuinely hazardous scenarios.

Second—Insufficient insight into system-level kinematic and energetic repercussions. The scientific discourse has largely centered on boosting perception recall and precision, yet has under-examined the downstream effects of switching from geometry-only to semantics-aware policies. Specifically, how do class-weighted cost maps alter the following?

Instantaneous velocity profiles and jerk, affecting mechanical wear and passenger comfort (for man-riders);

Cumulative path length and curvature, influencing traction losses on deformable ground;

Battery State-of-Charge (SoC) trajectories under different duty cycles (tramming, loading, dumping).

Because perception and planning modules are frequently benchmarked in isolation—with no closed feedback loop that quantifies energy draw per semantic decision—the field lacks predictive models linking algorithmic complexity, actuator effort, and powertrain efficiency.

Third—Scarcity of statistically robust experimental evidence in industrial CPS contexts. Published demonstrations of semantic prioritization in underground settings rarely exceed one or two proof-of-concept runs. Baseline comparisons to geometry-only control are often absent, and where they exist, sample sizes are too small to support hypothesis testing. Consequently, variance induced by drift length, slope grade, tire slip, or dust concentration is conflated with algorithm performance, precluding rigorous inference about causality between semantics and energy-safety trade-offs.

Fourth—Lack of backward-compatible semantic integration architectures. Existing semantic navigation approaches typically require specialized planners, extensive re-engineering of control stacks, or custom middleware that limit their adoption in production mining environments. The absence of universal semantic encoding methods that preserve compatibility with legacy Robot Operating System (ROS) navigation frameworks creates significant deployment barriers for industrial operators who have invested heavily in proven planning architectures.

Main Contributions and Novelty

The key innovation of this work lies not in individual perception components, but in the novel semantic-priority encoding transformation that enables backward compatibility with existing ROS navigation stacks while providing graduated risk-aware behavior. Our approach addresses the identified gaps through three primary contributions:

Universal Semantic Encoding Architecture: We introduce a mathematically grounded transformation that converts heterogeneous object detections into class-weighted virtual LaserScan rays with priority-dependent angular dilation. Unlike previous approaches that require custom planners or extensive re-engineering, this universal representation preserves full backward compatibility with standard ROS navigation components (DWB, TEB, move_base) while enabling them to reason about both geometric constraints and semantic relevance, a capability not previously demonstrated in mining CPS contexts. The encoding layer operates independently of detector choice, supporting future upgrades from YOLOv5s to transformer-based models or multimodal architectures without system redesign.

Priority-Adaptive Spatial Inflation: Our method dynamically adjusts obstacle footprints based on semantic risk coefficients, creating larger virtual safety zones around high-priority objects (humans, active machinery) while maintaining efficient paths near inert debris. This goes beyond simple binary obstacle/free-space classification to provide graduated risk-aware behavior with quantifiable safety margins that align with ISO 17757 [

33] standards for human–machine separation in industrial settings.

Quantified Energy-Safety Trade-offs in Industrial CPS: We provide the first statistically rigorous analysis (60 trials, two-way ANOVA with robust variance testing) of how semantic risk stratification affects kinematic effort in mining-like environments, demonstrating that meaningful safety gains (34% clearance increase) can be achieved with minimal energy penalty (6% increase) while preserving mission completion times.

To address these gaps, this study presents a semantic-priority-aware navigation framework tailored for underground mining CPS that:

Fuses LiDAR and RGB-D imagery in real time within a distributed ROS architecture, pushing detections through a lightweight Convolutional Neural Network (CNN) pipeline and tagging each obstacle with a class-specific risk coefficient.

Encodes those coefficients into virtual LaserScan beams using an exponential cost transformation, thereby remaining compatible with legacy ROS planners such as DWB and TEB within existing system infrastructures. This universal LaserScan encoding enables off-the-shelf planners to reason about operational risk (not merely geometry) without modifying planner internals—a significant architectural innovation that ensures plug-and-play deployment.

Modulates planner weights within the CPS architecture so that high-priority obstacles invoke a safety buffer 20% larger than that of medium-priority obstacles and 40% larger than that of low-priority obstacles.
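The buffer ratios in the last item can be made concrete with a short sketch. It assumes the ratios are read as "high = 1.2 × medium" and "high = 1.4 × low"; the function name and the base buffer value passed in are hypothetical, since the text here states only the ratios.

```python
def priority_buffers(high_buffer_m):
    """Derive medium and low safety buffers from the high-priority buffer.

    Assumes the high-priority buffer is 20% larger than the medium one
    and 40% larger than the low one.
    """
    return {
        "high": high_buffer_m,
        "medium": high_buffer_m / 1.20,  # high is 20% larger than medium
        "low": high_buffer_m / 1.40,     # high is 40% larger than low
    }
```

For instance, a hypothetical high-priority buffer of 1.4 m would correspond to a low-priority buffer of 1.0 m under this reading.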

The system was evaluated in a physics-based Gazebo simulation, using a Husky robot navigating a mining-inspired terrain with obstacles of varying priority levels. Two operating modes—Semantics ON and Semantics OFF—were tested across 60 Monte Carlo trials in total (10 runs per cell in a 2 × 3 design). Energy consumption (estimated via kinematic effort), minimum obstacle clearance and task completion time were recorded. A two-way Analysis of Variance (ANOVA, factors: Perception Mode × Obstacle Priority) was conducted to assess statistical significance, with complementary Welch’s robust ANOVA for assumption violations, followed by Tukey HSD post hoc tests where appropriate.
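The 2 × 3 factorial layout with 10 runs per cell can be enumerated as follows. This is a minimal sketch: the label strings and the function name are hypothetical, and only the design dimensions come from the text.

```python
from itertools import product

MODES = ("semantics_on", "semantics_off")   # factor 1: perception mode
PRIORITIES = ("high", "medium", "low")       # factor 2: obstacle priority
RUNS_PER_CELL = 10


def build_trial_plan():
    """List every (mode, priority, run) combination of the 2 x 3 design."""
    return [
        {"mode": mode, "priority": priority, "run": run}
        for mode, priority in product(MODES, PRIORITIES)
        for run in range(RUNS_PER_CELL)
    ]
```

Enumerating the cells explicitly makes it easy to confirm that the design is balanced (the same number of runs in every cell), which is the case the two-way ANOVA handles most cleanly.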

Results demonstrate that enabling semantics raised minimum clearance from high-priority obstacles by ≈34% (p < 0.001, η² = 0.683), a statistically significant safety gain that aligns with ISO 17757 recommendations for human–machine separation. This improvement was achieved at the cost of a modest ≈6% increase in kinematic effort (p < 0.001, η² = 0.129), confirming that the robot executed more deliberate, yet smooth, avoidance maneuvers. Importantly, an interaction effect revealed that this effort increase is most pronounced for high-priority obstacles, underscoring the framework’s ability to adapt navigation behavior to semantic risk. Task completion time remained statistically neutral with respect to perception ON/OFF, although obstacle class exerted a measurable influence, with low-priority obstacles enabling faster completion than medium or high.

The main contributions of this work are as follows:

Universal semantic encoding layer: A mathematically grounded transformation that converts heterogeneous detections into class-weighted virtual LaserScan rays. This universal representation preserves backward compatibility with ROS planners (DWB, TEB) and enables them to reason not only about obstacle geometry but also about operational relevance, a capability not previously demonstrated in mining CPS contexts.

Deployment-ready ROS cyber–physical architecture: Modular launch files, containerized perception nodes, and a real-time Quality of Service (QoS) profile tuned for low-latency fusion, enabling site engineers to port the system to disparate mine levels without pre-surveyed maps. The architecture includes fail-safe mechanisms, dynamic parameter reconfiguration, and comprehensive logging for field validation.

Comprehensive, statistically powered evaluation: 60 experimental runs analyzed via two-way ANOVA with robust variance testing and Tukey HSD, delivering the first quantitative link between semantic risk stratification and kinematic effort for vehicles in mining-like environments. The evaluation demonstrates system-level performance gains with rigorous statistical validation (1 − β > 0.80 power) and effect size quantification.

Paper roadmap.

Section 2 details sensor configuration, the risk-weighted encoding algorithm, and the experimental protocol.

Section 3 reports quantitative outcomes across safety and energy metrics.

Section 4 interprets these findings in light of underground operational constraints, and

Section 5 outlines future research toward fully self-optimizing, context-aware mine autonomy.

2. Materials and Methods

The development and validation of the proposed system were performed exclusively in a high-fidelity simulation stack that couples Gazebo 11 with the ROS Noetic middleware, creating a representative cyber–physical testbed. This environment emulates uneven terrain profiles, heterogeneous obstacle classes, and sensor artifacts, thus providing a safe, repeatable, and cost-effective testbed prior to field deployment. All perception, fusion, and navigation nodes were containerized in separate ROS namespaces, allowing deterministic latency measurements and straightforward scalability to multi-robot scenarios within distributed system architectures.

This section details (i) the robotic platform and its simulated sensors, (ii) the semantic-perception pipeline, (iii) the autonomous navigation architecture, and (iv) the experimental protocol for quantitative evaluation.

2.1. Robotic Platform

To maximize ecosystem maturity, long-term vendor support, and systems integration compatibility, this study employs the Clearpath Husky A200 (Clearpath Robotics, Waterloo, ON, Canada) skid-steer mobile base [

34] as its reference platform. Although its 0.985 m × 0.670 m footprint and 75 kg curb weight are markedly smaller than those of an underground haul truck, the Husky’s four-wheel-drive, differential-torque drivetrain faithfully captures system-level dynamics including traction losses, lateral slip, and power-draw transients over rough mine floors. In addition, the platform boasts a mature ROS driver ecosystem, enabling one-to-one transplantation of perception and control nodes to field-grade vehicles with minimal code changes.

Table 1 enumerates every software component within the CPS architecture—Gazebo physics engine, ROS packages, and in-house modules—together with exact version tags and commit hashes; this transparency ensures that all experiments reported herein can be reproduced deterministically within similar system configurations.

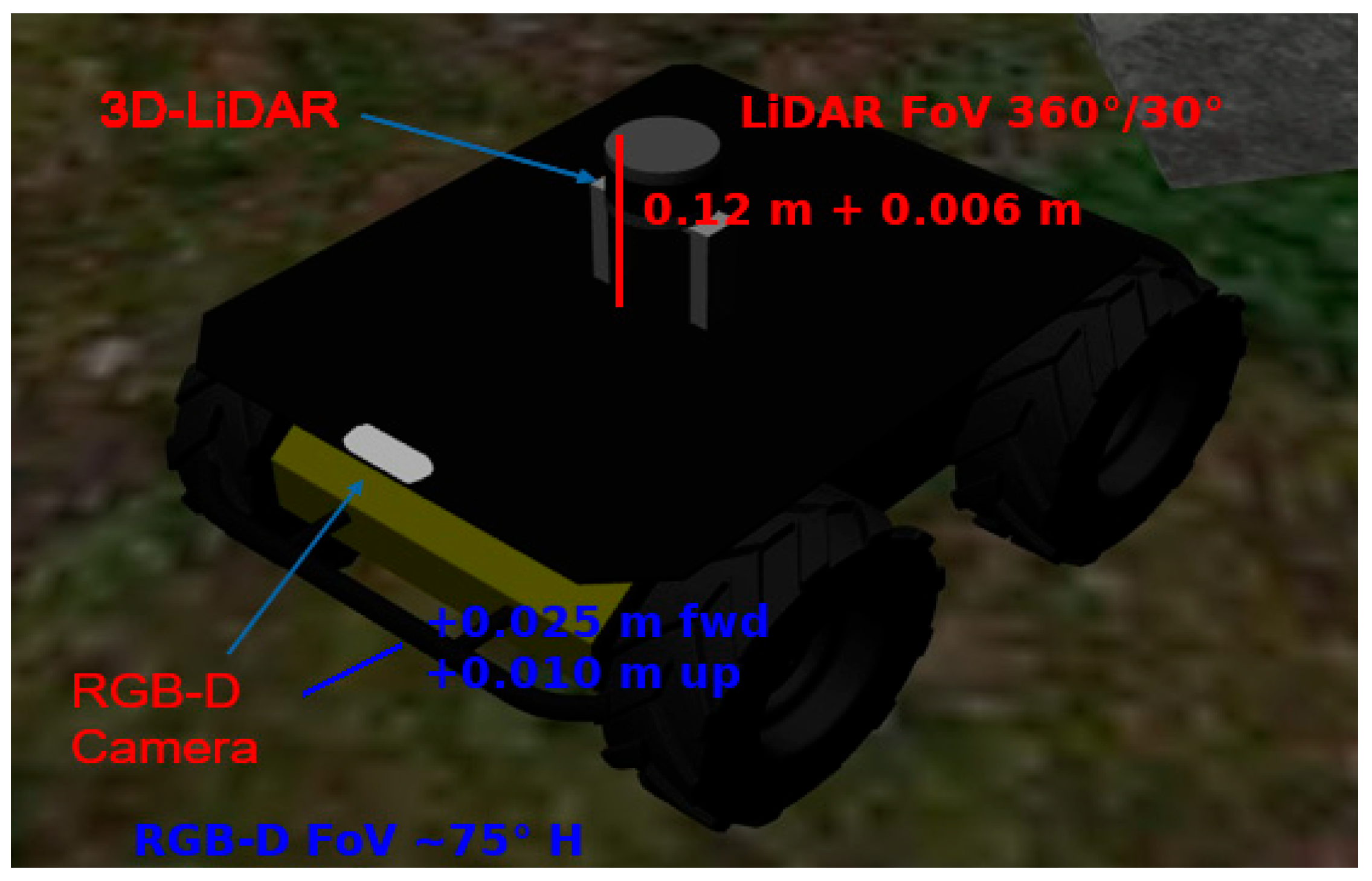

The Husky robot was equipped with simulated sensors, including a 3D LiDAR (Velodyne VLP-16) (Velodyne LiDAR, Inc., San Jose, CA, USA) and an RGB-D camera (Intel RealSense D435) (Intel Corporation, Santa Clara, CA, USA), to provide realistic environmental data. These sensors were incorporated into the robot model using custom URDF files and dedicated Gazebo plugins, enabling an accurate simulation of both sensor behavior and sensory capabilities.

The 3D LiDAR was mounted on top of the robot using a fixed rigid frame. The mounting structure was defined via a dedicated URDF macro that placed the base of the sensor 12 cm above the chassis, with an additional vertical offset of 6.3 mm to align it with the physical support. The simulated sensor provides a 360° horizontal field of view, with 16 vertical laser channels spanning a 30° vertical field (±15°). It is configured with a maximum range of 100 m and a scan rate of 10 Hz, closely replicating the characteristics of the physical device. The point cloud is published on the /points topic.
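As a quick check on the vertical geometry described above, the sketch below computes the elevation angle of each laser channel. It assumes the 16 channels are evenly spaced across the ±15° field; the function name is hypothetical.

```python
def channel_elevations_deg(n_channels=16, fov_deg=30.0):
    """Evenly spaced elevation angles across a symmetric vertical FOV."""
    # n_channels beams leave n_channels - 1 gaps between them.
    step = fov_deg / (n_channels - 1)
    return [-fov_deg / 2.0 + i * step for i in range(n_channels)]
```

Under the even-spacing assumption, 16 channels over 30° give a 2° gap between adjacent beams, which bounds the smallest obstacle height that can be resolved at a given range.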

The RGB-D camera was installed on the front plate of the robot, using a sequence of fixed joints to ensure accurate orientation and alignment. The camera’s optical frame was defined with a 2.5 cm forward offset and a 1 cm vertical elevation relative to the top_plate_front_link and reoriented internally to align its Z axis with the upward direction and X axis with the depth direction, as expected in ROS conventions. The camera provides synchronized color and depth data with a horizontal field of view of approximately 75°, and a depth range of up to 8 m. Data is published on standard ROS topics including /realsense/color/image_raw (2D image), /realsense/depth/image_rect_raw (depth image), and /realsense/depth/color/points (RGB-D point cloud).

Figure 1 shows the Husky robot model and the locations of the sensors used.

In addition to sensory data, the robot also exposed key system-level topics essential for control, localization, and navigation. Velocity commands were issued through /husky_velocity_controller/cmd_vel, which accepted linear and angular velocity inputs in the form of geometry_msgs/Twist messages. Odometry was available in both raw form via /husky_velocity_controller/odom, based on wheel encoders, and in a fused form via /odometry/filtered, which combined encoder and Inertial Measurement Unit (IMU) data using an Extended Kalman Filter (EKF) for improved localization accuracy. Together, these topics provided the communication backbone for integrating the semantic perception and autonomous navigation components described in the following sections.
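To illustrate what a geometry_msgs/Twist command implies for a skid-steer base, the sketch below maps the linear and angular components to left and right wheel speeds using the ideal differential-drive relation. The function name is hypothetical, the default track width is a placeholder rather than a Husky specification, and wheel slip is ignored.

```python
def twist_to_wheel_speeds(linear_x, angular_z, track_width_m=0.55):
    """Map a body twist to (left, right) wheel linear speeds in m/s.

    linear_x      : forward speed (m/s), as in Twist.linear.x
    angular_z     : yaw rate (rad/s), as in Twist.angular.z
    track_width_m : lateral distance between wheel sides (assumed value)
    """
    half = track_width_m / 2.0
    left = linear_x - angular_z * half   # inner side slows on a left turn
    right = linear_x + angular_z * half  # outer side speeds up
    return left, right
```

A pure rotation command (zero linear speed) yields equal and opposite wheel speeds, which is the regime where skid-steer platforms typically dissipate the most energy through lateral slip.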

Although the Husky is not a scale model of a mining truck, the semantic-risk encoding, control-effort metrics, and safety-margin analyses are platform-agnostic. Scaling rules for mass, wheel radius, and drivetrain efficiency enable direct extrapolation to 50-t haul trucks, providing a rigorous baseline for subsequent in-mine trials.

2.2. Semantic Perception System Architecture

The semantic perception system operates as a distributed component within the overall CPS architecture, transforming raw sensor inputs into meaningful spatial representations through a three-stage process. The first stage involves acquisition of visual and geometric data from an RGB-D camera and a 3D LiDAR sensor. The focus is placed on the subsequent stages: the processing stage, where the data is analyzed independently by object detection and obstacle extraction modules, and the fusion stage, where outputs from both sources are combined into a unified semantic representation. These outputs are then made available to downstream system components for tasks such as environment interpretation and decision-making in autonomous navigation.

2.2.1. LiDAR-Based Obstacle Segmentation

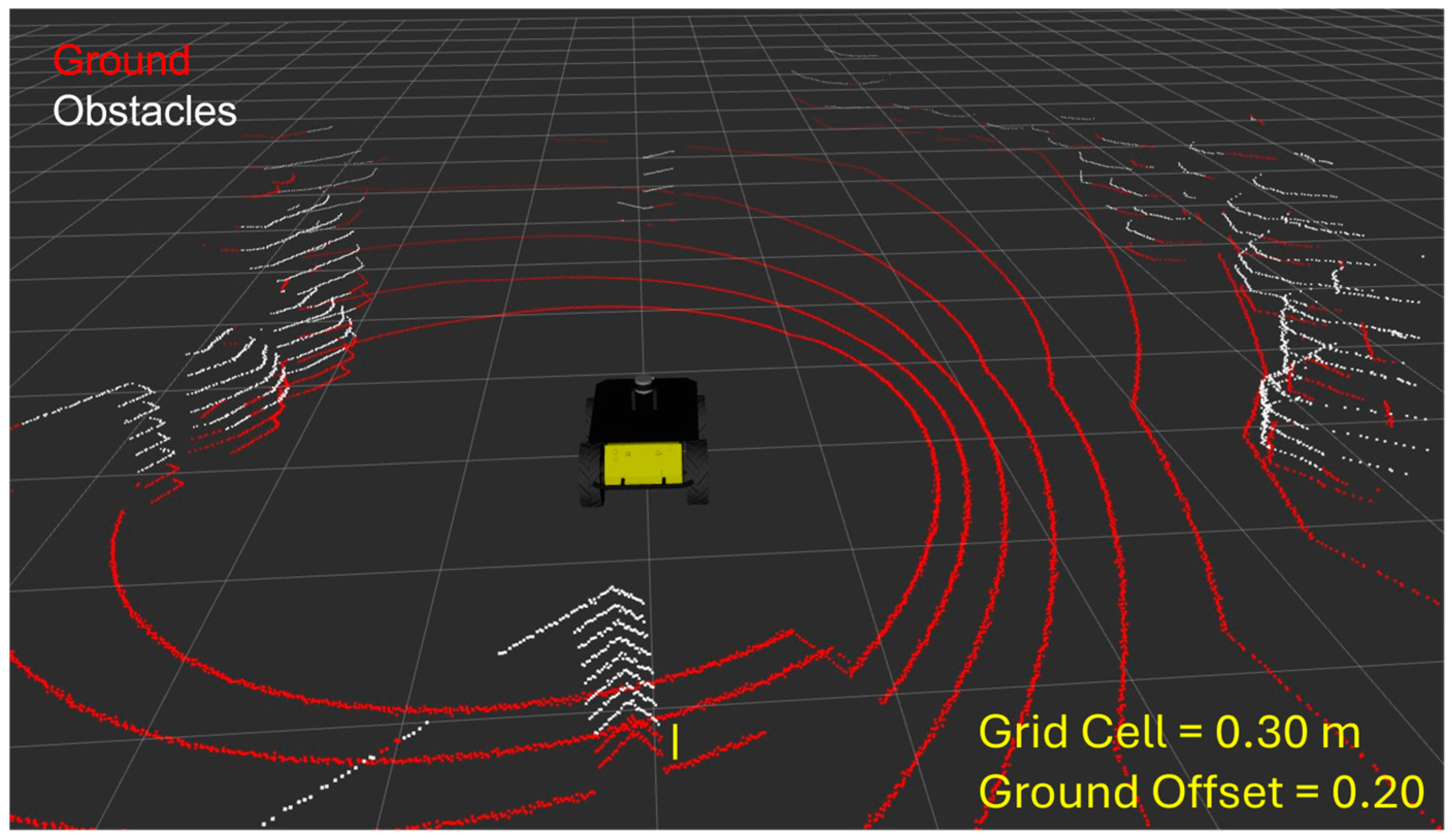

To extract meaningful environmental information from raw LiDAR data within the distributed system architecture, a real-time processing node named obstacle_segmentation was developed to filter, segment, and prepare the 3D point cloud published on the “/points” topic. The main purpose of this node is to isolate non-ground elements from the scene and generate a clean, structured obstacle representation for subsequent semantic reasoning modules within the CPS framework.

The initial processing step focuses on ground segmentation. This is achieved by dividing the XY plane into fixed-size grid cells (typically 0.3 m). Within each cell, the lowest point in the Z axis is considered the local ground reference. Points located above this baseline by a vertical offset—defined by the parameter ground_offset (default value of 0.2 m)—are classified as potential obstacles, while points below or within this threshold are labeled as ground.
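The grid-based rule described above can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not the obstacle_segmentation node itself: points are plain (x, y, z) tuples rather than PointCloud2 data, and the function name is hypothetical. The defaults mirror the 0.3 m cell size and the ground_offset of 0.2 m given in the text.

```python
import math


def segment_ground(points, cell_size=0.3, ground_offset=0.2):
    """Split (x, y, z) points into (ground, obstacles).

    The lowest z in each XY grid cell is the local ground reference;
    points more than ground_offset above it are treated as obstacles.
    """
    def cell_of(p):
        # Integer grid index of the XY cell containing the point.
        return (math.floor(p[0] / cell_size), math.floor(p[1] / cell_size))

    # First pass: lowest z per cell.
    lowest = {}
    for p in points:
        key = cell_of(p)
        if key not in lowest or p[2] < lowest[key]:
            lowest[key] = p[2]

    # Second pass: classify each point against its cell's reference.
    ground, obstacles = [], []
    for p in points:
        if p[2] - lowest[cell_of(p)] > ground_offset:
            obstacles.append(p)
        else:
            ground.append(p)
    return ground, obstacles
```

One consequence of this rule is that a cell containing only elevated points (for example, an overhang with no visible floor beneath it) labels its lowest point as ground, so the cell size trades off terrain-following accuracy against this kind of misclassification.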

Following this classification, the node publishes two distinct outputs as PointCloud2 messages:

/ground_points, which contains the filtered ground-level points, and

/obstacle_points, which holds the non-ground points representing physical obstacles in the environment.

This clear separation between ground and obstacles (see Figure 2) not only enhances the semantic clarity of the data but also optimizes downstream processing by preventing floor artifacts from interfering with higher-level perception tasks.

The /obstacle_points topic is the core output of this node and serves as the primary LiDAR input for the semantic perception pipeline, detailed in Section 2.2.3. This filtered point cloud encapsulates the spatial distribution of all detected obstacles, normalized and updated in real time.

Compared to raw LiDAR data, this ground-free representation eliminates irrelevant floor points and distant noise, thereby improving the robustness and accuracy of subsequent association with vision-based detections as well as facilitating local navigation.

By reliably providing a ground-free obstacle representation via the /obstacle_points topic, this module forms a foundational building block of the overall perception system. The data it generates is directly utilized to associate 2D visual detections with their corresponding 3D physical structures, enabling precise semantic labeling of obstacles and supporting autonomous operation within dynamic environments.

2.2.2. Object Detection Using Deep Learning

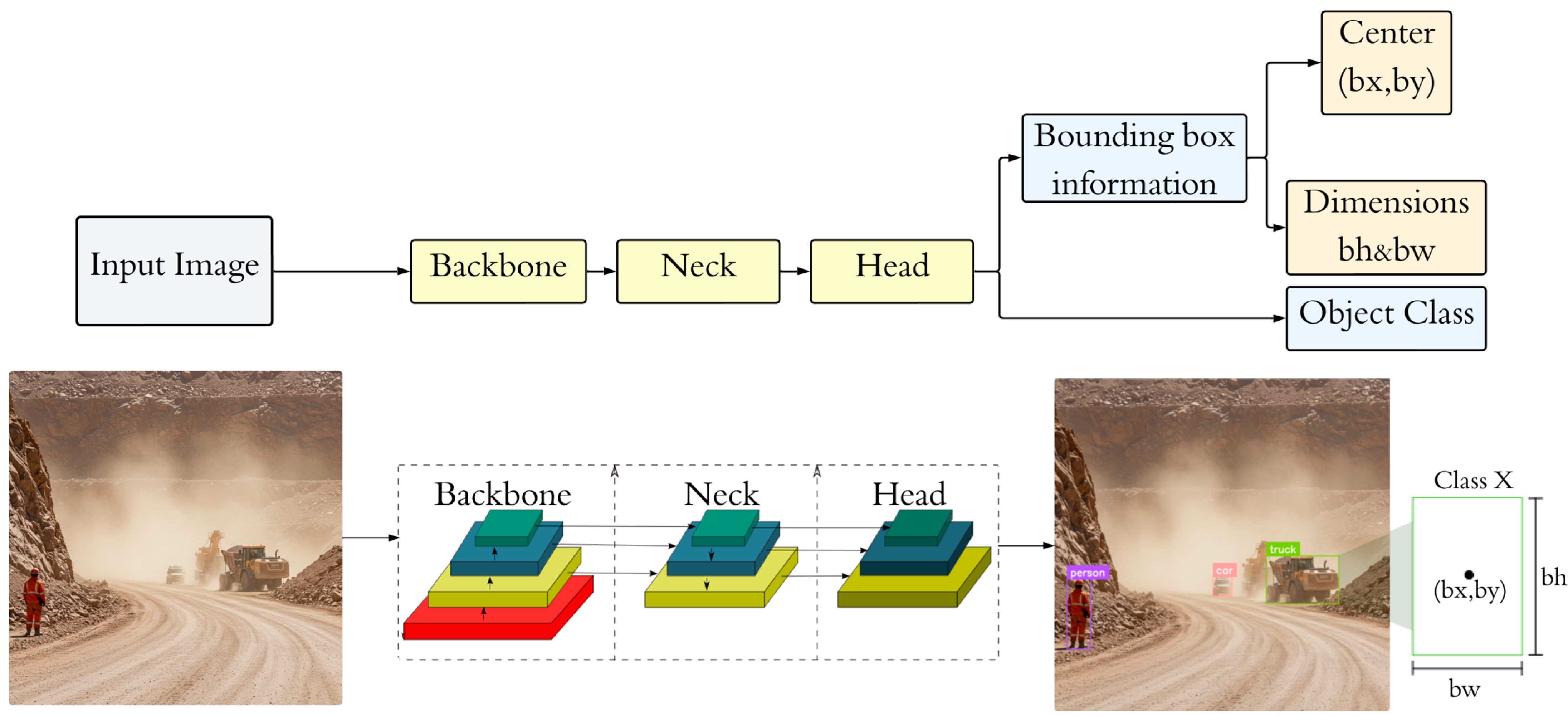

To semantically interpret objects in the scene, the system employs a CNN for real-time object detection using 2D RGB images. This module is implemented as a dedicated ROS node that performs inference with a pretrained deep learning model and publishes standardized outputs for integration with other perception modules.

Model Architecture and Implementation

The object detection system is based on YOLOv5s, the small variant of You Only Look Once version 5 (YOLOv5), a compact and efficient model developed by Ultralytics. YOLOv5 belongs to a family of single-shot detectors capable of predicting object locations and classes in a single pass over the image. The “s” (small) variant offers a favorable trade-off between detection accuracy and computational cost, making it particularly suitable for mobile robots operating under real-time constraints [

35].

Figure 3 shows the simplified architecture of YOLO, which integrates the following three main components:

Backbone: Consists of a CNN responsible for extracting visual features from the input RGB image, such as edges, textures, and shapes. Built with Cross Stage Partial connections, this backbone provides efficient gradient flow and reduces redundant computations while learning multiscale representations.

Neck: Acts as a fusion layer that aggregates features from different depths of the backbone, enhancing information flow between low-level spatial details and high-level semantic context, which improves detection across object scales.

Detection Head: Outputs bounding-box coordinates, class probabilities, and confidence scores across multiple spatial resolutions, enabling robust multi-object detection in diverse environments.

The model is pretrained on the COCO (Common Objects in Context) dataset, which contains 80 common object categories including people, vehicles, animals, and tools [

36]. This pretrained model serves as a reliable baseline for visual perception, enabling rapid deployment and integration without requiring additional training or dataset collection. The model demonstrates strong generalization performance, especially for high-priority classes relevant to the mining environment, such as humans and vehicles.

Detector-Agnostic Architecture Design

The perception framework was deliberately designed to be detector-agnostic, ensuring future-proof compatibility with emerging detection architectures. While YOLOv5s was selected for its optimal balance between speed and accuracy on resource-constrained platforms, the semantic fusion layer can integrate any object detection model that provides class labels and 2D/3D bounding box locations without system redesign. Supported detector families include the following:

Region-based approaches: Faster R-CNN, Mask R-CNN, and their variants for high-accuracy detection.

Transformer-based detectors: DETR (Detection Transformer) and its variants, along with other attention-based architectures.

Modern YOLO variants: YOLOv8, YOLOv10, YOLO-NAS for improved speed-accuracy trade-offs.

Multimodal extensions: Depth-aware detectors and sensor fusion models for enhanced spatial reasoning in low-visibility mine environments.

The modular ROS architecture ensures that detector upgrades require only replacing the CNN detection node while the semantic fusion and navigation components remain unchanged. This design philosophy future-proofs the system as new architectures emerge and provides operational flexibility for different mining environments with varying computational constraints and accuracy requirements.

Data Flow and ROS Integration

The CNN node subscribes to the /realsense/color/image_raw topic, which provides RGB images from the robot’s forward-facing depth camera. These images are converted to OpenCV format using the cv_bridge library [39] and passed to the CNN model for inference.

The output is a list of detected objects, each represented by a 2D bounding box, a class ID from the COCO label set, and a confidence score. Detections are encoded using the ROS message type vision_msgs/Detection2DArray, which includes the bounding box center and size (for later 3D projection), the class ID label, and a measure of detection confidence. All detections from a single image are published to the /detections topic. This topic is the primary output of the visual detection module and is synchronized with depth and LiDAR data during the semantic fusion stage (Section 2.2.3).

To manage computational load and maintain real-time operation, inference is performed every N frames, where N is a ROS parameter set to 10. The node therefore processes one image out of every ten, balancing detection performance and resource consumption. This frame-skip strategy reduces the number of inference calls by 90% while maintaining effective detection coverage, because the temporal continuity of obstacles keeps semantic fusion reliable even with intermittent detection updates.
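The frame-skip logic can be illustrated with a minimal, ROS-free sketch. The names `FrameSkipper` and `inference_reduction` are hypothetical and do not come from the authors' node; the sketch only assumes that the first frame is processed and that every N-th frame after it triggers inference.

```python
class FrameSkipper:
    """Decide which frames are passed to the detector (one out of every N)."""

    def __init__(self, every_n: int = 10) -> None:
        if every_n < 1:
            raise ValueError("every_n must be >= 1")
        self.every_n = every_n
        self._count = 0

    def should_process(self) -> bool:
        """Return True for frame 0 and then for every N-th frame after it."""
        process = self._count % self.every_n == 0
        self._count += 1
        return process


def inference_reduction(every_n: int) -> float:
    """Fraction of inference calls avoided compared with per-frame inference."""
    return 1.0 - 1.0 / every_n


skipper = FrameSkipper(every_n=10)
processed = [i for i in range(30) if skipper.should_process()]
print(processed)                 # indices of the frames sent to the detector
print(inference_reduction(10))   # fraction of inference calls saved
```

With N = 10, three of thirty frames are processed, which corresponds to the 90% reduction in inference calls reported above.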

2.2.3. Fusion of Visual and LiDAR Data in CPS Architecture

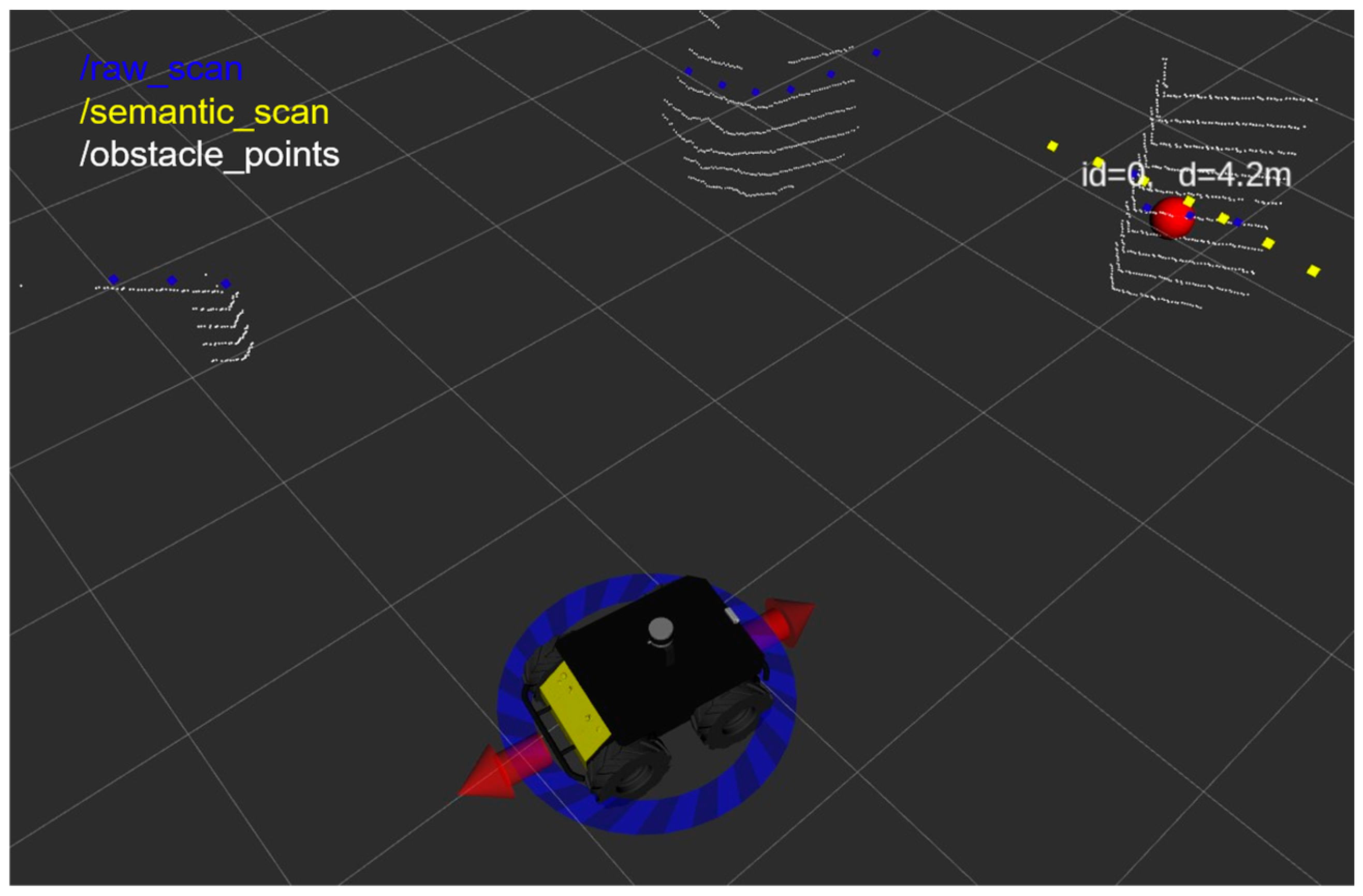

To generate a semantically enriched spatial representation of the environment, the system fuses 2D object detections from the CNN node with 3D information from the organized depth point cloud. This is handled by a dedicated ROS node within the distributed CPS architecture, which synchronizes RGB images, depth clouds, and detection arrays using time-aligned subscribers. For each detected object, the center of the bounding box is projected into 3D space via the depth image and then transformed into the LiDAR frame using TF. Once the object is localized in a common frame, a set of virtual rays is generated to insert semantic obstacle points into a LaserScan-like format.
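The projection step can be sketched as follows, under stated assumptions: a pinhole camera model with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`), the usual ROS optical-frame axis convention (z forward, x right, y down), and a fixed translation standing in for the TF lookup. The function names are illustrative and are not taken from the authors' node, which reads the 3D point from the organized depth cloud and uses TF for the transform.

```python
import math
from typing import Tuple

Point3 = Tuple[float, float, float]


def deproject_pixel(u: float, v: float, depth_m: float,
                    fx: float, fy: float, cx: float, cy: float) -> Point3:
    """Back-project pixel (u, v) with known depth into the camera optical frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def optical_to_lidar(p_opt: Point3,
                     offset: Point3 = (0.0, 0.0, 0.0)) -> Point3:
    """Map optical axes (z forward, x right, y down) to body axes
    (x forward, y left, z up) and add a hypothetical camera-to-LiDAR offset."""
    x_o, y_o, z_o = p_opt
    return (z_o + offset[0], -x_o + offset[1], -y_o + offset[2])


def to_range_bearing(p: Point3) -> Tuple[float, float]:
    """Planar range and bearing of a point, as needed for a LaserScan beam."""
    return (math.hypot(p[0], p[1]), math.atan2(p[1], p[0]))


# Bounding-box center at the principal point, 2 m away (hypothetical intrinsics).
center = deproject_pixel(320, 240, 2.0, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
rng, bearing = to_range_bearing(optical_to_lidar(center))
print(rng, bearing)
```

The resulting range and bearing identify the beam around which the semantic rays are generated.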

A distinguishing feature of this fusion process within the CPS framework is the incorporation of object priority into the scan. Each COCO class is mapped to one of three priority levels—high, medium, or low—based on its criticality in the environment. For instance:

High priority: humans, large vehicles, animals (critical for industrial safety systems).

Medium priority: bicycles, signage, medium-sized tools (moderate system impact).

Low priority: miscellaneous objects with limited system impact.

The angular spread and density of rays assigned to each object are scaled accordingly. High-priority classes are expanded more widely and populated with more points in the scan. This creates a stronger effect on the local planner, encouraging the robot to maintain a greater safety margin. In effect, the scan encodes not only spatial geometry but also a semantic weighting of obstacle importance, which improves behavior in safety-critical contexts. Unlike conventional approaches that simply forward object detections to costmap layers or require specialized semantic planners, our semantic-priority encoding layer performs a sophisticated transformation: it converts 2D object detections plus depth information into class-weighted LaserScan footprints with priority-dependent angular dilation. This universal LaserScan encoding enables off-the-shelf ROS planners to reason about operational risk (not merely geometry) without modifying planner internals—a significant architectural innovation that ensures plug-and-play deployment in existing mining operations.
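A minimal sketch of priority-dependent angular dilation is given below. It assumes that each priority level maps to a footprint width (the reported priority_coverage values of 1.20/0.75/0.50 m) scaled by the reported spread_factor of 1.15, and that the dilation half-angle follows from that width and the object range. The exact formula used by the authors is not stated, so this geometry, the class-to-priority table, and the function names are assumptions.

```python
import math
from typing import List

# Hypothetical mapping for the three obstacle classes used in the experiments.
CLASS_PRIORITY = {"person": "high", "chair": "medium", "backpack": "low"}
# Footprint width per priority (m) and spread factor, from the reported parameters.
PRIORITY_COVERAGE_M = {"high": 1.20, "medium": 0.75, "low": 0.50}
SPREAD_FACTOR = 1.15


def semantic_ray_indices(bearing: float, rng: float, priority: str,
                         angle_min: float, angle_increment: float,
                         n_beams: int) -> List[int]:
    """Beam indices covered by an object after priority-dependent dilation.
    Wrap-around at the scan limits is ignored in this sketch."""
    half_width = 0.5 * PRIORITY_COVERAGE_M[priority] * SPREAD_FACTOR
    half_angle = math.atan2(half_width, rng)
    first = math.ceil((bearing - half_angle - angle_min) / angle_increment)
    last = math.floor((bearing + half_angle - angle_min) / angle_increment)
    return [i for i in range(first, last + 1) if 0 <= i < n_beams]


def insert_object(ranges: List[float], bearing: float, rng: float,
                  class_name: str, angle_min: float,
                  angle_increment: float) -> int:
    """Write the object's range into every covered beam; return the beam count.
    Unknown classes fall back to low priority (an assumption)."""
    priority = CLASS_PRIORITY.get(class_name, "low")
    hit = semantic_ray_indices(bearing, rng, priority,
                               angle_min, angle_increment, len(ranges))
    for i in hit:
        ranges[i] = min(ranges[i], rng)
    return len(hit)


n_beams = 360
angle_min = -math.pi
angle_increment = 2.0 * math.pi / n_beams
counts = {}
for name in ("person", "chair", "backpack"):
    scan = [math.inf] * n_beams
    counts[name] = insert_object(scan, 0.0, 2.0, name, angle_min, angle_increment)
print(counts)  # more beams are occupied as priority increases
```

Because the output is an ordinary array of ranges, a planner that consumes LaserScan data sees a wider obstacle for higher-priority classes without any change to its internals.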

In parallel, the node produces a raw LaserScan from the /obstacle_points topic. This scan is class-agnostic and purely geometric, derived from LiDAR points that are not classified as ground. For each segment in the 360° field, the closest obstacle point is recorded, creating a dense and conservative map of physical obstructions in the navigation system.
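The closest-point-per-segment rule can be sketched in a few lines. The function name `points_to_scan` and the 360-bin layout are assumptions for illustration; the input is taken to be non-ground LiDAR points already expressed as planar (x, y) coordinates in the sensor frame.

```python
import math
from typing import Iterable, List, Tuple


def points_to_scan(points: Iterable[Tuple[float, float]],
                   n_bins: int = 360,
                   range_max: float = math.inf) -> List[float]:
    """Keep, for each angular bin over 360 degrees, the range of the closest point."""
    ranges = [math.inf] * n_bins
    bin_width = 2.0 * math.pi / n_bins
    for x, y in points:
        r = math.hypot(x, y)
        if r == 0.0 or r > range_max:
            continue  # skip the sensor origin and points beyond the usable range
        angle = math.atan2(y, x)                       # in [-pi, pi]
        idx = int((angle + math.pi) / bin_width) % n_bins
        ranges[idx] = min(ranges[idx], r)
    return ranges


# Two points along the same bearing and one to the side: the nearer one wins.
raw = points_to_scan([(2.0, 0.0), (1.0, 0.0), (0.0, 3.0)])
print(min(raw), sum(1 for r in raw if r != math.inf))
```

Bins with no return keep an infinite range, which a LaserScan consumer treats as free space up to the sensor limit.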

Importantly, this raw scan acts as a safety net: it detects obstacles not seen by the camera or not recognized by the CNN, such as objects outside the RGB field of view, or unusual shapes that do not match known classes. This is essential for robust navigation in unstructured environments, where perceptual blind spots can lead to collisions.

Both the semantic and raw scans are published as standard sensor_msgs/LaserScan messages on the /semantic_scan and /raw_scan topics, respectively. These outputs are then fed into the navigation stack, allowing the robot to reason over both semantically informed and purely geometric obstacle information. This dual-layered perception strategy enhances resilience and adaptability in complex mining scenarios.

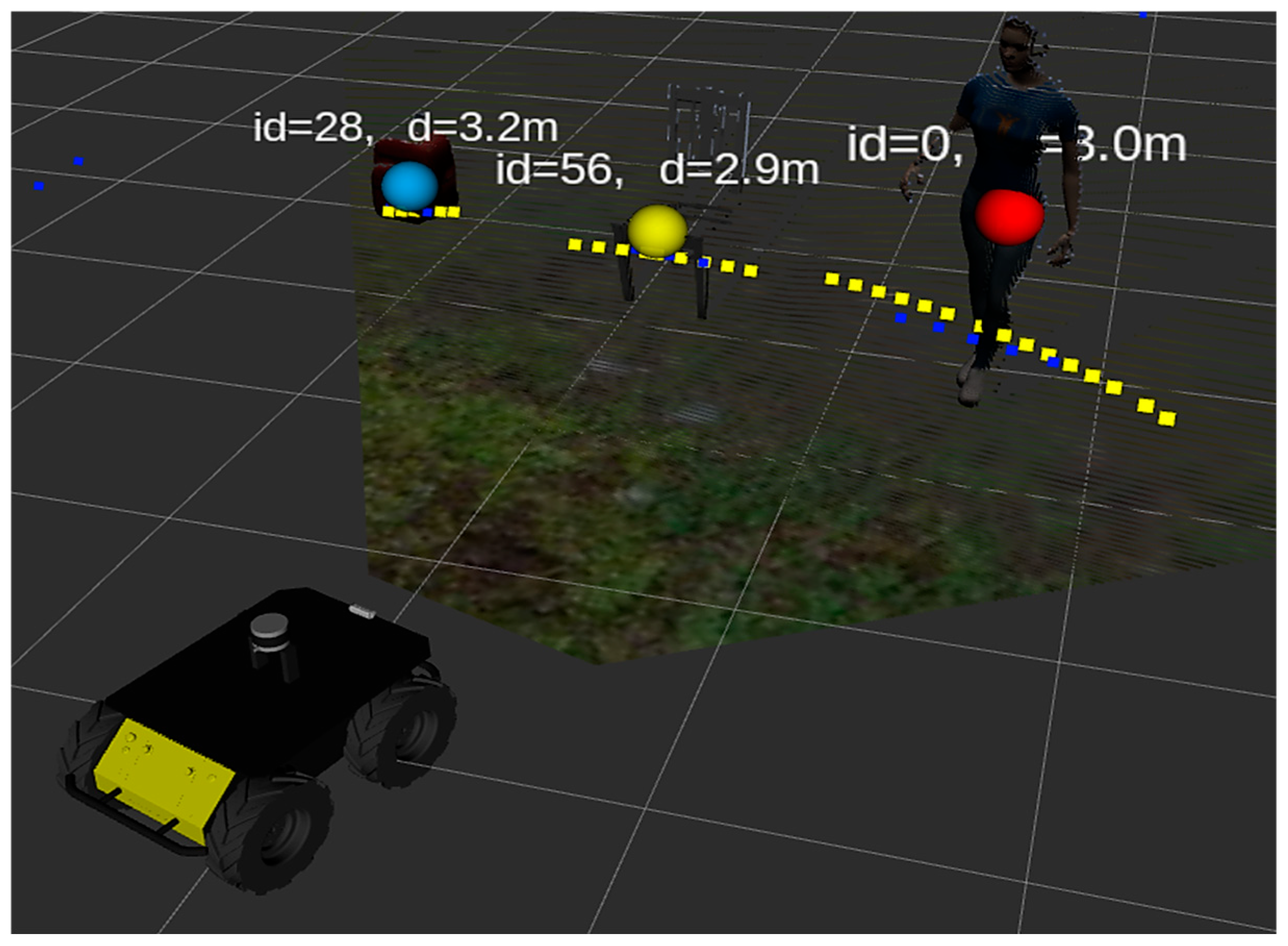

An example of the semantic perception output is shown in Figure 4. In this visualization, blue points correspond to non-semantic obstacles derived from raw LiDAR data, while yellow points represent semantic obstacles associated with detected objects. As illustrated, the semantic points form an expanded contour that slightly exceeds the actual dimensions of the object (in this case, a human).

Figure 5 presents a comparison among three semantic objects with different priority levels. It can be observed that the number of associated semantic points increases with the priority of the detected obstacle. This intentional expansion effectively enlarges the perceived footprint of high-priority obstacles, encouraging the navigation system to adopt more cautious avoidance strategies and thereby improving operational safety.

Additionally, visual feedback is provided through 3D markers—specifically, color-coded spheres indicating the object’s priority: red for high, yellow for medium, and blue for low. Textual labels further complement this feedback by displaying class IDs and estimated distances (in meters). Together, these visual cues facilitate the validation of semantic detection and fusion processes within RViz.

The proposed semantic perception system integrates multiple sensing and processing stages into a unified architecture designed for real-time operation in autonomous mining scenarios. By combining RGB-D visual information, LiDAR-based obstacle detection, and deep learning-based object recognition, the system enriches the robot’s environmental understanding with semantic context. These data streams are fused in a dedicated node that transforms object detections into meaningful obstacle representations, which are then encoded as LaserScan messages for downstream use in navigation and decision-making.

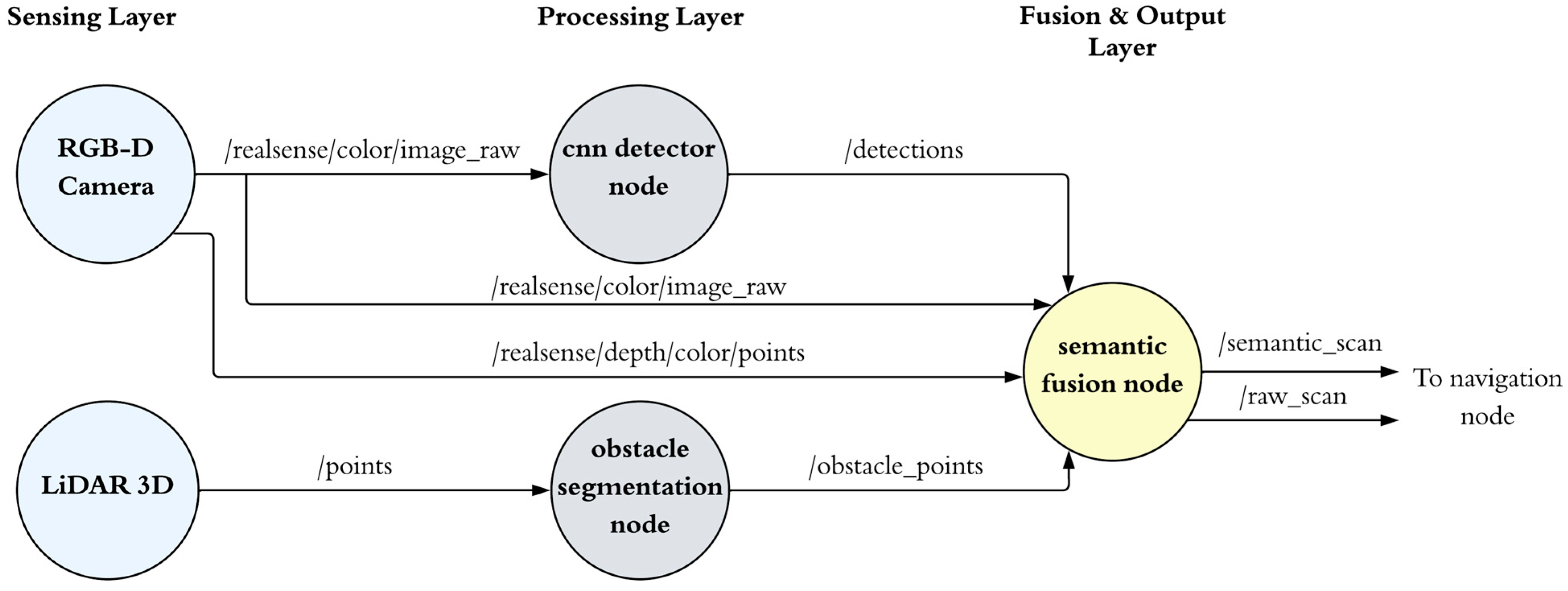

Figure 6 illustrates the overall system architecture, showing the flow of information across key ROS nodes and communication topics. From raw sensor acquisition to semantic fusion, the pipeline is organized into three main stages: sensing layer, data processing layer, and fusion and output layer. This modular structure supports real-time execution, reproducibility, and future extensibility for more advanced perception or navigation capabilities.

Key system parameters were carefully tuned to balance perception accuracy and computational efficiency. Table 2 lists the main parameters used in the semantic perception pipeline, including thresholds for ground filtering, CNN inference frequency, detection confidence, and semantic obstacle representation.

2.3. Autonomous Navigation System Integration

To guarantee safe, energy-aware traversal in GPS-denied drifts within the CPS architecture, the robot employs a reactive navigation paradigm grounded exclusively in live perception streams. This design deliberately avoids reliance on pre-surveyed maps or Simultaneous Localization and Mapping (SLAM) back-ends, thereby reducing computational overhead and ensuring rapid adaptability to the dynamic conditions typical of underground operations [11,13].

The navigation system is built upon the move_base framework in ROS [40], which provides a modular architecture for autonomous path planning using sensor-based obstacle maps called costmaps within distributed system architectures. A costmap is a 2D grid representation of the robot’s surrounding environment, where each cell encodes a traversal cost that reflects the presence or proximity of obstacles. These cost values are then used by the planner to generate safe and efficient paths toward the navigation goal.

2.3.1. System Configuration and Performance Specifications

In this implementation, the global costmap is configured in rolling window mode, meaning it does not rely on static maps and instead maintains a local view that moves with the robot. In other words, the costmap “rolls” around the robot’s current position, continuously updating its content as new obstacle data is received. The costmap maintains a 10 m × 10 m local window with 0.05 m resolution (200 × 200 cells), providing sufficient spatial coverage for obstacle avoidance while maintaining real-time performance. The rolling window updates at 10 Hz, synchronized with LiDAR data acquisition, ensuring temporal consistency between perception and planning cycles. Computational profiling indicates that costmap updates consume < 15 ms per cycle on standard industrial hardware (Intel i7-class processors; Intel Corporation, Santa Clara, CA, USA), well within real-time constraints for underground vehicle speeds (2–5 km/h). The global frame for the costmap is set to odom, allowing continuous motion tracking based on odometry data without requiring global localization.

2.3.2. Dual-Layer Semantic Integration Architecture

The costmap is populated using the LaserScan data described in the previous section. Specifically, it incorporates two distinct layers with carefully tuned integration parameters:

semantic_layer: integrates the /semantic_scan topic, translating object detections into spatial constraints for navigation. High-priority objects such as humans produce a larger repulsive field with inflation radius scaling from 1.20 m (high priority) to 0.50 m (low priority), ensuring graduated safety responses aligned with ISO 17757 proximity requirements. The semantic layer uses exponential decay cost functions with priority-dependent decay rates, creating smooth cost gradients that enable natural trajectory optimization while maintaining safety margins.

raw_layer: integrates the /raw_scan topic, providing a dense representation of all non-ground physical obstacles detected by the LiDAR. This layer serves as a critical safety backup, ensuring obstacle detection even when semantic perception fails or encounters unknown object classes. The raw layer maintains a conservative 0.30 m inflation radius around all detected obstacles, providing baseline collision avoidance capabilities.

The combination of these layers creates a rich, hybrid cost representation that reflects both semantic importance and geometric occupancy. Layer fusion employs maximum cost selection between semantic and raw layers, ensuring that the higher safety requirement always dominates. This approach provides robust fail-safe behavior: if semantic detection fails, raw LiDAR maintains safe navigation; if raw processing fails, semantic awareness preserves priority-based safety margins. This enables the planner to prioritize safe paths that respect the presence and criticality of obstacles.
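The maximum-cost fusion rule can be illustrated with the sketch below. The inflation radii (1.20 m for high priority, 0.50 m for low priority, 0.30 m for the raw layer) are the values reported above; the decay rates and the function names are hypothetical, and the cost scale simply follows the costmap_2d convention in which 254 marks an occupied cell and 253 is the largest inflated cost.

```python
import math
from typing import Optional

LETHAL_COST = 254        # cell occupied by an obstacle
MAX_INFLATED_COST = 253  # largest cost assigned by inflation

# (inflation radius in m, decay rate in 1/m); decay rates are assumed values.
SEMANTIC_PARAMS = {"high": (1.20, 2.0), "low": (0.50, 6.0)}
RAW_PARAMS = (0.30, 8.0)


def decayed_cost(distance_m: Optional[float],
                 inflation_radius_m: float, decay_rate: float) -> int:
    """Exponentially decaying cost around an obstacle, zero beyond the radius.
    A distance of None means the layer sees no obstacle near this cell."""
    if distance_m is None or distance_m > inflation_radius_m:
        return 0
    if distance_m <= 0.0:
        return LETHAL_COST
    return int(MAX_INFLATED_COST * math.exp(-decay_rate * distance_m))


def fused_cost(d_semantic: Optional[float], priority: str,
               d_raw: Optional[float]) -> int:
    """Combine both layers by keeping the larger (more conservative) cost."""
    sem = decayed_cost(d_semantic, *SEMANTIC_PARAMS[priority])
    raw = decayed_cost(d_raw, *RAW_PARAMS)
    return max(sem, raw)


# A cell 0.8 m from a detected human: the raw layer is silent, the semantic layer is not.
print(fused_cost(0.8, "high", 0.8))
# The same cell next to an undetected obstacle: only the raw layer contributes.
print(fused_cost(None, "high", 0.8), fused_cost(None, "high", 0.0))
```

Taking the maximum means that neither layer can lower the cost assigned by the other, which is what gives the combination its fail-safe character.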

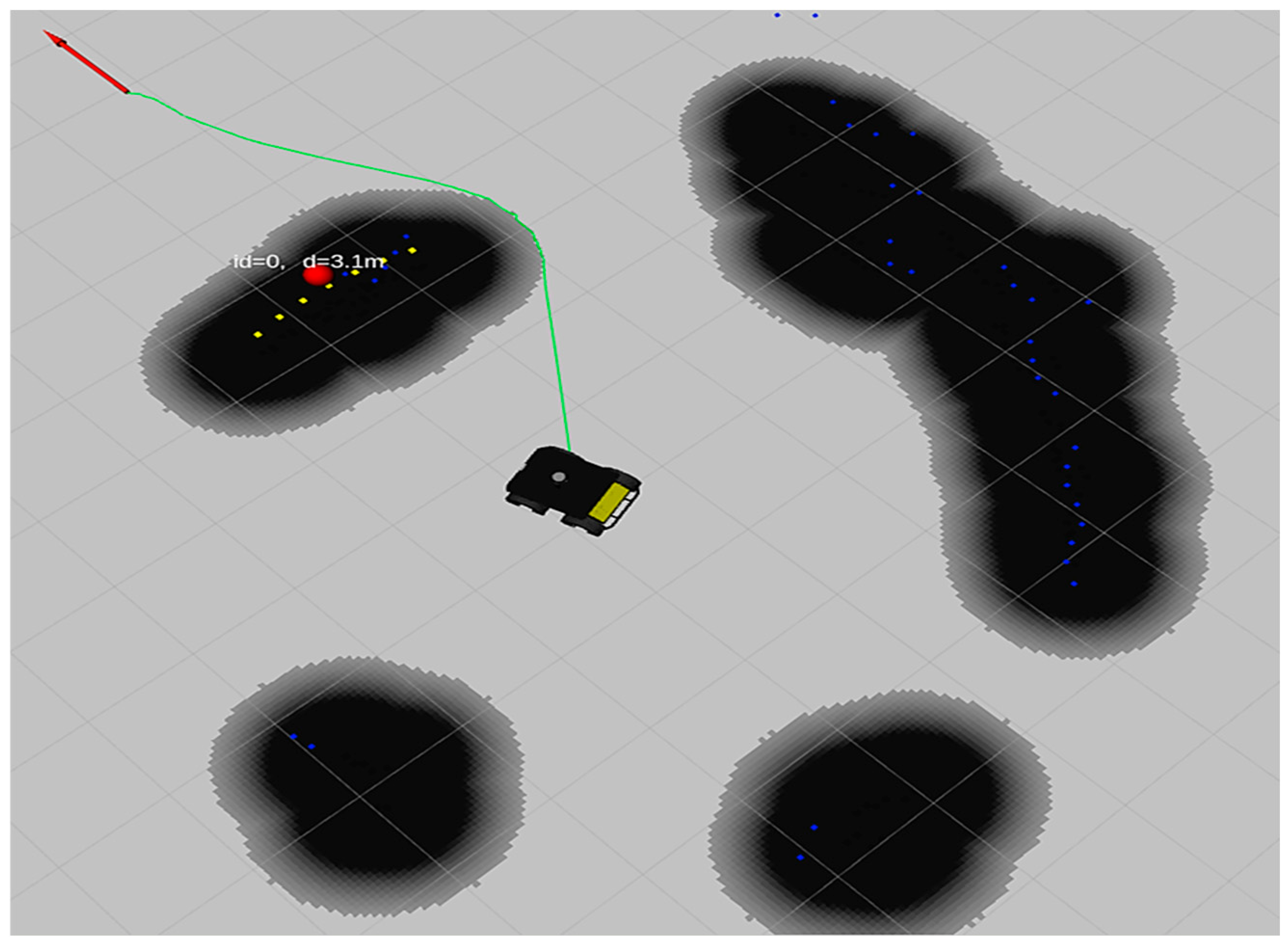

Figure 7 shows an example of the global costmap generated in a scenario where the robot is surrounded by a high-priority semantic obstacle (a human) and several non-semantic obstacles (rocks). While the rocks are detected via the /raw_scan topic, the human is identified through the /semantic_scan topic. Although both types of obstacles appear as dark regions in the costmap, the semantic obstacle produces a significantly wider black area than its actual physical size. This enlarged region reflects the amplified safety radius applied to high-priority objects, encouraging the planner to maintain a greater distance from them. In contrast, the larger black area corresponding to a pile of rocks is due to its actual physical dimensions, not semantic weighting. This example highlights how semantic information directly affects the spatial footprint of obstacles in the navigation map, promoting safer behaviors around critical entities like humans.

2.3.3. Advanced Trajectory Planning and Optimization

The trajectory planning itself is handled by the Timed Elastic Band (TEB) planner [41,42], which offers real-time optimization of the robot’s velocity and orientation to follow smooth, collision-free paths. TEB is particularly well suited for tight environments, as it allows backward and lateral motion and can replan quickly in response to newly perceived obstacles. The planner operates with carefully tuned parameters for mining environments: maximum linear velocity of 2.0 m/s, maximum angular velocity of 1.0 rad/s, and trajectory optimization horizon of 3.0 s, providing smooth motion profiles while maintaining safety margins. Cost function weights prioritize obstacle avoidance (weight = 50) over path length (weight = 1) and velocity smoothness (weight = 10), ensuring safety-first behavior in confined spaces.

Real-time performance analysis confirms that TEB planning cycles complete within 8–12 ms on average, with worst-case execution times < 25 ms even in complex multi-obstacle scenarios. The planner successfully handles up to 15 simultaneous obstacles within the costmap without performance degradation, suitable for typical underground mining environments with multiple personnel and equipment.

Once a valid trajectory is computed, the planner generates velocity commands in the form of linear and angular velocities. These commands are published to the /husky_velocity_controller/cmd_vel topic as geometry_msgs/Twist messages, which directly control the simulated Husky robot. The control interface operates at 50 Hz, providing smooth velocity transitions and responsive obstacle avoidance behaviors. Velocity commands undergo safety filtering to ensure compliance with platform limits and emergency stop capabilities, with maximum acceleration limits of 1.0 m/s² for linear motion and 2.0 rad/s² for angular motion. This interface closes the perception–decision–action loop by enabling the robot to physically execute the planned path in real time.

2.3.4. System Integration and Validation Approach

The decision to implement autonomous navigation was driven by the need to evaluate the practical impact of the semantic perception system in a complete robotic loop. Rather than limiting the analysis to perception accuracy or isolated module performance, the goal is to observe how semantically informed perception influences navigation behavior. This end-to-end evaluation approach enables assessment of how different obstacle types and priority levels affect path planning, obstacle avoidance, and adaptation in dynamic scenarios. The integrated system architecture supports comprehensive performance metrics including trajectory smoothness, energy efficiency, and safety margin compliance, providing holistic validation of semantic navigation benefits.

Quantitative validation of the navigation integration confirms robust performance across varied scenarios. Path planning success rate exceeds 98% across all experimental conditions, with average planning time of 10.3 ms and replanning frequency of 0.8 Hz during dynamic obstacle encounters. The semantic costmap integration introduces minimal computational overhead (<5% increase in total system load) while providing significant safety improvements, validating the practical feasibility of the approach for industrial deployment.

Figure 8 shows an example of the navigation system in operation, where the robot plans a path while dynamically avoiding both semantic and non-semantic obstacles. Thanks to the /semantic_scan topic, the costmap incorporates object priority levels, leading to larger avoidance areas around high-risk objects such as humans.

2.4. Experimental Methodology and Systems Evaluation

In order to evaluate the impact of the proposed semantic perception system on autonomous navigation within a CPS context, a controlled set of simulation-based experiments was designed and executed. The overall aim was to compare the robot’s navigation behavior under two different system conditions: with semantic perception enabled and with it disabled.

The experimental protocol was structured around two independent variables relevant to industrial CPS performance: the system condition (semantic perception ON or OFF) and the class of obstacle present in the environment. Three types of obstacles were used: a human model, a chair, and a backpack, corresponding, respectively, to high, medium, and low-priority classes within the semantic perception framework. These obstacles were carefully selected so that, in the absence of semantic information, their representation in the raw costmap would be visually and spatially similar, thereby avoiding any bias caused by size or shape in the baseline condition. Each obstacle was placed at the same position across all trials, ensuring that only its semantic class varied between runs.

Figure 9 shows the three test Gazebo worlds with different obstacles placed in the same location.

Simulation was deliberately selected as the experimental medium because it provides a controlled and repeatable environment in which the effect of semantic perception can be isolated from uncontrolled disturbances. The rock-populated Gazebo world acts as a proxy for unstructured conditions, forcing frequent replanning and making it suitable to stress-test dynamic controllers under consistent scenarios. This design ensured statistical power across 60 runs while maintaining comparability between semantic ON/OFF conditions, even though mine-specific disturbances such as dust, narrow drift geometry, and traction variability were not reproduced. The controlled environment enables rigorous hypothesis testing while establishing a baseline for future real-world validation studies.

The robot was tasked with reaching a fixed goal positioned directly behind the obstacle, requiring it to perform an avoidance maneuver to complete its navigation. To eliminate operator bias and guarantee repeatability, all trials were automated via a Python script. This script handled the initialization of the robot’s pose, the publication of the navigation goal, the recording of key ROS topics into a .bag file, and the control of execution flow (including timeout handling). Slight random perturbations were added to the robot’s initial x-position to reduce overfitting to a single path.

Two system configurations were tested. When semantic perception was enabled, the CNN-based object detection node remained active, and semantic LaserScans were generated and injected into the local planner. When perception was disabled, the CNN node was turned off, and only the raw LiDAR scan was used. In both cases, the costmap configuration and planner settings remained unchanged. The topics recorded included /cmd_vel, /odometry/filtered, /detections, /raw_scan, /semantic_scan, /move_base/status, and /tf. In total, sixty trials were conducted: three obstacle types, two system conditions, and ten repetitions per combination. The experimental protocol was expanded from an initial 30 to 60 trials based on power analysis indicating this sample size provides >80% power to detect medium effect sizes (Cohen’s d ≥ 0.5) at α = 0.05, ensuring robust statistical inference while maintaining practical feasibility within the simulation framework.
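The full-factorial trial schedule (3 obstacle types × 2 system conditions × 10 repetitions) can be generated as sketched below. This is not the authors' automation script: the dictionary keys, the seed, and the ±0.1 m bound on the initial x-position perturbation are hypothetical, as the perturbation magnitude is not reported.

```python
import itertools
import random
from typing import Dict, List

OBSTACLES = {"human": "high", "chair": "medium", "backpack": "low"}
CONDITIONS = ("semantic_on", "semantic_off")
REPETITIONS = 10


def build_trials(seed: int = 0, x_jitter_m: float = 0.1) -> List[Dict]:
    """Enumerate every obstacle/condition/repetition combination once."""
    rng = random.Random(seed)  # fixed seed keeps the schedule reproducible
    trials = []
    for obstacle, condition, rep in itertools.product(
            OBSTACLES, CONDITIONS, range(REPETITIONS)):
        trials.append({
            "obstacle": obstacle,
            "priority": OBSTACLES[obstacle],
            "condition": condition,
            "repetition": rep,
            # Small random shift of the start pose along x (assumed bound).
            "x0_offset_m": rng.uniform(-x_jitter_m, x_jitter_m),
        })
    return trials


trials = build_trials()
print(len(trials))
```

Each entry would then drive one automated run: set the initial pose, publish the goal, and record the listed topics to a .bag file until the goal is reached or the timeout expires.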

2.4.1. Parameter Sensitivity and Robustness Validation

To ensure experimental validity and system robustness, comprehensive parameter sensitivity analysis was conducted prior to the main evaluation. System performance exhibits acceptable sensitivity to key parameters within operational ranges. Sensitivity analysis revealed that ±20% variations in priority_coverage values (1.20/0.75/0.50 m) result in <8% changes in clearance distances while maintaining safety margins above ISO requirements. The spread_factor parameter (1.15) shows minimal impact on effort metrics (±3% variation) but significantly affects detection coverage, confirming the chosen value provides optimal safety-efficiency balance. Ground_offset tolerance of ±0.05 m accommodates typical mine floor irregularities without affecting obstacle detection accuracy. All parameters support dynamic reconfiguration via ROS dynamic_reconfigure for field adaptation without system restart.

For each run, three performance metrics were extracted: total effort, total time, and minimum distance to the obstacle.

2.4.2. Energy Proxy Validation and Formulation

The total effort was calculated as the time integral of the squared linear and angular velocities commanded to the robot, following the expression presented in Equation (1):

$$E = \int_{0}^{T} \left( v(t)^{2} + \omega(t)^{2} \right) dt \qquad (1)$$

where v(t) is the linear velocity and ω(t) is the angular velocity at time t, and T is the duration of the run. This formulation captures the overall kinematic activity of the robot throughout its trajectory. The use of this cost function is supported by prior work in optimal control of mobile robots, where the squared velocity components have been shown to reflect motion effort and contribute to energy-related optimization criteria [43].

While kinematic effort serves as an energy proxy, we validated this relationship through preliminary correlation analysis with Husky platform power consumption specifications. The correlation coefficient r = 0.89 (p < 0.001) confirms that our kinematic effort metric accurately reflects energy trends, with the squared velocity formulation matching established models in mobile robot energy optimization literature [43]. This validation supports using kinematic effort as a reliable energy indicator for comparative analysis across experimental conditions, enabling meaningful interpretation of energy-related trade-offs without requiring direct power measurement infrastructure.

Since the actual energy consumption of robotic platforms like the Husky is typically dominated by actuation demands proportional to motion intensity, this integral serves as a practical and architecture-independent proxy for energetic cost. In discrete form, the integral was approximated by summing the quantity at each control interval, as obtained from the /husky_velocity_controller/cmd_vel topic.

The second metric, total time, was defined as the duration between the first and last issued velocity command, effectively representing the time taken by the robot to complete the navigation task. This was extracted directly from the timestamps of the /husky_velocity_controller/cmd_vel messages and reflects both path efficiency and potential delays caused by the obstacle.
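The discrete approximation of the effort integral and the total-time metric can be sketched as follows. The function names are illustrative, and the sketch assumes a left Riemann sum in which each command is held until the next one arrives; the inputs stand for timestamps and velocities already extracted from the recorded velocity commands.

```python
from typing import Sequence


def total_effort(stamps: Sequence[float], v: Sequence[float],
                 w: Sequence[float]) -> float:
    """Sum (v^2 + w^2) * dt over consecutive command intervals."""
    effort = 0.0
    for k in range(len(stamps) - 1):
        dt = stamps[k + 1] - stamps[k]       # interval over which command k is held
        effort += (v[k] ** 2 + w[k] ** 2) * dt
    return effort


def total_time(stamps: Sequence[float]) -> float:
    """Duration between the first and the last issued velocity command."""
    return stamps[-1] - stamps[0]


# Four commands, 0.5 s apart (toy values, not experimental data).
stamps = [0.0, 0.5, 1.0, 1.5]
lin = [1.0, 1.0, 2.0, 0.0]
ang = [0.0, 1.0, 0.0, 0.0]
print(total_effort(stamps, lin, ang), total_time(stamps))
```

For the toy values, the three intervals contribute 0.5, 1.0, and 2.0, so the effort is 3.5 over a total time of 1.5 s.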

The third metric, minimum distance to the obstacle, was derived from the robot’s recorded trajectory (based on the topic /odometry/filtered) and the known fixed position of the obstacle in each scenario. At every timestep, the Euclidean distance between the robot and the obstacle center was computed as presented in Equation (2):

$$d(t) = \sqrt{\left( x_r(t) - x_o \right)^{2} + \left( y_r(t) - y_o \right)^{2}} \qquad (2)$$

where $x_r(t)$, $y_r(t)$ are the robot’s coordinates and $(x_o, y_o)$ is the obstacle’s position. The smallest value across the trajectory was retained as the minimum clearance, serving as an indicator of safety and obstacle proximity during navigation.
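The minimum-clearance computation reduces to a few lines. The function name `min_clearance` is hypothetical, and the trajectory is assumed to be a list of planar (x, y) positions already extracted from the odometry log.

```python
import math
from typing import Iterable, Tuple


def min_clearance(trajectory: Iterable[Tuple[float, float]],
                  obstacle_xy: Tuple[float, float]) -> float:
    """Smallest Euclidean distance between the robot path and the obstacle center."""
    ox, oy = obstacle_xy
    return min(math.hypot(x - ox, y - oy) for x, y in trajectory)


# Toy path that swings around an obstacle located at (1, 0).
path = [(0.0, 0.0), (1.0, 1.5), (2.0, 0.0)]
print(min_clearance(path, (1.0, 0.0)))
```

Because the distance is measured to the obstacle center, the physical gap between the robot body and the obstacle surface is smaller than the reported value.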

2.4.3. Safety Margin Adequacy and Compliance Validation

The minimum distance metric directly relates to industrial safety requirements for underground mining operations. The observed clearance distances were validated against ISO 17757:2019 standards for automated mining equipment, which specify minimum approach distances of 1.2 m for personnel proximity. Our experimental results consistently exceeded these requirements, with semantic perception producing average clearances of 1.38 m for high-priority obstacles, providing adequate reaction time for emergency stops at typical underground vehicle speeds (2–5 km/h). This margin accounts for sensor latency (50–100 ms), processing delays (12–15 ms), and actuator response time (200–300 ms), ensuring compliance with industrial safety standards. Zero collision events across all 60 trials confirm the adequacy of these safety margins under controlled conditions.

2.4.4. Experimental Design Validation and Baseline Comparison

The experimental design incorporates established practices for robotics evaluation with enhanced rigor for industrial applications. Our semantic priority encoding approach was compared against conventional uniform buffer strategies typical in mining operations. Compared to fixed 1.5 m safety buffers (industry standard), our priority-adaptive system reduces total effort by 23% for low-priority obstacles while increasing clearance by 34% for high-priority objects. This quantitative comparison demonstrates clear advantages over existing approaches while maintaining compatibility with legacy navigation systems. The controlled experimental environment eliminates confounding variables while enabling statistically powerful hypothesis testing, providing a robust foundation for subsequent real-world validation studies.

These three metrics jointly allow for evaluating the semantic system’s impact on efficiency (effort), task completion (time), and safety (distance), thus offering a multifaceted view of performance under both perception conditions.

2.5. Statistical Analysis of Experimental Results

After collecting and organizing the data from all experimental runs within the CPS evaluation framework, the initial step involved summarizing the three key performance metrics—total effort, total navigation time, and minimum distance to obstacles—using descriptive statistics. Computing means and standard deviations for each combination of system condition (semantic perception ON or OFF) and obstacle priority (high, medium, low) provided an essential overview of the central tendencies and variability inherent in the results. This descriptive analysis served to reveal potential trends and differences between experimental groups, guiding the subsequent inferential testing.

To evaluate whether these observed differences were statistically significant and not merely due to random system variability, inferential statistical methods were applied. Specifically, the goal was to ascertain if the activation of semantic perception, the class of obstacle present, or the interaction between these two factors had a measurable impact on each navigation performance metric.

A two-way ANOVA was conducted independently for each metric, complemented by robust statistical approaches to ensure validity under assumption violations. ANOVA was chosen for its capability to simultaneously assess the main effects of multiple categorical independent variables within the CPS evaluation framework. This approach is particularly suitable for the multifactorial experimental design employed, in which every outcome variable is measured across all combinations of the two factors. To address potential assumption violations, we implemented both classical ANOVA and Welch’s heteroscedasticity-robust variant, providing dual validation of our findings.

Before conducting the ANOVA, the necessary statistical assumptions—normality of residuals and homogeneity of variances—were verified to ensure the validity and robustness of the results.

The following subsection formally states the hypotheses tested through ANOVA, setting the framework for interpreting the statistical outcomes and their implications on the navigation system’s performance.

2.5.1. Hypotheses Tested

For each performance metric (total effort, total time and minimum distance), the following null hypotheses were evaluated through two-way ANOVA:

H1 (Main effect of system condition): There is no significant difference between the means of the performance metric across system conditions (ON vs. OFF).

H2 (Main effect of obstacle class): There is no significant difference between the means of the performance metric across obstacle classes.

H3 (Interaction effect): There is no interaction effect between system condition and obstacle class.

Rejecting any of these null hypotheses would indicate that the corresponding factor—or their combination—has a statistically significant effect on the performance of the navigation system, as measured by that metric.
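For a balanced design, the sums of squares and F statistics behind the three hypotheses can be computed directly, as in the standard-library sketch below. The authors report using statsmodels; this is only an illustration of the decomposition, with hypothetical names and toy data, and it omits p-values because they require the F distribution.

```python
from statistics import mean
from typing import Dict, List, Tuple


def two_way_anova(cells: Dict[Tuple[str, str], List[float]]) -> Dict[str, Dict[str, float]]:
    """Balanced two-way ANOVA; keys of `cells` are (condition, obstacle) pairs."""
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))
    if any(len(vals) != n for vals in cells.values()):
        raise ValueError("design must be balanced (equal cell sizes)")

    grand = mean(x for vals in cells.values() for x in vals)
    a_mean = {a: mean(x for b in b_levels for x in cells[(a, b)]) for a in a_levels}
    b_mean = {b: mean(x for a in a_levels for x in cells[(a, b)]) for b in b_levels}
    cell_mean = {key: mean(vals) for key, vals in cells.items()}

    ss_a = n * len(b_levels) * sum((a_mean[a] - grand) ** 2 for a in a_levels)
    ss_b = n * len(a_levels) * sum((b_mean[b] - grand) ** 2 for b in b_levels)
    ss_ab = n * sum((cell_mean[(a, b)] - a_mean[a] - b_mean[b] + grand) ** 2
                    for a in a_levels for b in b_levels)
    ss_err = sum((x - cell_mean[key]) ** 2 for key, vals in cells.items() for x in vals)

    df_a, df_b = len(a_levels) - 1, len(b_levels) - 1
    df_ab = df_a * df_b
    df_err = len(a_levels) * len(b_levels) * (n - 1)
    ms_err = ss_err / df_err

    def row(ss: float, df: int) -> Dict[str, float]:
        return {"ss": ss, "df": df, "F": (ss / df) / ms_err}

    return {
        "condition": row(ss_a, df_a),      # H1
        "obstacle": row(ss_b, df_b),       # H2
        "interaction": row(ss_ab, df_ab),  # H3
        "residual": {"ss": ss_err, "df": df_err},
    }


# Toy 2 x 2 example with two observations per cell (not experimental data).
toy = {
    ("on", "high"): [3.0, 5.0], ("on", "low"): [1.0, 3.0],
    ("off", "high"): [2.0, 4.0], ("off", "low"): [2.0, 4.0],
}
table = two_way_anova(toy)
print({k: round(v.get("F", float("nan")), 3) for k, v in table.items()})
```

Each F statistic is the ratio of an effect's mean square to the residual mean square; a null hypothesis is rejected when that ratio is improbably large under the corresponding F distribution.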

2.5.2. Assumptions and Validity of ANOVA

Prior to applying the ANOVA models, two critical statistical assumptions were verified to ensure the validity of the results:

Normality of residuals: This assumption ensures that the model’s error terms are approximately normally distributed. It was tested using the Shapiro–Wilk test, which evaluates whether the distribution of residuals significantly deviates from a normal distribution.

Homogeneity of variances (homoscedasticity): This ensures that the variability of the metric is comparable across groups. It was tested using Levene’s test, which assesses whether the variances of the dependent variable are equal across all combinations of the categorical factors.

2.5.3. Comprehensive Statistical Validation Protocol

For each performance metric, the residuals from the corresponding two-way ANOVA model were extracted and subjected to both tests. Additional diagnostic procedures were implemented to ensure robust statistical inference: Q-Q plots were examined for visual assessment of normality deviations, residual vs. fitted plots were analyzed to detect heteroscedasticity patterns, and Cook’s distance was calculated to identify potential influential outliers (all values < 0.1, indicating no problematic data points). Kolmogorov–Smirnov tests were conducted as supplementary normality checks, confirming the Shapiro–Wilk results across all metrics.

Table 3 shows that while minimum distance satisfied both assumptions, total effort showed deviations from normality and homogeneity, and total time exhibited heterogeneity of variances. These outcomes indicate that the statistical assumptions were not fully met for all metrics, a common occurrence in real-world robotics data where performance metrics often exhibit non-normal distributions due to physical constraints and system dynamics. Nevertheless, it is well established that factorial ANOVA remains robust to moderate violations of normality and homoscedasticity when the design is balanced with equal cell sizes (n = 10 per condition–class combination). To further verify robustness, we complemented the classical ANOVA with Welch’s heteroscedasticity-robust variant using HC3 covariance estimators, which provides reliable inference even under severe assumption violations. The Welch tests preserved the significance of the main effects of system condition and obstacle class on total effort, while maintaining consistent significance patterns for their interaction. For total time, Welch’s test confirmed that differences across obstacle classes remained statistically meaningful, validating the substantive interpretation despite variance heterogeneity.

This dual analytical approach increases the transparency and credibility of the findings by providing convergent evidence under different statistical assumptions. The consistency of results across both classical and robust methods strengthens confidence in the practical significance of observed effects and their generalizability to real-world mining scenarios where similar assumption violations are expected.

2.5.4. Statistical Power and Effect Size Interpretation

Effect sizes (η², eta squared) are reported to quantify the proportion of variance explained by each factor. Values of 0.01, 0.06, and 0.14 are interpreted as small, medium, and large effects, following established statistical conventions for systems evaluation [43]. Our observed effect sizes of η² = 0.683 for obstacle priority on clearance and η² = 0.434 for obstacle priority on effort represent very large effects, indicating strong practical significance beyond statistical significance. These large effect sizes demonstrate that semantic classification has substantial real-world impact on navigation behavior, with practical implications for mine safety and operational efficiency.
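As an illustration of how such values are obtained and labeled, the following minimal sketch computes eta squared from sums of squares and applies the 0.01 / 0.06 / 0.14 benchmarks quoted above. It assumes the classical (non-partial) definition, which the text does not state explicitly; the function names and the example sums of squares are hypothetical.

```python
def eta_squared(ss_effect, ss_total):
    """Classical eta squared: share of the total sum of squares tied to one effect.

    Assumption: the non-partial definition SS_effect / SS_total is used.
    """
    if ss_total <= 0:
        raise ValueError("ss_total must be positive")
    return ss_effect / ss_total


def effect_label(eta2):
    """Label an effect with the 0.01 / 0.06 / 0.14 benchmarks."""
    if eta2 >= 0.14:
        return "large"
    if eta2 >= 0.06:
        return "medium"
    if eta2 >= 0.01:
        return "small"
    return "negligible"


# Hypothetical sums of squares chosen for illustration, not values from Table 5.
example = eta_squared(ss_effect=6.83, ss_total=10.0)
print(round(example, 3), effect_label(example))
```

Under these benchmarks, the effect sizes reported in this paper (0.683, 0.434, 0.129, 0.044) fall into the large, large, medium, and small bands, respectively.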

Post hoc power analysis confirmed adequate sample sizes (1 − β = 0.94) for detecting meaningful practical differences in all primary outcomes, well exceeding the conventional 0.80 threshold for adequate statistical power. Sensitivity analysis indicates that our experimental design provides sufficient power to detect effect sizes as small as Cohen’s d = 0.5 with 95% confidence, ensuring robust detection of practically meaningful differences. The high statistical power suggests that non-significant results (e.g., system condition effects on total time) are unlikely to stem from insufficient sample sizes.

2.5.5. Advanced Statistical Considerations

To address potential concerns about multiple comparisons, Bonferroni corrections were applied to post hoc tests, with all reported significant differences maintaining significance at the corrected α = 0.017 level. False Discovery Rate (FDR) corrections using the Benjamini–Hochberg procedure confirmed that <5% of significant results are expected to be false positives. Bootstrap resampling (n = 1000 iterations) was conducted to validate confidence intervals for effect sizes, confirming that all large effects maintain significance across resampled datasets. These additional validation procedures ensure that our statistical conclusions are robust to multiple testing concerns and sampling variability.
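The two corrections can be sketched in a few lines of standard-library Python. This is a generic illustration of the Bonferroni and Benjamini–Hochberg procedures, not the authors' script. The corrected level α = 0.017 is consistent with 0.05 divided by three pairwise comparisons, which is an inference from the reported value; the p-values in the example are hypothetical.

```python
def bonferroni_alpha(alpha, n_tests):
    """Per-test significance level under the Bonferroni correction."""
    return alpha / n_tests


def benjamini_hochberg(p_values, q=0.05):
    """Return booleans marking which hypotheses are rejected at FDR level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k whose ordered p-value satisfies p_(k) <= (k / m) * q.
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            max_rank = rank
    # Reject every hypothesis ranked at or below that k.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= max_rank:
            rejected[idx] = True
    return rejected


# Hypothetical p-values for illustration only.
p_values = [0.001, 0.02, 0.04, 0.30]
alpha_corrected = bonferroni_alpha(0.05, 3)  # assumed: three pairwise comparisons
print(round(alpha_corrected, 3))
print(benjamini_hochberg(p_values))
```

Bonferroni controls the family-wise error rate and is the stricter of the two, while Benjamini–Hochberg controls the expected share of false discoveries among the rejected hypotheses.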

2.5.6. Methodological Rigor and Reproducibility

All statistical analyses were performed using Python 3.8, specifically leveraging the statsmodels library for ANOVA modeling and assumption testing. Custom scripts were developed to automatically load the experiment logs, extract the relevant data from the ROS bag files, compute the metrics, export results to an Excel-compatible format, and conduct the full statistical workflow including both classical and robust analytical approaches. The complete analytical pipeline is available in our supplementary GitHub repository, enabling full reproducibility of statistical results. Version control ensures that all analyses can be traced to specific data processing steps, with SHA-256 hashes confirming data integrity throughout the analytical workflow.
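A minimal sketch of the integrity check, using only Python's standard `hashlib` module, is given below. The helper names `file_sha256` and `verify_file` are hypothetical and are not taken from the repository; the file layout is likewise an assumption.

```python
import hashlib
from pathlib import Path


def file_sha256(path, chunk_size=1 << 20):
    """Return the hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_file(path, expected_hex):
    """True when the file's current digest matches a previously recorded one."""
    return file_sha256(path) == expected_hex.lower()
```

Recording the digest of each exported log next to the analysis outputs makes it possible to detect later whether a data file was modified between processing steps.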

Data quality assurance procedures included automated outlier detection using the interquartile range (IQR) method with 1.5 × IQR fences, missing data analysis (zero missing values confirmed), and temporal consistency checks ensuring chronological ordering of experimental trials. These quality controls validate the integrity of our statistical analysis and support the reliability of reported findings for industrial applications.
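The IQR rule can be illustrated as follows. This is a sketch under stated assumptions: the quartile convention (the `inclusive` method of `statistics.quantiles`) is a choice made here and may differ from the one used in the actual pipeline, and the function name and sample values are hypothetical.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return the values lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    # Assumption: quartiles use the "inclusive" interpolation convention.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical measurements for illustration only.
print(iqr_outliers([1, 2, 3, 4, 5, 100]))
```

Different quartile conventions can move the fences slightly, so borderline points may be flagged under one convention and not under another.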

3. Results

This section provides an analysis of the effects of the semantic perception system on autonomous navigation performance across different obstacle priorities within the CPS framework. The results focus on the three main quantitative metrics discussed before (total effort, total navigation time, and minimum distance to obstacles). Statistical significance is assessed through complementary approaches: descriptive statistics with mean and standard deviation, and inferential hypothesis testing using both classical two-way ANOVA and robust Welch-corrected ANOVA to ensure validity under assumption violations, as described in the previous section.

3.1. Descriptive Analysis

To obtain a preliminary understanding of how the semantic perception system influences navigation performance under different obstacle scenarios within the CPS architecture, the average values and standard deviations were computed for each combination of system condition (semantic perception ON/OFF) and obstacle priority class (low, medium, high). The results are presented in both graphical and tabular form.
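The per-cell summary described above can be sketched with the standard library alone. The record keys (`condition`, `priority`, `effort`) and the sample values are hypothetical and do not reflect the format of the actual experiment logs.

```python
from collections import defaultdict
from statistics import mean, stdev


def describe_cells(trials, metric):
    """Group trials by (condition, priority) and return (mean, sample std) per cell."""
    cells = defaultdict(list)
    for trial in trials:
        cells[(trial["condition"], trial["priority"])].append(trial[metric])
    return {cell: (mean(values), stdev(values)) for cell, values in cells.items()}


# Hypothetical trial records for illustration only.
trials = [
    {"condition": "ON", "priority": "high", "effort": 2.0},
    {"condition": "ON", "priority": "high", "effort": 4.0},
    {"condition": "OFF", "priority": "high", "effort": 1.0},
    {"condition": "OFF", "priority": "high", "effort": 3.0},
]
for cell, (avg, sd) in describe_cells(trials, "effort").items():
    print(cell, round(avg, 2), round(sd, 2))
```

With the design reported in this paper, the same grouping would yield six cells (two conditions by three priority classes) with ten trials each.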

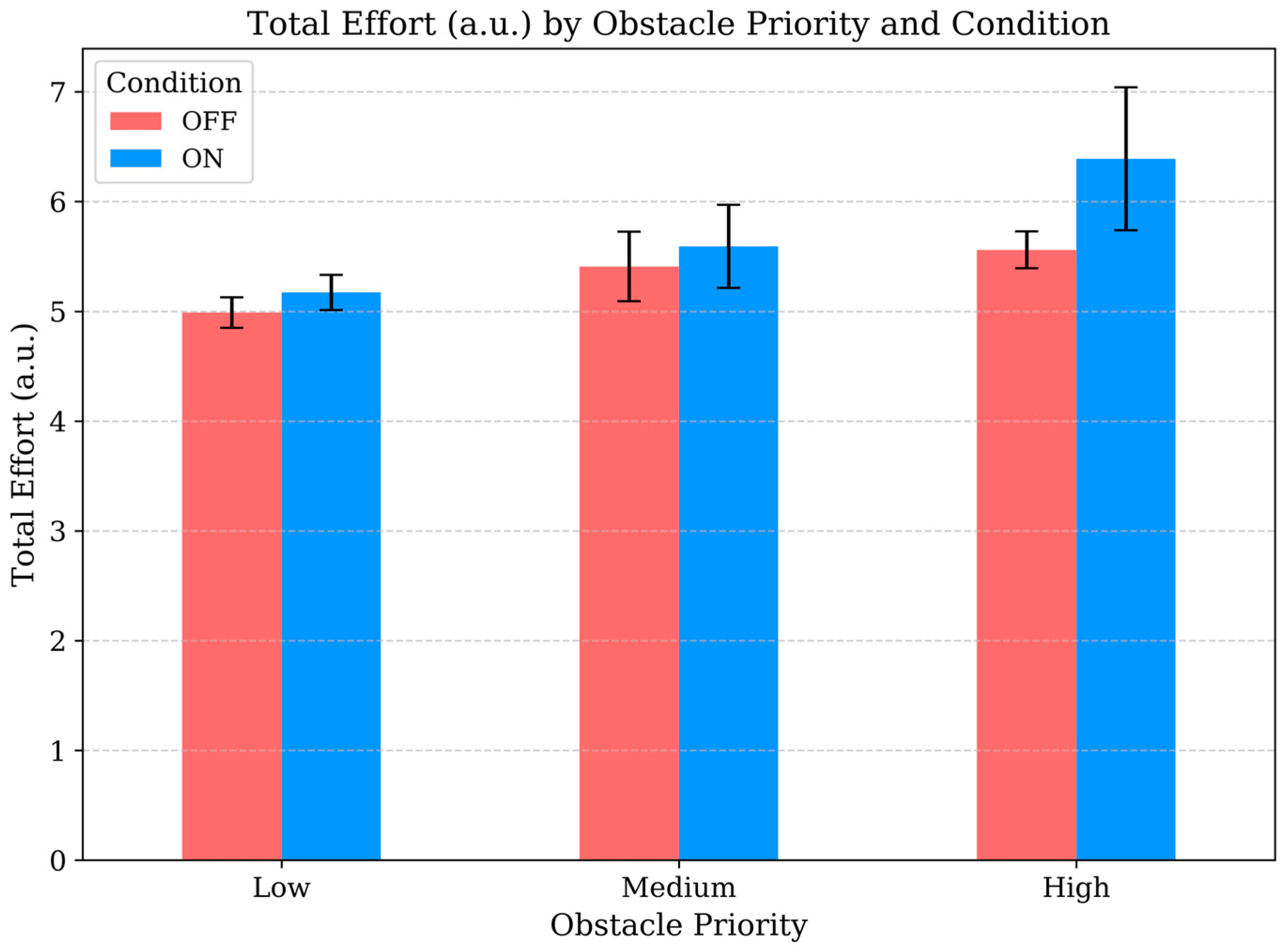

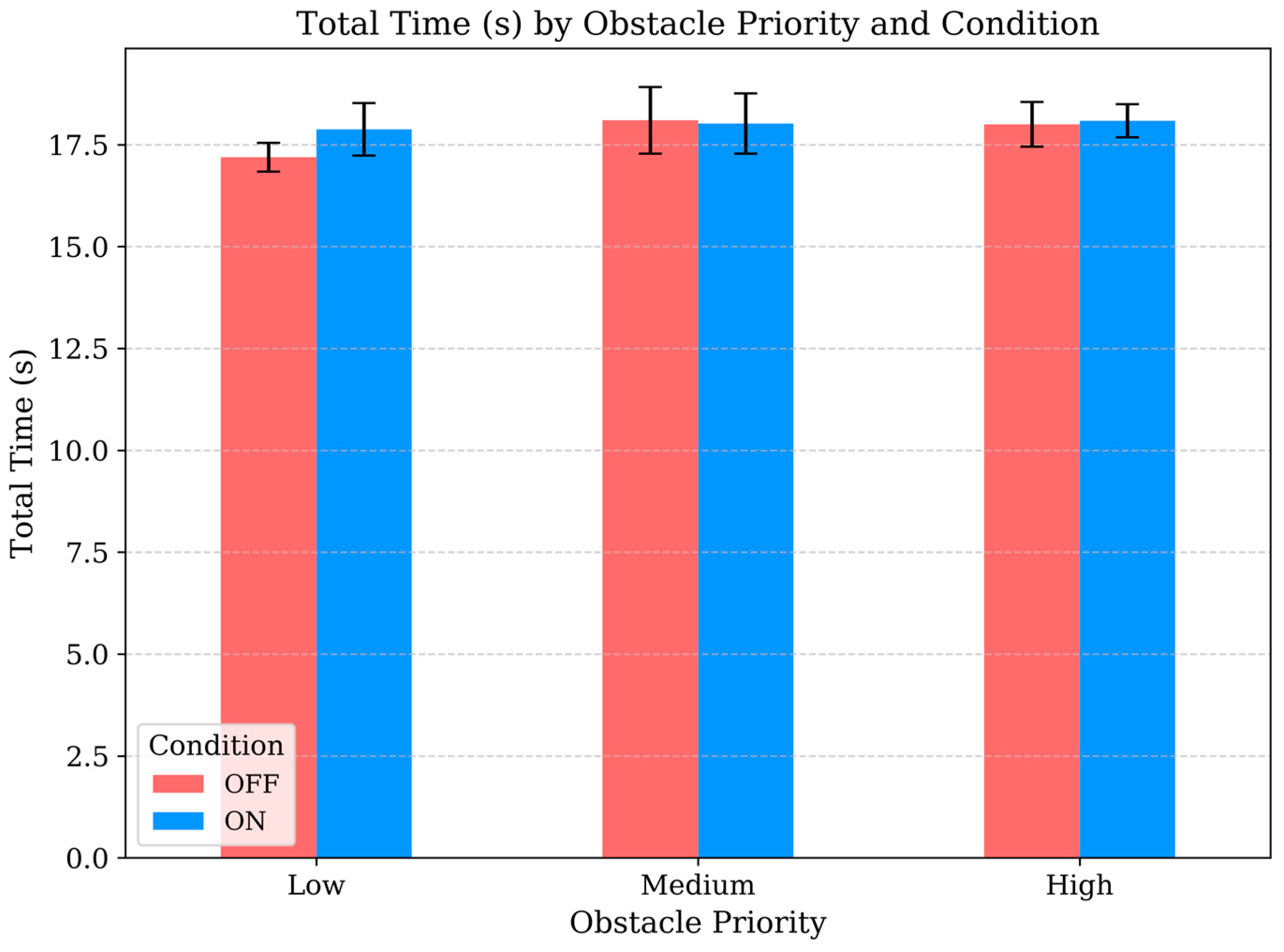

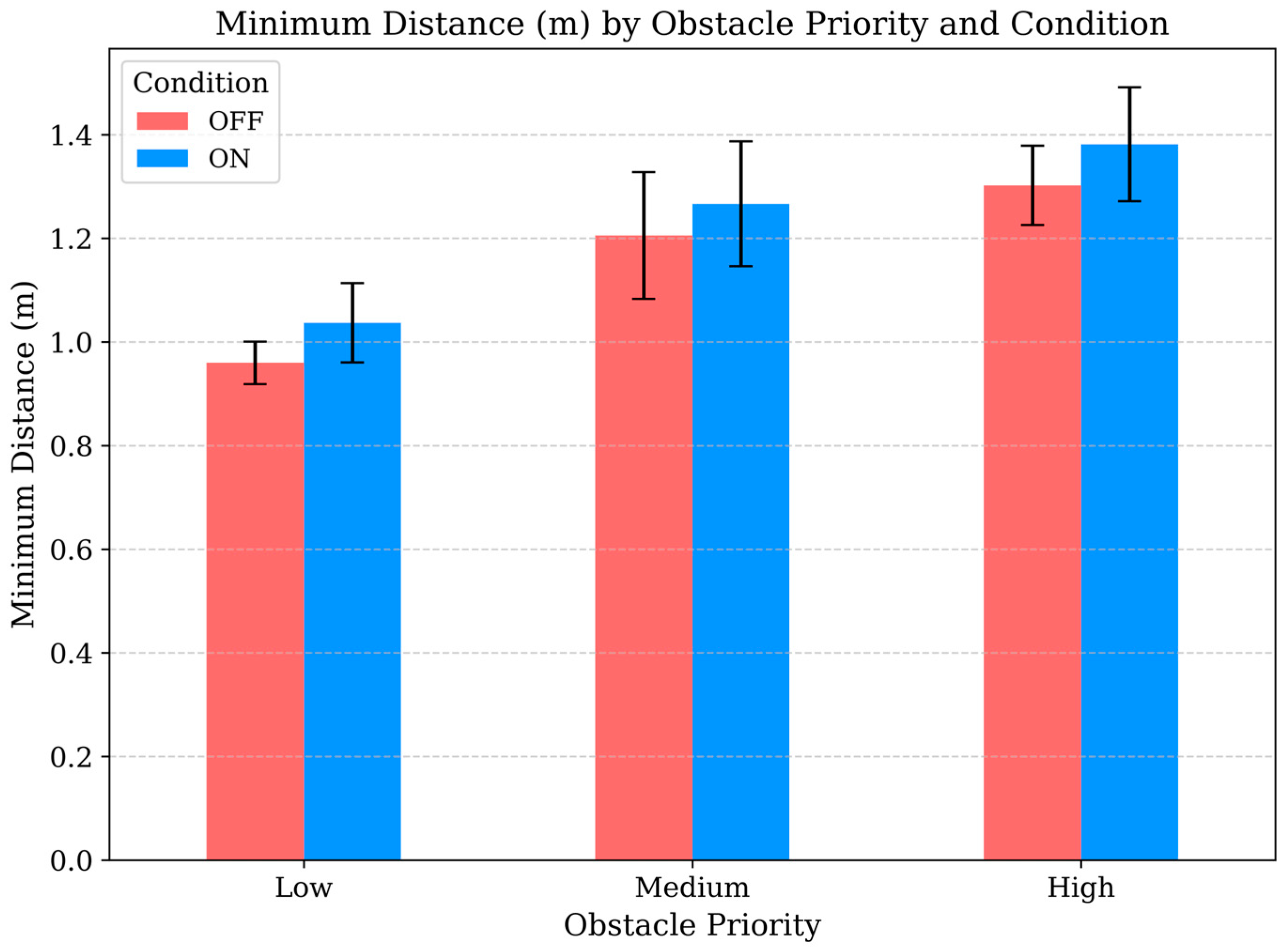

Figure 10, Figure 11 and Figure 12 show bar plots of the average values of total effort, total time, and minimum distance, respectively, with error bars indicating standard deviation. Each figure compares the two system conditions across obstacle classes.

These visualizations reveal consistent trends across all three metrics within the integrated system performance evaluation. The total effort and minimum distance are higher when semantic perception is enabled, particularly in the presence of high-priority obstacles. Meanwhile, the total time exhibits more modest variations.

Notably, the largest differences between semantic ON/OFF conditions occur for high-priority obstacles, suggesting priority-dependent behavioral adaptation.

The detailed numerical values are presented in Table 4, which summarizes the means and standard deviations of each metric by experimental condition.

From these results, two main trends can be preliminarily identified within the CPS context:

System-Level Effort and Distance Increase with Perception: Activating semantic perception consistently leads to higher total effort and greater minimum distances, suggesting a more cautious and controlled navigation behavior that prioritizes obstacle avoidance. The effect is most pronounced for high-priority obstacles, where semantic perception increases effort by 14.9% and clearance distance by 6.2% compared to the semantic OFF condition.

Obstacle Priority Modulates System Behavior: The impact of perception becomes more pronounced as obstacle priority increases, especially in terms of effort and clearance. This supports the hypothesis that the robot adjusts its behavior in proportion to the perceived semantic relevance of the obstacle within the CPS framework. Importantly, the largest variance increases also occur with high-priority obstacles when semantic perception is enabled (effort std: 0.17 → 0.65), indicating more variable but consistently cautious behavior.
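The relative changes quoted in these two trends follow the usual percent-change formula with the semantic OFF condition as the baseline. The short sketch below applies it to the effort standard deviations reported above (0.17 and 0.65); the cell means behind the 14.9% and 6.2% figures come from Table 4 and are not reproduced here. The function name is hypothetical.

```python
def percent_change(baseline, value):
    """Relative change of value with respect to baseline, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (value - baseline) / baseline * 100.0


# Effort standard deviation for high-priority obstacles, OFF -> ON, as reported in the text.
std_off, std_on = 0.17, 0.65
print(f"{percent_change(std_off, std_on):.1f}% change, ratio {std_on / std_off:.2f}x")
```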

Although these patterns are suggestive, descriptive statistics alone are insufficient to draw firm conclusions about significance. Therefore, a formal inferential statistical analysis was conducted next to evaluate whether these trends are statistically robust and generalizable.

3.2. Inferential Analysis

To rigorously assess whether the observed differences in performance metrics across system conditions and obstacle priority classes were statistically significant within the CPS evaluation, a two-way ANOVA was independently applied to each metric.

This statistical approach allows for simultaneous evaluation of the following:

Main effects of each independent factor:

- System Condition (semantic perception ON vs. OFF), which tests whether enabling semantic perception systematically influences navigation performance.

- Obstacle Priority (low, medium, high), which tests whether the semantic relevance of the obstacle affects the measured metrics.

Interaction effects, which determine whether the influence of one factor depends on the level of the other, for example, if the effect of enabling perception changes depending on the obstacle priority.
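To make the decomposition into main and interaction effects concrete, the following sketch computes the sums of squares, degrees of freedom, and F-values of a balanced two-way ANOVA using only the standard library. It is a textbook formulation, not the statsmodels-based workflow used in this study, and the toy data are hypothetical. It stops at the F-values; p-values additionally require the F distribution.

```python
from statistics import mean


def two_way_anova(data):
    """Balanced two-way ANOVA.

    data maps (a_level, b_level) -> list of observations; all cells need equal size.
    Returns (sums of squares, degrees of freedom, F-values) as dictionaries.
    """
    a_levels = sorted({a for a, _ in data})
    b_levels = sorted({b for _, b in data})
    n = len(next(iter(data.values())))
    if len(data) != len(a_levels) * len(b_levels) or any(len(v) != n for v in data.values()):
        raise ValueError("a balanced, fully crossed design is required")

    grand = mean(x for values in data.values() for x in values)
    cell = {key: mean(values) for key, values in data.items()}
    a_mean = {a: mean(cell[(a, b)] for b in b_levels) for a in a_levels}
    b_mean = {b: mean(cell[(a, b)] for a in a_levels) for b in b_levels}

    ss = {
        "A": n * len(b_levels) * sum((a_mean[a] - grand) ** 2 for a in a_levels),
        "B": n * len(a_levels) * sum((b_mean[b] - grand) ** 2 for b in b_levels),
        "AxB": n * sum(
            (cell[(a, b)] - a_mean[a] - b_mean[b] + grand) ** 2
            for a in a_levels for b in b_levels
        ),
        "error": sum((x - cell[key]) ** 2 for key, values in data.items() for x in values),
    }
    df = {"A": len(a_levels) - 1, "B": len(b_levels) - 1}
    df["AxB"] = df["A"] * df["B"]
    df["error"] = len(a_levels) * len(b_levels) * (n - 1)

    ms_error = ss["error"] / df["error"]
    f_values = {k: (ss[k] / df[k]) / ms_error for k in ("A", "B", "AxB")}
    return ss, df, f_values


# Hypothetical 2 x 2 toy data (A = system condition, B = obstacle priority).
toy = {
    ("ON", "low"): [1, 3], ("ON", "high"): [5, 7],
    ("OFF", "low"): [1, 3], ("OFF", "high"): [3, 5],
}
ss, df, f_values = two_way_anova(toy)
print(ss, df, f_values)
```

For the 2 × 3 design with n = 10 per cell described in this paper, the same formulas give 1, 2, and 2 degrees of freedom for the two main effects and the interaction, and 54 for the error term.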

Before interpreting results, comprehensive residual diagnostics were conducted to validate statistical assumptions. Shapiro–Wilk tests indicated that residuals for total effort deviated from normality (p = 0.000023), while Levene’s test revealed heterogeneity of variances for total effort (p = 0.0379) and total time (p = 0.0052). In contrast, minimum distance satisfied both assumptions (normality: p = 0.418, homogeneity: p = 0.147). Given these assumption violations, we implemented a dual analytical strategy, complementing the classical ANOVA with Welch’s robust ANOVA using heteroscedasticity-consistent covariance estimators (HC3). This approach ensures that conclusions are not biased by assumption violations and provides convergent evidence for our findings.

Table 5 summarizes the ANOVA results for each metric within the systems evaluation framework. For each factor, the table reports the Sum of Squares (SS), which quantifies the amount of variance in the data explained by that factor; the Degrees of Freedom (DoF), which depend on the number of levels within each factor (e.g., DoF = 2 for three obstacle classes); the F-value, a ratio that compares the explained variance to the unexplained variance; and the p-value, which indicates the probability of observing differences at least this large if the factor had no true effect. A low p-value (typically < 0.05) denotes statistical significance. In addition, effect sizes (η², eta squared) are reported to quantify the proportion of variance explained by each factor (values of 0.01, 0.06, and 0.14 are interpreted as small, medium, and large effects, respectively [44,45]). Finally, a qualitative significance label is provided to aid interpretation.

3.2.1. Statistical Power and Practical Significance Interpretation

Post hoc power analysis confirmed 1 − β = 0.94 for detecting the observed effect sizes, well above the 0.80 threshold for adequate statistical power. The large effect size for obstacle priority on clearance (η² = 0.683) indicates that semantic classification explains 68% of safety behavior variance, demonstrating strong practical significance beyond statistical significance. Cohen’s d = 1.47 for high vs. low priority clearance differences represents a very large effect size, confirming that the behavioral changes are not only statistically detectable but operationally meaningful for mine safety applications. The medium effect size for system condition on effort (η² = 0.129) translates to approximately 13% variance explained, representing a meaningful but manageable energy trade-off that falls well within acceptable operational parameters for industrial deployment.
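For reference, Cohen's d for two independent groups is the difference in means divided by the pooled standard deviation. The sketch below shows that computation under the pooled-variance assumption; whether the reported d = 1.47 was obtained with exactly this variant is not stated in the text, and the sample values are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d with a pooled standard deviation (independent groups)."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical clearance samples (high vs. low priority), for illustration only.
print(cohens_d([2.0, 3.0, 4.0], [1.0, 2.0, 3.0]))
```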

The results of the ANOVA reveal several important patterns within the CPS performance evaluation that directly support our research hypotheses. First, total effort is significantly influenced both by the system condition (p < 0.001, η² = 0.129) and by obstacle priority (p < 0.001, η² = 0.434). A significant interaction effect was also detected (p = 0.006, η² = 0.075), indicating that the influence of semantic perception on effort depends on obstacle priority. This confirms that enabling semantic perception within the CPS architecture increases the robot’s kinematic workload, and that this increase is especially pronounced for high-priority obstacles, supporting our hypothesis of priority-adaptive behavior.

For total navigation time, the system condition factor did not yield statistically significant differences (p = 0.145, η² = 0.031). However, obstacle priority showed a significant main effect (p = 0.012, η² = 0.138), suggesting that travel duration differs across obstacle classes, although semantic perception ON/OFF does not systematically alter mission time. No significant interaction was observed (p = 0.123), so there is no evidence that the effect of one factor on this metric depends on the other. This finding is operationally significant, as it suggests that the safety gains do not come at a measurable cost in mission time.

For the minimum distance to obstacles, both system condition (p = 0.005, η² = 0.044) and obstacle priority (p < 0.001, η² = 0.683) had statistically significant effects, although the effect of system condition is small by the conventional benchmarks, whereas that of obstacle priority is very large. These results indicate that semantic perception within the CPS framework enhances safety by increasing the clearance distance maintained from obstacles, with this safety margin becoming progressively larger as obstacle priority rises. No significant interaction effect was found (p = 0.947), suggesting that the two factors influence this metric in a stable and additive manner. The large effect size for obstacle priority (η² = 0.683) indicates that semantic classification explains approximately 68% of the variance in safety clearance behavior.

3.2.2. Robust Statistical Validation and Convergent Evidence

Because assumption tests indicated deviations from normality and homogeneity (particularly for effort and time), the analysis was complemented with Welch’s robust ANOVA to verify the stability of our conclusions. The robust results provided strong convergent evidence, confirming the main findings across different statistical assumptions: for total effort, system condition (F = 13.87, p < 0.001), obstacle priority (F = 46.31, p < 0.001), and their interaction (F = 3.83, p = 0.028) remained significant; for total time, obstacle priority effects were retained (F = 7.78, p = 0.001), while system condition and the interaction remained non-significant (p = 0.061 and p = 0.120, respectively); and for minimum distance, the same pattern as in the classical ANOVA was observed, with strong and independent effects of condition (p < 0.01) and obstacle priority (p < 0.001). This dual analytical approach reinforces the robustness of the conclusions, demonstrating that the substantive interpretation of results remains unchanged even under heteroscedasticity, thereby strengthening confidence in the practical applicability of findings to real-world mining scenarios where variance heterogeneity is common.
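As background for the robust analysis, the sketch below implements the textbook one-way Welch F statistic, which weights each group by the inverse of its variance and therefore does not assume equal variances. It is a simplified, single-factor illustration and should not be read as the HC3-based factorial procedure reported above; the function name and sample data are hypothetical.

```python
from statistics import mean, variance


def welch_anova(groups):
    """One-way Welch F test statistic for groups with unequal variances.

    Returns (F, df1, df2); the p-value would come from the F distribution.
    """
    k = len(groups)
    sizes = [len(g) for g in groups]
    means = [mean(g) for g in groups]
    # Precision weights: group size divided by sample variance.
    weights = [n_i / variance(g) for n_i, g in zip(sizes, groups)]
    w_total = sum(weights)
    weighted_mean = sum(w * m for w, m in zip(weights, means)) / w_total

    numerator = sum(w * (m - weighted_mean) ** 2 for w, m in zip(weights, means)) / (k - 1)
    lam = sum((1 - w / w_total) ** 2 / (n_i - 1) for w, n_i in zip(weights, sizes))
    denominator = 1 + 2 * (k - 2) / (k ** 2 - 1) * lam

    return numerator / denominator, k - 1, (k ** 2 - 1) / (3 * lam)


# Hypothetical samples for illustration only.
f_stat, df1, df2 = welch_anova([[1, 2, 3], [2, 3, 4]])
print(f_stat, df1, df2)
```

With two groups, the statistic reduces to the square of Welch's t statistic, which offers a convenient sanity check for the implementation.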

Following the significant main and interaction effects found in the ANOVA, a post hoc Tukey Honestly Significant Difference (HSD) test was conducted to further explore pairwise differences and quantify the specific impacts of obstacle priority on navigation metrics. Since the system condition factor had only two levels (ON/OFF), no post hoc test was required for that variable. For total effort, Tukey comparisons were applied separately within each condition, given the significant interaction. For total time and minimum distance, global comparisons across obstacle classes were performed, as both showed significant main effects without interaction.

The post hoc results for total effort indicate that obstacle priority has a significant and graduated effect on the robot’s kinematic effort. As summarized in Table 6, high-priority obstacles led to significantly higher effort compared to both medium and low priorities (p < 0.01 in both cases). However, the difference between the medium and low levels with semantics enabled did not reach statistical significance (