Performance and Efficiency Gains of NPU-Based Servers over GPUs for AI Model Inference

Abstract

1. Introduction

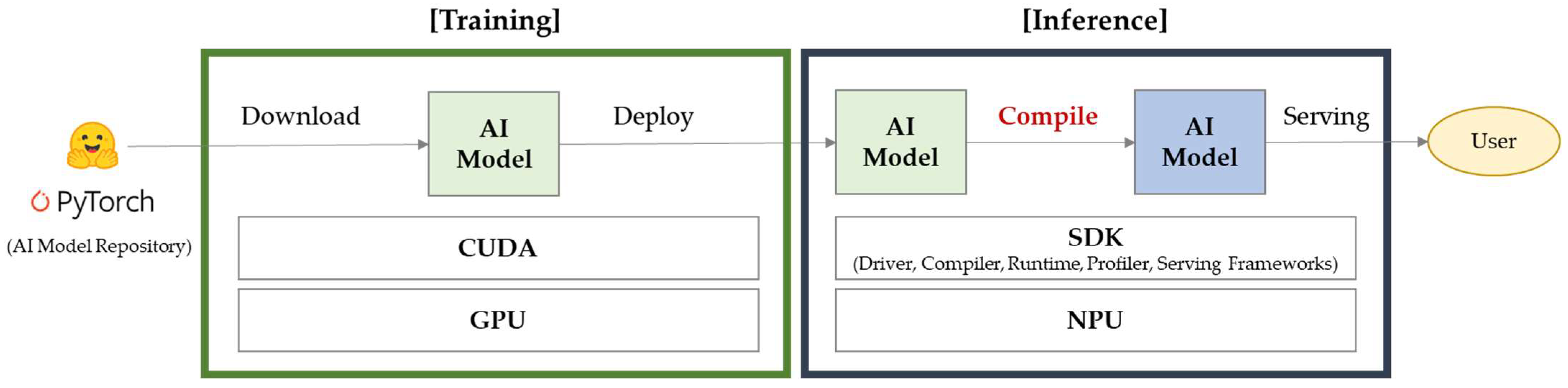

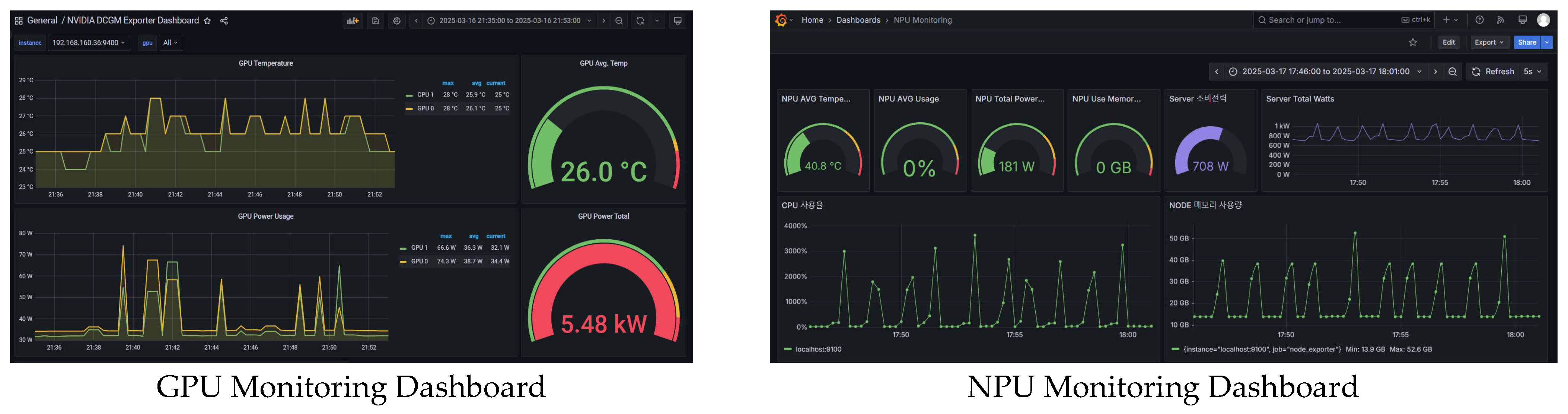

2. Proposed Architecture for AI Model Inference

3. Experiments

4. Evaluations

4.1. Text-to-Text

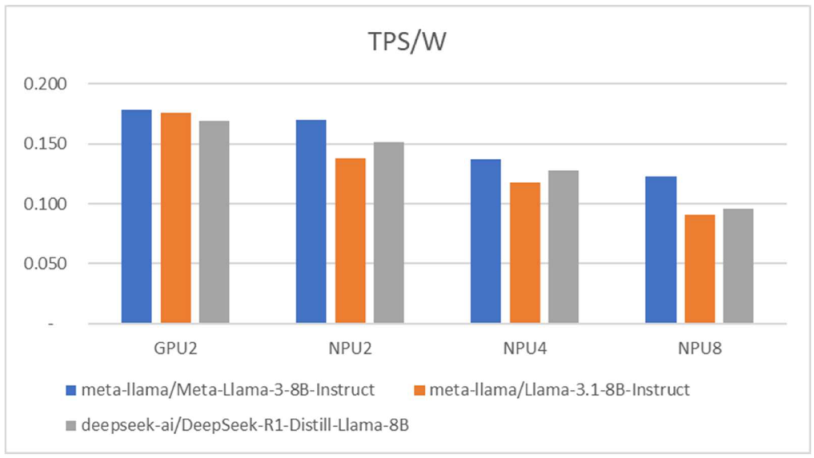

4.1.1. Performance Evaluation of Llama-8B Models

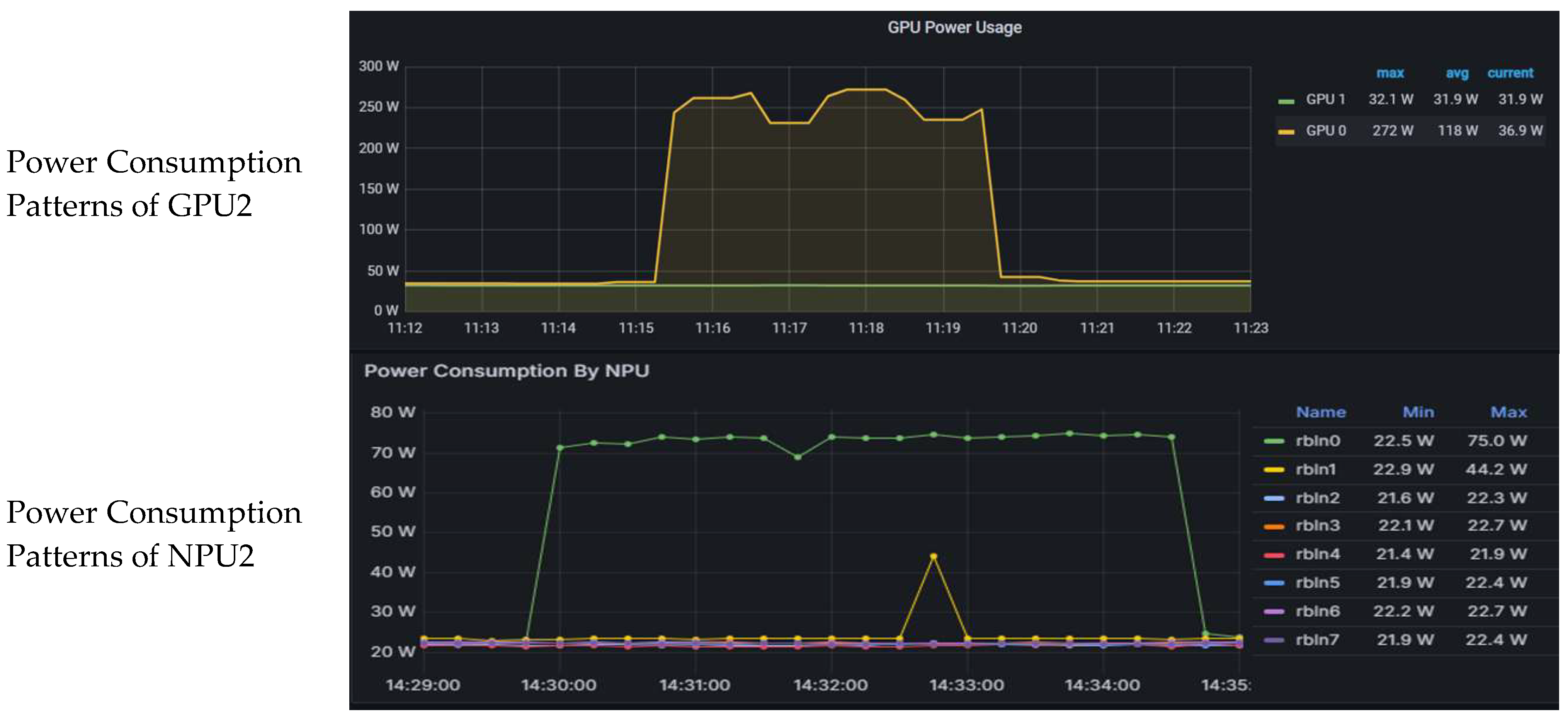

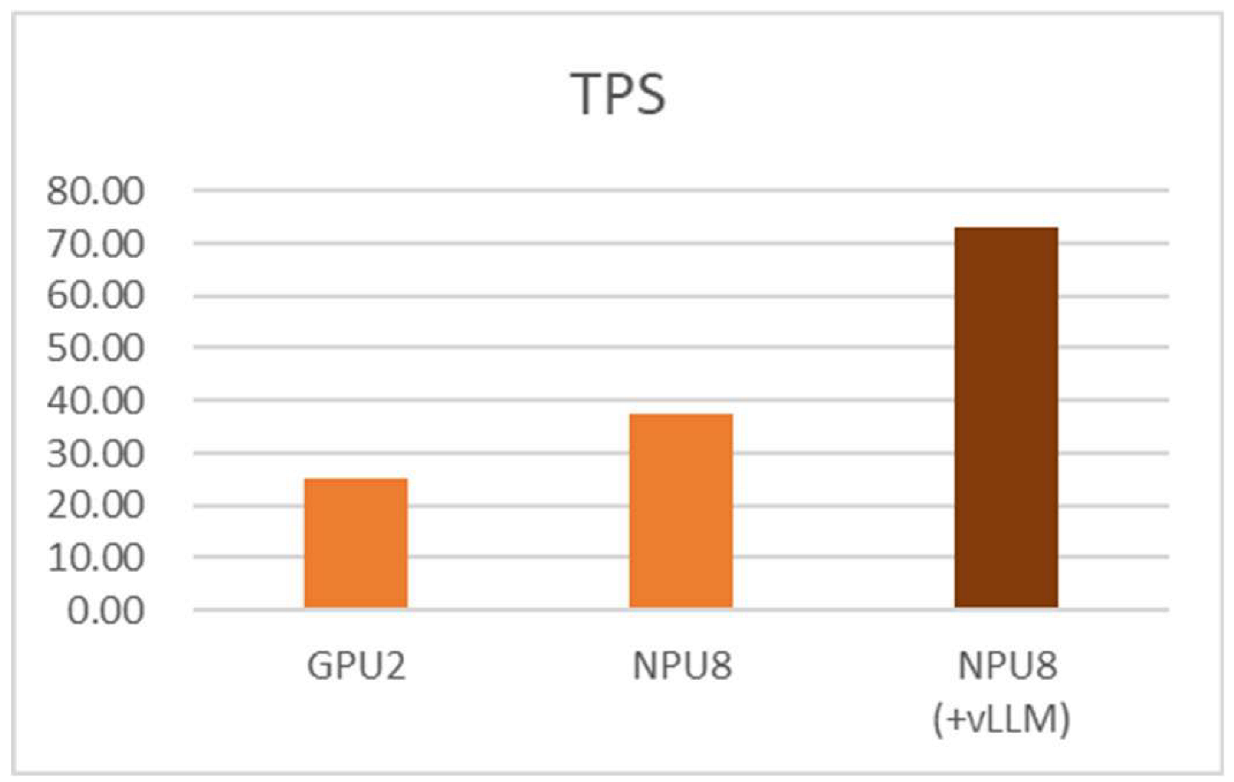

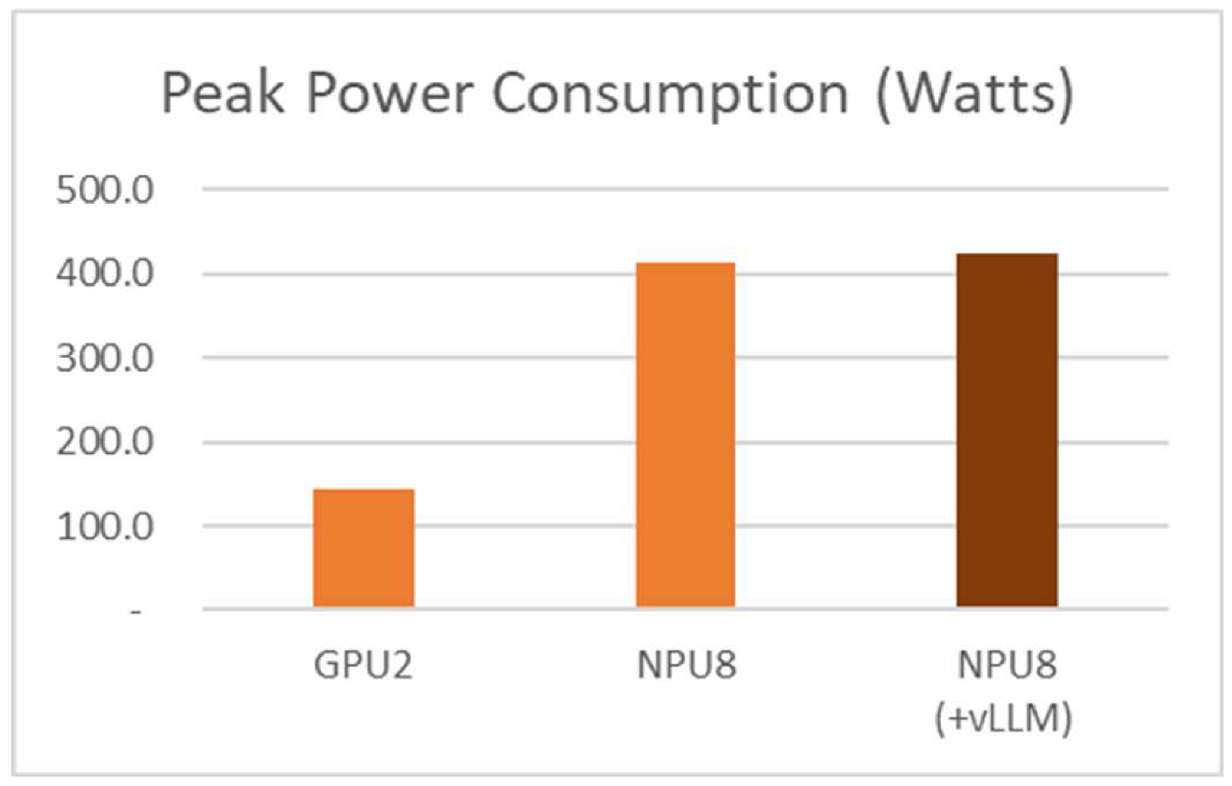

4.1.2. Performance Evaluation After vLLM Integration

4.2. Text-to-Image

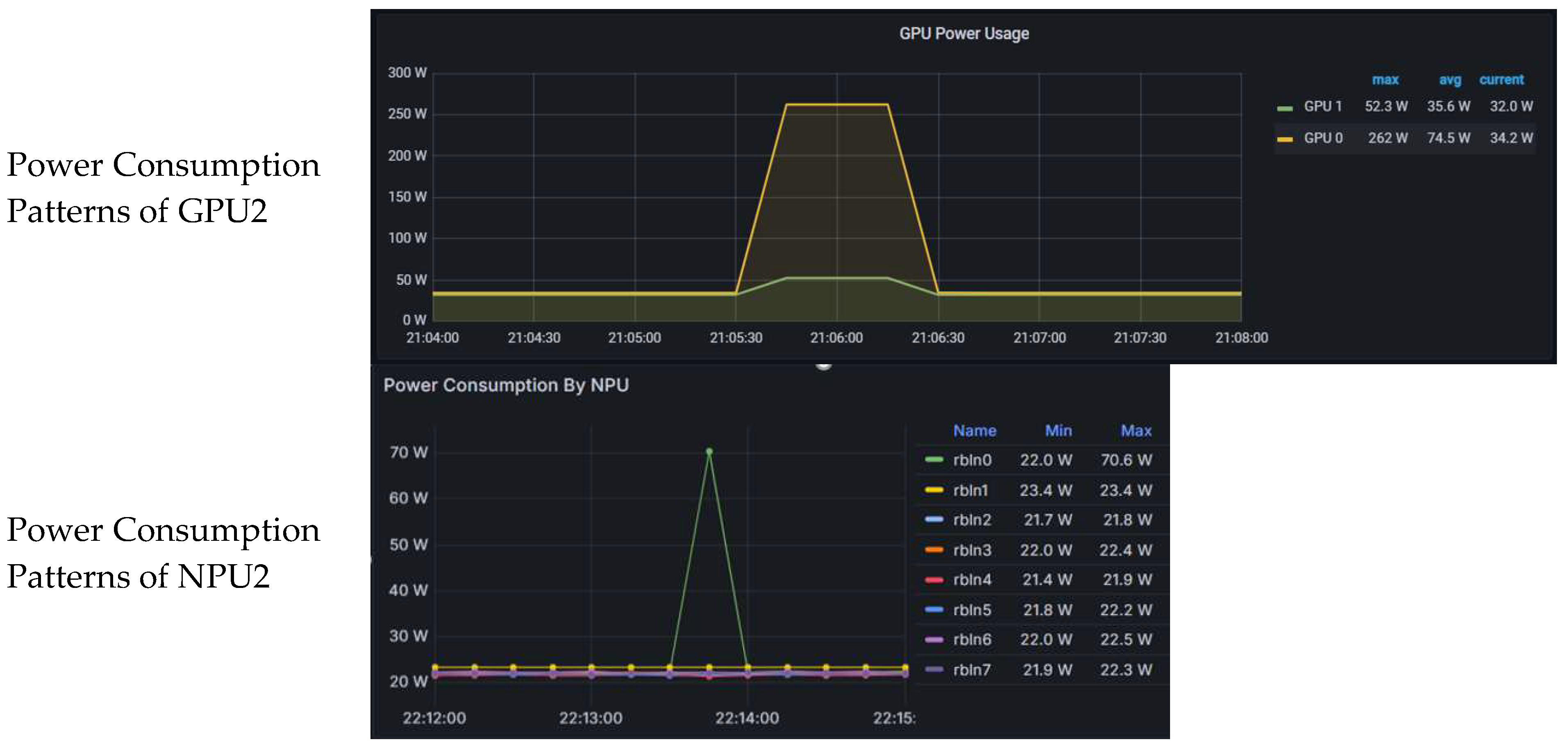

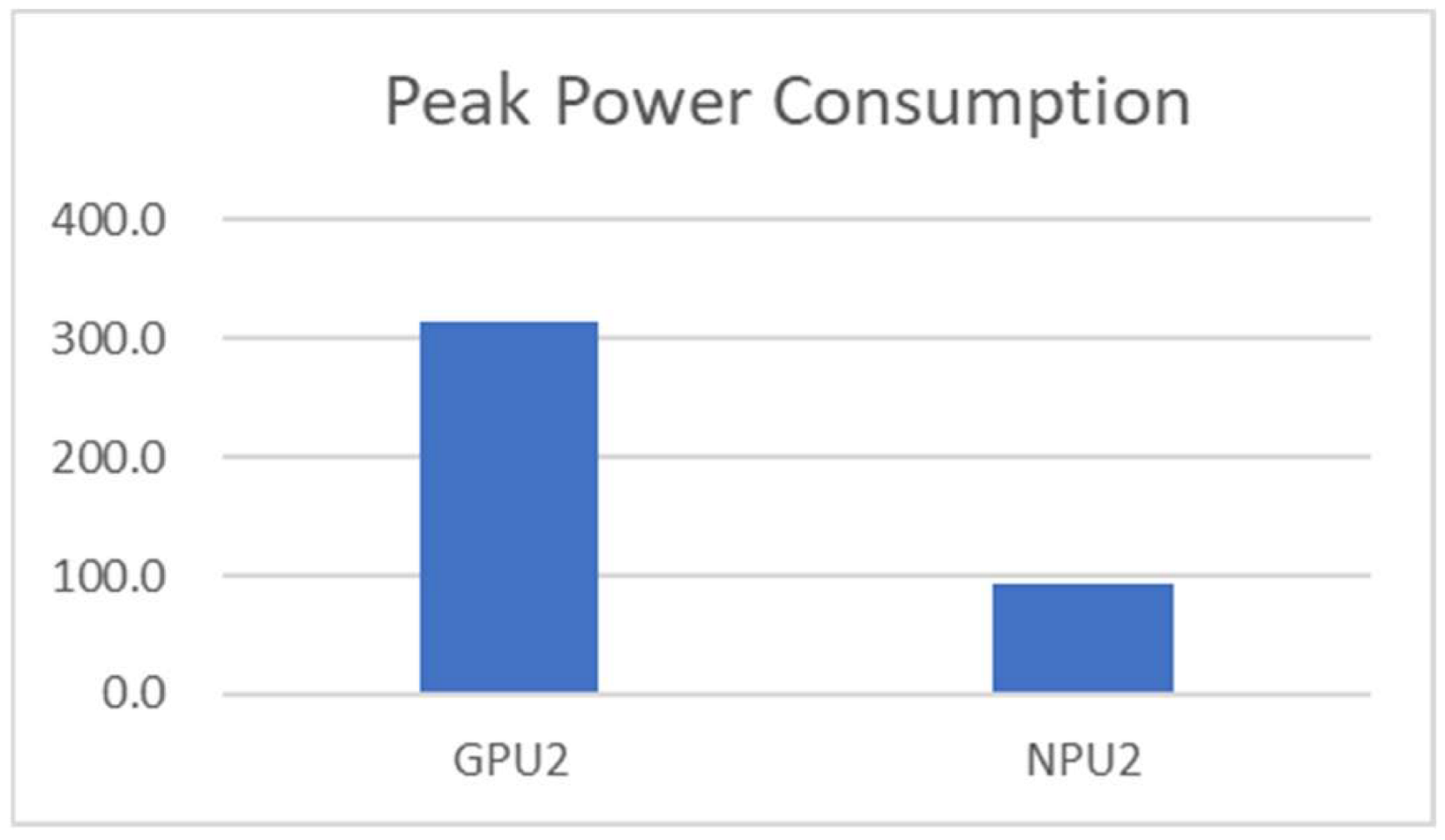

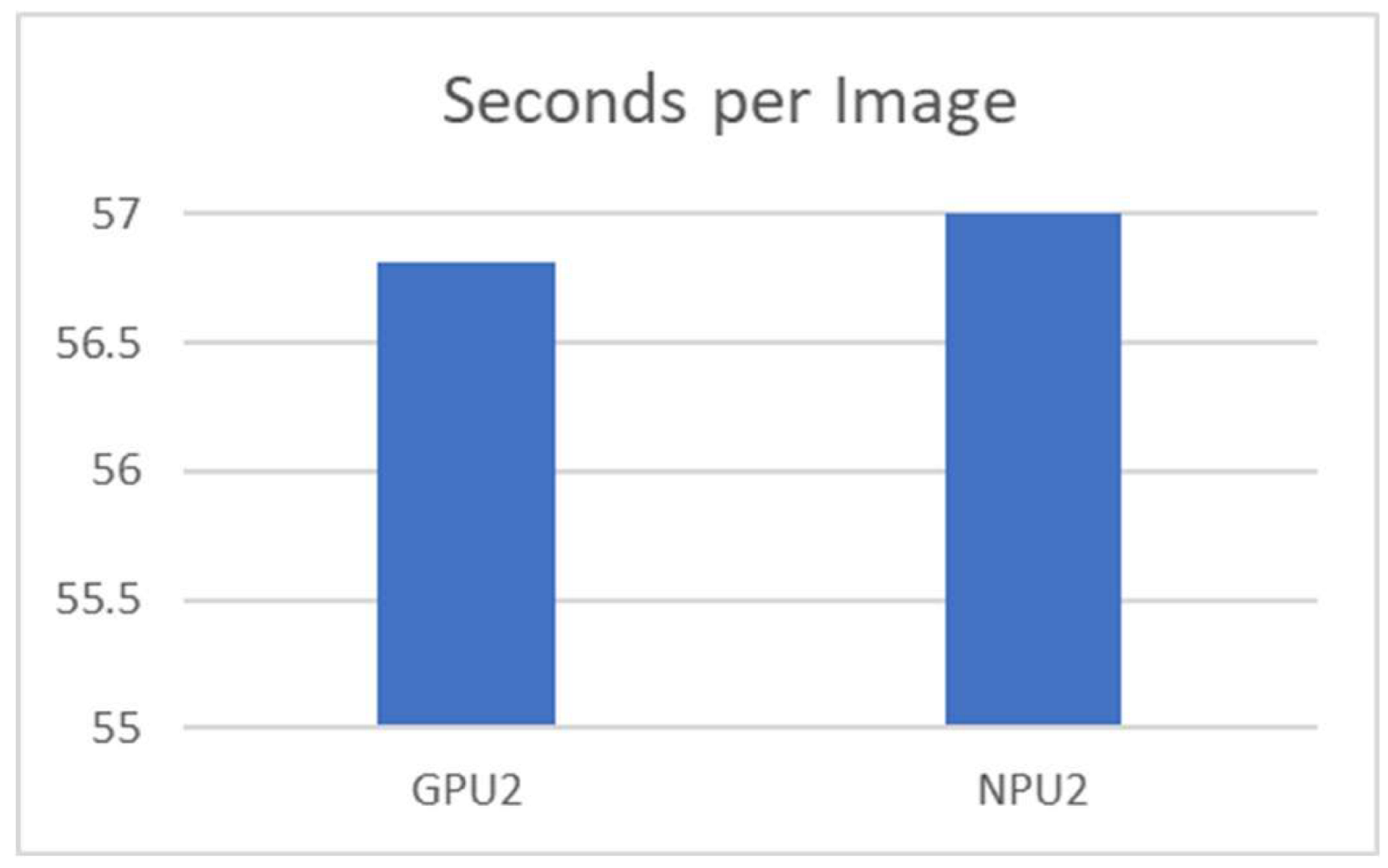

4.2.1. Performance Evaluation of SDXL-Turbo

4.2.2. Performance Evaluation of Stable Diffusion 3 Medium

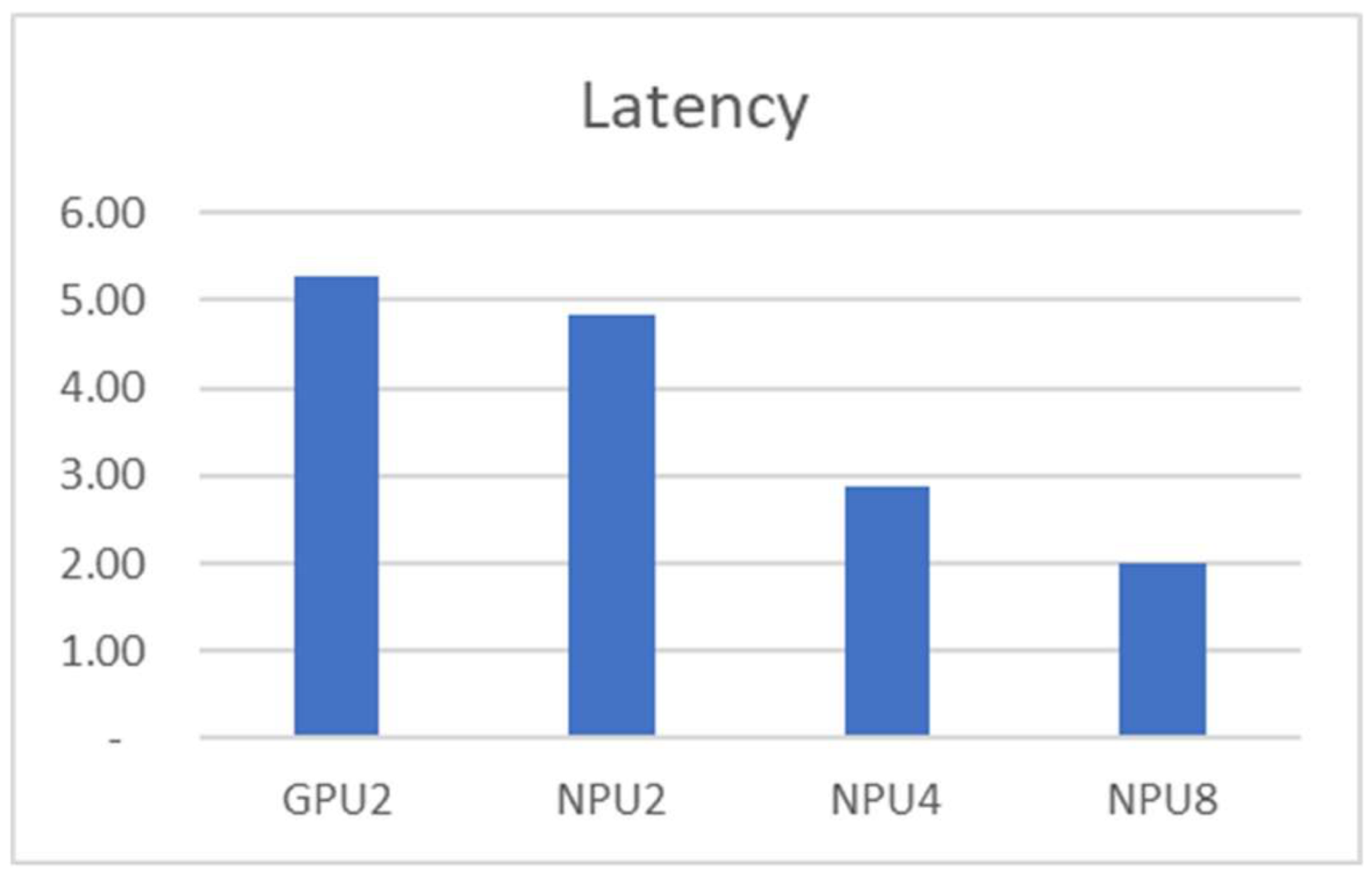

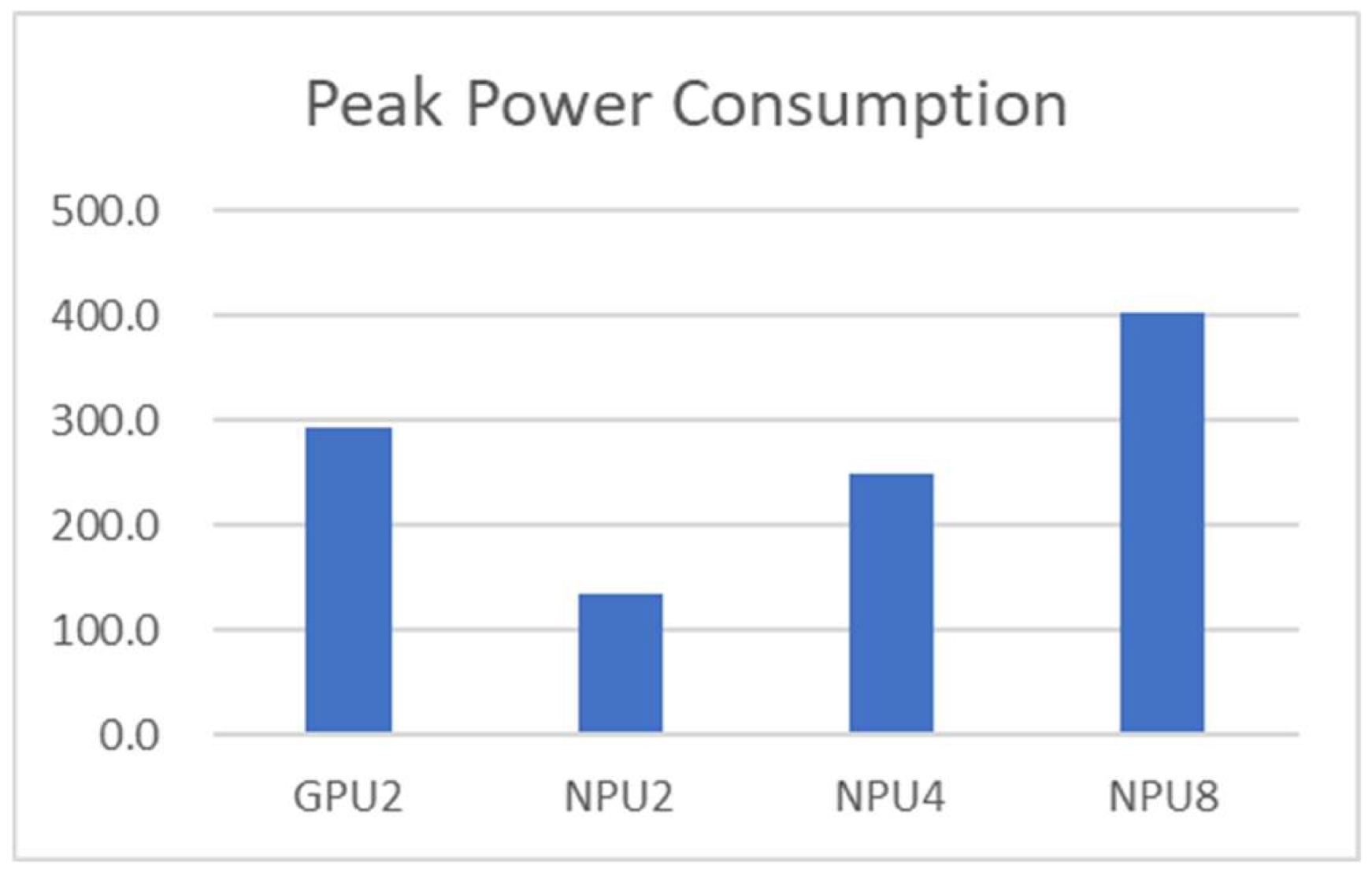

4.3. Multimodal

Performance Evaluation of LLaVA-NeXT

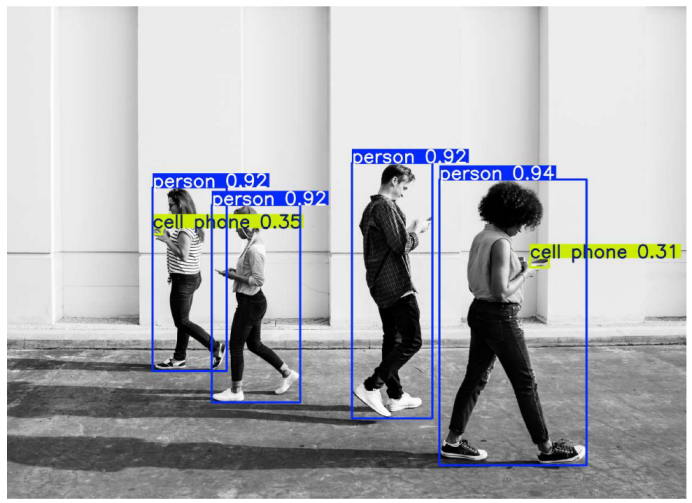

4.4. Object Detection

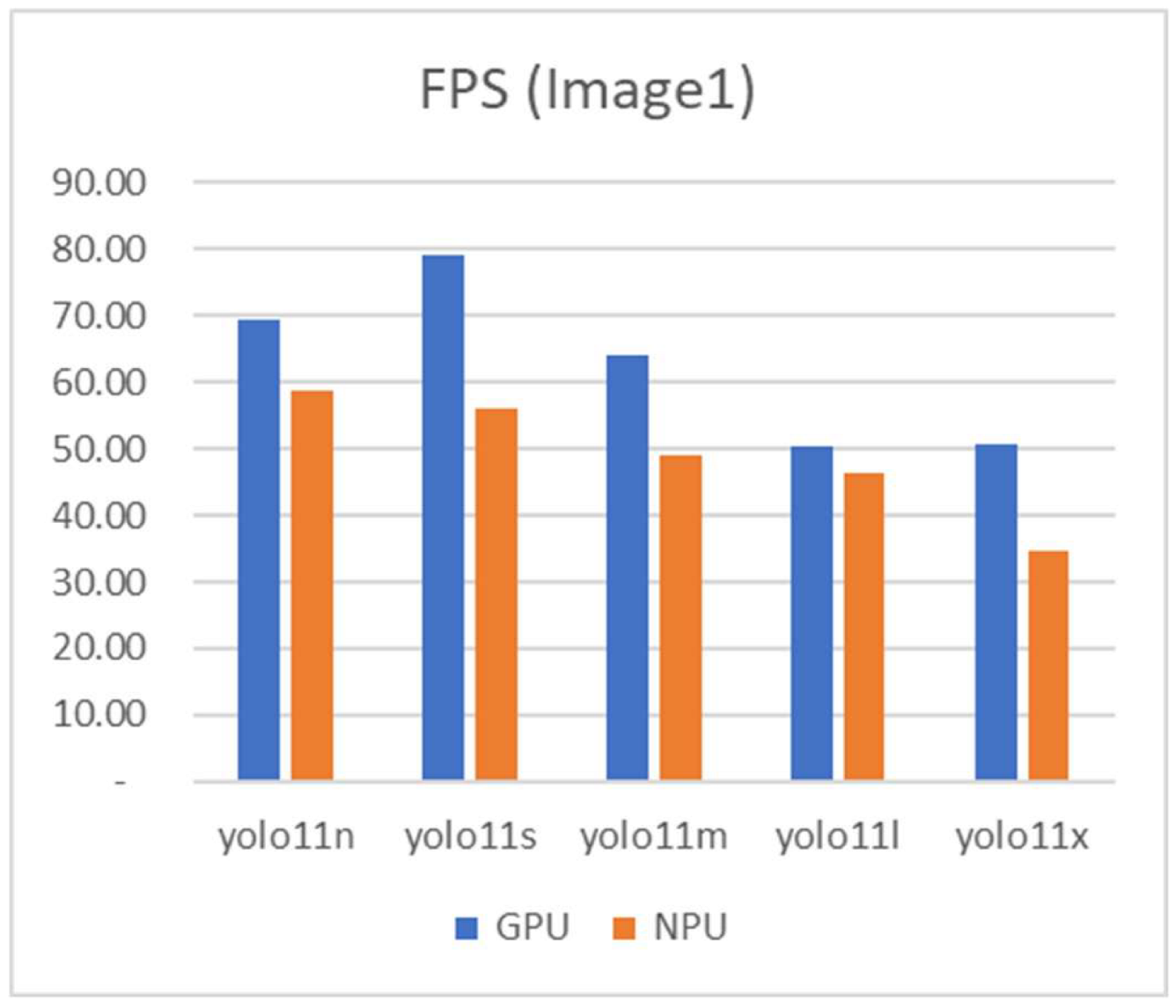

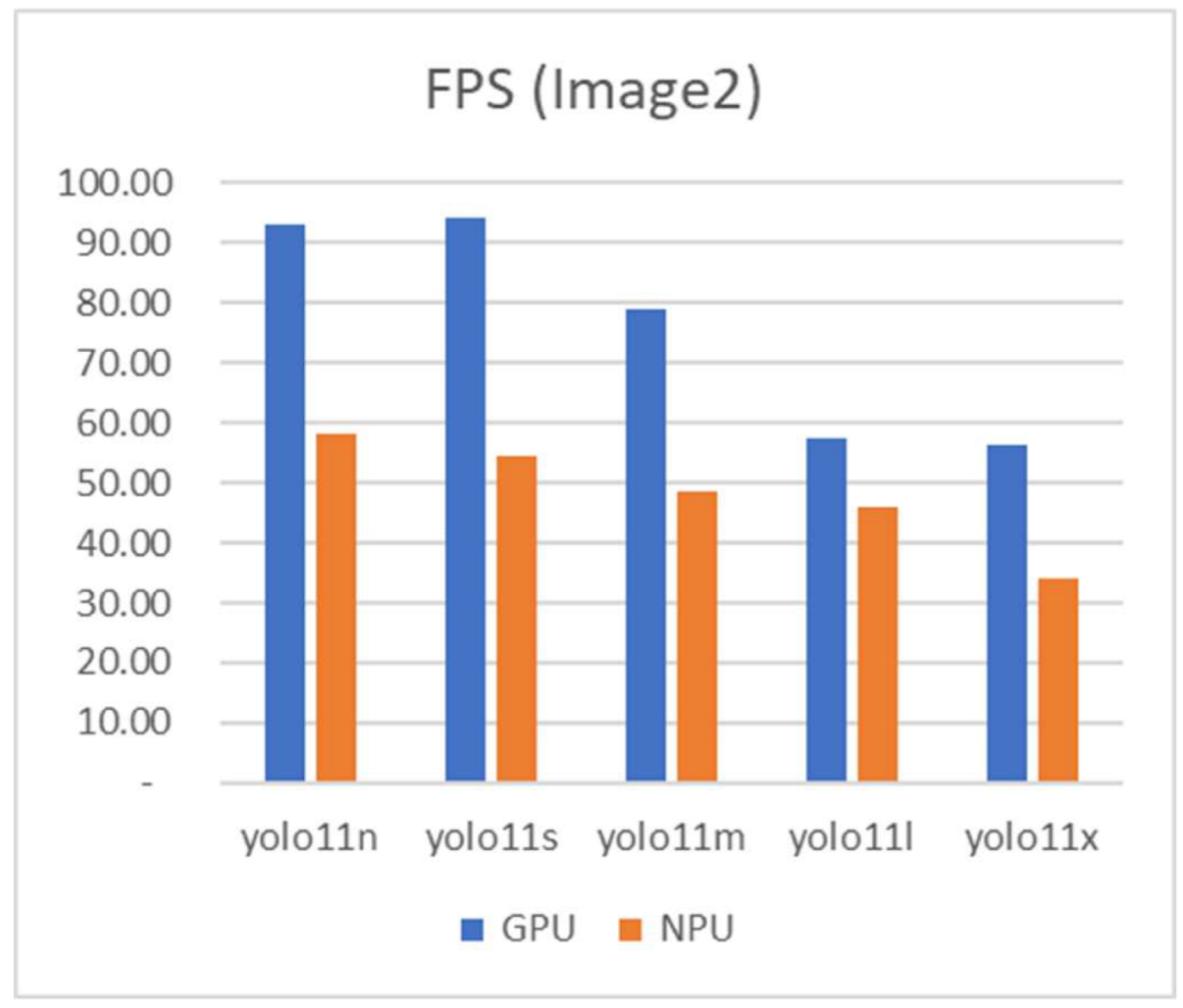

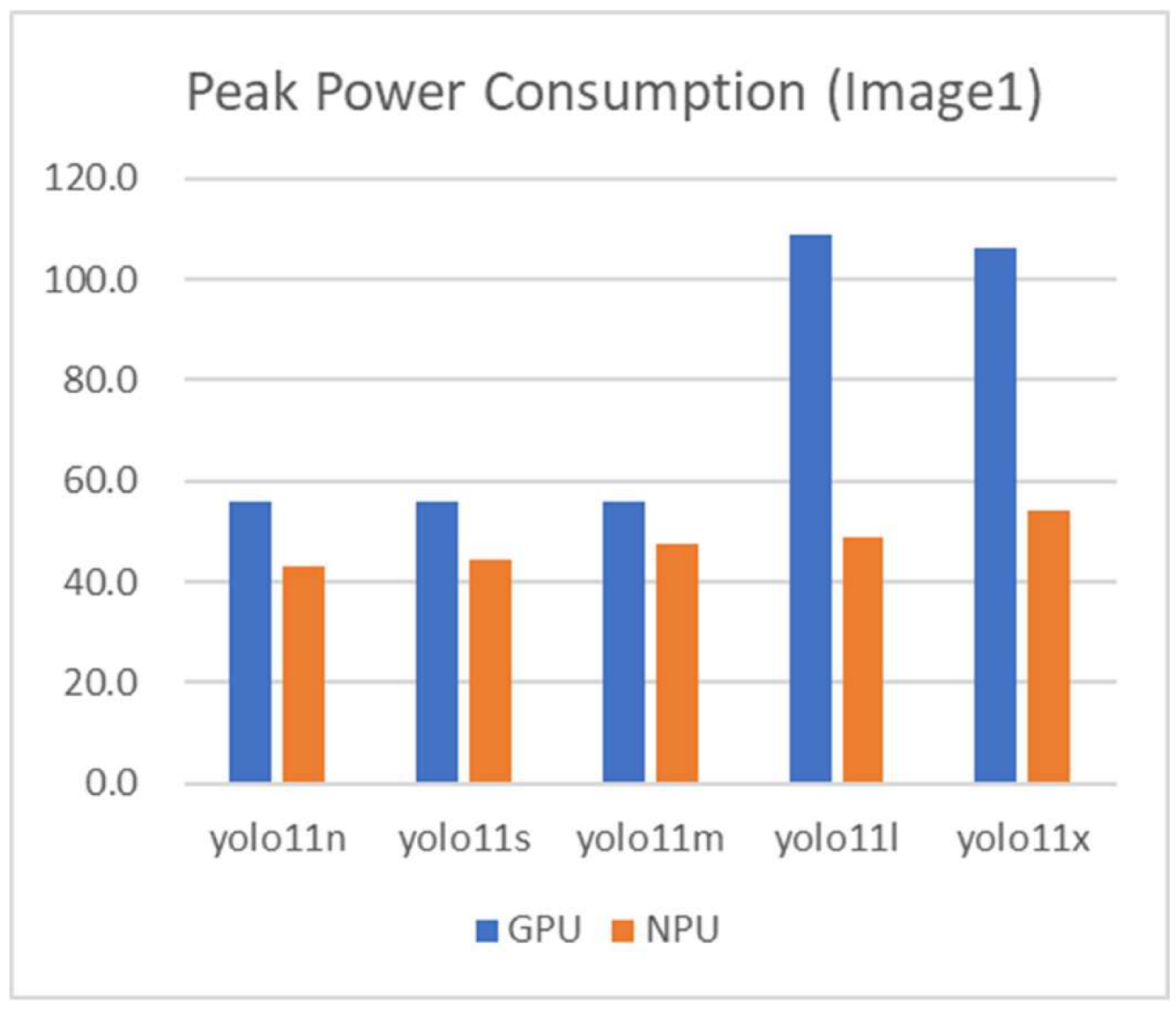

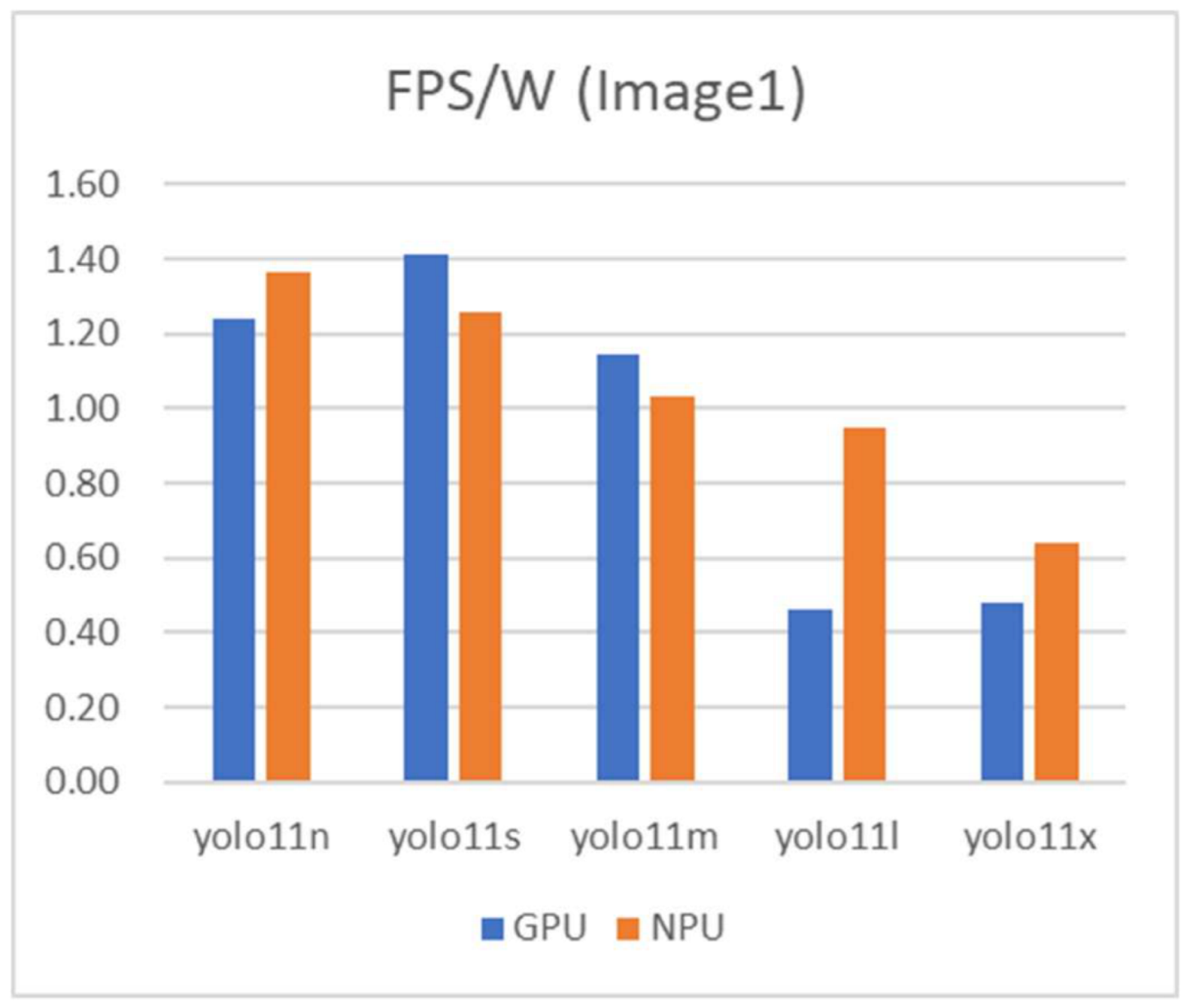

Performance Evaluation of YOLO11

5. Discussion

6. Conclusions

- Text-to-Text: NPUs scale effectively with device count, outperforming dual-GPU setups at four-chip configurations and achieving up to 2.8× higher tokens-per-second with vLLM integration, alongside a 92% improvement in TPS/W.

- Text-to-Image: For real-time generation (SDXL-Turbo), NPUs deliver a 14% higher images-per-second rate while drawing 70% less peak power. In high-resolution synthesis (Stable Diffusion 3 Medium), NPUs match GPU speed with 60% lower peak power.

- Multimodal: LLaVA-NeXT inference latency decreases by 62% from GPU2 to NPU8, and NPUs remain energy-efficient: NPU2 draws 54% less peak power than GPU2.

- Object Detection: Although GPUs lead in raw FPS for smaller YOLO11 variants, NPUs exhibit 1.3–1.9× higher FPS/W for larger models, highlighting their advantage for accuracy-driven workloads under power constraints.
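
Several of the percentages in this list can be re-derived from the averages and peak power values reported in the evaluation tables. The following is a minimal Python sketch of that arithmetic; the helper names `pct_higher` and `pct_lower` are hypothetical and not taken from the paper, and the results are printed to one decimal place, so they may differ slightly from the rounded figures quoted above.

```python
def pct_higher(new: float, base: float) -> float:
    """Return how much `new` exceeds `base`, in percent."""
    return (new / base - 1.0) * 100.0


def pct_lower(new: float, base: float) -> float:
    """Return how much `new` falls below `base`, in percent."""
    return (1.0 - new / base) * 100.0


# Values copied from the evaluation tables (GPU2 vs. NPU configurations).
checks = {
    "SDXL-Turbo img/s gain (NPU2 vs GPU2)": pct_higher(3.67, 3.22),
    "SDXL-Turbo peak power reduction (NPU2 vs GPU2)": pct_lower(94.0, 314.3),
    "SD3 Medium peak power reduction (NPU2 vs GPU2)": pct_lower(119.2, 304.1),
    "LLaVA-NeXT latency reduction (NPU8 vs GPU2)": pct_lower(2.00, 5.27),
    "LLaVA-NeXT peak power reduction (NPU2 vs GPU2)": pct_lower(134.4, 292.9),
}

for label, value in checks.items():
    print(f"{label}: {value:.1f}%")
```

Under this reading, the 70% figure for SDXL-Turbo corresponds to the reduction in peak power rather than to a ratio of images per second per watt.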

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Category | GPU Server | NPU Server |
|---|---|---|
| Model ID | PowerEdge R7525 | G293-S43-AAP1 |
| Manufacturer | Dell | Gigabyte |
| CPU | AMD EPYC 7352 24-Core Processor × 2 | INTEL(R) XEON(R) GOLD 6542Y × 2 |
| AI Accelerators | NVIDIA-A100-PCIe-40GB × 2 | RBLN-CA12 × 8 |
| Memory | Samsung DDR4 Dual Rank 3200 MT/s 256 GB | Samsung DDR5 5600 MT/s 64 GB × 24 |
| Storage | Intel SSD 1787.88 GB × 2 RAID 0 | Samsung SSD 1.92 TB RAID 1 |
| Networking | BCM5720 1 Gb Ethernet | 2 × 10 Gb/s LAN ports via Intel® X710-AT2 |
| Power | DELTA 1400 W × 2 | Dual 3000 W 80 PLUS Titanium redundant power supply |

| Category | GPU | NPU |
|---|---|---|
| Model ID | NVIDIA A100 (PCIe 80 GB) | Rebellions RBLN-CA12 |
| Process/Architecture | 7 nm TSMC N7, GA100 Ampere GPU | 5 nm Samsung EUV, ATOM™ inference SoC |
| Peak tensor performance | FP16 312 TFLOPS/INT8 624 TOPS | FP16 32 TFLOPS/INT8 128 TOPS |
| On-chip memory | 40 MB L2 cache | 64 MB on-chip SRAM (scratch + shared) |
| External memory and bandwidth | 80 GB HBM2e, 1.94 TB/s | 16 GB GDDR6, 256 GB/s |
| Host interface/Form-factor | PCIe 4.0 x16; dual-slot FHFL card (267 × 111 mm) | PCIe 5.0 x16; single-slot FHFL card (266.5 × 111 × 19 mm) |
| Multi-instance | Up to 7 MIGs (≈10 GB each) | Up to 16 HW-isolated inference instances |
| TDP | 300 W fixed | 60–130 W configurable |

Appendix B

Appendix B.1

Appendix B.2

- Image 2 (bus.jpg) in Table 21. Reproduced from the Ultralytics YOLOv11 sample images. © Ultralytics. Licensed under the GNU Affero General Public License v3.0 (AGPL-3.0).


| Characteristics | Training | Inference |
|---|---|---|
| Primary Purpose | Model parameter learning and optimization | Real-time prediction generation |
| Computational Intensity | Very high (multi-epoch iterations) | Moderate (single forward pass) |
| Processing Pattern | Batch processing with large datasets | Single sample or micro-batch processing |
| Resource Requirements | Extensive compute, memory, and storage | Moderate compute, optimized for efficiency |
| Precision Requirements | High (FP32/FP16 for stability) | Flexible (INT8/INT4 quantization) |
| Latency Constraints | Relaxed (batch processing) | Critical (real-time response) |
| Energy Consumption | High (sustained computation) | Low (efficient operation) |
| Optimization Priority | Throughput maximization | Latency minimization, energy efficiency |
| Typical Environment | Data centers, cloud computing | Edge devices, embedded systems |
| Hardware Preference | GPU (high throughput) | NPU (low latency, power efficient) |

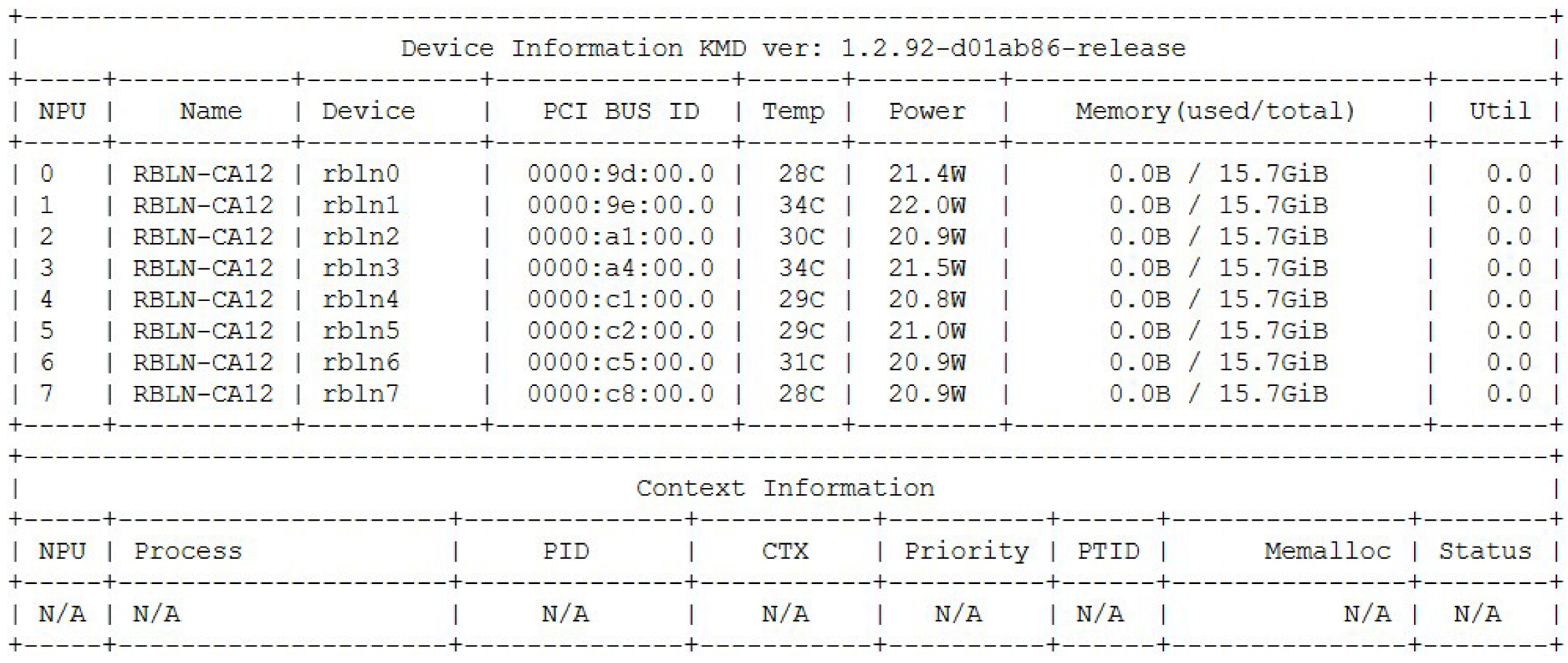

| Category | GPU Server | NPU Server |
|---|---|---|
| Model | DELL PowerEdge R7525 | Gigabyte G293-S43-AAP1 |
| AI Accelerators | NVIDIA A100-PCIE-40GB × 2 | RBLN-CA12 (ATOM) × 8 |
| Driver | 570.124.06 | 1.2.92 |
| Toolkit | CUDA 12.8 | RBLN Compiler 0.7.2 |
| OS | Ubuntu 22.04.5 LTS | Ubuntu 22.04.5 LTS |
| Python | 3.10.12 | 3.10.12 |
| PyTorch | 2.6.0+cu118 | 2.5.1+cu124 |

| NVIDIA A100-PCIE-40GB × 2 | RBLN-CA12(ATOM) × 8 |

|---|---|

|  |

| Category | AI Model | Model ID |
|---|---|---|
| Text-to-Text | Llama-3-8B | meta-llama/Meta-Llama-3-8B-Instruct |
| Text-to-Text | Llama-3.1-8B | meta-llama/Llama-3.1-8B-Instruct |
| Text-to-Text | DeepSeek-R1-Distill-Llama-8B | deepseek-ai/DeepSeek-R1-Distill-Llama-8B |
| Text-to-Image | SDXL-Turbo | stabilityai/sdxl-turbo |
| Text-to-Image | Stable Diffusion 3 Medium | stabilityai/stable-diffusion-3-medium-diffusers |
| Multimodal | LLaVA-NeXT | llava-hf/llava-v1.6-mistral-7b-hf |
| Object Detection | YOLO11 | yolo11n, yolo11s, yolo11m, yolo11l, yolo11x |

| Category | Content |
|---|---|
| Input prompt | {한은 금통위는 25일 기준금리를 연 3.0%에서 연 2.75%로 0.25%포인트 인하했다. … 이번 기준금리 인하로 한국과 미국(연 4.25~4.5%)과의 금리차는 상단 기준 1.50%포인트에서 1.75%포인트로 확대됐다.} Please translate the above article in English |
| Output (Response) | The Bank of Korea (BOK) cut its base rate by 0.25 percentage points to 2.75% on the 25th, … The interest rate gap between the two countries has widened to 1.75 percentage points, from 1.5 percentage points before the rate cut. |

| Category | Content |
|---|---|
| Input prompt | “Hey, are you conscious? Can you talk to me?” |
| Output (Response) | Alright, so I’m trying to figure out how to approach this problem. … I think that’s a good approach. It’s clear, respectful, and sets the stage for further interaction without overstepping my capabilities. </think> Hello! I’m an AI, so I don’t have consciousness or feelings, but I’m here to help with any questions or tasks you have. How can I assist you today? |

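| Model ID | Token Category | Statistic | GPU2 | NPU2 | NPU4 | NPU8 |
|---|---|---|---|---|---|---|
| meta-llama/Meta-Llama-3-8B-Instruct | Input | Avg | 913 | 913 | 913 | 913 |
| meta-llama/Meta-Llama-3-8B-Instruct | Output | Min | 397 | 518 | 434 | 455 |
| meta-llama/Meta-Llama-3-8B-Instruct | Output | Max | 620 | 615 | 644 | 657 |
| meta-llama/Meta-Llama-3-8B-Instruct | Output | SD | 81 | 29 | 84 | 59 |
| meta-llama/Meta-Llama-3-8B-Instruct | Output | Avg | 527 | 569 | 539 | 566 |
| meta-llama/Llama-3.1-8B-Instruct | Input | Avg | 938 | 938 | 938 | 938 |
| meta-llama/Llama-3.1-8B-Instruct | Output | Min | 625 | 639 | 632 | 643 |
| meta-llama/Llama-3.1-8B-Instruct | Output | Max | 746 | 716 | 712 | 732 |
| meta-llama/Llama-3.1-8B-Instruct | Output | SD | 37 | 21 | 25 | 29 |
| meta-llama/Llama-3.1-8B-Instruct | Output | Avg | 675 | 678 | 680 | 692 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Input | Avg | 18 | 18 | 18 | 18 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Output | Min | 191 | 281 | 130 | 190 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Output | Max | 965 | 2213 | 845 | 709 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Output | SD | 229 | 571 | 195 | 167 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Output | Avg | 543 | 657 | 390 | 413 |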

| Model ID | Statistic | GPU2 | NPU2 | NPU4 | NPU8 |
|---|---|---|---|---|---|
| meta-llama/Meta-Llama-3-8B-Instruct | Min | 15.95 | 25.30 | 13.89 | 8.40 |
| meta-llama/Meta-Llama-3-8B-Instruct | Max | 24.61 | 30.89 | 20.63 | 11.72 |
| meta-llama/Meta-Llama-3-8B-Instruct | SD | 3.16 | 1.64 | 2.60 | 0.92 |
| meta-llama/Meta-Llama-3-8B-Instruct | Avg | 20.96 | 28.24 | 17.30 | 10.57 |
| meta-llama/Llama-3.1-8B-Instruct | Min | 24.80 | 38.67 | 24.04 | 17.77 |
| meta-llama/Llama-3.1-8B-Instruct | Max | 29.74 | 43.16 | 27.62 | 19.79 |
| meta-llama/Llama-3.1-8B-Instruct | SD | 1.51 | 1.20 | 1.07 | 0.64 |
| meta-llama/Llama-3.1-8B-Instruct | Avg | 26.85 | 40.82 | 26.31 | 18.53 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Min | 7.44 | 15.81 | 4.88 | 5.18 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Max | 37.59 | 127.91 | 31.61 | 18.33 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | SD | 8.89 | 33.05 | 7.25 | 4.29 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Avg | 21.22 | 37.57 | 14.45 | 10.63 |

| Model ID | Statistic | GPU2 | NPU2 | NPU4 | NPU8 |
|---|---|---|---|---|---|
| meta-llama/Meta-Llama-3-8B-Instruct | Min | 24.90 | 19.91 | 30.51 | 47.66 |
| meta-llama/Meta-Llama-3-8B-Instruct | Max | 25.42 | 20.48 | 31.39 | 56.07 |
| meta-llama/Meta-Llama-3-8B-Instruct | SD | 0.18 | 0.18 | 0.26 | 2.30 |
| meta-llama/Meta-Llama-3-8B-Instruct | Avg | 25.11 | 20.15 | 31.11 | 53.53 |
| meta-llama/Llama-3.1-8B-Instruct | Min | 24.87 | 16.52 | 25.41 | 35.96 |
| meta-llama/Llama-3.1-8B-Instruct | Max | 25.34 | 16.72 | 26.29 | 39.16 |
| meta-llama/Llama-3.1-8B-Instruct | SD | 0.11 | 0.06 | 0.27 | 0.98 |
| meta-llama/Llama-3.1-8B-Instruct | Avg | 25.13 | 16.60 | 25.84 | 37.35 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Min | 24.85 | 17.30 | 26.35 | 34.66 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Max | 25.97 | 17.77 | 27.47 | 41.77 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | SD | 0.29 | 0.14 | 0.35 | 2.23 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Avg | 25.59 | 17.55 | 26.98 | 38.77 |

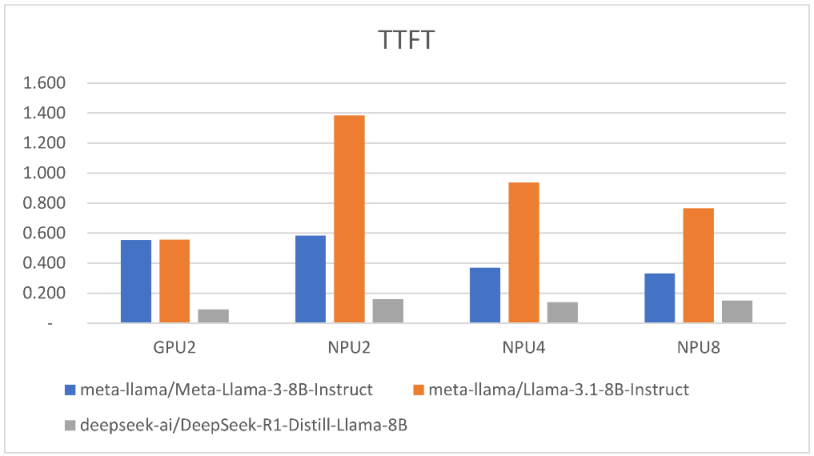

| Model ID | Statistic | GPU2 | NPU2 | NPU4 | NPU8 |
|---|---|---|---|---|---|
| meta-llama/Meta-Llama-3-8B-Instruct | Min | 0.540 | 0.569 | 0.360 | 0.316 |
| meta-llama/Meta-Llama-3-8B-Instruct | Max | 0.563 | 0.637 | 0.379 | 0.355 |
| meta-llama/Meta-Llama-3-8B-Instruct | SD | 0.008 | 0.018 | 0.005 | 0.012 |
| meta-llama/Meta-Llama-3-8B-Instruct | Avg | 0.554 | 0.583 | 0.370 | 0.332 |
| meta-llama/Llama-3.1-8B-Instruct | Min | 0.547 | 1.362 | 0.923 | 0.715 |
| meta-llama/Llama-3.1-8B-Instruct | Max | 0.566 | 1.399 | 0.952 | 0.794 |
| meta-llama/Llama-3.1-8B-Instruct | SD | 0.006 | 0.011 | 0.011 | 0.022 |
| meta-llama/Llama-3.1-8B-Instruct | Avg | 0.557 | 1.385 | 0.937 | 0.766 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Min | 0.042 | 0.156 | 0.126 | 0.142 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Max | 0.530 | 0.166 | 0.155 | 0.157 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | SD | 0.146 | 0.003 | 0.008 | 0.004 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | Avg | 0.092 | 0.159 | 0.140 | 0.150 |

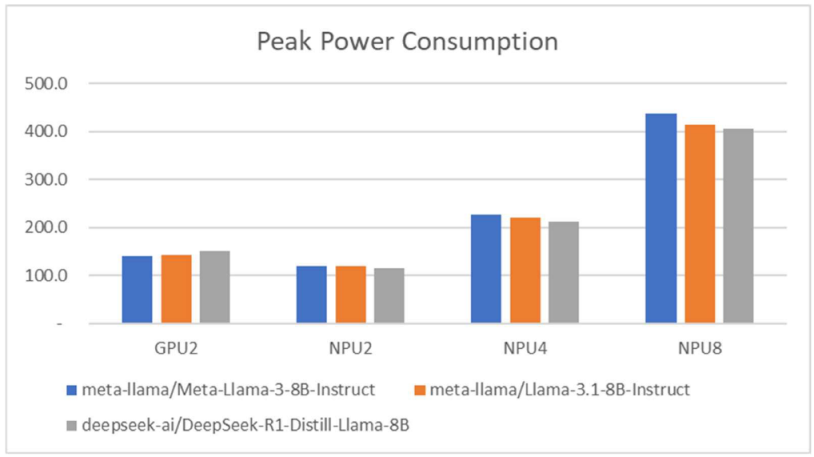

| Model ID | GPU2 | NPU2 | NPU4 | NPU8 |
|---|---|---|---|---|
| meta-llama/Meta-Llama-3-8B-Instruct | 140.9 | 118.7 | 227.4 | 437.5 |
| meta-llama/Llama-3.1-8B-Instruct | 143.0 | 120.5 | 219.8 | 413.3 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | 151.4 | 116.2 | 211.6 | 406.2 |

| Model ID | GPU2 | NPU2 | NPU4 | NPU8 |
|---|---|---|---|---|
| meta-llama/Meta-Llama-3-8B-Instruct | 0.178 | 0.170 | 0.137 | 0.122 |
| meta-llama/Llama-3.1-8B-Instruct | 0.176 | 0.138 | 0.118 | 0.090 |
| deepseek-ai/DeepSeek-R1-Distill-Llama-8B | 0.169 | 0.151 | 0.127 | 0.095 |
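
The efficiency values in this table are consistent with dividing average tokens per second (TPS) by peak power draw. The sketch below assumes that definition, which is inferred from the reported numbers rather than quoted from the paper, and uses the Llama-3.1-8B-Instruct measurements; the function name `tps_per_watt` is hypothetical.

```python
# Average TPS and peak power (W) for meta-llama/Llama-3.1-8B-Instruct,
# copied from the result tables.
avg_tps = {"GPU2": 25.13, "NPU2": 16.60, "NPU4": 25.84, "NPU8": 37.35}
peak_power_w = {"GPU2": 143.0, "NPU2": 120.5, "NPU4": 219.8, "NPU8": 413.3}


def tps_per_watt(tps: float, power_w: float) -> float:
    """Tokens per second delivered per watt of peak power (assumed definition)."""
    if power_w <= 0:
        raise ValueError("power must be positive")
    return tps / power_w


efficiency = {cfg: tps_per_watt(avg_tps[cfg], peak_power_w[cfg]) for cfg in avg_tps}

for cfg, value in efficiency.items():
    print(f"{cfg}: {value:.3f} TPS/W")
```

Because the denominator is peak rather than average power, the ratio should be read as a conservative estimate of efficiency under this assumption.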

| Metrics | GPU2 | NPU8 | NPU8 (+vLLM) |
|---|---|---|---|
| TPS | 25.13 | 37.35 | 73.30 |
| TTFT | 0.557 | 0.766 | 0.238 |
| Peak Power Consumption (W) | 143.0 | 413.3 | 424.0 |
| TPS/W | 0.176 | 0.090 | 0.183 |
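
TPS and TTFT are timing metrics that can be derived from token arrival timestamps. The sketch below assumes that TTFT is the delay between submitting a request and receiving the first token, and that TPS is the number of output tokens divided by total generation time; the paper's exact measurement procedure is not reproduced here. `StreamMetrics`, `measure_stream`, and the fake clock are hypothetical names used only for illustration.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class StreamMetrics:
    ttft_s: float      # time from request start to first token
    latency_s: float   # time from request start to end of stream
    tokens: int        # number of output tokens received

    @property
    def tps(self) -> float:
        """Output tokens per second over the whole request."""
        return self.tokens / self.latency_s if self.latency_s > 0 else 0.0


def measure_stream(
    tokens: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> StreamMetrics:
    """Consume a token stream and record TTFT, total latency, and token count."""
    start = clock()
    first_token_at = None
    count = 0
    for _ in tokens:
        now = clock()
        if first_token_at is None:
            first_token_at = now
        count += 1
    end = clock()
    if first_token_at is None:
        raise ValueError("stream produced no tokens")
    return StreamMetrics(first_token_at - start, end - start, count)


# Deterministic demo: a fake clock stands in for real inference timing.
ticks = iter([0.0, 0.5, 0.6, 0.7, 0.7])
metrics = measure_stream(["Hello", ",", " world"], clock=lambda: next(ticks))
print(f"TTFT={metrics.ttft_s:.3f}s latency={metrics.latency_s:.3f}s TPS={metrics.tps:.2f}")
```

In a real benchmark the iterable would be the streaming output of the inference engine, and the default `time.perf_counter` clock would be used.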

| Category | Content |
|---|---|
| Input prompt | A cinematic shot of a baby raccoon wearing an intricate Italian priest robe. |
| Output (Generated image) |  |

| Metrics | GPU2 | NPU2 |
|---|---|---|
| Images Per Second (img/sec) | 3.22 | 3.67 |
| Peak Power Consumption (W) | 314.3 | 94.0 |

| Category | Content |
|---|---|
| Input prompt | Draw me a picture of a church located on a wavy beach, photo, 8 k |
| Output (Generated image) |  |

| Metrics | GPU2 | NPU2 |
|---|---|---|
| Seconds per Image (sec/img) | 56.81 | 57.15 |
| Peak Power Consumption (W) | 304.1 | 119.2 |

| Category | Content |
|---|---|
| Input prompt | What is shown in this image? |
| Output (Generated captions) | The image shows a cat lying down with its eyes closed, appearing to be sleeping or resting. The cat has a mix of white and gray fur, and it’s lying on a patterned fabric surface, which could be a piece of furniture like a couch or a chair. The cat’s ears are perked up, and it has a contented expression. |

| Metrics | Statistic | GPU2 | NPU2 | NPU4 | NPU8 |
|---|---|---|---|---|---|
| Latency (seconds) | Min | 5.21 | 4.76 | 2.81 | 1.96 |
| Latency (seconds) | Max | 5.52 | 4.92 | 2.92 | 2.11 |
| Latency (seconds) | SD | 0.09 | 0.05 | 0.04 | 0.04 |
| Latency (seconds) | Avg | 5.27 | 4.84 | 2.86 | 2.00 |
| Peak Power Consumption (W) |  | 292.9 | 134.4 | 249.4 | 404.3 |
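
The latency figures above also indicate how well LLaVA-NeXT inference scales as NPUs are added. The sketch below computes speedup relative to the two-NPU configuration and a scaling efficiency defined as speedup divided by the increase in device count. This efficiency measure is an assumption introduced here for illustration and is not a metric reported in the paper; `scaling` is a hypothetical helper name.

```python
# Average LLaVA-NeXT latency in seconds, keyed by number of NPUs.
avg_latency_s = {2: 4.84, 4: 2.86, 8: 2.00}


def scaling(latency_by_devices: dict, base_devices: int = 2) -> dict:
    """Return {devices: (speedup, efficiency)} relative to `base_devices`."""
    base_latency = latency_by_devices[base_devices]
    result = {}
    for devices, latency in sorted(latency_by_devices.items()):
        speedup = base_latency / latency       # > 1 means faster than baseline
        ideal = devices / base_devices         # linear-scaling reference
        result[devices] = (speedup, speedup / ideal)
    return result


for devices, (speedup, efficiency) in scaling(avg_latency_s).items():
    print(f"NPU{devices}: speedup {speedup:.2f}x, scaling efficiency {efficiency:.2f}")
```

An efficiency of 1.0 would mean latency halves every time the device count doubles; lower values indicate diminishing returns from additional devices.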

| Model | Size Category | Parameters | Key Features |
|---|---|---|---|
| YOLO11n | Nano | 2.6M | Ultra-lightweight mobile/IoT inference |
| YOLO11s | Small | 9.4M | Balanced mobile performance |
| YOLO11m | Medium | 20.1M | Balanced server performance |
| YOLO11l | Large | 25.3M | High-accuracy server inference |
| YOLO11x | Extra Large | 56.9M | Maximum accuracy for demanding tasks |

| Image | Original image | Object detection output image |
|---|---|---|
| Image 1 (People 4.jpg) | 4892 × 3540 (7.44 MB) |  |
| Image 2 (Bus.jpg) | 810 × 1080 (134 KB) |  |

| Metrics | Image | Model ID | GPU | NPU |
|---|---|---|---|---|
| FPS | Image 1 | yolo11n | 69.38 | 58.54 |
| FPS | Image 1 | yolo11s | 78.97 | 56.07 |
| FPS | Image 1 | yolo11m | 63.98 | 49.12 |
| FPS | Image 1 | yolo11l | 50.32 | 46.30 |
| FPS | Image 1 | yolo11x | 50.81 | 34.64 |
| FPS | Image 2 | yolo11n | 93.18 | 58.23 |
| FPS | Image 2 | yolo11s | 94.25 | 54.35 |
| FPS | Image 2 | yolo11m | 78.98 | 48.60 |
| FPS | Image 2 | yolo11l | 57.62 | 45.98 |
| FPS | Image 2 | yolo11x | 56.36 | 34.17 |

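| Metrics | Image | Model ID | GPU | NPU |
|---|---|---|---|---|
| Peak Power Consumption (W) | Image 1 | yolo11n | 56.0 | 43.0 |
| Peak Power Consumption (W) | Image 1 | yolo11s | 56.0 | 44.5 |
| Peak Power Consumption (W) | Image 1 | yolo11m | 56.0 | 47.6 |
| Peak Power Consumption (W) | Image 1 | yolo11l | 109.0 | 48.9 |
| Peak Power Consumption (W) | Image 1 | yolo11x | 106.0 | 54.1 |
| Peak Power Consumption (W) | Image 2 | yolo11n | 55.0 | 43.0 |
| Peak Power Consumption (W) | Image 2 | yolo11s | 56.0 | 44.7 |
| Peak Power Consumption (W) | Image 2 | yolo11m | 99.0 | 47.6 |
| Peak Power Consumption (W) | Image 2 | yolo11l | 109.0 | 48.9 |
| Peak Power Consumption (W) | Image 2 | yolo11x | 118.0 | 54.6 |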

| Metrics | Image | Model ID | GPU | NPU |
|---|---|---|---|---|
| FPS/W | Image 1 | yolo11n | 1.24 | 1.36 |
| FPS/W | Image 1 | yolo11s | 1.41 | 1.26 |
| FPS/W | Image 1 | yolo11m | 1.14 | 1.03 |
| FPS/W | Image 1 | yolo11l | 0.46 | 0.95 |
| FPS/W | Image 1 | yolo11x | 0.48 | 0.64 |
| FPS/W | Image 2 | yolo11n | 1.69 | 1.35 |
| FPS/W | Image 2 | yolo11s | 1.68 | 1.22 |
| FPS/W | Image 2 | yolo11m | 0.80 | 1.02 |
| FPS/W | Image 2 | yolo11l | 0.53 | 0.94 |
| FPS/W | Image 2 | yolo11x | 0.48 | 0.63 |
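
The FPS/W values follow from dividing each FPS measurement by the corresponding peak power. A minimal sketch of that calculation for Image 2 is shown below; the dictionary layout and the helper name `fps_per_watt` are illustrative choices, not part of the paper's tooling.

```python
# FPS and peak power (W) for Image 2, stored as (GPU, NPU) pairs
# copied from the tables above.
fps = {
    "yolo11n": (93.18, 58.23),
    "yolo11s": (94.25, 54.35),
    "yolo11m": (78.98, 48.60),
    "yolo11l": (57.62, 45.98),
    "yolo11x": (56.36, 34.17),
}
peak_power_w = {
    "yolo11n": (55.0, 43.0),
    "yolo11s": (56.0, 44.7),
    "yolo11m": (99.0, 47.6),
    "yolo11l": (109.0, 48.9),
    "yolo11x": (118.0, 54.6),
}


def fps_per_watt(model: str) -> tuple:
    """Return (GPU FPS/W, NPU FPS/W) for one YOLO11 variant."""
    gpu_fps, npu_fps = fps[model]
    gpu_w, npu_w = peak_power_w[model]
    return gpu_fps / gpu_w, npu_fps / npu_w


for model in fps:
    gpu_eff, npu_eff = fps_per_watt(model)
    print(
        f"{model}: GPU {gpu_eff:.2f} FPS/W, NPU {npu_eff:.2f} FPS/W, "
        f"NPU/GPU ratio {npu_eff / gpu_eff:.2f}"
    )
```

For this image, the ratio falls below 1 for yolo11n and yolo11s and rises above 1 from yolo11m upward, which is the crossover the object detection results describe.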

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hong, Y.; Kim, D. Performance and Efficiency Gains of NPU-Based Servers over GPUs for AI Model Inference. Systems 2025, 13, 797. https://doi.org/10.3390/systems13090797
