1. Introduction

With the increasing complexity of urban transportation systems and the continuous evolution of travel demand structures, taxi pick-up demand prediction plays a vital role in optimizing fleet dispatch and providing route recommendations for improving passenger pick-up efficiency. The significantly improved accessibility of large-scale, high-frequency travel data has made accurate taxi pick-up demand prediction based on data-driven methods increasingly feasible.

Traditional regression models struggle to characterize the nonlinear features and regional heterogeneity in taxi pick-up demand; therefore, recent studies have gradually introduced machine learning methods to improve prediction performance by utilizing richer variable structures and more flexible modeling frameworks. Liu et al. [1] developed an ensemble prediction model by combining ridge regression and random forest and incorporated weather and air quality indicators to effectively improve the accuracy and stability of taxi demand prediction in hotspot areas. Roy et al. [2] proposed a cross-city prediction framework to evaluate the spatial transferability of various machine learning models for ride-hailing demand prediction. The results showed that with knowledge transfer strategies, the models could still achieve good predictive performance in new cities. Building on this, Agarwal et al. [3] introduced a dynamic surge pricing factor and combined structural modeling with empirical simulation to quantify the substitution effect of platform price changes on taxi orders and demonstrated the value of price information in dispatch optimization. Sun et al. [4] focused on user cancelation behavior and developed a deep residual network model to reveal the critical impact of vehicle distance and user waiting time on the probability of order cancelation, improving the foresight and stability of platform dispatching. At the system level, Beojon et al. [5] developed a multi-region dynamic Macroscopic Fundamental Diagram (MFD) model for ride-hailing and ride-sharing services, which captures the dynamic flows of vehicles and orders across regions, thereby improving overall operational efficiency and regulatory stability. Jin et al. [6] proposed an integrated prediction framework combining fuzzy clustering and reinforcement learning. The framework first identifies typical travel patterns, then dynamically selects appropriate model combinations for prediction, and finally employs kernel density estimation to generate prediction intervals, thereby enhancing the accuracy and stability of ride-hailing demand forecasting.

As traditional machine learning methods struggle to capture the relationships between regions, studies have gradually adopted graph convolutional networks to model the connections and influences among regions from a holistic perspective. Tang et al. [7] constructed a spatiotemporal graph convolutional network model based on multi-community partitioning. By incorporating both a geographic adjacency graph and a functional similarity graph for joint modeling, the approach improved the clustering rationality of regional partitioning and demonstrated higher stability and generalization ability in cross-region taxi pick-up demand prediction. Feng et al. [8] proposed a multi-task graph neural network that jointly models taxi pick-up demand and OD travel demand. A shared regional representation was innovatively introduced to enable information integration across different spatial hierarchies, thereby improving the overall prediction performance.

In multi-region taxi pick-up demand prediction, graph neural networks alone have difficulty modeling the temporal variation in demand. Therefore, research has gradually shifted focus toward integrating temporal modeling methods to more fully capture spatiotemporal dependencies. Ke et al. [9] constructed a multi-task model that integrates multiple graph convolutions and GRU and, for the first time, separately modeled the spatial and temporal features of different travel modes (e.g., ride-hailing, ride-sharing). A shared mechanism was used to enable information transfer across modes, improving the prediction accuracy for various service types. Ye et al. [10] proposed a coupled graph convolutional model that dynamically adjusts the connections between regions at each layer and integrates GRU to handle the temporal variations. This enables the model to learn both inter-regional relationships and temporal patterns in demand, thereby enhancing its adaptability to changes in travel demand. Liu et al. [11] proposed a context-aware spatiotemporal network (CSTN), which innovatively integrates local spatial convolution, ConvLSTM-based temporal modeling, and a global correlation weighting mechanism. The model captures demand variations from three perspectives (influence from neighboring regions, historical evolution trends, and overall patterns), thereby improving its representation capacity and prediction performance. Zhao et al. [12] proposed a coupled neural network model that uses a dual-channel structure to separately process taxi and ride-hailing demand and integrates LSTM for temporal modeling. A semantic interaction mechanism is employed to enable information sharing between the two travel modes, improving the accuracy and coordination of multi-modal demand prediction. Chen et al. [13] constructed an integrated prediction framework combining GCN and LSTM and introduced a bagging learning strategy to address the data imbalance problem in order records. The approach demonstrated stronger prediction robustness in regions with sparse demand. Jin et al. [14] constructed a spatiotemporal prediction model named MSTIF-Net by integrating GCN and LSTM, which adopts a multi-branch structure to incorporate heterogeneous information such as holidays, weather, and orders, thereby enhancing the multi-factor modeling capability and prediction accuracy in ride-hailing demand forecasting. Chen et al. [15] proposed a deep spatiotemporal prediction model that integrates multi-source information. The model uses GCN to capture spatial dependencies across regions and employs an LSTM module for short-term temporal modeling. External variables such as weather and events are also introduced to enhance awareness of environmental influences, significantly improving short-term prediction accuracy and robustness. Zhong et al. [16] constructed the RF-STED model, which adopts a multi-branch structure combining ConvLSTM and GCN, and designed a residual feature extractor to enhance the reconstruction of OD graph structures within an encoder–decoder architecture, significantly improving the accuracy of short-term OD demand prediction. Liu et al. [17] proposed the H-ConvLSTM model, which combines hexagonal convolution with ConvLSTM to investigate how different combinations of spatial grids and temporal intervals (a total of 36 combinations) affect the prediction accuracy of ride-hailing departure and arrival demand. The experimental results show that the best performance is achieved with a grid size of 800 m and a time interval of 30 min and further reveal that departure and arrival demand exhibit different sensitivities to granularity changes.

Since traditional graph convolutional networks cannot distinguish the importance of adjacent edges, researchers have gradually combined graph neural networks (GNNs) with attention mechanisms to enhance the modeling of heterogeneity in adjacency relationships. Makhdomi et al. [18] proposed a passenger request prediction framework based on graph neural networks. By constructing an OD network graph and incorporating an attention mechanism, the framework assigns weights to adjacent edges, thereby achieving more accurate OD request prediction. Zhang et al. [19] proposed the DNEAT model, which integrates graph neural networks with attention mechanisms to construct a dynamic graph without relying on a fixed adjacency structure and enhances the ability to capture OD-level regional interaction relationships by simultaneously updating node and edge representations. Guo et al. [20] proposed a multi-gated deep graph network that integrates graph convolution, attention mechanisms, and multi-layer gating structures. By adaptively adjusting inter-regional connection strength via attention mechanisms and filtering key spatiotemporal information through gating units, the approach effectively improves the modeling accuracy and robustness of taxi pick-up demand. Wang et al. [21] proposed a graph neural network-based modeling approach that constructs a dynamic directed weighted graph to represent inter-regional travel relationships. By integrating multiple distinct attention mechanisms, including temporal attention, directional attention, and edge weight attention, the approach effectively captures changing trends in passenger travel behavior and improves prediction accuracy. Ai et al. [22] proposed a multi-step prediction model named PSA-DM, which uses a graph attention network (GAT) to model spatial relationships between regions and employs a self-attention structure to predict demand over multiple future time steps. It also feeds the previous step’s prediction results into subsequent steps, improving the coherence and accuracy of multi-step forecasting.

Although graph neural networks have achieved certain results by separately integrating attention mechanisms and LSTM, they still struggle to simultaneously account for spatial dependencies, the differential influence of neighboring nodes, and temporal variation characteristics. Therefore, research has further combined these three aspects to enhance the modeling capacity for complex traffic patterns. Mi et al. proposed a residual attention-based graph convolutional LSTM network, in which the graph convolution module is constructed based on GatedGCN. A residual attention mechanism and a multi-source external variable fusion structure are introduced, combined with LSTM for temporal modeling, significantly improving the accuracy and spatiotemporal generalization in taxi demand prediction [23]. Li et al. [24] proposed three hybrid deep prediction models that integrate CNN with various types of RNNs (LSTM, BiLSTM, GRU, and ConvLSTM) to jointly model spatial and temporal features. An attention mechanism is introduced to highlight key time periods, and multi-source external factors such as weather and holidays are incorporated. These approaches lead to improved accuracy and stability in short-term ride-hailing demand prediction tasks.

Although the above models have achieved certain success in extracting spatial structural features and capturing dependencies between nodes, they generally adopt static attention mechanisms, which makes it difficult to flexibly adjust weights based on structural differences among neighboring nodes, thereby limiting their predictive capability in complex traffic networks. To address this, Brody et al. [25] proposed GATv2, which introduces a dynamic attention mechanism that enables the adaptive learning of attention weights under different contexts, significantly enhancing expressiveness and flexibility. On the official OGBN dataset (Open Graph Benchmark for Node Classification, a standard benchmark for graph neural networks proposed by the Stanford SNAP Lab in 2020), GATv2 improved the accuracy from 79.04% to 80.63%, and on the QM9 dataset it reduced the error by more than 11%, in both cases outperforming conventional GNNs combined with attention mechanisms. Liu et al. designed GMTP by integrating GATv2 with BERT, which comprehensively outperformed existing baselines on the Porto and Beijing datasets [26].

To further enhance the performance of graph attention networks in dynamic graph construction and heterogeneous information modeling, researchers have begun to introduce edge features into the attention mechanism to strengthen the expressive power of modeling relationships between nodes. Chen et al. [27] constructed an edge embedding matrix to feed edge features and node features jointly into the attention mechanism, enabling the joint training of edges and nodes. This approach improved discriminative performance in structured tasks such as graph classification and social network modeling. Building on this, Zhao et al. proposed a dynamic edge construction module that integrates the GATv2 attention mechanism with temporal encoding to capture the evolution of sensor relationships in multivariate time series, enabling high-precision early fault detection in industrial Internet of Things (IIoT) systems [28]. Although existing studies have achieved notable progress in areas such as industrial monitoring and structured graph classification, the integration of edge features into graph attention mechanisms has not yet seen in-depth exploration or practical application in the context of spatial behavior modeling for taxi or ride-hailing pick-up demand prediction. This provides an important space for innovation in this study.

Table 1 summarizes the commonly used components in existing research on taxi pick-up demand prediction, including the adopted methods, whether graph neural networks are introduced, whether LSTM or GRU is used for time series modeling, whether attention mechanisms are employed, and whether multi-dimensional edge features are integrated. Through a systematic review of the literature, it was found that most current models still exhibit limitations in modeling inter-regional relationships. Specifically, edge features are generally not incorporated into the computation of attention mechanisms, resulting in the insufficient utilization of edge information. In addition, the integration of graph modeling, attention mechanisms, and temporal structures remains incomplete, with most studies adopting only one or two of these components. Moreover, more expressive architectures such as GATv2 have not yet been effectively applied. In contrast, the prediction framework adopted in this study achieves innovations and improvements in the following aspects:

First, an edge feature encoder is designed to explicitly incorporate temporal similarity, spatial proximity, and POI similarity into the attention mechanism, thereby enhancing the model’s expressive capacity and predictive performance for heterogeneous graphs.

Second, GATv2 is introduced to capture heterogeneous associations between nodes and is combined with LSTM to effectively model the temporal evolution of taxi pick-up demand.

The remainder of this paper is organized as follows: Section 2 provides a detailed description of the structure of the adopted Edge-GATv2-LSTM model and the construction of multi-dimensional edge features. Section 3 presents the experimental setup, evaluation metrics, and prediction results and discusses the model’s adaptability to multi-region traffic flow. Finally, Section 4 concludes the paper and outlines future research directions.

2. Dynamic Prediction Model for Multi-Region Taxi Pick-Up Demand

2.1. Problem Definition

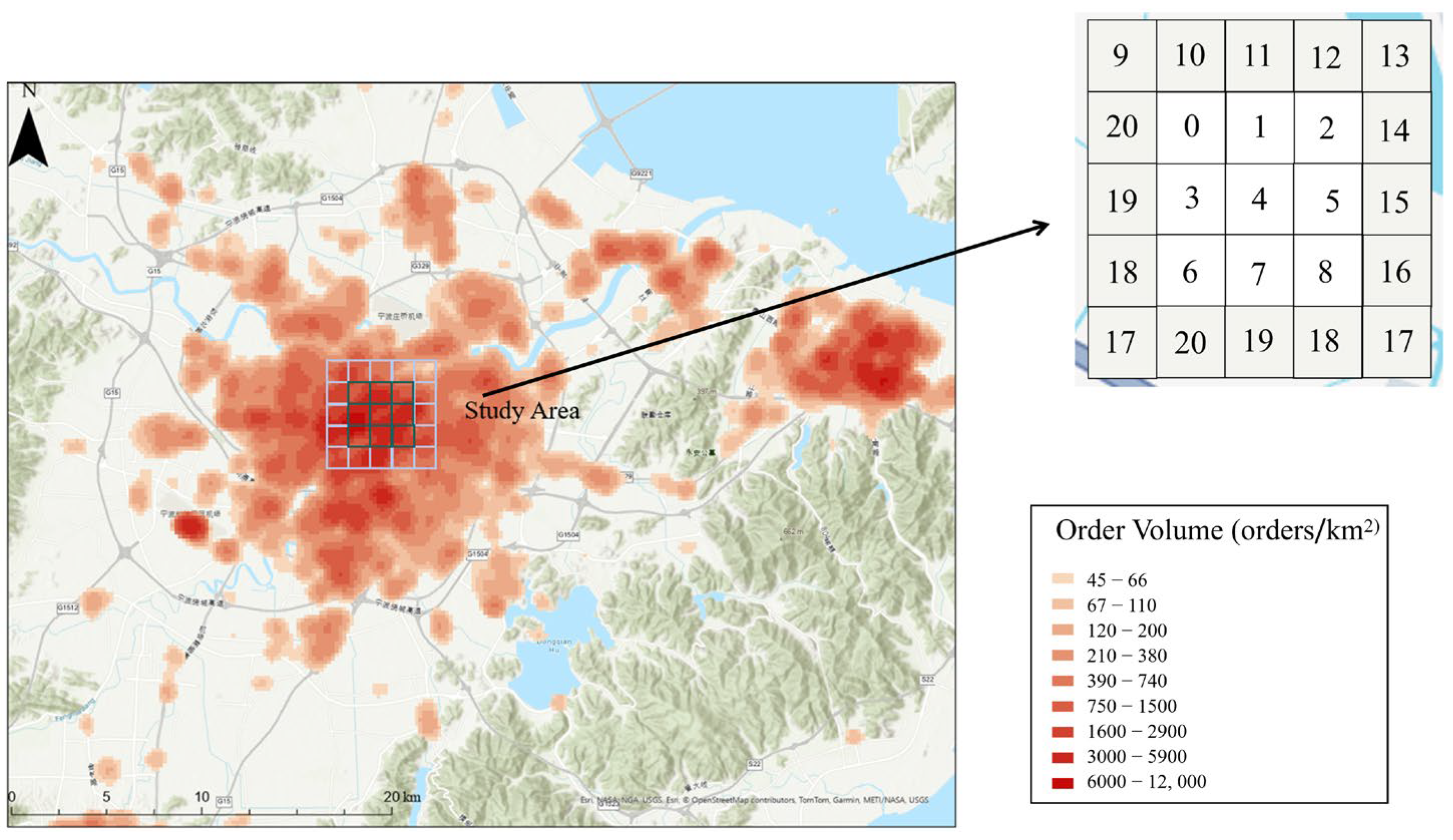

The modeling parameters for the taxi pick-up demand prediction task are shown in Table A1.

This study focuses on the region-level prediction of urban taxi pick-up demand. The study area is partitioned into N non-overlapping spatial units (1 km × 1 km), and the number of trip requests initiated in each region is counted at a fixed temporal granularity of 15 min. We model the urban regional system as an undirected graph $G = (V, E, A)$, where $V$ denotes the set of nodes, consisting of $N$ nodes representing spatial units; $E$ denotes the set of edges, used to describe the connectivity between nodes; and $A \in \mathbb{R}^{N \times N}$ is the adjacency matrix of graph $G$. Each node in the graph corresponds to an urban region, where a one-dimensional time series is recorded at a uniform sampling frequency, representing the number of taxi orders in that region during each time slot.

Suppose that the one-dimensional time series recorded by each node in the traffic network $G$ represents the taxi order volume of the corresponding region. Denote the observed value of node i at time slot t as $x_t^i \in \mathbb{R}$, which represents the number of taxi orders at that node during this time slot. Concatenate the observed values of node i over the past τ time slots to obtain a time series vector $\mathbf{x}_i = (x_{t-\tau+1}^i, \dots, x_t^i) \in \mathbb{R}^{\tau}$. Denote the observed values of all nodes at time period t as $X_t = (x_t^1, \dots, x_t^N)^{\top} \in \mathbb{R}^{N}$, which represents the state of all nodes at time period t. $\mathcal{X} = (X_{t-\tau+1}, \dots, X_t) \in \mathbb{R}^{N \times \tau}$ denotes the value of all the features of all the nodes over τ time slices.

2.1.1. Input Variables

Suppose the sampling frequency is q times per day. Let t denote the current time and

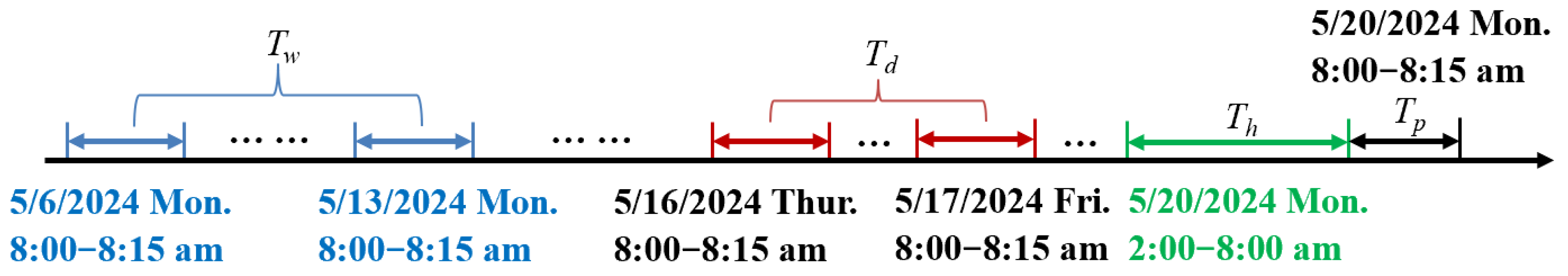

be the length of the prediction window. As illustrated in

Figure 1, three time series segments with lengths

,

, and

are extracted along the temporal axis to serve as the inputs for the recent, daily periodic, and weekly periodic components, respectively. Each of

,

, and

is assumed to be an integer multiple of

. The details of these three segments are described as follows:

The recent segment: $\mathcal{X}_h$, a segment of historical time series directly adjacent to the predicting period, as shown by the green part of Figure 1.

The daily periodic segment: $\mathcal{X}_d$ consists of the segments of the past few days at the same time period as the predicting period, as shown by the red part of Figure 1.

The weekly periodic segment: $\mathcal{X}_w$ is composed of the segments of the last few weeks, which have the same week attributes and time intervals as the forecasting period, as shown by the blue part of Figure 1.

To comprehensively model the dependencies of taxi pick-up demand across different temporal scales, we concatenate the recent segment $\mathcal{X}_h$, daily periodic segment $\mathcal{X}_d$, and weekly periodic segment $\mathcal{X}_w$ to form a unified input tensor $\mathcal{X}$. The concatenation operation merges the three segments, each representing a distinct feature source, into a single tensor. Each time period in the final tensor contains three feature dimensions, corresponding to recent, daily, and weekly temporal dependencies. The concatenated tensor serves as the overall input to the model, enabling the simultaneous extraction of temporal features at different time scales for predicting future taxi pick-up demand.
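The segment construction described above can be sketched as index arithmetic over time slots. This is a minimal sketch under stated assumptions, not the authors' implementation: the function name `segment_indices`, the argument names `t_p`, `t_h`, `t_d`, and `t_w`, and the exact offsets (daily segments shifted back by multiples of q slots, weekly segments by multiples of 7q slots) are assumptions inferred from the description of the three segments. With 15 min slots, q = 96.

```python
def segment_indices(t, q, t_p, t_h, t_d, t_w):
    """Return (recent, daily, weekly) lists of time-slot indices.

    t   : index of the current (last observed) time slot
    q   : number of samples per day (96 for 15-min slots)
    t_p : length of the prediction window, covering slots t+1 .. t+t_p
    t_h, t_d, t_w : segment lengths, each an integer multiple of t_p
    """
    for name, length in (("t_h", t_h), ("t_d", t_d), ("t_w", t_w)):
        if length % t_p != 0:
            raise ValueError(f"{name} must be an integer multiple of t_p")

    # Recent: the t_h slots immediately preceding the prediction window.
    recent = list(range(t - t_h + 1, t + 1))

    # Daily: the prediction window shifted back by whole days (assumed offset q).
    daily = []
    for k in range(t_d // t_p, 0, -1):
        start = t - k * q + 1
        daily.extend(range(start, start + t_p))

    # Weekly: the prediction window shifted back by whole weeks (assumed offset 7q).
    weekly = []
    for k in range(t_w // t_p, 0, -1):
        start = t - 7 * k * q + 1
        weekly.extend(range(start, start + t_p))

    return recent, daily, weekly


recent, daily, weekly = segment_indices(t=1000, q=96, t_p=2, t_h=4, t_d=4, t_w=2)
```

In this example the prediction window covers slots 1001 and 1002, so the daily segment picks up the same two slots one and two days earlier, and the weekly segment picks them up one week earlier.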

Figure 1. An example of constructing the input of time series segments.


2.1.2. Output Variables

The objective of this study is to predict the taxi pick-up demand at each node for time period t + 1 based on the given historical observation data. The predicted pick-up demand values for all nodes at time t + 1 are denoted as $\hat{Y}_{t+1} \in \mathbb{R}^{N}$.

2.2. Overview of the Edge-GATv2-LSTM Model Architecture

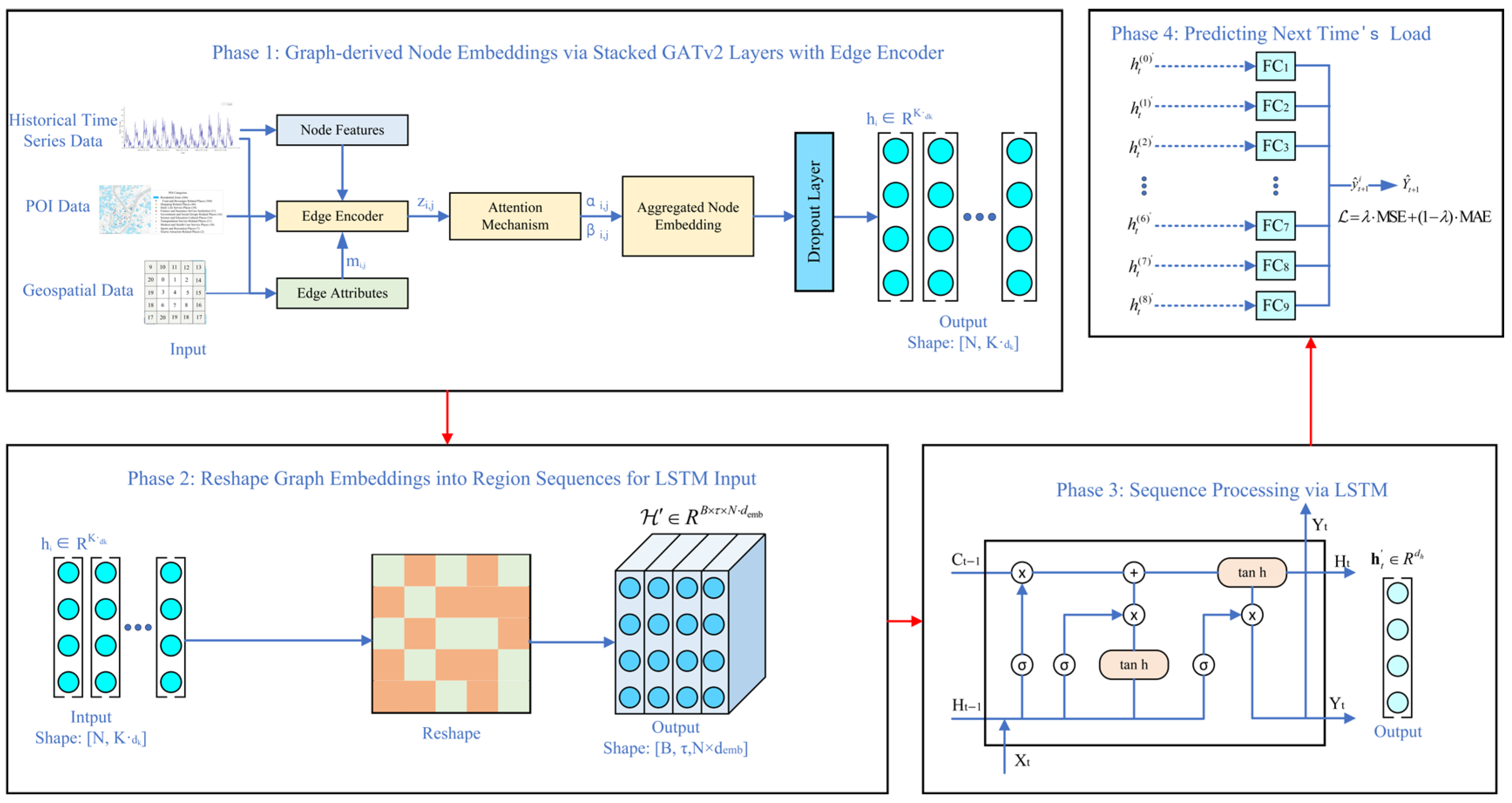

This study adopts a predictive architecture, Edge-GATv2-LSTM, which integrates graph neural networks with temporal modeling capabilities, aiming to achieve accurate short-term forecasting of taxi pick-up demand across different urban regions. The model architecture, as illustrated in Figure 2, consists of four main stages.

First, the urban spatial structure is modeled as an undirected graph based on the similarity of historical order behavior, and multiple types of information—such as the correlation coefficient of pick-up orders, functional similarity of regions, and geographic proximity—are incorporated as edge features. These features are embedded via an edge encoder to obtain multi-dimensional edge representations, which are then used to construct a weighted adjacency matrix. Subsequently, the GATv2 attention mechanism is introduced to adaptively model the interactions between each node and its neighbors while incorporating edge features to generate multi-head attention weights, thereby enabling the efficient modeling of heterogeneous relationships between different urban regions. A dropout mechanism is also applied to reduce the risk of overfitting, and node embedding vectors encoding spatial relationship information are ultimately generated through graph convolution operations.

Next, the output of the graph neural network is reorganized into a time series format and used as the input to the LSTM network. The LSTM models the embedding sequences of the different urban regions and learns how taxi pick-up demand varies over time, thereby providing a temporal foundation for predicting taxi pick-up volumes.

Finally, a multi-layer fully connected network is employed to map the output of the LSTM to the predicted taxi pick-up demand for the next time period in each region. The overall prediction is optimized by jointly minimizing the weighted mean squared error (MSE) and mean absolute error (MAE) loss functions, thereby enabling the accurate prediction of future taxi pick-up demand across different regions.

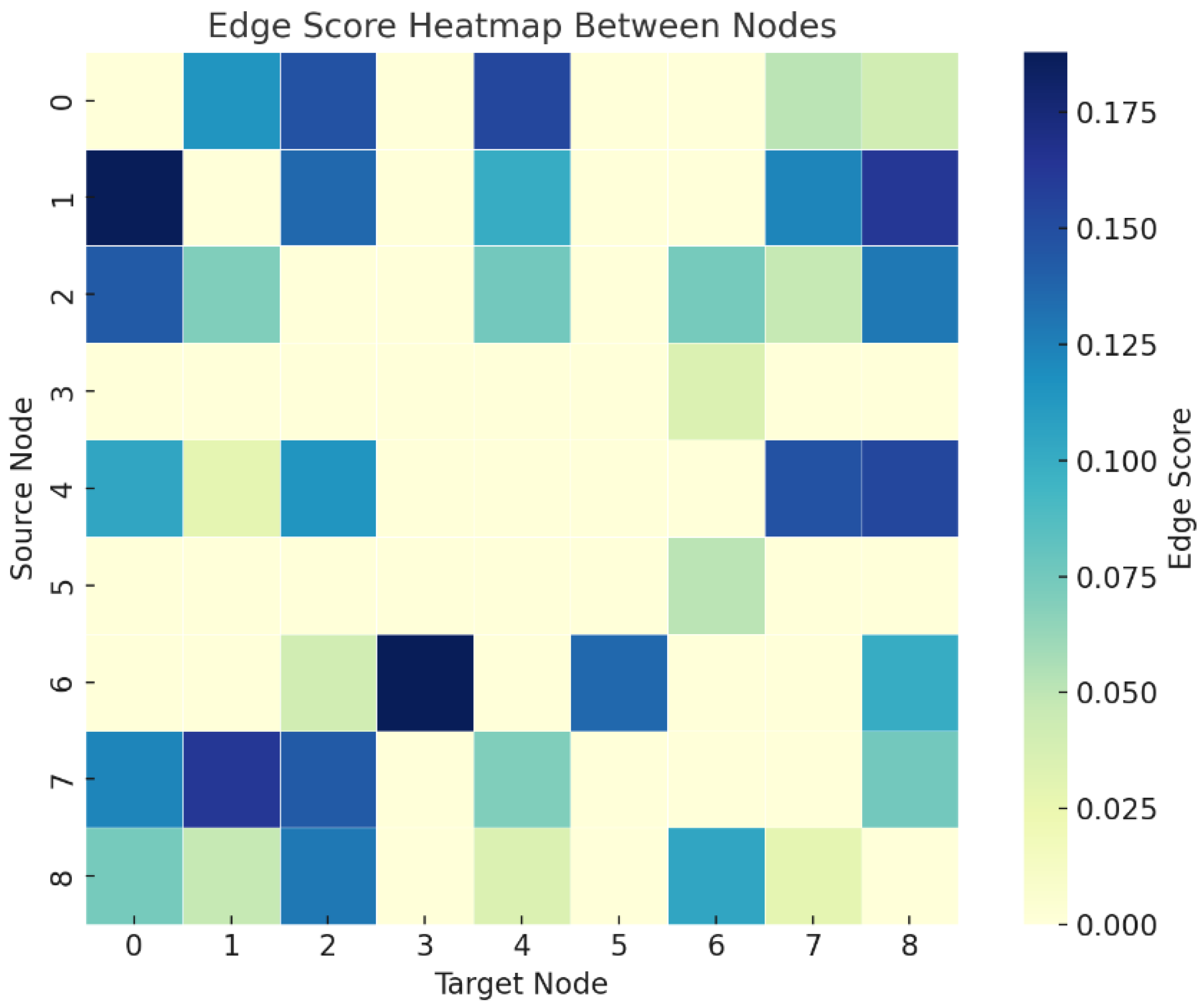

2.3. Graph Structure Construction and Edge Feature Definition

2.3.1. Regional Graph Structure Construction

The adjacency matrix of the graph is generated based on the time series similarity of historical order behavior between regions. Specifically, for any two regional nodes, we extract their order volume sequences over all historical time periods in the training set and compute the Pearson correlation coefficient between the two sequences, based on which the adjacency matrix is constructed as follows:

Here, $\rho_{ij}$ denotes the Pearson correlation coefficient between the order volume sequences of regions i and j, and $\theta$ is the predefined correlation threshold.
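The thresholding step can be illustrated with a short sketch. It assumes a binary, symmetric adjacency matrix in which an edge is kept when the Pearson coefficient is at least the threshold; the comparison direction (`>=`), the omission of self-loops, and the names `pearson` and `build_adjacency` are assumptions for illustration rather than details confirmed by the text.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # assumption: a constant series is treated as uncorrelated
    return cov / math.sqrt(var_x * var_y)


def build_adjacency(series, threshold):
    """Binary, symmetric adjacency matrix from pairwise correlations.

    Assumption: an edge exists when the coefficient is >= threshold,
    and self-loops are left out.
    """
    n = len(series)
    adj = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if pearson(series[i], series[j]) >= threshold:
                adj[i][j] = adj[j][i] = 1
    return adj


orders = [
    [1, 2, 3, 4],  # region 0
    [2, 4, 6, 8],  # region 1: moves with region 0
    [4, 3, 2, 1],  # region 2: moves against region 0
]
adjacency = build_adjacency(orders, threshold=0.5)
```

Only regions 0 and 1 end up connected here, because region 2 is negatively correlated with both.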

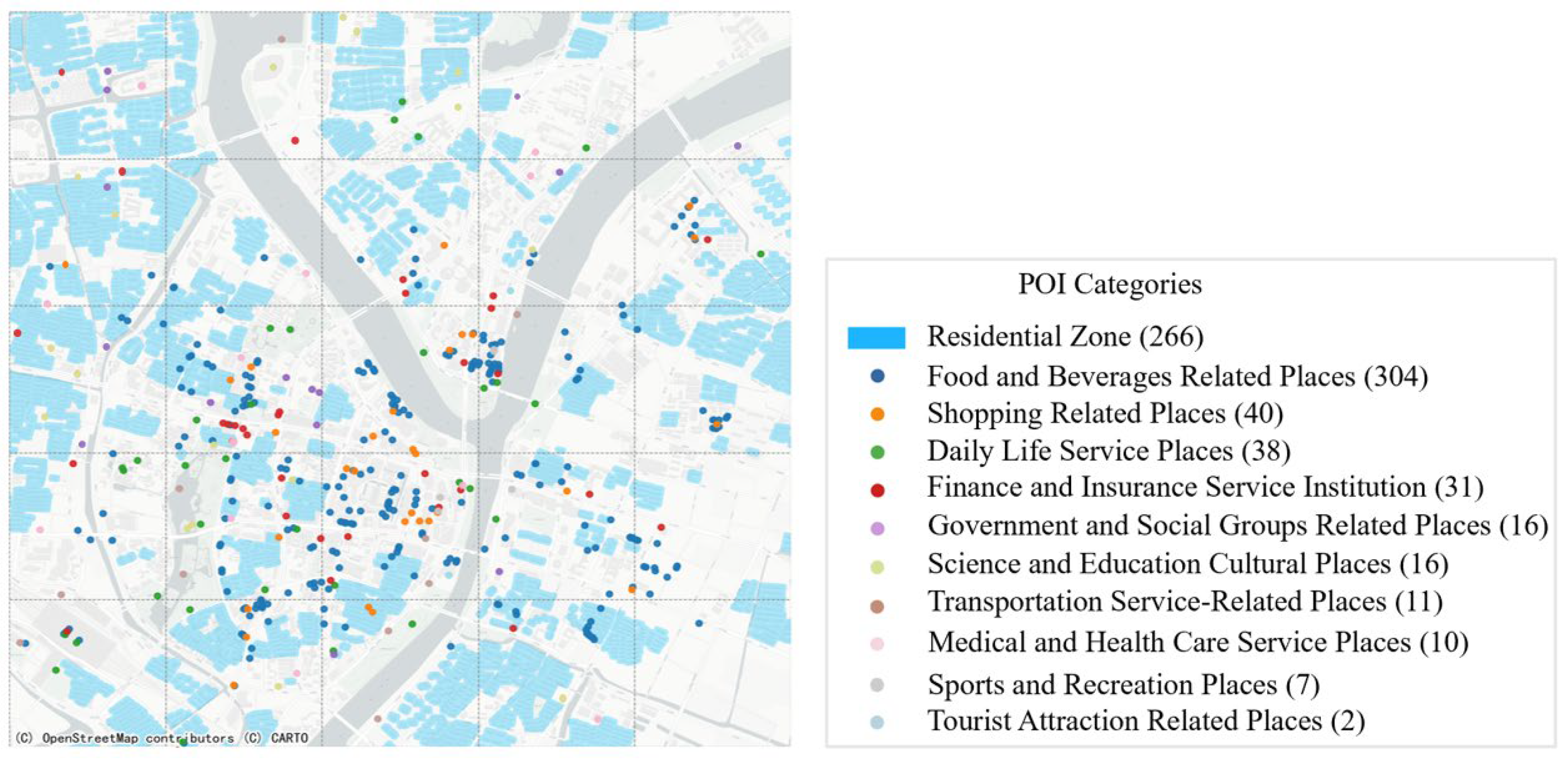

2.3.2. Definition of Three-Dimensional Edge Features

To better describe the connections between different urban regions, we construct a three-dimensional edge feature vector that includes demand correlation, geographic proximity, and functional similarity. These three features have been widely used in previous research as important factors for modeling spatial relationships. Specifically, the demand correlation reflects how similar the changes in taxi orders are between two regions over time, helping to capture behavioral links; the geographic proximity describes how close two regions are in space, based on the distance between their center points, and it is commonly used in graph construction; and the functional similarity is based on the types and numbers of POIs in each region, which reflects how similar the two areas are in terms of land use and urban functions. Many studies have used these features separately or in pairs [7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,29,30,31]. This study combines all three into a unified vector by embedding them into the GATv2 attention mechanism through a two-layer nonlinear encoder. This design enables the model to dynamically adjust attention weights based on both node attributes and edge characteristics, allowing for the more accurate modeling of inter-regional dependencies and temporal dynamics when integrated with LSTM.

To enhance the semantic representation of edges in the graph, we construct a three-dimensional edge feature vector $\mathbf{e}_{ij}$ for each edge $(i, j)$ that is determined to exist (i.e., $A_{ij} = 1$) by the adjacency matrix, as follows:

We adopt 11 types of POIs and construct a vector $\mathbf{p}_i = (p_{i,1}, \dots, p_{i,11})$, where $p_{i,m}$ denotes the number of POIs of type m in region i. The functional similarity between two regions is calculated using the centered cosine similarity. The specific calculation formulas for each component are given as follows:

The meanings of the parameters are as follows:

$g_{ij}$: Geographic proximity (normalized distance; a larger value indicates that the two regions are closer);

$d_{ij}$: Euclidean distance between the geographic centroids of region i and region j;

$d_{\min}, d_{\max}$: Minimum and maximum geographic distances among all region pairs;

$\bar{p}_i$: Mean number of POIs of each type in region i.
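The three edge components can be sketched as follows. This is an illustrative sketch only: the min-max form of the proximity term (flipped so that closer pairs score higher), the ordering of the three components, and the function names are assumptions consistent with the parameter descriptions above, not formulas confirmed by the text.

```python
import math


def geographic_proximity(dist, d_min, d_max):
    """Min-max normalised distance, flipped so closer pairs score higher.

    Assumes d_max > d_min.
    """
    return (d_max - dist) / (d_max - d_min)


def centered_cosine(p, q):
    """Cosine similarity after subtracting each region's own mean POI count."""
    mean_p, mean_q = sum(p) / len(p), sum(q) / len(q)
    cp = [a - mean_p for a in p]
    cq = [b - mean_q for b in q]
    norm = math.sqrt(sum(a * a for a in cp)) * math.sqrt(sum(b * b for b in cq))
    if norm == 0:
        return 0.0  # assumption: flat POI profiles carry no similarity signal
    return sum(a * b for a, b in zip(cp, cq)) / norm


def edge_features(demand_corr, dist, d_min, d_max, poi_i, poi_j):
    """Three-dimensional edge feature vector for one region pair."""
    return [
        demand_corr,                               # demand correlation
        geographic_proximity(dist, d_min, d_max),  # geographic proximity
        centered_cosine(poi_i, poi_j),             # functional similarity
    ]


features = edge_features(
    demand_corr=0.8, dist=3.0, d_min=1.0, d_max=5.0,
    poi_i=[1, 2, 3], poi_j=[2, 4, 6],
)
```

Centering before taking the cosine means that two regions with proportional POI profiles, as in the example, are treated as functionally identical even though their absolute POI counts differ.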

2.4. Edge-GATv2 Multi-Head Attention Network

2.4.1. Multi-Head Attention Mechanism

Prior to detailing the core components of our method, we briefly review the multi-head attention mechanism [32], which is employed in both the spatial embedding and attention modules of our model.

A multi-head self-attention mechanism uses a learned feedforward neural network to map the input sequences into three $d_k$-dimensional vectors: the query vector $\mathbf{Q}$, the key vector $\mathbf{K}$, and the value vector $\mathbf{V}$. First, the scaled dot-product attention between $\mathbf{Q}$ and $\mathbf{K}$ is calculated, and then a softmax function is applied to obtain the attention weight $\alpha$ as follows:

where

$\mathbf{Q}^{(k)}$ represents the query vector of the k-th attention head;

$\mathbf{K}^{(k)}$ represents the key vector of the k-th attention head;

$d_k$ denotes the dimensionality of each attention head, satisfying $d_k = d_{emb} / K$, where $d_{emb}$ is the overall output dimension of the attention mechanism and $K$ is the number of attention heads;

$\alpha^{(k)}$ represents the attention weights between all node pairs in the k-th head.

Based on the attention weights, the output of each head is computed as follows:

where

$\mathbf{V}^{(k)}$: the value vector in the k-th attention head;

$\mathrm{head}^{(k)}$: the output feature of the k-th attention head.

After computing the attention outputs from all K heads, the results are spliced together and passed through a linear transformation to obtain the final output representation:

where

$\mathrm{Concat}(\mathrm{head}^{(1)}, \dots, \mathrm{head}^{(K)})$: concatenated outputs of the K heads along the feature dimension;

$\mathrm{MHA}$: the final output of the multi-head attention mechanism.
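For reference, the three steps reviewed above (attention weights, per-head output, and concatenation) correspond to the standard scaled dot-product formulation of multi-head attention [32]. The block below is a sketch of that standard form; the symbol names ($\mathbf{Q}^{(k)}$, $\mathbf{K}^{(k)}$, $\mathbf{V}^{(k)}$, $\mathrm{head}^{(k)}$, $W^{O}$, $\mathrm{MHA}$) are assumptions chosen to match the definitions in the text, with bold $\mathbf{K}^{(k)}$ for keys and plain $K$ for the number of heads.

```latex
% Attention weights of head k (softmax applied row-wise)
\alpha^{(k)} = \operatorname{softmax}\!\left(
    \frac{\mathbf{Q}^{(k)} \, {\mathbf{K}^{(k)}}^{\top}}{\sqrt{d_k}}
\right),
\qquad d_k = \frac{d_{emb}}{K}

% Output of head k
\mathrm{head}^{(k)} = \alpha^{(k)} \, \mathbf{V}^{(k)}

% Concatenation of the K heads followed by a linear transformation W^O
\mathrm{MHA} = \operatorname{Concat}\!\left(
    \mathrm{head}^{(1)}, \dots, \mathrm{head}^{(K)}
\right) W^{O}
```

The division by $\sqrt{d_k}$ keeps the dot products from growing with the head dimension, which would otherwise push the softmax into regions with very small gradients.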

2.4.2. GATv2: An Attention Mechanism with Structural Improvements

The graph attention network (GAT) is a type of graph neural network architecture based on neighbor-weighted aggregation, originally proposed by Veličković et al. Its core idea is to treat the input features of the target node as the query and those of its neighboring nodes as keys, dynamically computing the importance scores of neighbors via an attention mechanism. These scores are then used to perform a weighted aggregation, thereby updating the node representations [33].

The query vector is generated from the features of the target node and is used to measure its degree of attention to each neighbor, while the key vector is generated from the features of the neighboring nodes and is involved in the attention scoring function. The attention score function is defined as follows:

where

$d$: the dimensionality of the input features.

$\mathbf{x}_i, \mathbf{x}_j \in \mathbb{R}^{d}$: the original input features of the target node i and its neighbor node j, respectively. The input feature dimension d is equal to the time window length τ, i.e., d = τ.

$d_k$: the dimensionality of each attention head.

$W^{(k)}$: the linear transformation matrix for the query in the k-th attention head of the attention module in GAT.

$\mathbf{a}^{(k)}$: the attention weight vector in the k-th attention head of GAT.

$\Vert$: the feature concatenation operation.

LeakyReLU: a nonlinear activation function.

$s_{ij}^{(k)}$: the attention score from node i to its neighbor node j in the k-th attention head.

However, the scoring function in GAT essentially follows a static attention mechanism, where all query nodes rank their neighbors in the same way. This prevents the model from differentiating the importance of neighbors based on the query node’s own features, thereby limiting its representational capacity.

To address the above limitation, Brody et al. proposed an improved attention mechanism called GATv2, which rearranges the computation order of the attention function. Specifically, the concatenated query and key are first passed through a nonlinear transformation, followed by scoring using an attention weight vector. This design enhances the expressive power of the model [30]. The attention scoring function is defined as follows:

where

$W_{v2}^{(k)}$: the linear transformation matrix in the attention module of GATv2 for the k-th attention head;

$\mathbf{a}_{v2}^{(k)}$: the attention weight vector of the k-th attention head in GATv2.

Equations (8) and (9) enable the transition from static to dynamic attention, allowing each target node to adaptively select neighbor information based on its own state. GATv2 has demonstrated stronger generalization and robustness across various tasks and has become a mainstream alternative in graph attention modeling.
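The difference between the two scoring functions is only the order of operations. As a sketch, the standard forms given by Veličković et al. for GAT and by Brody et al. for GATv2 can be written side by side as below. The symbol names ($s_{ij}^{(k)}$ for the score, $\mathbf{x}_i$ and $\mathbf{x}_j$ for node features, $W^{(k)}$, $\mathbf{a}^{(k)}$, and the subscript $v2$) are assumptions for illustration and may differ from the paper's notation.

```latex
% GAT: linear map, concatenation, dot product with a, then LeakyReLU
s_{ij}^{(k)} = \mathrm{LeakyReLU}\!\left(
    {\mathbf{a}^{(k)}}^{\top}
    \left[ W^{(k)} \mathbf{x}_i \,\Vert\, W^{(k)} \mathbf{x}_j \right]
\right),
\qquad W^{(k)} \in \mathbb{R}^{d_k \times d},\;
\mathbf{a}^{(k)} \in \mathbb{R}^{2 d_k}

% GATv2: concatenation, linear map, LeakyReLU, then dot product with a
s_{ij}^{(k)} = {\mathbf{a}_{v2}^{(k)}}^{\top} \,
\mathrm{LeakyReLU}\!\left(
    W_{v2}^{(k)} \left[ \mathbf{x}_i \,\Vert\, \mathbf{x}_j \right]
\right),
\qquad W_{v2}^{(k)} \in \mathbb{R}^{d_k \times 2d},\;
\mathbf{a}_{v2}^{(k)} \in \mathbb{R}^{d_k}
```

In the GAT form, the argument of LeakyReLU splits into one term that depends only on node i and one that depends only on node j; since LeakyReLU is monotonic, every query node ranks its neighbors in the same order. In the GATv2 form, the nonlinearity sits between the two learned parameters, so the ranking of neighbors can depend on the query node.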

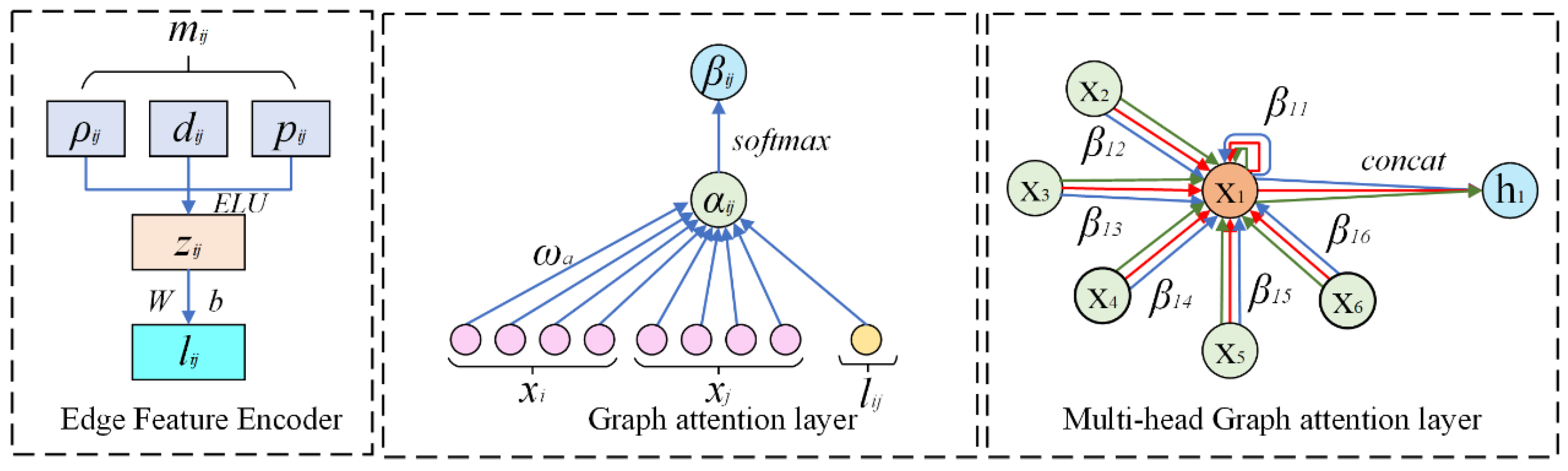

Building on the GATv2 attention mechanism, this study introduces edge feature awareness by feeding a combined input of three-dimensional edge attributes and node concatenation features into the attention scoring function, enhancing the expressiveness of the edge information.

2.4.3. Edge-GATv2: An Improved Graph Attention Network Algorithm with Edge Weight Consideration

To transform the three-dimensional edge features into scalar weights for input into the graph attention mechanism, we design an edge feature encoder consisting of a two-layer feedforward network. Let H denote the hidden dimension: the first layer maps the initial three-dimensional edge features into an H-dimensional nonlinear vector representation to enhance the representational capacity of the edge information, and the second layer maps this representation to the output edge weight.

where

$W_1^{(k)}, \mathbf{b}_1^{(k)}$: the first-layer linear transformation parameters for the k-th attention head;

$W_2^{(k)}, b_2^{(k)}$: the second-layer linear transformation parameters for the k-th attention head;

$\mathbf{u}_{ij}^{(k)}$: the intermediate representation of the edge features between nodes (i, j) in the k-th attention head after the first layer of the feedforward network;

$\phi(\cdot)$: a nonlinear activation function;

$w_{ij}^{(k)}$: the final output edge weight.

The obtained edge weights are further utilized to refine the scoring mechanism of the k-th attention head, enabling the attention weights to simultaneously capture both node features and edge structural information, and the modified attention scoring function is defined as follows:

where

$W_e^{(k)}$: the linear transformation matrix of the k-th attention head in the attention module after incorporating edge features.

This mechanism explicitly incorporates edge features rather than relying on simple concatenation of the node features. In the attention scoring function, it performs deep encoding of multi-dimensional information, such as structural properties, spatial distance, and travel pattern similarity, through a two-layer learnable nonlinear transformation. In computing adjacency strengths, this modeling approach allows the attention mechanism to integrate historical behavioral relationships and spatial semantics between regions, thereby achieving a more nuanced differentiation of regional influence on the target node.

To further regulate the attention distribution and ensure that the weights are properly normalized, the attention scores described above are passed through a softmax function to obtain the normalized attention weights, as follows:

where

$\alpha_{ij}^{(k)}$: the normalized attention weight from node i to its neighboring node j in the k-th attention head;

$s_{ij}^{(k)}$: the unnormalized attention score;

$\mathcal{N}(i)$: the set of neighboring nodes of node i.

After obtaining the normalized attention weights, the model performs a weighted aggregation of the features of each node’s neighbors to generate an updated representation for the node. The computation is given as follows:

where

$\mathbf{z}_i^{(k)}$: the output vector of node i under the k-th attention head;

$W_o^{(k)}$: the linear transformation matrix of the k-th attention head;

$\mathbf{x}_j$: the input feature vector of neighboring node j;

$d$: the dimensionality of the original input features;

$d_k$: the output dimensionality of each attention head.

where

$\mathbf{z}_i$: the final representation of node i obtained by concatenating the outputs from all K attention heads.
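The pipeline above (edge encoder, edge-aware scoring, softmax over neighbors, weighted aggregation) can be sketched for a single attention head in plain Python. This is a hedged illustration, not the authors' implementation: how the scalar edge weight enters the score is not recoverable from the text, so the sketch assumes it is appended to the concatenated node features before the linear map. The ReLU in the encoder, the absence of an output nonlinearity, the requirement that every node has at least one neighbor, and all function and parameter names are likewise assumptions.

```python
import math
import random


def matvec(matrix, vec):
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def leaky_relu(vec, slope=0.2):
    return [v if v > 0 else slope * v for v in vec]


def random_matrix(rng, rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def edge_weight(feat, w1, b1, w2, b2):
    """Two-layer encoder: 3-d edge features -> H-d hidden -> scalar weight."""
    hidden = [max(0.0, h + b) for h, b in zip(matvec(w1, feat), b1)]  # ReLU (assumed)
    return sum(w * h for w, h in zip(w2, hidden)) + b2


def edge_gatv2_head(x, neighbors, edge_feats, params):
    """One attention head; returns (attention weights, updated node vectors)."""
    w_att, a, w_out, enc = params["w_att"], params["a"], params["w_out"], params["enc"]
    alphas, outputs = {}, []
    for i, nbrs in enumerate(neighbors):
        scores = []
        for j in nbrs:
            w_ij = edge_weight(edge_feats[(i, j)], *enc)
            # GATv2 ordering: linear map, then LeakyReLU, then dot product with a.
            # Assumption: the edge weight is appended to [x_i || x_j].
            z = leaky_relu(matvec(w_att, x[i] + x[j] + [w_ij]))
            scores.append(sum(ak * zk for ak, zk in zip(a, z)))
        # Softmax over the neighbours of node i (max-shifted for stability).
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        alphas[i] = [e / total for e in exps]
        # Weighted aggregation of linearly transformed neighbour features.
        out = [0.0] * len(w_out)
        for alpha, j in zip(alphas[i], nbrs):
            out = [o + alpha * v for o, v in zip(out, matvec(w_out, x[j]))]
        outputs.append(out)
    return alphas, outputs


rng = random.Random(0)
d, d_head, hidden = 4, 3, 8
x = [[rng.random() for _ in range(d)] for _ in range(3)]  # 3 regions
neighbors = [[1, 2], [0], [0]]                            # adjacency lists
edge_feats = {}
for i, nbrs in enumerate(neighbors):
    for j in nbrs:  # undirected graph: both directions share one feature vector
        edge_feats[(i, j)] = edge_feats.get((j, i), [rng.random() for _ in range(3)])
params = {
    "w_att": random_matrix(rng, d_head, 2 * d + 1),
    "a": [rng.uniform(-0.5, 0.5) for _ in range(d_head)],
    "w_out": random_matrix(rng, d_head, d),
    "enc": (random_matrix(rng, hidden, 3), [0.0] * hidden,
            [rng.uniform(-0.5, 0.5) for _ in range(hidden)], 0.0),
}
alphas, outputs = edge_gatv2_head(x, neighbors, edge_feats, params)
```

Running K such heads with separate parameters and concatenating their outputs per node would give the multi-head representation described above.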

To effectively integrate the heterogeneous multi-source relational information between regions, an edge feature encoder is designed to project the three-dimensional edge feature vector into a scalar weight $w_{ij}$. Subsequently, the node features $\mathbf{x}_i$, neighboring nodes $\mathbf{x}_j$, and edge weights $w_{ij}$ are jointly considered within the GATv2 attention mechanism to compute attention scores $s_{ij}$, which are then normalized using the softmax function to obtain the final attention weights $\alpha_{ij}$. Finally, a multi-head attention mechanism is employed to aggregate the weighted information from different neighboring nodes, resulting in the updated representation of the target node $\mathbf{z}_i$. As illustrated in Figure 3, the complete architecture of the graph attention network consists of an edge feature encoder, a graph attention layer, and a multi-head attention aggregation module.

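The GATv2 module outputs an embedding representation $z_{i}^{(t)} \in \mathbb{R}^{d}$ for each node at time step t. The embeddings of all N nodes at time t are assembled into an embedding matrix $Z^{(t)} \in \mathbb{R}^{N \times d}$, and the concatenation of such matrices over τ consecutive time steps forms the input tensor:

$$X = \left[ Z^{(t-\tau+1)}, \ldots, Z^{(t)} \right] \in \mathbb{R}^{\tau \times N \times d}$$

The resulting tensor is then fed into the temporal sequence modeling module (LSTM) for time series modeling. To meet the input format required by the LSTM, the tensor is reshaped as follows:

$$X \in \mathbb{R}^{B \times \tau \times N \times d} \;\rightarrow\; \tilde{X} \in \mathbb{R}^{(B \cdot N) \times \tau \times d}$$

where

B: batch size;

$\tilde{X}$: the reshaped tensor.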
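The reshaping step can be sketched with nested Python lists. The layout used here, in which each node of each sample becomes its own sequence of length τ, is an assumption about the tensor arrangement, and the sizes and the function name `to_lstm_input` are hypothetical.

```python
def to_lstm_input(batch):
    """Rearrange [B][tau][N][d] nested lists into [B*N][tau][d] sequences."""
    sequences = []
    for sample in batch:                 # loop over the B samples
        num_nodes = len(sample[0])
        for i in range(num_nodes):       # one sequence per node
            sequences.append([step[i] for step in sample])
    return sequences

# Hypothetical sizes: B=2 samples, tau=3 steps, N=4 nodes, d=2 dimensions.
B, TAU, N, D = 2, 3, 4, 2
# Each entry encodes its own (sample, step, node) index so it can be traced.
batch = [[[[float(b * 100 + t * 10 + i)] * D for i in range(N)]
          for t in range(TAU)] for b in range(B)]
lstm_input = to_lstm_input(batch)
```

Under this layout, sequence index b·N + i holds the embedding history of node i in sample b, so the LSTM processes every node's sequence independently.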

2.5. LSTM-Based Temporal Modeling

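Subsequently, the spatially encoded node representations are fed into the LSTM network in temporal order to capture long-term temporal dependencies. With $x_{t}$ denoting the spatially encoded input at time step t, the LSTM update is formulated as follows:

$$
\begin{aligned}
i_{t} &= \sigma\left(W_{i} x_{t} + U_{i} h_{t-1} + b_{i}\right) \\
f_{t} &= \sigma\left(W_{f} x_{t} + U_{f} h_{t-1} + b_{f}\right) \\
o_{t} &= \sigma\left(W_{o} x_{t} + U_{o} h_{t-1} + b_{o}\right) \\
g_{t} &= \tanh\left(W_{g} x_{t} + U_{g} h_{t-1} + b_{g}\right) \\
c_{t} &= f_{t} \odot c_{t-1} + i_{t} \odot g_{t} \\
h_{t} &= o_{t} \odot \tanh\left(c_{t}\right)
\end{aligned}
$$

where

$h_{t} \in \mathbb{R}^{d_{h}}$: the hidden state output of the LSTM at time step t;

$d_{h}$: the dimensionality of the hidden state in the LSTM network;

$c_{t}$: the cell state (memory state) of the LSTM;

$W_{i}, W_{f}, W_{o}, W_{g}$: the input-to-gate weight matrices;

$U_{i}, U_{f}, U_{o}, U_{g}$: the hidden-to-gate weight matrices;

$b_{i}, b_{f}, b_{o}, b_{g}$: the bias term for each gate;

σ(⋅): the sigmoid function, used to generate the gate activation values;

tanh(⋅): the hyperbolic tangent function;

⊙: the Hadamard product (element-wise multiplication).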
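A single LSTM time step with the standard input, forget, and output gates can be sketched in plain Python as follows. The gate names, the parameter layout in `params`, and the all-zero toy weights are assumptions chosen so that the result is easy to verify by hand.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def affine(W, U, b, x, h):
    """Compute W x + U h + b for one gate."""
    return [sum(w * xi for w, xi in zip(w_row, x))
            + sum(u * hi for u, hi in zip(u_row, h)) + bias
            for w_row, u_row, bias in zip(W, U, b)]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; params maps gate name -> (W, U, b)."""
    i = [sigmoid(v) for v in affine(*params["i"], x, h_prev)]    # input gate
    f = [sigmoid(v) for v in affine(*params["f"], x, h_prev)]    # forget gate
    o = [sigmoid(v) for v in affine(*params["o"], x, h_prev)]    # output gate
    g = [math.tanh(v) for v in affine(*params["g"], x, h_prev)]  # candidate
    # Cell state: keep part of the old memory and add the gated candidate.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # Hidden state: gated, squashed view of the cell state.
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

# Toy parameters: input size 2, hidden size 1, all weights and biases zero,
# so every gate equals 0.5 and the candidate equals 0.
zero_gate = ([[0.0, 0.0]], [[0.0]], [0.0])
params = {name: zero_gate for name in ("i", "f", "o", "g")}
h, c = [0.0], [1.0]
for x_t in [[1.0, 2.0], [3.0, 4.0]]:
    h, c = lstm_step(x_t, h, c, params)
```

With these toy parameters the cell state is halved at every step, which makes the role of the forget gate directly visible.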

The hidden state output of the LSTM is mapped to the final prediction through a fully connected (FC) layer. The FC operation is defined as follows:

$$\hat{y}_{i}^{(t+1)} = w^{\top} h_{i}^{(t)} + b$$

where

$h_{i}^{(t)} \in \mathbb{R}^{d_{h}}$: the LSTM output vector of node i at time step t;

$w \in \mathbb{R}^{d_{h}}$: the weight vector of the fully connected layer (for single-step prediction);

$b$: the bias term;

$\hat{y}_{i}^{(t+1)}$: the predicted value of node i at time step t + 1.

The predictions for all nodes are concatenated to form the overall output representation as follows:

$$\hat{Y}^{(t+1)} = H^{(t)} w + b$$

where

$\hat{Y}^{(t+1)} \in \mathbb{R}^{N}$: the predicted pick-up demand of all N nodes at time step t + 1;

$H^{(t)} \in \mathbb{R}^{N \times d_{h}}$: the LSTM hidden state output matrix of all nodes at time step t;

$w \in \mathbb{R}^{d_{h}}$: the weight vector of the fully connected layer for linear mapping from the LSTM output to a scalar;

$b$: the bias term of the fully connected layer, added to every element.
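The linear mapping from LSTM hidden states to scalar predictions can be sketched as follows. The function name `fc_predict` and the numeric values are hypothetical; the same weight vector and bias are shared by all nodes, as described above.

```python
def fc_predict(hidden_states, weight, bias):
    """Map each node's LSTM hidden state to a scalar demand prediction."""
    return [sum(w * h for w, h in zip(weight, h_i)) + bias
            for h_i in hidden_states]

# Hypothetical hidden states for N=3 nodes with hidden size 2.
H_t = [[1.0, 2.0], [0.5, -1.0], [0.0, 0.0]]
w_fc = [2.0, 1.0]
b_fc = 0.5
y_next = fc_predict(H_t, w_fc, b_fc)  # [4.5, 0.5, 0.5]
```

Each row of the hidden state matrix yields one entry of the prediction vector, so the output has one predicted demand value per node.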

2.6. Design of the Loss Function

During the model training phase, to more comprehensively evaluate the deviation between predicted and actual values, a combined loss function integrating the mean squared error (MSE) and mean absolute error (MAE) is adopted as the optimization objective. Specifically, the loss function is defined as follows:

$$\mathcal{L} = \lambda \, \mathcal{L}_{\mathrm{MSE}} + (1 - \lambda) \, \mathcal{L}_{\mathrm{MAE}}$$

where

$\lambda$: the weighting coefficient between MSE and MAE;

$\mathcal{L}$: the final objective of the loss function.
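A combined MSE and MAE objective can be sketched in plain Python as follows. The convex-combination form, the default coefficient of 0.5, and the sample values are assumptions made for illustration; the value of the weighting coefficient used in the experiments is not restated here.

```python
def combined_loss(predicted, actual, lam=0.5):
    """Return lam * MSE + (1 - lam) * MAE for two equal-length sequences."""
    n = len(predicted)
    errors = [p - a for p, a in zip(predicted, actual)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    return lam * mse + (1.0 - lam) * mae

# Hypothetical predictions and observations for three regions.
loss = combined_loss([3.0, 5.0, 2.0], [1.0, 5.0, 4.0], lam=0.5)
```

Setting the coefficient to 1 recovers pure MSE, which penalizes large errors more strongly, while setting it to 0 recovers pure MAE, which is less sensitive to outliers.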

4. Conclusions

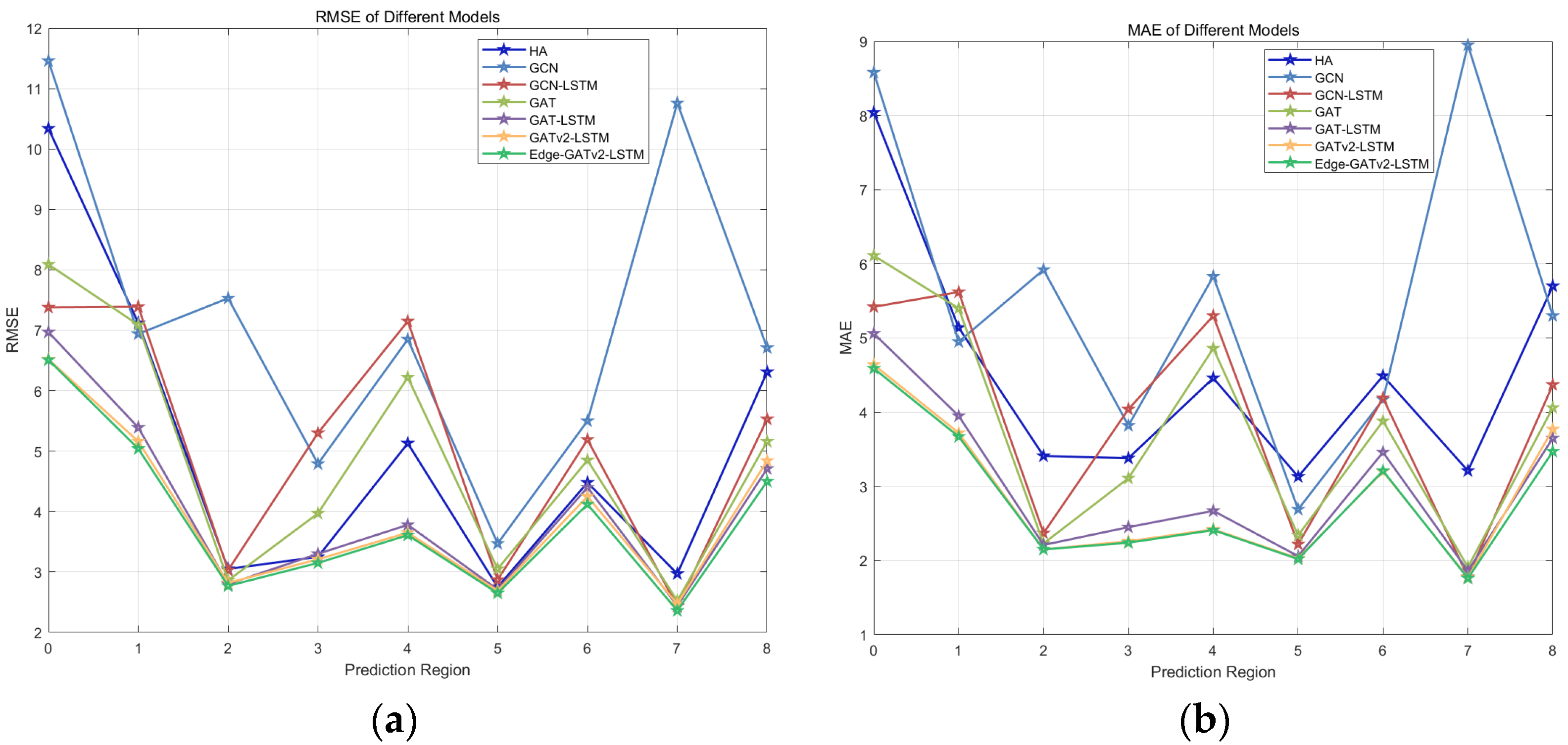

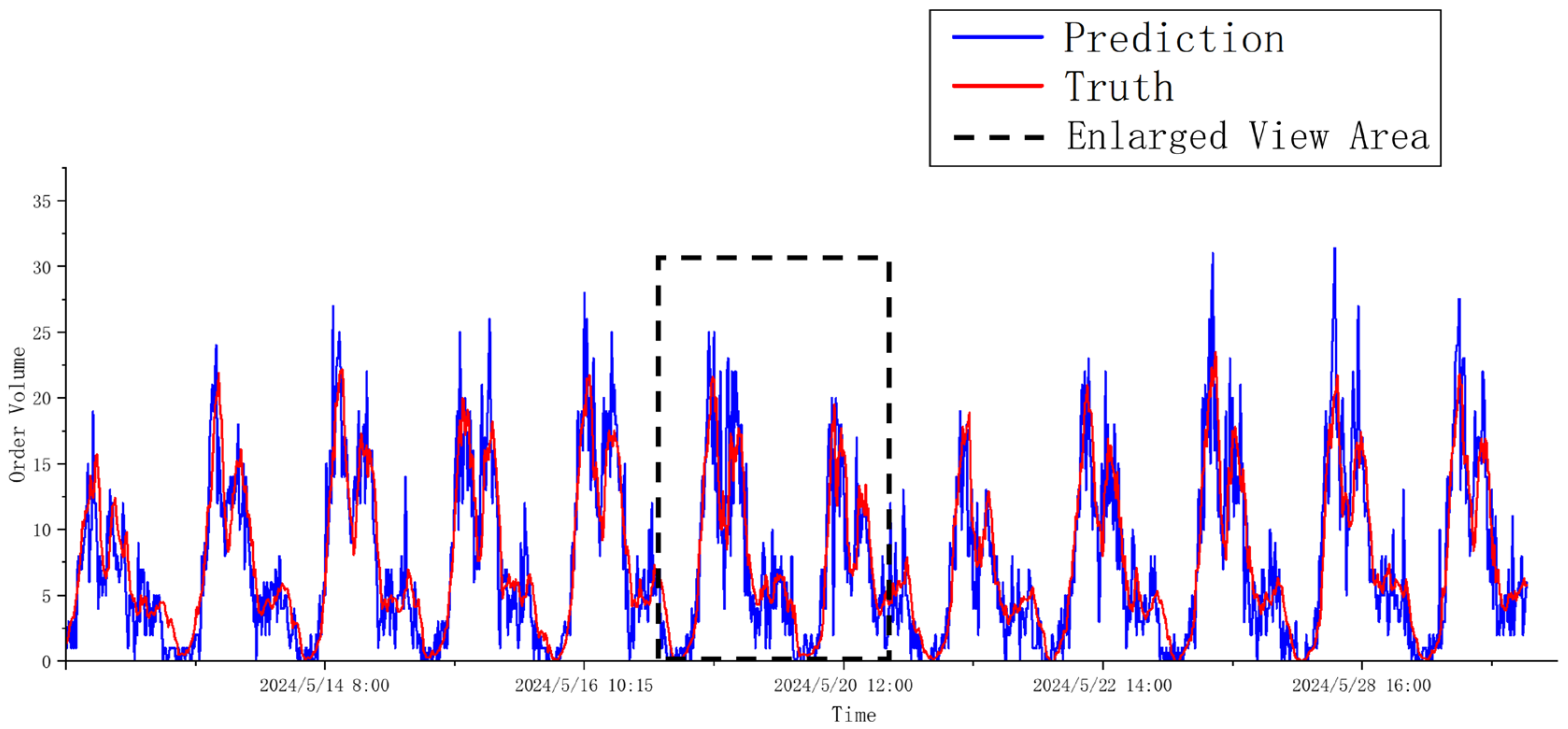

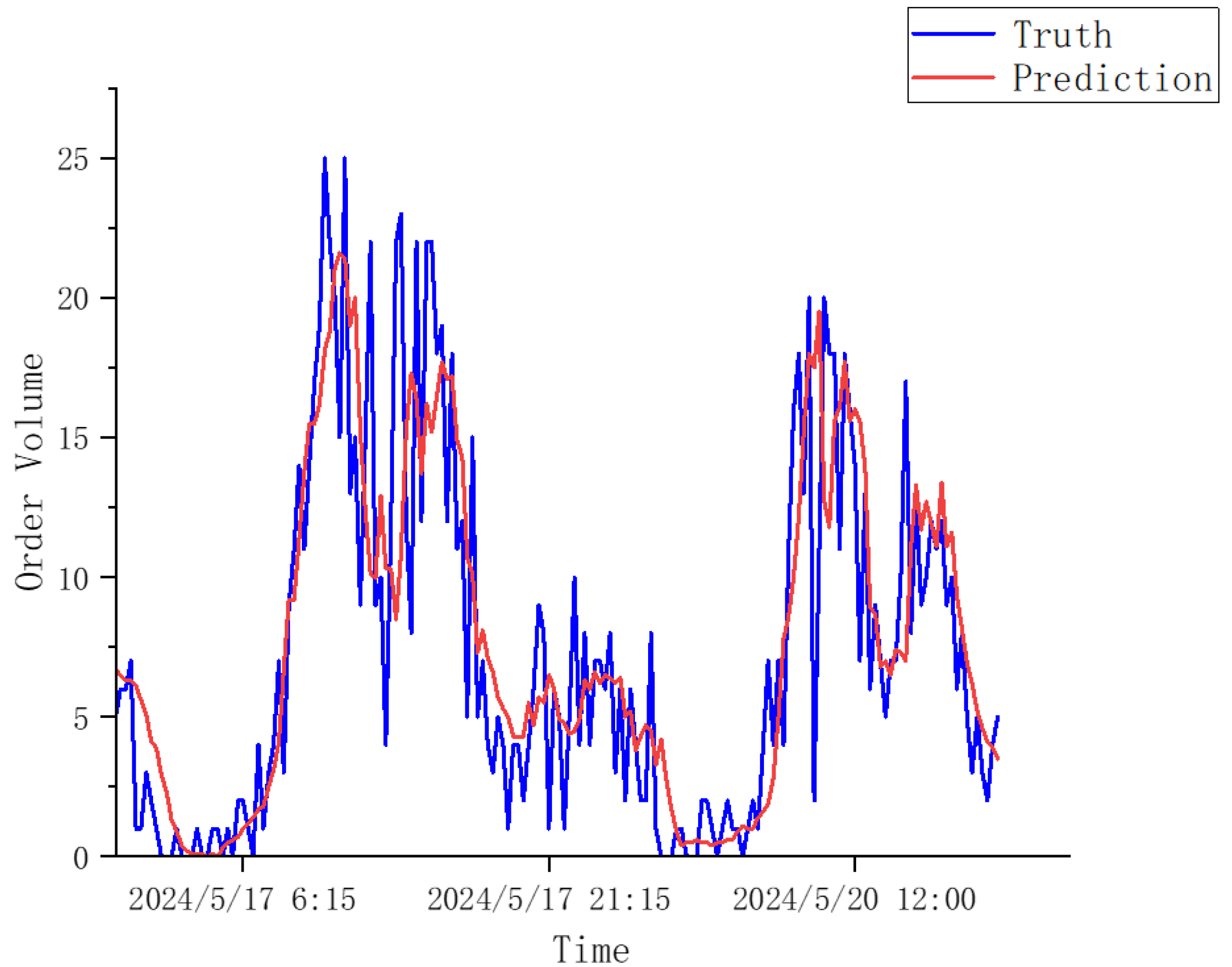

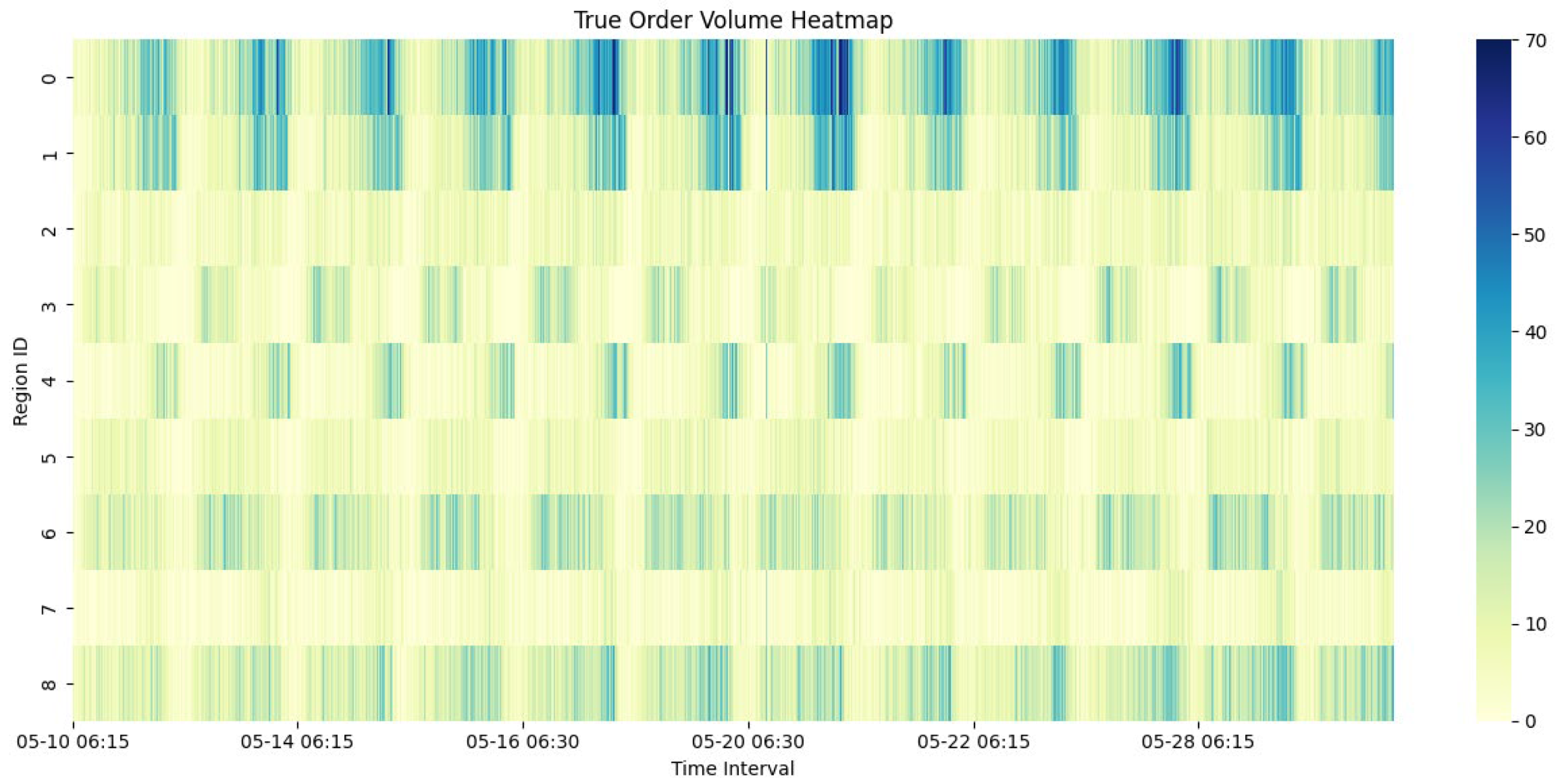

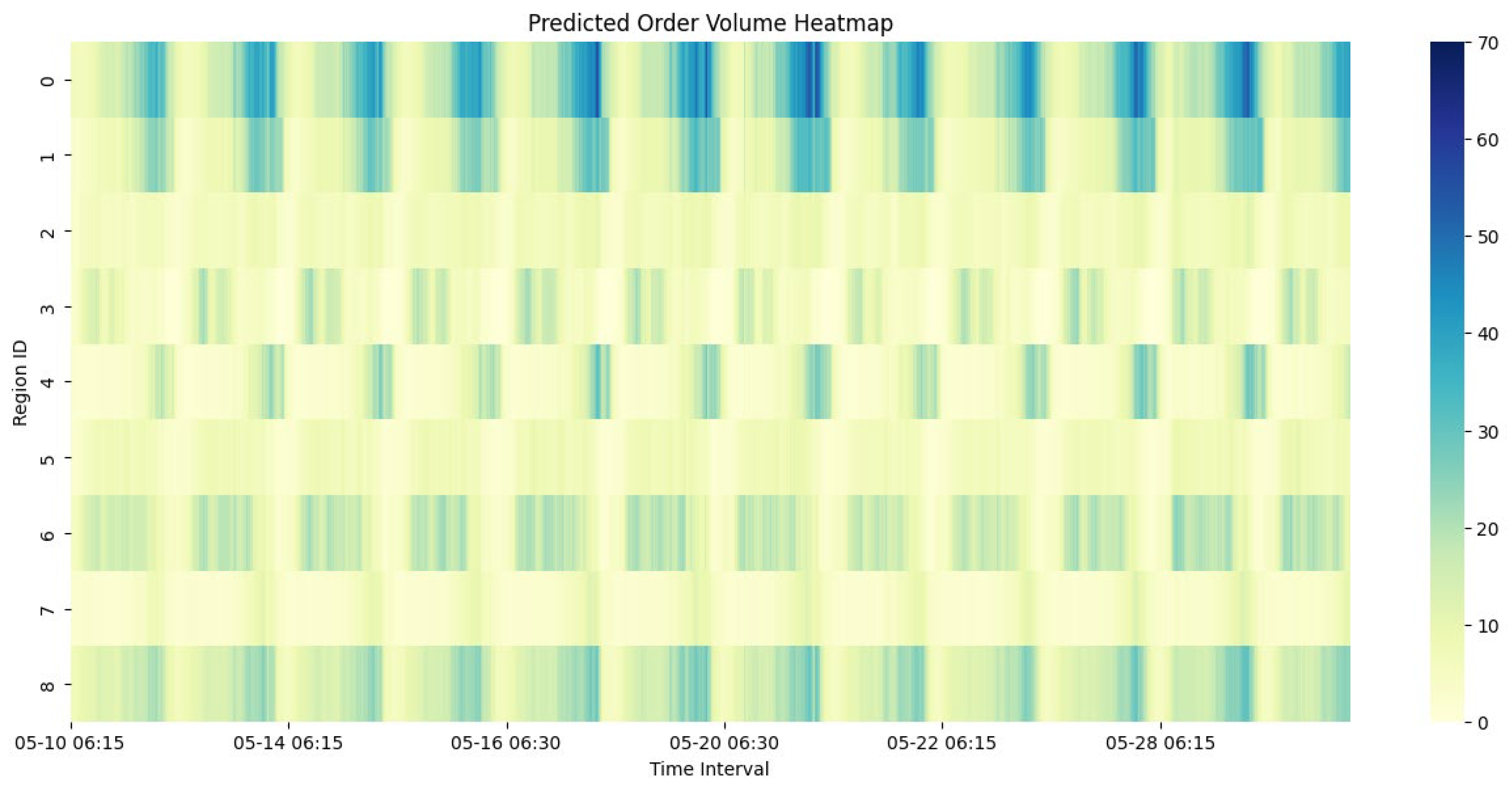

This study adopts a hybrid spatiotemporal prediction model, Edge-GATv2-LSTM, to enhance the accuracy of short-term taxi pick-up demand forecasting across different urban regions. Unlike conventional approaches that primarily rely on node-level information, the model incorporates multi-dimensional edge features—including temporal similarity, geographic proximity, and functional similarity—into the graph attention mechanism. By integrating an edge-aware attention network (GATv2) with a temporal modeling component (LSTM), the framework captures both spatial interactions and temporal variations in a unified structure. The edge-aware attention mechanism dynamically computes the inter-node influence by considering both node embeddings and edge attributes, allowing the model to reflect structural heterogeneity across urban regions. The LSTM module processes recent trends and periodic patterns in demand, enabling the model to adapt to both short-term fluctuations and recurring cycles. This joint modeling approach improves the interpretability and expressiveness of spatial–temporal dependencies.

Experimental results based on real-world taxi data from Ningbo demonstrate that the Edge-GATv2-LSTM model outperforms four representative baselines—GCN, GCN-LSTM, GAT, and GAT-LSTM—achieving the lowest RMSE (3.85) and MAE (2.86). The model shows strong performance across different regions and peak periods, confirming the effectiveness of incorporating edge semantics into attention-based graph modeling.

The proposed model can provide useful support for several practical applications in urban transportation. For example, it can help taxi drivers identify where future pick-up demand is likely to be high so that they can plan routes more efficiently when vehicles are empty. The prediction results can also help platforms arrange vehicle dispatch in advance, balance supply and demand between regions, and improve service during rush hours. For traffic management departments, the model can support the monitoring of regional travel patterns and help design better traffic control strategies.

Future research can be carried out in the following directions. First, incorporating attention mechanisms into the LSTM component may further enhance the model’s ability to capture long-term fluctuations in taxi pick-up demand. Second, different combinations of graph neural network layers and temporal modeling units may have varying effects on performance; future work could explore more expressive architectures to further improve the prediction accuracy and generalization capability. Third, since this study is based solely on data from Ningbo, future studies could apply the proposed model to cities with different urban structures and mobility patterns to evaluate its transferability and robustness. Finally, external factors such as weather conditions, public holidays, and special events were not included in this study due to limited data availability. However, the proposed model can be adapted to incorporate such inputs in future work when relevant data become available.