Modeling Student Loyalty in the Age of Generative AI: A Structural Equation Analysis of ChatGPT’s Role in Higher Education

Abstract

1. Introduction

2. Literature Review

2.1. Novelty, Intelligence, Knowledge, and Creepiness

2.2. Task Attraction and Hedonic Value

2.3. Trust

3. Research Model

3.1. Novelty Value

3.2. Perceived Intelligence

3.3. Knowledge Acquisition

3.4. Creepiness

3.5. Task Attraction

3.6. Hedonic Value

3.7. Trust

3.8. Satisfaction

3.9. Gender and Age

4. Research Methodology

4.1. Development of Measurement Tools

4.2. Subject and Data Collection

4.3. Statistical Analyses

5. Results

5.1. Common Method Bias (CMB)

5.2. Measurement Model

5.3. Model Fit

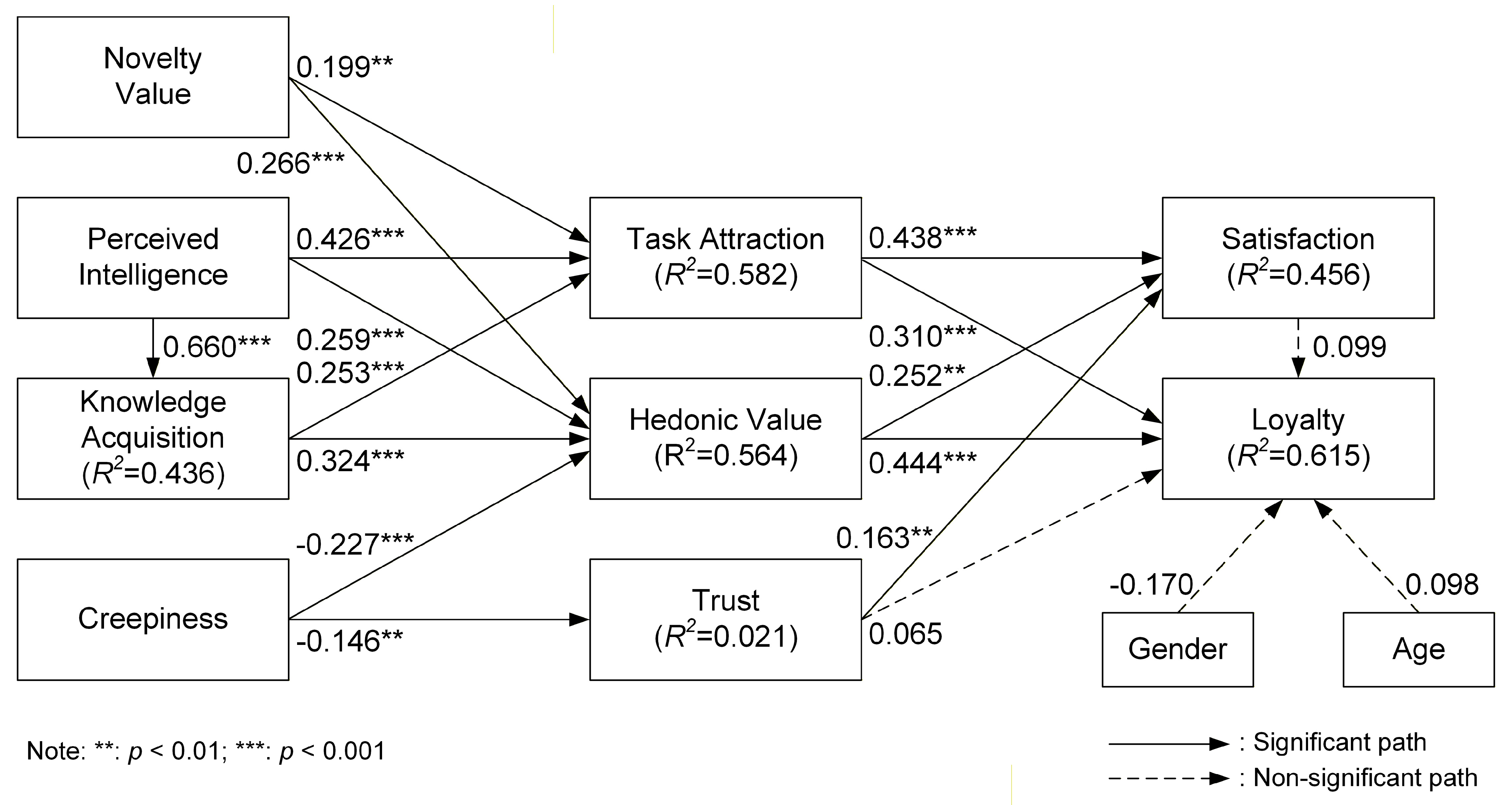

5.4. Testing of Hypotheses

6. Discussion

7. Conclusions

7.1. Theoretical Contributions

7.2. Practical Implications

7.3. Limitations and Future Research

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Construct | Item | Description | Source |
|---|---|---|---|
| Novelty Value | NVT1 | Using ChatGPT is a unique experience. | Hasan, Shams [83] |
| | NVT2 | Using ChatGPT is an educational experience. | |
| | NVT3 | The experience of using ChatGPT satisfies my curiosity. | |
| Perceived Intelligence | PIE1 | I feel that ChatGPT for learning is competent. | Rafiq, Dogra [13] |
| | PIE2 | I feel that ChatGPT for learning is knowledgeable. | |
| | PIE3 | I feel that ChatGPT for learning is intelligent. | |
| Knowledge Acquisition | KAQ1 | ChatGPT allows me to generate new knowledge based on my existing | Al-Sharafi, Al-Emran [29] |
| | KAQ2 | ChatGPT enables me to acquire knowledge through various resources. | |
| | KAQ3 | ChatGPT assists me to acquire the knowledge that suits my needs. | |
| Creepiness | CPN1 | When using ChatGPT, I had a queasy feeling. | Rajaobelina, Prom Tep [52] |
| | CPN2 | When using ChatGPT, I felt uneasy. | |
| | CPN3 | When using ChatGPT, I somehow felt threatened. | |
| Task Attraction | TAT1 | ChatGPT is beneficial for my tasks. | Han and Yang [54] |
| | TAT2 | ChatGPT aids me in accomplishing tasks more quickly. | |
| | TAT3 | ChatGPT enhances my productivity. | |
| Hedonic Value | HEV1 | I enjoy using ChatGPT. | Kim and Han [108] |
| | HEV2 | ChatGPT elicits positive feelings. | |
| | HEV3 | Engaging with ChatGPT is genuinely enjoyable. | |
| Trust | TRU1 | I trust that my personal information won’t be misused. | Nguyen, Ta [78] |
| | TRU2 | I am confident that my personal data is safeguarded. | |
| | TRU3 | I believe my personal data is securely stored. | |
| Satisfaction | SAT1 | I am very satisfied with ChatGPT. | Kim, Wong [133] |
| | SAT2 | ChatGPT meets my expectations. | |
| | SAT3 | ChatGPT fits my needs/wants. | |
| Loyalty | LYT1 | I prefer ChatGPT to other Chatbots. | Daud, Farida [106] |
| | LYT2 | I will continue to use ChatGPT in the future. | |
| | LYT3 | I am willing to refer ChatGPT to other people or friends. | |

References

- van Dis, E.A.; Bollen, J.; Zuidema, W.; van Rooij, R.; Bockting, C.L. ChatGPT: Five priorities for research. Nature 2023, 614, 224–226.

- George, A.S.; George, A.H. A review of ChatGPT AI’s impact on several business sectors. Partn. Univers. Int. Innov. J. 2023, 1, 9–23.

- Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642.

- Cheng, K.; Sun, Z.; He, Y.; Gu, S.; Wu, H. The potential impact of ChatGPT/GPT-4 on surgery: Will it topple the profession of surgeons? Int. J. Surg. 2023, 109, 1545–1547.

- Gilson, A.; Safranek, C.W.; Huang, T.; Socrates, V.; Chi, L.; Taylor, R.A.; Chartash, D. How does ChatGPT perform on the United States Medical Licensing Examination? The implications of large language models for medical education and knowledge assessment. JMIR Med. Educ. 2023, 9, e45312.

- Lin, C.-C.; Huang, A.Y.; Yang, S.J. A review of AI-driven conversational chatbots implementation methodologies and challenges (1999–2022). Sustainability 2023, 15, 4012.

- Alkaissi, H.; McFarlane, S.I. Artificial hallucinations in ChatGPT: Implications in scientific writing. Cureus 2023, 15, e35179.

- Firat, M. How Chat GPT Can Transform Autodidactic Experiences and Open Education; Department of Distance Education Open Education Faculty, Anadolu University: Eskişehir, Turkey, 2023.

- Bardach, L.; Emslander, V.; Kasneci, E.; Eitel, A.; Lindner, M.; Bailey, D. Research Syntheses on AI in Education Offer Limited Educational Insights; Technical University of Munich (TUM): Munich, Germany, 2025.

- Kalla, D.; Smith, N. Study and Analysis of Chat GPT and its Impact on Different Fields of Study. Int. J. Innov. Sci. Res. Technol. 2023, 8, 827–833.

- Essel, H.B.; Vlachopoulos, D.; Tachie-Menson, A.; Johnson, E.E.; Baah, P.K. The impact of a virtual teaching assistant (chatbot) on students’ learning in Ghanaian higher education. Int. J. Educ. Technol. High. Educ. 2022, 19, 57.

- Feroz, H.M.B.; Zulfiqar, S.; Noor, S.; Huo, C. Examining multiple engagements and their impact on students’ knowledge acquisition: The moderating role of information overload. J. Appl. Res. High. Educ. 2022, 14, 366–393.

- Rafiq, F.; Dogra, N.; Adil, M.; Wu, J.-Z. Examining consumer’s intention to adopt AI-chatbots in tourism using partial least squares structural equation modeling method. Mathematics 2022, 10, 2190.

- Sun, G.H.; Hoelscher, S.H. The ChatGPT storm and what faculty can do. Nurse Educ. 2023, 48, 119–124.

- Smutny, P.; Schreiberova, P. Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Comput. Educ. 2020, 151, 103862.

- Köchling, A.; Wehner, M.C.; Warkocz, J. Can I show my skills? Affective responses to artificial intelligence in the recruitment process. Rev. Manag. Sci. 2023, 17, 2109–2138.

- Woźniak, P.W.; Karolus, J.; Lang, F.; Eckerth, C.; Schöning, J.; Rogers, Y.; Niess, J. Creepy technology: What is it and how do you measure it? In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–13.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478.

- Selamat, M.A.; Windasari, N.A. Chatbot for SMEs: Integrating customer and business owner perspectives. Technol. Soc. 2021, 66, 101685.

- Rahim, N.I.M.; Iahad, N.A.; Yusof, A.F.; Al-Sharafi, M.A. AI-Based Chatbots Adoption Model for Higher-Education Institutions: A Hybrid PLS-SEM-Neural Network Modelling Approach. Sustainability 2022, 14, 12726.

- Følstad, A.; Brandtzaeg, P.B. Users’ experiences with chatbots: Findings from a questionnaire study. Qual. User Exp. 2020, 5, 3.

- Jenneboer, L.; Herrando, C.; Constantinides, E. The impact of chatbots on customer loyalty: A systematic literature review. J. Theor. Appl. Electron. Commer. Res. 2022, 17, 212–229.

- Derico, B. ChatGPT Bug Leaked Users’ Conversation Histories. Available online: https://www.bbc.com/news/technology-65047304 (accessed on 5 May 2025).

- Gurman, M. Samsung Bans Staff’s AI Use After Spotting ChatGPT Data Leak. Available online: https://www.bloomberg.com/news/articles/2023-05-02/samsung-bans-chatgpt-and-other-generative-ai-use-by-staff-after-leak#xj4y7vzkg (accessed on 5 May 2025).

- Matemba, E.D.; Li, G. Consumers’ willingness to adopt and use WeChat wallet: An empirical study in South Africa. Technol. Soc. 2018, 53, 55–68.

- Cheng, L.; Liu, F.; Yao, D. Enterprise data breach: Causes, challenges, prevention, and future directions. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2017, 7, e1211.

- Al-Sharafi, M.A.; Al-Emran, M.; Iranmanesh, M.; Al-Qaysi, N.; Iahad, N.A.; Arpaci, I. Understanding the impact of knowledge management factors on the sustainable use of AI-based chatbots for educational purposes using a hybrid SEM-ANN approach. Interact. Learn. Environ. 2022, 31, 7491–7510.

- Tussyadiah, I.P.; Wang, D.; Jung, T.H.; Tom Dieck, M.C. Virtual reality, presence, and attitude change: Empirical evidence from tourism. Tour. Manag. 2018, 66, 140–154.

- Yu, C.-E. Humanlike robots as employees in the hotel industry: Thematic content analysis of online reviews. J. Hosp. Mark. Manag. 2020, 29, 22–38.

- Graw, M. 50+ ChatGPT Statistics for May 2023—Data on Usage & Revenue. Available online: https://www.business2community.com/statistics/chatgpt (accessed on 4 May 2025).

- Koc, T.; Bozdag, E. Measuring the degree of novelty of innovation based on Porter’s value chain approach. Eur. J. Oper. Res. 2017, 257, 559–567.

- Jo, H. Continuance intention to use artificial intelligence personal assistant: Type, gender, and use experience. Heliyon 2022, 8, e10662.

- Merikivi, J.; Nguyen, D.; Tuunainen, V.K. Understanding perceived enjoyment in mobile game context. In Proceedings of the 2016 49th Hawaii International Conference on System Sciences (HICSS), Koloa, HI, USA, 5–8 January 2016; pp. 3801–3810.

- Lv, Y.; Hu, S.; Liu, F.; Qi, J. Research on Users’ Trust in Customer Service Chatbots Based on Human-Computer Interaction; Springer: Singapore, 2022; pp. 291–306.

- Alam, A. Possibilities and apprehensions in the landscape of artificial intelligence in education. In Proceedings of the 2021 International Conference on Computational Intelligence and Computing Applications (ICCICA), Nagpur, India, 26–27 November 2021; pp. 1–8.

- McGinn, C.; Cullinan, M.F.; Otubela, M.; Kelly, K. Design of a terrain adaptive wheeled robot for human-orientated environments. Auton. Robot. 2019, 43, 63–78.

- Petisca, S.; Dias, J.; Paiva, A. More social and emotional behaviour may lead to poorer perceptions of a social robot. In Proceedings of the Social Robotics: 7th International Conference, ICSR 2015, Paris, France, 26–30 October 2015; Proceedings 7; pp. 522–531.

- Ashfaq, M.; Yun, J.; Yu, S.; Loureiro, S.M.C. I, Chatbot: Modeling the determinants of users’ satisfaction and continuance intention of AI-powered service agents. Telemat. Inform. 2020, 54, 101473.

- Rapp, A.; Curti, L.; Boldi, A. The human side of human-chatbot interaction: A systematic literature review of ten years of research on text-based chatbots. Int. J. Hum. Comput. Stud. 2021, 151, 102630.

- Al-Emran, M.; Mezhuyev, V.; Kamaludin, A. Towards a conceptual model for examining the impact of knowledge management factors on mobile learning acceptance. Technol. Soc. 2020, 61, 101247.

- Al-Maroof, R.; Ayoubi, K.; Alhumaid, K.; Aburayya, A.; Alshurideh, M.; Alfaisal, R.; Salloum, S. The acceptance of social media video for knowledge acquisition, sharing and application: A comparative study among YouYube users and TikTok users’ for medical purposes. Int. J. Data Netw. Sci. 2021, 5, 197.

- Khan, M.N.; Ashraf, M.A.; Seinen, D.; Khan, K.U.; Laar, R.A. Social media for knowledge acquisition and dissemination: The impact of the COVID-19 pandemic on collaborative learning driven social media adoption. Front. Psychol. 2021, 12, 648253.

- Al-Emran, M.; Teo, T. Do knowledge acquisition and knowledge sharing really affect e-learning adoption? An empirical study. Educ. Inf. Technol. 2020, 25, 1983–1998.

- Al-Emran, M.; Mezhuyev, V.; Kamaludin, A. Is M-learning acceptance influenced by knowledge acquisition and knowledge sharing in developing countries? Educ. Inf. Technol. 2021, 26, 2585–2606.

- Olivera-La Rosa, A.; Arango-Tobón, O.E.; Ingram, G.P. Swiping right: Face perception in the age of Tinder. Heliyon 2019, 5, e02949.

- Phelan, C.; Lampe, C.; Resnick, P. It’s creepy, but it doesn’t bother me. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 5240–5251.

- Laakasuo, M.; Palomäki, J.; Köbis, N. Moral uncanny valley: A robot’s appearance moderates how its decisions are judged. Int. J. Soc. Robot. 2021, 13, 1679–1688.

- Oravec, J.A. Negative Dimensions of Human-Robot and Human-AI Interactions: Frightening Legacies, Emerging Dysfunctions, and Creepiness. In Good Robot, Bad Robot: Dark and Creepy Sides of Robotics, Autonomous Vehicles, and AI; Springer: Berlin/Heidelberg, Germany, 2022; pp. 39–89.

- McWhorter, R.R.; Bennett, E.E. Creepy technologies and the privacy issues of invasive technologies. In Research Anthology on Privatizing and Securing Data; IGI Global: Hershey, PA, USA, 2021; pp. 1726–1745.

- Rajaobelina, L.; Prom Tep, S.; Arcand, M.; Ricard, L. Creepiness: Its antecedents and impact on loyalty when interacting with a chatbot. Psychol. Mark. 2021, 38, 2339–2356.

- Söderlund, M. Service robots with (perceived) theory of mind: An examination of humans’ reactions. J. Retail. Consum. Serv. 2022, 67, 102999.

- Han, S.; Yang, H. Understanding adoption of intelligent personal assistants: A parasocial relationship perspective. Ind. Manag. Data Syst. 2018, 118, 618–636.

- Moussawi, S.; Koufaris, M.; Benbunan-Fich, R. How perceptions of intelligence and anthropomorphism affect adoption of personal intelligent agents. Electron. Mark. 2021, 31, 343–364.

- Shetu, S.N.; Islam, M.M.; Promi, S.I. An Empirical Investigation of the Continued Usage Intention of Digital Wallets: The Moderating Role of Perceived Technological Innovativeness. Future Bus. J. 2022, 8, 43.

- Gatzioufa, P.; Saprikis, V. A literature review on users’ behavioral intention toward chatbots’ adoption. Appl. Comput. Inform. 2022, ahead-of-print.

- Burton, S.L. Grasping the cyber-world: Artificial intelligence and human capital meet to inform leadership. Int. J. Econ. Commer. Manag. 2019, 7, 707–759.

- Seo, K.; Tang, J.; Roll, I.; Fels, S.; Yoon, D. The impact of artificial intelligence on learner–instructor interaction in online learning. Int. J. Educ. Technol. High. Educ. 2021, 18, 54.

- Deranty, J.-P.; Corbin, T. Artificial intelligence and work: A critical review of recent research from the social sciences. AI Soc. 2022, 39, 675–691.

- Zhang, J.; Zhang, W.; Xu, J. Bandwidth-efficient multi-task AI inference with dynamic task importance for the Internet of Things in edge computing. Comput. Netw. 2022, 216, 109262.

- Yang, B.; Wei, L.; Pu, Z. Measuring and Improving User Experience Through Artificial Intelligence-Aided Design. Front. Psychol. 2020, 11, 595374.

- Fares, O.H.; Butt, I.; Lee, S.H.M. Utilization of artificial intelligence in the banking sector: A systematic literature review. J. Financ. Serv. Mark. 2022, 28, 835–852.

- Zhang, Q.; Lu, J.; Jin, Y. Artificial intelligence in recommender systems. Complex Intell. Syst. 2021, 7, 439–457.

- Akdim, K.; Casaló, L.V.; Flavián, C. The role of utilitarian and hedonic aspects in the continuance intention to use social mobile apps. J. Retail. Consum. Serv. 2022, 66, 102888.

- Hamouda, M. Purchase intention through mobile applications: A customer experience lens. Int. J. Retail Distrib. Manag. 2021, 49, 1464–1480.

- Lee, S.; Kim, D.-Y. The effect of hedonic and utilitarian values on satisfaction and loyalty of Airbnb users. Int. J. Contemp. Hosp. Manag. 2018, 30, 1332–1351.

- Evelina, T.Y.; Kusumawati, A.; Nimran, U.; Sunarti. The influence of utilitarian value, hedonic value, social value, and perceived risk on customer satisfaction: Survey of e-commerce customers in Indonesia. Bus. Theory Pract. 2020, 21, 613–622.

- Ponsignon, F.; Lunardo, R.; Michrafy, M. Why are international visitors more satisfied with the tourism experience? The role of hedonic value, escapism, and psychic distance. J. Travel Res. 2021, 60, 1771–1786.

- Xie, C.; Wang, Y.; Cheng, Y. Does artificial intelligence satisfy you? A meta-analysis of user gratification and user satisfaction with AI-powered chatbots. Int. J. Hum. Comput. Interact. 2022, 40, 613–623.

- Kim, J.; Merrill Jr, K.; Collins, C. AI as a friend or assistant: The mediating role of perceived usefulness in social AI vs. functional AI. Telemat. Inform. 2021, 64, 101694.

- Pillai, R.; Sivathanu, B. Adoption of AI-based chatbots for hospitality and tourism. Int. J. Contemp. Hosp. Manag. 2020, 32, 3199–3226.

- Balakrishnan, J.; Dwivedi, Y.K.; Hughes, L.; Boy, F. Enablers and Inhibitors of AI-Powered Voice Assistants: A Dual-Factor Approach by Integrating the Status Quo Bias and Technology Acceptance Model. Inf. Syst. Front. 2021, 26, 921–942.

- Belanche, D.; Casaló, L.V.; Flavián, C. Artificial Intelligence in FinTech: Understanding robo-advisors adoption among customers. Ind. Manag. Data Syst. 2019, 119, 1411–1430.

- Vlačić, B.; Corbo, L.; e Silva, S.C.; Dabić, M. The evolving role of artificial intelligence in marketing: A review and research agenda. J. Bus. Res. 2021, 128, 187–203.

- Okonkwo, C.W.; Ade-Ibijola, A. Chatbots applications in education: A systematic review. Comput. Educ. Artif. Intell. 2021, 2, 100033.

- Belen Saglam, R.; Nurse, J.R.; Hodges, D. Privacy concerns in Chatbot interactions: When to trust and when to worry. In Proceedings of the HCI International 2021-Posters: 23rd HCI International Conference, HCII 2021, Virtual Event, 24–29 July 2021; Proceedings, Part II 23. pp. 391–399.

- Nguyen, Q.N.; Ta, A.; Prybutok, V. An integrated model of voice-user interface continuance intention: The gender effect. Int. J. Hum. Comput. Interact. 2019, 35, 1362–1377.

- Følstad, A.; Nordheim, C.B.; Bjørkli, C.A. What Makes Users Trust a Chatbot for Customer Service? An Exploratory Interview Study; Springer: Cham, Switzerland, 2018; pp. 194–208.

- Hamad, S.; Yeferny, T. A Chatbot for Information Security. arXiv 2020, arXiv:2012.00826.

- Wang, X.; Lin, X.; Shao, B. Artificial intelligence changes the way we work: A close look at innovating with chatbots. J. Assoc. Inf. Sci. Technol. 2023, 74, 339–353.

- Brill, T.M.; Munoz, L.; Miller, R.J. Siri, Alexa, and other digital assistants: A study of customer satisfaction with artificial intelligence applications. J. Mark. Manag. 2019, 35, 1401–1436.

- Hasan, R.; Shams, R.; Rahman, M. Consumer trust and perceived risk for voice-controlled artificial intelligence: The case of Siri. J. Bus. Res. 2021, 131, 591–597.

- Hair, J.F.; Risher, J.J.; Sarstedt, M.; Ringle, C.M. When to use and how to report the results of PLS-SEM. Eur. Bus. Rev. 2019, 31, 2–24.

- Wellsandt, S.; Rusak, Z.; Ruiz Arenas, S.; Aschenbrenner, D.; Hribernik, K.A.; Thoben, K.-D. Concept of a Voice-Enabled Digital Assistant for Predictive Maintenance in Manufacturing. In Proceedings of the 9th International Conference on Through-life Engineering Services, Cranfield University, Bedford, UK, 3–4 November 2020.

- Lai, P.C. The literature review of technology adoption models and theories for the novelty technology. J. Inf. Syst. Technol. Manag. 2017, 14, 21–38.

- Jeong, S.C.; Kim, S.-H.; Park, J.Y.; Choi, B. Domain-specific innovativeness and new product adoption: A case of wearable devices. Telemat. Inform. 2017, 34, 399–412.

- Adapa, S.; Fazal-e-Hasan, S.M.; Makam, S.B.; Azeem, M.M.; Mortimer, G. Examining the antecedents and consequences of perceived shopping value through smart retail technology. J. Retail. Consum. Serv. 2020, 52, 101901.

- Oyman, M.; Bal, D.; Ozer, S. Extending the technology acceptance model to explain how perceived augmented reality affects consumers’ perceptions. Comput. Hum. Behav. 2022, 128, 107127.

- Matt, C.; Benlian, A.; Hess, T.; Weiß, C. Escaping from the filter bubble? The effects of novelty and serendipity on users’ evaluations of online recommendations. In Proceedings of the 2014 International Conference on Information Systems (ICIS 2014), Auckland, New Zealand, 14–17 December 2014.

- Nguyen, D. Understanding Perceived Enjoyment and Continuance Intention in Mobile Games. Master’s Thesis, Aalto University, Espoo, Finland, 2015.

- Hernández-Orallo, J. Evaluation in artificial intelligence: From task-oriented to ability-oriented measurement. Artif. Intell. Rev. 2017, 48, 397–447.

- Yang, Y.; Luo, J.; Lan, T. An empirical assessment of a modified artificially intelligent device use acceptance model—From the task-oriented perspective. Front. Psychol. 2022, 13, 975307.

- Lee, J.; Park, D.-H.; Han, I. The effect of negative online consumer reviews on product attitude: An information processing view. Electron. Commer. Res. Appl. 2008, 7, 341–352.

- Fergusson, L. Learning by… Knowledge and skills acquisition through work-based learning and research. J. Work-Appl. Manag. 2022, 14, 184–199.

- Joseph, R.P.; Arun, T.M. Models and Tools of Knowledge Acquisition. In Computational Management: Applications of Computational Intelligence in Business Management; Patnaik, S., Tajeddini, K., Jain, V., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 53–67.

- Ngoc Thang, N.; Anh Tuan, P. Knowledge acquisition, knowledge management strategy and innovation: An empirical study of Vietnamese firms. Cogent Bus. Manag. 2020, 7, 1786314.

- Rotar, O. Online student support: A framework for embedding support interventions into the online learning cycle. Res. Pract. Technol. Enhanc. Learn. 2022, 17, 2.

- Tene, O.; Polonetsky, J. A theory of creepy: Technology, privacy and shifting social norms. Yale JL Tech. 2013, 16, 59.

- Langer, M.; König, C.J.; Fitili, A. Information as a double-edged sword: The role of computer experience and information on applicant reactions towards novel technologies for personnel selection. Comput. Hum. Behav. 2018, 81, 19–30.

- Yang, R.; Wibowo, S. User trust in artificial intelligence: A comprehensive conceptual framework. Electron. Mark. 2022, 32, 2053–2077.

- Lukyanenko, R.; Maass, W.; Storey, V.C. Trust in artificial intelligence: From a Foundational Trust Framework to emerging research opportunities. Electron. Mark. 2022, 32, 1993–2020.

- Jo, H. Examining the key factors influencing loyalty and satisfaction toward the smart factory. J. Bus. Ind. Mark. 2023, 38, 484–493.

- Jo, H.; Park, S. Success factors of untact lecture system in COVID-19: TAM, benefits, and privacy concerns. Technol. Anal. Strateg. Manag. 2022, 36, 1385–1397.

- Doménech-Betoret, F.; Abellán-Roselló, L.; Gómez-Artiga, A. Self-Efficacy, Satisfaction, and Academic Achievement: The Mediator Role of Students’ Expectancy-Value Beliefs. Front. Psychol. 2017, 8, 1193.

- Daud, A.; Farida, N.; Razak, M. Impact of customer trust toward loyalty: The mediating role of perceived usefulness and satisfaction. J. Bus. Retail Manag. Res. 2018, 13, 235–242.

- Almahamid, S.; Mcadams, A.C.; Al Kalaldeh, T.; MO’TAZ, A.-S.E. The relationship between perceived usefulness, perceived ease of use, perceived information quality, and intention to use e-government. J. Theor. Appl. Inf. Technol. 2010, 11, 30–44.

- Kim, B.; Han, I. The role of utilitarian and hedonic values and their antecedents in a mobile data service environment. Expert Syst. Appl. 2011, 38, 2311–2318.

- Rodríguez-Ardura, I.; Meseguer-Artola, A.; Fu, Q. The utilitarian and hedonic value of immersive experiences on WeChat: Examining a dual mediation path leading to users’ stickiness and the role of social norms. Online Inf. Rev. 2023, ahead-of-print.

- Weli, W. Student satisfaction and continuance model of Enterprise Resource Planning (ERP) system usage. Int. J. Emerg. Technol. Learn. 2019, 14, 71.

- Isaac, O.; Abdullah, Z.; Ramayah, T.; Mutahar, A.M.; Alrajawy, I. Integrating user satisfaction and performance impact with technology acceptance model (TAM) to examine the internet usage within organizations in Yemen. Asian J. Inf. Technol. 2018, 17, 60–78.

- Bae, S.; Jung, T.H.; Moorhouse, N.; Suh, M.; Kwon, O. The influence of mixed reality on satisfaction and brand loyalty in cultural heritage attractions: A brand equity perspective. Sustainability 2020, 12, 2956.

- Lee, R.; Murphy, J. The moderating influence of enjoyment on customer loyalty. Australas. Mark. J. 2008, 16, 11–21.

- Rao, Q.; Ko, E. Impulsive purchasing and luxury brand loyalty in WeChat Mini Program. Asia Pac. J. Mark. Logist. 2021, 33, 2054–2071.

- Kim, J.; Lennon, S.J. Effects of reputation and website quality on online consumers’ emotion, perceived risk and purchase intention: Based on the stimulus-organism-response model. J. Res. Interact. Mark. 2013, 7, 33–56.

- Shin, D. The effects of explainability and causability on perception, trust, and acceptance: Implications for explainable AI. Int. J. Hum. Comput. Stud. 2021, 146, 102551.

- Kassim, E.S.; Jailani, S.F.A.K.; Hairuddin, H.; Zamzuri, N.H. Information System Acceptance and User Satisfaction: The Mediating Role of Trust. Procedia Soc. Behav. Sci. 2012, 57, 412–418.

- Martínez-Navalón, J.-G.; Gelashvili, V.; Gómez-Ortega, A. Evaluation of User Satisfaction and Trust of Review Platforms: Analysis of the Impact of Privacy and E-WOM in the Case of TripAdvisor. Front. Psychol. 2021, 12, 750527.

- Montesdioca, G.P.Z.; Maçada, A.C.G. Measuring user satisfaction with information security practices. Comput. Secur. 2015, 48, 267–280.

- Pal, D.; Babakerkhell, M.D.; Zhang, X. Exploring the Determinants of Users’ Continuance Usage Intention of Smart Voice Assistants. IEEE Access 2021, 9, 162259–162275.

- Oliver, R.L. A cognitive model of the antecedents and consequences of satisfaction decisions. J. Mark. Res. 1980, 17, 460–469.

- Kim, D.J.; Ferrin, D.L.; Rao, H.R. A trust-based consumer decision-making model in electronic commerce: The role of trust, perceived risk, and their antecedents. Decis. Support Syst. 2008, 44, 544–564.

- Oliver, R.L. Satisfaction: A Behavioral Perspective on the Consumer; McGraw-Hill: New York, NY, USA, 1997.

- Chen, Q.; Lu, Y.; Gong, Y.; Xiong, J. Can AI chatbots help retain customers? Impact of AI service quality on customer loyalty. Internet Res. 2023, ahead-of-print.

- Singh, P.; Singh, V. The power of AI: Enhancing customer loyalty through satisfaction and efficiency. Cogent Bus. Manag. 2024, 11, 2326107.

- Koenig, P.D. Attitudes toward artificial intelligence: Combining three theoretical perspectives on technology acceptance. AI Soc. 2024, 40, 1333–1345.

- Palmquist, A.; Jedel, I. Influence of Gender, Age, and Frequency of Use on Users’ Attitudes on Gamified Online Learning; Springer: Cham, Switzerland, 2021; pp. 177–185.

- Chawla, D.; Joshi, H. The moderating role of gender and age in the adoption of mobile wallet. Foresight 2020, 22, 483–504.

- White Baker, E.; Al-Gahtani, S.S.; Hubona, G.S. The effects of gender and age on new technology implementation in a developing country. Inf. Technol. People 2007, 20, 352–375.

- Cirillo, D.; Catuara-Solarz, S.; Morey, C.; Guney, E.; Subirats, L.; Mellino, S.; Gigante, A.; Valencia, A.; Rementeria, M.J.; Chadha, A.S. Sex and gender differences and biases in artificial intelligence for biomedicine and healthcare. NPJ Digit. Med. 2020, 3, 81.

- Kim, A.; Cho, M.; Ahn, J.; Sung, Y. Effects of Gender and Relationship Type on the Response to Artificial Intelligence. Cyberpsychology Behav. Soc. Netw. 2019, 22, 249–253.

- Yap, Y.-Y.; Tan, S.-H.; Choon, S.-W. Elderly’s intention to use technologies: A systematic literature review. Heliyon 2022, 8, e08765.

- Kim, M.-K.; Wong, S.F.; Chang, Y.; Park, J.-H. Determinants of customer loyalty in the Korean smartphone market: Moderating effects of usage characteristics. Telemat. Inform. 2016, 33, 936–949.

- Neuman, C.; Rossman, G. Basics of Social Research Methods Qualitative and Quantitative Approaches; Allyn and Bacon: Boston, MA, USA, 2006.

- Palinkas, L.A.; Horwitz, S.M.; Green, C.A.; Wisdom, J.P.; Duan, N.; Hoagwood, K. Purposeful sampling for qualitative data collection and analysis in mixed method implementation research. Adm. Policy Ment. Health Ment. Health Serv. Res. 2015, 42, 533–544.

- Hair, J.; Hollingsworth, C.L.; Randolph, A.B.; Chong, A.Y.L. An updated and expanded assessment of PLS-SEM in information systems research. Ind. Manag. Data Syst. 2017, 117, 442–458.

- Hair Jr, J.F.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM); Sage Publications: Thousand Oaks, CA, USA, 2021.

- Sarstedt, M.; Cheah, J.-H. Partial least squares structural equation modeling using SmartPLS: A software review. J. Mark. Anal. 2019, 7, 196–202.

- Chin, W.W. Issues and opinions on structural equation modeling. MIS Q. 1998, 22, 7–16.

- Podsakoff, P.M.; MacKenzie, M.; Scott, B.; Lee, J.-Y.; Podsakoff, N.P. Common method biases in behavioral research: A critical review of the literature and recommended remedies. J. Appl. Psychol. 2003, 88, 879–903. [Google Scholar] [CrossRef] [PubMed]

- Kock, N. WarpPLS 5.0 User Manual; ScriptWarp Systems: Laredo, TX, USA, 2015. [Google Scholar]

- Nunnally, J.C. Psychometric Theory, 2nd ed.; Mcgraw Hill Book Company: New York, NY, USA, 1978. [Google Scholar]

- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement Error. J. Mark. Res. 1981, 18, 39–50. [Google Scholar] [CrossRef]

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.

- Roemer, E.; Schuberth, F.; Henseler, J. HTMT2—An improved criterion for assessing discriminant validity in structural equation modeling. Ind. Manag. Data Syst. 2021, 121, 2637–2650.

- Henseler, J.; Hubona, G.; Ray, P.A. Using PLS path modeling in new technology research: Updated guidelines. Ind. Manag. Data Syst. 2016, 116, 2–20.

- Henseler, J.; Dijkstra, T.K.; Sarstedt, M.; Ringle, C.M.; Diamantopoulos, A.; Straub, D.W.; Ketchen, D.J.; Hair, J.F.; Hult, G.T.M.; Calantone, R.J. Common beliefs and reality about PLS: Comments on Rönkkö and Evermann (2013). Organ. Res. Methods 2014, 17, 182–209.

- Gefen, D. E-commerce: The role of familiarity and trust. Omega 2000, 28, 725–737.

- McKnight, D.H.; Choudhury, V.; Kacmar, C. Developing and validating trust measures for e-commerce: An integrative typology. Inf. Syst. Res. 2002, 13, 334–359.

- Limayem, M.; Hirt, S.G.; Cheung, C.M. How habit limits the predictive power of intention: The case of information systems continuance. MIS Q. 2007, 31, 705–737.

- Ouellette, J.A.; Wood, W. Habit and intention in everyday life: The multiple processes by which past behavior predicts future behavior. Psychol. Bull. 1998, 124, 54.

- Fotheringham, D.; Wiles, M.A. The effect of implementing chatbot customer service on stock returns: An event study analysis. J. Acad. Mark. Sci. 2022, 51, 802–822.

- Xu, L.; Sanders, L.; Li, K.; Chow, J.C. Chatbot for health care and oncology applications using artificial intelligence and machine learning: Systematic review. JMIR Cancer 2021, 7, e27850.

- Nadarzynski, T.; Miles, O.; Cowie, A.; Ridge, D. Acceptability of artificial intelligence (AI)-led chatbot services in healthcare: A mixed-methods study. Digit. Health 2019, 5, 2055207619871808.

- Powell, J. Trust Me, I’m a chatbot: How artificial intelligence in health care fails the Turing test. J. Med. Internet Res. 2019, 21, e16222.

- Benke, I.; Gnewuch, U.; Maedche, A. Understanding the impact of control levels over emotion-aware chatbots. Comput. Hum. Behav. 2022, 129, 107122.

- Kumar, J.A. Educational chatbots for project-based learning: Investigating learning outcomes for a team-based design course. Int. J. Educ. Technol. High. Educ. 2021, 18, 65.

- Mageira, K.; Pittou, D.; Papasalouros, A.; Kotis, K.; Zangogianni, P.; Daradoumis, A. Educational AI Chatbots for Content and Language Integrated Learning. Appl. Sci. 2022, 12, 3239.

- Moraes, C.L. Chatbot as a Learning Assistant: Factors Influencing Adoption and Recommendation. Master’s Thesis, Universidade NOVA de Lisboa, Lisboa, Portugal, 2021.

- Davies, J.N.; Verovko, M.; Verovko, O.; Solomakha, I. Personalization of E-Learning Process Using AI-Powered Chatbot Integration; Springer: Cham, Switzerland, 2023; pp. 209–216.

- Hidayatulloh, I.; Pambudi, S.; Surjono, H.D.; Sukardiyono, T. Gamification on chatbot-based learning media: A review and challenges. Electron. Inform. Vocat. Educ. 2021, 6, 71–80.

- Nicolescu, L.; Tudorache, M.T. Human-Computer Interaction in Customer Service: The Experience with AI Chatbots—A Systematic Literature Review. Electronics 2022, 11, 1579.

- Pataranutaporn, P.; Danry, V.; Leong, J.; Punpongsanon, P.; Novy, D.; Maes, P.; Sra, M. AI-generated characters for supporting personalized learning and well-being. Nat. Mach. Intell. 2021, 3, 1013–1022.

- Van Pinxteren, M.M.E.; Pluymaekers, M.; Lemmink, J.G.A.M. Human-like communication in conversational agents: A literature review and research agenda. J. Serv. Manag. 2020, 31, 203–225.

| Author(s) | Key Variable | Method | Key Findings |
|---|---|---|---|
| Koc and Bozdag [32] | novelty | conceptual model, case study | Proposed a value chain-based model; fuel cell technology had the highest novelty among the alternatives. |
| Jo [33] | novelty, hedonic value, continuance intention | survey, partial least squares SEM (PLS-SEM) | Novelty value affects utilitarian and hedonic value; continuance intention is influenced by utilitarian and hedonic factors. |
| Merikivi, Nguyen [34] | novelty, perceived enjoyment | survey, SEM | Design aesthetics, ease of use, and novelty influence perceived enjoyment; enjoyment drives continuance intention in games. |
| Rafiq, Dogra [13] | perceived intelligence, AI-chatbot adoption | survey, PLS-SEM | Adoption is influenced by multiple factors under the S-O-R framework; ten hypotheses on chatbot adoption in tourism were supported. |
| Ashfaq, Yun [39] | information quality, perceived usefulness, satisfaction, continuance intention | survey, SEM | Information and service quality enhance satisfaction; satisfaction predicts continuance intention; need for human interaction moderates effects. |
| Al-Emran, Mezhuyev [41] | knowledge management, M-learning | survey, PLS-SEM | Knowledge acquisition, application, and protection positively influence perceived usefulness and ease of use; knowledge sharing is partly supported. |
| Al-Emran, Mezhuyev [45] | knowledge acquisition, knowledge sharing | survey, PLS-SEM | Knowledge acquisition positively influences ease of use and usefulness in both countries; sharing affects usefulness in Oman but not Malaysia. |
| Rajaobelina, Prom Tep [51] | creepiness, loyalty | survey, SEM | Creepiness reduces loyalty directly and indirectly through trust and emotions; usability reduces creepiness, privacy concerns increase it. |

| Author(s) | Key Variable | Method | Key Findings |
|---|---|---|---|
| Han and Yang [53] | continuance intention, interpersonal attraction, privacy risk | survey, PLS-SEM | Task, social, and physical attraction, along with privacy/security risk, influence intelligent personal assistant (IPA) adoption; parasocial relationship (PSR) theory is validated in this context. |
| Shetu, Islam [55] | digital wallet adoption, technological innovativeness | survey, SEM | Perceived usefulness, ease of use, compatibility, and insecurity influence adoption; innovativeness did not moderate intention. |
| Akdim, Casaló [64] | utilitarian vs. hedonic value, continuance intention | survey, PLS-SEM, multi-group analysis | Perceived usefulness, ease of use, and enjoyment explain continuance intention; utilitarian factors dominate in utility apps, enjoyment in hedonic apps. |
| Balakrishnan, Dwivedi [72] | AI voice assistants adoption, resistance | survey, SEM | Status quo bias factors and TAM variables explain adoption resistance; perceived value reduces resistance; inertia varies by gender/age. |
| Belanche, Casaló [73] | AI in FinTech, robo-advisors adoption | survey, SEM, multi-sample analysis | Attitude, mass media, and subjective norms drive adoption; familiarity with robots moderates effects of usefulness and norms across demographics. |
| Pillai and Sivathanu [71] | chatbot adoption in tourism | interviews + survey, mixed-methods, PLS-SEM | Ease of use, usefulness, trust, perceived intelligence, and anthropomorphism predict adoption; technological anxiety not significant; human-agent stickiness negatively moderates intention-usage link. |

| Author(s) | Key Variable(s) | Method | Key Findings |
|---|---|---|---|
| Okonkwo and Ade-Ibijola [75] | chatbot use in education | systematic review (53 articles) | Identified benefits, challenges, and future research directions of chatbots in education; emphasized personalized services for students and staff. |
| Nguyen, Ta [77] | trust, continuance intention, perceived enjoyment, risk | survey, SEM | Trust, risk, enjoyment, and self-efficacy influenced continuance intention; gender differences shaped perception and behavior. |
| Wang, Lin [80] | trust, innovative use of chatbots | survey, SEM | Trust conceptualized as functionality, reliability, and data protection; knowledge support and work-life balance increased trust and innovative use. |
| Brill, Munoz [81] | trust, satisfaction with digital assistants | survey, PLS-SEM | Expectation and confirmation significantly influenced satisfaction; evidence of positive customer experience with AI assistants. |
| Hasan, Shams [82] | trust, risk, novelty, loyalty | survey, SEM | Perceived risk negatively affected loyalty, while trust, interaction, and novelty value positively influenced brand loyalty in Siri users. |
| Rajaobelina, Prom Tep [51] | trust, creepiness, loyalty | survey, SEM | Creepiness reduced loyalty directly and indirectly via trust and emotions; usability reduced creepiness, privacy concerns increased it. |

| Demographics | Item | Frequency (N = 242) | Percentage |
|---|---|---|---|
| Gender | Male | 102 | 42.1% |
| | Female | 140 | 57.9% |
| Age | 20 or younger | 101 | 41.7% |
| | 21 | 21 | 8.7% |
| | 22 | 26 | 10.7% |
| | 23 or older | 94 | 38.8% |

| Construct | Items | Mean | Std. Dev. | Factor Loading | Cronbach’s Alpha | CR | AVE |
|---|---|---|---|---|---|---|---|
| Novelty Value | NVT1 | 5.686 | 1.370 | 0.721 | 0.700 | 0.833 | 0.625 |
| | NVT2 | 5.360 | 1.402 | 0.787 | | | |
| | NVT3 | 5.554 | 1.304 | 0.858 | | | |
| Perceived Intelligence | PIE1 | 5.488 | 1.220 | 0.878 | 0.864 | 0.917 | 0.785 |
| | PIE2 | 5.178 | 1.329 | 0.888 | | | |
| | PIE3 | 5.289 | 1.354 | 0.893 | | | |
| Knowledge Acquisition | KAQ1 | 4.901 | 1.320 | 0.861 | 0.870 | 0.920 | 0.794 |
| | KAQ2 | 5.066 | 1.261 | 0.907 | | | |
| | KAQ3 | 5.095 | 1.264 | 0.904 | | | |
| Creepiness | CPN1 | 5.017 | 1.774 | 0.885 | 0.887 | 0.929 | 0.813 |
| | CPN2 | 4.508 | 1.752 | 0.934 | | | |
| | CPN3 | 3.727 | 1.820 | 0.886 | | | |
| Task Attraction | TAT1 | 5.384 | 1.225 | 0.908 | 0.904 | 0.940 | 0.840 |
| | TAT2 | 5.438 | 1.226 | 0.940 | | | |
| | TAT3 | 5.335 | 1.216 | 0.901 | | | |
| Hedonic Value | HEV1 | 4.806 | 1.402 | 0.899 | 0.812 | 0.889 | 0.728 |
| | HEV2 | 4.917 | 1.283 | 0.867 | | | |
| | HEV3 | 4.343 | 1.533 | 0.790 | | | |
| Trust | TRU1 | 3.748 | 1.656 | 0.928 | 0.936 | 0.959 | 0.886 |
| | TRU2 | 3.649 | 1.600 | 0.956 | | | |
| | TRU3 | 3.463 | 1.598 | 0.940 | | | |
| Satisfaction | SAT1 | 5.012 | 1.235 | 0.932 | 0.924 | 0.952 | 0.868 |
| | SAT2 | 4.917 | 1.283 | 0.953 | | | |
| | SAT3 | 4.905 | 1.284 | 0.910 | | | |
| Loyalty | LYT1 | 5.066 | 1.478 | 0.899 | 0.901 | 0.938 | 0.835 |
| | LYT2 | 5.045 | 1.427 | 0.938 | | | |
| | LYT3 | 5.331 | 1.295 | 0.902 | | | |
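
The CR and AVE columns above can be checked against the factor loadings. The following is a minimal sketch, assuming standardized loadings and the usual Fornell and Larcker formulas (AVE as the mean squared loading, CR as the squared sum of loadings divided by that quantity plus the summed error variances). The function name `ave_and_cr` is illustrative and not taken from the paper or from SmartPLS.

```python
def ave_and_cr(loadings):
    """Return (AVE, composite reliability) for one reflective construct.

    Assumes standardized outer loadings, so each indicator's error
    variance is taken to be 1 - loading**2.
    """
    squared = [value * value for value in loadings]
    ave = sum(squared) / len(loadings)
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1.0 - s for s in squared)
    cr = squared_sum / (squared_sum + error_variance)
    return ave, cr


# Loadings copied from the measurement table (Novelty Value and Trust).
novelty_ave, novelty_cr = ave_and_cr([0.721, 0.787, 0.858])
trust_ave, trust_cr = ave_and_cr([0.928, 0.956, 0.940])

print(f"Novelty Value: AVE={novelty_ave:.3f}, CR={novelty_cr:.3f}")
print(f"Trust:         AVE={trust_ave:.3f}, CR={trust_cr:.3f}")
```

Because the published loadings are rounded to three decimals, values recomputed this way can differ from the table in the last digit for some constructs.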

| Construct | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Novelty Value | 0.790 | | | | | | | | |
| 2. Perceived Intelligence | 0.568 | 0.886 | | | | | | | |
| 3. Knowledge Acquisition | 0.586 | 0.660 | 0.891 | | | | | | |
| 4. Creepiness | 0.042 | 0.166 | −0.025 | 0.902 | | | | | |
| 5. Task Attraction | 0.589 | 0.706 | 0.650 | 0.054 | 0.916 | | | | |
| 6. Hedonic Value | 0.594 | 0.586 | 0.657 | −0.180 | 0.613 | 0.853 | | | |
| 7. Trust | 0.189 | 0.181 | 0.135 | −0.146 | 0.138 | 0.233 | 0.941 | | |
| 8. Satisfaction | 0.548 | 0.706 | 0.613 | 0.079 | 0.615 | 0.557 | 0.282 | 0.932 | |
| 9. Loyalty | 0.562 | 0.565 | 0.537 | −0.164 | 0.660 | 0.711 | 0.239 | 0.565 | 0.914 |
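
The matrix above can be screened with the Fornell-Larcker criterion: each diagonal entry (read here as the square root of the construct's AVE, which matches the AVE column of the measurement table to within rounding) should exceed every correlation in its row and column. The sketch below is an illustration under that reading; `fornell_larcker_violations` is a hypothetical helper, and the numbers are copied from the table.

```python
NAMES = [
    "Novelty Value", "Perceived Intelligence", "Knowledge Acquisition",
    "Creepiness", "Task Attraction", "Hedonic Value",
    "Trust", "Satisfaction", "Loyalty",
]

# Lower triangle of the table: diagonal entries, then correlations to the left.
LOWER = [
    [0.790],
    [0.568, 0.886],
    [0.586, 0.660, 0.891],
    [0.042, 0.166, -0.025, 0.902],
    [0.589, 0.706, 0.650, 0.054, 0.916],
    [0.594, 0.586, 0.657, -0.180, 0.613, 0.853],
    [0.189, 0.181, 0.135, -0.146, 0.138, 0.233, 0.941],
    [0.548, 0.706, 0.613, 0.079, 0.615, 0.557, 0.282, 0.932],
    [0.562, 0.565, 0.537, -0.164, 0.660, 0.711, 0.239, 0.565, 0.914],
]


def fornell_larcker_violations(names, lower):
    """List constructs whose diagonal does not exceed their largest |correlation|."""
    violations = []
    size = len(lower)
    for i in range(size):
        diagonal = lower[i][i]
        in_row = [abs(lower[i][j]) for j in range(i)]
        in_column = [abs(lower[k][i]) for k in range(i + 1, size)]
        strongest = max(in_row + in_column)
        if diagonal <= strongest:
            violations.append((names[i], diagonal, strongest))
    return violations


violations = fornell_larcker_violations(NAMES, LOWER)
print("Constructs failing the criterion:", violations)
```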

| Construct | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Novelty Value | | | | | | | | | |
| 2. Perceived Intelligence | 0.706 | | | | | | | | |
| 3. Knowledge Acquisition | 0.736 | 0.756 | | | | | | | |
| 4. Creepiness | 0.087 | 0.194 | 0.045 | | | | | | |
| 5. Task Attraction | 0.726 | 0.793 | 0.732 | 0.084 | | | | | |
| 6. Hedonic Value | 0.780 | 0.696 | 0.777 | 0.203 | 0.713 | | | | |
| 7. Trust | 0.231 | 0.194 | 0.147 | 0.155 | 0.149 | 0.267 | | | |
| 8. Satisfaction | 0.660 | 0.788 | 0.679 | 0.088 | 0.670 | 0.637 | 0.300 | | |
| 9. Loyalty | 0.704 | 0.635 | 0.606 | 0.183 | 0.730 | 0.830 | 0.259 | 0.614 | |
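
As a rough screen of the heterotrait-monotrait (HTMT) ratios above, the sketch below lists any construct pair at or above a chosen cutoff. The cutoffs 0.85 (conservative) and 0.90 (liberal) are the commonly cited ones and are an assumption here, since this section does not state which threshold the study applied; `pairs_at_or_above` is a hypothetical helper.

```python
HTMT_NAMES = [
    "Novelty Value", "Perceived Intelligence", "Knowledge Acquisition",
    "Creepiness", "Task Attraction", "Hedonic Value",
    "Trust", "Satisfaction", "Loyalty",
]

# Row i holds the ratios between construct i + 1 and all earlier constructs.
HTMT_ROWS = [
    [0.706],
    [0.736, 0.756],
    [0.087, 0.194, 0.045],
    [0.726, 0.793, 0.732, 0.084],
    [0.780, 0.696, 0.777, 0.203, 0.713],
    [0.231, 0.194, 0.147, 0.155, 0.149, 0.267],
    [0.660, 0.788, 0.679, 0.088, 0.670, 0.637, 0.300],
    [0.704, 0.635, 0.606, 0.183, 0.730, 0.830, 0.259, 0.614],
]


def htmt_pairs(names, rows):
    """Flatten the lower triangle into (earlier construct, later construct, ratio)."""
    pairs = []
    for i, row in enumerate(rows):
        for j, ratio in enumerate(row):
            pairs.append((names[j], names[i + 1], ratio))
    return pairs


def pairs_at_or_above(pairs, cutoff):
    """Return the pairs whose HTMT ratio reaches the cutoff."""
    return [pair for pair in pairs if pair[2] >= cutoff]


all_pairs = htmt_pairs(HTMT_NAMES, HTMT_ROWS)
largest = max(all_pairs, key=lambda pair: pair[2])

print("Largest ratio:", largest)
print("Pairs at or above 0.85:", pairs_at_or_above(all_pairs, 0.85))
```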

| Hypothesis | Predictor | Outcome | β | t | p | Result |
|---|---|---|---|---|---|---|
| H1a | Novelty Value | Task Attraction | 0.199 | 3.455 | 0.001 | Supported |
| H1b | Novelty Value | Hedonic Value | 0.266 | 3.790 | <0.001 | Supported |
| H2a | Perceived Intelligence | Knowledge Acquisition | 0.660 | 14.459 | <0.001 | Supported |
| H2b | Perceived Intelligence | Task Attraction | 0.426 | 6.466 | <0.001 | Supported |
| H2c | Perceived Intelligence | Hedonic Value | 0.259 | 3.603 | <0.001 | Supported |
| H3a | Knowledge Acquisition | Task Attraction | 0.253 | 3.534 | <0.001 | Supported |
| H3b | Knowledge Acquisition | Hedonic Value | 0.324 | 4.377 | <0.001 | Supported |
| H4a | Creepiness | Hedonic Value | −0.227 | 4.496 | <0.001 | Supported |
| H4b | Creepiness | Trust | −0.146 | 2.072 | 0.038 | Supported |
| H5a | Task Attraction | Satisfaction | 0.438 | 6.316 | <0.001 | Supported |
| H5b | Task Attraction | Loyalty | 0.310 | 4.096 | <0.001 | Supported |
| H6a | Hedonic Value | Satisfaction | 0.252 | 3.421 | 0.001 | Supported |
| H6b | Hedonic Value | Loyalty | 0.444 | 5.802 | <0.001 | Supported |
| H7a | Trust | Satisfaction | 0.163 | 3.164 | 0.002 | Supported |
| H7b | Trust | Loyalty | 0.065 | 1.545 | 0.122 | Not Supported |
| H8 | Satisfaction | Loyalty | 0.099 | 1.699 | 0.089 | Not Supported |
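
The link between the t and p columns can be illustrated with a short calculation. This is a sketch under two assumptions that this section does not confirm: the tests are two-tailed, and the bootstrap t-statistics are evaluated against a standard normal distribution (a large-sample approximation). The helper `two_tailed_p` and the 0.05 cutoff are illustrative.

```python
import math


def two_tailed_p(t_value):
    """Two-tailed p-value for a t-statistic under a standard normal approximation."""
    return math.erfc(abs(t_value) / math.sqrt(2.0))


# (t, reported p) for the three paths closest to the 0.05 boundary in the table.
BORDERLINE = {
    "H4b": (2.072, 0.038),
    "H7b": (1.545, 0.122),
    "H8": (1.699, 0.089),
}

results = {}
for name, (t_value, reported_p) in BORDERLINE.items():
    p_value = two_tailed_p(t_value)
    verdict = "Supported" if p_value < 0.05 else "Not Supported"
    results[name] = (p_value, verdict)
    print(f"{name}: t={t_value:.3f}, p={p_value:.3f} (reported {reported_p:.3f}), {verdict}")
```

Under these assumptions the recomputed p-values agree with the reported ones to three decimals, and only H7b and H8 fall on the non-significant side of 0.05.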

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ahn, H.Y. Modeling Student Loyalty in the Age of Generative AI: A Structural Equation Analysis of ChatGPT’s Role in Higher Education. Systems 2025, 13, 915. https://doi.org/10.3390/systems13100915
