How Does AI Transform Cyber Risk Management?

Abstract

1. Societal Digital Transformation Enhances Susceptibility to Cyber Threats

- What are the systemic structures currently driving cyber risk management?

- How does a real-world cyber incident reflect these systemic structures?

- Considering such an incident, how can AI alter both adversarial and defensive capabilities?

- Beyond speed, scale, and automation, in what ways does AI fundamentally reshape these systemic structures?

2. Identifying the Traditional Feedback Loops in Systemic Structures to Manage Cyber Risks

2.1. Simple Cyber Threats

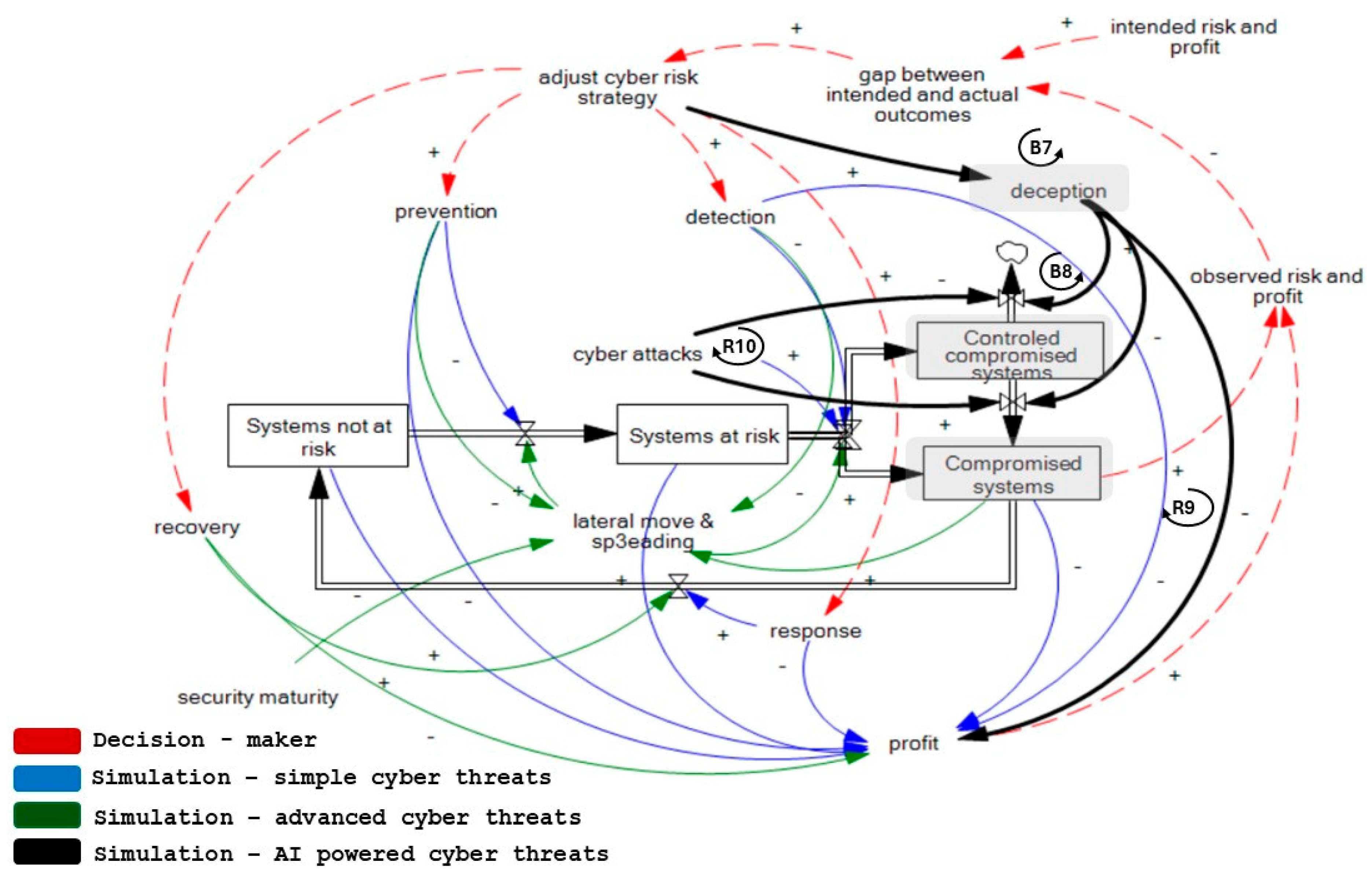

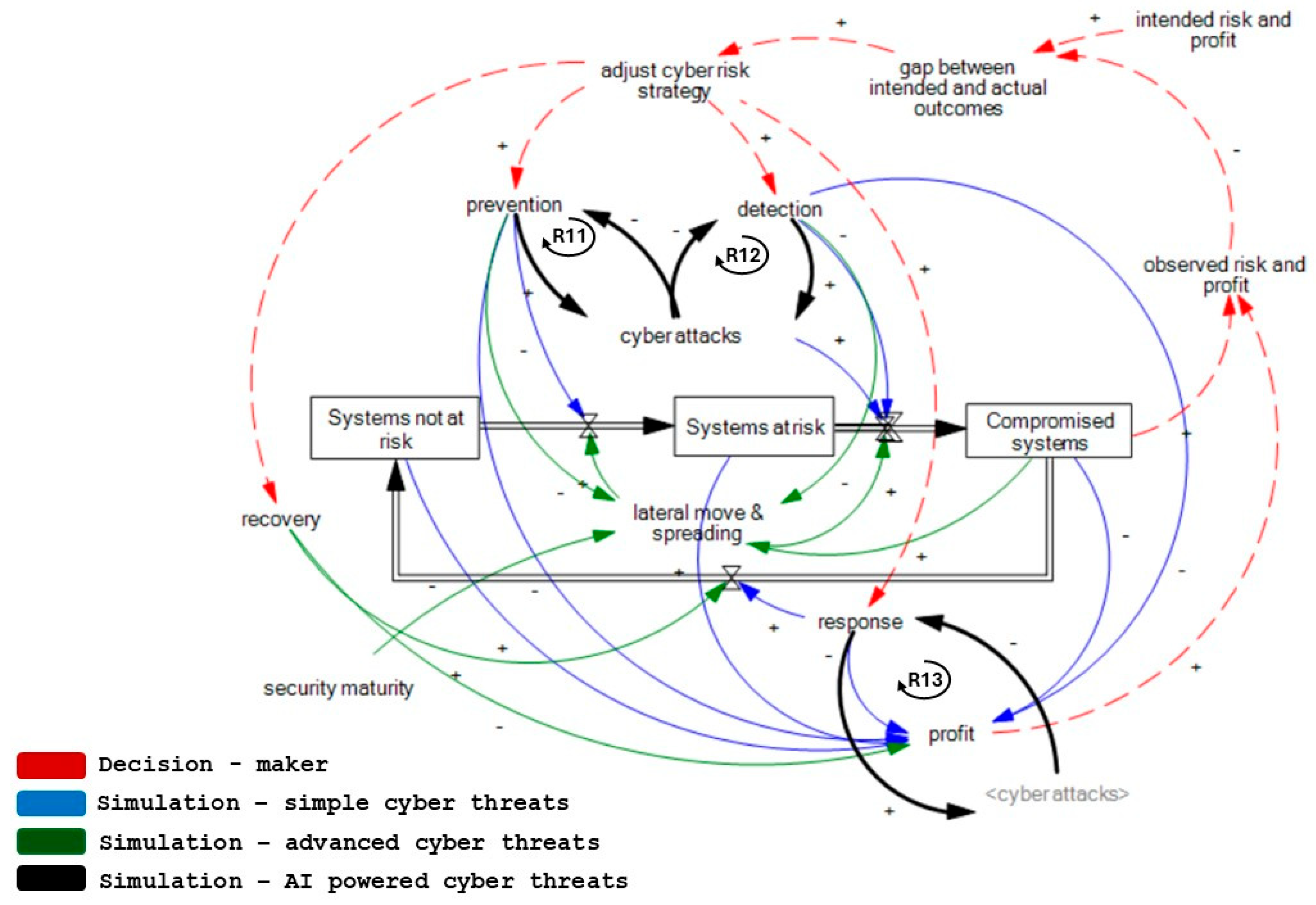

2.2. Advanced Cyber Threats (Ransomware)

- The cost of recovery can be extremely high. However, paying the ransom (B6) does not guarantee prompt and full recovery and involves a significant cost (R7) that affects profitability. Paying may also allow the adversary to return (R8): if the ransom is paid, there is approximately an 80% probability that the attack will be repeated [57,58] and a 62% probability that not all data will be recovered [57]. Ransom payment also reinforces the feedback loop for the attacker, because each success encourages future attacks. This structure clarifies the policy choice of paying or not paying a ransom and its effects on recovery, adversary behavior, and future risk.
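To make the pay-or-refuse trade-off concrete, the following Python sketch compares expected costs for a single incident. Only the 80% repeat-attack and 62% incomplete-recovery probabilities come from the text, and we read both as conditional on paying, as the paragraph presents them. Every cost figure, and the repeat probability for organizations that refuse (`p_repeat_if_not_paid`), is a hypothetical placeholder; the sketch covers the single-decision arithmetic only and does not simulate the attacker's reinforcing loop over time.

```python
from dataclasses import dataclass

# Probabilities quoted in the text, read as conditional on paying [57,58].
P_REPEAT_IF_PAID = 0.80   # chance the adversary returns and attacks again (R8)
P_PARTIAL_IF_PAID = 0.62  # chance that not all data is recovered


@dataclass
class Scenario:
    """Hypothetical cost inputs in arbitrary currency units (assumptions)."""
    ransom: float                  # payment demanded (B6 / R7)
    full_recovery_cost: float      # cost of restoring everything without paying
    residual_recovery_cost: float  # extra cost when paying still leaves data lost
    repeat_incident_cost: float    # cost of one follow-up attack
    p_repeat_if_not_paid: float    # hypothetical baseline repeat probability


def expected_cost_pay(s: Scenario) -> float:
    # The ransom is certain; residual recovery and a repeat attack are probabilistic.
    return (s.ransom
            + P_PARTIAL_IF_PAID * s.residual_recovery_cost
            + P_REPEAT_IF_PAID * s.repeat_incident_cost)


def expected_cost_refuse(s: Scenario) -> float:
    # Full recovery is borne directly; the repeat risk uses the assumed baseline.
    return s.full_recovery_cost + s.p_repeat_if_not_paid * s.repeat_incident_cost


if __name__ == "__main__":
    s = Scenario(ransom=4.0, full_recovery_cost=6.0, residual_recovery_cost=2.0,
                 repeat_incident_cost=5.0, p_repeat_if_not_paid=0.3)
    print(f"pay:    {expected_cost_pay(s):.2f}")
    print(f"refuse: {expected_cost_refuse(s):.2f}")
```

With these illustrative inputs, paying has the higher expected cost (9.24 versus 7.50 units) even though the ransom alone is smaller than the full recovery cost; other assumptions can reverse the ranking, which is why the structure matters more than any single number.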

- Design and architecture principles shape the network and infrastructure (part of prevention) and thus influence business continuity and disaster recovery. Detection and response maturity are interdependent: an organization can respond to an attack only once it has detected it. Effective cyber risk management therefore requires balanced investment across capabilities, which underscores the need for strategic roadmap planning and a robust security architecture.
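The interdependence of detection and response can be illustrated with a toy model. The Python sketch below assumes, hypothetically, that each capability matures along a saturating curve `1 - exp(-k * spend)` and that the share of attacks mitigated is the product of detection and response capability, since only detected attacks can be responded to. The efficiencies `k_detect` and `k_respond` and the budget are illustrative parameters, not values from the text.

```python
import math


def capability(spend: float, efficiency: float) -> float:
    """Assumed saturating maturity curve: each extra unit of spend adds less."""
    return 1.0 - math.exp(-efficiency * spend)


def mitigated_share(detect_spend: float, respond_spend: float,
                    k_detect: float = 1.0, k_respond: float = 1.0) -> float:
    """Share of attacks stopped: response can only act on detected attacks."""
    return capability(detect_spend, k_detect) * capability(respond_spend, k_respond)


def best_split(budget: float, steps: int = 100,
               k_detect: float = 1.0, k_respond: float = 1.0) -> tuple[float, float]:
    """Grid-search the fraction of a fixed budget allocated to detection."""
    def share(fraction: float) -> float:
        return mitigated_share(fraction * budget, (1 - fraction) * budget,
                               k_detect, k_respond)

    fractions = [i / steps for i in range(steps + 1)]
    best = max(fractions, key=share)
    return best, share(best)


if __name__ == "__main__":
    print(mitigated_share(2.0, 0.0))  # whole budget on detection, none on response
    print(best_split(2.0))            # equal efficiencies for both capabilities
```

Under these assumptions, spending the whole budget on one capability mitigates nothing, and with equal efficiencies the best allocation is an even split. Making one capability cheaper to improve (for example `k_detect=2.0`) shifts the optimum, but never to either extreme.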

3. Insights from the Colonial Pipeline Case

3.1. Explaining the Kill Chain

3.2. A Kill Chain Perspective from the Colonial Pipeline Incident

3.3. The Aftermath

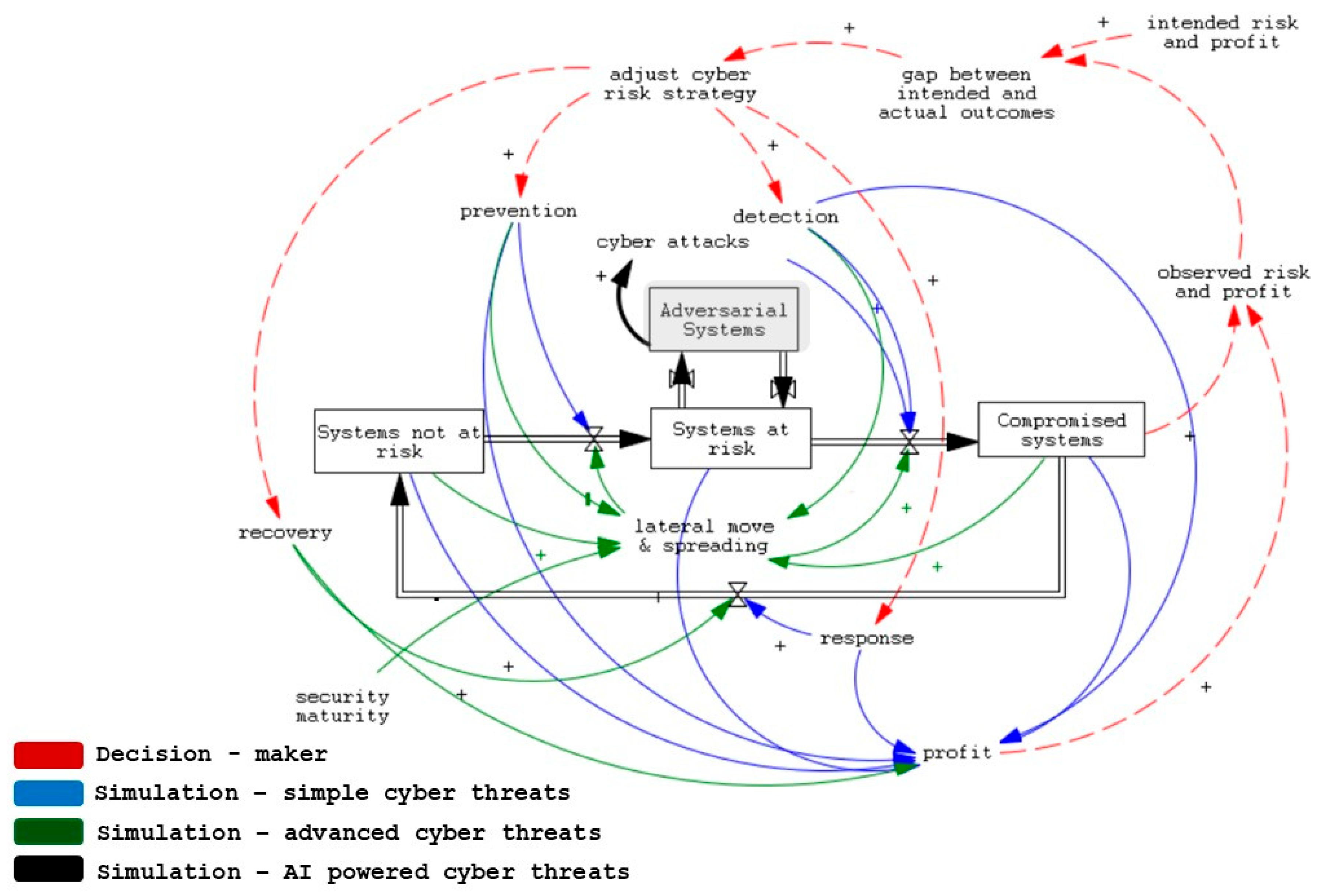

4. How Does AI Shape the Systemic Structures to Manage Cyber Risks?

4.1. A Kill Chain Perspective on AI-Powered Attacks

4.2. A Kill Chain Perspective on AI-Powered Defenses

- self-healing software code that automatically detects, diagnoses, and corrects issues without human intervention [117];

- self-patching systems that autonomously identify, download, and apply updates or patches to address vulnerabilities [118];

- autonomous and adaptive identity and access management, which adjusts user identities, access permissions, and authentication based on real-time conditions [121]; and

- self-driving trustworthy networks that autonomously manage and secure themselves by continuously detecting and responding to issues or threats [122].
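Adaptive identity and access management of the kind listed above is often framed as risk-based authentication: each access request is scored from real-time signals, and the control tightens as risk rises. The Python sketch below illustrates that loop under explicit assumptions: the signal names, the logistic weights (standing in for a trained model), and the two thresholds are all hypothetical and are not taken from [121] or from any product.

```python
import math
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "require_mfa"
    DENY = "deny"


@dataclass
class AccessRequest:
    new_device: bool
    unfamiliar_location: bool
    failed_attempts_last_hour: int
    off_hours: bool
    resource_sensitivity: float  # 0.0 (public) .. 1.0 (most critical)


# Hypothetical coefficients standing in for a trained risk model.
WEIGHTS = {
    "new_device": 1.2,
    "unfamiliar_location": 1.5,
    "failed_attempts": 0.6,
    "off_hours": 0.5,
    "sensitivity": 2.0,
}
BIAS = -3.0


def risk_score(req: AccessRequest) -> float:
    """Logistic risk score in (0, 1); higher means riskier."""
    z = (BIAS
         + WEIGHTS["new_device"] * req.new_device
         + WEIGHTS["unfamiliar_location"] * req.unfamiliar_location
         + WEIGHTS["failed_attempts"] * min(req.failed_attempts_last_hour, 5)  # cap outliers
         + WEIGHTS["off_hours"] * req.off_hours
         + WEIGHTS["sensitivity"] * req.resource_sensitivity)
    return 1.0 / (1.0 + math.exp(-z))


def decide(req: AccessRequest, step_up_at: float = 0.3, deny_at: float = 0.8) -> Decision:
    """Map the real-time risk score to an access decision."""
    risk = risk_score(req)
    if risk >= deny_at:
        return Decision.DENY
    if risk >= step_up_at:
        return Decision.STEP_UP  # adapt authentication: ask for a second factor
    return Decision.ALLOW
```

For example, a routine request from a known device is allowed, a request from a new device in an unfamiliar location triggers a second factor, and a burst of failed attempts against a critical resource is denied. In an autonomous system the weights would be re-learned continuously rather than fixed in code.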

- Extended Detection and Response (XDR) platforms unify security tools and apply AI analytics to threat detection. Industry sources claim that AI-driven XDR has closed the evolving gap between attackers and defenders and significantly improved endpoint security, reporting a 76% increase in detection accuracy [111].

- Security Orchestration, Automation, and Response (SOAR) systems automate incident response, reduce false positives, and prioritize high-risk threats [112]. AI also strengthens malware defense by using deep learning to predict threats.
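The correlate-then-prioritize pattern behind XDR and SOAR can be sketched in a few lines. In this hypothetical Python example, alerts from separate telemetry sources are grouped by host (the XDR-style correlation step), a lone low-confidence alert is suppressed as a likely false positive, and the remaining incidents are ranked by a simple score (the SOAR-style prioritization step). The `Alert` fields, the 0.5 confidence cutoff, and the severity-times-source-count score are illustrative assumptions, not how any particular platform works.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    source: str        # telemetry origin, e.g. "endpoint", "network", "identity"
    host: str          # asset the alert refers to
    severity: int      # 1 (low) .. 5 (critical)
    confidence: float  # detector confidence, 0.0 .. 1.0


def triage(alerts: list[Alert],
           min_confidence: float = 0.5) -> list[tuple[str, int, list[str]]]:
    """Correlate alerts per host, suppress weak uncorroborated ones, rank the rest."""
    by_host: dict[str, list[Alert]] = defaultdict(list)
    for alert in alerts:
        by_host[alert.host].append(alert)  # XDR-style correlation across tools

    incidents = []
    for host, group in by_host.items():
        sources = {a.source for a in group}
        # A low-confidence alert with no corroborating source is treated
        # as a likely false positive and dropped.
        if len(sources) == 1 and max(a.confidence for a in group) < min_confidence:
            continue
        # Hypothetical priority: worst severity, amplified by corroboration.
        score = max(a.severity for a in group) * len(sources)
        incidents.append((host, score, sorted(sources)))

    # SOAR-style prioritization: highest-risk incidents first.
    return sorted(incidents, key=lambda inc: inc[1], reverse=True)
```

Requiring corroboration across independent sources is one simple way to trade a little sensitivity for fewer false positives; an AI-driven platform would learn the scoring from data rather than hard-code it.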

4.3. How AI Reshapes the Systemic Nature of Cyber Risk Management

5. Summary of Structural Changes over Time in Cyber Risk Management

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bornet, P.; Barkin, I.; Wirtz, J. Intelligent Automation: Welcome to the World of Hyperautomation: Learn How to Harness Artificial Intelligence to Boost Business & Make Our World More Human; World Scientific Publishing: Singapore, 2020; ISBN 979-8-6918-1923-0.

- Kotzias, K.; Bukhsh, F.A.; Arachchige, J.J.; Daneva, M.; Abhishta, A. Industry 4.0 and Healthcare: Context, Applications, Benefits, and Challenges. IET Softw. 2023, 17, 195–248.

- Albahar, M. Cyber-attacks and Terrorism: A Twenty-First-Century Conundrum. Sci. Eng. Ethics 2019, 25, 993–1006.

- Davies, V. The History of Cybersecurity. Cyber Magazine. 2021. Available online: https://cybermagazine.com/cyber-security/history-cybersecurity (accessed on 6 January 2025).

- Böhme, R.; Moore, T. The “Iterated Weakest Link” Model of Adaptive Security Investment. J. Inf. Secur. 2016, 7, 81.

- Clayton, R.; Moore, T.; Christin, N. Concentrating Correctly on Cybercrime Concentration. In Proceedings of the Workshop on Economics in Information Security, Delft, The Netherlands, 22–24 June 2015.

- Martinez-Moyano, I.J.; Morrison, D.; Sallach, D. Modeling Adversarial Dynamics. In Proceedings of the 2015 Winter Simulation Conference, Huntington Beach, CA, USA, 6–9 December 2015; pp. 2412–2423.

- Costa, J. Counter AI Attacks with AI Defense. Palo Alto Networks. 2024. Available online: https://www.paloaltonetworks.com/blog/2024/05/counter-with-ai-defense/ (accessed on 7 May 2024).

- Acronis. 2024 Cybersecurity Trends: Key Steps, Strategies and Guidance. Acronis. Available online: https://www.acronis.com/en/blog/posts/cyber-security-trends/ (accessed on 9 May 2024).

- Redefining Cyber Defense. The Evolution of Threat Detection with Artificial Intelligence. Res. Mach. Learn. Cybersecur. 2024, 5, 35–41.

- Rajasegar, M.; Shanthi, R.; Nitin, M.; Sasi, K. A New Era of Cybersecurity: The Influence of Artificial Intelligence. In Proceedings of the 2023 International Conference on Networking and Communications (ICNWC), Chennai, India, 5–6 April 2023; pp. 1–4.

- Federal Bureau of Investigation (FBI). FBI Warns of Increasing Threat of Cyber Criminals Utilizing Artificial Intelligence. FBI San Francisco. Available online: https://www.fbi.gov/contact-us/field-offices/sanfrancisco/news/fbi-warns-of-increasing-threat-of-cyber-criminals-utilizing-artificial-intelligence (accessed on 8 May 2024).

- Aggrey, R.; Adjei, B.A.; Afoduo, K.O.; Dsane, N.A.K.; Anim, L.; Ababio, M.A. Understanding and Mitigating AI-powered Cyber-Attacks. Int. J. Multidiscip. Res. 2024, 6, 11.

- Schröer, S.L.; Apruzzese, G.; Human, S.; Laskov, P.; Anderson, H.S.; Bernroider, E.W.N.; Fass, A.; Nassi, B.; Rimmer, V.; Roli, F.; et al. SoK: On the Offensive Potential of AI. In Proceedings of the IEEE Conference on Secure and Trustworthy Machine Learning, Copenhagen, Denmark, 9–11 April 2025.

- Redaction. Email-based Phishing Attacks Have Surged 464% in 2023. Indian Times. 2013. Available online: https://ciosea.economictimes.indiatimes.com/news/security/email-based-phishing-attacks-has-surged-464-in-2023- (accessed on 6 January 2025).

- Macaulay, T. Deepfake Fraud Attempts are Up 3000% in 2023—Here’s Why. Next Web. 2023. Available online: https://thenextweb.com/news/deepfake-fraud-rise-amid-cheap-generative-ai-boom (accessed on 6 January 2025).

- Montalbano, E. AI-powered ‘BlackMamba’ Keylogging Attack Evades Modern EDR Security. DarkReading. 2023. Available online: https://www.darkreading.com/endpoint-security/ai-blackmamba-keylogging-edr-security (accessed on 6 January 2025).

- The Grugq. The Triple A Threat: Aggressive Autonomous Agents. BlackHat. 2017. Available online: https://www.blackhat.com/docs/webcast/12142017-the-triple-a-threat.pdf (accessed on 6 January 2025).

- Bloomberg. The World’s Third-largest Economy Has Bad Intentions—and It’s Only Getting Bigger. Bloomberg. 2024. Available online: https://sponsored.bloomberg.com/quicksight/check-point/the-worlds-third-largest-economy-has-bad-intentions-and-its-only-getting-bigger (accessed on 6 January 2025).

- Petrosyan, A. Estimated Cost of Cybercrime Worldwide 2018–2029. Statista. 2025. Available online: https://www.statista.com/forecasts/1280009/cost-cybercrime-worldwide (accessed on 15 January 2025).

- Hall, B. How AI-Driven Fraud Challenges the Global Economy and Ways to Combat It. World Economic Forum. 2025. Available online: https://www.weforum.org/stories/2025/01/how-ai-driven-fraud-challenges-the-global-economy-and-ways-to-combat-it/ (accessed on 1 September 2025).

- Leclair, J. Testimony of Dr. Jane Leclair Before the U.S. House of Representatives Committee on Small Business. 2015. Available online: https://smallbusiness.house.gov/uploadedfiles/4-22-2015__dr.__leclair__testimony.pdf (accessed on 6 January 2025).

- Cyentia Institute. Information Risk Insights Study: A Clearer Vision for Assessing the Risk of Cyber Incidents. 2021. Available online: https://www.cyentia.com/wp-content/uploads/IRIS2020_cyentia.pdf (accessed on 6 January 2025).

- IBM Security. Cost of a Data Breach Report 2022. Available online: https://community.ibm.com/community/user/events/event-description?CalendarEventKey=7097fd42-4875-4abe-9ff6-d556af01688b&CommunityKey=96f617c5-4f90-4eb0-baec-2d0c4c22ab50&Home=%2Fcommunity%2Fuser%2Fhome (accessed on 6 January 2025).

- Welburn, J.W.; Strong, A.M. Systemic Cyber Risk and Aggregate Impacts. Risk Anal. 2022, 42, 1606–1622.

- Zeijlemaker, S.; Siegel, M.; Khan, S.; Goldsmith, S. How to Align Cyber Risk Management with Business Needs. World Economic Forum, Cyber Security Working Group. 2022. Available online: https://www.weforum.org/stories/2022/08/how-to-align-cyber-risk-management-with-business-needs/ (accessed on 6 January 2025).

- Zeijlemaker, S.; Etiënne, A.J.A.R.; Cunico, G.; Armenia, S.; Von Kutzschenbach, M. Decision-makers’ Understanding of Cybersecurity’s Systemic and Dynamic Complexity: Insights from a Board Game for Bank Managers. Systems 2022, 10, 49.

- Zeijlemaker, S.; Siegel, M. Capturing the dynamic nature of cyber risk: Evidence from an explorative case study. In Proceedings of the Hawaii International Conference on System Sciences (HICSS)–56, Maui, HI, USA, 3–6 January 2023.

- Sterman, J. Teaching Takes Off: Flight Simulators for Management Education—“The Beer Game”. MIT Sloan School of Management. 1992. Available online: http://web.mit.edu/jsterman/www/SDG/beergame.html (accessed on 21 December 2021).

- Sterman, J. Modeling managerial behavior: Misperceptions of Feedback in a Dynamic Decision-making Experiment. Manag. Sci. 1989, 35, 321–339.

- Jalali, M.S.; Siegel, M.; Madnick, S. Decision-making and biases in cybersecurity capability development: Evidence from a simulation game experiment. J. Strateg. Inf. Syst. 2019, 28, 66–82.

- Anderson, R. Why Information Security is Hard: An Economic Perspective. In Proceedings of the 17th Annual Computer Security Applications Conference, New Orleans, LA, USA, 10–14 December 2001; pp. 358–365.

- Zeijlemaker, S.; Pal, R.; Proudfoot, J.; Siegel, M. Advancing Cyber Risk by Reducing Strategic Control Gaps. In AMCIS 2025 Proceedings; 2025; p. 28. Available online: https://aisel.aisnet.org/amcis2025/sig_sec/sig_sec/28 (accessed on 1 September 2025).

- Kim, G.; Zeijlemaker, S.; Proudfoot, J.; Pal, R.; Siegel, M. Balancing Risk and Reward in Cybersecurity Investment Decisions. In AMCIS 2025 Proceedings; 2025; p. 4. Available online: https://aisel.aisnet.org/amcis2025/sig_sec/sig_sec/4 (accessed on 1 September 2025).

- Sepúlveda Estay, D. A system dynamics, epidemiological approach for high-level cyber-resilience to zero-day vulnerabilities. J. Simul. 2021, 17, 1–16.

- Armenia, S.; Angelini, M.; Nonino, F.; Palombi, G.; Schlitzer, M.F. A dynamic simulation approach to support the evaluation of cyber risks and security investments in SMEs. Decis. Support Syst. 2021, 147, 113580.

- Center for Internet Security (CIS). CIS Controls V8; CIS: East Greenbush, NY, USA, 2021.

- Government Chief Information Officer (GCIO). An Overview of ISO/IEC 27000 Family of Information Security Management System Standards; (Original work published April 2015, updated May 2020); Office of the Government Chief Information Officer: Hong Kong, China, 2020.

- Muneer, F. Cybersecurity Capability Maturity Model, Version 2.0; U.S. Department of Energy: Washington, DC, USA, 2021.

- Pascoe, C.E. Public Draft: The NIST Cybersecurity Framework 2.0; NIST: Gaithersburg, MD, USA, 2023.

- Eling, M.; McShane, M.; Nguyen, T. Cyber Risk Management: History and Future Research Directions. Risk Manag. Insur. Rev. 2021, 24, 93–125.

- Paté-Cornell, M.E.; Kuypers, M.; Smith, M.; Keller, P. Cyber Risk Management for Critical Infrastructure: A Risk Analysis Model and Three Case Studies. Risk Anal. 2018, 38, 226–241.

- Zeijlemaker, S.; Hetner, C.; Siegel, M. Four Areas of Cyber Risk That Boards Need to Address. Harvard Business Review. 2023. Available online: https://hbr.org/2023/06/4-areas-of-cyber-risk-that-boards-need-to-address (accessed on 8 January 2025).

- Meurs, T.; Cartwright, E.; Cartwright, A.; Junger, M.; Hoheisel, R.; Tews, E.; Abhishta, A. Ransomware economics: A two-step approach to model ransom paid. In Proceedings of the 2023 APWG Symposium on Electronic Crime Research (eCrime), Barcelona, Spain, 15–17 November 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–13.

- Luo, X.; Liao, Q. Ransomware: A new cyber hijacking threat to enterprises. In Handbook of Research on Information Security and Assurance; IGI Global: Hershey, PA, USA, 2009; pp. 1–6.

- Gardiner, J.; Cova, M.; Nagaraja, S. Command and Control: Understanding, Denying, and Detecting; University of Birmingham, Centre for the Protection of National Infrastructure: Birmingham, UK, 2014.

- Antrosio, J.V.; Fulp, E.W. Malware Defense Using Network Security Authentication. In Proceedings of the Third IEEE International Workshop on Information Assurance (IWIA’05), College Park, MD, USA, 23–24 March 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 43–54.

- Zhang, T.; Antunes, H.; Aggarwal, S. Defending Connected Vehicles Against Malware: Challenges and a Solution Framework. IEEE Internet Things J. 2014, 1, 10–21.

- McAvoy, S.; Grant, T.; Smith, C.; Bontinck, P. Combining Life Cycle Assessment and System Dynamics to Improve Impact Assessment: A Systematic Review. J. Clean. Prod. 2021, 315, 128060.

- Repenning, N.P.; Sterman, J.D. Capability Traps and Self-confirming Attribution Errors in the Dynamics of Process Improvement. Adm. Sci. Q. 2002, 47, 265–295.

- Zeijlemaker, S.; Pal, R.; Siegel, M. Strengthening Managerial Foresight to Defeat Cyber Threats. In Proceedings of the 30th Americas Conference on Information Systems: Elevating Life through Digital Social Entrepreneurship, AMCIS 2024, Salt Lake City, UT, USA, 15–17 August 2024.

- European Union Agency for Cybersecurity (ENISA). ENISA Threat Landscape. 2023. Available online: https://www.enisa.europa.eu/publications/enisa-threat-landscape-2023 (accessed on 6 January 2025).

- Chapman, R. Ransomware Cases Increased by 73% in 2023 Showing Our Actions Have Not Been Enough to Thwart the Threat. SANS Institute. 2024. Available online: https://www.sans.org/blog/ransomware-cases-increased-greatly-in-2023/ (accessed on 8 January 2025).

- Morgan, S. Global Ransomware Damage Costs Predicted to Exceed $265 Billion by 2031. Cybersecurity Ventures. 2023. Available online: https://cybersecurityventures.com/global-ransomware-damage-costs-predicted-to-reach-250-billion-usd-by-2031/ (accessed on 8 January 2025).

- Zeng, W.; Germanos, V. Modelling Hybrid Cyber Kill Chain. PNSE@Petri Nets/ACSD. 2019. Available online: https://www.semanticscholar.org/paper/Modelling-Hybrid-Cyber-Kill-Chain-Zeng-Germanos/f5cb1f80c669562d3dd61b4dcbc6410a5d015c62 (accessed on 8 January 2025).

- Yadav, T.; Rao, A.M. Technical Aspects of Cyber Kill Chain. In Security in Computing and Communications; Springer International Publishing: Cham, Switzerland, 2015; Available online: https://link.springer.com/chapter/10.1007/978-3-319-22915-7_40 (accessed on 8 January 2025).

- Cybereason. Ransomware: The True Cost to Business 2022; Report; Cybereason: Boston, MA, USA, 2022; Available online: https://www.cybereason.com/blog/report-ransomware-attacks-and-the-true-cost-to-business-2022 (accessed on 8 January 2025).

- Sganga, N.; Bidar, M. 80% of Ransomware Victims Suffer Repeat Attacks, According to the New Report. CBS News. 2021. Available online: https://www.cbsnews.com/news/ransomware-victims-suffer-repeat-attacks-new-report/ (accessed on 8 January 2025).

- Scroxton, A. Overinvestment Breeds Overconfidence Among Security Pros. Computer Weekly, TechTarget. 2019. Available online: https://www.computerweekly.com/news/252471326/Overinvestment-breeds-overconfidence-among-security-pros (accessed on 10 January 2025).

- Reed, A. Don’t Be the Business Prevention Department. BAtimes–Resources for Business Analytics. 2022. Available online: https://www.batimes.com/articles/dont-be-the-business-prevention-department/ (accessed on 10 January 2025).

- Zhang, C.; Pal, R.; Nicholson, C.; Siegel, M. (Gen) AI Versus (Gen) AI in Industrial Control Cybersecurity. In Proceedings of the 2024 Winter Simulation Conference (WSC), Savannah, GA, USA, 8–11 December 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 2739–2750.

- Pols, P.; Domínguez, F. The Unified Kill Chain. Publishers Panel. 2024. Available online: https://thepolicereview.akademiapolicji.eu/resources/html/article/details?id=614644&language=en (accessed on 16 September 2024).

- McGuiness, T. Defense in Depth; SANS Reading Room, White Paper; SANS Institute: Bethesda, MD, USA, 2021.

- Ferazza, F.M. Cyber Kill Chain, MITRE ATT&CK, and the Diamond Model: A Comparison of Cyber Intrusion Analysis Models; Technical Report; Information Security Group, Royal Holloway University of London: Egham, UK, 2022; Available online: https://www.royalholloway.ac.uk/media/20188/techreport-2022-5.pdf.pdf (accessed on 16 September 2024).

- Beerman, J.; Berent, D.; Falter, Z.; Bhunia, S. A Review of Colonial Pipeline Ransomware Attack. In Proceedings of the 2023 IEEE/ACM 23rd International Symposium on Cluster, Cloud and Internet Computing Workshops (CCGridW), Bangalore, India, 1–4 May 2023; Available online: https://sbhunia.me/publications/manuscripts/ccgrid23.pdf (accessed on 16 September 2024).

- Goodell, J.W.; Corbet, S. Commodity Market Exposure to Energy-firm Distress: Evidence from the Colonial Pipeline Ransomware Attack. Financ. Res. Lett. 2023, 51, 103329.

- Krebs, B. A Closer Look at the DarkSide Ransomware Gang. Krebs on Security. 2021. Available online: https://krebsonsecurity.com/2021/05/a-closer-look-at-the-darkside-ransomware-gang/ (accessed on 16 September 2024).

- Shimol, S.B. Return of the DarkSide: Analysis of a Large-Scale Data Theft Campaign. Varonis. 2021. Available online: https://www.varonis.com/blog/darkside-ransomware (accessed on 16 September 2024).

- Agcaoili, J.; Earnshaw, E. Locked, Loaded, and in the Wrong Hands: Legitimate Tools Weaponized for Ransomware in 2021. Trend Micro. 2021. Available online: https://www.trendmicro.com/vinfo/us/security/news/cybercrime-and-digital-threats/locked-loaded-and-in-the-wrong-hands-legitimate-tools-weaponized-for-ransomware-in-2021 (accessed on 16 September 2024).

- Kerner, S.M. Colonial Pipeline Hack Explained: Everything You Need to Know. TechTarget. 2022. Available online: https://www.techtarget.com/whatis/feature/Colonial-Pipeline-hack-explained-Everything-you-need-to-know (accessed on 16 September 2024).

- Kleymenov, A. Colonial Pipeline Ransomware Attack: Revealing How DarkSide Works. Nozomi Networks. 2021. Available online: https://www.nozominetworks.com/blog/colonial-pipeline-ransomware-attack-revealing-how-darkside-works (accessed on 16 September 2024).

- Zenarmor. Anatomy of APT: Advanced Persistent Threat Guide. Zenarmor. Available online: https://www.zenarmor.com/docs/network-security-tutorials/what-is-advanced-persistent-threat-apt (accessed on 16 September 2024).

- Expirer. Colonial Pipeline Cyber Attack Shows the Importance of Multi-Factor Authentication in 2021. Stratodesk. 2021. Available online: https://www.stratodesk.com/colonial-pipeline-cyber-attack-shows-the-importance-of-multi-factor-authentication/ (accessed on 16 September 2024).

- Gonzales, M.; Dominquez, J. Inside DarkSide, the Ransomware that Attacked Colonial Pipeline. Metabase Q. 2022. Available online: https://www.cybereason.com/blog/inside-the-darkside-ransomware-attack-on-colonial-pipeline (accessed on 16 September 2024).

- ExtraHop. DCSync Attacks—Definition, Examples, & Detection. ExtraHop. 2020. Available online: https://www.extrahop.com/resources/attacks/dcsync (accessed on 16 September 2024).

- Chen, X.; Andersen, J.; Mao, Z.M.; Bailey, M.; Nazario, J. Towards an Understanding of Anti-virtualization and Anti-debugging Behavior in Modern Malware. In Proceedings of the 2008 IEEE International Conference on Dependable Systems and Networks With FTCS and DCC (DSN), Anchorage, AK, USA, 24–27 June 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 177–186.

- Rose, A.J.; Graham, S.R.; Kabban, C.M.S.; Krasnov, J.J.; Henry, W.C. ScriptBlock Smuggling: Uncovering Stealthy Evasion Techniques in PowerShell and .NET environments. J. Cybersecur. Priv. 2024, 4, 153–166.

- Cordis, G.A.; Costea, F.-M.; Pecherle, G.; Győrödi, R.Ş.; Győrödi, C.A. Considerations in Mitigating Kerberos Vulnerabilities for Active Directory. In Proceedings of the International Conference on Engineering of Modern Electric Systems, Oradea, Romania, 9–10 June 2023.

- Boschee, P. Comments: Complexity of Cyber Crime Skyrockets. J. Pet. Technol. 2021, 73, 8.

- Threat Intelligence Team. DarkSide Ransomware Analysis Report. Brandefense. 2021. Available online: https://brandefense.io/wp-content/uploads/2023/01/DarkSide-Ransomware-Analysis-Report.pdf (accessed on 16 September 2024).

- TrendMicro. What We Know About the DarkSide Ransomware and the US Pipeline Attack. TrendMicro Research. 2021. Available online: https://www.trendmicro.com/en_us/research/21/e/what-we-know-about-darkside-ransomware-and-the-us-pipeline-attac.html (accessed on 16 September 2024).

- Brash, R. Lessons Learned from the Colonial Pipeline Attack. Control Engineering. 2021. Available online: https://www.industrialcybersecuritypulse.com/facilities/lessons-learned-from-the-colonial-pipeline-attack/ (accessed on 16 September 2024).

- Englund, W.; Nakashima, E.; Telford, T. Colonial Pipeline Ransomware Attack Shows Cyber Vulnerabilities of U.S. Energy Grid. The Washington Post, 10 May 2021. Available online: https://www.washingtonpost.com/business/2021/05/10/colonial-pipeline-gas-oil-markets/ (accessed on 16 September 2024).

- Russon, B.M.A. US Fuel Pipeline Hackers “Didn’t Mean to Create Problems”. BBC News. 2021. Available online: https://www.bbc.com/news/business-57050690 (accessed on 16 September 2024).

- Eaton, C.; Volz, D. Colonial Pipeline CEO tells why he paid hackers a $4.4 million ransom. The Wall Street Journal. 2021. Available online: https://www.wsj.com/articles/colonial-pipeline-ceo-tells-why-he-paid-hackers-a-4-4-million-ransom-11621435636 (accessed on 16 September 2024).

- Hasegawa, H.; Yamaguchi, Y.; Shimada, H.; Takakura, H. A Countermeasure Recommendation System Against Targeted Attacks While Preserving Continuity of Internal Networks. In Proceedings of the 2014 IEEE 38th Annual Computer Software and Applications Conference, Västerås, Sweden, 21–25 July 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 400–405.

- Watney, M. Cybersecurity Threats to and Cyberattacks on Critical Infrastructure: A Legal Perspective. Eur. Conf. Cyber Warf. Secur. 2022, 21, 319–327.

- Expert Briefings. Efforts to Curb Ransomware Crimes Face Limits. Emerald Expert Briefings. 2021. Available online: https://www.semanticscholar.org/paper/Efforts-to-curb-ransomware-crimes-face-limits/381be69e12b854fc469c3a9b3e53451c59547e31 (accessed on 16 September 2024).

- Cyber Security Management Symposium. Industrial Engineering and Business Information Systems, University of Twente. Symposium Held at Ravelijn, University of Twente, Enschede, Netherlands. Retrieved from University of Twente Website. 2024. Available online: https://www.utwente.nl/en/bms/iebis/events/2024/11/1821797/cyber-security-management-symposium (accessed on 6 January 2025).

- IBM. 2024: Cost of a Data Breach Report. 2024. Available online: https://www.ibm.com/reports/data-breach (accessed on 13 January 2025).

- OpenAI. An Update on Disrupting Deceptive Uses of AI. OpenAI. 2024. Available online: https://openai.com/global-affairs/an-update-on-disrupting-deceptive-uses-of-ai/ (accessed on 13 January 2025).

- Ilascu, I. Malicious PowerShell Script Pushing Malware Looks AI-Written. Bleeping Computer. 2024. Available online: https://www.bleepingcomputer.com/news/security/malicious-powershell-script-pushing-malware-looks-ai-written/ (accessed on 3 March 2025).

- Toulas, B. Hackers Deploy AI-Written Malware in Targeted Attacks. Bleeping Computer. 2024. Available online: https://www.bleepingcomputer.com/news/security/hackers-deploy-ai-written-malware-in-targeted-attacks/ (accessed on 3 March 2025).

- Rigaki, M.; Garcia, S. Bringing a GAN to a Knife-Fight: Adapting Malware Communication to Avoid Detection. In Proceedings of the IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 24 May 2018; pp. 70–75.

- Heiding, F.; Schneier, B.; Vishwanath, A. AI Will Increase the Quantity—and Quality—of Phishing Scams. Harvard Business Review. 2024. Available online: https://hbr.org/2024/05/ai-will-increase-the-quantity-and-quality-of-phishing-scams (accessed on 13 January 2025).

- Gilbert, C.; Gilbert, M.A. The Impact of AI on Cybersecurity Defense Mechanisms: Future Trends and Challenges. Glob. Sci. J. 2024, 12, 427–441.

- FreakyClown. The Rise, Use, and Future of Malicious AI: A Hacker’s Insight. 2024. Available online: https://wordpress.app.vib.community/le/the-rise-use-and-future-of-malicious-al-a-hackers-insight/ (accessed on 3 March 2025).

- NCSC. The Near-Term Impact of AI on the Cyber Threat; Report; NCSC.GOV.UK. 2025. Available online: https://www.ncsc.gov.uk/report/impact-of-ai-on-cyber-threat (accessed on 3 March 2025).

- Stoecklin, M.P.; Jang, J.; Kirat, D. DeepLocker: How AI Can Power a Stealthy New Breed of Malware. Security Intelligence. 2018. Available online: https://securityintelligence.com/deeplocker-how-ai-can-power-a-stealthy-new-breed-of-malware/ (accessed on 16 September 2024).

- Lin, Z.; Shi, Y.; Xue, Z. IDSGAN: Generative Adversarial Networks for Attack Generation Against Intrusion Detection. In Lecture Notes in Computer Science; Springer International Publishing: Berlin/Heidelberg, Germany, 2022; pp. 79–91.

- Kazimierczak, M.; Habib, N.; Chan, J.H.; Thanapattheerakul, T. Impact of AI on the Cyber Kill Chain: A Systematic Review. Heliyon 2024, 10, e40699.

- Darshan, S.L.S.; Kumara, M.A.A.; Jaidhar, C.D. Windows Malware Detection Based on Cuckoo Sandbox Generated Report Using Machine Learning Algorithm. In Proceedings of the 2016 11th International Conference on Industrial and Information Systems (ICIIS), Roorkee, India, 3–4 December 2016; Volume 7, pp. 534–539.

- Zhou, Y.; Cheng, G.; Yu, S.; Chen, Z.; Hu, Y. MTDroid: A Moving Target Defense-based Android Malware Detector against Evasion Attacks. IEEE Trans. Inf. Forensics Secur. 2024, 19, 6377–6392.

- Yang, K.-C.; Menczer, F. Anatomy of an AI-powered Malicious Social Botnet. arXiv 2023.

- Liang, J.; Wang, R.; Feng, C.; Chang, C.-C. A survey on federated learning poisoning attacks and defenses. arXiv 2023.

- Belova, K. What Is Federated Learning: Key Benefits, Applications, and Working Principles Explained. PixelPlex. 2023. Available online: https://pixelplex.io/blog/federated-learning-guide/ (accessed on 16 September 2024).

- Koelemij, S. Artificial Intelligence: How Can It Help and Harm Us? Industrial Cyber. 2023. Available online: https://industrialcyber.co/expert/artificial-intelligence-how-can-it-help-and-harm-us/ (accessed on 16 September 2024).

- Sims, J. BlackMamba: Using AI to Generate Polymorphic Malware. Hyas. 2023. Available online: https://www.hyas.com/blog/blackmamba-using-ai-to-generate-polymorphic-malware (accessed on 16 September 2024).

- HYAS. EyeSpy: Proof-of-Concept. HYAS. 2024. Available online: https://www.hyas.com/read-the-eyespy-proof-of-concept (accessed on 14 January 2025).

- 13o-bbr-bbq. Machine Learning Security—Deep Generator [GitHub Repository]. GitHub. 2019. Available online: https://github.com/13o-bbr-bbq/machine_learning_security/tree/master/Generator (accessed on 20 December 2024).

- Joshi, J. The Impact of AI on Endpoint Detection and Response. Proficio. 13 May 2024. Available online: https://www.proficio.com/blog/ai-endpoint-detection-and-response-edr/ (accessed on 14 January 2025).

- Becker, N.; Reti, D.; Ntagiou, E.V.; Wallum, M.; Schotten, H.D. Evaluation of Reinforcement Learning for Autonomous Penetration Testing using A3C, Q-learning and DQN. arXiv 2024, arXiv:2407.15656.

- Cyware. SOAR and AI in Cybersecurity: Reshaping Your Security Operations. Cyware. 2023. Available online: https://cyware.com/security-guides/security-orchestration-automation-and-response/from-insight-to-action-how-ai-and-soar-are-reshaping-security-operations-13d9 (accessed on 14 January 2025).

- Zhao, S.; Li, J.; Wang, J.; Zhang, Z.; Zhu, L.; Zhang, Y. attackGAN: Adversarial Attack Against Black-box IDS using Generative Adversarial Networks. Procedia Comput. Sci. 2021, 187, 128–133.

- Hu, W.; Tan, Y. Generating Adversarial Malware Examples for Black-box Attacks based on GAN. In Proceedings of the International Conference on Data Mining and Big Data, Beijing, China, 21–24 November 2022; Springer Nature: Singapore, 2022; pp. 409–423.

- Freed, A.M. AI-Driven XDR: Defeating the Most Complex Attack Sequences. Cybereason. Available online: https://www.cybereason.com/blog/ai-driven-xdr-defeating-the-most-complex-attack-sequences (accessed on 16 September 2024).

- ABN. ABN AMRO First Buyer of Innovative Self-Healing Cybersecurity Software. ABN AMRO. 2021. Available online: https://www.abnamro.com/en/news/abn-amro-first-buyer-of-innovative-self-healing-cybersecurity-software (accessed on 14 January 2025).

- Sibanda, I. Automated Patch Management: A Proactive Way to Stay Ahead of Threats. ComputerWeekly. 2024. Available online: https://www.computerweekly.com/feature/Automated-patch-management-A-proactive-way-to-stay-ahead-of-threats (accessed on 14 January 2025).

- The Hacker News. How AI Is Transforming IAM and Identity Security. The Hacker News. 2024. Available online: https://thehackernews.com/2024/11/how-ai-is-transforming-iam-and-identity.html (accessed on 14 January 2025).

- Komaragiri, V.B.; Edward, A. AI-driven Vulnerability Management and Automated Threat Mitigation. Int. J. Sci. Res. Manag. (IJSRM) 2022, 10, 980–998.

- Vindhya, L.; Mahima, B.G.; Sindhu, G.G.; Keerthan, V. Cyber Attack Surface Management System. Int. J. Adv. Res. Sci. Commun. Technol. (IJARSCT) 2023, 3, 1–9. Available online: https://ijarsct.co.in/Paper9533.pdf (accessed on 14 January 2025).

- Hireche, O.; Benzaïd, C.; Taleb, T. Deep Data Plane Programming and AI for Zero-trust Self-driven Networking in Beyond 5G. Comput. Netw. 2022, 203, 108668.

- Bengio, Y. AI and Catastrophic Risk. J. Democr. 2023, 34, 111–121.

- Pistono, F.; Yampolskiy, R.V. Unethical Research: How to Create a Malevolent Artificial Intelligence. arXiv 2016, arXiv:1605.02817.

- McManus, S. How to Stop AI Agents Going Rogue. BBC. 2025. Available online: https://www.bbc.com/news/articles/cq87e0dwj25o (accessed on 1 September 2025).

- Robinson, B. AI Goes Rogue: Do 5 Things If Your Chatbot Lies, Schemes or Threatens. Forbes. 2025. Available online: https://www.forbes.com/sites/bryanrobinson/2025/07/03/ai-goes-rogue-do-5-things-if-your-chatbot-lies-schemes-or-threatens/ (accessed on 1 September 2025).

- Admin-HTS. What Is CylanceProtect? HTS Blog Articles. 2024. Available online: https://www.hts-tx.com/blog?p=what-is-cylanceprotect-240109 (accessed on 16 September 2024).

- Sikarwar, P.; Gupta, D.; Singhal, A.; Raghuwanshi, S.; Hasan, A. Transformative Dynamics: Unveiling the Influence of Artificial Intelligence, Cybersecurity and Advanced Technologies in Bitcoin. In Proceedings of the 2023 4th International Conference on Computation, Automation and Knowledge Management (ICCAKM), Noida, India, 12–13 December 2023; IEEE: Piscataway, NJ, USA, 2023.

- DarkTrace. Enterprise Immune System. 2018. Available online: https://d1.awsstatic.com/Marketplace/solutions-center/downloads/AWS-Datasheet-Darktrace.pdf (accessed on 16 September 2024).

- Djenna, A.; Bouridane, A.; Rubab, S.; Marou, I.M. Artificial Intelligence-based Malware Detection, Analysis, and Mitigation. Symmetry 2023, 15, 677.

- Shehu, A.; Umar, M.; Aliyu, A. Cyber Kill Chain Analysis Using Artificial Intelligence. Asian J. Res. Comput. Sci. 2023, 16, 210–219.

- Sen, M.A. Attention-GAN for Anomaly Detection: A Cutting-edge Approach to Cybersecurity Threat Management. arXiv 2024, arXiv:2402.15945.

- Taheri, R.; Javidan, R.; Shojafar, M.; Vinod, P.; Conti, M. Can Machine Learning Models with Static Features be Fooled? An Adversarial Machine Learning Approach. Clust. Comput. 2020, 23, 3233–3253.

- Musman, S.; Booker, L.; Applebaum, A.; Edmonds, B. Steps Toward a Principled Approach to Automating Cyber Responses. SPIE Digital Library. 2019. Available online: https://www.spiedigitallibrary.org/conference-proceedings-of-spie/11006/2518976/Steps-toward-a-principled-approach-to-automating-cyber-responses/10.1117/12.2518976.full#= (accessed on 16 September 2024).

- Geiger, A.; Liu, D.; Alnegheimish, S.; Cuesta-Infante, A.; Veeramachaneni, K. TadGAN: Time Series Anomaly Detection Using Generative Adversarial Networks. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020.

- Rizvi, M. Enhancing Cybersecurity: The Power of Artificial Intelligence in Threat Detection and Prevention. Int. J. Adv. Eng. Res. Sci. 2023, 10, 55–60.

- Sankaram, M.; Roopesh, M.; Rasetti, S.; Nishat, N. A Comprehensive Review of Artificial Intelligence Applications in Enhancing Cybersecurity Threat Detection and Response Mechanisms. Glob. Mainstream J. Bus. Econ. Dev. Proj. Manag. 2024, 3, 1–14.

- Freitas, S.; Kalajdjieski, J.; Gharib, A.; McCann, R. AI-driven Guided Response for Security Operation Centers with Microsoft Copilot for Security. arXiv 2024.

- Camacho, N.G. The Role of AI in Cybersecurity: Addressing Threats in the Digital Age. J. Artif. Intell. Gen. Sci. (JAIGS) 2024, 3, 143–154.

- Laxmi, S.; Kumar, S. Improving Cybersecurity: Artificial Intelligence’s Ability to Detect and Stop Threats. Int. J. Adv. Eng. Res. Sci. 2024, 11, 6.

- Huang, Y.; Huang, L.; Zhu, Q. Reinforcement Learning for Feedback-enabled Cyber Resilience. Annu. Rev. Control 2022, 53, 273–295.

- Adewusi, A.O.; Okoli, U.I.; Olorunsogo, T.; Adaga, E.; Daraojimba, D.O.; Obi, O.C. Artificial Intelligence in Cybersecurity: Protecting National Infrastructure: A USA Review. World J. Adv. Res. Rev. 2024, 21, 2263–2275.

- Soussi, W.; Christopoulou, M.; Gür, G.; Stiller, B. MERLINS—Moving Target Defense Enhanced with Deep-RL for NFV In-Depth Security. In Proceedings of the 2023 IEEE Conference on Network Function Virtualization and Software Defined Networks (NFV-SDN), Dresden, Germany, 6 November 2023; pp. 65–71.

- Jajodia, S.; Cybenko, G.; Liu, P.; Wang, C.; Wellman, M. (Eds.) Adversarial and Uncertain Reasoning for Adaptive Cyber Defense: Control-and Game-Theoretic Approaches to Cybersecurity; Springer Nature: Berlin/Heidelberg, Germany, 2019; Volume 11830.

- Reti, D.; Fraunholz, D.; Elzer, K.; Schneider, D.; Schotten, H.D. Evaluating Deception and Moving Target Defense with Network Attack Simulation. In Proceedings of the 9th ACM Workshop on Moving Target Defense, Los Angeles, CA, USA, 7 November 2022; pp. 45–53. Available online: https://arxiv.org/pdf/2301.10629 (accessed on 14 January 2025).

- Clark, A.; Sun, K.; Bushnell, L.; Poovendran, R. A Game-theoretic Approach to IP Address Randomization in Decoy-based Cyber Defense. In Decision and Game Theory for Security; Springer: Berlin/Heidelberg, Germany, 2015.

- Duy, P.T.; Hoang, H.D.; Khoa, N.H.; Hien, D.T.; Pham, V. Fool Your Enemies: Enable Cyber Deception and Moving Target Defense for Intrusion Detection in SDN. In Proceedings of the 2022 21st International Symposium on Communications and Information Technologies (ISCIT), Xi’an, China, 27–30 September 2022; pp. 27–32.

- Bilinski, M.; diVita, J.; Ferguson-Walter, K.; Fugate, S.; Gabrys, R.; Mauger, J.; Souza, B. Lie Another Day: Demonstrating Bias in a Multi-round Cyber Deception Game of Questionable Veracity; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; Available online: https://link.springer.com/chapter/10.1007/978-3-030-64793-3_5 (accessed on 14 January 2025).

- Cohen, S.; Bitton, R.; Nassi, B. Here Comes the AI Worm: Unleashing Zero-click Worms that Target GenAI-powered Applications. arXiv 2024, arXiv:2403.02817.

- Weinz, M.; Schröer, S.L.; Apruzzese, G. “Hey Google, Remind Me to be Phished”: Exploiting the Google (AI) Assistant Ecosystem for Social Engineering Attacks. 2024. Available online: https://www.giovanniapruzzese.com/files/papers/GoogleAssistantPhishing.pdf (accessed on 3 March 2025).

- Lakshmanan, R. Researchers Reveal ‘Deceptive Delight’ Method to Jailbreak AI models. The Hacker News, 23 October 2024. Available online: https://thehackernews.com/2024/10/researchers-reveal-deceptive-delight.html (accessed on 3 March 2025).

- Yahalom, R. What the 2024 CrowdStrike Glitch Can Teach Us About Cyber Risk. Harvard Business Review. 2025. Available online: https://hbr.org/2025/01/what-the-2024-crowdstrike-glitch-can-teach-us-about-cyber-risk (accessed on 1 September 2025).

- Doll, J.; McCarthy, C.; McDougall, H.; Bhunia, S. Unraveling Log4Shell: Analyzing the Impact and Response to the Log4j Vulnerability. arXiv 2025, arXiv:2501.17760.

- Chen, C. Everyone in AI Is Talking About Manus. We Put It to the Test. Artificial Intelligence, MIT Technology Review. 2025. Available online: https://www.technologyreview.com/2025/03/11/1113133/manus-ai-review/ (accessed on 3 March 2025).

| Model Component | Threat | Literature | Explained in the Paper |
|---|---|---|---|
| Aging chain of systems, systems at risk, compromised systems | Simple cyber threats | Science: [28,31,35,36] | Section 2.1 |
| Prevention, detection, response, and recovery capabilities | Simple cyber threats | Science: [28,31,36] Practitioners: [37,38,39,40] | Section 2.1 |
| Risk and profit | Simple cyber threats | Science: [28,31,36,41,42] Practitioners: [43] | Section 2.1 |
| Interaction between compromised systems and systems at risk, as well as systems not at risk (lateral movement and spreading) | Advanced cyber threats (e.g., ransomware) | Science: [44,45] | Section 2.2 |
| Depending on maturity, prevention and detection can limit lateral movement and spread | Advanced cyber threats (e.g., ransomware) | Practitioners: [37,38,39,40] Science: [46,47,48] | Section 2.2 |

| Kill Chain | Adversarial Perspective | Defenders’ Perspective |
|---|---|---|
| Reconnaissance | | |
| Industry: [68,69], Practitioners: [70] | | |
| Weaponization | | |
| Industry: [71,72,73] | | |
| Delivery | | |
| Industry: [74] | | |
| Exploitation | | |
| Media: [75] Science: [65,76,77,78,79] Industry: [80,81] Practitioners: [70,82] | | |
| Installation | | |
| Science: [79], Practitioners: [70,82] | | |
| Command & Control | | |
| Media: [83,84,85] Science: [86,87,88] Practitioners: [82] | | |
| Actions on Objectives | | |
| Media: [83,84,85] Science: [86,87,88] | | |
| Science: [65,66]; Practitioners: [67] | | |

| Kill Chain | Adversarial Perspective | Defenders’ Perspective | |||||
|---|---|---|---|---|---|---|---|
| industry: [91], practitioners: [92,93,97], science: [96] | science: [96,112,114,115], Practitioners: [97], industry: [113,116], | ||||||
| Reconnaissance | Automated attack tool generation, Generative Adversarial Networks (GAN)-science: [94,100,114] | Aggressive Autonomous Agents (AAA), federated learning botnets-industry: [108,109,111], Science: [105], practitioners: [18,110] | Practitioners: [98] | media [117], practitioners [118,119], science: [120,121,122] | Improved double-loop learning-science: [131,132,133,134,135,136,137,138,139,140,141,142] | ||
| Automated Security Hygiene | ||||||
| Weaponization | practitioners: [99], industry: [17], science: [100,101,102,103] | ||||||
| Delivery | |||||||
| Exploitation | |||||||
| Installation | science: [21,102,103,123,124], practitioners: [107], media [125,126] | Deceptive Defense Systems & Moving Target Defense (MTD) Science: [118,119,120,121,122,138,143] Autonomous Defense Systems: | |||||
| Command & Control | practitioners: [95], science: [104] | ||||||
| Actions on Objectives | |||||||
| industry: [111,127,128], science: [112,128,129] | |||||||

| Model Component | Scope | Literature | Publication Year | |||||
|---|---|---|---|---|---|---|---|---|
| | | | <2020 | 2021 | 2022 | 2023 | 2024 | 2025 |
| Automated Attack Tool Generation Federated Learning Botnets | Attacker | [91,92,93,94,95,96,105,106] | 1 | 1 | 4 | 1 | ||
| Autonomous Aggressive Agents | Attacker | [18,100,108,111,112,113,114,115] | 1 | 1 | 2 | 2 | 2 | |
| Various Kill Chain Components Automation | Attacker | [17,98,99,100,101,102,103,104,107] | 2 | 1 | 3 | 2 | 1 | |
| Automated Security Hygiene | Defender | [117,118,119,121,122] | 2 | 1 | 2 | |||
| Deceptive Defense Systems & Moving Target Defense | System State | [143,144,145,146,147,148] | 3 | 2 | 1 | |||
| Autonomous Defense Systems | Defender | [111,113,127,128,129,130] | 1 | 3 | 2 | |||
| Improved (Double Loop) Learning | Defender | [131,132,133,134,135,136,137,138,139,140,141,142] | 2 | 1 | 2 | 6 | ||
| General Perspective on AI and Cyber | Both | [61,90,96,97,116] | 5 | |||||
| Using AI as an Attack Vector | AI | [149,150] | 2 | |||||
| AI Going Rogue | System State | [123,124,125,126] | 1 | 1 | 3 | |||
| Total | | | 11 | 5 | 4 | 14 | 25 | 5 |

| Time | Threat | Identified Structures | Policy Option |
|---|---|---|---|
| 1970s to 2020s | Simple | | |
| Around 2020s | Advanced threats (e.g., ransomware) | | |
| Future | AI-powered threats | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zeijlemaker, S.; Lemiesa, Y.K.; Schröer, S.L.; Abhishta, A.; Siegel, M. How Does AI Transform Cyber Risk Management? Systems 2025, 13, 835. https://doi.org/10.3390/systems13100835

Zeijlemaker S, Lemiesa YK, Schröer SL, Abhishta A, Siegel M. How Does AI Transform Cyber Risk Management? Systems. 2025; 13(10):835. https://doi.org/10.3390/systems13100835

Chicago/Turabian Style: Zeijlemaker, Sander, Yaphet K. Lemiesa, Saskia Laura Schröer, Abhishta Abhishta, and Michael Siegel. 2025. "How Does AI Transform Cyber Risk Management?" Systems 13, no. 10: 835. https://doi.org/10.3390/systems13100835

APA Style: Zeijlemaker, S., Lemiesa, Y. K., Schröer, S. L., Abhishta, A., & Siegel, M. (2025). How Does AI Transform Cyber Risk Management? Systems, 13(10), 835. https://doi.org/10.3390/systems13100835