1. Introduction

This paper presents data from, and an evaluation of, the automation processes introduced in a development project at KONGSBERG over a five-year period (2018–2023) for a complex cyber-physical system. We extracted the test data directly from the test database of the case company (referred to as the company from here on), which gives us high confidence in these data.

1.1. Background

The company has to execute projects faster and in parallel to cope with its future market situation, while the availability of human resources remains a challenge. The company needs to increase test coverage for all projects compared to today’s situation. It also needs to improve the effectiveness and efficiency of test result analysis to handle the number of tests on time. The company must present test result documentation on time for all relevant testing, in formats such as pickle, Markdown, and JSON for further automatic processing and in PDF format for easy sharing both internally and externally. The company needs to document all test results, not only the mandatory part for customer delivery.

KONGSBERG is a large company cluster spanning multiple industry fields with its headquarters in Kongsberg and locations in forty other countries. The company was founded in 1814 and has approximately 13,000 employees as of 2024. The KONGSBERG group consists of four companies [1].

1.2. Scope

The large development project under investigation in this study started about thirty years ago and has undergone several product upgrades. From the first commercial survey, KONGSBERG has been at the forefront of autonomy, with the system being capable of uninterrupted operations for its entire mission [1].

The context of the research is within the maritime domain. The product we use in our case study is an Autonomous Underwater Vehicle (AUV) on a mission to map the seabed using a camera. See Figure 1 for an illustration of the AUV from KONGSBERG.

We aim to mitigate the bottlenecks identified in the company’s test process by conducting research within the company. We selected the following automation processes as suitable for the company’s test process:

- (1) test setup using Orthogonal Arrays (OAs);

- (2) test execution using machine readable procedures;

- (3) test result analysis using scripts;

- (4) test document generation using a framework for collecting relevant data and generating specified documentation.

The scripts for analyzing test results use Measures of Effectiveness (MoEs) and Measures of Performance (MoPs) as acceptance criteria for setting the verification status on test cases to be compliant or not. We did not select Machine Learning as we assessed that to be better suited for a later stage when we have more data available.
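As a minimal sketch of how such a script might set the verification status, assume each MoE or MoP is reduced to a numeric value that is checked against a lower and an upper bound. The names `Criterion` and `verify`, as well as the example measures and limits, are hypothetical and are not taken from the company's scripts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """Acceptance criterion for one measure (hypothetical structure)."""
    name: str
    lower: float
    upper: float

    def is_met(self, value: float) -> bool:
        return self.lower <= value <= self.upper


def verify(measured: dict, criteria: list) -> str:
    """Return 'compliant' only if every criterion has a measured value within bounds."""
    for criterion in criteria:
        value = measured.get(criterion.name)
        # A missing measurement is treated as a failed criterion.
        if value is None or not criterion.is_met(value):
            return "not compliant"
    return "compliant"


# Illustrative measures and limits only.
criteria = [
    Criterion("seabed_coverage_pct", 95.0, 100.0),  # MoE-like measure
    Criterion("cross_track_error_m", 0.0, 5.0),     # MoP-like measure
]
status_ok = verify({"seabed_coverage_pct": 97.2, "cross_track_error_m": 1.8}, criteria)
status_bad = verify({"seabed_coverage_pct": 88.0, "cross_track_error_m": 1.8}, criteria)
```

Treating a missing measurement as non-compliant is a conservative design choice in this sketch, so that an incomplete log cannot silently pass a test case.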

1.3. Research Objective and Questions

The goal of this research is to improve the test coverage for the company to facilitate detection of detrimental weak emergent behavior in their systems. We look to achieve this goal using automation processes based on findings from the literature review [2]. We pose the following research question (RQ) and sub-research questions (SRQ) for this case study:

RQ: How can automation of test processes improve the verification of complex systems?

SRQ1: How can automation of test setup improve the verification of complex systems?

SRQ2: How can automation of test execution improve the verification of complex systems?

SRQ3: How can automation of test result analysis improve the verification of complex systems?

SRQ4: How can automation of test document generation improve the verification of complex systems?

SRQ5: Can the use of automated test processes for complex systems have a positive impact on usage of subject matter expert hours?

Figure 2 illustrates our research design. Haugen and Mansouri [3] support the first part, problem exploration, and Haugen et al. [2] support the second part, the literature review. The aim of this paper was to do a gap analysis between different project milestones before and after implementation of automation measures within four areas of interest, being (1) test setup, (2) test execution, (3) test result analysis, and (4) documentation.

1.4. Contributions of the Paper

The authors explored automation processes in an industrial case to improve verification of complex systems. Many organizations recognize manual testing as a bottleneck. The authors advocate combining strengths of human ability and machine capabilities to improve the effectiveness (what) and efficiency (how) of the test process. The research will contribute to the body-of-knowledge with findings from a case study in the Norwegian high-tech industry company KONGSBERG. Adding data from specific industrial contexts to the body-of-knowledge is a continuous effort for the research community to push the research front further and generalize findings. There is a recent boom in studies related to autonomous systems [4,5,6,7], which this research fits into.

The selected approach should be generally applicable to organizations that develop complex systems and that have the potential to improve their human–machine task balance. The potential value of using this approach is to increase the probability of on-time project delivery and reduce project delays.

1.5. Paper Structure

We have structured the rest of this paper as follows:

Section 2 provides a relevant state-of-the-art theoretical foundation and rationale for this research.

Section 3 presents the methodology used for this research paper.

Section 4 describes our selected approach.

Section 5 provides a quantified comparison between two test campaigns: the first based on a manual test process and the second based on an automated test process.

Section 6 gives a short financial assessment in the form of SME hours.

Section 7 provides a synthesis of the results, addressing the research questions. Finally, Section 8 summarizes the paper, provides gained knowledge, defines limitations of the study, and proposes future research.

2. Literature Review

We searched the relevant literature for potential benefits and concerns related to automation processes, and used the findings to identify a research gap and position our contribution.

Undesired system behavior typically emerges in complex systems as a surprise because of its non-intuitive nature, caused by interaction effects we cannot trace to any specific system part. The philosophical term emergence traces back to the Greek philosopher Aristotle (384–322 B.C.) and the saying “the whole is greater than the sum of its parts” [

8]. This undesired non-intuitive system behavior is related to the terms detrimental emergent behavior [

9] and weak emergent behavior [

10]. Mittal [

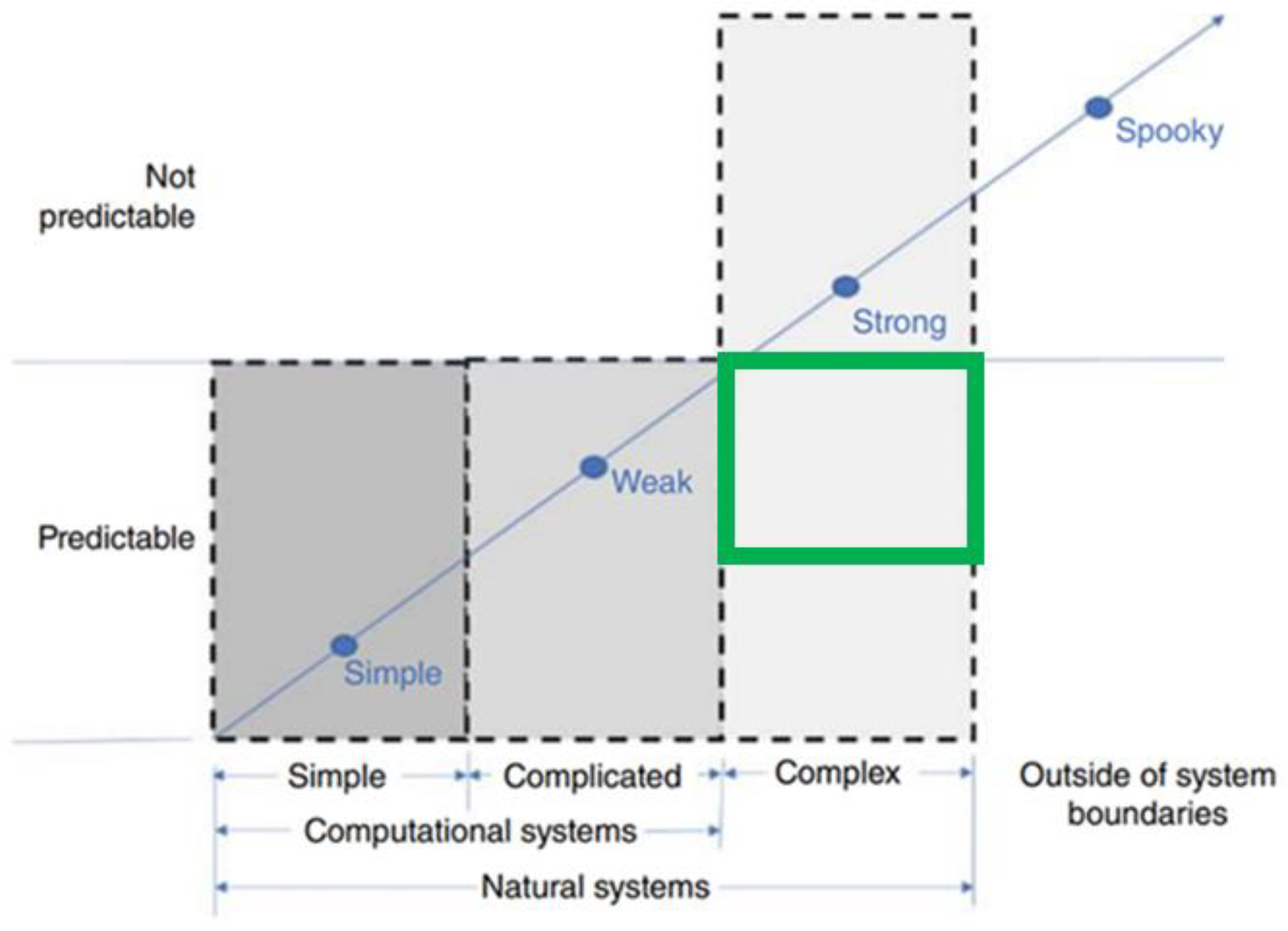

10] relates emergent behavior to complexity as these two terms are highly connected. See

Figure 3 for an overview of the relation between emergent behavior and complexity, including a green box showing where we focus our efforts in this research. The x-axis holds the complexity categories while the diagonal holds the corresponding emergent behavior categories. The Cynefin framework [

11] describes how to deal with the various levels of complexity in a system.

By exploiting the strengths of both humans and machines, the company can potentially increase test process efficiency. Automating test execution and test result analysis can remove the bottlenecks in these two stages of the test process [3,12]. Utilizing metrics like MoEs and MoPs can give us information about the system that could become valuable feedback to system top level design [13]. Raman [14,15,16,17,18,19] suggests an automation approach using Machine Learning (ML) techniques when monitoring MoEs and MoPs to look for changes that give or could trigger undesired system behavior.

Several research works claim to reduce the test time used for manual testing by more than 90% through automating the test procedures that are suitable for automation [20,21]. Wechner et al. [4] claim to have validated a strategy for automated testing to efficiently verify and validate a complex system, such as an aircraft, utilizing a generic design, allowing reusability with minimum effort. Tools for automation with built-in simulators become essential for verifying and validating behavior logic in a reasonable amount of time [22]. However, a key challenge is the need for abstractions of the micro and macro levels, which is difficult to achieve in an automated manner. Hence, most approaches rely on a post-mortem observation of the simulation by a system expert [23].

Cho et al. [5] conducted a case study on an autonomous robot vehicle for automatic generation of metamorphic relations for a cyber-physical system-of-systems using a genetic algorithm. They use metamorphic relations among multiple inputs and corresponding outputs of the system to address the challenge of system behavior uncertainties when operating in the physical environment. They propose a method to automatically generate metamorphic relations based on operational test data logs, applying a genetic algorithm to adapt the metamorphic relations generated by the engineers. They claim that this algorithm increases test effectiveness through capturing system behavior, including emergent behavior, more realistically than manually generated metamorphic relations. Also, they claim that this algorithm increases test efficiency as engineers can obtain metamorphic relations with minimal manual effort.

Zhang et al. [6] conducted an industry case study researching scenario-driven metamorphic testing for autonomous driving simulators. Their focus was on validation of the simulators using a scenario-testing framework where testers iteratively can improve test cases and metamorphic relations to confirm detected issues. They claim that this scenario-testing framework can significantly reduce the time and effort required for testers to prepare and test a new system.

Barbie and Hasselbring [7] introduce a concept using digital twin prototypes for automated integration testing, allowing for an agile verification and validation process. They claim to reduce bottlenecks in system integration testing by enabling fully automated integration testing through digital twins, avoiding costly hardware and manual testing.

The literature shows clear benefits of automation processes, and the company has the potential to improve its human–machine task balance to increase the efficiency of its test process. We use automated testing as we see in Wechner et al. [4], cyber-physical systems as referred to in Cho et al. [5], and test scenarios in line with Zhang et al. [6]. We do not use digital twins as defined in Barbie and Hasselbring [7], but we use simulators that could evolve into digital twins if we connect them to the real system. There is a gap in the body of knowledge on case studies providing data from various industry domains researching the benefits of different automation processes to build a solid foundation for generalization. The aim of this research is to fill a bit of this gap by providing a new case study from a maritime industry domain researching the benefits of a set of defined automation processes.

3. Methods

This research was conducted in the company based on an industry-as-laboratory approach [

24,

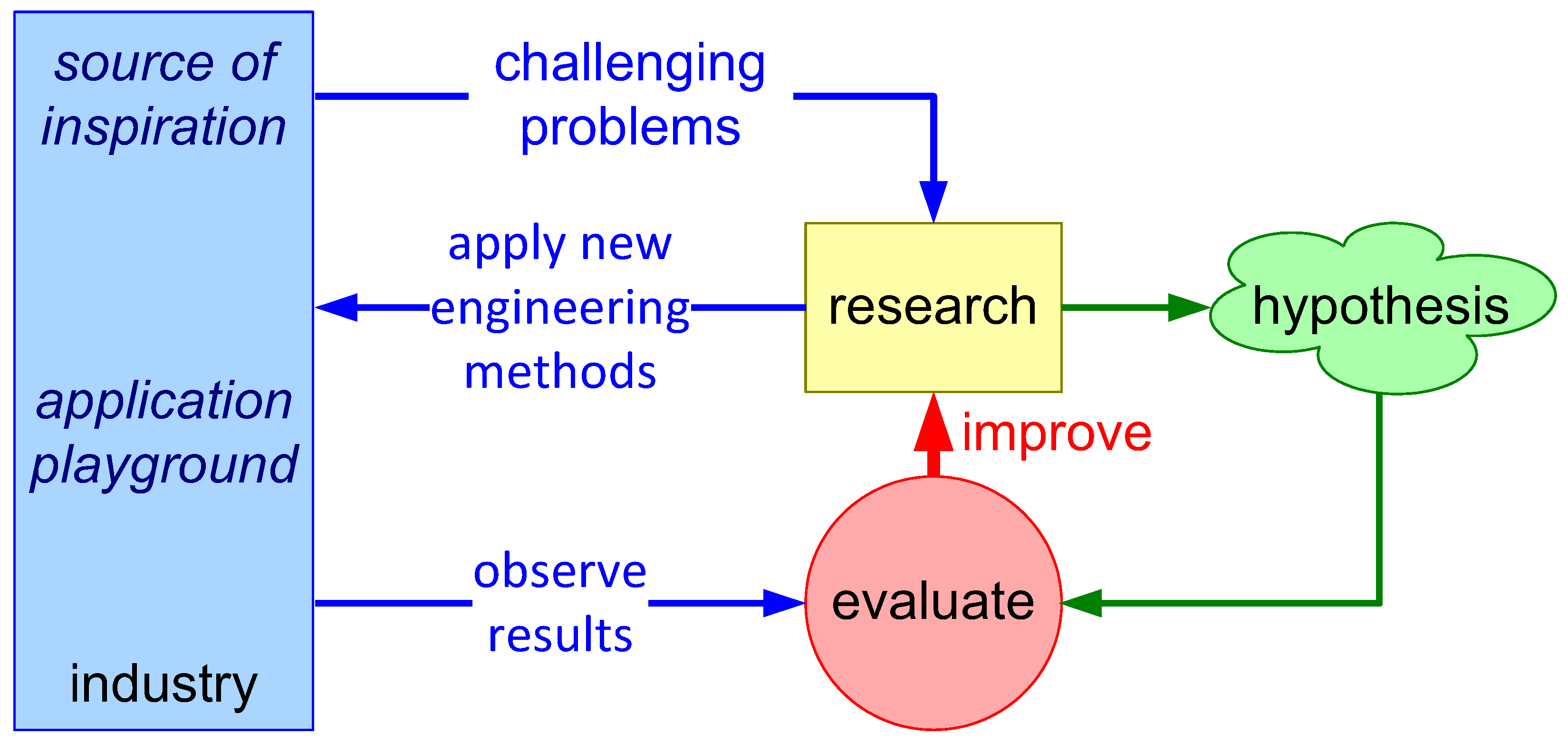

25]. This approach entails performing practical research in an industrial context, providing new research data to the body of knowledge. The main condition for using this approach is that research can benefit from collecting data in an industrial context. See

Figure 4 for an illustration of the industry-as-laboratory approach. We replace the hypothesis in

Figure 4 with a set of research questions. Also, this research used the action research strategy through active collaboration in the industry to solve an identified problem [

26]. In the company, one of the researchers had access to the system integration test group, relevant SMEs (testers and analysts), and historic test data. The nature of the research and the mentioned industry connection provided a rationale for using the industry-as-laboratory approach.

“The advantage with such an approach is the realism introduced into the research. Practical challenges in the theoretical frameworks can be hard to detect, unless one sees how it plays out in reality. One challenge emerging from such an approach is the potential of noise from company-specific problems that we cannot directly link to the research conducted.”

The digitalization process to automate earlier manual tasks included several steps of scripting. Developers use Python to script files for test setup transfer, test execution, test result analysis, and test documentation. The company stores test input and test output in separate distributed relational databases, with the test input data under configuration management in a company-developed system called Chaman. The test output is accessible from the company-developed test web application. The reports created by the Highly Automated Document System (HADES) are stored in a product document management (PDM) system, named Enovia, from Dassault Systèmes [27].

4. Case Study

We aim to generalize the case study for a complex cyber-physical system as the AUV is representative of other products across the KONGSBERG portfolio. The real case we investigated contains classified and sensitive data that we cannot share outside the company.

Test setup: We looked at automating today’s manual test setup for system integration testing based on OAs [28]. OAs are a mechanism to increase test coverage without proportionally increasing the number of tests. Since we want to test more of the parameter space, we need automation. A previous case study in the company showed promising results using OAs [29]. The researchers used the Minitab tool [30] to design an experiment based on the Taguchi method [28]. Then, the company transferred the experiment data in a machine-readable format to a simulator.

Test execution: We investigated automating today’s manual tester actions, including triggers for scenario actions. The company made manual actions machine readable (see Table 1) and implemented support for this in one of the company’s test arenas.

Test result analysis: We researched automating today’s manual analysis. The company scripted checks that Subject Matter Experts (SMEs) perform manually when investigating log files for different data to compare against defined acceptance criteria, making machines able to execute these checks. In this case study, we focused on a subset of these scripts. This subset consisted of thirty out of the total one hundred system requirements. Then, the company transformed the analysis results using a Python [31] script making a format suitable for regression analysis in Minitab. This transformation of analysis results involved a translation between a defined set of strings and a defined set of numbers (e.g., from [mission completed] to [1]).
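The string-to-number translation described above could look roughly like the following sketch. Only the mapping from "mission completed" to 1 comes from the text; the second entry, the name `OUTCOME_CODES`, and the function `encode_outcomes` are hypothetical placeholders.

```python
# Defined set of strings mapped to a defined set of numbers.
OUTCOME_CODES = {
    "mission completed": 1,   # example given in the text
    "mission aborted": 0,     # hypothetical additional entry
}


def encode_outcomes(outcomes):
    """Translate analysis result strings into numbers for regression analysis."""
    unknown = sorted({o for o in outcomes if o not in OUTCOME_CODES})
    if unknown:
        # Fail loudly rather than guessing a code for an undefined string.
        raise ValueError(f"no numeric code defined for: {unknown}")
    return [OUTCOME_CODES[o] for o in outcomes]


encoded = encode_outcomes(["mission completed", "mission aborted", "mission completed"])
```

Rejecting strings outside the defined set keeps the numeric data clean; a silently defaulted code would distort a later regression analysis.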

Documentation: We examined automating today’s manual documentation process. The company both tested and used its developed HADES. Analyzing results and generating reports are the functions of the HADES.

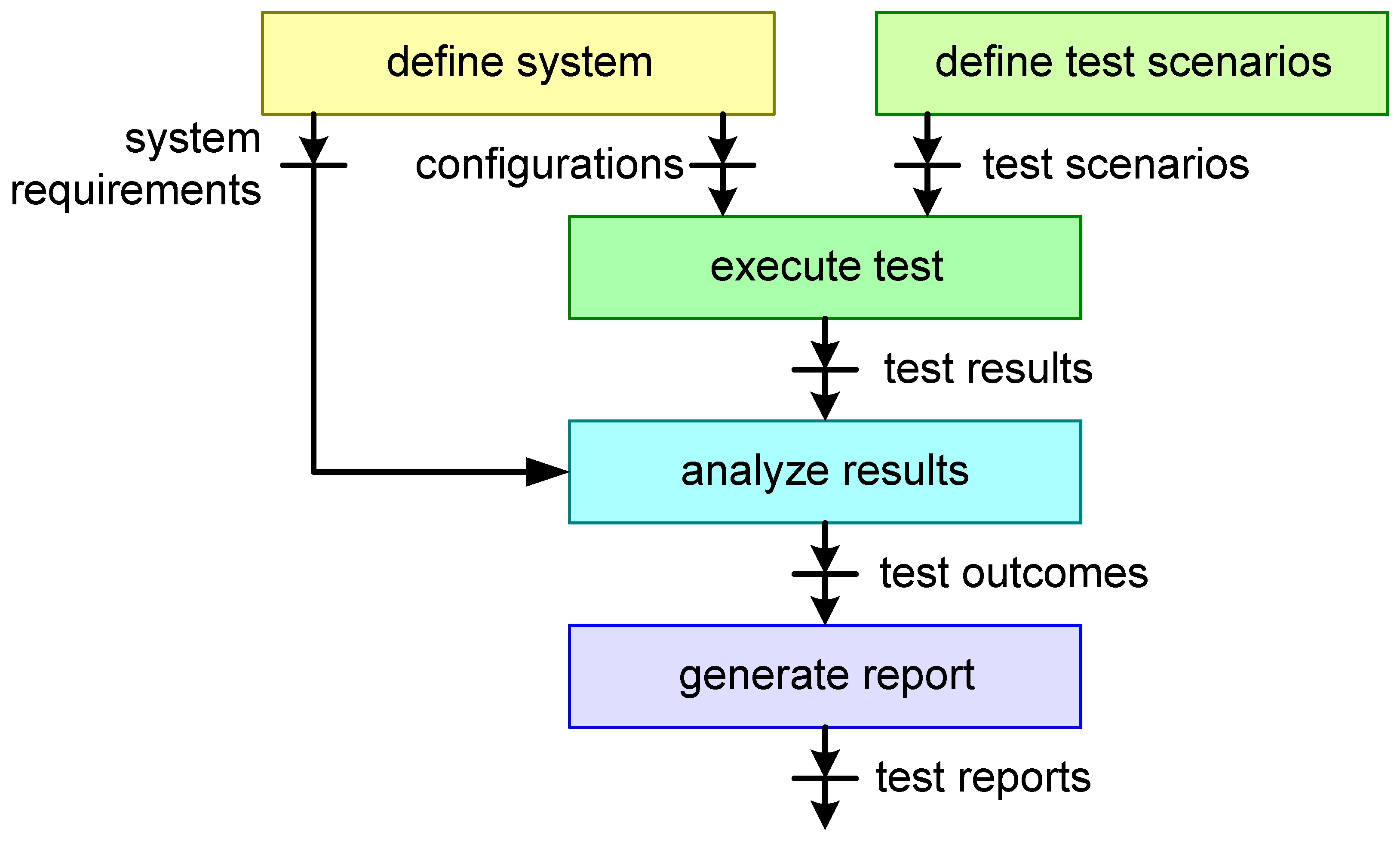

Figure 5 shows the complete test process in a data flow diagram where the company stores artifacts in a repository facilitating further testing, processing, analysis, and reporting.

A test scenario and system configuration provide input to the HADES for automatic creation of test descriptions. The test operator uses a test description as input to the simulator to execute tests. The test operator stores the test results from the simulator in a test results database, which provides input to the HADES for automatic analysis of test results. The HADES creates the test outcome based on test results and system requirements with acceptance criteria. The HADES then uses the test outcome from the analysis process in automatic creation of test reports. Test outcomes are machine readable, while test reports are human readable.
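To illustrate the distinction between a machine-readable test outcome and a human-readable test report, the following sketch serializes the same outcome records to JSON and renders them as a Markdown table. It does not reflect the actual HADES interface; the record fields, requirement identifiers, and the function `render_report` are assumptions made for illustration.

```python
import json


def render_report(outcomes):
    """Render outcome records as a human-readable Markdown table."""
    lines = [
        "| Requirement | Status | Rationale |",
        "|---|---|---|",
    ]
    for outcome in outcomes:
        lines.append(
            f"| {outcome['requirement']} | {outcome['status']} | {outcome['rationale']} |"
        )
    return "\n".join(lines)


# Hypothetical outcome records produced by an analysis step.
outcomes = [
    {"requirement": "REQ-001", "status": "compliant", "rationale": "coverage within limits"},
    {"requirement": "REQ-002", "status": "not compliant", "rationale": "steep terrain not covered"},
]

machine_readable = json.dumps(outcomes, indent=2)  # for further automatic processing
human_readable = render_report(outcomes)           # for review and sharing
```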

5. Results

The test scope comprised approximately one hundred system-level requirements with test as the verification method. The sub-system level used system level tests for sub-system verification. The authors have included one sub-system in this research to show data for one typical sub-system, not for all sub-systems. We present the results from two project test campaigns (the initial project’s Final Design Review (FDR) and one later project’s updated FDR) in this section. We compare these two test campaigns looking at the period before a delivery milestone focusing on testing. System integration testing is the first step in system level testing, where the company through a trial-and-error approach ensures maturity before more formal testing. System testing is the second step in system level testing, where the company through a test plan ensures compliance with the system design. System interface testing is a part of system testing, focusing on testing input/output. System verification testing is the third step in system level testing, where the company, through a system verification plan, ensures compliance with the system requirements.

5.1. Benchmark Results

The benchmark results are based on manual tasks performed during a major project milestone.

Test setup: The company prioritized testing for verifying system requirements, based on the system verification plan. The development project had limited test coverage (10–30%) of the system design considering the parameter space of interest for the system. The time for the development project to set up a set of tests was low (2–4 h), only selecting and prioritizing among the described system verification tests for system integration testing.

Test execution: See Table 2 for an overview of the number of tests executed and the duration of the system test campaign. The system test campaign we used is the functional testing part of FDR. The company had set the system tests finished milestone to February 2018 and the verification tests finished milestone to April 2018. However, the company ended up performing these serial test phases in parallel to finish on time, working double shifts to do so.

The project allowed sub-system verification testing to finish in May 2018, as they still had time before the FDR milestone meeting in June 2018. The project continued testing in June 2018 to further increase their confidence in the test reports delivered, in case of any discussions during the FDR milestone meeting.

A search of the company’s test result database revealed a maximum of 546 tests executed in one month in a hectic period (February 2018); see Table 2. A hectic period is, here, a period with focus on testing in the development project. The testers used two to four test arenas of two types, System Integration Lab closed loop (SILc) and System Integration Lab open loop (SILo), dependent on their availability. SILc includes close to all hardware (HW), facilitating full operational testing. SILo includes less HW than SILc, only facilitating preparation for operation type of testing. Another search in the company’s test result database in a less hectic period (October 2019 to March 2020) revealed an average of fifty tests executed per month [12].

The test operators performed tests manually, which was resource demanding. One full test in SILc involves two operators sitting in the system lab for thirty minutes including pre- and post-work, without the opportunity to do other types of work. One full test in SILo involves the same effort as in SILc but takes only about half the time.

Test result analysis: SMEs reported that they on average used 39% of their time for analysis work in hectic periods, but only 4% of their time in non-hectic periods [12]. This analysis effort only covers 9% of tests executed, not considering potential overlap, leaving 91% of test data not used for analysis [12]. In total, 8% of the analyses conducted did reveal an issue [12].

Analysts reported varying times to detect an error, but they estimated an average of two hours. Furthermore, they reported the time to find the causal factor to vary between weeks and months [12]. One analyst conducting analysis for fifteen hours per week during hectic periods is then likely to detect seven to eight errors per week.
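The weekly estimate follows from simple arithmetic, sketched below. The 37.5 h working week is an assumption borrowed from the report-writing estimate used elsewhere in this paper; the 39% share and the two-hour average are the figures reported above.

```python
WEEK_HOURS = 37.5               # assumed length of one working week
HECTIC_ANALYSIS_SHARE = 0.39    # share of SME time spent on analysis in hectic periods
HOURS_PER_DETECTED_ERROR = 2.0  # analysts' estimated average time to detect an error

# Roughly fifteen hours of analysis per week.
analysis_hours = WEEK_HOURS * HECTIC_ANALYSIS_SHARE

# Roughly seven to eight detected errors per analyst per week.
errors_per_week = analysis_hours / HOURS_PER_DETECTED_ERROR
```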

Table 3 shows errors and numbers that a selection of seven analysts reported during a six-month integration testing period (October 2019 to March 2020) [12]. In addition to these 75 reported errors, we found 385 other errors from a project team status list used by the project team to follow up on issues during the system testing period (February and March 2018) and verification testing period (March and April 2018).

The test result analysis revealed detrimental emergent behaviors like failure to plan missions, running out of fuel, not covering steep terrain areas, and drifting away from the planned trajectory [29]. See Figure 6 for an emergent behavior example where the AUV camera does not cover an area with steep terrain.

Documentation: The documentation part of this case study consisted of two test reports, one at system level, and one at sub-system level. SMEs created both documents manually. The documents held compliance status and rationale for all one hundred system requirements with test as the verification method. SMEs estimated the time spent creating these two test reports at one week (37.5 h) each.

5.2. Comparison Results

The comparison results are based on automated tasks performed during three project update increments, which we can see as regression testing [32].

Test setup: The company has not tried automating the requirements-based test setup but has tested automating test setup based on OAs. OAs and automating test setup will both improve the company’s test process. We tried using one OA to set up testing to increase the test coverage of the parameter space of interest for the system (70–90%) and did so by selecting a suitable OA from the Taguchi framework [28]. For example, we used an L25 OA to meet our needs. We wanted to test six parameters at five distinct levels each, which gave 15,625 combinations (entire system parameter space). However, the L25 OA provided us with sufficient test coverage in only twenty-five tests (parameter space of interest). To set up a test execution according to this matrix for our company test arena, we exported this OA to the simulator and generated twenty-five test cases from the L25 OA setting values in a simulator input file (simulated environment data).
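To make the reduction from 15,625 combinations to twenty-five tests concrete, the sketch below builds a 25-run, six-column, five-level orthogonal array using a standard construction over the integers modulo 5. It is not the Minitab workflow used in the study, and its row and column ordering may differ from the published Taguchi L25 table. The parameter names are hypothetical, and level indices 0 to 4 stand in for real parameter values.

```python
from itertools import combinations, product

LEVELS = 5  # five distinct levels per parameter


def build_l25():
    """Build a 25-run, 6-column, 5-level orthogonal array over integers mod 5."""
    return [
        [i, j] + [(i + k * j) % LEVELS for k in range(1, LEVELS)]
        for i, j in product(range(LEVELS), repeat=2)
    ]


def is_orthogonal(array):
    """True if every pair of columns contains each level combination exactly once."""
    n_cols = len(array[0])
    return all(
        len({(row[a], row[b]) for row in array}) == LEVELS * LEVELS
        for a, b in combinations(range(n_cols), 2)
    )


# Hypothetical simulator parameters (not the parameters used in the study).
PARAMETERS = ["param_a", "param_b", "param_c", "param_d", "param_e", "param_f"]

l25 = build_l25()
test_cases = [dict(zip(PARAMETERS, row)) for row in l25]  # one dict per test
full_factorial = LEVELS ** len(PARAMETERS)                # entire parameter space
```

With twenty-five rows and twenty-five possible level pairs per column pair, the orthogonality check confirms that every two-parameter combination is exercised exactly once, which is the property that lets the array stand in for the full factorial design when studying main effects.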

Test execution: The test coverage included thirty system level test cases of the one hundred existing, plus nineteen more system level interface test cases. The company down-selected test cases manually to avoid similar types of testing, only testing distinctly different functionality. See Table 4 for an overview of tests executed and the period of the regression test campaign. The company still performed testing in the test arenas allocated for verification, but they also performed tests in an alternate test arena built for automatic testing. A test in the alternate test arena involved the test arena automatically executing the test according to a procedure (machine-readable version of the earlier manual list of actions). This alternate test arena is a simulator consisting of SW and digital models that can run 24/7 without human intervention. The main benefit of using this alternate test arena was that the operator could do other tasks in the office while the test arena was executing the test.

Test result analysis: The company automated the information flow from the test results database to the HADES, removing this earlier manual step and avoiding new test results not being analyzed by SMEs. The company used the HADES to analyze the test results, which they could do for a specified set of test cases or for all test cases being part of the test report. The time to conduct one analysis was about five seconds. Analyzing thirty interface and system level test cases took three minutes.

Table 5 shows forty-three errors that the company detected during this regression test period. Out of sixty-four system and sub-system level tests, they discovered forty-three errors. This gives a detection rate of 0.67 errors per test. In comparison, they discovered 385 errors during 467 system and verification tests in the benchmark test campaign, giving a detection rate of 0.82 errors per test. The regression test campaign based on automated tasks has a detection rate of 0.67 errors per test when the company could expect zero, which is a significant contribution of the automation effort. We found that about 25% of the errors originated from new functionality/interfaces (11 errors in 21 tests) while the remaining 75% or so came from updated functionality/interfaces (32 errors in 43 tests) previously verified.
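The detection rates and error shares above can be reproduced from the reported counts, as in this short sketch. The helper name `detection_rate` is illustrative.

```python
def detection_rate(errors, tests):
    """Errors detected per executed test."""
    return errors / tests


regression_rate = detection_rate(43, 64)    # automated regression campaign
benchmark_rate = detection_rate(385, 467)   # manual benchmark campaign

# Split of the forty-three regression errors by origin.
new_errors, new_tests = 11, 21
updated_errors, updated_tests = 32, 43

new_share = new_errors / (new_errors + updated_errors)          # roughly a quarter
updated_share = updated_errors / (new_errors + updated_errors)  # roughly three quarters

new_rate = detection_rate(new_errors, new_tests)
updated_rate = detection_rate(updated_errors, updated_tests)
```

The per-category rates suggest that previously verified functionality produced at least as many errors per test as new functionality in this campaign, which supports the case for regression testing.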

Documentation: The company used the HADES to create three test reports at system level, covering compliance and rationale for forty-nine interface and system level test cases. The time the HADES needed for creating these three test reports was approximately 1.5 min per report.

6. Cost Estimate

The company will have to invest money to implement and maintain the proposed automation processes. The cost of implementing and maintaining the different automation processes is dependent on the number of SME hours needed and the cost of one SME hour. The total number of SME hours for test result analysis depends on the SME hours needed for initial scripting, script maintenance, and manual analysis; see Equation (1).
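Read additively, the relation described above can be sketched as follows. The symbol names are illustrative assumptions rather than the paper's own notation: $H_{\text{total}}$ is the total number of SME hours for test result analysis, $H_{\text{init}}$ the hours for initial scripting, $H_{\text{maint}}$ the hours for script maintenance, and $H_{\text{manual}}$ the hours for manual analysis. The second line assumes that the cost $C$ is simply the number of hours multiplied by the cost of one SME hour, $c_{\text{SME}}$.

```latex
\begin{align}
H_{\text{total}} &= H_{\text{init}} + H_{\text{maint}} + H_{\text{manual}} \\
C &= H_{\text{total}} \cdot c_{\text{SME}}
\end{align}
```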

The benchmark results in our case study revealed poor test result analysis coverage in the system integration phase. These results correspond well with survey results from one company in KONGSBERG, where SMEs stated they only use 4% of their time on test result analysis [12]. Furthermore, the test result analysis coverage in the system and verification test phases was high, which also corresponds with the survey, stating that SMEs use 39% of their time on test result analysis [12].

SMEs in the company use about 12% of their time to create scripts for automatic analysis and about 6% of their time on average to maintain these scripts through different project increments. We have extracted these numbers from the company’s time management system. Furthermore, we assessed that SMEs only use about 4% of their time on test result analysis in the system- and verification phases when automatic test result analysis is in place. The latter, we base on an estimated 90% test analysis coverage in the system integration phase based on automatic analysis compared to only 9% coverage as previously reported [12].

The above numbers clearly show the benefit of the company investing in the automation process for test result analysis. For establishment of the automatic test results analysis framework, we have a workload ratio of 3:1 compared to performing the analysis manually. During maintenance of this framework through different project increments, we have a workload ratio of 3:2 compared to manual efforts. Using this framework in the system testing and verification testing phases, we have a workload ratio of 1:10 compared to manual operations. Treating these three ratios equally, we achieve a total ratio of 7:13 (a 46% reduction) in favor of the automation process. We found the ratio to be 26:35 (a 26% reduction) for the company project update. The higher cost of detecting errors late rather than early in project development further strengthens this assessment. A case study from Carnegie Mellon University claims that the cost of detecting errors in the system- and verification phases is 2–3 times higher than in the previous system integration phase [33]. Also, the systems engineering handbook claims that the cost of extracting defects in late project testing is 500–1000 times higher than the cost in earlier testing [34].
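The combined ratio can be reproduced by summing the automated and manual sides of the three phase ratios, as sketched below. The phase labels and variable names are illustrative. The per-phase ratios correspond to the reported time shares (12% against 4%, 6% against 4%, and 4% against 39%, the last rounded to 1:10).

```python
from fractions import Fraction

# (automated workload, manual workload) per phase, as stated in the text.
phase_ratios = {
    "establishment": (3, 1),
    "maintenance": (3, 2),
    "system_and_verification_use": (1, 10),
}

automated_total = sum(auto for auto, _ in phase_ratios.values())
manual_total = sum(manual for _, manual in phase_ratios.values())

# Workload reduction relative to the manual process.
combined_reduction = 1 - Fraction(automated_total, manual_total)
project_reduction = 1 - Fraction(26, 35)  # ratio reported for the project update

combined_pct = round(float(combined_reduction) * 100)
project_pct = round(float(project_reduction) * 100)
```

Summing the two sides gives each phase a weight proportional to its stated workload; other ways of combining the ratios would give different totals.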

7. Discussion

This section answers the research questions considering the results and the cost estimate.

SRQ1: How can automation of test setup improve the verification of complex systems?

The company performed a minor test related to test setup where we used an L25 Taguchi OA, which showed promising results in supplying test data input to twenty-five tests without the need to set up the twenty-five tests manually. The OA approach increased the test coverage by about 60 percentage points (from 10–30% to 70–90%) in this case study. The company can set up testing effectively (test the right things) and efficiently (test the right way) through this approach, combining statistical based experiment design and automation.

SRQ2: How can automation of test execution improve the verification of complex systems?

The company made a small-scale testing effort looking at how the company could run tests automatically in a suitable test arena, which showed superior results. The test arena conducted the tests while the two operators could do other types of work anywhere, saving up to 1 h of manual work per test. The company can benefit from a potential increased test coverage using the system scenario sequencer (automated test arena), resolving the bottleneck with availability of test arenas in the system test lab.

SRQ3: How can automation of test result analysis improve the verification of complex systems?

The company performed a large-scale test of automated test result analysis scripts, which has proven to be both beneficial and challenging. The company spent a significant amount of time creating these scripts, but the potential gain is ever growing as we iterate and do regression testing. An automated analysis needs about five seconds while a manual analysis requires on average about two hours to complete. However, we have seen a weakness in the maintainability of these scripts as we apply changes to the system interfaces. The company must update the scripts manually for them to still work as intended. The company has the potential to increase the analysis coverage through use of scripts and the HADES, resolving the main bottleneck of available SMEs for manual analysis. We see the importance of regression testing in the comparison test campaign, where 75% of the errors came from functionality already verified in the benchmark test campaign.

SRQ4: How can automation of test document generation improve the verification of complex systems?

The company has used the HADES in its effort to automate analysis and documentation. In this case, we saw that the HADES is helpful in significantly reducing the time for documentation related to testing. The company was able to produce both test descriptions and test reports during a one-month test campaign, which they would never have been able to do previously. Where the HADES uses 1.5 min to generate a document automatically, an SME uses about 37.5 h to do the same manually. Manually creating one test description prior to testing and one test report after testing consumes two weeks, which only leaves two weeks for testing in a one-month test campaign. However, the review process is the same whether the development project automatically or manually generates documents. Typically, a review process takes twelve days (two days to prepare the peer review, two days to conduct the peer review, one week to update the document, and one day to release the document through a formal review).

SRQ5: Can the use of automated test processes for complex systems have a positive impact on usage of subject matter expert hours?

The implementation of automated test processes and further maintenance of these will require a significant amount of SME hours initially and throughout the project development. However, the company can reduce the cost related to the amount of SME hours in the final stages of the project by more than the investment cost. Based on a previous survey in a company in KONGSBERG [12] and data from the company’s time management system, we clearly see the benefit of automation processes. The most critical automation process is test result analysis, where we saw a 26% reduction in a typical SME position’s usage of time on test result analysis tasks in a development project.

RQ: How can automation of test processes improve the verification of complex systems?

We see an increased system robustness through the different SRQ discussions above. All four areas we have looked at related to automation processes have proved to be beneficial for overall product robustness. The company will be able to detect more of the inherent errors and undesired behaviors in a complex system, and do so earlier, by increasing the test and analysis coverage and reducing the time spent performing these actions. The proposed semi-automated test process with a better human–machine task balance can significantly reduce project delays for the company. Based on prior knowledge from a previous case study [29], the company is able to test a system more effectively through the use of OAs that match the provided test input data to generate the necessary test scenarios, contributing to earlier detection of errors.

8. Conclusions

This empirical case study serves as a “proof of concept” for automation processes when it comes to increased test coverage. This research has shown promising results within all four automation categories we have looked at, as well as in the resulting use of SME hours. First, we can set up testing to ensure the desired test coverage in an effective and efficient way using OAs, e.g., the company only needs to provide one input file to trigger twenty-five different tests as part of one OA to extract the desired level of information. Second, we can execute testing efficiently by removing the human-in-the-loop, e.g., the company can save fifteen to thirty minutes per test. Third, we can analyze significantly more test results during a given period using scripting, e.g., the company can analyze one test in about five seconds compared to typically two hours. However, an interface update strategy would be beneficial to reduce the maintenance cost. Fourth, we can create test documentation in a fraction of the time using an automated test documentation process, e.g., the company can produce a test report in 1.5 min compared to about one working week (37.5 h). Fifth, the company could reduce the overall project cost even when including the investment and maintenance cost for automated test processes, e.g., a typical SME can reduce the time spent on test result analysis by 26% by investing in automation processes and avoiding lengthy manual test result analysis tasks.
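To illustrate how one OA can turn a single set of input factors into twenty-five tests, the sketch below builds a standard 25-run orthogonal array with up to six five-level factors. This is an assumption made for illustration: the text does not state which OA the company uses, and the function names and the factor names in the usage note are hypothetical. The construction is the textbook modular one, valid for a prime number of levels.

```python
from collections import Counter
from itertools import combinations, product


def orthogonal_array(levels: int = 5) -> list[tuple[int, ...]]:
    """Build an OA with levels**2 runs and levels+1 columns (levels must be prime).

    Columns are i, j, and (i + k*j) mod levels for k = 1 .. levels-1.
    """
    return [
        (i, j) + tuple((i + k * j) % levels for k in range(1, levels))
        for i, j in product(range(levels), repeat=2)
    ]


def is_orthogonal(array: list[tuple[int, ...]], levels: int) -> bool:
    """Check that every pair of columns contains each level pair equally often."""
    expected = len(array) // levels**2
    for a, b in combinations(range(len(array[0])), 2):
        counts = Counter((row[a], row[b]) for row in array)
        if len(counts) != levels**2 or set(counts.values()) != {expected}:
            return False
    return True


def scenarios(factors: dict[str, list]) -> list[dict]:
    """Map OA rows onto named factors (at most six factors, five levels each)."""
    names = list(factors)
    return [
        {name: factors[name][row[col]] for col, name in enumerate(names)}
        for row in orthogonal_array(5)
    ]


if __name__ == "__main__":
    array = orthogonal_array(5)
    print(f"runs: {len(array)}, full factorial: {5 ** len(array[0])}")
    print(f"pairwise balanced: {is_orthogonal(array, 5)}")
```

For example, calling `scenarios({"speed": [1, 2, 3, 4, 5], "depth": [10, 20, 30, 40, 50]})` with these hypothetical factors yields twenty-five test scenarios in which every speed and depth combination appears once. With six factors, the twenty-five runs still cover every pair of levels, whereas the full factorial would need 15,625 runs.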

We assess these findings to be useful for the industry developing complex cyber-physical systems in general, and for the company in particular. The main contribution of this research is to show the potential benefit of automation processes that exploit the strengths of both humans and machines, providing data to the body-of-knowledge through a case study in the industry.

A limitation of our study is that we have performed one case study in one project in one company. The techniques described may have a bias toward this case study. However, this case study has provided reference values that others can use for comparison in similar studies:

- Test coverage increased by up to 60% by automating test setup;

- Testing saves up to 0.5 h (1 man-hour) per test by automating test execution;

- Analysis of a test in 5 s compared to 2 h, including a 26% reduction in SME hours by automating test result analysis;

- Documentation of test input or output in 1.5 min compared to 37.5 h by automating test documentation.

Further research is necessary to establish the best practice for human–machine task balance, substantiating the effectiveness and efficiency in different systems in various contexts. We see several layers of additional research that can build the body-of-knowledge for generalizing the automation processes researched in this paper, by finding the effect of the proposed automation processes in the following:

- additional projects within the company;

- other companies within the KONGSBERG group;

- several companies developing complex cyber-physical systems anywhere in the world;

- more institutions with a potential benefit of adjusting the human–machine task balance to exploit the strengths of both humans and machines.

The automation processes proposed in this paper may need adjustments to fit more general use, such as robustification for different input and output types and formats. To further leverage the benefit of automation processes, we could look into the use of digital twins to mitigate undesirable emergent behavior in complex systems [35].

Author Contributions

Conceptualization, R.A.H.; methodology, R.A.H. and N.-O.S.; validation, R.A.H.; formal analysis, R.A.H.; investigation, R.A.H.; resources, R.A.H.; data curation, R.A.H.; writing—original draft preparation, R.A.H.; writing—review and editing, R.A.H., N.-O.S. and G.M.; visualization, R.A.H. and G.M.; supervision, N.-O.S. and G.M.; project administration, R.A.H.; funding acquisition, R.A.H. All authors have read and agreed to the published version of the manuscript.

Funding

The Research Council of Norway funded this research, grant number 321830.

Data Availability Statement

Data are contained within the article.

Acknowledgments

We would like to acknowledge the Norwegian Industrial Systems Engineering Research Group at USN for their contributions. We also thank the KONGSBERG group for sharing relevant industry information through their SMEs.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. KONGSBERG. Available online: www.kongsberg.com (accessed on 27 December 2023).

2. Haugen, R.A.; Skeie, N.-O.; Muller, G.; Syverud, E. Detecting emergence in engineered systems: A literature review and synthesis approach. Syst. Eng. 2023, 26, 463–481.

3. Haugen, R.A.; Mansouri, M. Applying Systems Thinking to Frame and Explore a Test System for Product Verification; a Case Study in Large Defence Projects. In Proceedings of the INCOSE International Symposium 2020, Virtual, 20–22 July 2020.

4. Wechner, M.A.; Marb, M.M.; Holzapfel, F. A Strategy for Efficient and Automated Validation and Verification of Maneuverability Requirements. In Proceedings of the AIAA AVIATION 2023 Forum, San Diego, CA, USA, 12–16 June 2023.

5. Cho, E.; Shin, Y.J.; Hyun, S.; Kim, H.; Bae, D.H. Automatic Generation of Metamorphic Relations for a Cyber-Physical System-of-Systems Using Genetic Algorithm. In Proceedings of the 2022 29th Asia-Pacific Software Engineering Conference (APSEC), Virtual, 6–9 December 2022.

6. Zhang, Y.; Towey, D.; Pike, M.; Han, J.C.; Zhou, Z.Q.; Yin, C.; Wang, Q.; Xie, C. Scenario-Driven Metamorphic Testing for Autonomous Driving Simulators. Softw. Test. Verif. Reliab. 2024, 34, e1892.

7. Barbie, A.; Hasselbring, W. From Digital Twins to Digital Twin Prototypes: Concepts, Formalization, and Applications. IEEE Access 2024, 12, 75337–75365.

8. Ross, W.D.; Aristotle. Aristotle’s Metaphysics: A Revised Text with Introduction and Commentary; Clarendon Press: Oxford, UK, 1924.

9. Kopetz, H.; Bondavalli, A.; Brancati, F.; Frömel, B.; Höftberger, O.; Iacob, S. Emergence in Cyber-Physical Systems-of-Systems (Cpsoss). In Cyber-Physical Systems of Systems: Foundations—A Conceptual Model and Some Derivations: The Amadeos Legacy; Bondavalli, A., Bouchenak, S., Kopetz, H., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 73–96.

10. Mittal, S.; Diallo, S.; Tolk, A. Emergent Behavior in Complex Systems Engineering: A Modeling and Simulation Approach; Wiley: Hoboken, NJ, USA, 2018.

11. Snowden, D. Cognitive Edge. Available online: https://www.cognitive-edge.com/ (accessed on 25 November 2020).

12. Kjeldaas, K.A.; Haugen, R.A.; Syverud, E. Challenges in Detecting Emergent Behavior in System Testing. In Proceedings of the INCOSE International Symposium 2021, Virtual, 17–22 July 2021.

13. Skreddernes, O.; Haugen, R.A.; Haskins, C. Coping with Verification in Complex Engineered Product Development. In Proceedings of the INCOSE International Symposium, Honolulu, HI, USA, 15–20 July 2023.

14. Raman, R.; Jeppu, Y. An Approach for Formal Verification of Machine Learning based Complex Systems. In Proceedings of the INCOSE International Symposium 2019, Orlando, FL, USA, 20–25 July 2019.

15. Raman, R.; Jeppu, Y. Formal validation of emergent behavior in a machine learning based collision avoidance system. In Proceedings of the 14th Annual IEEE International Systems Conference, SYSCON 2020, Virtual, 24–27 August 2020.

16. Raman, R.; D’Souza, M. Decision learning framework for architecture design decisions of complex systems and system-of-systems. Syst. Eng. 2019, 22, 538–560.

17. Raman, R.; Jeppu, Y. Does the Complex SoS Have Negative Emergent Behavior? Looking for Violations Formally. In Proceedings of the 15th Annual IEEE International Systems Conference, SysCon 2021, Virtual, 15 April–15 May 2021.

18. Raman, R.; Gupta, N.; Jeppu, Y. Framework for Formal Verification of Machine Learning Based Complex System-of-System. In Proceedings of the INCOSE International Symposium 2021, Virtual, 17–22 July 2021.

19. Murugesan, A.; Raman, R. Reinforcement Learning for Emergent Behavior Evolution in Complex System-of-Systems. In Proceedings of the ICONS: The Sixteenth International Conference on Systems, Porto, Portugal, 18–22 April 2021.

20. Enoiu, E.; Sundmark, D.; Causevic, A.; Pettersson, P. A Comparative Study of Manual and Automated Testing for Industrial Control Software. In Proceedings of the IEEE International Conference on Software Testing, Verification and Validation (ICST), Tokyo, Japan, 13–17 March 2017.

21. Øvergaard, A.; Muller, G. System Verification by Automatic Testing. In Proceedings of the INCOSE International Symposium, Philadelphia, PA, USA, 24–27 June 2013.

22. Giammarco, K. Practical modeling concepts for engineering emergence in systems of systems. In Proceedings of the 12th System of Systems Engineering Conference (SoSE), Waikoloa, HI, USA, 18–21 June 2017.

23. Szabo, C.; Teo, Y. An integrated approach for the validation of emergence in component-based simulation models. In Proceedings of the Winter Simulation Conference, Berlin, Germany, 9–12 December 2012.

24. Muller, G.; Heemels, W.P.M. Five Years of Multi-Disciplinary Academic and Industrial Research: Lessons Learned. In Proceedings of the Conference on Systems Engineering Research 2007, Hoboken, NJ, USA, 14–16 March 2007.

25. Muller, G. Industry-as-Laboratory Applied in Practice: The Boderc Project. Available online: www.gaudisite.nl/IndustryAsLaboratoryAppliedPaper.pdf (accessed on 29 December 2024).

26. Saunders, M.N.K.; Lewis, P.; Thornhill, A. Research Methods for Business Students, 8th ed.; Pearson: New York, NY, USA, 2019.

27. Dassault. Available online: www.3ds.com/ (accessed on 10 October 2023).

28. Roy, R.K. A Primer on the Taguchi Method, 2nd ed.; Kehoe, E.J., Ed.; Society of Manufacturing Engineers: Dearborn, MI, USA, 2010.

29. Haugen, R.A.; Ghaderi, A. Modelling and Simulation of Detection Rates of Emergent Behaviors in System Integration Test Regimes. In Proceedings of the SIMS, Virtual, 22–24 September 2020.

30. Minitab. Available online: www.minitab.com (accessed on 29 March 2023).

31. Python. Available online: www.python.org (accessed on 29 March 2023).

32. SEBoK. System Integration; Cloutier, R., Ed.; International Council on Systems Engineering (INCOSE): San Diego, CA, USA, 2023.

33. Feiler, P.H.; Hanson, J.; de Niz, D.; Wrage, L. System Architecture Virtual Integration: An Industrial Case Study; Software Engineering Institute: Pittsburgh, PA, USA, 2009.

34. INCOSE; Wiley. INCOSE Systems Engineering Handbook, 5th ed.; John Wiley & Sons, Incorporated: New York, NY, USA, 2023.

35. Grieves, M.; Vickers, J. Digital Twin: Mitigating Unpredictable, Undesirable Emergent Behavior in Complex Systems. In Transdisciplinary Perspectives on Complex Systems: New Findings and Approaches; Kahlen, F.-J., Flumerfelt, S., Alves, A., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 85–113.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).