Abstract

With the continuous development of information technology and the rapid increase in new users of social networking sites, recommendation technology is becoming more and more important. Research has found that the behavior of users on social networking sites correlates strongly with their personalities. The five characteristics of the OCEAN personality model can cover all aspects of a user’s personality. In this research, a micro-directional propagation model based on the OCEAN personality model and a Stacked Denoising Auto Encoder (SDAE) was built by applying deep learning to a collaborative filtering technique. Firstly, the dimension of the user and item feature matrices was reduced using the SDAE in order to extract deeper information. Secondly, the user OCEAN personality model matrix and the reduced user feature matrix were integrated to create a new user feature matrix. Finally, multiple linear regression was used to predict ratings for the items that users had not rated and to generate recommendations. This approach allowed us to leverage the relationships between various factors to deliver personalized recommendations. The model was evaluated with the RMSE and MAE. The evaluation results show that the stacked denoising auto encoder collaborative filtering algorithm can improve the accuracy of recommendations, and that the user’s OCEAN personality model improves the accuracy of the model to a certain extent.

1. Introduction

With the increasing usage of the Internet, recommendation systems have become an important application and research topic. A recommendation system needs not only rich features but also well-learned, useful ones. Among the earliest recommendation approaches, the collaborative filtering recommendation algorithm [1,2,3,4] is one of the best known. It discovers users’ preferences by mining their historical behavior data, groups users by their preferences, and then recommends similar products. In 2021, Yang et al. [4] proposed a concise and efficient multi-scale relational network, RICF, which is combined with the collaborative filtering algorithm to improve recommendation systems. Experimental results on realistic datasets showed that RICF performs better than traditional item-based collaborative filtering algorithms and state-of-the-art sequence models, such as LSTM and GRU, and is more interpretable. As the scale of the scoring data has increased and auxiliary information has been added, researchers have tried to learn the underlying characteristics by combining neural networks and collaborative filtering [5,6]. Among the current collaborative filtering algorithms, the most effective and common approach is micro-directional propagation based on potential factors extracted from the user–item scoring matrix by singular value decomposition (SVD) [7,8]. In 2015, Braida et al. [9] proposed a collaborative filtering-to-supervised learning approach (COFILS), which transforms the collaborative filtering (CF) problem into a classical supervised learning (SL) problem. The experimental results showed that this method is much better than the traditional CF method. It is worth noting that the application of COFILS reduces the sparseness of the data. COFILS contains three main steps: extraction, mapping, and prediction. Firstly, the user feature matrix and the item feature matrix are generated from the user–item scoring matrix by SVD. Next, during the mapping phase, a new spatial matrix is generated, where each sample contains the potential variables of a user, the potential variables of an item rated by that user, and a target corresponding to the user’s rating of the item. Finally, a supervised learning approach is applied to predict the user’s score. Although COFILS reduces the sparseness of the data, its reliance on SVD causes low computational efficiency when dealing with sparse matrices. In addition, COFILS is unable to extract nonlinear features from the data and is not robust to noisy data.

In recent years, the emergence and development of large language models (LLMs) have greatly improved the performance of recommendation systems. LLMs handle various recommendation tasks by translating them into linguistic instructions [10]. With superior natural language understanding, LLMs can interpret user preferences, item descriptions, and contextual information to provide more satisfactory recommendations. In addition, deep learning has performed very well in the field of recommendation systems [11,12,13]. It leverages the ability of neural networks to learn complex patterns from data, which makes it particularly powerful for recommendation tasks. At the same time, with the advent of multi-aspect temporal–textual embedding in author linking [14], many recommendation systems based on text emotion have emerged. In particular, some deep learning models, such as context-aware models [15] and EmoDNN [16], make deep learning-based emotion understanding more accurate, which further improves the personalization of recommendation systems. In 2023, Baqach et al. proposed a method called CLAS (CNN–LSTM–Attention–SVM), based on CNNs, LSTMs, attention mechanisms, and support vector machines [17], designed a recommendation system based on short-text sentiment, and carried out experiments on three well-known sentiment-labeled datasets. However, deep learning also faces several problems, such as cold starts and sparse data, which require additional resources and methods to solve. Improved methods usually address data sparseness and the cold-start problem by adding additional information. To obtain such information, a variety of linear techniques can apply dimensionality reduction to extract potential factors from raw data. The auto encoder [18], introduced by Hinton and Salakhutdinov in 2006, is a neural network that can be stacked to form a deep architecture that performs nonlinear dimensionality reduction on raw data. It has since been applied successfully in many areas, such as images [19], text [20], and audio [21]. With a local denoising criterion, an auto encoder can learn more useful and complex representations [22,23].

The OCEAN personality model [24] is a theory for representing personality characteristics. A user’s OCEAN personality model summarizes their personality through aggregated descriptive words, which describe personality characteristics well [25]. After a long period of research and refinement, psychologists developed questionnaires to measure the various dimensions of the OCEAN personality model. Moreover, cross-cultural research has found that the OCEAN personality model behaves similarly across different cultural backgrounds and is robust across cultures and regions [26,27,28]. Although the OCEAN personality model has been extensively studied in psychology and other fields, little research has linked it to collaborative filtering algorithms. Moreover, current research on recommendation systems based on collaborative filtering mainly focuses on technical improvement while ignoring the underlying mechanism of personalized recommendations. Therefore, the relationship between personality traits and collaborative filtering is worth further research, as it can help improve the degree of personalization and the user satisfaction of recommendation systems.

We analyzed the statistical correlation between users and the feature space and found that user behavior data, including clicks and ratings, are closely related to product features, such as categories and ratings. This correlation provides a novel perspective for enhancing recommendation algorithms. To improve the micro-directional propagation algorithm, this paper combines deep learning and collaborative filtering to establish a hybrid micro-directional propagation model based on the user OCEAN personality model and an SDAE (Stacked Denoising Auto Encoder) [26]. The research goals of this work are as follows:

- (1) Feature extraction based on an SDAE

Firstly, the user–item rating matrix was generated by collecting the user’s movie scoring data, and the item feature matrix and the user feature matrix were extracted from the user–item scoring matrix. Secondly, the SDAE was used to reduce the dimensions of the user and item feature matrices to extract richer information.

- (2) Generation of the integrated feature matrix

After the feature dimension reduction based on the SDAE, the OCEAN personality model was added to the user feature matrix to form a novel user feature matrix. The novel user feature matrix was then combined with the item feature matrix to generate the comprehensive feature matrix and the corresponding user score vector.

- (3) Rating prediction

Linear regression was used to predict user ratings, and the recommendation system then generated recommendations from the predicted ratings.

2. Method

In this study, we aimed to improve the personalization and accuracy of recommendation systems. Current recommendation systems often ignore the importance of users’ personality characteristics in the recommendation process. Therefore, this study aimed to solve this problem in recommendation systems, that is, how to effectively integrate the personality characteristics of users to improve the accuracy of recommendations. We propose a collaborative filtering-based model that achieves a more comprehensive representation of user characteristics by combining the OCEAN model features of users with traditional recommendation algorithms.

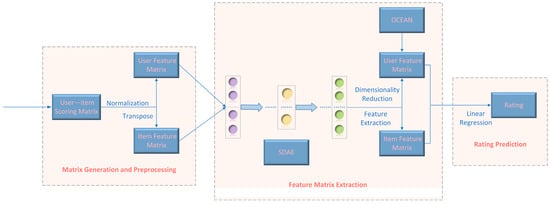

As shown in Figure 1, this model is mainly composed of three parts: matrix generation and preprocessing, feature matrix extraction based on the SDAE, and rating prediction with linear regression. In the first part, the model uses the data collected from Sina Weibo to create a user–item scoring matrix, extracts the user and item feature matrices from it, and normalizes them. In the second part, the SDAE is used to reduce the dimension of the user and item feature matrices and extract features. However, the resulting user feature matrix is one-sided, because it is derived from ratings alone. To obtain more accurate rating predictions, we need a more comprehensive user feature matrix. Users’ ratings of movies are related, to some extent, to their personalities. As shown in Figure 2, the OCEAN model describes the user’s personal characteristics in five dimensions: openness, conscientiousness, extroversion, agreeableness, and neuroticism [29]. Reference [29] showed that these five traits are a very comprehensive and accurate summary of a person’s personality, so the OCEAN model alone is sufficient for building a recommendation system based on personality characteristics. Therefore, the OCEAN matrix is added to the user features to form a more comprehensive user feature matrix. Finally, in the third part, the model uses linear regression to predict the ratings of the movies that the user has not rated.

Figure 1. The overview framework of the SDAE-OCEAN.

Figure 2. The five dimensions of the OCEAN personality model.

2.1. Matrix Generation and Preprocessing

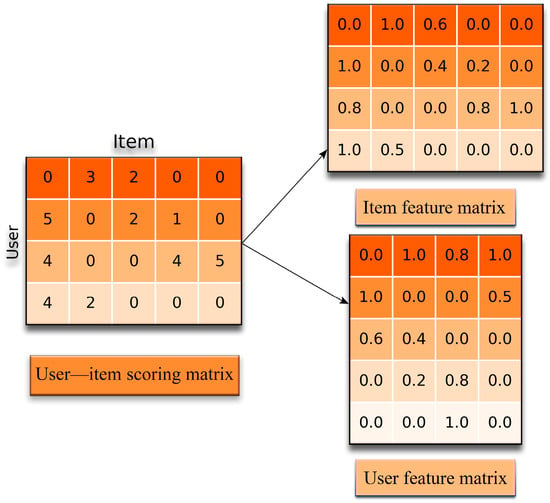

Based on website users’ ratings of items, this model creates a user–item scoring matrix $R \in \mathbb{R}^{m \times n}$ for m users and n items, where $R_{ij}$ represents user i’s rating of item j. $R_{ij} = 0$ indicates that item j is unobserved for user i, which means that user i has not scored item j; otherwise, the rating is observed. Then, we obtain two matrices. One is the user feature matrix, the matrix $R$ itself, which will be used in later steps to extract a matrix containing the potential factors of the users. The other is the item feature matrix, the transpose $R^{T}$ of the matrix $R$, which will be used in subsequent steps to extract a matrix containing the potential factors of the items.

Once the matrices are created, they need to be normalized. The normalization of the matrix is a basic operation in data processing, after which the data can be uniformly evaluated. There are many ways to normalize the matrix, such as through means and through standard deviations. Since each user has different rating habits, each user’s rating will be biased relative to the average. In order to make the user’s personal scoring habits have no effect on the creation of the user feature matrix and the item feature matrix, in the process of normalization, each user is normalized separately, that is, each row of the user–item scoring matrix is normalized as shown in Figure 3.

Figure 3. Normalizing the user–item scoring matrix.

Therefore, the normalized matrix is calculated by dividing each value of the user–item scoring matrix by the maximum value of its row [30].
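The row-wise normalization described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors’ implementation: the function name `normalize_rows_by_max` and the toy matrix are hypothetical, and the handling of a user with no ratings (an all-zero row is left as zeros) is an assumption, since the text does not cover that case.

```python
import numpy as np

def normalize_rows_by_max(ratings):
    """Divide every entry of a user-item rating matrix by the maximum of its row."""
    ratings = np.asarray(ratings, dtype=float)
    row_max = ratings.max(axis=1, keepdims=True)
    # Assumption: a user without any rating (all-zero row) stays all zeros,
    # so we divide by 1 instead of 0 for such rows.
    safe_max = np.where(row_max > 0, row_max, 1.0)
    return ratings / safe_max

# Toy matrix: 3 users, 3 items, 0 means "not rated".
R = np.array([[5, 0, 3],
              [2, 4, 0],
              [0, 0, 0]])
R_norm = normalize_rows_by_max(R)
print(R_norm)
```

Dividing by the row maximum keeps unobserved entries at 0 and maps each user’s highest rating to 1, which removes part of the per-user rating bias discussed above.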

2.2. Feature Matrix Extraction Based on SDAE

This section introduces a nonlinear dimensionality reduction method for the user and item matrices based on the SDAE to extract latent factors of users and items. Then, to obtain more comprehensive user features, this paper adds the user’s OCEAN personality model matrix to the user feature matrix after dimension reduction to form a novel user feature matrix.

2.2.1. Dimensionality Reduction Based on SDAE

The normalized user feature matrix is used as the input of the SDAE for dimension reduction, and the potential factor matrix of the users contained in the user–item scoring matrix $R$, that is, a new user feature matrix, is obtained. Similarly, the normalized item feature matrix is used as the input of the SDAE for dimension reduction, and the potential factor matrix of the items contained in the user–item scoring matrix $R$, that is, a new item feature matrix, is obtained.

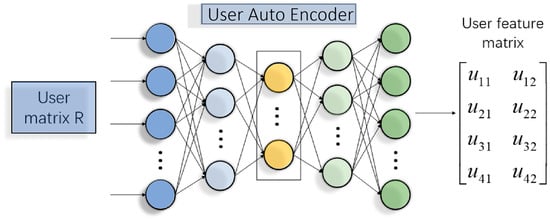

The SDAE dimension reduction process for the user matrix is shown in Figure 4. It is assumed that the user’s features become two-dimensional after the SDAE dimension reduction. Since the SDAE dimension reduction process for the item matrix is similar to the SDAE dimension reduction process for the user matrix, the specific process is not repeated here.

Figure 4. Dimensionality reduction process for the user matrix.

The process of using the SDAE [26] to reduce the dimensionality of the user and item feature matrices is divided into two steps: pre-training and fine-tuning. It is assumed that $\theta_k$ is used to represent the parameters corresponding to the k-th denoising encoder. The process of obtaining the dimensionality reduction matrix of the user matrix is as follows:

Noise is randomly added to the input data, sigmoid is used as the activation function, and the squared error algorithm is used as the cost function to train the first layer of the denoising encoder through unsupervised training. The parameters of one layer are obtained by optimizing the objective function, shown as Equation (1):
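One standard form of a denoising auto encoder objective that matches this description (corrupted input, sigmoid activation, squared reconstruction error) is sketched here. The symbols $x_i$ (the i-th input row), $\tilde{x}_i$ (its noisy copy), $W$, $b$, $W'$, $b'$ (encoder and decoder weights and biases), and the $1/m$ scaling are assumptions of this sketch, not necessarily the authors’ notation:

$$
\begin{aligned}
h_i &= \sigma\left(W \tilde{x}_i + b\right), \qquad \hat{x}_i = \sigma\left(W' h_i + b'\right),\\
\left(W_1, b_1, W'_1, b'_1\right) &= \arg\min_{W,\, b,\, W',\, b'} \; \frac{1}{m} \sum_{i=1}^{m} \left\lVert x_i - \hat{x}_i \right\rVert_2^2 .
\end{aligned}
$$

The reconstruction $\hat{x}_i$ is compared with the clean input $x_i$, not with the corrupted $\tilde{x}_i$, which is what forces the hidden code $h_i$ to be robust to noise. Only the encoder part $(W_1, b_1)$ is carried over to the stacked network.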

According to Equation (1), the first layer of the model is trained on the input data, and the parameter $\theta_1$ of the first layer is obtained through iterative correction. The hidden-layer output of the first denoising encoder is then used as the input of the second-layer auto encoder, the same noise as in the previous layer is added, and the encoder is again trained in an unsupervised manner to obtain the parameter $\theta_2$ of the second layer.

Then, the previous step is repeated until all automatic encoder network parameter initialization is complete.

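The stacked encoders, up to the last hidden layer, are then unrolled together with the corresponding decoding part to form the entire neural network. At this point, the parameters of the entire network, from input to output, are the pre-trained parameters $\theta_1, \ldots, \theta_k$ of the individual denoising encoders.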

After the initialization, the parameters of the network are fine-tuned using the back propagation method to obtain the parameters of the layers that produce the best results. After the completion of the fine-tuning process, the middle layer of the SDAE in Figure 4, that is, the last layer of the coding process, is calculated as the user feature matrix after the dimension reduction of the user matrix.
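The greedy layer-wise pre-training stage can be illustrated with a small NumPy sketch. It is not the authors’ code and rests on several assumptions: masking noise (randomly zeroing 10% of the inputs) stands in for the unspecified "random noise", the learning rate and epoch count are arbitrary toy values, all function names are hypothetical, and the final back-propagation fine-tuning of the unrolled network is omitted for brevity.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_denoising_layer(X, n_hidden, noise=0.1, lr=0.5, epochs=200, seed=0):
    """Train one denoising auto encoder layer; return its encoder weights and bias."""
    rng = np.random.default_rng(seed)
    n_samples, n_in = X.shape
    W = rng.normal(0.0, 0.1, size=(n_in, n_hidden))      # encoder weights
    b = np.zeros(n_hidden)
    W_dec = rng.normal(0.0, 0.1, size=(n_hidden, n_in))  # decoder weights
    b_dec = np.zeros(n_in)
    for _ in range(epochs):
        # Assumed corruption: masking noise zeroes a fraction `noise` of the inputs.
        mask = rng.random(X.shape) >= noise
        X_noisy = X * mask
        H = sigmoid(X_noisy @ W + b)
        X_hat = sigmoid(H @ W_dec + b_dec)
        # Gradients of half the mean squared reconstruction error,
        # measured against the clean input X.
        d_out = (X_hat - X) * X_hat * (1.0 - X_hat) / n_samples
        d_hid = (d_out @ W_dec.T) * H * (1.0 - H)
        W_dec -= lr * (H.T @ d_out)
        b_dec -= lr * d_out.sum(axis=0)
        W -= lr * (X_noisy.T @ d_hid)
        b -= lr * d_hid.sum(axis=0)
    return W, b

def pretrain_sdae(X, layer_sizes, **kwargs):
    """Greedy layer-wise pre-training: each layer is trained on the previous layer's codes."""
    params, codes = [], X
    for n_hidden in layer_sizes:
        W, b = train_denoising_layer(codes, n_hidden, **kwargs)
        params.append((W, b))
        codes = sigmoid(codes @ W + b)  # clean codes become the next layer's input
    return params, codes

# Toy normalized user matrix: 6 users, 8 items, reduced to 2 features per user.
X = np.random.default_rng(1).random((6, 8))
params, user_features = pretrain_sdae(X, layer_sizes=[4, 2])
print(user_features.shape)
```

The output of the last encoder layer plays the role of the reduced user feature matrix; running the same routine on the transposed rating matrix would give the item features.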

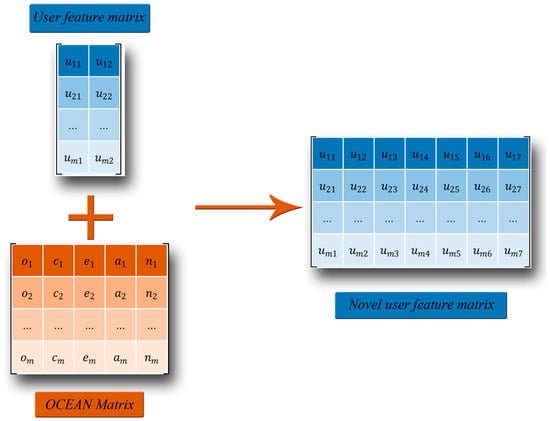

2.2.2. A More Comprehensive User Feature Matrix

However, the user feature matrix obtained above is only extracted from the user–item scoring matrix, and the user information it contains is often not comprehensive enough. A person’s personality traits, which affect their ratings of movies, are also part of the user feature. Therefore, in this section, the proposed model adds the OCEAN personality model of the user to the user feature matrix to form a novel and comprehensive user feature matrix.

To implement this process, it is first necessary to normalize the users’ OCEAN personality scores. For m users, the OCEAN personality model matrix has five columns (m × 5), and it is assumed that the user feature matrix after the SDAE dimensionality reduction has two columns (m × 2). The two matrices have the same number of rows and can therefore be concatenated horizontally, resulting in a novel m × 7 user feature matrix, as shown in Figure 5.

Figure 5. A novel user feature matrix.
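The horizontal concatenation can be sketched as follows. The function name `build_user_features` and the numbers are made up for illustration, and per-trait min-max scaling is an assumption: the text states only that the OCEAN scores are normalized, not how.

```python
import numpy as np

def build_user_features(reduced_users, ocean):
    """Concatenate SDAE-reduced user features with normalized OCEAN scores."""
    reduced_users = np.asarray(reduced_users, dtype=float)
    ocean = np.asarray(ocean, dtype=float)
    if reduced_users.shape[0] != ocean.shape[0]:
        raise ValueError("both matrices need exactly one row per user")
    # Assumed normalization: min-max scaling of each trait (column) to [0, 1].
    low = ocean.min(axis=0)
    span = ocean.max(axis=0) - low
    span = np.where(span > 0, span, 1.0)  # constant columns map to 0
    ocean_norm = (ocean - low) / span
    return np.hstack([reduced_users, ocean_norm])

# Hypothetical data: 3 users, 2 reduced features, 5 raw OCEAN scores each.
reduced = np.array([[0.2, 0.7],
                    [0.5, 0.1],
                    [0.9, 0.4]])
ocean = np.array([[32, 41, 28, 37, 22],
                  [45, 30, 35, 29, 31],
                  [38, 36, 40, 44, 19]])
features = build_user_features(reduced, ocean)
print(features.shape)  # one row per user, 2 + 5 columns
```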

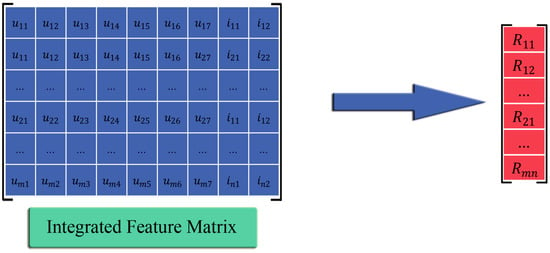

2.2.3. Rating Prediction with Linear Regression

Before using linear regression to predict the ratings of the unrated items of users, this model first generates an integrated feature matrix by using the item feature matrix and the novel user feature matrix obtained in the previous step according to the COFILS method [31]. The nonzero part is expanded into a one-dimensional matrix to generate a supervised dataset. Here, it is assumed that the item feature matrix becomes two-dimensional after the dimensionality reduction, as shown in Figure 6.

Figure 6. The integrated feature matrix.

The integrated feature matrix is used as the sample input of the supervised dataset in the next step. The sample output is obtained by flattening the user–item scoring matrix row by row into a column vector and then removing the zero entries. Assuming that K entries of the matrix are nonzero, this output vector has K dimensions. If a value of the sample output vector is $R_{pq}$, it represents the score given to the q-th movie by the p-th user, and the corresponding row of the sample input is the p-th row of the novel user feature matrix concatenated with the q-th row of the item feature matrix. For example, the first row of the integrated feature matrix in Figure 6 consists of the first row of the novel user feature matrix and the first row of the item feature matrix, corresponding to the first user’s rating of the first item.
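The mapping from the rating matrix to a supervised dataset can be sketched as below. The function name `build_supervised_dataset` and the toy matrices are hypothetical; the logic follows the description above, with one sample per nonzero rating, taken in row order.

```python
import numpy as np

def build_supervised_dataset(R, user_features, item_features):
    """Return (X, y): one sample per observed (nonzero) rating in R."""
    R = np.asarray(R, dtype=float)
    # np.nonzero walks the matrix row by row, so samples are ordered by user.
    users, items = np.nonzero(R)
    # Sample for rating R[p, q] = user row p followed by item row q.
    X = np.hstack([user_features[users], item_features[items]])
    y = R[users, items]
    return X, y

# Toy example: 2 users, 3 items, 3 observed ratings.
R = np.array([[4, 0, 5],
              [0, 3, 0]])
U = np.array([[0.1, 0.2, 0.3],      # user features (3 columns for brevity)
              [0.4, 0.5, 0.6]])
V = np.array([[1.0, 1.1],           # item features (2 columns)
              [2.0, 2.1],
              [3.0, 3.1]])
X, y = build_supervised_dataset(R, U, V)
print(X)
print(y)
```

Each row of `X` has as many columns as the user and item feature matrices combined, and `y` holds the K observed ratings in the same order.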

Multiple linear regression is applied to train the data according to the above supervised dataset [30].

The model of multiple linear regression is given by Equation (3):

where $x_i$ represents the i-th feature component in the integrated feature matrix and $\theta_i$ represents the corresponding parameter to be trained. Using the squared error as the cost function, the model is optimized by minimizing the objective function, shown in Equation (4):

The method of minimizing the objective function is to update the parameter set by the gradient descent so that the fitted output value is as close as possible to the actual output value.
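For reference, the usual textbook forms of a multiple linear regression model, its squared-error objective, and the gradient descent update are given here. They are consistent with the description of Equations (3) and (4), but the notation is an assumption: $d$ is the number of feature components, $K$ the number of training samples, $(x^{(k)}, y^{(k)})$ the k-th sample, $\alpha$ the learning rate, and the $1/(2K)$ scaling a common convention that may differ from the authors’ choice.

$$
\begin{aligned}
h_\theta(x) &= \theta_0 + \sum_{i=1}^{d} \theta_i x_i, \\
J(\theta) &= \frac{1}{2K} \sum_{k=1}^{K} \left( h_\theta\left(x^{(k)}\right) - y^{(k)} \right)^2, \\
\theta_i &\leftarrow \theta_i - \alpha \, \frac{\partial J(\theta)}{\partial \theta_i}
= \theta_i - \frac{\alpha}{K} \sum_{k=1}^{K} \left( h_\theta\left(x^{(k)}\right) - y^{(k)} \right) x_i^{(k)},
\end{aligned}
$$

with the convention $x_0^{(k)} = 1$ so that the same update applies to the intercept $\theta_0$.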

After the parameter set is trained, the user’s score on the item can be predicted based on the comprehensive feature matrix. And we can select items with high scores to recommend to users.
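A minimal sketch of this training and prediction step, using batch gradient descent on the mean squared error, is shown below. The learning rate, the number of epochs, the function names, and the synthetic data are assumptions chosen so that the toy problem converges; they are not values from the experiments.

```python
import numpy as np

def fit_linear_regression(X, y, lr=0.1, epochs=5000):
    """Fit multiple linear regression by batch gradient descent on squared error."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])  # column of ones for the intercept
    theta = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        residual = Xb @ theta - y                  # fitted value minus actual value
        theta -= lr * (Xb.T @ residual) / len(y)   # gradient step
    return theta

def predict(theta, X):
    """Predict ratings for rows of an integrated feature matrix."""
    X = np.asarray(X, dtype=float)
    return theta[0] + X @ theta[1:]

# Synthetic check: targets generated from y = 1 + 2*x1 + 3*x2.
X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]])
y = 1.0 + 2.0 * X[:, 0] + 3.0 * X[:, 1]
theta = fit_linear_regression(X, y)
print(theta)
print(predict(theta, [[0.2, 0.4]]))
```

To recommend items, one would build the feature row for every unrated user-item pair, call `predict`, and keep the items with the highest predicted scores.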

3. Experiments and Results

Before starting the experiment, we need to initialize the neural network. The initialization is divided into two parts: the initialization of the parameters of the SDAE model and the initialization of the parameters of the fine-tuning process. For the SDAE model, 10% random noise is first introduced into each layer of the SDAE. The learning rate of each denoising encoder is then set to 0.001. Finally, the number of iterations of each DAE is set; according to the previous study, the best results were obtained when the number of iterations was 14. For the fine-tuning process, the set of pre-trained parameters, the number of iterations, and the number of hidden layers were set in turn. The effect of the number of iterations and the number of hidden layers on the experiment, and their best values, are discussed in detail in Section 3.2. With the parameter setting and initialization of the proposed model complete, the next step is to collect data.

3.1. Dataset

3.1.1. Collecting Data

The micro-directional propagation model is designed to recommend products to users based on their habits, which requires collecting historical user data, including explicit and implicit data [32]. Explicit user behavior data refer to the digital footprint that users deliberately leave on a website, including comments, ratings, etc., while implicit user behavior data are produced without the user’s active intent while using the site, including the number of visits, page dwell time, etc.

This study measured users’ OCEAN personality traits by distributing NEO questionnaires [24] to Sina Weibo users. To meet data accuracy requirements, the following conditions were set:

- The user under test needed to provide an accurate account number.

- The account had to have posted more than 100 microblogs.

- The time it took to answer the questionnaire needed to be more than 200 s.

Then, a crawler was developed to obtain some public data of these users as an experimental dataset. The filter standards were that the user’s Weibo homepage must have movie rating data, they must have posted more than 100 microblogs, there should not be multiple of the same ratings of the same movie, and more than three movies must have been reviewed.
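The screening rules above can be expressed as a simple predicate. The `Candidate` record and its field names are hypothetical (the crawler’s actual data format is not described), and the duplicate check interprets "multiple of the same ratings of the same movie" as "no movie rated more than once".

```python
from dataclasses import dataclass, field

@dataclass
class Candidate:
    """Hypothetical record for one questionnaire respondent."""
    account_id: str
    microblog_count: int
    questionnaire_seconds: float
    ratings: list = field(default_factory=list)  # (movie_title, score) pairs

def passes_screening(user: Candidate) -> bool:
    """Apply the questionnaire and crawler filter conditions to one user."""
    movies = [title for title, _ in user.ratings]
    return (
        bool(user.account_id)                    # an account number was provided
        and user.microblog_count > 100           # more than 100 microblogs
        and user.questionnaire_seconds > 200     # took more than 200 s to answer
        and len(movies) > 3                      # more than three movies reviewed
        and len(movies) == len(set(movies))      # no movie rated more than once
    )

good = Candidate("u1", 150, 260.0, [("A", 4), ("B", 5), ("C", 3), ("D", 2)])
too_few = Candidate("u2", 150, 260.0, [("A", 4), ("B", 5), ("C", 3)])
duplicate = Candidate("u3", 150, 260.0, [("A", 4), ("A", 5), ("C", 3), ("D", 2)])
print([passes_screening(u) for u in (good, too_few, duplicate)])
```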

3.1.2. Data Processing

Then, we compiled the results of the screened questionnaires. Based on the screening criteria for Sina Weibo users, the microblog information of 2012 Sina Weibo users was finally obtained, together with these 2012 users’ OCEAN personality models. Each user’s OCEAN personality model contained five feature values forming a five-dimensional vector, one value for each of openness, conscientiousness, extroversion, agreeableness, and neuroticism. Table 1 presents a subset of valid user OCEAN model cases.

Table 1. OCEAN personality model data for some users.

A Java program was used to crawl the homepage data of the Sina Weibo users who met the above screening criteria. This yielded the film score data of 2012 Sina Weibo users, who together rated a total of 4579 films. After processing, we obtained the user–item scoring matrix of the 2012 Sina Weibo users.

The data of each user were stored in a text document, and after processing, each user’s movie scores, ranging from 0 to 5, were obtained. The acquired data were converted into a user–item scoring matrix $R$, and the missing scores in the matrix were filled with 0. With the data collected, the next step was to pre-train the SDAE: the reconstruction error was minimized by the gradient descent method to complete the initialization of the neural network parameters.
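Converting the crawled scores into a matrix can be sketched as follows. The triple format `(user, movie, score)` and the function name are assumptions for illustration, since the layout of the text documents is not described.

```python
import numpy as np

def build_rating_matrix(triples):
    """Turn (user, movie, score) triples into a user-item matrix with 0 for missing scores."""
    users = sorted({u for u, _, _ in triples})
    movies = sorted({m for _, m, _ in triples})
    user_index = {u: i for i, u in enumerate(users)}
    movie_index = {m: j for j, m in enumerate(movies)}
    R = np.zeros((len(users), len(movies)))  # unrated entries stay 0
    for u, m, score in triples:
        R[user_index[u], movie_index[m]] = score
    return R, users, movies

triples = [("u1", "m1", 4), ("u1", "m3", 5), ("u2", "m2", 3)]
R, users, movies = build_rating_matrix(triples)
print(R)
```

For the full dataset, where most users rate only a small fraction of the films, a sparse representation may be preferable to a dense array.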

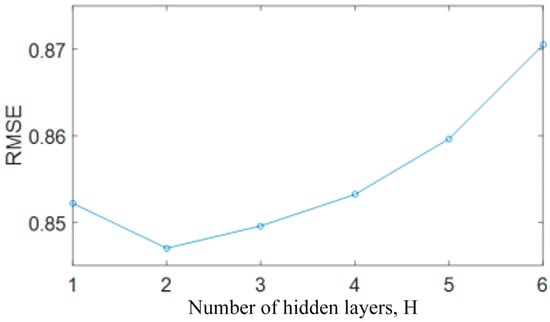

3.2. Experiment on Parameters

In this experiment, the influence of the number of hidden layers and the number of iterations on the experimental results was studied with the control variable method, that is, by varying one parameter while holding the other fixed, and the optimal parameter values for the model were determined. In this paper, the root mean square error (RMSE) [33] is used as the evaluation index to describe the influence of these two parameters on the experimental results, and the minimum RMSE indicates the best model.

The experimental results obtained by increasing the number of hidden layers in the SDAE from one to six are shown in Figure 7. As can be seen from the figure, the best experimental results were obtained when the number of hidden layers in the SDAE was two.

Figure 7. Effect of hidden layer number, H, on experimental results.

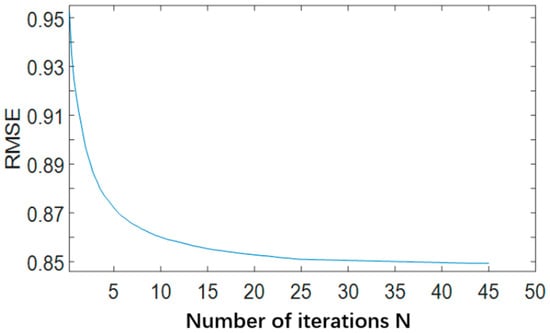

Based on the experimental results on the number of hidden layers in the SDAE, we set the number of hidden layers to two and increased the number of iterations from 1 to 45 to observe the influence of the number of iterations on the experiment. The experimental results are shown in Figure 8.

Figure 8. Effect of the number of iterations, N, on the experimental results.

3.3. Comparative Experiment

Based on the above experiments, we set the number of iterations N to 30 and the number of hidden layers to two to complete the initialization of the model. Then, the proposed model was compared with several other models to verify its effectiveness. It is worth noting that in addition to RMSE, this experiment also used the mean absolute error (MAE) as an evaluation index [33].
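Both evaluation indices can be computed in a few lines. The sketch below uses the standard definitions of RMSE and MAE; the function names and the toy ratings are illustrative and are not taken from the experiment.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean square error between actual and predicted ratings."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error between actual and predicted ratings."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))

actual = [4, 3, 5, 2]
predicted = [3, 3, 4, 4]
print(rmse(actual, predicted), mae(actual, predicted))
```

Because the RMSE squares the errors before averaging, it penalizes a few large mistakes more heavily than the MAE does, which is why the two indices are reported together.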

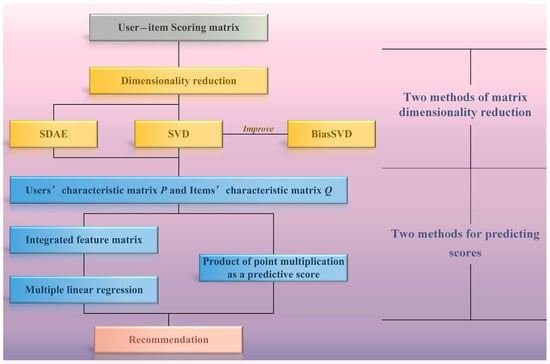

The process of micro-directional propagation is usually divided into three steps. Firstly, the algorithm simplifies the data representation by reducing the dimension of the user–item rating matrix. Then, the user feature matrix and the item feature matrix after dimensionality reduction are used to estimate the user’s score for each item. Finally, the top N items are recommended to the user based on the predicted scores. Figure 9 shows that the matrix dimensionality reduction in the first step can mainly be achieved by two methods, SDAE and SVD, and the score prediction of unrated items can also be achieved by two methods.

Figure 9. Algorithm flow.
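For comparison with the SDAE branch, the SVD branch of the dimensionality reduction step can be sketched with a truncated singular value decomposition. This is a generic illustration, not the baseline code used in the experiments; in particular, splitting the singular values evenly between the user and item factors (the square-root scaling) is an assumption, and `svd_features` is a hypothetical name.

```python
import numpy as np

def svd_features(R, k):
    """Return k-dimensional user and item feature matrices from a truncated SVD of R."""
    U, s, Vt = np.linalg.svd(np.asarray(R, dtype=float), full_matrices=False)
    root = np.sqrt(s[:k])
    user_features = U[:, :k] * root      # shape (n_users, k)
    item_features = Vt[:k, :].T * root   # shape (n_items, k)
    return user_features, item_features

R = np.array([[5, 0, 3, 1],
              [4, 0, 0, 1],
              [1, 1, 0, 5]])
U2, V2 = svd_features(R, k=2)
print(U2.shape, V2.shape)
print(np.round(U2 @ V2.T, 2))  # rank-2 approximation of R
```

Unlike the SDAE, this reduction is linear, which is the limitation that the nonlinear encoder is meant to address.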

In this experiment, some of the above algorithms were evaluated and compared in the case of adding the user’s OCEAN personality model or not adding the user’s OCEAN personality model. An illustration of several evaluation methods is shown in Table 2.

Table 2. The descriptions of several evaluation methods.

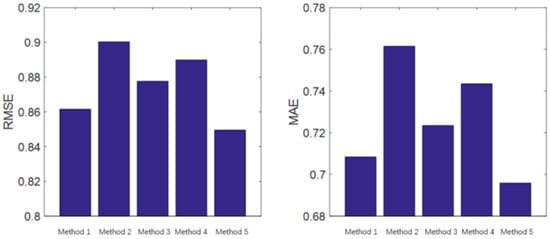

The experiments were run on each of the above five methods separately, and the results are shown in Figure 10.

Figure 10. Comparison of method results.

4. Discussion

As can be seen from Figure 7, as the number of hidden layers increases from one to six, the model works better when the number of hidden layers is between one and three, and the RMSE trends upward beyond three layers. This result is caused by the saturation of the sigmoid function, whose gradient is close to 0 when the value of the activation function is very close to 0 or 1. Even though greedy layer-by-layer training determines good initial weights and prevents gradient diffusion to a certain extent, a network with more layers is not necessarily better, and the number of layers must be chosen according to the actual situation. As can be seen from Figure 8, as the number of iterations increases from 1 to 45, the RMSE of both models decreases through repeated parameter updates, making the recommendation accuracy higher. More iterations are not always beneficial, however, perhaps because they add unnecessary operations to the model: the running time becomes longer and overfitting can occur. The above experiments indicate that optimal results are obtained with between 25 and 45 iterations.
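The saturation argument can be made concrete with the derivative of the sigmoid, which is a standard identity rather than anything specific to this model:

$$
\sigma(z) = \frac{1}{1 + e^{-z}}, \qquad \sigma'(z) = \sigma(z)\,\bigl(1 - \sigma(z)\bigr) \le \frac{1}{4}.
$$

During back propagation, each additional sigmoid layer multiplies the gradient by a factor that includes $\sigma'(z)$. This factor never exceeds 1/4 and approaches 0 when $\sigma(z)$ is close to 0 or 1, so the gradient reaching the first layers tends to shrink as the network becomes deeper.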

According to the comparison chart of the results in Figure 10, the RMSE value and the MAE value of the experimental results using Method 5 are the smallest, which indicates that its recommended effect is the best. Specifically, from the perspective of whether to add the user’s OCEAN personality model, comparing the results of Method 5 and Method 4, it can be found that after adding the user’s OCEAN personality model to the recommendation system, the recommendation accuracy measured by the RMSE is improved by 4.53% and the recommendation accuracy measured by the MAE is improved by 6.41%. Comparing the results of Method 3 and Method 2, it can be found that after adding the user’s OCEAN personality model to the recommendation system, the recommendation accuracy measured by the RMSE is improved by 2.52% and the recommendation accuracy measured by the MAE is improved by 4.99%. The above two sets of comparative experiments prove the usefulness of the user’s OCEAN personality model for the recommendation system.

From the perspective of dimensionality reduction methods, comparing the results of Method 4 and Method 2 shows that using the SDAE for dimensionality reduction increases recommendation accuracy by 1.16% as measured by the RMSE and by 2.32% as measured by the MAE. This indicates that the nonlinear dimension reduction of the SDAE extracts more of the information contained in the data, and that richer information makes the recommendation system more accurate. Finally, the proposed model is compared with the BiasSVD + OCEAN personality model, and Figure 10 shows that Method 1 and Method 5 give the best recommendation performance among the five methods. Measured by the RMSE, the accuracy of Method 5 is 1.39% higher than that of Method 1; measured by the MAE, it is 1.77% higher. However, Method 1 clusters users according to their OCEAN personality model, which can solve the cold start problem in some micro-directional propagation systems.

5. Conclusions

In this paper, a hybrid micro-directional propagation model is proposed that incorporates the OCEAN personality model of users to improve the micro-directional propagation algorithm. By combining deep learning and collaborative filtering, the model can better capture the user’s personality characteristics and the relevance of items. Firstly, the SDAE was used to reduce the dimensions of the user feature matrix and the item feature matrix. Then, the OCEAN personality model of the user was added to the user feature matrix to form a novel user feature matrix. Finally, linear regression trained on the resulting supervised dataset was used to predict the user’s preference for unrated items. The control variable method was used to show the effect of the parameters on the performance of the model. The comparative experiments showed that the introduction of the OCEAN personality model improved the algorithm’s performance by at least 2.52% in the RMSE and 4.99% in the MAE. This shows that it is effective and feasible to introduce the personality model into the recommendation system.

In summary, the model proposed in this paper has a very broad application prospect in the field of personalized recommendations. However, this model still has some areas worth improving, and the following measures can be taken to further improve the micro-directional propagation model in future work: First, non-text feature information (such as pictures, videos, etc.) can be added to the study of the OCEAN personality model of users to identify the user’s personality model more comprehensively. In addition, considering that the user personality model and the popularity of items may change over time, a time factor can be added to the process of micro-directional propagation.

Author Contributions

Conceptualization, X.C., L.W. and W.Z.; methodology, R.W., S.L., Z.Y. and B.W.; software, R.W. and B.W.; formal analysis, B.W., S.L., L.W. and Z.Y.; data curation, R.W. and S.L.; writing—original draft preparation, B.W., L.Y. and W.Z.; writing—review and editing, W.Z., R.W. and L.Y.; funding acquisition, W.Z. and X.C. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the Sichuan Science and Technology Program [2023YFSY0026, 2023YFH0004].

Data Availability Statement

Data are available on request due to restrictions, e.g., privacy or ethical restrictions. The data presented in this study are available on request from the corresponding author.

Acknowledgments

The authors express their sincere appreciation and profound gratitude to the team for their help and support with the collection and sorting of the data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Liu, C.; Kong, X.; Li, X.; Zhang, T. Collaborative Filtering Recommendation Algorithm Based on User Attributes and Item Score. Sci. Program. 2022, 2022, 4544152.

- Pan, Z.; Chen, H. Efficient graph collaborative filtering via contrastive learning. Sensors 2021, 21, 4666.

- Sun, Q.; Shi, L.-L.; Liu, L.; Han, Z.-X.; Jiang, L.; Lu, Y.; Panneerselvam, J. A Dynamic Collaborative Filtering Algorithm based on Convolutional Neural Networks and Multi-layer Perceptron. In Proceedings of the 2021 20th International Conference on Ubiquitous Computing and Communications (IUCC/CIT/DSCI/SmartCNS), London, UK, 20–22 December 2021; pp. 459–466.

- Yang, N.; Chen, L.; Yuan, Y. An improved collaborative filtering recommendation algorithm based on retroactive inhibition theory. Appl. Sci. 2021, 11, 843.

- Biswas, P.K.; Liu, S. A hybrid recommender system for recommending smartphones to prospective customers. Expert Syst. Appl. 2022, 208, 118058.

- Yin, P.; Ji, D.; Yan, H.; Gan, H.; Zhang, J. Multimodal deep collaborative filtering recommendation based on dual attention. Neural Comput. Appl. 2023, 35, 8693–8706.

- Anwar, T.; Uma, V.; Srivastava, G. Rec-cfsvd++: Implementing recommendation system using collaborative filtering and singular value decomposition (svd)++. Int. J. Inf. Technol. Decis. Mak. 2021, 20, 1075–1093.

- Huang, T.; Zhao, R.; Bi, L.; Zhang, D.; Lu, C. Neural embedding singular value decomposition for collaborative filtering. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6021–6029.

- Braida, F.; Mello, C.E.; Pasinato, M.B.; Zimbrão, G. Transforming collaborative filtering into supervised learning. Expert Syst. Appl. 2015, 42, 4733–4742.

- Hua, W.Y.; Li, L.; Xu, S.Y.; Chen, L.; Zhang, Y.F. Tutorial on Large Language Models for Recommendation. In Proceedings of the 17th ACM Conference on Recommender Systems (RecSys), Singapore, 18–22 September 2023; ACM: New York, NY, USA, 2023; pp. 1281–1283.

- Kim, J.; Wi, J.; Kim, Y. Sequential recommendations on github repository. Appl. Sci. 2021, 11, 1585.

- Shareef, M.P.; Jimson, L.R.; Jose, B.R. Recommendation Systems: A Comparative Analysis of Classical and Deep Learning Approaches. In Proceedings of the 2021 8th International Conference on Smart Computing and Communications (ICSCC), Kochi, India, 1–3 July 2021; pp. 269–274.

- Tang, C.; Zhang, J. An intelligent deep learning-enabled recommendation algorithm for teaching music students. Soft Comput. 2022, 26, 10591–10598.

- Najafipour, S.; Hosseini, S.; Hua, W.; Kangavari, M.R.; Zhou, X.F. SoulMate: Short-Text Author Linking through Multi-Aspect Temporal-Textual Embedding. IEEE Trans. Knowl. Data Eng. 2022, 34, 448–461.

- Shenoy, A.; Sardana, A. Multilogue-Net: A Context Aware RNN for Multi-modal Emotion Detection and Sentiment Analysis in Conversation. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL)/2nd Grand Challenge and Workshop on Multimodal Language (Challenge-HML), Online, 10 July 2020; pp. 19–28.

- Wang, S.; Xu, C.; Ding, A.S.; Tang, Z. A Novel Emotion-Aware Hybrid Music Recommendation Method Using Deep Neural Network. Electronics 2021, 10, 1769.

- Baqach, A.; Battou, A. CLAS: A new deep learning approach for sentiment analysis from Twitter data. Multimed. Tools Appl. 2023, 82, 47457–47475.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- Ghasrodashti, E.K.; Sharma, N. Hyperspectral image classification using an extended Auto-Encoder method. Signal Process. Image Commun. 2021, 92, 116111.

- Li, A.; Zhang, F.; Li, S.; Chen, T.; Su, P.; Wang, H. Efficiently generating sentence-level textual adversarial examples with Seq2seq Stacked Auto-Encoder. Expert Syst. Appl. 2023, 213, 119170.

- Kang, Y.; Liu, T.; Li, H.; Hao, Y.; Ding, W. Self-supervised audio-and-text pre-training with extremely low-resource parallel data. In Proceedings of the AAAI Conference on Artificial Intelligence, online, 22 February–1 March 2022; Volume 36, pp. 10875–10883.

- Chen, Z.; Lu, H.; Tian, S.; Qiu, J.; Kamiya, T.; Serikawa, S.; Xu, L. Construction of a hierarchical feature enhancement network and its application in fault recognition. IEEE Trans. Ind. Inform. 2020, 17, 4827–4836.

- Zhu, Y.; Zhang, S.; Li, X.; Zhao, H.; Zhu, L.; Chen, S. Ground target recognition using carrier-free UWB radar sensor with a semi-supervised stacked convolutional denoising autoencoder. IEEE Sens. J. 2021, 21, 20685–20693.

- Qin, X.; Liu, Z.; Liu, Y.; Liu, S.; Yang, B.; Yin, L.; Liu, M.; Zheng, W. User OCEAN personality model construction method using a BP neural network. Electronics 2022, 11, 3022.

- Ban, Y.; Liu, Y.; Yin, Z.; Liu, X.; Liu, M.; Yin, L.; Li, X.; Zheng, W. Micro-Directional Propagation Method Based on User Clustering. Comput. Inform. 2023, 42, 1445–1470.

- Janowski, A.; Szczepanska-Przekota, A. The Trait of Extraversion as an Energy-Based Determinant of Entrepreneur’s Success-The Case of Poland. Energies 2022, 15, 4533.

- Mak, K.K.; Scheer, B.; Yeh, C.-H.; Ohno, S.; Nam, J.K. Associations of Personality Traits with Internet Addiction: A Cross-Cultural Meta-Analysis with a Multilingual Approach. Cyberpsychol. Behav. Soc. Netw. 2021, 24, 777–798.

- Orlando Lopez-Pabon, F.; Rafael Orozco-Arroyave, J. Evaluation of Different Word Embeddings to Create Personality Models in Spanish. In Proceedings of the 8th Workshop on Engineering Applications (WEA), Medellin, Colombia, 6–8 October 2021; pp. 121–132.

- Redelmeier, D.A.; Najeeb, U.; Etchells, E.E. Understanding patient personality in medical care: Five-factor model. J. Gen. Intern. Med. 2021, 36, 2111–2114.

- Jeon, E.I.; Kim, S.; Park, S.; Kwak, J.; Choi, I. Semantic segmentation of seagrass habitat from drone imagery based on deep learning: A comparative study. Ecol. Inform. 2021, 66, 101430.

- Barbieri, J.; Alvim, L.G.M.; Braida, F.; Zimbrao, G. Autoencoders and recommender systems: COFILS approach. Expert Syst. Appl. 2017, 89, 81–90.

- Zhao, J.L.; Zheng, S.D.; Huo, H.; Gong, M.G.; Zhang, T.H.; Qu, L.J. Fast weighted CP decomposition for context-aware recommendation with explicit and implicit feedback. Expert Syst. Appl. 2022, 204, 117591.

- Shrivastava, N.; Gupta, S. Analysis on Item-Based and User-Based Collaborative Filtering for Movie Recommendation System. In Proceedings of the 2021 5th International Conference on Electrical, Electronics, Communication, Computer Technologies and Optimization Techniques (ICEECCOT), Mysuru, India, 10–11 December 2021; pp. 654–656.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).