Abstract

Digital transformation efforts as part of the Fourth Industrial Revolution promise to revolutionize engineering practices. However, given the multitude of technological choices and the diversity of potential investment decisions, many engineering entities are slow and haphazard in their adoption of digital innovations and fail to meet the expectations set for digital engineering and digital transformation. In this study, we analyze the literature on adoption, including a systematic literature review on adoption theory and a characterization of where the research is focused. We introduce the term strategic adoption to represent adoption associated with not a single innovation but rather a digitally transformed and hyperconnected set of innovations in a digital ecosystem. From the analysis of 22 adoption theories/models and 178 adoption factors, we introduce twelve strategic adoption influencers and make recommendations for their use in accelerating the strategic adoption of digital innovations leading to digital engineering transformation. We discuss the theoretical and practical considerations for strategic adoption influencers and suggest future research directions.

1. Introduction

The Fourth Industrial Revolution—also called the “digital” revolution—is bringing about rapid transdisciplinary innovation [1,2]. Digital transformation across the engineering lifecycle relies on the emergence of new technologies, new digital engineering practices, and digital engineering ecosystems. In the United States (U.S.), digital transformation and digital engineering are strategies being used to ensure national security and global leadership [3,4]. Advances in digital technologies, and digital innovations using these technologies, are creating a wide variety of capabilities that promise to significantly reduce cycle times and improve competitiveness [5,6].

U.S. defense contractors have well-established methods that have been honed over many decades by acquisition requirements, regulations, and the need for workforce stability. Despite the strategic imperative and pioneering efforts of the U.S. Department of Defense (DoD) [3], many entities struggle to achieve enterprise-wide digital transformation and instead produce “random acts of digital” [7] that do not accomplish strategic goals. Achieving strategic adoption in the digital revolution requires changes to governance and acquisition models at a strategic level. George Westerman, a digital transformation research scientist at the Massachusetts Institute of Technology, said, “This idea that a thousand flowers will bloom and we will all be okay is a great way to get some ideas, but we have not seen any transformations that happen bottom-up” [8].

While adoption of digital technologies and digital innovations may occur “bottom-up” within an enterprise, strategic objectives such as digital transformation and digital engineering require a coordinated application of multiple technologies and innovations to achieve strategic results. Consequently, there is a need for research that bridges the gap between current adoption research, which is generally focused on individual technologies or innovations, and adoption in the context of digital engineering and digital transformation, which is dependent on strategic application of a multitude of ever-changing digital innovations. We refer to this new type of adoption as strategic adoption.

The motivation behind this research paper is to leverage the wide body of existing adoption research toward finding mechanisms to accelerate the strategic adoption of digital innovations in engineering entities supporting the U.S. defense industry. Two research questions (RQ) are addressed:

- What gaps exist in existing research relative to the adoption of digital innovations leading to digital engineering and digital transformation within the U.S. defense industry? (RQ#1)

- Can adoption factors found in the literature be useful in the development of a research instrument for assessing strategic adoption? (RQ#2)

This study draws on the current literature to identify adoption influencers that may assist in advancing strategic adoption. We hypothesize that strategic adoption influencers can be used to advance entities’ implementation frameworks for the digital age. Further research is needed to validate this idea; however, we discuss important considerations for accelerating the adoption of digital innovations in a strategic manner.

Given the inconsistent application and multiple definitions of key terms related to the research questions, a glossary of essential terms is listed below to aid in the comprehension of this paper.

- Adoption: “a decision to make full use of an innovation as the best course of action available” [9]. Adoption is not an act of creating or implementing digital innovation.

- Adoption factor: a determinant of adoption as identified by an adoption theory/model or adoption study. Hundreds of unique adoption factors are identified in the numerous adoption theories/models and studies found in the literature.

- Adoption theory/model: a theoretical framework for describing the significance of adoption factors.

- Adoption study: an empirical study using an adoption theory/model to study adoption for a particular technology or innovation.

- Capability: the ability to achieve an outcome or effect using features of a system of interest, which contributes to a desired benefit or goal [10]. An example capability is the ability to shorten design cycle time using digital twin innovations.

- Digital engineering (DE): “an integrated digital approach that uses authoritative sources of systems’ data and models as a continuum across disciplines to support lifecycle activities from concept through disposal” [11]. A digital engineering framework used by the DoD for understanding and discussing digital engineering includes a digital system model (engineering data), program and system supporting data, digital threads, tools, analytics, processes, and governance [12].

- Digital engineering ecosystem (DEE): “the interconnected infrastructure, environment, and methodology (process, methods, and tools) used to store, access, analyze, and visualize evolving systems’ data and models to address the needs of the stakeholders” [13]. The digital engineering ecosystem defined by the DoD has the following five goals: (1) formalize the development, integration, and use of models; (2) provide an authoritative source of truth; (3) incorporate technological innovation; (4) establish infrastructure and environments; and (5) transform culture and workforce [3].

- Digital engineering transformation: digital transformation of engineering practices. Digital engineering transformation is expected to fundamentally “change the way engineering is done, change the way DoD acquisition is done, change the way we view quality and agility, change the way systems get deployed, and change our workforce” [14].

- Digital innovation: “the creation […] of an inherently unbounded, value-adding novelty (e.g., product, service, process, or business model) through the incorporation of digital technology” [15].

- Digital technology: the “electronic tools, systems, devices, and resources that generate, store, or process data” [16] that are the “basis for developing digital innovation” [17]. Examples of digital technologies include secure cloud computing, artificial intelligence, robotics, and virtual reality.

- Digital transformation (DT): “a fundamental change process, enabled by the innovative use of digital technologies, accompanied by the strategic leverage of key resources and capabilities, aiming to radically improve an entity and radically improve its value proposition for its stakeholders” [18]. An alternative definition is the application of innovative digital technologies that disrupt or cause a radical change in industry “to enable major business improvements (such as enhancing customer experience, streamlining operations, or creating new business models)” [8]. In addition, digital transformation aims to develop an organic digital workforce that can continually adapt and adopt new digital innovations [19].

- Engineering entity: an entity engaged primarily in one or more of the lifecycle phases associated with engineered systems, i.e., material solution analysis (MSA), technology maturation and risk reduction (TMRR), engineering and manufacturing development (EMD), production and deployment (P&D), and operations and support (O&S).

- Entity: “an entity can be an organization, a business network, an industry, or society” [18].

- Strategic adoption: adoption aligned with the strategic goals or strategic renewal of an entity for successful digital transformation.

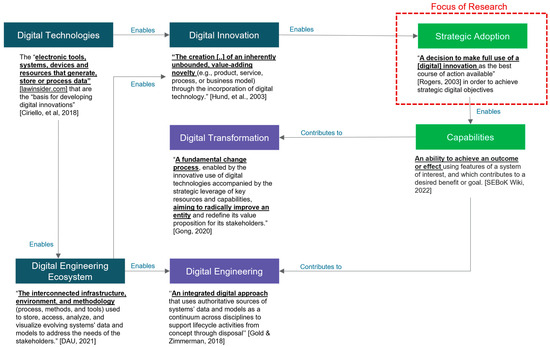

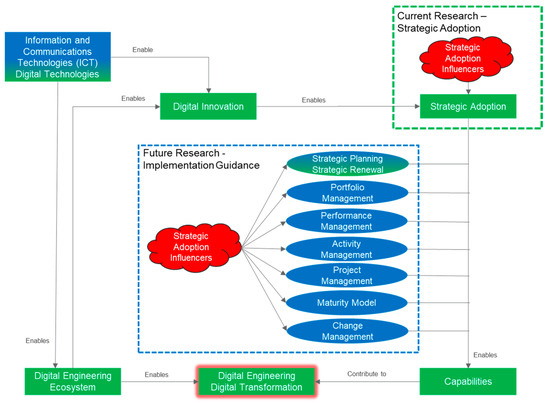

Figure 1 shows the relationships between key terms related to digital transformation and digital engineering, including the role of strategic adoption. These relationships provide a cognitive map that illustrates how adoption research is central to understanding the mechanisms that accelerate the strategic adoption of digital innovations. The definitions in Figure 1 were selected or adapted from the literature.

Figure 1.

Relationships of key terms [9,10,11,13,15,16,18].

In summary, this review discusses the gaps in adoption literature as they relate to strategic adoption of digital innovations and identifies common adoption factors that exist in the prevalent adoption theories/models. The research investigation plan described in the next section outlines the methods and datasets used for this purpose.

2. Materials and Methods

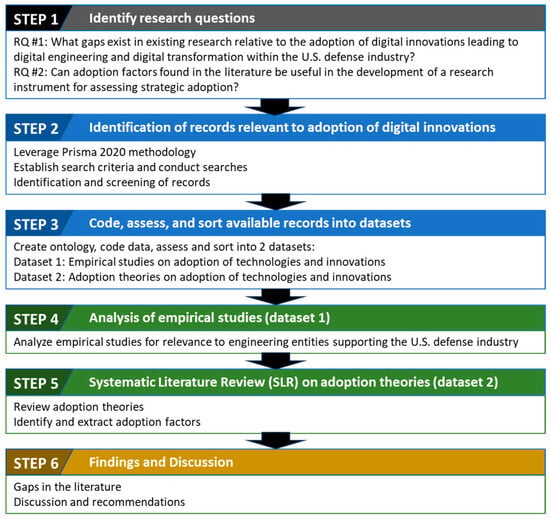

To address the research questions identified in the introduction, a six-step research investigation plan (RIP) was developed, as shown in Figure 2. The research questions in Step 1 arose from the observation that this is a unique period in history, in which digital engineering in the U.S. defense industry is being strategically driven while a digital revolution is simultaneously occurring across industry. Technology adoption is fundamental to the success of both digital engineering and digital transformation, and a review of the literature in this area is relevant to assessing and discussing strategic adoption.

Figure 2.

Research investigation plan.

Step 2 of the RIP describes the search criteria used in establishing the knowledgebase of records for addressing the research questions. Step 3 describes the methods used to create two datasets (DS#1 and DS#2) from the knowledgebase, which address RQ#1 and RQ#2, respectively. Step 4 uses DS#1 to characterize and identify gaps in the existing adoption research in response to RQ#1. Step 5 uses DS#2 to perform a systematic literature review (SLR) on adoption theories in response to RQ#2. Findings from the analyses undertaken in Steps 4 and 5 are discussed in Step 6.

2.1. Step 2 Create Knowledgebase of Records

The methodology selected was PRISMA 2020 for “new systematic reviews that include searches of databases and other sources” [20]. The lack of a consistent ontology for identifying adoption theories made it impractical to rely on a database search alone; therefore, the methodology involved an initial search of two databases using defined criteria, followed by research into specific citations from articles within that dataset. The database searches resulted in a combined knowledgebase of 521 records (370 from Google Scholar and 151 from Scopus). The data associated with each record includes the title, author, date, and abstract.

The details of the database searches are as follows:

- Database query: Google Scholar

- Search mechanism: software application “Publish or Perish” (macOS GUI Edition)

- Title words: adoption AND technology AND (model OR theory)

- Keywords: innovation AND digital AND engineer*

- Returned 370 results.

- Database query: Scopus

- Search query string: TITLE (“adoption” AND “technology” AND (model OR theory)) AND innovation AND digital AND engineer*

- Returned 151 results.

- Individual citation searches: multiple databases

- Returned 221 results.
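The search criteria above combine a title condition with a keyword condition. As a rough sketch of that Boolean logic only, the Python below applies both conditions to a record's title and abstract. The function names are hypothetical, matching is exact on lower-cased tokens with `*` acting as a prefix wildcard, and no stemming is performed, so unlike a real search engine it would not match "models" to "model". Matching the keyword terms against title plus abstract is also an assumption; Google Scholar and Scopus apply their own tokenization and matching rules, which this does not reproduce.

```python
import fnmatch
import re


def tokens(text: str) -> list[str]:
    """Lower-case alphanumeric tokens (a crude stand-in for a database tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def has(words: list[str], pattern: str) -> bool:
    """True if any token matches the pattern; a trailing '*' acts as a prefix wildcard."""
    return any(fnmatch.fnmatchcase(w, pattern) for w in words)


def matches_search(title: str, abstract: str) -> bool:
    """Approximate the title AND keyword criteria described above."""
    t = tokens(title)
    title_ok = has(t, "adoption") and has(t, "technology") and (
        has(t, "model") or has(t, "theory"))
    # Assumption: keyword terms are matched against the title plus the abstract.
    body = tokens(title + " " + abstract)
    keywords_ok = (has(body, "innovation") and has(body, "digital")
                   and has(body, "engineer*"))
    return title_ok and keywords_ok


print(matches_search("A technology adoption model for digital twins",
                     "We study innovation in digital engineering."))  # True
print(matches_search("Technology acceptance in retail",
                     "digital innovation in engineering"))  # False: no "adoption" in title
```

Keeping the title and keyword predicates as separate booleans mirrors how the two criteria are listed, which makes it easy to see which condition excludes a given record.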

The citation research added another 221 records, resulting in an initial knowledgebase of 742 records. The citation research focused on identifying original adoption theories/models rather than empirical studies that utilized an established adoption theory/model. The 742 knowledgebase records were screened into two datasets to support the two different research questions, as described in Step 3.

2.2. Step 3 Code and Screen Available Records into Datasets

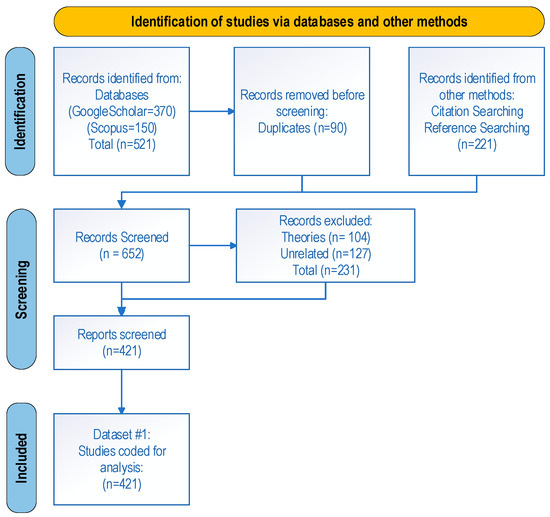

The first dataset (DS#1) was created to evaluate RQ#1 and assess what gaps exist in existing adoption research relative to digital engineering and digital transformation within the U.S. defense industry. Specifically, empirical studies on the adoption of digital technologies or digital innovations were included, and all others were excluded. With this data, adoption studies were characterized by digital technology and industry, and gaps were assessed in relation to RQ#1. Starting with the 742 knowledgebase records, 90 were removed as duplicates, leaving 652 records resulting from the identification stage (Figure 3). In the screening phase, 104 were excluded as adoption theories and 127 were excluded as unrelated to adoption of digital technology, leaving 421 empirical adoption studies to be included and analyzed in Step 4. All 421 records were screened using their titles, abstracts, and publisher.

Figure 3.

Flow diagram of review data for Dataset #1 based on PRISMA [20].

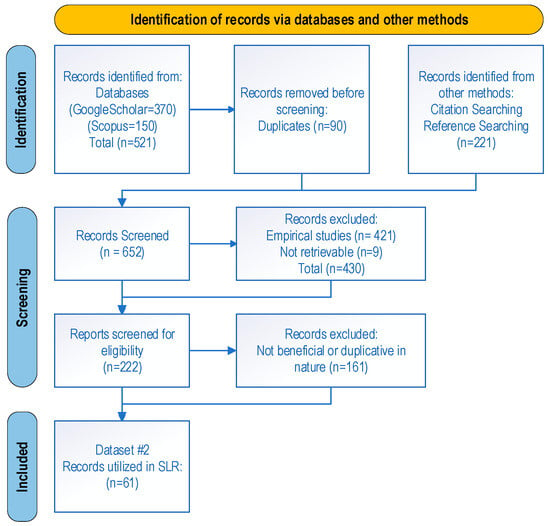

The second dataset (DS#2) was created to evaluate RQ#2 and assess whether adoption factors can be useful in the development of a research instrument for assessing strategic adoption. Original and prevalent adoption theories/models were included, and empirical studies were excluded unless part of an original adoption theory/model. With this data, a systematic literature review was conducted, and adoption factors were identified and categorized.

For DS#2, the identification stage started with the same 742 records in the knowledgebase created in Step 2, of which 652 were moved to the screening stage after 90 duplicates were removed, as shown in Figure 4. In the screening phase, an additional 591 records were removed because they were not original adoption theories/models, could not be retrieved, or were duplicative in nature. The remaining 61 records were included in the SLR on technology adoption theories/models. Database queries were not found to be as effective as citation tracing for finding original and relevant adoption theory/model records. This is attributed to the lack of an ontology for isolating theories/models from empirical studies and to the large number of empirical studies compared with the number of theories/models. Citation tracing from the studies proved to be a better technique for isolating adoption theories/models.
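The record counts reported for both datasets can be cross-checked with a small ledger that subtracts each exclusion in turn. This is a sketch: the `FlowLedger` class and the stage labels are hypothetical names introduced here, and the counts are the ones stated in Steps 2 and 3. Note that the two database searches together contribute 370 + 151 = 521 records before the 221 citation-search records are added.

```python
from dataclasses import dataclass, field


@dataclass
class FlowLedger:
    """Hypothetical helper: tracks records remaining after each PRISMA-style exclusion."""
    count: int
    steps: list = field(default_factory=list)

    def exclude(self, reason: str, n: int) -> "FlowLedger":
        if n > self.count:
            raise ValueError(f"cannot exclude {n} of {self.count} records")
        self.count -= n
        self.steps.append((reason, n, self.count))
        return self  # allow chaining of successive stages


# Identification (Step 2): database results plus citation searches.
sources = {"Google Scholar": 370, "Scopus": 151, "citation searches": 221}
identified = sum(sources.values())

# Both datasets share the identification stage, then diverge at screening (Step 3).
ds1 = (FlowLedger(identified)
       .exclude("duplicates", 90)
       .exclude("adoption theories/models", 104)
       .exclude("unrelated to digital technology adoption", 127))
ds2 = (FlowLedger(identified)
       .exclude("duplicates", 90)
       .exclude("not original theories/models, not retrievable, or duplicative", 591))

for name, ledger in (("DS#1", ds1), ("DS#2", ds2)):
    for reason, n, remaining in ledger.steps:
        print(f"{name}: -{n} {reason} -> {remaining}")
```

Raising an error when an exclusion exceeds the remaining count catches transcription slips in a flow diagram early; the final counts should match the included totals in Figures 3 and 4 (421 and 61).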

Figure 4.

Flow diagram of review data for Dataset #2 based on PRISMA [20].

2.3. Step 4 Analysis of Dataset #1

Examining the 421 records associated with empirical adoption studies revealed significant variation in how digital technologies and innovations are characterized. Using Bachman notation [21], Figure 5 shows a conceptual entity relationship diagram for understanding the relationships between digital technology, digital innovation, and industry represented by the records in DS#1. As illustrated, digital innovation can be enabled by one or more digital technologies and applied to one or more industries. Digital technologies can be used in many industries and one industry can utilize many digital technologies.
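The cardinalities described for Figure 5 can be expressed as a small data model. The sketch below is an illustrative Python rendering, not a reproduction of the Bachman diagram: the class names, the example innovations, and the choice to derive the technology/industry relationship through innovations are all assumptions made here for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DigitalTechnology:
    name: str


@dataclass(frozen=True)
class Industry:
    name: str


@dataclass
class DigitalInnovation:
    """Enabled by one or more technologies and applied in one or more industries."""
    name: str
    enabled_by: frozenset[DigitalTechnology]
    applied_in: frozenset[Industry]

    def __post_init__(self):
        # Enforce the mandatory "one or more" side of both relationships.
        if not self.enabled_by or not self.applied_in:
            raise ValueError(f"{self.name}: needs >= 1 technology and >= 1 industry")


def technologies_by_industry(innovations):
    """Derive the many-to-many technology/industry relationship through innovations."""
    result = {}
    for innovation in innovations:
        for industry in innovation.applied_in:
            result.setdefault(industry, set()).update(innovation.enabled_by)
    return result


# Hypothetical example: one technology (cloud) appears in two industries.
cloud, ai = DigitalTechnology("cloud"), DigitalTechnology("artificial intelligence")
edu, health = Industry("education"), Industry("healthcare")
innovations = [
    DigitalInnovation("hypothetical LMS", frozenset({cloud}), frozenset({edu})),
    DigitalInnovation("hypothetical triage assistant",
                      frozenset({cloud, ai}), frozenset({health})),
]
links = technologies_by_industry(innovations)
```

Frozen data classes are hashable, so technologies and industries can be stored in sets, which matches the "many" ends of the relationships.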

Figure 5.

Conceptual entity relationship diagram.

Using the many-to-many relationship between digital technology and industry shown in Figure 5, the 421 records of DS#1 were categorized. Excluding the 128 records not specific to one industry, the top 15 represented industries account for ninety-seven percent of the industry-specific records, as shown in Figure 6. The available literature shows a strong preference for adoption research in the education, consumer retail, finance, healthcare, and construction industries. Aerospace or defense was not specifically represented in the records, although industries relevant to defense were, such as transportation and logistics and telecommunications. Figure 6 also shows the number of digital technologies represented in the record counts. For example, the 70 records representing the education industry focused on the following 12 unique digital technologies: e-learning, mobile and wearable, social media, blockchain, cloud, learning management system, smart technology, artificial intelligence, augmented reality, model-based, information and communications technology, and big data. Similarly, each of the other industries had a variety of digital technologies that drive innovation.
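The two quantities reported per industry in Figure 6 (record count and number of distinct digital technologies) can be tallied from coded records as sketched below. The records here are hypothetical placeholders, not DS#1 data, and the `(industry, technology)` coding with `None` for non-industry-specific records is an assumption about how such records might be stored.

```python
from collections import Counter, defaultdict

# Hypothetical coded records: (industry, digital technology).
# None marks a record that is not specific to one industry.
records = [
    ("education", "e-learning"),
    ("education", "blockchain"),
    ("education", "e-learning"),
    ("healthcare", "artificial intelligence"),
    (None, "cloud"),
    ("construction", "model-based"),
]

# Exclude non-industry-specific records, as done for DS#1.
specific = [(ind, tech) for ind, tech in records if ind is not None]

records_per_industry = Counter(ind for ind, _ in specific)
techs_per_industry = defaultdict(set)
for ind, tech in specific:
    techs_per_industry[ind].add(tech)  # a set keeps only distinct technologies

for ind, n in records_per_industry.most_common():
    print(f"{ind}: {n} records, {len(techs_per_industry[ind])} distinct technologies")
```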

Figure 6.

Top 12 industries represented in Dataset #1.

Examining the data from a digital technology perspective shows that eighty-seven percent of the total records are represented by the top 25 digital technologies, as shown in Table 1. Fifty-nine digital technologies were represented overall. While many of these digital technologies could be applicable to the U.S. defense industry (e.g., Industry 4.0, smart factory, mobile/wearable), none of the records analyzed for adoption of digital technologies represented the U.S. defense industry specifically.
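Statements such as "the top 25 digital technologies represent eighty-seven percent of the records" are cumulative shares of the most frequent categories. A minimal sketch of that calculation follows; `top_k_share` is a hypothetical helper and the counts are invented for illustration, not taken from Table 1.

```python
from collections import Counter


def top_k_share(counts: Counter, k: int) -> float:
    """Fraction of all records covered by the k most frequent categories."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no records to summarize")
    return sum(n for _, n in counts.most_common(k)) / total


# Hypothetical technology counts for illustration only (not the DS#1 data).
tech_counts = Counter({"cloud": 40, "blockchain": 25, "mobile/wearable": 20,
                       "virtual reality": 10, "big data": 5})
for k in (1, 3, 5):
    print(k, f"{top_k_share(tech_counts, k):.0%}")
```

Applied to the actual DS#1 technology counts, `top_k_share(counts, 25)` would be the quantity behind the figure quoted above.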

Table 1.

Most represented digital technologies.

Owing to the dynamic nature of the digital revolution, it is expected that the list of represented digital technologies will continue to evolve and transform as new innovations emerge and research interests change.

2.4. Step 5 Analysis of Dataset #2

Considerable research exists on adoption theories and models and their origins, effectiveness, evolution, strengths, and weaknesses. The SLR focused on identifying a comprehensive set of adoption theories and models from which a list of adoption factors can be generated, analyzed, and categorized for use in assessing methods to accelerate adoption. From the 61 records included in DS#2 (Figure 4), 22 adoption theories/models were identified, reviewed, and summarized in Table 2.

Table 2.

Summary of adoption theories and models and their adoption factors.

The adoption factors selected in the fourth column of Table 2 are explicitly discussed in the adoption theories/models cited. Since adoption theories are often extended or improved over time, there is some duplication and similarity in the adoption factors identified. Additional details on the origin and context of the adoption factors can be seen in Appendix A. A glossary of all adoption factor terms was created to facilitate further analysis in addressing RQ#2. This glossary is available as supplementary materials (DOI: 10.5281/zenodo.10648083).
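Because extended theories reuse factors from their predecessors, counting unique adoption factors requires merging repeated terms across theories. The sketch below shows one simple way to do this by normalizing spelling and case; the `factors_by_theory` mapping is an abridged, illustrative excerpt using well-known TAM, TAM2, and UTAUT factor names, not a reproduction of Table 2, and the normalization rule is an assumption. It only merges spelling variants; near-synonyms need a glossary-based mapping.

```python
import re


def normalize(term: str) -> str:
    """Case-fold, treat '&' as 'and', and collapse punctuation and whitespace."""
    term = term.lower().replace("&", " and ")
    return " ".join(re.findall(r"[a-z0-9]+", term))


# Illustrative excerpt of a theory -> factors mapping (not the full Table 2).
factors_by_theory = {
    "TAM": ["Perceived usefulness", "Perceived ease of use"],
    "TAM2": ["Perceived Usefulness", "Subjective norm", "Image"],
    "UTAUT": ["Performance expectancy", "Effort expectancy", "Social influence",
              "Facilitating conditions"],
}

# Map each normalized factor to the theories that use it.
unique_factors = {}
for theory, factors in factors_by_theory.items():
    for f in factors:
        unique_factors.setdefault(normalize(f), []).append(theory)

print(len(unique_factors))
```

Keeping the list of source theories per factor preserves provenance, which is useful when tracing a factor back to its originating model.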

3. Findings and Discussion (Step 6)

Using the analysis performed on DS#1 in Step 4 of the RIP, and the SLR on DS#2 in Step 5 of the RIP, we discuss findings in the context of the two research questions in the following sections.

3.1. Research Question #1

Research question #1 asks what gaps exist in existing research relative to the adoption of digital innovations leading to digital engineering and digital transformation within the U.S. defense industry. Five gaps are identified and discussed, forming the basis for future research opportunities.

- Lack of Adoption Research Supporting the U.S. Defense Industry: Few scholarly articles exist in the public domain on the adoption of digital innovations within the U.S. defense industry. DS#1 did not contain any research articles that directly addressed adoption of digital technology in the aerospace and defense industry. Numerous digital innovation presentations and strategies have been approved for general release by the U.S. DoD; however, no corresponding adoption-related literature has been found. Additionally, considerable media attention has been paid to successful engineering entities that utilize digital innovations (e.g., SpaceX, Boeing, Apple), but adoption-related literature on these entities is sparse. This scarcity of adoption-related literature, both for the defense industry and for digitally innovative engineering entities, is likely due to the privacy and secrecy requirements of these entities. This is understandable, given the profound effects of digital innovation on competitiveness and national security.

- Lack of Adoption Research on Digital Engineering Technologies and Innovations: Adoption research is sparse for many digital engineering innovations, such as digital threads, digital twins, model-based systems engineering, continuous integration and delivery, and engineering analytics. This provides fertile ground for additional research, particularly in the U.S. defense sector, which has a well-defined and well-documented strategy for digital engineering transformation [3]. Interestingly, computer-aided design (CAD), computer-aided engineering (CAE), and DevOps are prerequisites for digital engineering transformation, yet they are minimally represented in the literature. By contrast, research is increasingly being conducted on cloud infrastructure, blockchain, Industry 4.0, smart manufacturing, and virtual reality. It is unclear why some digital engineering technologies and innovations receive greater attention from researchers than others. A framework for model-based systems engineering (MBSE) adoption was published by the Systems Engineering Research Center (SERC) [60]. The SERC MBSE adoption framework is based on insights from a survey of obstacles and enablers to MBSE adoption, which it maps to the seven criteria categories for performance excellence of the Baldrige Excellence Framework®: strategy, leadership, operations, workforce, customers, measurement and analysis, and results [61]. This paper further contributes to SERC’s research by providing a comprehensive literature review on adoption and making recommendations for further research in the discussion of RQ#2.

- Lack of a Common Glossary and Ontology of Adoption Factors: The theoretical models in the literature identify 178 determinants of adoption; however, for practical reasons, individual adoption studies use only a small fraction of these. While many of the terms used are unique, some differ only in nuance or are synonyms. For example, top management support, leadership, superior’s influence, championship, and presence of champions differ only in nuance. It is left for future research to advance the use of a common glossary of adoption factor terms as the basis for a common ontology. A standardized ontology and common glossary can simplify future adoption research. This is important because researchers increasingly combine theoretical models and continue to coin unique terms for variations of the original adoption factors.

- Lack of Assessment Models for Digital Transformation and Digital Engineering: In the digital age, digital technologies and digital innovations continuously emerge, creating a vast array of potential innovation areas. Adoption research focuses primarily on a single digital innovation or digital technology and not on digital transformation within an entity or digital engineering in general. Digital engineering and transformation rely on multiple digital technologies interconnected in a digital engineering ecosystem to radically transform and improve capabilities. As digital transformation and digital engineering are relatively new concepts in scholarly literature [62], there are no models currently available for assessing strategic adoption leading to these digital objectives.

- Lack of Implementation Frameworks and Actionable Guidance: “One of the most common criticisms of [adoption models] has been the lack of actionable guidance to practitioners” [63]. Adoption research should ideally inform the design of enterprise strategies and implementation frameworks. “While existing literature demonstrates how a strategy for digital transformation can be developed, we know little about how it is implemented” [64]. Further research on implementation frameworks and solutions to accelerate the adoption of digital innovations is required. “Well articulated models, grounded in research and literature, are the most potent kinds of frameworks, yielding clear and lasting outcomes” [65]. Further research into the design and evaluation of implementation frameworks and their effects on strategic adoption will provide actionable guidance to practitioners. Unlike the SERC adoption research, which leverages the Baldrige Framework for Performance Excellence [61], the authors identify alternatives for applying adoption research to many different implementation frameworks in the discussion of RQ#2.
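The glossary and ontology gap above concerns terms such as top management support, leadership, and presence of champions that overlap in meaning. A minimal sketch of how a common glossary could map variant terms onto canonical concepts is shown below. The synonym table and its groupings are hypothetical assumptions, not a validated ontology; whether such terms should be merged, or only linked as related concepts, is exactly what a common ontology would need to decide.

```python
# Hypothetical synonym table: variant factor term -> canonical glossary concept.
# The groupings are illustrative assumptions, not a validated ontology.
CANONICAL = {
    "top management support": "leadership support",
    "leadership": "leadership support",
    "superior's influence": "leadership support",
    "championship": "innovation champion",
    "presence of champions": "innovation champion",
}


def to_canonical(term: str) -> str:
    """Map a factor term to its canonical concept; unknown terms pass through unchanged."""
    key = " ".join(term.lower().replace("\u2019", "'").split())
    return CANONICAL.get(key, key)


def group_by_concept(terms):
    """Group the original terms under their canonical concept."""
    groups = {}
    for t in terms:
        groups.setdefault(to_canonical(t), []).append(t)
    return groups


print(group_by_concept(["Top management support", "Leadership",
                        "Presence of champions", "Trust"]))
```

Passing unknown terms through unchanged keeps the mapping conservative: a term is only merged once someone has explicitly recorded it as a synonym.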

In summary, these five gaps support the need for additional research in addressing the difficulties experienced within the U.S. defense industry in achieving the promises of digital engineering and digital transformation. They also highlight the fundamental shift in adoption research that we believe is necessary to address adoption in a strategic manner. This strategic shift is being necessitated by the Fourth Industrial Revolution, which is rapidly introducing new digital technologies and innovations that need to be interconnected to radically improve an entity.

3.2. Research Question #2

RQ#2 asks whether adoption factors found in the literature can be useful in the development of a research instrument for assessing strategic adoption. From the SLR in Step 5 of the RIP, 22 adoption theories/models were reviewed, representing a total of 178 unique adoption factors. To answer RQ#2, the adoption factors were categorized into 12 strategic adoption influencers (SAIs). We hypothesize that these SAIs, being derived from the vast body of adoption research, can be useful in the development of a research instrument for assessing strategic adoption within the U.S. defense industry and across multiple industries.

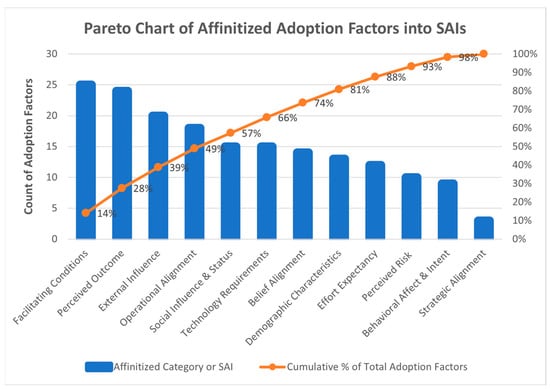

A systems-thinking approach known as affinity diagramming [66] was used as the categorization method. The benefit of this method is that it utilizes convergent thinking, which is congruent with survey design and the development of a future research instrument. The affinity-diagramming method was used to develop 12 categories of strategic adoption influencers by logically binning the 178 adoption factors, as shown in Figure 7. A glossary of adoption factor terms was available during the affinity diagramming process. As each category emerged, a category label and a general question were formulated for it to ensure that each adoption factor in that category had the potential to contribute to its assigned grouping. The final affinity diagram is presented in Table 3, and the affinitized categories shown in column two are the strategic adoption influencers. We intend to validate that these categories are relevant to strategic adoption leading to DE and DT with a larger stakeholder group in future research.
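Affinity diagramming itself is a human judgment process, but its outcome (each factor placed in exactly one category, with per-category counts as in Figure 7) can be recorded and checked mechanically. The sketch below assumes a simple factor-to-category dictionary; `affinity_counts` is a hypothetical helper, and the four bins shown are invented for illustration, whereas the actual assignments are those in Table 3.

```python
from collections import Counter


def affinity_counts(assignment: dict[str, str], factors: list[str]) -> Counter:
    """Tally factors per category after checking every factor was binned exactly once."""
    if len(set(factors)) != len(factors):
        raise ValueError("duplicate factor terms; deduplicate against the glossary first")
    factor_set = set(factors)
    missing = [f for f in factors if f not in assignment]
    unknown = [f for f in assignment if f not in factor_set]
    if missing or unknown:
        raise ValueError(f"unbinned: {missing}; not in factor list: {unknown}")
    return Counter(assignment[f] for f in factors)


# Hypothetical bins for four factors (the actual assignments are in Table 3).
factors = ["facilitating conditions", "competitive pressure",
           "perceived usefulness", "performance expectancy"]
assignment = {
    "facilitating conditions": "facilitating conditions",
    "competitive pressure": "external pressure and influence",
    "perceived usefulness": "perceived outcomes & value",
    "performance expectancy": "perceived outcomes & value",
}
print(affinity_counts(assignment, factors).most_common())
```

Because a dictionary holds one value per key, it enforces single-category membership by construction; the explicit checks catch factors that were never binned or bins that reference unknown terms.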

Figure 7.

Count of adoption factors affinitized into categories.

Table 3.

Adoption influencers for DE/DT: affinitized adoption factor categories.

The potential relationship between each strategic adoption influencer and either the individual, the entity, or the environment is shown in the Relationship to Entity columns of Table 3 with check marks. This provides additional insight into the SAIs and the extent of their influence. For example, the SAI facilitating conditions describes the conditions within the entity alone, whereas external pressure and influence describes the conditions in the environment alone. Other SAIs may have to do with the individual or a combination of individual and entity. Understanding these relationships based on the origin of the affinitized adoption factors in relation to their adoption theory/model can assist with the development of future survey instruments.
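The check-mark columns in Table 3 amount to a set of scopes per SAI, which maps naturally onto bit flags. The sketch below uses Python's `enum.Flag`. The two single-scope SAIs come from the text above; the combined individual-and-entity entry is a hypothetical placeholder, and the remaining SAIs would be filled in from Table 3.

```python
from enum import Flag, auto


class Scope(Flag):
    """Relationship-to-entity columns of Table 3 (one flag per check-mark column)."""
    INDIVIDUAL = auto()
    ENTITY = auto()
    ENVIRONMENT = auto()


# Scopes stated in the text; other SAIs would be filled in from Table 3.
sai_scope = {
    "facilitating conditions": Scope.ENTITY,
    "external pressure and influence": Scope.ENVIRONMENT,
    # Hypothetical placeholder for an SAI spanning the individual and the entity.
    "hypothetical SAI": Scope.INDIVIDUAL | Scope.ENTITY,
}


def sais_touching(scope: Scope) -> list[str]:
    """SAIs whose check marks include the given scope (a zero flag is falsy)."""
    return [name for name, s in sai_scope.items() if s & scope]


print(sais_touching(Scope.ENTITY))
```

A survey designer could, for example, use such a query to decide which items are addressed to individual respondents and which describe the entity or its environment.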

3.3. Strategic Adoption Influencers and Digital Transformation (DT)

Using the principles of systems thinking and the affinity diagramming method [66], our research introduces a simplified set of strategic adoption influencers. We created these SAIs to support the development of a future research instrument that can provide actionable implementation guidance leading to digital engineering and digital transformation in engineering entities supporting the U.S. defense industry. Figure 8 starts with the relationships identified in Figure 1 and overlays the influence of SAIs for both current research and future research opportunities related to implementation guidance. The green boxes and their connecting arrows indicate the flow of digital innovation, and the blue ovals represent the implementation frameworks needed to support this flow. The gradient blue and green shapes represent digital transitions that are currently occurring as part of the digital revolution. For example, traditional strategic planning is now being discussed in the context of strategic renewal, and traditional ICT is being supplanted by digital technology. In the following sections, Figure 8 provides context for further discussion on the relationship of SAIs to future research.

Figure 8.

Strategic adoption influencers’ relationship to DE/DT.

3.4. Strategic Renewal vs. Strategic Planning

Strategic renewal is the “process that allows organizations to alter their path dependence by transforming their strategic intent and capabilities” [67]. In the digital transformation context, this implies a level of strategic planning that significantly changes the business model through digital innovation. “Firms should ensure close integration of digital technology diffusion with their strategy function and processes to ensure they inform and reinforce each other” [68]. These ideas are supported by Hess et al.’s conceptual framework for formulating a digital transformation strategy, which has four key dimensions: (1) the use of technologies, (2) changes in value creation, (3) structural changes, and (4) financial aspects [69]. The SAIs strategic alignment, operational alignment, facilitating conditions, perceived outcomes & value, and technology requirements & ecosystem recognize the importance of these dimensions. However, there is an inherent limitation in that the adoption factors on which they are based focus on individual technologies and innovations rather than on significant structural changes. By generalizing the adoption factors into strategic adoption influencers, these limitations can be mitigated. For example, the technology requirements & ecosystem adoption influencer considers the structural changes and interconnectedness needed in a digital ecosystem.

3.5. Strategic Adoption Influencers and Implementation Frameworks

To accelerate the strategic adoption of digital innovations, implementation frameworks must evolve to better support digital transformation. Derived from a vast and mature body of scholarly adoption research, strategic adoption influencers can be used to guide the evolution of implementation frameworks (e.g., Maturity Model, Portfolio Management, Project Management, Change Management, Activity Management, Performance Management). By examining implementation frameworks in the context of SAIs, opportunities to accelerate DE and DT can be exploited. For example, a maturity model framework can be used to build external pressure and influence by benchmarking the DE maturity of the entity against competing entities [70]. Similarly, portfolio management can publish a catalog of strategically important and mature DE solutions that lowers perceived risk and effort expectancy, thereby improving adoption and accelerating DE. As these two examples illustrate, viewing implementation frameworks in the context of SAIs opens many opportunities to improve adoption. Further research is needed to establish the significance SAIs may have in accelerating adoption leading to DE/DT.

3.6. Limitations and Future Research

Despite being systematically developed, our research is not without limitations. Firstly, the adoption factors used were drawn from adoption theories/models that have not always produced consistent results regarding their significance in empirical studies of specific technologies. The affinity diagramming methodology mitigates this inconsistency by aggregating many adoption factors into strategic categories, thereby reducing the influence of any single adoption factor.
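The dilution argument above can be made concrete with a small sketch. It assumes, purely for illustration, that an SAI is scored as the equal-weight mean of its member adoption-factor scores on a 0 to 1 scale; this scoring rule and the names `sai_score` and `max_single_factor_swing` are hypothetical and are not part of the study's method. Under that assumption, moving any one factor from one extreme to the other shifts the SAI score by at most 1/n, where n is the number of factors in the category.

```python
from statistics import mean
from typing import Dict


def sai_score(factor_scores: Dict[str, float]) -> float:
    """Hypothetical scoring rule: an SAI score is the equal-weight mean of its factor scores."""
    return mean(list(factor_scores.values()))


def max_single_factor_swing(
    factor_scores: Dict[str, float], low: float = 0.0, high: float = 1.0
) -> float:
    """Largest change in the SAI score when any one factor moves from `low` to `high`."""
    if not factor_scores:
        raise ValueError("an SAI needs at least one adoption factor")
    swings = []
    for name in factor_scores:
        at_low = {**factor_scores, name: low}    # copy with this factor at the minimum
        at_high = {**factor_scores, name: high}  # copy with this factor at the maximum
        swings.append(sai_score(at_high) - sai_score(at_low))
    return max(swings)
```

For example, with ten hypothetical factors all scored 0.5, `max_single_factor_swing` returns roughly 0.1, so a single inconsistent factor can move the aggregate by only a tenth of the scale. Unequal weights would change this bound.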

Secondly, the affinity diagramming process itself may produce different categorizations depending on the participants. Since the SAIs are a direct result of this categorization, they should be considered preliminary until validated in future research.

Thirdly, because digital technologies and digital innovations are constantly evolving, SAIs and their significance are likely to change over time as entities evolve and individuals’ perceptions of DE/DT change. Given the intent to use SAIs in developing implementation guidance, we recommend that future research benchmark SAI significance periodically to account for these expected trends, similar to how Prosci Inc. monitors change management factors in its biannual survey [71].

Finally, whether there are industry-specific variations in strategic adoption influencers is an unexplored area of research. As more research becomes available on adoption leading to DE/DT, future research could examine any industry-specific tendencies of the SAIs. This could confirm the universality of the SAIs or make the argument for more industry-specific SAIs.

4. Conclusions

Adoption research is mature, particularly regarding ICT. Numerous adoption theories and models have been developed and researched over the past 50 years, and thousands of adoption studies representing various industries, technologies, innovation areas, and demographics have been conducted. While much of the empirical research has focused on a single technology-based innovation, we know that the Fourth Industrial Revolution, i.e., the digital revolution, requires the strategic integration of multiple innovations across an entire digital ecosystem [70]. We also know that an almost unlimited number of innovations are possible, as “Moore’s and Metcalf’s laws govern the broadening availability and commoditization of digital technologies” [72], and that entities can use numerous approaches to achieve digital transformation [73]. As such, there is a need for a new type of adoption research that focuses on strategic adoption and guides the adoption choices of digital innovations in accordance with strategic renewal. This new research must be grounded in implementation frameworks, and adoption research can help these frameworks adapt to the changes brought about by the digital revolution.

Our research contributes by introducing the concept of strategic adoption leading to digital transformation and by suggesting ways to leverage the existing body of research to accelerate strategic adoption. First, we examined a large dataset of adoption studies and characterized its industry focus and gaps. We found that the defense industry is not well represented in the currently available adoption research, yet it may benefit from the theories/models being applied across many industries. Second, we conducted an SLR of adoption-related theories/models and compiled a comprehensive list of adoption factors. We then categorized these adoption factors into strategic adoption influencers. Third, we discussed how SAIs can be used to better adapt implementation frameworks to the digital age and, in doing so, accelerate the strategic adoption of digital innovations leading to DE/DT. We hope our recommendations for leveraging the large body of adoption research for this purpose provide novel insights into accelerating strategic adoption across many industries, including the U.S. defense industry.

Supplementary Materials

The following supporting information can be downloaded at http://doi.org/10.5281/zenodo.10648083 (accessed on 11 February 2024): Glossary of Adoption Factor Terms, Version v1.

Author Contributions

Conceptualization, J.M.C.; methodology, J.M.C. and S.V.B.; validation, J.M.C. and S.V.B.; formal analysis, J.M.C.; investigation, J.M.C.; data curation, J.M.C.; writing—original draft preparation, J.M.C.; writing—review and editing, J.M.C. and S.V.B.; visualization, J.M.C.; supervision, S.V.B.; project administration, J.M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material. The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

The authors wish to acknowledge the RTX Employee Scholar Program, which provides funding toward the primary author’s education.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

This appendix provides additional details beyond those shown in Table 2 of the SLR performed in Step 5 of the RIP. These details include additional context around the origins of the theory/model, additional citations, and, where appropriate, definitions of adoption factor terms. For ease of reading, adoption factor terms from the adoption theories/models cited are italicized.

Appendix A.1. Diffusion of Innovation (DOI) Theory

The DOI theory was first published in a book titled Diffusion of Innovations in 1962 by Everett M. Rogers [22] and has evolved over the decades. The fourth [23] and fifth [9] editions are the most frequently cited. Diffusion is defined as “how innovations, defined as ideas or practices that are perceived as new, are spread” [74].

DOI has four main elements for adoption: characteristics of the decision-making unit (social system), perceived characteristics of the innovation, communication channels, and time. It is often represented as an S-shaped adoption curve, with the percentage of adopters on the y-axis and time on the x-axis, illustrating a critical point at which adoption accelerates before tapering off. “Innovations that are perceived as (a) relatively advantageous (over ideas or practices they supersede), (b) compatible with existing values, beliefs, and experiences, (c) relatively easy to comprehend and adapt, (d) observable or tangible, and (e) divisible (separable) for trial, are adopted more rapidly” [74]. Moore and Benbasat’s article titled Development of an Instrument to Measure the Perceptions of Adopting an Information Technology Innovation summarizes DOI adoption factors as follows: relative advantage, compatibility, complexity, trialability, and observability [75].
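The S-shaped curve described above is commonly approximated with a logistic function. The sketch below assumes that form; the growth rate `k`, the inflection time `t0`, and the saturation level are hypothetical parameters, not values from Rogers [9] or from this study. `cumulative_adoption` gives the share of the social system that has adopted by time t, and `new_adopters` gives the share adopting in each interval, which peaks around the inflection point where adoption is fastest.

```python
import math


def cumulative_adoption(t: float, k: float = 1.0, t0: float = 5.0, saturation: float = 1.0) -> float:
    """Share of the social system that has adopted by time t (logistic S-curve).

    k: hypothetical growth rate; t0: hypothetical inflection point (fastest adoption);
    saturation: upper bound on the adopting share.
    """
    return saturation / (1.0 + math.exp(-k * (t - t0)))


def new_adopters(t: float, dt: float = 1.0, **params: float) -> float:
    """Share of the social system adopting between t and t + dt."""
    return cumulative_adoption(t + dt, **params) - cumulative_adoption(t, **params)


if __name__ == "__main__":
    # Slow start, rapid take-off near t0, then tapering toward saturation.
    for year in range(11):
        print(f"t={year:2d}  cumulative={cumulative_adoption(year):.3f}  new={new_adopters(year):.3f}")
```

A logistic curve is only one of several functional forms used for diffusion curves; the qualitative point is that the per-period increment rises, peaks near the critical point, and then declines.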

Tarhini et al.’s article A Critical Review of the Theories and Models of Technology Adoption and Acceptance in Information Systems Research is a more recent DOI review [76]. Additional DOI adoption factors identified in this review include the following: previous practice, felt needs/problems, innovativeness, norms of the social system, socioeconomic characteristics, personality variables, and communication behavior.

Appendix A.2. Theory of Reasoned Action (TRA)

Rooted in social psychology, the TRA was introduced in 1967 by Martin Fishbein [24] and later extended by Icek Ajzen [25].

The theory has three main elements of adoption: attitude toward act or behavior, subjective norm, and behavioral intention [26]. Behavioral intention is a key tenet of this theory and differs from behavior itself. Behavioral intention is influenced by attitudes and subjective norms. The model assumes that behavior cannot be predicted unless intention is known. The limitations of the theory are widely known [76]; however, the TRA has served as the basis for other theories, such as the theory of planned behavior [32], the theory of attitude and behavior [30], and the technology acceptance model [77].

Appendix A.3. Social Cognitive Theory (SCT)

First published by Albert Bandura in 1977 in a journal article entitled Toward a Unifying Theory of Behavioral Change [27], SCT has theoretical roots in social learning theory dating from earlier decades [77]. Bandura traces its evolution in Chapter 2 of the 2005 edition of Great Minds in Management: The Process of Theory Development [78]. SCT has been widely used in the study of human psychology and behavior [79], including efforts to improve organizational effectiveness [80].

SCT has three main elements of adoption: personal factors (e.g., cognitive, affective, and biological), environmental factors, and behavior [28]. The model relies on reciprocal feedback among all three elements. SCT posits that behavior is driven by self-efficacy, outcome expectancies (both personal and performance-related), and goals [80]. Self-efficacy is the cornerstone of this theory and is embedded throughout SCT research. It is defined as a person’s belief in his/her own capability to produce a desired outcome.

Appendix A.4. Theory of Attitude and Behavior (TAB)

The TAB was introduced by H. C. Triandis in 1980 [30]. The determinants of adoption behavior utilized in TAB are facilitating conditions and intentions, with intentions driven by social factors, affect, and perceived consequences. Affect is an imprecise term that refers to “the feelings of joy, elation, pleasure, or depression, disgust, displeasure, or hate associated with an individual” [30].

Appendix A.5. Upper Echelon Theory (UET)

UET was introduced by Donald C. Hambrick and Phyllis A. Mason in 1984 [31]. It uses the term strategic choice, a general term associated with general management outcomes, whereas the term strategic adoption introduced herein focuses on achieving digital transformation outcomes. The determinants of strategic choice are based on the theory that the characteristics of an organization’s top management (i.e., upper echelons) influence strategic choice. The determinants are divided into two categories: (1) psychological, which includes cognitive base and values, and (2) observable, which includes the demographic characteristics of age, functional tracks, other career experiences, education, socioeconomic roots, financial position, and group characteristics.

In a 2011 study of small and medium enterprises, the age composition, experience, and gender sensitivity factors of senior management teams were shown to “have significantly strong power of predicting the extent of adoption”, while group homogeneity and education had negative or weak impacts [81].

Appendix A.6. Theory of Planned Behavior (TPB)

The TPB was introduced in 1985 by Icek Ajzen as an extension of the TRA [32]. A complete and commonly referenced version was published in 1991 [33]. TPB adds perceived behavioral control to the TRA model and thus overcomes the TRA’s limitation of a relatively fixed construct of attitude [82]. Perceived behavioral control is defined as the perceived “ease or difficulty of performing a behavior” [33].

In 2013, Ajzen and Sheikh responded to critiques that the TPB may not adequately account for anticipated affect. They “were able to show that when all variables are assessed either with respect to performing the behavior or with respect to avoiding the behavior, anticipated affect makes no independent contribution to the prediction of intentions” [83]. In 2015, Ajzen again responded to various critiques of the TPB, with a full discussion of the impact of behavior change interventions [84].

Appendix A.7. Structuration Theory (ST) and Adaptive Structuration Theory (AST)

ST was introduced by Anthony Giddens in his book The Constitution of Society: Outline of the Theory of Structuration [34]. AST was later introduced in 1994 by DeSanctis and Poole [35] to address “perceived weaknesses of previous structuration theories, which were seen as giving only weak consideration to IT” [85].

In AST, social interaction influences decision outcomes (i.e., adoption), and includes appropriation of structures and decision processes as factors. There are five other determinants that influence social interactions: structure of advanced information technology (e.g., structural features, leadership, efficiency, conflict management, atmosphere), other sources of structure (e.g., task, organization environment), group’s internal system (e.g., styles of interacting, knowledge and experience, perceptions), emergent sources of structure, and new social structures (e.g., rules, resources). Time is also a significant factor in ST and AST.

Lewis and Suchan conducted a case study using ST to discuss the adoption of a global transportation network by the U.S. DoD [86]. “It is reasonable to believe that Structuration Theory based concepts and the qualitative case study methodology are promising for tackling the long existing difficulties in the traditional IT/IS adoption research” [87].

Appendix A.8. Technology Acceptance Model (TAM/TAM2/TAM3)

The TAM was introduced in 1989 by Fred D. Davis as a variant of the TRA [36]. It was “developed in [the] information technology field, while TRA and TPB developed in the psychology field, so that it is less general than TRA and TPB” [77]. The TAM includes the following determinants of adoption: behavioral intention, attitude toward behavior, perceived usefulness, perceived ease of use, and external variables. It “has consistently outperformed the TRA and TPB in terms of explained variance across many studies” [88]; however, it has limitations because of its simplicity.

The TAM was extended by Venkatesh and Davis in 1996 and 2000 to include the social influence processes of subjective norm, voluntariness, and image, as well as the cognitive instrumental processes of job relevance, output quality, and result demonstrability [38,89]. The resulting model, TAM2, explained significantly more variance than the TAM [76].

The TAM was again extended in 2008 by Viswanath Venkatesh and Hillol Bala, resulting in TAM3 [39]. Leveraging the TAM and TAM2, TAM3 includes the following additional determinants of perceived ease of use: computer self-efficacy, perceptions of external control, computer anxiety, computer playfulness, perceived enjoyment, and objective usability. The most important aspect of this study was Venkatesh and Bala’s recognition of the gap in practical interventions. Their key tenet “is that unless organizations can develop effective interventions to enhance IT adoption and use, there is no practical utility of our rich understanding of IT adoption”. They go on to say that “there is little or no scientific research aimed at identifying and linking interventions with specific determinants of IT adoption” [39].

A literature review of the development and extension of TAM and its future was presented in Joseph Bradley’s article If We Build It, They Will Come? The Technology Acceptance Model [90].

Appendix A.9. Technology-Organization-Environment (TOE) Model

The TOE model was introduced in 1990 by Louis G. Tornatzky and Mitchell Fleischer in their book The Processes of Technological Innovation [40]. Jeff Baker distills the TOE model and provides an overview and literature review of TOE in his chapter The Technology-Organization-Environment Framework [91].

As its name implies, the TOE model includes three contexts. The technological context includes availability and technology characteristics as determinants of adoption. The organizational context includes formal and informal linking structures, communication processes, size, and slack. The environmental context includes industry characteristics and market structure, technology support infrastructure, and government regulation. Baker’s literature review identified 11 studies in which the TOE model was utilized for extracting the statistically significant adoption factors [91]. Using these data, the following additional adoption factors were included in the technological context: perceived barriers, compatibility, complexity, perceived direct benefits, relative advantage, trialability, technology readiness, technology competence, and technology integration. For the organizational context, the following additional factors were identified: satisfaction with existing systems, firm size, strategic planning, infrastructure, top management support, championship, perceived financial cost, perceived technical competence, presence of champions, organizational readiness, employee’s IS knowledge, firm scope, financial resources, and financial commitment. For the environmental context, the following additional factors were identified: competitive pressure, regulatory environment, regulatory support, role of IT, management risk position, adaptable innovations, perceived industry pressure, perceived government pressure, performance gap, and market uncertainty.

Appendix A.10. Model of PC Utilization (MPCU)

The MPCU was introduced by Ronald L. Thompson, Christopher A. Higgins, and Jane M. Howell in 1991 [41]. It has its roots in the TRA [25] and the TAB [30]. In the MPCU, the significant determinants of adoption are complexity, job fit, long-term consequences, and social factors.

Appendix A.11. Motivational Model (MM)

“Since the 1940s, many theories have resulted from motivation research” [77]. Motivational theory was utilized by Fred D. Davis, Richard P. Bagozzi, and Paul R. Warshaw to investigate the effect of enjoyment on usage (a proxy for adoption). It identifies both the intrinsic and extrinsic classes of motivation. “Within this dichotomy, perceived usefulness is an example of extrinsic motivation, whereas enjoyment is an example of intrinsic motivation” [42]. In their empirical study, “usefulness, enjoyment, ease of use, and quality account for more than eighty-five percent of the variance”, and “both usefulness and enjoyment have significant effects on usage intentions”, although usefulness “was roughly four to five times more influential than enjoyment in determining [behavioral] intentions” [42].

Appendix A.12. Task-Technology Fit (TTF) Model

TTF was introduced in 1995 by Dale Goodhue and Ronald Thompson. Although they focused on the Technology-to-Performance Chain (TPC) and the impact of information technology on individual performance, the model they developed sought to evaluate the influence of TTF on utilization (i.e., adoption).

The eight factors of TTF evaluated with regard to their effects on adoption are data quality, locatability, authorization, compatibility, timeliness, reliability, ease of use/training, and relationship. Goodhue and Thompson concluded that the “evidence of the causal link between TTF and utilization was more ambiguous”, although TTF continues to be used in adoption research [43]. Brent Furneaux’s article Task-Technology Fit Theory: A Survey and Synopsis of the Literature provides an overview of the literature on TTF [92].

Appendix A.13. Institutional Theory (INST) and Process-Institutional-Market-Technology (PIMT) Framework

INST is a broad and complex field of research; thus, it cannot be entirely attributed to one person, although Scott’s textbook Institutions and Organizations is widely cited as it pertains to the use of INST in studying technology adoption [44]. In 2009, Wendy Currie examined the use of INST in information systems (IS) research. Her objective was to “demonstrate that IS researchers need to engage more fully with the INST literature as the body of work is conceptually rich and is more appropriately used to analyze and understand complex social phenomena” [93].

The INST factors include coercive, mimetic, and normative forces. Coercive pressures are defined as “both formal and informal pressures exerted on social actors to adopt the same attitudes, behaviors, and practices, because they feel pressured”; normative pressures are defined as “social actors voluntarily, but unconsciously, replicating other actors’ same beliefs, attitudes, behaviors, and practices”; and mimetic pressures are defined as “voluntarily and consciously copying the same behaviors and practices of other high status and successful actors” [94].

Janssen et al. introduced the Process, Institutional, Market, Technology (PIMT) framework [46] based on the institutional frameworks of Koppenjan and Groenewegen [45]. In PIMT, the following factors affect adoption: institutional factors of norms and cultures, regulations and legislations, and governance; market factors of market structure, contracts and agreements, and business process; technical factors of information exchange, distributed ledger, and shared infrastructure; and change factors of change strategies and change instruments.

Appendix A.14. Decomposed TPB (DTPB) and Augmented TAM (A-TAM)

In 1995, Shirley Taylor and Peter A. Todd introduced the DTPB [48] and A-TAM [95]. The authors believed that “the traditional forms of the TRA and the TPB may understate the complex interrelationships between belief structures and the determinants of intention” [47]. The DTPB decomposes the TPB factors of attitude, subjective norm, and perceived behavioral control. Empirical studies have indicated that relative advantage and complexity influence attitude, normative influences affect attitude and subjective norm, and efficacy and facilitating conditions affect perceived behavioral control. A-TAM combines the influencing factors of TPB [32] with perceived usefulness and perceived ease of use from TAM [36].

Appendix A.15. Lifecycle Behavior Model (LBM)

The LBM was introduced in 1997 by Charles E. Swanson, Kenneth J. Kopecky, and Alan Tucker. It is a discrete-time model based on the assumption that “finite-lived individuals choose consumption, leisure, work hours and adoption time over the course of their lifetimes” in four distinct phases. The empirical study associated with the model “suggests that older workers are more productive, even though they are disinclined to adopt new technology”; therefore, “when members of the oldest worker cohort spend no time adopting, a wave of technological innovation will have a smaller effect on aggregate productivity if there are relatively more members of that oldest cohort” [49]. Thus, the determinant of adoption in this model is age (lifecycle phase). The authors noted many limitations of the study, and research in this area continues. In 2009, Botao Yang extended the LBM by introducing a finite-horizon dynamic lifecycle model that includes the effects of adoption costs and total expected discounted benefits [96].

Appendix A.16. Fit-Viability Model (FVM)

In 2001, Anthony Tjan introduced the concept of a fit-viability model to assist with portfolio management of Internet initiatives [50]. This concept was later used by researchers such as Ting-Peng Liang and Chih-Ping Wei to evaluate mobile commerce technology, extending the TTF model to include organizational viability in a theoretical framework [51].

In Tjan’s model, the determinants for assessing fit are alignment with core capabilities, alignment with other company initiatives, fit with organizational structure, fit with company’s culture and values, and ease of technical implementation, and the determinants for assessing viability are market value potential, time to positive cash flow, personnel requirement, and funding requirement. Liang and Wei identified three aspects for assessing viability: economic, organizational, and societal, where economic includes cost benefit, organizational includes user’s willingness and user’s ability to use the technology, and societal includes the maturity of the environment [51].

Appendix A.17. Practice Theory (PT) and Ecosystem Adoption of Practices over Time (EAPT)

“Practice theories constitute [...] a broad family of theoretical approaches connected by a web of historical and conceptual similarities” [97]. Davide Nicolini provides an in-depth look at contemporary practice theories in his book Practice Theory, Work, and Organization [97].

Herbjorn Nysveen, Per E. Pedersen, and Siv Skard proposed EAPT as an alternative view of technology adoption [53]. In the EAPT model, adoption is viewed from the standpoint of practice rather than technology. EAPT views adoption as a social and collective resource, emphasizing integrated collective activity rather than the actions of individuals. Additionally, it views adoption as a dynamic process rather than an event occurring at a single point in time. Nysveen et al. discussed the research design implications of the EAPT theoretical framework regarding three PT dimensions: object, subject, and temporality. The authors noted that PT is an emerging alternative view that can “complement traditional perspectives” [53]. Time and the ecosystem are the factors influencing adoption, and the authors noted that additional research is needed.

Appendix A.18. Unified Theory of Acceptance and Use (UTAUT/UTAUT2)

UTAUT was introduced in 2003 by Viswanath Venkatesh et al. [54]. At the time, Venkatesh et al. saw a need to consolidate and improve upon several theoretical models in the literature to explain user acceptance of new technology. They empirically compared eight models (TRA, TAM, MM, TPB, C-TAM-TPB (or A-TAM), MPCU, IDT (i.e., DOI), and SCT) and formulated the UTAUT. In their words, “there is a need for a review and synthesis in order to progress toward a unified view” [54].

The UTAUT has four key constructs: performance expectancy, effort expectancy, social influence, and facilitating conditions. Venkatesh et al. further clarified these four constructs by defining additional determinants and their origins. Performance expectancy includes perceived usefulness, extrinsic motivation, job fit, relative advantage, and outcome expectations. Effort expectancy includes perceived ease of use, complexity, and ease of use. Social influence includes subjective norm, social factors, and image. Facilitating conditions include perceived behavioral control, facilitating conditions, and compatibility.
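Because UTAUT consolidates constructs from earlier models, the mapping described above can be expressed as a simple lookup table. The sketch below encodes only the groupings listed in this paragraph; the helper names `utaut_construct_for` and `group_factors` are hypothetical and illustrate how factors from other models might be sorted under the four UTAUT constructs, with anything not listed reported as unmapped.

```python
from typing import Dict, List, Optional, Tuple

# The four UTAUT constructs and the root constructs each one consolidates,
# as summarized in the paragraph above (Venkatesh et al. [54]).
UTAUT_CONSTRUCTS: Dict[str, Tuple[str, ...]] = {
    "performance expectancy": (
        "perceived usefulness", "extrinsic motivation", "job fit",
        "relative advantage", "outcome expectations",
    ),
    "effort expectancy": ("perceived ease of use", "complexity", "ease of use"),
    "social influence": ("subjective norm", "social factors", "image"),
    "facilitating conditions": (
        "perceived behavioral control", "facilitating conditions", "compatibility",
    ),
}


def utaut_construct_for(root: str) -> Optional[str]:
    """Return the UTAUT construct that absorbs a root construct, or None if it is not listed."""
    key = root.strip().lower()
    for construct, roots in UTAUT_CONSTRUCTS.items():
        if key in roots:
            return construct
    return None


def group_factors(factors: List[str]) -> Dict[str, List[str]]:
    """Group root constructs by UTAUT construct; unlisted factors go under 'unmapped'."""
    groups: Dict[str, List[str]] = {}
    for factor in factors:
        construct = utaut_construct_for(factor) or "unmapped"
        groups.setdefault(construct, []).append(factor)
    return groups
```

For instance, `group_factors(["Image", "complexity", "trust"])` places image under social influence and complexity under effort expectancy, and reports trust as unmapped because UTAUT does not list it.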

Venkatesh et al. showed that the UTAUT is “able to explain seventy percent of the variance while the earlier theories were explaining only thirty to forty percent variance in the adoption behavior”; however, it continues to be “used more for theory-building” work due to “being overly complex, not being parsimonious in its approach and its inability to explain individual behavior” [82].

Michael Williams et al. conducted an analysis and systematic review of the literature on the UTAUT [98]. In 2012, Venkatesh et al. introduced an extension of the UTAUT known as UTAUT2 to include the consumer use context [55]. In UTAUT2, additional factors include hedonic motivation, price value, and experience and habit.

Appendix A.19. Value-Based Adoption Model (VAM)

In 2007, Hee-Woong Kim, Hock Chuan Chan, and Sumeet Gupta introduced a VAM for mobile Internet (M-Internet) by integrating adoption theory with “the theory of consumer choice and decision-making from economics and marketing research” [56]. Marketing research was used to include the “effects of price, brand and store information on buyers’ product evaluations” [99]; thus, the determinants of a consumer’s willingness to buy (i.e., adoption factors) were considered, making the model relevant to consumer adoption decisions.

Kim et al.’s article reviews previous research on perceived value and identifies perceived value as the driver of adoption intention. Perceived value has four determinants: usefulness, enjoyment, technicality, and perceived fee, with the first two being perceived benefits and the latter two being perceived sacrifices. Kim et al. concluded that “the findings demonstrate that consumers’ perception of the value of M-Internet is a principal determinant of adoption intention, and the other beliefs are mediated through perceived value” [56].
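The benefit and sacrifice structure of the VAM can be sketched as a simple additive index. This is a simplification under stated assumptions: Kim et al. estimated the relative effects of each belief empirically [56], whereas the equal default weights, the common rating scale, and the names `VamPerceptions` and `perceived_value` below are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class VamPerceptions:
    """Perception scores on a common scale (e.g., Likert means); the scale is an assumption."""
    usefulness: float     # perceived benefit
    enjoyment: float      # perceived benefit
    technicality: float   # perceived sacrifice
    perceived_fee: float  # perceived sacrifice


def perceived_value(p: VamPerceptions, w_benefit: float = 1.0, w_sacrifice: float = 1.0) -> float:
    """Hypothetical additive index: weighted perceived benefits minus weighted perceived sacrifices.

    The weights are placeholders, not the path coefficients reported by Kim et al.
    """
    benefits = p.usefulness + p.enjoyment
    sacrifices = p.technicality + p.perceived_fee
    return w_benefit * benefits - w_sacrifice * sacrifices
```

For instance, raising `perceived_fee` while holding the other scores constant lowers the index, mirroring the role of perceived sacrifices in the model.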

Appendix A.20. Model of Acceptance with Peer Support (MAPS)

The MAPS was introduced in 2009 by Tracy Ann Sykes, Viswanath Venkatesh, and Sanjay Gosain, who argued that “an individual’s embeddedness in the social network of the organizational unit implementing a new information system can enhance our understanding of technology use” [57].

Drawing from social network theory (SNT), the MAPS incorporates three constructs: coping, influencing, and individual-level technology adoption research. For the coping construct, the following two determinants were identified: network density and valued network density. For the influencing construct, the following two determinants were identified: network centrality and valued network centrality. For the individual-level technology adoption research construct, the following two determinants were identified: network centrality and valued network centrality.

Through an empirical study, Sykes et al. provided evidence that the MAPS explains a significant amount of variance in accounting for technology adoption. The MAPS also provides practical considerations for “managerial interventions” designed to improve technology adoption through social networks [57].

Appendix A.21. Socio-Psycho Networks Complexity Theory (SPNCT)

The SPNCT was introduced by Ojiabo Ukoha, Okorie Awa Hart, Christen A. Nwuche, and Ikechukwu Asiegbu in 2011 [58] as an extension to TAM2 [89]. It draws from SNT and actor–network theory (ANT) and focuses on the adoption behavior of small- and medium-sized enterprises (SMEs). The SPNCT has five dimensions to classify adoption factors. The economic/technical dimension includes serviceability, reliability, interoperability, accessibility, actability, interactivity, perceived usefulness (PU), perceived ease of use (PEOU), perceived service quality, and compatibility. The social/cultural dimension includes trust, participation, work pattern, perceived enjoyment, image visibility, result demonstrability, and subjective norms. The user/decision-maker dimension includes age, education, experience, computer self-efficacy, information-searching behavior, gender sensitivity, and group homogeneity/heterogeneity. The environmental dimension includes consumer readiness, competitive pressures, trading partners’ readiness, scope of business operation, firm size, organization mission, facilitating conditions, and owner/family influence. The psychological dimension includes trialability and voluntariness.

Appendix A.22. Firm Technology Adoption Model (F-TAM)

In 2017, Joshua Kofie Doe, Roger Van de Wetering, Ben Honyuenuga, and Johan Versendaal introduced the F-TAM [59], which focuses on adoption in a developing-country context. The F-TAM proposes that individual factors lead to a firm’s adoption of digital innovations and influence firm-level factors, that firm-level factors lead to adoption, and that firm-level adoption is moderated by societal factors. The F-TAM determinants of adoption are divided into three categories: (1) personal, (2) firm-level, and (3) societal factors. Personal factors include perceived ease of use, perceived usefulness, perceived indispensability, and perceived social factors. Firm-level factors include technological readiness, managerial innovativeness, organizational readiness, strategic fit with operations, and industry adoption. Societal factors include government championship, government policy, risk-taking culture, and trust in digital operations. The model is based on a literature review.

References

- Liao, Y.; Deschamps, F.; Loures, E.; Ramos, L. Past, present and future of Industry 4.0—A systematic literature review and research agenda proposal. Int. J. Prod. Res. 2017, 55, 3609–3629.

- Guoping, L.; Yun, H.; Aizhi, W. Fourth Industrial Revolution: Technological Drivers, Impacts and Coping Methods. Chin. Geogr. Sci. 2017, 27, 626–637.

- Department of Defense. Digital Engineering Strategy; Office of the Deputy Assistant Secretary of Defense for Systems Engineering: Washington, DC, USA, 2018.

- Office of the Under Secretary of Defense for Research and Engineering. DoD Instruction 5000.97 Digital Engineering. 21 December 2023. Available online: https://www.esd.whs.mil/Portals/54/Documents/DD/issuances/dodi/500097p.PDF?ver=bePIqKXaLUTK_Iu5iTNREw%3d%3d (accessed on 28 January 2024).

- Roper, W. Bending the Spoon: Guidebook for Digital Engineering and e-Series; DoD: Washington, DC, USA, 2021.

- Zimmerman, P. DoD Digital Engineering Implementation Challenges and Recommendations. In Proceedings of the 21st Annual National Defense Industrial Association Systems and Mission Engineering Conference, Tampa, FL, USA, 22–25 October 2018.

- Deloitte. Model Based Enterprise; Deloitte: London, UK, 2021.

- Fitzgerald, M.; Kruschwitz, N.; Bonnet, D.; Welch, M. Research Report: Embracing Digital Technology—A New Strategic Imperative; MIT Sloan Management Review: Cambridge, MA, USA, 2013.

- Rogers, E. Diffusion of Innovations, 5th ed.; Free Press Simon & Schuster: New York, NY, USA, 2003; p. 576.

- SEBoK. Guide to the Systems Engineering Body of Knowledge. 2022. Available online: https://sebokwiki.org/wiki/Capability_(glossary) (accessed on 17 December 2022).

- Gold, R.; Zimmerman, P. DAU Lunch and Learn: Digital Engineering; Department of Defense (DoD): Washington, DC, USA, 2018.

- Zimmerman, P.M. Digital Engineering Discussions; Department of Defense: Washington, DC, USA, 2021.

- DAU. Glossary of Defense Acquisition Acronyms and Terms. Available online: https://www.dau.edu/glossary (accessed on 30 March 2024).

- Dinesh, V. Keynote: Model Based Space Systems and Software Engineering—MBSE2020. In Proceedings of the Digital Engineering Research, Noordwijk, The Netherlands, 28–29 September 2020.

- Hund, A.; Wagner, H.-T.; Beimborn, D.; Weitzel, T. Digital Innovation: Review and novel perspective. J. Strat. Inf. Syst. 2021, 30, 101695.

- Law Insider Dictionary. Available online: https://www.lawinsider.com/dictionary/digital-technology (accessed on 12 January 2024).

- Ciriello, R.F.; Richter, A.; Schwabe, G. Digital Innovation. Bus. Inf. Syst. Eng. 2018, 60, 563–569.

- Gong, C.; Ribiere, V. Developing a unified definition of digital transformation. Technovation 2020, 102, 102217.

- Department of the Army. Army Digital Transformation Strategy; US Army: Washington, DC, USA, 2021.

- PRISMA. Transparent Reporting of Systematic Reviews and Meta-Analyses. 2022. Available online: https://www.prisma-statement.org/ (accessed on 17 December 2022).

- Song, I.Y.; Evans, M.; Park, E.K. A Comparative Analysis of Entity Relationship Diagrams. J. Comput. Softw. Eng. 1995, 3, 427–459.

- Rogers, E. Diffusion of Innovations, 1st ed.; Free Press of Glencoe: New York, NY, USA, 1962.

- Rogers, E. Diffusion of Innovations, 4th ed.; Free Press Simon & Schuster: New York, NY, USA, 1995.

- Fishbein, M. Attitudes and the prediction of behavior. In Readings in Attitude Theory and Measurement; Fishbein, M., Ed.; Wiley: New York, NY, USA, 1967; pp. 477–492.

- Fishbein, M.; Ajzen, I. Belief, Attitude, Intention and Behavior: An Introduction to Theory and Research; Addison-Wesley: Reading, MA, USA, 1975.

- Ajzen, I.; Fishbein, M. Understanding Attitudes and Predicting Social Behavior; Prentice Hall: Englewood Cliffs, NJ, USA, 1980.

- Bandura, A. Self-efficacy: Toward a unifying theory of behavioral change. Psychol. Rev. 1977, 84, 191–215.

- Bandura, A. The Explanatory and Predictive Scope of Self-Efficacy Theory. J. Soc. Clin. Psychol. 1986, 4, 359–373.

- Bandura, A. Social Cognitive Theory: An Agentic Perspective. Annu. Rev. Psychol. 2001, 52, 1–26.

- Triandis, H.C. Beliefs, Attitudes, and Values; University of Nebraska Press: Lincoln, NE, USA, 1980; pp. 195–259.

- Hambrick, D.C.; Mason, P.A. Upper Echelons: The Organization as a Reflection of Its Top Managers. Acad. Manag. Rev. 1984, 9, 193–206.

- Ajzen, I. From Intentions to Actions: A Theory of Planned Behavior. In Action Control: From Cognition to Behavior; Springer: Berlin/Heidelberg, Germany, 1985; pp. 11–39.

- Ajzen, I. The Theory of Planned Behavior. Organ. Behav. Hum. Decis. Process. 1991, 50, 179–211.

- Giddens, A. The Constitution of Society: Outline of the Theory of Structuration; Polity Press: Berkeley, CA, USA, 1986.

- DeSanctis, G.; Poole, M.S. Capturing the complexity in advanced technology use: Adaptive structuration theory. Organ. Sci. 1994, 5, 121–147.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. Manag. Inf. Syst. Q. 1989, 13, 319–340.

- Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User acceptance of computer technology: A comparison of two theoretical models. Manag. Sci. 1989, 35, 982–1003.

- Venkatesh, V.; Davis, F.D. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Manag. Sci. 2000, 46, 186–204.

- Venkatesh, V.; Bala, H. Technology Acceptance Model 3 and a Research Agenda on Interventions. Decis. Sci. 2008, 39, 273–315.

- Tornatzky, L.G.; Fleischer, M. The Processes of Technological Innovation; Lexington Books: Lexington, MA, USA, 1990.

- Thompson, R.L.; Higgins, C.A.; Howell, J.M. Personal Computing: Toward a Conceptual Model of Utilization. MIS Q. 1991, 15, 125–143.

- Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. Extrinsic and Intrinsic Motivation to Use Computers in the Workplace. J. Appl. Soc. Psychol. 1992, 22, 1111–1132.

- Goodhue, D.L.; Thompson, R.L. Task-Technology Fit and Individual Performance. Manag. Inf. Syst. (MIS) Q. 1995, 19, 213–236.

- Scott, R. Institutions and Organizations: Ideas, Interests, and Identities; Sage: Newcastle upon Tyne, UK, 1995.

- Koppenjan, J.; Groenewegen, J. Institutional design for complex technological systems. Int. J. Technol. Policy Manag. 2005, 5, 240–257.

- Janssen, M.; Weerakkody, V.; Ismagilova, E.; Sivarajah, U.; Irani, Z. A framework for analysing blockchain technology adoption: Integrating institutional, market and technical factors. Int. J. Inf. Manag. 2020, 50, 302–309.

- Taylor, S.; Todd, P.A. Decomposition and crossover effects in the theory of planned behavior: A study of consumer adoption intentions. Int. J. Res. Mark. 1995, 12, 137–155.

- Taylor, S.; Todd, P.A. Understanding Information Technology Usage: A Test of Competing Models. Inf. Syst. Res. 1995, 6, 144–176.

- Swanson, C.E.; Kopecky, K.J.; Tucker, A. Technology Adoption over the Life Cycle and Aggregate Technological Progress. South. Econ. J. 1997, 63, 872–887.

- Tjan, K. Finally, a way to put your internet portfolio in order. Harv. Bus. Rev. 2001, 79, 76–85.

- Liang, T.P.; Wei, C.P. Introduction to the special issue: A framework for mobile commerce applications. Int. J. Electron. Commer. 2004, 8, 7–17.

- Reckwitz, A. Toward a theory of social practices: A development in culturalist theorizing. Eur. J. Soc. Theory 2002, 5, 243–263.

- Nysveen, H.; Pedersen, P.E.; Skard, S. Ecosystem Adoption of Practices Over Time (EAPT): Toward an alternative view of contemporary technology adoption. J. Bus. Res. 2020, 116, 542–551.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003, 27, 425–478.

- Venkatesh, V.; Thong, J.Y.; Xu, X. Consumer Acceptance and Use of Information Technology: Extending the unified theory of acceptance and use of technology. MIS Q. 2012, 36, 157–178.

- Kim, H.; Chan, H.; Gupta, S. Value-based Adoption of Mobile Internet: An empirical investigation. Decis. Support Syst. 2007, 43, 111–126.

- Sykes, T.A.; Venkatesh, V.; Gosain, S. Model of Acceptance with Peer Support: A Social Network Perspective to Understand Employees’ System Use. MIS Q. 2009, 33, 371–393.

- Ukoha, O.; Awa, H.O.; Nwuche, C.A.; Asiegbu, I. Analysis of Explanatory and Predictive Architectures and the Relevance in Explaining the Adoption of IT in SMEs. Interdiscip. J. Inf. Knowl. Manag. 2011, 6, 217–230.

- Doe, J.K.; Van de Wetering, R.; Honyenuga, B.; Versendaal, J. Toward a Firm Technology Adoption Model (F-TAM) in a Developing Country Context. In Proceedings of the 11th Mediterranean Conference on Information Systems (MCIS), Genoa, Italy, 27–28 September 2017.

- McDermott, T.; Van Aken, E.; Hutchison, N.; Blackburn, M.; Clifford, M.; Yu, Z.; Chen, N.; Salado, A. Task Order WRT-1001: Digital Engineering Metrics—Technical Report SERC-2020-TR-002; Systems Engineering Research Center (SERC): Hoboken, NJ, USA, 2020.

- NIST. 2023–2024 Baldrige Excellence Framework (Business/Nonprofit). 2023. Available online: www.nist.gov/baldrige/products-services/baldrige-excellence-framework (accessed on 5 January 2024).

- Reis, J.; Amorim, M.; Melão, N.; Matos, P. Digital Transformation: A Literature Review and Guidelines for Future Research. In WorldCIST’18 2018: Trends and Advances in Information Systems and Technologies; Springer: Cham, Switzerland, 2018; Volume 745.

- Lee, Y.; Kozar, K.A.; Larsen, K.R. The Technology Acceptance Model: Past, Present, and Future. Commun. Assoc. Inf. Syst. 2003, 12, 752–780.

- Barthel, P.; Hess, T. Are Digital Transformation Projects Special? In Proceedings of the Twenty-Third Pacific Asia Conference on Information Systems, Xi’an, China, 8–12 July 2019.

- Wilson, B.; Dobrovolny, J.; Lowry, M. A Critique of How Technology Models are Utilized. Perform. Improv. Q. 1999, 12, 24–29.

- Burge, S. The Systems Thinking Toolbox: Affinity Diagram; Burge Hughes Walsh: Rugby, Warwickshire, UK, 2011.

- Schmitt, A.; Raisch, S.; Volberda, H.W. Strategic Renewal: Past Research, Theoretical Tensions and Future Challenges. Int. J. Manag. Rev. 2018, 20, 81–98.

- Bughin, J.; Kretschmer, T.; van Zeebroeck, N. Strategic Renewal for Successful Digital Transformation. IEEE Eng. Manag. Rev. 2021, 49, 103–108.

- Hess, T.; Matt, C.; Benlian, A.; Wiesböck, F. Options for Formulating a Digital Transformation Strategy. MIS Q. Exec. 2016, 15, 123–140.

- Campagna, J.M.; Bhada, S.V. A Capability Maturity Assessment Framework for Creating High Value Digital Engineering Opportunities. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021.

- Prosci Inc. Best Practices in Change Management 11th Edition: 1863 Participants share Lessons and Best Practices in Change Management; Prosci, Inc.: San Diego, CA, USA, 2020.

- Gimpel, H.; Hosseini, S.; Huber, R.; Probst, L.; Röglinger, M.; Faisst, U. Structuring Digital Transformation: A Framework of Action Fields and its Application at ZEISS. J. Inf. Technol. Theory Appl. 2018, 19, 31–54.

- Berghaus, S.; Back, A. Disentangling the Fuzzy Front End of Digital Transformation: Activities and Approaches. In Proceedings of the ICIS 2017 Proceedings, Seoul, Republic of Korea, 10–13 December 2017.

- Rogers, E.M.; Medina, U.E.; Rivera, M.A.; Wiley, C.J. Complex Adaptive Systems and the Diffusion of Innovations. Innov. J. Public Sect. Innov. J. 2005, 10, 1–26.

- Moore, G.; Benbasat, I. Development of an Instrument to Measure the Perceptions of Adopting an Information Technology Innovation. Inf. Syst. Res. 1991, 2, 192–222.

- Tarhini, A.; Arachchilage, N.A.G.; Masa’Deh, R.; Abbasi, M.S. A Critical Review of Theories and Models of Technology Adoption and Acceptance in Information Systems Research. Int. J. Technol. Diffus. 2015, 6, 58–77.

- Momani, M.; Jamous, M.M. The evolution of technology acceptance theories. Int. J. Contemp. Comput. Res. 2017, 1, 51–58.

- Bandura, A. The Evolution of Social Cognitive Theory. In Great Minds in Management: The Process of Theory Development; Oxford University Press: Oxford, UK, 2005; pp. 9–35.

- Luszczynska, A.; Schwarzer, R. Chapter 7 Social Cognitive Theory. In Predicting and Changing Health Behavior—Research and Practice with Social Cognition Models, 3rd ed.; Conner, M., Norman, P., Eds.; Open University Press: Maidenhead, UK, 2015.

- Bandura, A. Cultivate self-efficacy for personal and organizational effectiveness. In The Blackwell Handbook of Principles of Organizational Behavior; Locke, E.A., Ed.; Blackwell: Oxford, UK, 2000; pp. 120–136.

- Awa, H.O.; Eze, S.C.; Urieto, J.E.; Inyang, B.J. Upper echelon theory (UET) A major determinant of Information Technology (IT) adoption by SMEs in Nigeria. J. Syst. Inf. Technol. 2011, 13, 144–162.

- Sharma, R.; Mishra, R. A Review of Evolution of Theories and Models of Technology Adoption. Indore Manag. J. 2014, 6, 17–29.

- Ajzen, I.; Sheikh, S. Action versus inaction: Anticipated affect in the theory of planned behavior. J. Appl. Soc. Psychol. 2013, 43, 155–162.

- Ajzen, I. The theory of planned behavior is alive and well, and not ready to retire. Health Psychol. Rev. 2015, 9, 131–137.