Abstract

Systems engineering (SE) addresses some of the most complex problems, bringing together societal issues, theoretical engineering, and the transformation of theory into products and services that better humanity and reduce suffering. In industry, the effort to transform theoretical concepts into practical solutions begins with the concept stage of the product life cycle, where systems engineering estimates and derives technologies, costs, and schedules. A successful concept stage is crucial, as today’s industries focus on producing the most capable technologies at an affordable cost and with a faster time to market than ever before. This paper uses a transdisciplinary (TD) SE process model in the concept stage to develop and propose training for early-in-career engineers, bridging the gap from university learning to industry practice. Given this focus on the concept stage and industry’s demand for expeditiously proposing complex technical solutions, the main objective of this research is to create an efficient learning program that brings early-in-career engineers up to speed using a TD SE process model with six key components: (1) disciplinary convergence: creating a collective impact; (2) TD collaboration; (3) collective intelligence; (4) TD research integration; (5) TD engineering tools; and (6) analysis and TD assessment. The paper concludes with a case study that pilots the TD learning program and analyzes its effectiveness, with the ultimate aim of enhancing early-in-career engineers’ skills in proposing technical solutions that meet customer demands and drive business profitability.

1. Introduction

In the 21st century, systems engineers face a world of increasingly complex problems, hyperconnectivity, and convergence [1]. This research explores new ways of thinking, of understanding problem sets, and of learning in industry.

While conceptualizing and designing the learning program, this research strongly emphasizes the concept stage. Why is that? Defense Acquisition University (DAU) studies report that by the end of the concept stage, 8% of a program’s actual costs have been accrued, while 70% of its total life cycle costs are already committed [2]. This statistic emphasizes that the concept stage sets the tone for program success or failure.

Customers’ need for complex system solutions in an expedited time frame highlights the need for a mature concept stage [3]. Industries typically shift a portion of the design stage into the concept stage, which requires significant time and funding to fully develop the technical baseline. Although we usually turn to systems engineering processes to address such complex problems, we have found that current SE methodologies [4,5,6] do not address these complexities in the concept stage; they describe the concept stage in only a few words. For example, the Waterfall methodology describes the concept stage as requirements elicitation and analysis [6], while the Vee and Spiral methodologies describe it as the concept of operations [6]. This research expands the concept stage methodology to include the TD SE process model.

This research uses a transdisciplinary SE process model in the concept stage to develop and propose training for early-in-career engineers, bridging the gap from university learning to industry practice. Newly hired systems engineers typically come to industry directly from universities, where they studied engineering and may hold degrees in mechanical, electrical, or systems engineering. Industries create learning programs to translate academic terminology into industry terminology, teach systems engineering principles that may not have been covered in academic coursework, and describe the variety of engineering roles that work together to propose solutions for customers.

The value of a transdisciplinary process lies in the integrated use of tools, techniques, and methods from various disciplines [7]. This process converges disciplines to create a collective impact on the problem of interest, which in this case study is enhancing early-in-career engineers’ skills in proposing technical solutions that meet customer demands and drive business profitability.

This research will answer the question, “Does the utilization of the TD SE process model in the concept stage effectively assist in the proposal and understanding of the baseline maturity for a TD SE learning program?”

2. Method Overview: TD SE Process Model

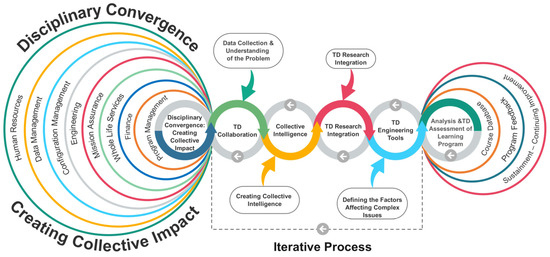

The case study described below utilizes a TD SE process model in the concept stage to effectively understand the baseline maturity for a TD SE learning program for proposal generation in the context of an immediate industry need. The TD SE process model adapted from Dr. Ertas utilizes transdisciplinary thinking skills such as visible thinking, systemic thinking, computational thinking, and critical/creative skills [8]. These skills are imperative to systems engineering during proposal generation. The TD SE process model illustrated in Figure 1 is composed of six steps: (1) disciplinary convergence: creating a collective impact; (2) TD collaboration; (3) collective intelligence; (4) TD research integration; (5) TD engineering tools; and (6) analysis and TD assessment.

Figure 1.

Sequence of steps to develop the TD SE learning model (adapted from [8]).

2.1. Method Step 1: Disciplinary Convergence—Creating a Collective Impact

Disciplinary convergence is the key enabler of transdisciplinary systems engineering [1]. It creates a collective impact when two or more disciplines collaborate to derive concepts, principles, and perspectives. A familiar industry example is the collaboration among engineering, program management, and finance to provide accurate estimates at completion (EACs). EACs provide program health metrics to leadership so that business executives can make informed decisions.

In this paper’s case study, disciplinary convergence is the first step in the TD SE process model. Delivering products and services to customers requires coordinating many disciplines across the business to achieve overall program success. High-tech industries are known for using advanced systems and highly skilled engineers to solve high-consequence problems. However, engineering is not solely responsible for placing products and services into the customer’s hands; multiple disciplines across the business must interact successfully and hand off work smoothly to enable on-time delivery within the contract budget. In this case study, disciplinary convergence opens conversations on how engineering may help harmonize disciplines to improve proposal generation. For example, if finance recommends that engineers better understand how their role affects EACs, the convergence of engineering and finance becomes a natural fit, and this topic can be added to learning curriculum plans. This broadens engineers’ skillsets, consistent with Dr. Madni’s research recognizing the need for an expanded role [1].

2.2. Method Step 2: Transdisciplinary Collaboration

Transdisciplinary collaboration is imperative for solving complex problems and developing the sociotechnical systems that address them [9]. The cross-discipline team will meet collectively to define and understand the problem [10,11]. Each discipline will bring data and resources from its area of expertise and share them with the cross-discipline team to discuss possible solutions to the problem [12].

TD collaboration is the second step in the TD SE process model. At this point in the process model, the cross-discipline team has completed team building and has begun thinking strategically about how its interactions play a crucial role in successful program execution. Discussions continue in order to gain a specific understanding of the problem at hand. The problem in this case study is how to train newly hired engineers, whether straight out of college or from another company, to understand what it means to be a systems engineer in industry and to contribute effectively to programs quickly.

2.3. Method Step 3: Creating Collective Intelligence

Collective intelligence is a structured approach to gaining knowledge about a difficult problem from a diverse group of people. In this research, transdisciplinary collective impact will bring multiple disciplines together to solve the difficult problem of creating a systems engineering learning program for 21st-century engineers. Diverse perspectives on a common problem stimulate new and innovative solutions that would often be unattainable otherwise, ultimately building collective intelligence within the business [13,14].

Creating collective intelligence is the third step in the TD SE process model. The cross-discipline team listed in Table 1 will identify and define the key attributes of a systems engineering learning program for 21st-century engineers.

Table 1.

Disciplinary convergence—creating a collective impact.

The lead author created Table 2 as a preliminary structure to stimulate conversation in the room. The disciplines will then identify and define a similar table that represents the cross-discipline team’s ideas, resulting in a new table of attributes and their definitions. Lastly, the cross-discipline team will establish contextual relationships between the identified attributes, developing a structural self-interaction matrix (SSIM), which will be used in a later step.

Table 2.

TD collaboration: topic ideas.

2.4. Method Step 4: Transdisciplinary Research Integration

Transdisciplinary research integration assesses data received in the collective intelligence step and integrates the knowledge into a useful form [12].

TD research integration is the fourth step in the TD SE process model. This research will create a database of current programs and modules for early-in-career systems engineers with respective attributes and compare it to the list created in the TD collaboration step. Lastly, feedback provided by discipline leads, subject matter experts (SMEs), and learning participants will be recorded per key attribute of the current modules.

2.5. Method Step 5: Transdisciplinary Engineering Tools

A transdisciplinary engineering tool called Interpretive Structural Modeling (ISM), proposed by Warfield in 1973 [10], will be used. ISM was chosen as it provides systems engineers with a systematic and comprehensive method for developing first-version models ideal for proposal generation [22]. ISM will display the contextual relationships between the key attributes [12,23]. The contextual relationships will later result in level partitioning to develop a digraph or flow of factors [24]. The last activity of ISM is the Matrix Impact Cross-Reference Multiplication Applied to a Classification (MICMAC) analysis [25]. The MICMAC will identify the driving power and dependencies of the identified key attributes [26]. Acknowledging which learning attributes have the highest driving power or those attributes with dependencies will assist leadership decisions to propose a learning program with the highest value possible for industry.

The TD engineering tool is the fifth step in the TD SE process model. The digraph and MICMAC will be created using the ATLAS online ISM tool [27]. The input the ATLAS tool requires is the set of contextual relationships between the key attributes, which will be recorded in the SSIM in step 3. The digraph and MICMAC will be used to discuss the key attributes’ driving power and dependencies and how they relate to proposals for new learning programs.

2.6. Method Step 6: Analysis and Transdisciplinary Assessment

Analysis and TD Assessment is the last step of the TD SE process model.

Current State, Gaps, and Proposed Modules per Key Attribute

In this step, the following activities will occur.

- Assess the current modules concerning the proposed key attribute definitions and identify whether gaps exist.

- If a gap is identified and can be filled by amending a current module, this research will provide recommendations to current module owners.

- If a gap is identified, and the current modules cannot be amended, this research will fill the gap by proposing a new module meeting the key attributes for 21st-century engineering learning.

- If applicable, pilot the new proposed modules.

- Perform a quantitative analysis of current SE modules by scoring the maturity level per key attribute. If applicable, perform a quantitative analysis of pilot SE modules by scoring the maturity level per key attribute.

- Perform a qualitative analysis for feedback received for current modules. If applicable, perform a qualitative analysis for feedback received for pilot SE modules.

3. Case Study: TD SE Learning Program

This section includes the results of the case study performed in this research.

3.1. Case Study Step 1: Disciplinary Convergence—Creating a Collective Impact

This research invited leaders from disciplines outside of systems engineering to build a team and think strategically about how engineers may expand their skillsets for the betterment of the business as a whole.

The critical disciplines across the business that were included in this research are listed in Table 1; they are considered vital participants in producing accurate proposals that meet customer demands.

3.2. Case Study Step 2: TD Collaboration

Attributes specific to the TD SE learning program being developed for 21st-century engineers were discussed [7]. The Nominal Group Technique (NGT) was chosen to allow each discipline to comment and provide data based on its experience and expertise [28]. NGT bridged relationships and communication between disciplines and explored stakeholder views face-to-face in small groups, providing an expedited path for the research [29]. Table 2 provides a list of topics experienced in today’s industry for the disciplines to discuss. The basic NGT steps were followed: explanation of a trigger question, silent written idea generation by group members, round-robin recording of the ideas, continuous discussion of each idea for revision and clarification, and voting to determine a preliminary significance ranking of the ideas [12]. This research used Table 2 as an initial list of topics and allowed the group to amend the topics as needed to communicate their ideas to the group.
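The final NGT voting step can be illustrated with a small tally. The paper does not state how votes were scored, so the sketch below assumes a common Borda-style convention (a participant's first choice earns k points, the second k - 1, and so on); the function name `tally_ngt_votes` and the idea labels are hypothetical.

```python
from collections import defaultdict


def tally_ngt_votes(ballots: list[list[str]], k: int) -> list[tuple[str, int]]:
    """Rank ideas by total points across all ballots (hypothetical Borda-style rule).

    Each ballot lists at most k distinct idea labels in order of preference.
    A first choice earns k points, a second choice k - 1, and so on.
    Ties are broken alphabetically so the ranking is deterministic.
    """
    scores: dict[str, int] = defaultdict(int)
    for ballot in ballots:
        if len(ballot) > k:
            raise ValueError("each ballot may rank at most k ideas")
        if len(set(ballot)) != len(ballot):
            raise ValueError("an idea may appear only once per ballot")
        for position, idea in enumerate(ballot):
            scores[idea] += k - position
    # Highest total first; alphabetical order among equal totals.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Three hypothetical participants, each ranking their top three ideas.
    ballots = [["A", "B", "C"], ["B", "A", "D"], ["A", "C", "E"]]
    for idea, points in tally_ngt_votes(ballots, k=3):
        print(f"idea {idea}: {points} points")
```

Other point schemes (for example, simple vote counts) would slot into the same loop; the preliminary ranking is then open to the group's continued discussion, as the NGT steps describe.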

3.3. Case Study Step 3: Collective Intelligence

3.3.1. Key Attributes Identified and Defined

The NGT allowed each discipline in Table 1 to comment and provide data based on their experiences and expertise. Table 3 lists the attributes and definitions that resulted from the NGT exercise.

Table 3.

TD collaboration: key attributes identified.

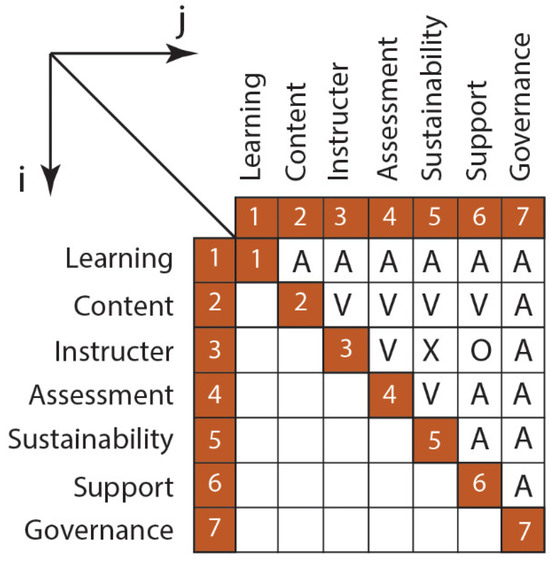

3.3.2. Creating the Structural Self-Interaction Matrix (SSIM)

After identifying and defining the key attributes, the NGT group established contextual relationships of said attributes as part of the collective intelligence step. The contextual relationships between attributes were recorded in the Structural Self-Interaction Matrix (SSIM). Figure 2 illustrates the SSIM from the NGT exercise.

Figure 2.

Structural Self-Interaction Matrix.

- If the relationship between factors was from i to j, a V was entered.

- If the relationship between factors was from j to i, an A was entered.

- If the relationship between factors was bidirectional, an X was entered.

- If there was no relationship between i and j, an O was entered.
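The V/A/X/O rules above translate mechanically into the initial reachability matrix used by ISM, and a transitive closure then yields the final reachability matrix from which the digraph and MICMAC are derived. The following is a minimal sketch under our own assumptions: the SSIM is supplied as a dictionary of upper-triangle (i, j) pairs, and the function names are hypothetical; the ATLAS tool's internal conventions may differ.

```python
def ssim_to_initial_reachability(n: int, ssim: dict[tuple[int, int], str]) -> list[list[int]]:
    """Apply the V/A/X/O rules to build the initial 0/1 reachability matrix.

    Assumption: keys are (i, j) pairs with i < j (the SSIM's upper triangle).
    """
    r = [[1 if i == j else 0 for j in range(n)] for i in range(n)]  # each factor reaches itself
    for (i, j), symbol in ssim.items():
        if not 0 <= i < j < n:
            raise ValueError(f"expected an upper-triangle pair, got {(i, j)}")
        if symbol == "V":      # i influences j
            r[i][j] = 1
        elif symbol == "A":    # j influences i
            r[j][i] = 1
        elif symbol == "X":    # mutual influence
            r[i][j] = r[j][i] = 1
        elif symbol != "O":    # O means no relationship; anything else is an error
            raise ValueError(f"unknown SSIM symbol {symbol!r}")
    return r


def transitive_closure(r: list[list[int]]) -> list[list[int]]:
    """Warshall's algorithm: if i reaches k and k reaches j, then i reaches j."""
    n = len(r)
    closed = [row[:] for row in r]
    for k in range(n):
        for i in range(n):
            if closed[i][k]:
                for j in range(n):
                    if closed[k][j]:
                        closed[i][j] = 1
    return closed


if __name__ == "__main__":
    # Hypothetical 3-factor SSIM: 0 -> 1 (V), 1 -> 2 (V), no direct 0-2 relation (O).
    initial = ssim_to_initial_reachability(3, {(0, 1): "V", (1, 2): "V", (0, 2): "O"})
    print(initial)                      # 0 does not yet reach 2 directly
    print(transitive_closure(initial))  # transitivity adds 0 -> 2
```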

3.4. Case Study Step 4: TD Research Integration

3.4.1. Control Group—Scoring Current Modules for Key Attributes

The cross-discipline team brought forth current SE learning modules for early-in-career systems engineers and created a database of modules for analysis. The systems engineering council members, a group of nine systems engineering SMEs representing and harmonizing the corporation’s businesses to advance systems engineering, analyzed the current SE learning modules. The modules were analyzed for the identified key attributes to understand the current maturity of early-in-career learning modules. A Likert score of 1–5, one being immature and five being mature, was assigned to indicate the level of maturity of each key attribute for each module. Table 4 depicts the scoring.
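The scoring in Table 4 amounts to a modules-by-attributes matrix of Likert values. A minimal sketch of how such a matrix might be validated and summarized is shown below; the attribute names follow the digraph discussion in Section 3.5, while the module names and scores are hypothetical placeholders, not the values in Table 4.

```python
from statistics import fmean

# Attribute names as they appear in the Section 3.5 digraph discussion.
ATTRIBUTES = (
    "governance", "content", "support", "instructor",
    "assessments", "sustainment", "learning environment",
)


def validate(scores: dict[str, dict[str, int]]) -> None:
    """Ensure every module has one 1-5 Likert score for every key attribute."""
    for module, row in scores.items():
        if set(row) != set(ATTRIBUTES):
            raise ValueError(f"{module}: expected a score for each of the {len(ATTRIBUTES)} attributes")
        if any(not 1 <= s <= 5 for s in row.values()):
            raise ValueError(f"{module}: Likert scores must lie between 1 and 5")


def module_averages(scores: dict[str, dict[str, int]]) -> dict[str, float]:
    """Mean maturity of each module across all key attributes, rounded to 2 places."""
    return {module: round(fmean(row.values()), 2) for module, row in scores.items()}


def attribute_averages(scores: dict[str, dict[str, int]]) -> dict[str, float]:
    """Mean maturity of each key attribute across all modules, rounded to 2 places."""
    return {a: round(fmean(row[a] for row in scores.values()), 2) for a in ATTRIBUTES}


if __name__ == "__main__":
    # Hypothetical scores for two modules (not the paper's Table 4 values).
    module_a = {a: 3 for a in ATTRIBUTES}
    module_a["governance"] = 2
    module_b = {a: 4 for a in ATTRIBUTES}
    scores = {"module A": module_a, "module B": module_b}
    validate(scores)
    print(module_averages(scores))
    print(attribute_averages(scores))
```

Averaging per attribute highlights which attributes are weakest across the current modules, while averaging per module gives a single maturity figure comparable to the one reported later for the proposed modules.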

Table 4.

Current SE modules—maturity level per key attribute.

3.4.2. Control Group—Feedback per Key Attribute on Current Modules

In addition to the Likert scores in Table 4, feedback was captured from SE council members, discipline leads, SMEs, and learning participants for each of the key attributes regarding the current SE learning modules. Table 5 contains the feedback.

Table 5.

Feedback per key attribute on current modules.

3.5. Case Study Step 5: TD Engineering Tool

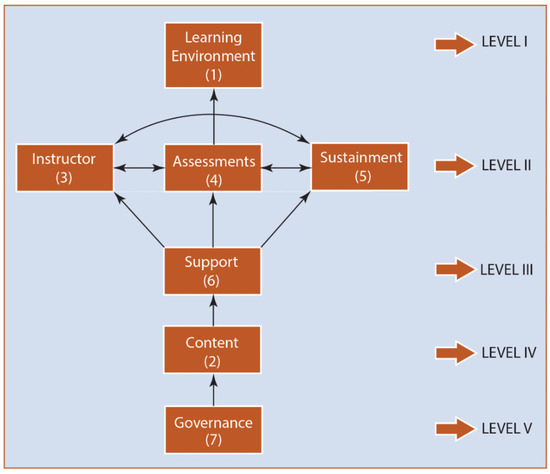

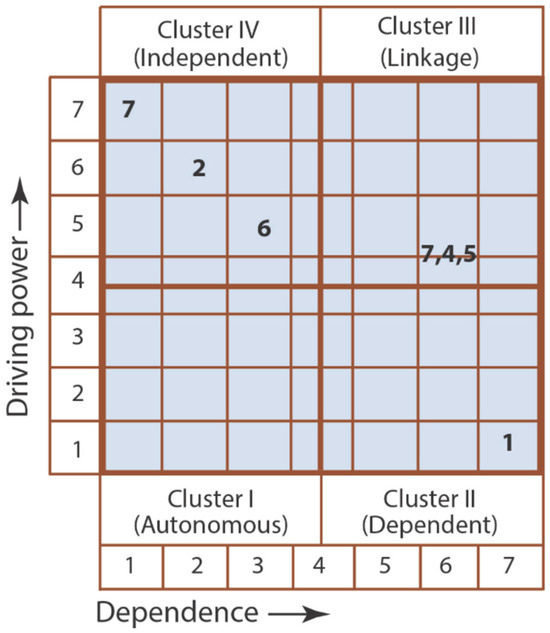

Interpretive Structural Modeling—Digraph and MICMAC from SSIM

The digraph and MICMAC were created using the ATLAS online ISM tool [27]. The input required for the ATLAS tool was the contextual relationships between the key attributes captured from the NGT group. The contextual relationships were recorded in the SSIM shown in Figure 2. Figure 3 and Figure 4 illustrate the digraph and MICMAC of the key attributes, respectively.

Figure 3.

Digraph of key attributes.

Figure 4.

MICMAC of key attributes.

The ISM digraph in Figure 3 visually represents the flow of and relations among the key attributes of learning programs. Governance is the source factor and is linearly connected to content. Content has a linear relation to support. Support is linearly connected to the instructor, assessments, and sustainment. The instructor and assessments are bidirectionally connected, as are assessments and sustainment, and the instructor and sustainment. Assessments are linearly connected to the learning environment. The flow and relations of the attributes are important during proposal design to ensure that limited resources and time are focused on attributes that drive results and prevent roadblocks. In this case study, the proposal team needs to focus on governance, content, and support before focusing on the instructor, assessments, and sustainment. Lastly, the proposal team may focus on the learning environment if resources and time permit.
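The ordering described above can be reproduced with ISM level partitioning. The sketch below transcribes the edges from the prose description of Figure 3 (an edge (a, b) means "a influences b"), computes each attribute's reachability set, and repeatedly removes the attributes whose reachability set is contained in their antecedent set. This is our reconstruction for illustration, not output from the ATLAS tool.

```python
# Edges transcribed from the text's description of the Figure 3 digraph.
EDGES = [
    ("governance", "content"), ("content", "support"),
    ("support", "instructor"), ("support", "assessments"), ("support", "sustainment"),
    ("instructor", "assessments"), ("assessments", "instructor"),
    ("assessments", "sustainment"), ("sustainment", "assessments"),
    ("instructor", "sustainment"), ("sustainment", "instructor"),
    ("assessments", "learning environment"),
]


def reachability(edges: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Map each node to every node it reaches, itself included (reflexive, transitive)."""
    nodes = {node for edge in edges for node in edge}
    reach = {node: {node} for node in nodes}
    for source, target in edges:
        reach[source].add(target)
    changed = True
    while changed:  # expand until a fixed point is reached
        changed = False
        for node in nodes:
            expanded = set().union(*(reach[m] for m in reach[node]))
            if expanded != reach[node]:
                reach[node] = expanded
                changed = True
    return reach


def level_partition(reach: dict[str, set[str]]) -> list[list[str]]:
    """Peel off ISM levels, top level first.

    A factor is placed on the current level when its reachability set R equals
    R intersected with its antecedent set A, i.e. when R is a subset of A.
    """
    remaining = set(reach)
    levels = []
    while remaining:
        level = set()
        for node in remaining:
            reach_set = reach[node] & remaining
            antecedents = {m for m in remaining if node in reach[m]}
            if reach_set <= antecedents:
                level.add(node)
        levels.append(sorted(level))
        remaining -= level
    return levels


if __name__ == "__main__":
    for number, level in enumerate(level_partition(reachability(EDGES)), start=1):
        print(f"Level {number}: {', '.join(level)}")
```

Level 1 is the top of the digraph (the most dependent outcome) and the last level holds the deepest driver, so reading the result from the last level back to the first gives governance, content, support, then instructor, assessments, and sustainment together, and finally the learning environment, which is the priority the text assigns.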

The MICMAC analysis arranges the factors of system performance with respect to driving power and dependence into four clusters, as illustrated in Figure 4.

- Cluster I (autonomous factors) contains no factors. Autonomous factors have low driving power and low dependence.

- Cluster II includes one factor (learning environment) that is dependent. Dependent factors have low driving power and high dependence.

- Cluster III includes three factors (instructor, assessment, and sustainability) that are linked. Linkage factors have high driving power and high dependence. Note: The instructor, assessment, and sustainability factors lie on the border of Clusters II and III, but because the digraph depicts linkages among them, these three attributes are placed in Cluster III [30].

- Cluster IV includes three factors (governance, content, and support) that are independent. Independent factors have high driving power and low dependence. These factors have a direct impact on the success of learning programs.

- The MICMAC indicates that the governance, content, and support learning attributes directly impact the success of learning programs. Therefore, for effective proposal generation, the proposal team should address governance, content, and support first, in that order of priority. If time permits, the proposal team should then address the instructor, assessment, and sustainability attributes; because these three attributes are linked, they are equally important to address. If time still permits, the proposal team should address the learning environment. Because the learning environment has low driving power, this analysis ranks it as the lowest priority of the seven identified learning attributes.
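The MICMAC clusters follow from row and column sums of the final reachability matrix: a factor's driving power is the number of factors it reaches, and its dependence is the number of factors that reach it. In the sketch below, the matrix is our own transitive closure of the digraph described for Figure 3 (diagonal included), and the low/high threshold is assumed to be the midpoint n/2; published MICMAC studies and tools may place the axes differently.

```python
FACTORS = ("governance", "content", "support", "instructor",
           "assessments", "sustainment", "learning environment")

# Final reachability matrix: row i has a 1 in column j when factor i reaches factor j.
# Our reconstruction from the Figure 3 digraph description, diagonal included.
REACHABILITY = [
    [1, 1, 1, 1, 1, 1, 1],  # governance
    [0, 1, 1, 1, 1, 1, 1],  # content
    [0, 0, 1, 1, 1, 1, 1],  # support
    [0, 0, 0, 1, 1, 1, 1],  # instructor
    [0, 0, 0, 1, 1, 1, 1],  # assessments
    [0, 0, 0, 1, 1, 1, 1],  # sustainment
    [0, 0, 0, 0, 0, 0, 1],  # learning environment
]


def micmac(matrix: list[list[int]], factors: tuple[str, ...]) -> dict[str, tuple[int, int, str]]:
    """Return (driving power, dependence, cluster) for each factor."""
    midpoint = len(factors) / 2  # assumed boundary between "low" and "high" on both axes
    result = {}
    for i, name in enumerate(factors):
        driving = sum(matrix[i])                    # how many factors i reaches
        dependence = sum(row[i] for row in matrix)  # how many factors reach i
        high_drive, high_dep = driving > midpoint, dependence > midpoint
        if high_drive and high_dep:
            cluster = "III linkage"
        elif high_drive:
            cluster = "IV independent"
        elif high_dep:
            cluster = "II dependent"
        else:
            cluster = "I autonomous"
        result[name] = (driving, dependence, cluster)
    return result


if __name__ == "__main__":
    for name, (drive, dep, cluster) in micmac(REACHABILITY, FACTORS).items():
        print(f"{name:22s} driving={drive} dependence={dep} -> Cluster {cluster}")
```

Under these assumptions, governance, content, and support fall in Cluster IV; instructor, assessments, and sustainment fall in Cluster III with a driving power of 4, only just above the 3.5 midpoint; and the learning environment falls in Cluster II, matching the cluster assignments listed above.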

3.6. Case Study Step 6: Analysis and TD Assessment of Learning Program

Current State, Gaps, and Proposed State of Key Attributes

Analysis and TD assessment is the last step in the TD SE process model. Table 6 defines the current state of attributes, identifies gaps, and describes the proposed state of attributes.

Table 6.

Current state, gaps, and proposed state of key attributes.

4. Pilot Program

4.1. Pilot Program—Definition

The pilot program was designed using the TD SE process model. By using the TD SE process model and including discipline leads from across the business, this research was able to put into practice learning modules containing the seven identified key learning attributes. As a result of this research, the proposed learning modules are part of the business’s newly adopted SE learning vision, which aims to train early-in-career engineers to contribute effectively to programs and ultimately deliver products and services to customers that meet the technical scope within cost and schedule.

The proposed state of attributes in Table 6 was used to create a pilot program for SE learning modules in the industry. The program was designed utilizing the seven key learning attributes, placing a special emphasis on the high-driving-power attributes: governance, content, and support.

In the pilot program, governance was addressed first as it was the source factor driving content. Governance was defined as having the responsibility to set the vision for SE learning and define SE learning content while concurrently exploring content gaps. Governance would also establish a shared measurement system to assess the learning program’s effectiveness.

- The pilot began by meeting with the SE council, a group of SE SMEs representing and harmonizing the corporation’s businesses to advance systems engineering, to agree on an SE learning vision. At the lead author’s proposal, the council accepted the learning framework defined in the Systems Engineering Vision 2035 of the International Council on Systems Engineering (INCOSE) as the SE learning vision, as defined in Table 7 [31]. The rationale for following INCOSE’s learning framework is the broad range of skillsets it covers, including the core competencies, professional skills, technical skills, management competencies, and integrating skills required for systems engineering success in industry.

- The SE council members agreed that the INCOSE learning framework would define the SE learning content, and the framework would be utilized to identify content gaps in industry learning.

- The council agreed that the seven attributes as defined in this research, with a special emphasis on the high-driving-power attributes of governance, content, and support, would be utilized to measure existing and future learning programs’ effectiveness.

Table 7.

SE competency areas—SE Vision 2035 [31].


| SE competency area |
|---|
| Core SE Principles |
| Professional Competencies |
| Technical Competencies |
| SE Management Competencies |
| Integrating Competencies |

Recall that governance was the source factor linearly connected to content. Therefore, in the pilot program, content was addressed second. Content comprises the resources used to develop the skills and knowledge of systems engineers. At the lead author’s proposal, the SE council accepted the content defined in Table 8, which is drawn from the published INCOSE Handbook v5. INCOSE publishes the handbook every 3–5 years, and INCOSE systems engineering SMEs review it thoroughly prior to publication. In this case study, the business decided that the INCOSE Handbook content would meet the need to expose new systems engineers to industry and introduce the core systems engineering concepts needed there. It was acknowledged that some portions of the handbook may not align with the business, so the course facilitators discussed where the handbook left gaps or differed from industry practice. The facilitators were part of the support learning attribute, which is discussed in a subsequent paragraph.

Table 8.

INCOSE Handbook v5—table of contents [2].

As an INCOSE corporate member, the corporation is afforded corporate advisory board (CAB) associate seats to be used at its discretion. At the lead author’s proposal, the SE council agreed to use 350 INCOSE CAB associate seats to expose early-in-career engineers to INCOSE for one year. After one year of CAB associate benefits, each participant will be encouraged to become a full INCOSE member and to give the vacated CAB associate seat to another early-in-career employee. CAB associate membership includes a free electronic copy of the INCOSE Handbook, providing those nominated to the learning program with the needed learning material.

In the pilot, learning objectives will be used to describe what participants should be able to accomplish as a result of the study. Lastly, the content was modularized: each module covers one topic, lasts 30 min or less, and is tagged with a difficulty level (entry, intermediate, or advanced). Future work for the content and learning environment attributes will be to build a repository of learning modules for on-demand learning.
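The modularization rules above (one topic, 30 min or less, a difficulty tag) could be captured as a simple record type for the planned on-demand repository. This is a hypothetical data model; the class, field, and function names are our own illustration, not an existing system.

```python
from dataclasses import dataclass
from enum import Enum


class Difficulty(Enum):
    ENTRY = "entry"
    INTERMEDIATE = "intermediate"
    ADVANCED = "advanced"


MAX_MINUTES = 30  # the pilot's stated upper bound on module duration


@dataclass(frozen=True)
class LearningModule:
    """Hypothetical repository record for one modularized learning unit."""
    title: str
    topic: str            # exactly one topic per module
    duration_min: int
    difficulty: Difficulty

    def __post_init__(self) -> None:
        if not self.topic.strip():
            raise ValueError("a module must name the single topic it covers")
        if not 0 < self.duration_min <= MAX_MINUTES:
            raise ValueError(f"duration must be between 1 and {MAX_MINUTES} minutes")


def filter_by_difficulty(modules: list[LearningModule], level: Difficulty) -> list[LearningModule]:
    """A query a future on-demand repository might support (hypothetical)."""
    return [m for m in modules if m.difficulty is level]


if __name__ == "__main__":
    catalog = [
        LearningModule("Life cycle stages overview", "life cycle stages", 25, Difficulty.ENTRY),
        LearningModule("Trade study basics", "trade studies", 30, Difficulty.INTERMEDIATE),
    ]
    print([m.title for m in filter_by_difficulty(catalog, Difficulty.ENTRY)])
```

Validating the rules when a record is created keeps non-conforming modules out of the repository instead of relying on later review.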

Recall that content was linearly connected to support. Therefore, in the pilot program, support was addressed third. Support means having SE SMEs actively measure learning program performance and effectiveness. Effectiveness was measured through multiple engagements with the participants: (1) pre- and post-knowledge checks, (2) end-of-course surveys, (3) weekly office hours at a defined time, in which SE SMEs answered SE discipline questions, and (4) career coaching services for systems engineers offered by SE SMEs. Feedback from these engagements revealed what the participants did and did not learn, which led to revised instructor presentations and polling questions. Two SE SMEs were dedicated to the learning program during the pilot, actively engaging with the participants. As a result of these engagements, (1) knowledge checks were modified, (2) end-of-course surveys led to amended agendas, (3) office hours led to a make-up session for a previously held session, and (4) coaching services led to one-on-one learning support.

The pilot program is in the fifth week of execution. This research will continue to gain data to measure program effectiveness, and the SE SMEs will continue to modify the program from the feedback received. The following section reports the data to date.

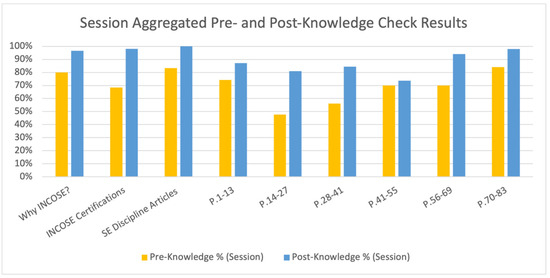

4.2. Pilot Program—Quantitative Analysis

As described in Section 4.1, engagements were held during the pilot modules to gain information from the participants. Session-aggregated pre- and post-knowledge check results are shown in Figure 5. In every session, participants performed better on the assessment after the session; participants regressed on only five of 60 questions. This means that participants improved on 92% of the questions (55 of 60) and regressed on only 8% (5 of 60). While learning retention rates vary, it is commonly cited that a learner retains 10% of what he or she hears, 20% of what he or she reads, 50% of what he or she sees, and 90% of what he or she does [32]. The case study results show favorable improvements in knowledge. Table 9 provides a statistical summary of this improvement at the session-aggregated level, including the results of a difference-of-two-population-proportions hypothesis test with a null hypothesis of no improvement and an alternative hypothesis of improvement. The results are significant at the α = 0.05 level (a 95 percent confidence level) for eight of nine sessions. For the remaining session, pp. 42–55, the improvement would be significant only at a confidence level of about 64 percent (a p-value of about 0.36), well below the 95 percent level defined for the case study; session pp. 42–55 therefore did not show a significant knowledge improvement. The facilitators reviewed the identified knowledge gaps at the beginning of the next session, which did not change the knowledge check scores but helped the participants understand the material.
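The session-level hypothesis test described above can be sketched with the Python standard library. The example assumes a pooled, one-sided difference-of-two-proportions z-test (null hypothesis: the post-session proportion correct is no higher than the pre-session proportion) and uses hypothetical counts rather than the values in Table 9; `NormalDist` from the `statistics` module supplies the standard normal CDF.

```python
from statistics import NormalDist


def two_proportion_z_test(correct_pre: int, n_pre: int,
                          correct_post: int, n_post: int) -> tuple[float, float]:
    """One-sided pooled z-test. H0: p_post <= p_pre; H1: p_post > p_pre.

    Returns the z statistic and the one-sided p-value.
    """
    p_pre = correct_pre / n_pre
    p_post = correct_post / n_post
    pooled = (correct_pre + correct_post) / (n_pre + n_post)
    se = (pooled * (1 - pooled) * (1 / n_pre + 1 / n_post)) ** 0.5
    if se == 0:
        raise ValueError("standard error is zero (all answers correct or all incorrect)")
    z = (p_post - p_pre) / se
    p_value = 1 - NormalDist().cdf(z)
    return z, p_value


def is_significant(p_value: float, alpha: float = 0.05) -> bool:
    """Reject H0 (no improvement) when the p-value falls below alpha."""
    return p_value < alpha


if __name__ == "__main__":
    # Share of questions on which participants improved, as quoted in the text: 55 of 60.
    print(f"improved share: {(60 - 5) / 60:.0%}")
    # Hypothetical session: 30 of 60 answers correct before, 45 of 60 after.
    z, p = two_proportion_z_test(30, 60, 45, 60)
    print(f"z = {z:.3f}, one-sided p = {p:.4f}, significant: {is_significant(p)}")
```

A p-value of about 0.36, as implied for session pp. 42–55, would fail `is_significant` at α = 0.05. Because the same participants take both checks, a paired test is a possible alternative; the sketch follows the two-population-proportion test named in the text.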

Figure 5.

Session-aggregated scores for pre- and post- knowledge checks.

Table 9.

Session-aggregated hypothesis testing.

An end-of-course survey also gathered participants’ sentiments about their experiences of the sessions. For these data, the Likert scale results are simply split into ‘positive’, ‘neutral’, or ‘negative’ sentiments. Each survey includes 10 questions and is repeated across sessions. Responses are predominantly positive across sessions (Table 10); this supports the knowledge check results in Table 9, suggesting that the sessions effectively deliver relevant information to participants.
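Collapsing Likert responses into sentiment categories is a small mapping step. The paper does not specify which scale points count as positive or negative, so the sketch below assumes the common split of 4–5 positive, 3 neutral, and 1–2 negative on a 5-point scale; the response values are hypothetical.

```python
from collections import Counter


def sentiment(score: int) -> str:
    """Map one 5-point Likert response to a sentiment label (assumed cut points)."""
    if score not in range(1, 6):
        raise ValueError("Likert responses must be integers from 1 to 5")
    if score >= 4:
        return "positive"
    if score == 3:
        return "neutral"
    return "negative"


def sentiment_shares(responses: list[int]) -> dict[str, float]:
    """Fraction of responses in each sentiment category."""
    if not responses:
        raise ValueError("no responses to summarize")
    counts = Counter(sentiment(s) for s in responses)
    total = len(responses)
    return {label: counts[label] / total for label in ("positive", "neutral", "negative")}


if __name__ == "__main__":
    # Hypothetical responses to one survey question across participants.
    print(sentiment_shares([5, 4, 4, 3, 2]))
```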

Table 10.

Session-aggregated summary of end-of-the-course survey results.

The SE council members analyzed the proposed SE learning modules for the identified key attributes to understand the maturity of early-in-career learning modules utilizing data from Table 9 and Table 10. A Likert score of 1–5, one being immature and five being mature, was assigned to indicate the level of maturity of each key attribute for each module. Table 11 depicts the scoring.

Table 11.

New proposed SE modules—maturity level per key attribute.

The average maturity score for the proposed SE learning modules was 4.71; because the proposed modules were designed to focus on the key attributes, favorable results were expected. Content and learning environment scored below 5 because the repository of on-demand learning modules will not exist until next year.

4.3. Pilot Program—Qualitative Analysis

As described in Section 4.1, engagements were held during the pilot modules to gain information from the participants. One of the engagements included a qualitative section in the end-of-course survey where participants could give open feedback. The SE SMEs who facilitated the course also provided comments, which are marked in Table 12 as facilitator input. The SE SME facilitators analyzed all comments, and immediate improvements were made before the next module started, as noted in Table 12. These actions by the SE SMEs fall under the support, sustainment, and assessment learning attributes.

Table 12.

New proposed SE modules—qualitative feedback from participants/facilitators.

Having engagements with the participants in place, such as knowledge checks, surveys, office hours, and coaching services, allowed the SE SMEs to understand which content was and was not learned and to adjust accordingly before the next module. To date, this process has been effective, and it will be continued through the end of the program.

5. Closing

5.1. Discussion of TD SE Process Model to Propose a TD SE Learning Program

To complete this case study, the SE council members met to discuss whether, compared with current practices, using the TD SE process model in the concept stage stimulated the relationships and conversations needed to effectively understand the problem set across the entire product life cycle.

Table 13 provides highlights from the SE council members’ discussion.

Table 13.

SE council members—discussion highlights.

Let us address the research question, “Does the utilization of the TD SE process model in the concept stage effectively assist in the proposal and understanding of the baseline maturity for a TD SE learning program?” The SE council members found the use of the TD SE process model in the concept stage to be effective in proposing a TD SE learning program for early-in-career engineers. This research highlights the benefits of a collective impact on the business when the entire product life cycle is considered in the concept stage.

Early in the process, there were questions about why disciplines outside of systems engineering were included in this research. The lead author explained the importance of a collective impact, and after this research was briefed to leadership and the learning effectiveness was reported, the business recognized the collective intelligence it had gained. Disciplinary convergence was then understood and accepted within the business.

5.2. Final Remarks

Transdisciplinary collaboration can accelerate the pace of new discoveries, the expansion of human knowledge, and the rate at which research contributes to understanding the problem. Transdisciplinary approaches complement existing systems engineering techniques and offer a useful framework for addressing complex problems. Transdisciplinary collective intelligence is a new mode of information gathering, knowledge creation, and decision-making that draws on expertise from a broader range of organizations and collaborative partnerships, and it can thereby selectively and collectively initiate a successful collective impact.

In this research, the authors used a transdisciplinary process model in the concept stage to determine whether it could provide a broader and more expedient understanding of the problem set across the product life cycle and thereby bring maturity to proposal generation. In this case study, using a transdisciplinary process model in the concept stage enabled the successful proposal of an effective learning program for early-in-career engineers.

The NASA Jet Propulsion Laboratory (JPL) Team X approach was reviewed as an alternative way to streamline proposal generation. Team X was created in 1995 as a multidisciplinary team of engineers that uses concurrent engineering methods for proposal generation [33]. Concurrent engineering allows teams to build knowledge of conceptual solutions in parallel before narrowing the solution sets by eliminating inferior options [4]. The proposal team’s workstations are networked, supporting an interactive environment in which data management, modeling, and simulation tools are used to design, analyze, and evaluate concepts [33].
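Narrowing the solution sets by inferiority can be illustrated with a dominance filter: a concept is discarded when another concept is at least as good on every criterion and strictly better on at least one. The Python sketch below assumes hypothetical concepts scored on cost and schedule (lower is better) and capability (higher is better). Pareto dominance is one common way to express inferiority; it is not presented as the specific Team X or set-based concurrent engineering procedure described in [4] and [33].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Concept:
    name: str
    cost: float        # hypothetical units; lower is better
    schedule: float    # hypothetical months; lower is better
    capability: float  # hypothetical score; higher is better


def dominates(a: Concept, b: Concept) -> bool:
    """True if `a` is no worse than `b` on every criterion and better on one."""
    no_worse = (a.cost <= b.cost and a.schedule <= b.schedule
                and a.capability >= b.capability)
    better = (a.cost < b.cost or a.schedule < b.schedule
              or a.capability > b.capability)
    return no_worse and better


def narrow(concepts: list[Concept]) -> list[Concept]:
    """Keep only the concepts that no other concept dominates."""
    return [c for c in concepts
            if not any(dominates(o, c) for o in concepts if o is not c)]


if __name__ == "__main__":
    candidates = [
        Concept("A", cost=10, schedule=12, capability=7),
        Concept("B", cost=12, schedule=10, capability=8),
        Concept("C", cost=13, schedule=13, capability=6),  # worse than A everywhere
    ]
    print([c.name for c in narrow(candidates)])  # ['A', 'B']
```

With these placeholder values, concept C is dropped because A dominates it, while A and B both survive because each is better than the other on at least one criterion. That surviving set is what a team would carry forward and refine in parallel.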

NASA JPL’s Team X approach and the TD SE process model share a drive to improve proposal generation by including experts from multiple disciplines, increasing collaboration, and using tools to make decisions earlier rather than later. They differ in two respects. First, the TD SE process model seeks experts outside of engineering, which facilitates the collective impact needed to solve complex problems. Second, it uses TD engineering tools such as Interpretive Structural Modeling (ISM), which was used in this paper’s case study and provides a systematic and comprehensive method for developing first-version models that are well suited to proposal generation [22].
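Interpretive Structural Modeling builds a reachability matrix from pairwise influence relations, closes it transitively, and then partitions the elements into levels: an element belongs to the current top level when every remaining element it reaches also reaches it. The Python sketch below shows these core steps using Warshall’s transitive-closure algorithm. It is an illustrative sketch, not the ATLAS ISM tool’s implementation, and the four element names and their relations are hypothetical.

```python
def transitive_closure(m: list[list[int]]) -> list[list[int]]:
    """Final reachability matrix via Warshall's algorithm (made reflexive)."""
    n = len(m)
    r = [row[:] for row in m]
    for i in range(n):
        r[i][i] = 1  # every element reaches itself
    for k in range(n):
        for i in range(n):
            if r[i][k]:
                for j in range(n):
                    if r[k][j]:
                        r[i][j] = 1
    return r


def level_partition(r: list[list[int]]) -> list[list[int]]:
    """Group element indices into ISM levels, top level first."""
    remaining = set(range(len(r)))
    levels: list[list[int]] = []
    while remaining:
        level = []
        for i in remaining:
            reach = {j for j in remaining if r[i][j]}
            antecedent = {j for j in remaining if r[j][i]}
            if reach <= antecedent:  # equivalent to R(i) & A(i) == R(i)
                level.append(i)
        levels.append(sorted(level))
        remaining -= set(level)
    return levels


if __name__ == "__main__":
    # Hypothetical elements; m[i][j] = 1 means element i influences element j.
    names = ["stakeholder needs", "SME input", "module content", "learning program"]
    m = [
        [0, 0, 1, 0],
        [0, 0, 1, 0],
        [0, 0, 0, 1],
        [0, 0, 0, 0],
    ]
    for depth, level in enumerate(level_partition(transitive_closure(m)), start=1):
        print(depth, [names[i] for i in level])
```

For this placeholder model, the learning program lands on level 1, the module content on level 2, and both inputs on level 3, because each input reaches the module content and, through it, the learning program. The transitive closure guarantees that every pass finds at least one element, so the loop terminates even when elements influence each other in a cycle.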

This research stems from the need to expedite proposal generation. Industry proposal generation is a complex problem that is often intertwined with societal problems, and industry’s proposal process is insufficient to meet the real-time needs of customers who require products and services immediately.

Author Contributions

This paper was written as L.F.’s dissertation. A.E. was the advisor of L.F. Conceptualization. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Raytheon Technologies (RTX), which funded Ford’s Ph.D. study through yearly tuition payments. No funding number to report.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to restrictions (e.g., privacy).

Acknowledgments

The authors would like to acknowledge the support of those who participated in the Nominal Group Technique and focus groups for this research. A special thank you to the numerous colleagues at Raytheon Technologies (RTX) who provided support during the authors’ absence while this research was being performed.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Madni, A.M. Transdisciplinary Systems Engineering; Springer: Berlin/Heidelberg, Germany, 2018; ISBN 978-3-319-62183-8.

- INCOSE. INCOSE Systems Engineering Handbook: A Guide for System Life Cycle Processes and Activities, 5th ed.; Wiley: Hoboken, NJ, USA, 2023.

- De Luce, D.; Dilanian, K. Can the U.S. and NATO Provide Ukraine with Enough Weapons? NBC News, 31 March 2022. Available online: https://www.nbcnews.com/politics/national-security/can-us-nato-provide-ukraine-enough-weapons-rcna22066 (accessed on 1 September 2022).

- Flores, M. Set-Based Concurrent Engineering (SBCE): Why Should You Be Interested? Lean Analytics Association. 2 December 2018. Available online: http://lean-analytics.org/set-based-concurrent-engineering-sbce-why-should-you-be-intereste (accessed on 1 September 2022).

- Schwaber, K. Agile Project Management with Scrum, 1st ed.; Microsoft Press: Redmond, WA, USA, 2004.

- Sols Rodriguez-Candela, A. Systems Engineering Theory and Practice, 1st ed.; Universidad Pontificia Comillas: Madrid, Spain, 2014.

- Ertas, A.; Maxwell, T.; Rainey, V.P.; Tanik, M.M. Transformation of higher education: The transdisciplinary approach in engineering. IEEE Trans. Educ. 2003, 46, 289–295.

- Ertas, A. Creating a Culture of Transdisciplinary Learning in STEAM Education for K-12 Students. Transdiscipl. J. Eng. Sci. 2022, 13, 233–244.

- Ertas, A.; Rohman, J.; Chillakanti, P.; Baturalp, T.B. Transdisciplinary Collaboration as a Vehicle for Collective Intelligence: A Case Study of Engineering Design Education. Int. J. Eng. Educ. 2015, 31, 1526–1536.

- Warfield, J. Structuring Complex Systems; Battelle Monograph, No. 3; Battelle Memorial Institute: Columbus, OH, USA, 1974.

- Warfield, J. A Philosophy of Design; Document Prepared for Integrated Design & Process Technology Conference; Wiley: Austin, TX, USA, 1995; p. 10.

- Ertas, A. Transdisciplinary Design Process; John Wiley & Sons: Hoboken, NJ, USA, 2018; pp. 9–13.

- Denning, P.J. Mastering the mess. Commun. ACM 2007, 50, 21–25.

- Rittel, H.W.J.; Webber, M.M. Dilemmas in a general theory of planning. Policy Sci. 1973, 4, 155–169.

- ISO/IEC/IEEE 15288:2023; Systems and Software Engineering—System Life Cycle Processes. The International Organization for Standardization, The International Electrotechnical Commission, and The Institute of Electrical and Electronics Engineers: Geneva, Switzerland, 2023.

- Sinakou, E.; Donche, V.; Boeve-de Pauw, J.; Van Petegem, P. Designing Powerful Learning Environments in Education for Sustainable Development: A Conceptual Framework. Sustainability 2019, 11, 5994.

- Hwang, S. Effects of Engineering Students’ Soft Skills and Empathy on Their Attitudes toward Curricula Integration. Educ. Sci. 2022, 12, 452.

- Hughes, A.J.; Love, T.S.; Dill, K. Characterizing Highly Effective Technology and Engineering Educators. Educ. Sci. 2023, 13, 560.

- Hartikainen, S.; Rintala, H.; Pylvas, L.; Nokelainen, P. The Concept of Active Learning and the Measurement of Learning Outcomes: A Review of Research in Engineering Higher Education. Educ. Sci. 2019, 9, 276.

- Van Bossuyt, D.L.; Beery, P.; O’Halloran, B.M.; Hernandez, A.; Paulo, E. The Naval Postgraduate School’s Department of Systems Engineering Approach to Mission Engineering Education through Capstone Projects. Systems 2019, 7, 38.

- Kans, M. A Study of Maintenance-Related Education in Swedish Engineering Programs. Educ. Sci. 2021, 11, 535.

- Watson, R. Interpretive Structural Modeling—A Useful Tool for Technology Assessment? Technol. Forecast. Soc. Chang. 1978, 11, 165–185.

- Ertas, A.; Gulbulak, U. Managing System Complexity through Integrated Transdisciplinary Design Tools; Atlas Publishing: San Diego, CA, USA, 2021; ISBN 978-0-9998733-1-1.

- Harary, F.; Norman, R.V.; Cartwright, D. Structural Models: An Introduction to the Theory of Directed Graphs; Wiley: New York, NY, USA, 1965.

- Duperrin, J.C.; Godet, M. Methode de Hierarchisation des Elements d’un Système; Rapport Economique du CEA Publishing; CEA: Budapest, Hungary, 1973; R-45-51.

- Mandal, A.; Deshmukh, S.G. Vendor selection using interpretive structural modeling (ISM). Int. J. Oper. Prod. Manag. 1994, 14, 52–59.

- ATLAS. (n.d.). ATLAS ISM Tool. Available online: https://theatlas.org (accessed on 1 August 2023).

- Delbecq, A.L.; VandeVen, A.H. A group process model for problem identification and program planning. J. Appl. Behav. Sci. 1971, 7, 466–491.

- McMillan, S.S.; King, M.; Tully, M.P. How to use the nominal group and Delphi techniques. Int. J. Clin. Pharm. 2016, 38, 655–662.

- Cearlock, D.B. Common Properties and Limitations of Some Structural Modeling Techniques. Ph.D. Thesis, University of Washington, Seattle, WA, USA, 1977.

- INCOSE Systems Engineering Vision 2035 Copyright 2021 by INCOSE. 2023. Available online: https://www.incose.org/docs/default-source/se-vision/incose-se-vision-2035.pdf?sfvrsn=e32063c7_10 (accessed on 1 August 2023).

- Galindo, I. The Formula for Successful Learning: Retention. Columbia Theological Seminary. 2022. Available online: https://www.ctsnet.edu/the-formula-for-sucessful-learning-retention/ (accessed on 1 December 2023).

- NASA Jet Propulsion Laboratory. Team X. 1995. Available online: https://jplteamx.jpl.nasa.gov (accessed on 1 December 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).