1. Introduction

The rising level of uncertainty in most organizations, industries, and other areas increases the complexity of decision-making. Parties tasked with making critical decisions must take into account a growing number of criteria in order to identify and address all the risks and meet the expectations of varied stakeholders [1]. In this situation, a traditional approach to decision-making that is based on intuition and previous experience might no longer capture all the relevant nuances of particular settings. Accordingly, scientists and practitioners are looking for formalized decision-making instruments that would minimize the risk of bias and maximize the quality of final decisions. One possible solution is to use mathematical techniques such as multi-criteria decision-making (MCDM) methods. MCDM is a useful instrument that is frequently used in several fields of research and industry. It emerged as a set of novel techniques for facilitating and improving decision-making in situations where the consideration of multiple criteria is required [1]. One of the advantages of using MCDM is that it can generate consistent, structured, rational, robust, and transparent information in complex situations [2]. The academic literature defines MCDM as an approach that involves the analysis of various available choices in a particular situation [3]. MCDM is successfully utilized in numerous areas, including business, medicine, engineering, government, and even daily life [4,5,6,7,8,9]. There are many customized MCDM tools that were specifically designed for use in particular areas. The MCDM paradigm implies following a series of steps. According to Karunathilake et al. [10], a typical MCDM process entails formulating a problem, identifying requirements, setting goals, determining alternatives, developing criteria, and applying a decision-making technique. Decision-makers often apply MCDM techniques when they need to weigh conflicting criteria. For instance, risk and potential return are conflicting criteria in portfolio management, as investors try to choose an optimal portfolio based on their risk tolerance levels and return expectations [11]. In comparison with many other instruments, MCDM can encompass many different factors. For example, the research by Kabassi [7], which reviews MCDM models for evaluating environmental education programs, considers such criteria as adaptivity, completeness, pedagogy, clarity, effectiveness, knowledge, skills, behaviors, enjoyment, and multimodality. A critical analysis of the ways in which MCDM techniques could be applied in various settings remains a topical research area. In organizations, decision-makers, analysts, and specialists are the parties involved in identifying which MCDM models are to be implemented in the decision-making process [2,12].

1.1. Motivation of the Study

One of the areas in which the MCDM approach can be applied is the educational sector. Higher education institutions may face challenges in many aspects, such as providing high-quality education, obtaining top world rankings, or increasing self-funding. The fast growth of education models has gradually drawn the attention of scholars to evaluation models [13]. These challenges are due to many factors facing educational institutions. A successful education system requires evaluation at all levels, from the institution to the departments to the study programs. Therefore, it is crucial to continuously measure and evaluate the performance of faculties, departments, and centers in an educational institution so that decision-makers can make decisions that contribute to the achievement of organizational goals.

At the college level, the recent high demand for engineers with excellent capabilities and skills to apply their knowledge creatively and innovatively to solve real-life problems requires an engineering education institution to produce graduates who meet the high standards required by industry, government, and other sectors of society [14]. An effective administration must find the best means and methods to evaluate its engineering colleges and take the necessary measures to ensure that they continue to improve their performance [14,15,16].

Hence, this paper aims to present an effective method for evaluating and ranking departments within a college using integrated MCDM methods. The College of Engineering at one of the major public universities in the Middle East was chosen for investigation because of the worldwide importance of its departments’ outputs and because of the cooperation of its management in the evaluation process at that university.

This paper combines two MCDM methods: the Analytical Hierarchy Process (AHP) and Ranking Alternatives by Perimeter Similarity (RAPS). AHP was chosen for its power to translate management needs, preferences, and strategies onto a qualitative scale. Thus, the AHP in this study was used to calculate the weight of each criterion specified by university experts. RAPS, on the other hand, was chosen for its logical procedures, generality, credibility, and validity [17]. Therefore, RAPS was used in this study to rank the Engineering college’s departments using the weights derived from the AHP calculations. This paper presents the first use of AHP-RAPS in the education sector.

1.2. Structure of the Study

The remainder of the paper is structured as follows:

Section 2 presents an overview of current research using MCDM techniques in general and in the educational sector.

Section 3 describes the AHP and RAPS methods step by step.

Section 4 depicts the numerical phases of the case study as well as the decision-making process’s application methodologies.

Section 5 presents the study’s findings as well as a comparative analysis of different methods with the advantages and disadvantages of the proposed approach.

Section 6 introduces sensitivity analysis by switching the weights of the most sensitive criterion and performing three different cases; thus, the ratings obtained under these cases are represented.

Section 7 concludes the paper and gives recommendations for improving the departments’ outcomes.

2. Background of the MCDM Techniques

MCDM techniques are used to solve complex real-life problems because of their ability to examine various alternatives and choose the best option [18]. There are a large number of MCDM techniques in the literature. However, this paper covers some of the known and used techniques in education. For instance, Elimination Et Choix Traduisant la Realité (ELECTRE) is an MCDM tool that allows identifying and removing alternatives that are outranked by others, thus leaving a set of suitable actions [19]. Instead of trying to solve a problem in the form of a function, ELECTRE focuses on relations between varied solutions. Experts use ELECTRE to analyze the consequences of criteria and compare them with each other based on projected performance [20]. It is important to emphasize that, unlike many other techniques, ELECTRE can be successfully used to analyze the performance of qualitative criteria that cannot be measured using common quantifiable indicators.

Simple Additive Weighting (SAW) is another MCDM technique that seeks to analyze the performance of alternative solutions. Sembiring et al. [21] stated that its goal is to find the weighted sum of the performance ratings of each alternative over all attributes. Implementation of SAW strongly depends on decision-makers, as they are supposed to decide on weight preferences for all the criteria. For example, in research by Ibrahim and Surya [5], which sought to offer a SAW method for selecting the best school in Jambi, decision-makers were supposed to agree on the weight preferences concerning school achievements, school environment, school accreditation, implementation of curricula, and availability of extracurricular activities. The effectiveness of SAW therefore depends to a large extent on the ability of decision-makers to determine adequate criteria weights.
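The weighted-sum idea behind SAW can be sketched in a few lines of Python. This is a minimal illustration, not the procedure of any cited study: the function name `saw_scores`, the linear max/min normalization, and the sample ratings and weights are all assumptions made for the example.

```python
def saw_scores(matrix, weights, benefit):
    """Return one weighted-sum score per alternative (one row of `matrix`).

    `benefit[j]` is True when criterion j should be maximized and False
    when it should be minimized. All values are assumed to be positive.
    """
    n_criteria = len(weights)
    columns = [[row[j] for row in matrix] for j in range(n_criteria)]
    scores = []
    for row in matrix:
        total = 0.0
        for j, value in enumerate(row):
            # Linear normalization: the best value in each column maps to 1.
            if benefit[j]:
                normalized = value / max(columns[j])
            else:
                normalized = min(columns[j]) / value
            total += weights[j] * normalized
        scores.append(total)
    return scores


# Hypothetical data: three schools rated on achievement (benefit),
# accreditation level (benefit), and cost (to be minimized).
ratings = [
    [80, 3, 500],
    [90, 2, 700],
    [70, 3, 400],
]
weights = [0.5, 0.3, 0.2]  # assumed to be agreed on by decision-makers
benefit = [True, True, False]

scores = saw_scores(ratings, weights, benefit)
best = max(range(len(scores)), key=scores.__getitem__)  # index of top school
```

Because the final score is linear in the weights, any change in the weights agreed on by decision-makers feeds directly into the ranking, which is why the choice of weights matters so much for SAW.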

Stepwise Weight Assessment Ratio Analysis (SWARA) is another MCDM technique. It gives policymakers an opportunity to make the best choice in different situations. When using the SWARA technique, experts rank the defined criteria according to their importance [22]: the most important criterion appears in the first position, while the least important one is ranked last. Experts are therefore the main element of the SWARA method.

The Analytic Hierarchy Process (AHP) is a relatively old MCDM technique that remains popular. It was developed in the 1970s by T. L. Saaty [23] and has been enhanced since then. AHP provides a coherent framework for a necessary choice by quantifying its criteria and alternative possibilities and linking those parts to the overall purpose. The approach is a systematic MCDM method that generates weights based on the well-defined mathematical structure of pairwise comparison matrices [24]. Application of the AHP technique starts with problem structuring, which encompasses the definition of a problem and the identification and structuring of criteria and alternatives [19,20]. Evaluation of alternatives occurs through the steps of judging relative values, judging relative importance, aggregating group judgments, and analyzing the inconsistency of judgments [25]. Finally, decision-makers select optimal alternatives based on the calculation of the criteria’s weights and alternatives’ priorities as well as the results of the sensitivity analysis [26]. The ability to quantify complex and ambiguous criteria and alternatives explains the popularity of AHP.

The Best Worst Method (BWM) is an MCDM technique that was introduced by Rezaei [27]. The BWM technique can evaluate data in a structured manner with fewer input data than comparable methods [28], such as the AHP technique. Many authors in a variety of fields and sectors have applied the BWM technique [29]. It may be used to assess alternatives in relation to the criteria and to determine the relevance of the criteria that are utilized in finding a solution to achieve the major objective(s) of the problem. The BWM involves fewer pairwise comparisons and fewer data points than other MCDM techniques, and it is characterized by its reference pairwise comparison. Data for pairwise comparisons can be obtained in the BWM technique using the survey method [29]. According to Khan et al. [29], a detailed overview of the BWM technique is provided in the study by Mi et al. [30].

VIseKriterijumska Optimizacija I Kompromisno Resenje (VIKOR) is another MCDM technique. The name of the VIKOR method could be loosely translated from the Serbian language as “multi-criteria optimization and compromise solution” [31]. The emergence of this methodology was a response to the popular call for developing tools aimed at finding a compromise solution, which was first expressed by Yu [32]. The method implies evaluating and choosing alternatives on the basis of conflicting criteria by describing how close each alternative is to the hypothetical “ideal” solution.

Unlike VIKOR, the Technique for Order of Preference by Similarity to Ideal Solution (TOPSIS) considers both the positive and the negative ideal solutions [26]. The primary purpose of the methodology is to locate an optimal solution that has the shortest distance to the positive ideal solution and the longest distance from the negative ideal solution [33,34]. TOPSIS was introduced in 1981 by Yoon and Hwang [35]. The TOPSIS method has a number of advantages over many other MCDM techniques. In particular, it is relatively simple to use and can be applied in different areas [6]. Furthermore, whereas most methods exclude alternative solutions solely because they do not meet certain criteria, TOPSIS considers trade-offs between criteria, thus ensuring more realistic modeling [26]. TOPSIS is a comprehensive technique that can be effectively used in many areas where the exclusion of alternatives based on predefined criteria would be counterproductive.
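The distance-based logic of TOPSIS can be illustrated with a short sketch. It assumes the commonly used variant with vector normalization and Euclidean distances; the function name `topsis_closeness` and the sample data are hypothetical and are not taken from the cited studies.

```python
import math


def topsis_closeness(matrix, weights, benefit):
    """Return the relative closeness of each alternative to the ideal solution.

    A value near 1 means the alternative is close to the positive ideal
    solution and far from the negative one. Assumes that the alternatives
    are not all identical (otherwise both distances would be zero).
    """
    n = len(weights)
    # Vector normalization of each column, followed by weighting.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n)]
    v = [[weights[j] * row[j] / norms[j] for j in range(n)] for row in matrix]
    cols = [[row[j] for row in v] for j in range(n)]
    # Positive ideal: best value per criterion; negative ideal: worst value.
    ideal = [max(c) if benefit[j] else min(c) for j, c in enumerate(cols)]
    anti = [min(c) if benefit[j] else max(c) for j, c in enumerate(cols)]
    closeness = []
    for row in v:
        d_pos = math.dist(row, ideal)  # distance to the positive ideal
        d_neg = math.dist(row, anti)   # distance to the negative ideal
        closeness.append(d_neg / (d_pos + d_neg))
    return closeness


# Hypothetical data: three alternatives, two benefit criteria and one cost.
ratings = [
    [80, 3, 500],
    [90, 2, 700],
    [70, 3, 400],
]
closeness = topsis_closeness(ratings, [0.5, 0.3, 0.2], [True, True, False])
ranking = sorted(range(len(closeness)), key=lambda i: closeness[i], reverse=True)
```

An alternative that is best on every criterion coincides with the positive ideal solution and receives a closeness of 1, while one that is worst on every criterion receives 0; all other alternatives fall in between, which is how the trade-offs between criteria are expressed.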

The Preference Ranking Organization Method for Enrichment of Evaluations (PROMETHEE) is considered a universal method and is used in a variety of fields [36]. The technique allows for structuring a problem, determining conflicts and actions, and identifying alternatives [25]. The eventual goal of PROMETHEE is to find those solutions that would be suitable for specific parties [36]. Therefore, if another practitioner uses the method to solve the same problem, the results of its application might be fundamentally different. PROMETHEE is used by experts in a range of scenarios, including prioritization, ranking, resource allocation, conflict resolution, and decision-making [25].

Grey Relational Analysis (GRA) is a method that was designed in 1989 by Deng [37] for problems that must be solved in an environment with a high level of uncertainty. In comparison with other techniques, GRA has proved effective in systems with incomplete information [38]. Like VIKOR, GRA considers both positive and negative ideal solutions, using the term “degree of grey relation” to determine their closeness to a particular alternative [36]. The main advantages of GRA are its reliance on the original data and the simplicity of its calculations [38]. The technique offers a flexible process that can be integrated with other MCDM techniques. For example, Chen [4] applied the IF-GRA technique along with the TOPSIS method to select the best supplier of building materials.

Multi-Objective Optimization on the Basis of Ratio Analysis (MOORA) is a relatively novel MCDM technique that was introduced in the 2000s by Brauers and Zavadskas [39]. The main goal of the method is to compare all the alternatives with each other based on their overall performance, which is calculated using sums of normalized performances. Application of the MOORA method implies passing the stages of determining the decision-making matrix, calculating the normalized matrix, calculating the weighted normalized matrix, calculating the overall ratings of alternatives’ cost and benefit criteria, and computing alternatives’ contributions [40]. In practice, however, decision-makers usually employ MULTIMOORA, which is a recent variation of MOORA that is characterized by robustness and an ability to involve all the relevant stakeholders [6,35].
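The MOORA stages listed above can be sketched as follows. The sketch assumes the usual ratio-system form of MOORA (vector normalization, then the weighted benefit ratings minus the weighted cost ratings); the function name `moora_scores` and the data are hypothetical.

```python
import math


def moora_scores(matrix, weights, benefit):
    """Return the overall MOORA rating of each alternative (higher is better)."""
    n = len(weights)
    # Normalize each column by the square root of its sum of squares.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n)]
    scores = []
    for row in matrix:
        score = 0.0
        for j in range(n):
            weighted = weights[j] * row[j] / norms[j]
            # Benefit criteria add to the rating, cost criteria subtract.
            score += weighted if benefit[j] else -weighted
        scores.append(score)
    return scores


# Hypothetical data: two alternatives, one benefit and one cost criterion.
matrix = [
    [10, 1],
    [5, 2],
]
scores = moora_scores(matrix, [0.5, 0.5], [True, False])
ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
```

In this toy case the first alternative has both the higher benefit value and the lower cost, so it is ranked first.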

The Complex Proportional Assessment (COPRAS) technique was designed by Zavadskas and Kaklauskas [41] to select constructors in Lithuania. In the most general view, implementation of the method entails constructing a decision matrix, normalizing the decision-making matrix, determining the weighted matrix, calculating maximizing and minimizing indices for alternatives, calculating alternatives’ relative weights, determining the priority of alternatives, and calculating the performance index for each solution [42]. The method has a number of advantages over other MCDM techniques, such as an ability to consider qualitative criteria, simplicity, the focus on both minimizing and maximizing criteria, and a focus on the utility degree [42]. At the same time, Kraujalienė [6] criticized this method for being sensitive to data variation.

Another MCDM tool is the Additive Ratio Assessment (ARAS) technique. According to Thakkar [9], the main principle of ARAS is that “a utility function value determining the complex relative efficiency of a feasible alternative is directly proportional to the relative effect of values and weights of the main criteria considered in a project”. Like many other tools, ARAS follows a set of steps, including the formation of a decision-making matrix, its normalization, determination of the normalized matrix’s weights, determination of the optimization function’s value, and determination of the highest rank level [43].

Overview of MCDM Techniques Used in the Educational Sector

The academic literature offers a significant amount of information about different MCDM techniques employed in the educational sector. Higher education institutions may face the problem of providing quality education due to the lack of qualified educators. In order to acquire quality teachers, it is vital to develop a suitable technique for the selection and ranking of teachers. Note that the RAPS technique has not been used in any studies so far except for the original research conducted by Urošević et al. [17]. Therefore, this section covers important previous studies that used other MCDM techniques in the educational sector.

The Fuzzy TOPSIS technique was applied to a set of data gathered from various institutions related to higher education sectors, and weights were obtained with the assistance of the AHP method [44]. By using a set of data for 5 criteria and 10 teachers, the Fuzzy TOPSIS method was used to rank the candidates. A university’s position and quality of training are greatly influenced by the research productivity of its lecturers [41,45]. Various objective and subjective criteria should be considered when rating the research productivity of lecturers, such as the number of books produced, research grants secured as project leaders, number of scientific publications, the average number of citations per publication, and many others [42,43,44]. Tuan et al. [46] combined the fuzzy AHP and TOPSIS techniques to evaluate and rank several lecturers.

Because of the rising significance of distance education due to its many advantages, a study conducted by Turan [47] applied both the AHP and the SWARA methods. The purpose of Turan’s [47] study was to assess the factors that affect e-learning technology in the Industrial Engineering department of a Turkish university. The study demonstrates that multi-criteria decision-making methods can be used in e-learning applications to evaluate many factors.

Biswas et al. [48] used a modified SAW technique to analyze the quality of the operation of several Indian institutes of technology. The authors included criteria such as “the percentage of vacant seats during student intake”, “the strength of the faculty”, “research publications”, “the sponsored research fund”, “the number of the students who are employed through the placement cell”, and “the number of the students who opted for higher studies and the number of Ph.Ds. awarded”.

TOPSIS was applied by Kazan et al. [49] to examine student learning performance in schools, using subjects such as Turkish, Mathematics, and Science and Technology as selection criteria. In another study, Koltharkar et al. [50] prioritized the requirements of students at a techno-managerial institute based on the factual performance and importance of eight decision criteria measured by TOPSIS. Moreover, Mohammed et al. [51] used the AHP-TOPSIS technique to rank e-learning types and identify the most appropriate one.

Shekhovtsov and Sałabun [52] demonstrated the use of the TOPSIS and VIKOR methods for webometrics ranking based on dependable quantitative data from universities’ websites. Such measures have significantly changed the competitive nature of universities across the world, although the rankings have often been dogged by controversy. Higher education stakeholders and researchers identified better models, such as the VIKOR method, to assist in providing better webometrics results and rankings for university websites [53]. The approach can be instrumental in improving the quality of education and academic prestige. VIKOR can also eliminate major methodological flaws that have led to controversial debates on rankings. Quality evaluations and modeling in rankings aim at ensuring reliable tools for website ranking and at fostering improvements in education and scholarship quality in higher learning institutions. Ayouni et al. [54] explored the use of the VIKOR method in the evaluation and choice of Learning Management Systems (LMS) in higher learning institutions in a complex environment. The authors proposed a quality framework that selects alternatives from a number of universities. The findings indicate that policymakers in institutions should consider time behavior and understandability. This is instrumental in the development of standards and guidelines for LMS that foster the attainment of education quality.

A multi-criteria methodology based on the PROMETHEE method was applied in the educational sector to select the winner of a best-teacher competition, and the results were derived from multiple criteria set by the educational authorities [55].

Hanifatulqolbi et al. [56] applied the MOORA technique in a web-based management information system to choose the top-performing teacher at an Islamic boarding school. The system can assist the school in rapidly and objectively selecting the top-performing instructor [56].

3. The AHP-RAPS Approach for Evaluating the Productivity of Engineering Departments

The multi-criteria decision-making (MCDM) approach integrates alternative performance measures in conflicting options and results to generate a feasible solution [18]. This methodology involves an assessment of several available choices in complex situations in areas such as medicine, social sciences, technology, and engineering. The qualitative and quantitative data used in the method help in reaching a compromise alternative.

This paper proposes an integrated MCDM technique between AHP and RAPS. The AHP is used to identify the weights of each criterion involved in this study. On the other hand, RAPS is used to rank the alternatives using the weights derived from the AHP technique.

RAPS is a relatively new MCDM technique. It was proposed by Urošević et al. [17] as a novel method named Ranking Alternatives by Perimeter Similarity (RAPS). The idea of developing this method originates from the critical shortcomings of the previous MCDM tools. In particular, despite the fact that TOPSIS is capable of using allocated information, it poorly addresses the issue of attributes’ correlations, which might result in the adverse influence of a deviation of indicators on final results [4]. VIKOR does not have this shortcoming but is problematic in practice due to the need to use initial weights and quantitative data [31]. These issues explain the interest of practitioners in developing new instruments that could help effectively solve problems with multiple criteria while addressing the limitations of the previous tools. Urošević et al. [17] argued that the idea of developing RAPS originated from three constraints of MCDM techniques: the complexity of comparing MCDM methods with each other, the achievement of different results using the same methods, and the reporting of different results when different techniques are used. Despite these limitations, the development of new MCDM methods is a surprisingly unpopular research area [57]. Urošević et al. [17] believed that the availability of numerous MCDM techniques, the small amount of information about new methods, and the significant validation requirements for new methods are the key reasons behind this tendency. The development of RAPS, therefore, could be considered a rare contribution to the academic literature on MCDM techniques.

3.1. Analytical Hierarchy Process (AHP)

As indicated before in this article, Engineering departments at one of the public institutions in the Middle East were ranked using a combination of AHP and RAPS approaches. The methodology of the AHP can be explained in the following steps [58]:

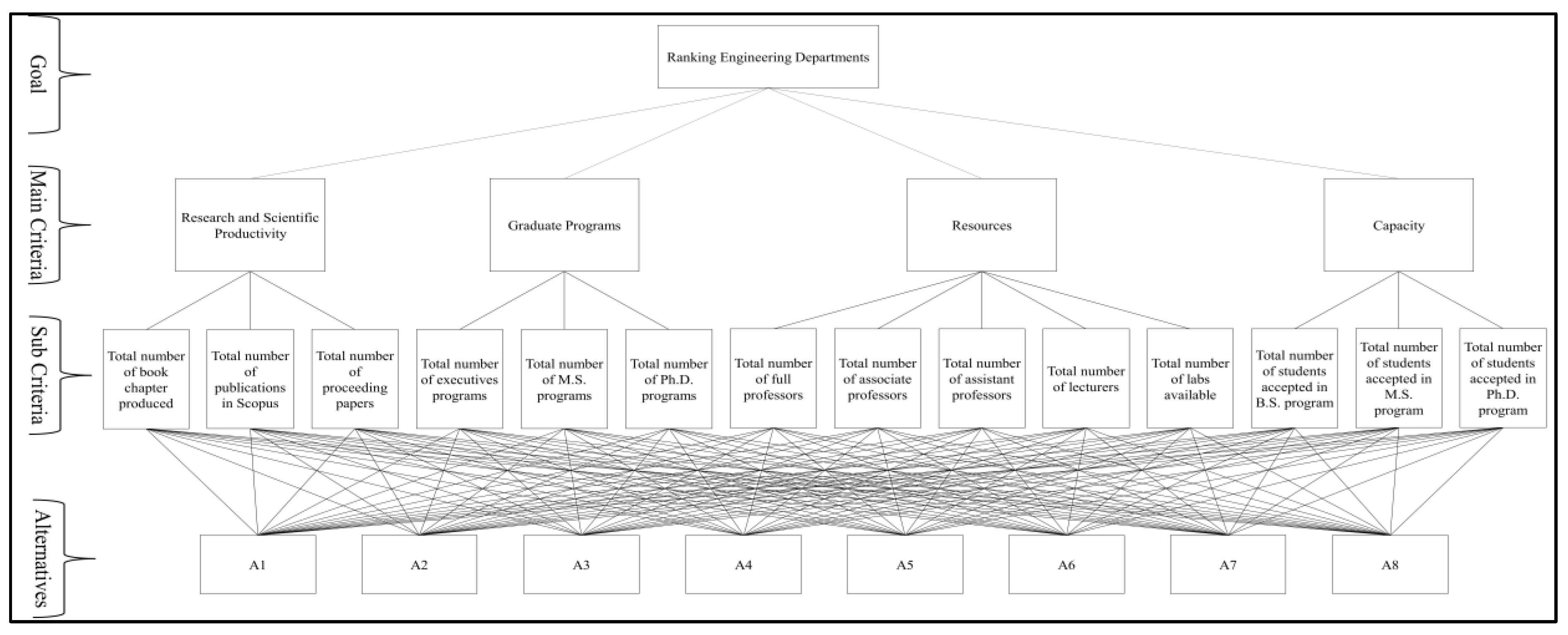

Step #1: A hierarchy of goals, criteria, sub-criteria, and alternatives is used to break down the problem. The hierarchy suggests that components on one level are related to those on the next level down;

Step #2: Data are collected from decision-makers/experts corresponding to the hierarchic structure to create a pairwise evaluation of options on a qualitative scale. The Saaty 1–9 preference scale is used to create the pairwise comparison matrix [59];

Step #3: For multiple factors, the pairwise comparisons created in step 2 are grouped into a square matrix, with one as the diagonal member. If the value of the element (i, j) is greater than 1, the ith row criterion is superior to the jth column criterion; otherwise, the jth column criterion is superior to the ith row criterion. The (j, i) matrix element is the inverse of the (i, j) element;

Step #4: The comparison matrix’s principal eigenvalue and the related normalized right eigenvector show the relative importance of the criteria being evaluated. With regard to the criteria or sub-criteria, the elements of the normalized eigenvector are referred to as weights, and with regard to the alternatives, they are referred to as ratings;

Step #5: Calculate the consistency index (CI) as $CI = (\lambda_{max} - n)/(n - 1)$, where $\lambda_{max}$ is the maximum eigenvalue of the judgment matrix and $n$ is its order. The calculated CI is then compared with a random index (RI); the ratio $CR = CI/RI$ is termed the consistency ratio (CR). It is suggested that the value of CR should be no more than 10%. However, CR values greater than 10% are still considered acceptable in certain circumstances;

Step #6: Obtain the local rating by multiplying the rating of each alternative by the weights of the sub-criteria and summing the result with respect to each criterion. Then, obtain the global ranking by multiplying the weights of the criteria and summing the results.
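Steps #3 to #5 above can be sketched in plain Python. This is an illustrative sketch only: it approximates the principal eigenvector by power iteration instead of calling an eigenvalue solver, the function names are hypothetical, the random index values are the ones commonly tabulated for Saaty's scale, and the pairwise judgments are invented for the example rather than taken from the case study.

```python
def ahp_weights(pairwise, iterations=100):
    """Approximate the normalized principal eigenvector by power iteration.

    Returns (weights, lambda_max) for a positive reciprocal matrix.
    """
    n = len(pairwise)
    w = [1.0 / n] * n
    for _ in range(iterations):
        nxt = [sum(pairwise[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(nxt)
        w = [x / total for x in nxt]  # keep the weights summing to 1
    # Estimate lambda_max as the average ratio (A w)_i / w_i.
    aw = [sum(pairwise[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    return w, lambda_max


# Commonly tabulated random index (RI) values for matrices of order 3 to 10.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24,
                7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def consistency_ratio(lambda_max, n):
    """CR = CI / RI with CI = (lambda_max - n) / (n - 1)."""
    if n <= 2:
        return 0.0  # matrices of order 1 or 2 are always consistent
    ci = (lambda_max - n) / (n - 1)
    return ci / RANDOM_INDEX[n]  # raises KeyError for n > 10 in this sketch


# Hypothetical judgments on the 1-9 scale for three criteria.
pairwise = [
    [1.0, 3.0, 5.0],
    [1 / 3, 1.0, 2.0],
    [1 / 5, 1 / 2, 1.0],
]
weights, lambda_max = ahp_weights(pairwise)
cr = consistency_ratio(lambda_max, len(pairwise))
acceptable = cr <= 0.10  # the 10% threshold mentioned in Step #5
```

For a perfectly consistent matrix, where every entry equals the ratio of two underlying weights, the maximum eigenvalue equals the matrix order, so both CI and CR are zero.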

3.2. Ranking Alternatives by Perimeter Similarity (RAPS)

The methodology of the RAPS technique can be explained in the following steps [17]:

Step #1: In this step, the input data are normalized, which is necessary to convert a multidimensional decision space into a nondimensional one. Equation (1) is used to perform normalization for the max criteria, and Equation (2) is used for the min criteria. Here, the decision-making matrix has m alternatives and n criteria, with i = 1, …, m and j = 1, …, n, and the criteria are divided into a maximization criteria set and a minimization criteria set.

Step #2: The process of normalization yields the normalized decision matrix as shown in Equation (3);

Step #3: Weighted normalization. For each normalized assessment, the weighted normalization in Equation (4) is used. The result is the weighted normalized matrix, which is shown in Equation (5);

Step #4: Determine the optimal alternative by identifying each element of the optimal alternative using Equation (6) which leads to the optimal alternative set in Equation (7);

Step #5: Decomposition of the optimal alternative into two subsets or components. The set Q can be represented as the union of the two subsets, as shown in Equation (8). If k represents the total number of criteria that should be maximized, then h = n − k represents the total number of criteria that should be minimized. Hence, the optimal alternative is shown in Equation (9);

Step #6: The decomposition of the alternative is similar to step #5. This step is the decomposition of each alternative, as shown in Equations (10) and (11);

Step #7: This step calculates the magnitude of each component. The magnitudes of the components of the optimal alternative are calculated using Equations (12) and (13), and those of each alternative using Equations (14) and (15);

Step #8: Ranking the Alternatives by Perimeter Similarity (RAPS). The perimeter of the optimal alternative is represented as the perimeter of a right-angle triangle. The two magnitude components of the optimal alternative are the base and perpendicular sides of this triangle, respectively, and the perimeter is expressed in Equation (16). For each alternative, the perimeter is calculated using Equation (17). The ratio between the perimeter of each alternative and that of the optimal alternative is expressed in Equation (18). Arrange and rank the alternatives in descending order of this perimeter-similarity value.
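The eight steps can be summarized in a compact sketch. Because the equations themselves are not reproduced here, the sketch rests on stated assumptions: linear max/min normalization ($x_{ij}/\max_i x_{ij}$ for max criteria and $\min_i x_{ij}/x_{ij}$ for min criteria), an optimal alternative built from the largest weighted normalized value of each criterion, and Euclidean magnitudes for the two components. The function name `raps_similarity` and the sample data are hypothetical, and the formulation in [17] should be consulted for the authoritative equations.

```python
import math


def raps_similarity(matrix, weights, benefit):
    """Return the perimeter similarity of each alternative (higher is better)."""
    n = len(weights)
    cols = [[row[j] for row in matrix] for j in range(n)]
    # Steps 1-3: normalize (assumed linear max/min form), then apply weights.
    u = [
        [
            weights[j] * (row[j] / max(cols[j]) if benefit[j]
                          else min(cols[j]) / row[j])
            for j in range(n)
        ]
        for row in matrix
    ]
    # Step 4: the optimal alternative takes the best weighted value per criterion.
    q = [max(r[j] for r in u) for j in range(n)]

    def perimeter(values):
        # Steps 5-7: split into max and min components and take magnitudes.
        mag_max = math.sqrt(sum(v * v for j, v in enumerate(values) if benefit[j]))
        mag_min = math.sqrt(sum(v * v for j, v in enumerate(values) if not benefit[j]))
        # Step 8: perimeter of the right-angle triangle with these two sides.
        return mag_max + mag_min + math.hypot(mag_max, mag_min)

    p_optimal = perimeter(q)
    return [perimeter(r) / p_optimal for r in u]


# Hypothetical data: two departments, publications (max) and dropout rate (min).
matrix = [
    [10, 1],
    [5, 2],
]
similarity = raps_similarity(matrix, [0.6, 0.4], [True, False])
ranking = sorted(range(len(similarity)), key=lambda i: similarity[i], reverse=True)
```

In this toy case the first department is best on both criteria, so it coincides with the optimal alternative and its similarity is 1; the second department scores exactly half on every weighted value, so its triangle is half the size and its similarity is 0.5.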

5. Discussion

Figure 2 shows the importance of each criterion based on the weights calculated using the AHP technique. The data obtained from the public university were used in the RAPS technique and are presented in Table 9. The final rank scores for the Engineering departments for 2019, 2020, and 2021 are presented in Table 10.

The most important criterion is C12, which represents the total number of publications by the department in the Scopus database, with a weight of 0.5. The second highest weight belongs to C11, which represents the total number of book chapters produced by the departments, with a weight of 0.15. The third most important criterion is the total number of students accepted in the B.S. program, with a weight of 0.10. The remaining weight is distributed among the rest of the criteria, each receiving between 0.01 and 0.04 based on its importance.

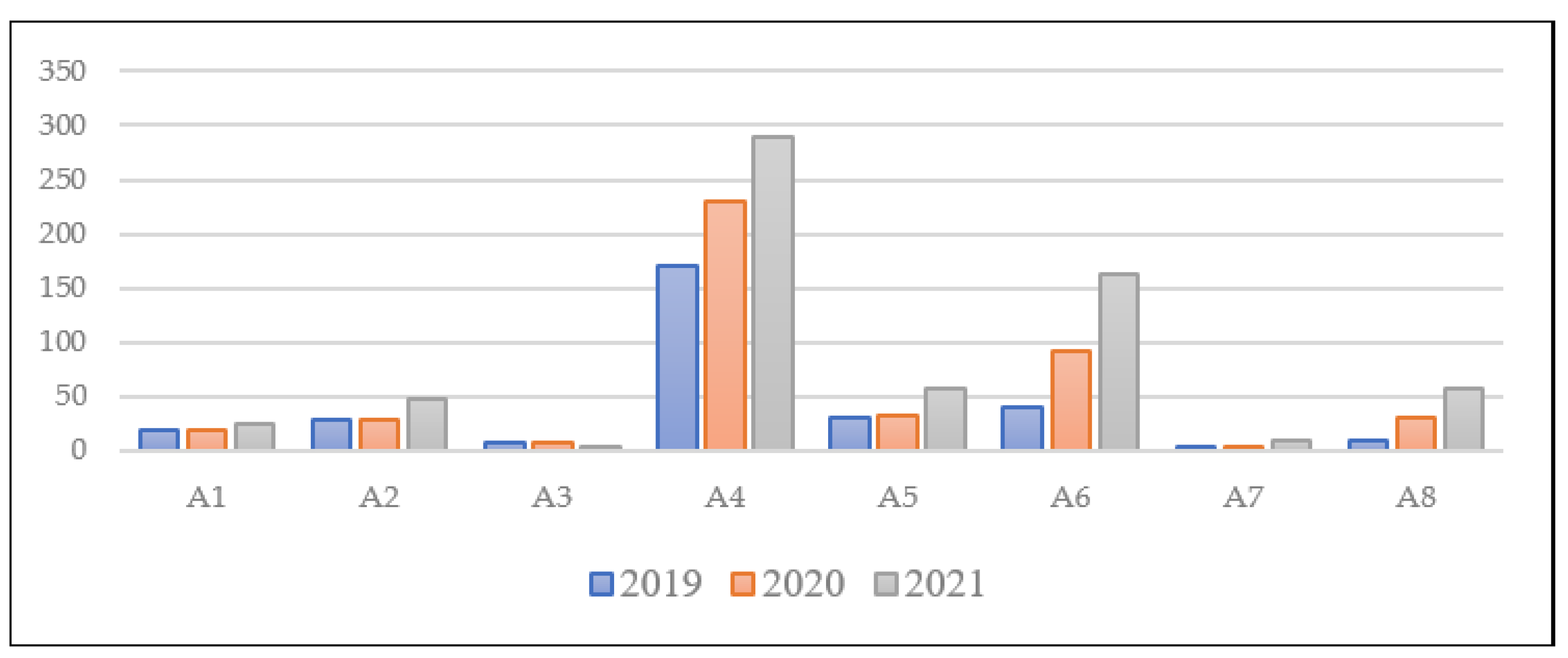

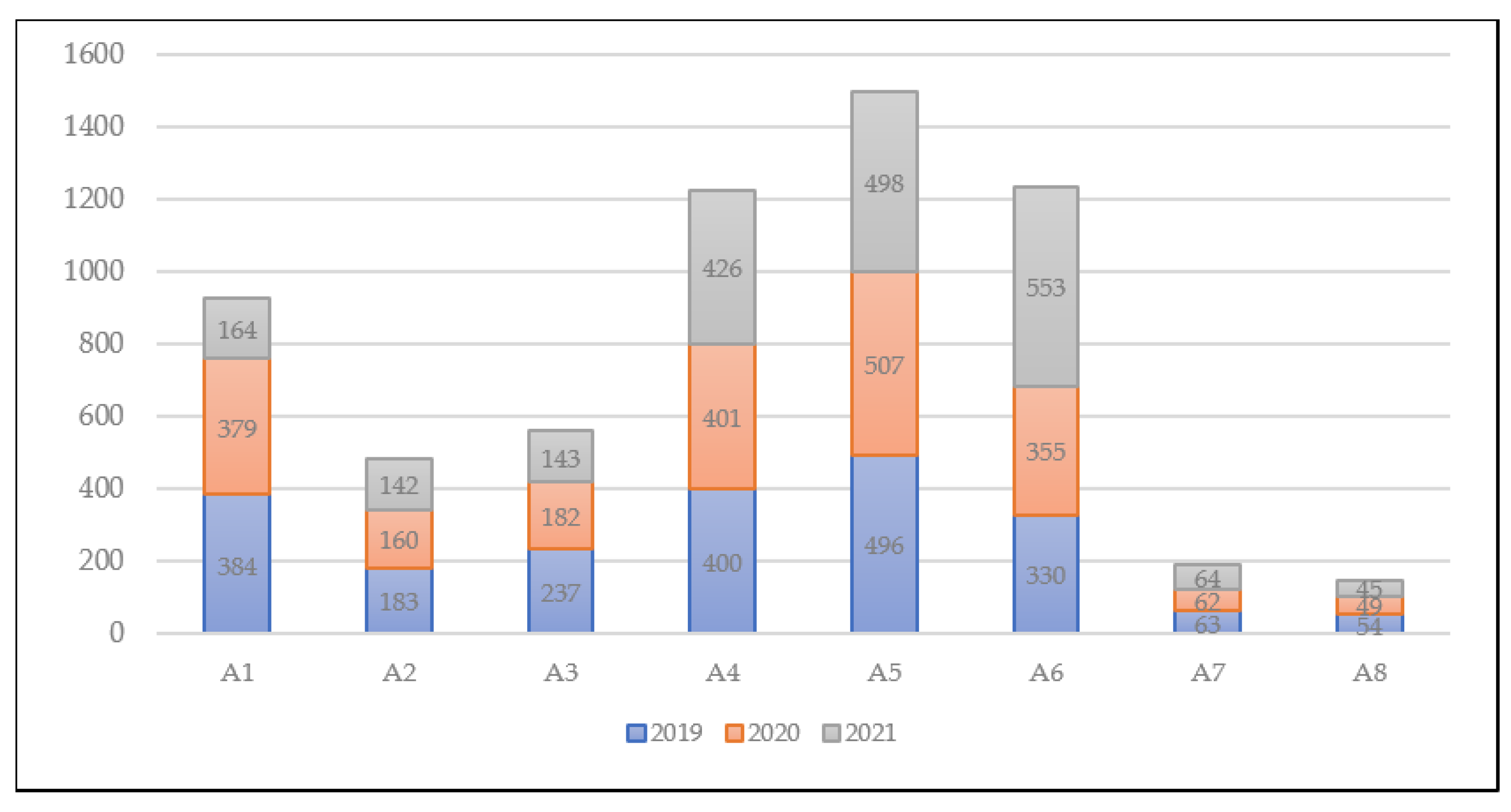

As shown in Table 10, the ranking of some departments was stable over the three years. For instance, “A4” held the top rank in 2019, 2020, and 2021. The number of department publications in the Scopus database contributes to placing “A4” in the highest rank: “A4” clearly outperformed the other departments in the number of published research papers in all three years. In contrast, “A7” ranked in the last position for all three years, and the low number of published papers is a contributing factor. Another advantage that placed “A4” at the top was the number of engineering students enrolled for the bachelor’s degree, which is an important criterion from the college’s point of view. On the other hand, the enrollment of students in department “A7” ranks low among the departments. Moreover, producing book chapters is one of the important criteria from the institution’s point of view, and “A7” produced none in the three years. All these factors led to the low position of department “A7” among the departments.

Table 10 also reveals a fluctuation in the ranking for some departments, such as “A2”, “A5”, and “A6”. For example, in 2019, “A2” ranked fourth; in 2020, it ranked third; and in 2021, it ranked fifth. Despite the lack of published research for department A2 in all three years, the department had a book chapter published in 2019 and 2020, which helped the department to obtain advanced positions in 2019 and 2020.

Table 10 indicates that there is competition between some departments, such as “A5” and “A6”. In 2019, “A5” ranked second, while “A6” ranked third. In 2020, “A5” ranked fourth, while “A6” ranked second. In 2021, “A5” ranked third, and “A6” ranked second. The superiority of “A6” in 2021 was due to its lead in publishing research and in publishing a book; the total number of students joining the department also contributed to its progression.

Figure 2 and Figure 3 show a comparison between departments for the most important criteria.

Comparative Analysis of Different MCDM Methods

Currently, there are numerous MCDM methods available, each with its own advantages and disadvantages. In this study, the AHP method was chosen over the other criteria assessment methods because of its strength in arranging the criteria by importance as well as the presence of a consistency test to ensure that the evaluations are consistent. On the other hand, AHP is subjective in nature, as it relies on translating personal judgments into numerical values. In addition, a large number of criteria demands considerable time and effort to carry out all pairwise comparisons.

The RAPS method was chosen over the other alternative-assessment methods because of its logical procedure, justification, generalizability, validity, and credibility. Although RAPS is considered a robust method, its procedure is also considered relatively complex.

Given the large number of available MCDM methods, it is necessary to compare the results of the proposed method with those of other MCDM methods. Therefore, the results obtained from the proposed method (AHP-RAPS) were compared with those of other well-known MCDM methods, namely TOPSIS, MOORA, and VIKOR. The results of the comparison are presented in Table 11. The ranking order of the alternatives did not change across the MCDM methods in 2019 and 2021, which indicates the stability and validity of the results for the performance of the eight departments in those years. There was a slight difference in the ranking order between the MCDM methods in 2020; however, all MCDM methods in Table 11 agreed on the top two ranks, A4 and A6.
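One way to quantify the agreement between rankings produced by different methods is Spearman's rank correlation coefficient. The sketch below is not part of the original analysis; the rankings are hypothetical placeholders rather than the values in Table 11, and the formula assumes rankings without ties.

```python
def spearman_rho(rank_a, rank_b):
    """Spearman's rank correlation for two tie-free rankings of the same items."""
    if rank_a.keys() != rank_b.keys():
        raise ValueError("both rankings must cover the same alternatives")
    n = len(rank_a)
    d_squared = sum((rank_a[k] - rank_b[k]) ** 2 for k in rank_a)
    return 1.0 - 6.0 * d_squared / (n * (n * n - 1))


# Hypothetical rankings of eight alternatives (placeholders, not Table 11 data).
rankings = {
    "AHP-RAPS": {"A1": 6, "A2": 3, "A3": 5, "A4": 1,
                 "A5": 4, "A6": 2, "A7": 8, "A8": 7},
    "TOPSIS":   {"A1": 5, "A2": 3, "A3": 6, "A4": 1,
                 "A5": 4, "A6": 2, "A7": 8, "A8": 7},
    "VIKOR":    {"A1": 6, "A2": 4, "A3": 5, "A4": 1,
                 "A5": 3, "A6": 2, "A7": 8, "A8": 7},
}

reference = rankings["AHP-RAPS"]
for method, ranks in rankings.items():
    print(method, round(spearman_rho(reference, ranks), 4))
```

A coefficient of 1 means two methods produce identical rankings, while values slightly below 1 correspond to a few swapped positions, such as the small differences described for 2020.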

6. Sensitivity Analysis

Conducting a sensitivity analysis on the weights assigned to each criterion offers a number of benefits to decision-makers. First, it provides an in-depth study of the most sensitive criteria. Second, it allows decision-makers to identify where they can make improvements in the future. Finally, it supports decisions about the continuous improvement of departments and colleges. However, sensitivity analysis has some drawbacks. For example, its results rest on assumptions because the criteria are all based on historical data. The results are therefore not entirely accurate, and there may be room for error when applying the analysis to future predictions.

In this study, three different cases were examined to better understand the relationships between the most sensitive criteria. Since the highest weight was assigned to the C12 criterion, which reflects the top management’s interest in scientific research, its weight was equalized in turn with that of other criteria that have a direct or indirect relationship to scientific research. The rankings obtained under these cases are presented in Table 12.

6.1. Case 1

In the first case, the weights of the total number of publications in Scopus (C12) and the number of proceeding papers (C13) were set to be equal, so that C12 and C13 each carried a weight of 0.275. After changing the values given by the decision-makers for C12 and C13, the ranking order of alternatives changed dramatically in 2019, 2020, and 2021. This indicates that C13 is one of the factors responsible for changing the ranking order of the engineering departments.
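The weight adjustment in this case can be sketched as follows. The sketch assumes that equalizing two criteria means replacing both weights with their mean, which keeps the weights summing to one; the base weights shown are hypothetical, chosen only so that the shared value equals 0.275, and "OTHER" is a placeholder that lumps together all remaining criteria.

```python
def equalize_weights(weights, first, second):
    """Return a copy in which two criteria share the mean of their weights."""
    shared = (weights[first] + weights[second]) / 2.0
    adjusted = dict(weights)  # leave the original weights untouched
    adjusted[first] = shared
    adjusted[second] = shared
    return adjusted


# Hypothetical base weights (not the AHP weights computed in this study).
base = {"C12": 0.50, "C13": 0.05, "OTHER": 0.45}

case1 = equalize_weights(base, "C12", "C13")
print({name: round(value, 3) for name, value in case1.items()})
```

The adjusted weights would then be passed to the ranking method again, and the resulting order compared with the original one to see how sensitive the ranking is to the change.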

6.2. Case 2

In the second case, the weights of the total number of publications (C12) and the total number of M.S. students (C42) were set to be equal, so that C12 and C42 each carried a weight of 0.26. After changing the values given by the decision-makers for C12 and C42, the ranking order of alternatives changed slightly. This shows that C42 is one of the factors that have little impact on the ranking of the engineering departments. It was noted that the number of master’s students might contribute to an increase in the number of research publications, as some universities require graduate students to publish their thesis research as one of the requirements for obtaining the degree.

6.3. Case 3

In the third case, the weights of the total number of publications (C12) and the total number of Ph.D. students (C43) were set to be equal, so that C12 and C43 each carried a weight of 0.255. After changing the values given by the decision-makers for C12 and C43, the ranking changed very slightly. This shows that C43 has a very slight impact on the ranking of the engineering departments. It was noted that the number of Ph.D. students might contribute to an increase in the number of research publications, as some universities require graduate students to publish their thesis research as one of the requirements for obtaining the degree.

7. Conclusions

In complex real-life problems, a decision-making team of experts needs to evaluate and improve the performance of the worst alternatives. There are many methods available in the field of MCDM that rank the alternatives based on the level of performance. One of the areas in which MCDM can be applied is the field of education.

Educational institutions may face challenges in many aspects, such as providing high-quality education, obtaining the highest global ratings, or increasing self-financing. A successful education system requires evaluation at all levels. Therefore, it is necessary to continuously measure and evaluate the performance of faculties, departments, and centers in the educational institution so that decision-makers can make decisions that contribute to achieving organizational goals.

Among all available MCDM methods, this paper focused on integrating AHP and RAPS to rank the departments within a college. The AHP technique was used to establish the weight of each criterion specified in this paper, while the RAPS method was used to rank the alternatives. Data from 2019 to 2021 were used in this study. The use of AHP-RAPS in the educational sector was presented for the first time. Based on the results of the proposed method, the levels of superiority and inferiority of alternatives can be identified, which in turn gives decision-makers more room to take the necessary actions to raise the overall performance of low-ranked alternatives. The College of Engineering in a public university was chosen as the case of this study. The selected university is considered one of the top 150 universities in the world according to the Shanghai classification 2022, which depends on the outcomes of the educational and research process. In addition, the College of Engineering is accredited by the Accreditation Board for Engineering and Technology (ABET).

Based on the calculated criteria weights, the “total number of publications in the Scopus database” (C12) had the highest weight among all criteria, which indicates the importance of scientific research at the selected university. Accordingly, departments with high publication records in the Scopus database performed well.

Sensitivity analysis was performed in this study, and its results showed that the ranking might fluctuate when the weights change between the criteria.

This study recommends that the lowest-ranked departments receive more support, such as encouraging faculty members to publish research papers indexed in the Scopus database or to produce and publish book chapters.

Moreover, this study recommends providing different types of motivation to low-ranking departments to encourage faculty members to publish more research papers, which would help improve the departments’ ranking positions.

This paper also recommends that decision-makers who are attentive to scientific research study the effect of increasing the number of postgraduate students in the departments, which may contribute to an increase in the number of research publications, as some universities require graduate students to publish their thesis research as one of the requirements for obtaining the degree.

Finally, the outcomes of this study suggest that combining AHP and RAPS to evaluate and rank university departments yields highly promising results.