How to Promote Online Education through Educational Software—An Analytical Study of Factor Analysis and Structural Equation Modeling with Chinese Users as an Example

Abstract

1. Introduction

2. Literature

2.1. Current Status of Online Education Development

2.2. The Use of Pertinent Theories in Online Classes

3. Materials and Methods

3.1. Participant

3.2. Measures

3.3. Data Analysis

4. Results

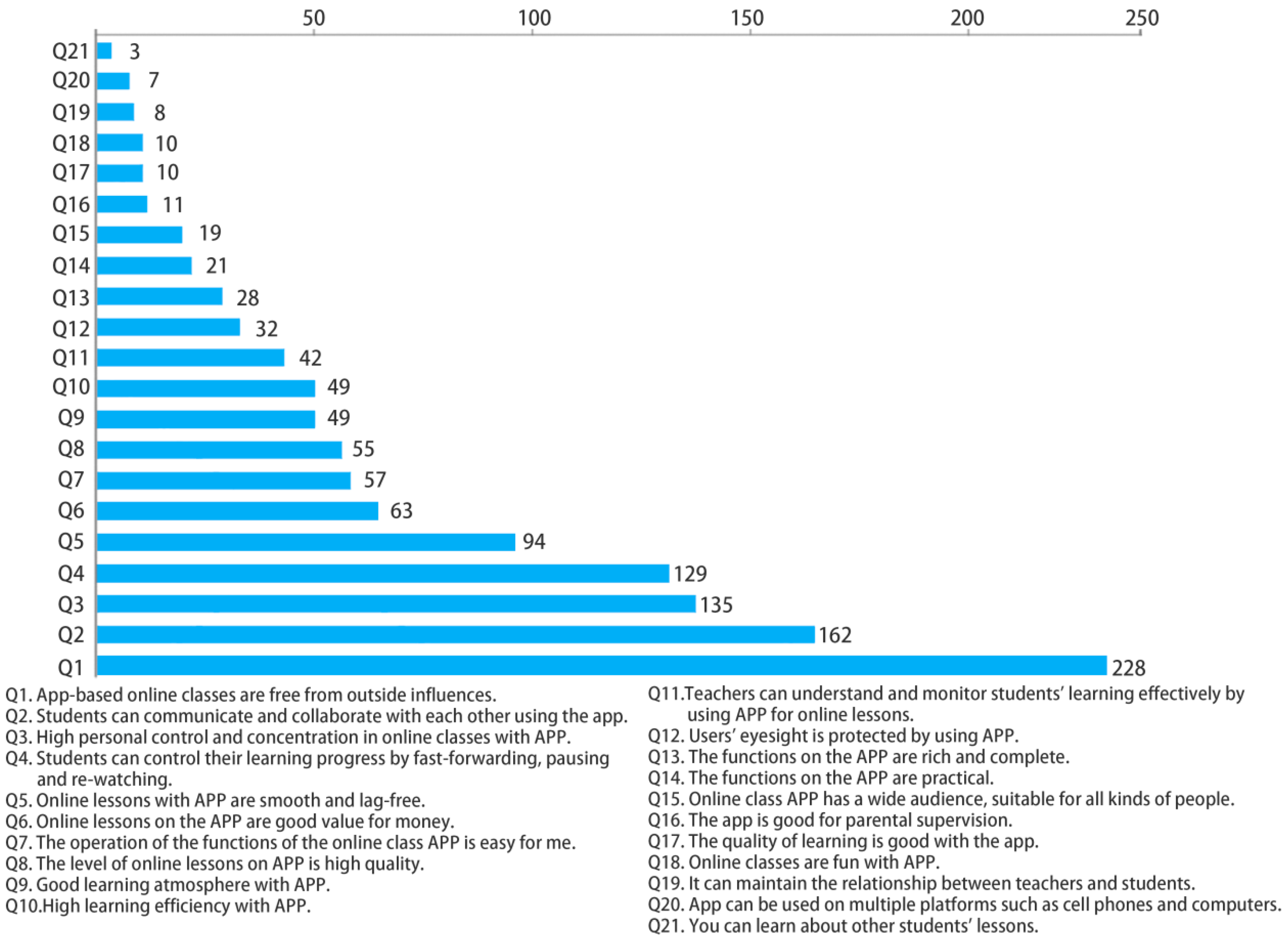

4.1. Results of Exploratory Factor Analysis

4.2. Confirmatory Factor Analysis

5. Discussion

5.1. Discussion of the Results of the Factor Analysis

5.2. Discussion on the Relationship among Factors

5.3. Discussion on Management Significance and Research Contribution

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Expert | Gender | Age | Working Time | Research Direction | Professional Rank |
|---|---|---|---|---|---|
| 1 | Male | 48 | 22 years | Product design; human–computer interaction | Doctoral supervisor; Professor |
| 2 | Female | 32 | 4 years | User perception and preference | Associate professor |

| Number | Question |
|---|---|
| Q1 | In your opinion, what are the benefits of taking online lessons through an app? |
| Q2 | In your opinion, what are the downsides of taking online classes through an app? |
| Q3 | What additional features do you believe could be added to current online class software to make it more efficient? |

| Number | Item | Factor 1 loading | Factor 2 loading | Factor 3 loading | Factor 4 loading | Communality |
|---|---|---|---|---|---|---|
| Q11 | Teachers can understand and monitor students’ learning effectively by using APP for online lessons | 0.233 | 0.755 | 0.122 | 0.149 | 0.661 |
| Q16 | The app is good for parental supervision | 0.080 | 0.756 | 0.077 | 0.091 | 0.592 |
| Q21 | You can learn about other students’ lessons | 0.322 | 0.678 | −0.076 | 0.032 | 0.57 |
| Q19 | It can maintain the relationship between teachers and students | 0.225 | 0.700 | 0.148 | 0.075 | 0.568 |
| Q2 | Students can communicate and collaborate with each other using the app. | 0.648 | 0.325 | 0.133 | 0.028 | 0.543 |
| Q10 | High learning efficiency with APP | 0.687 | 0.157 | 0.178 | 0.207 | 0.571 |
| Q8 | The level of online lessons on APP is high quality | 0.763 | 0.092 | 0.132 | 0.178 | 0.64 |
| Q6 | Online lessons on the APP are good value for money. | 0.486 | 0.248 | 0.370 | 0.042 | 0.437 |
| Q17 | The quality of learning is good with the app | 0.770 | 0.187 | 0.120 | 0.15 | 0.665 |
| Q9 | Good learning atmosphere with APP | 0.619 | 0.284 | 0.098 | 0.128 | 0.490 |
| Q3 | High personal control and concentration in online classes with APP | 0.217 | 0.324 | 0.056 | 0.633 | 0.556 |
| Q7 | The operation of the functions of the online class APP is easy for me | 0.163 | 0.055 | 0.131 | 0.744 | 0.600 |
| Q4 | Students can control their learning progress by fast-forwarding, pausing and re-watching | 0.107 | 0.012 | 0.237 | 0.773 | 0.665 |
| Q13 | The functions on the APP are rich and complete | 0.262 | 0.134 | 0.765 | 0.087 | 0.680 |
| Q14 | The functions on the APP are practical | 0.339 | −0.071 | 0.579 | 0.139 | 0.475 |
| Q20 | App can be used on multiple platforms such as cell phones and computers | −0.007 | 0.141 | 0.793 | 0.255 | 0.713 |
| | Explained variance before rotation (%) | 34.417 | 10.57 | 7.641 | 6.291 | |
| | Explained variance after rotation (%) | 19.814 | 15.985 | 11.889 | 11.231 | |
| | Eigenvalue | 3.17 | 2.558 | 1.902 | 1.797 | |
| | Total variance explained (%) | 58.919 | | | | |
| | Cronbach α for each factor | 0.829 | 0.759 | 0.669 | 0.634 | |
| | Overall Cronbach α | 0.866 | | | | |
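
The communality and explained-variance figures in the table above follow from simple arithmetic on the loadings and eigenvalues. The sketch below is only an illustration of that arithmetic, not the authors' analysis script: it assumes the loadings come from an orthogonal rotation (so a communality is the sum of squared loadings across the four factors) and that the scale has 16 items (the number of item rows in the table). The names `loadings`, `communality`, and `variance_share` are hypothetical.

```python
# Loadings copied from a subset of rows in the table (Factor 1..4 order).
loadings = {
    "Q11": [0.233, 0.755, 0.122, 0.149],
    "Q16": [0.080, 0.756, 0.077, 0.091],
    "Q8": [0.763, 0.092, 0.132, 0.178],
    "Q7": [0.163, 0.055, 0.131, 0.744],
}

# Communalities as reported in the table for the same items.
reported_communality = {"Q11": 0.661, "Q16": 0.592, "Q8": 0.64, "Q7": 0.600}


def communality(row):
    """Sum of squared loadings across factors (assumes orthogonal rotation)."""
    return sum(value * value for value in row)


# Eigenvalues from the table; each divided by the item count gives the
# share of total variance attributed to that factor.
eigenvalues = [3.17, 2.558, 1.902, 1.797]
n_items = 16  # assumption: one standardized variable per item row
variance_share = [100 * ev / n_items for ev in eigenvalues]
total_share = sum(variance_share)

if __name__ == "__main__":
    for item, row in loadings.items():
        print(item, round(communality(row), 3), reported_communality[item])
    print([round(v, 3) for v in variance_share], round(total_share, 3))
```

Under these assumptions the recomputed values agree with the reported communalities, the post-rotation variance shares, and the 58.919% total, up to rounding of the published loadings.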

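| Test | Value |
|---|---|
| KMO measure of sampling adequacy | 0.89 |
| Bartlett’s test of sphericity: chi-square | 1657.688 |
| Bartlett’s test of sphericity: df | 120 |
| Bartlett’s test of sphericity: p-value | <0.001 |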

| Factor | Item | Coef. | Std. Error | z | p | Std. Estimate | AVE | CR |
|---|---|---|---|---|---|---|---|---|
| Factor 1 | Q2 | 1 | - | - | - | 0.654 | 0.465 | 0.775 |
| Factor 1 | Q10 | 1.039 | 0.098 | 10.604 | <0.001 | 0.698 | | |
| Factor 1 | Q8 | 1.123 | 0.099 | 11.308 | <0.001 | 0.758 | | |
| Factor 1 | Q6 | 1.066 | 0.099 | 10.777 | <0.001 | 0.712 | | |
| Factor 1 | Q17 | 0.728 | 0.081 | 9.017 | <0.001 | 0.575 | | |
| Factor 1 | Q9 | 1.025 | 0.104 | 9.81 | <0.001 | 0.634 | | |
| Factor 2 | Q11 | 1 | - | - | - | 0.790 | 0.455 | 0.832 |
| Factor 2 | Q16 | 1.112 | 0.108 | 10.294 | <0.001 | 0.628 | | |
| Factor 2 | Q19 | 0.845 | 0.079 | 10.7 | <0.001 | 0.655 | | |
| Factor 2 | Q21 | 1.043 | 0.099 | 10.503 | <0.001 | 0.642 | | |
| Factor 3 | Q13 | 1 | - | - | - | 0.73 | 0.379 | 0.646 |
| Factor 3 | Q14 | 0.655 | 0.085 | 7.695 | <0.001 | 0.535 | | |
| Factor 3 | Q20 | 0.948 | 0.108 | 8.78 | <0.001 | 0.652 | | |
| Factor 4 | Q7 | 1 | - | - | - | 0.594 | 0.415 | 0.677 |
| Factor 4 | Q3 | 1.36 | 0.184 | 7.41 | <0.001 | 0.62 | | |
| Factor 4 | Q4 | 1.073 | 0.144 | 7.469 | <0.001 | 0.632 | | |
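
The AVE and CR columns can be recomputed from the standardized estimates in the table. The following is a minimal sketch under the usual Fornell and Larcker definitions (AVE as the mean squared standardized loading, CR as the squared sum of loadings over that quantity plus the summed error variances), assuming uncorrelated measurement errors. The function and variable names are hypothetical and the source does not state which software produced the reported values.

```python
# Standardized estimates copied from the table, grouped by factor.
std_loadings = {
    "Factor 1": [0.654, 0.698, 0.758, 0.712, 0.575, 0.634],
    "Factor 2": [0.790, 0.628, 0.655, 0.642],
    "Factor 3": [0.73, 0.535, 0.652],
    "Factor 4": [0.594, 0.62, 0.632],
}


def ave(loadings):
    """Average variance extracted: mean of squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)
    return total * total / (total * total + error)


results = {
    name: (round(ave(ls), 3), round(composite_reliability(ls), 3))
    for name, ls in std_loadings.items()
}

if __name__ == "__main__":
    for name, (ave_value, cr_value) in results.items():
        print(f"{name}: AVE={ave_value}, CR={cr_value}")
```

With the loadings as listed, the recomputed pairs are roughly (0.455, 0.832) for Factor 1, (0.465, 0.775) for Factor 2, (0.415, 0.677) for Factor 3, and (0.379, 0.646) for Factor 4. These are the same four pairs that appear in the table, but attached to the neighbouring factor in each case, so the row assignment of the reported AVE and CR values may be worth checking against the original article.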

| | Factor 1 | Factor 2 | Factor 3 | Factor 4 |
|---|---|---|---|---|
| Factor 1 | 0.668 | | | |
| Factor 2 | 0.546 | 0.677 | | |
| Factor 3 | 0.339 | 0.446 | 0.616 | |
| Factor 4 | 0.262 | 0.475 | 0.425 | 0.652 |
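
A matrix of this shape is commonly read with the Fornell–Larcker criterion: each diagonal entry should exceed every correlation in its row and column. The sketch below assumes, without confirmation from the source, that the diagonal holds the square root of each factor's AVE and the off-diagonal cells hold inter-factor correlations. The name `fornell_larcker_ok` is hypothetical.

```python
# Lower-triangular matrix copied from the table (rows: Factor 1..4).
# Assumption: diagonal = sqrt(AVE), off-diagonal = inter-factor correlation.
lower = [
    [0.668],
    [0.546, 0.677],
    [0.339, 0.446, 0.616],
    [0.262, 0.475, 0.425, 0.652],
]


def fornell_larcker_ok(matrix):
    """Return True if every diagonal entry exceeds all correlations
    that involve the same factor."""
    n = len(matrix)
    for i in range(n):
        diagonal = matrix[i][i]
        for j in range(n):
            if i == j:
                continue
            # Read the symmetric entry from the stored lower triangle.
            correlation = matrix[max(i, j)][min(i, j)]
            if abs(correlation) >= diagonal:
                return False
    return True


if __name__ == "__main__":
    print(fornell_larcker_ok(lower))
```

For the values in the table the check passes: the largest correlation, 0.546 between Factor 1 and Factor 2, is below both corresponding diagonal entries.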

| Hypothesis | X | → | Y | Unstd. | SE | C.R. | p | Std. |
|---|---|---|---|---|---|---|---|---|
| H1 | Self-efficacy | → | Perceived benefits | 0.195 | 0.142 | 1.372 | 0.17 | 0.149 |
| H2 | Self-efficacy | → | Functional quality | 0.681 | 0.106 | 6.438 | <0.001 | 0.655 |
| H3 | Functional quality | → | Perceived benefits | 0.443 | 0.124 | 3.573 | <0.001 | 0.352 |
| H4 | Subjective norms | → | Self-efficacy | 0.327 | 0.056 | 5.857 | <0.001 | 0.505 |
| H5 | Subjective norms | → | Perceived benefits | 0.396 | 0.066 | 6.044 | <0.001 | 0.467 |
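
The critical ratio and p-value for each path can be approximated from the unstandardized estimate and its standard error. This is a rough sketch that assumes the critical ratio is the estimate divided by SE and that the p-value is two-sided under a standard normal distribution. The 0.05 threshold and the names `paths` and `z_and_p` are illustrative assumptions, not taken from the source.

```python
import math

# (unstandardized estimate, standard error) copied from the table.
paths = {
    "H1": (0.195, 0.142),
    "H2": (0.681, 0.106),
    "H3": (0.443, 0.124),
    "H4": (0.327, 0.056),
    "H5": (0.396, 0.066),
}


def z_and_p(estimate, std_error):
    """Critical ratio and two-sided p-value under a standard normal."""
    z = estimate / std_error
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Assumed significance level for illustration only.
ALPHA = 0.05
supported = {name: z_and_p(*values)[1] < ALPHA for name, values in paths.items()}

if __name__ == "__main__":
    for name, values in paths.items():
        z, p = z_and_p(*values)
        print(f"{name}: z={z:.3f}, p={p:.4f}, supported={supported[name]}")
```

Because the published estimates and standard errors are rounded, the recomputed ratios match the table only approximately. Under these assumptions H1 does not reach significance (p is about 0.17), while H2 to H5 do.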

| Common Indicators | χ2 | df | χ2/df | GFI | RMSEA | CFI | NNFI |
|---|---|---|---|---|---|---|---|
| Judgment criteria | - | - | <3 | >0.9 | <0.10 | >0.9 | >0.9 |
| Value | 175.967 | 99 | 1.777 | 0.939 | 0.049 | 0.951 | 0.941 |
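
The comparison of each fit index with its judgment criterion can be written out explicitly. The sketch below simply encodes the thresholds from the table's "Judgment criteria" row; the dictionary layout and the name `passes` are hypothetical.

```python
# Fit statistics copied from the table.
chi2, df = 175.967, 99
fit = {
    "chi2/df": chi2 / df,
    "GFI": 0.939,
    "RMSEA": 0.049,
    "CFI": 0.951,
    "NNFI": 0.941,
}

# Thresholds from the "Judgment criteria" row: (comparison, cutoff).
criteria = {
    "chi2/df": ("<", 3.0),
    "GFI": (">", 0.9),
    "RMSEA": ("<", 0.10),
    "CFI": (">", 0.9),
    "NNFI": (">", 0.9),
}


def passes(value, rule):
    """Check a single index against its (comparison, cutoff) rule."""
    comparison, cutoff = rule
    return value < cutoff if comparison == "<" else value > cutoff


verdict = {name: passes(fit[name], criteria[name]) for name in fit}

if __name__ == "__main__":
    for name, ok in verdict.items():
        print(f"{name}: {fit[name]:.3f} -> {'meets' if ok else 'fails'} criterion")
```

The ratio 175.967 / 99 comes to about 1.777, matching the reported χ2/df, and all five indices meet the criteria listed in the table.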

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Z.; Jiang, Q.; Li, Z. How to Promote Online Education through Educational Software—An Analytical Study of Factor Analysis and Structural Equation Modeling with Chinese Users as an Example. Systems 2022, 10, 100. https://doi.org/10.3390/systems10040100