Enhancing Sex Estimation Accuracy with Cranial Angle Measurements and Machine Learning

Simple Summary

Abstract

1. Introduction

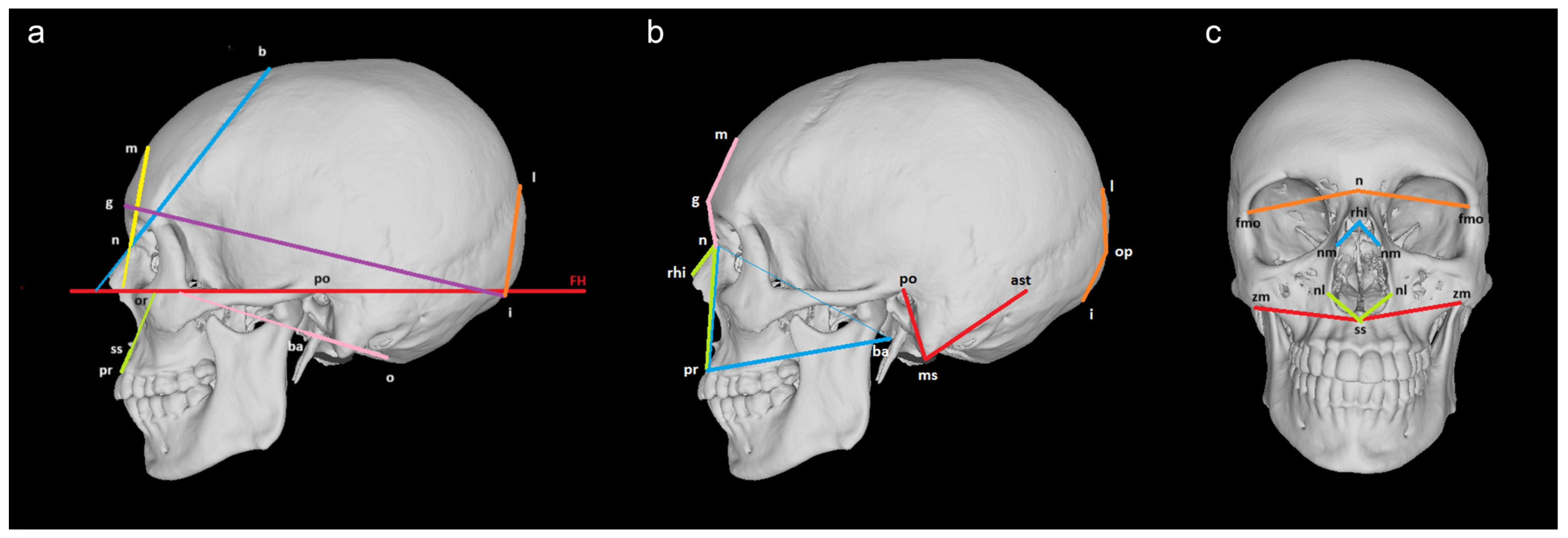

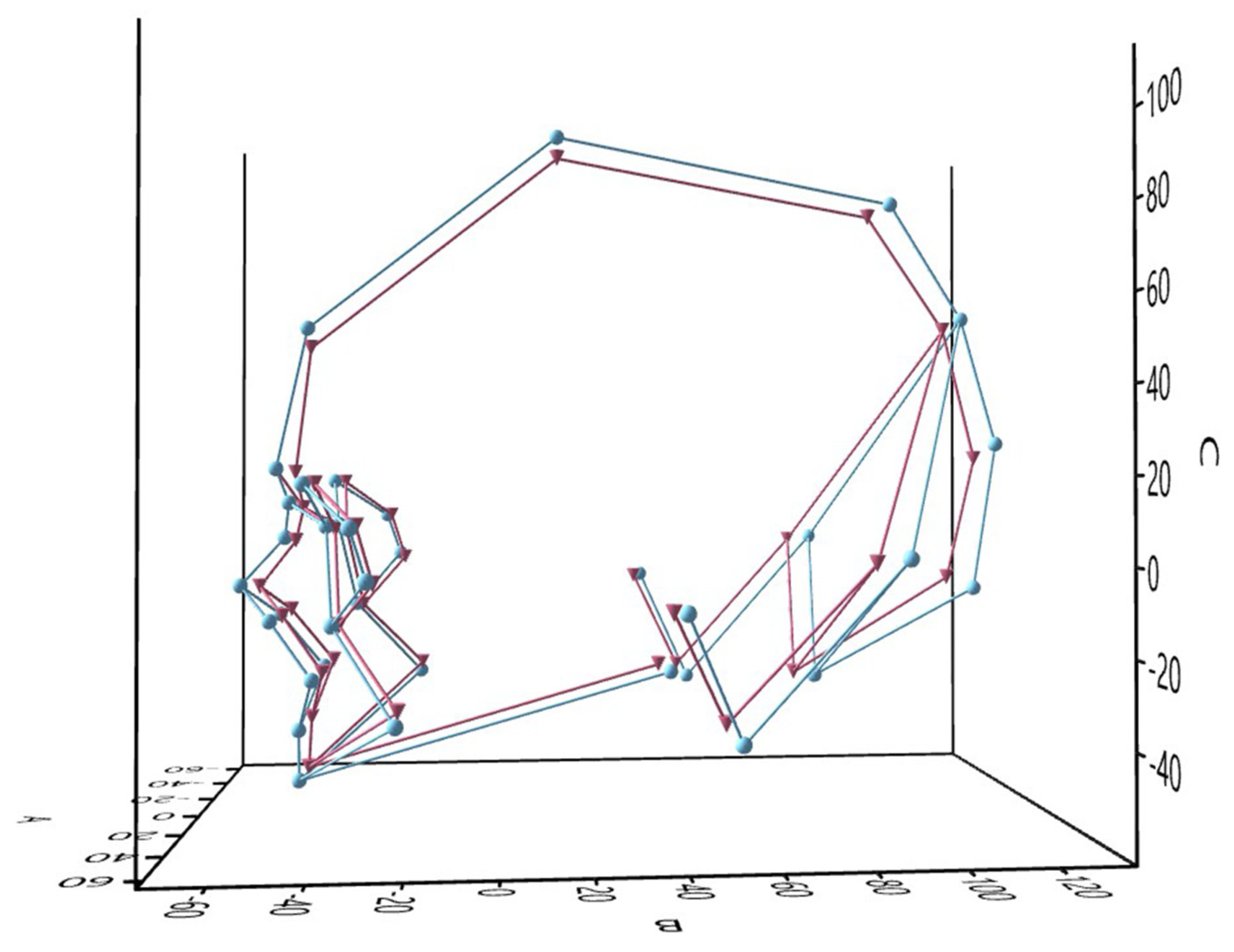

2. Material and Methods

3. Results

3.1. Sex Differences

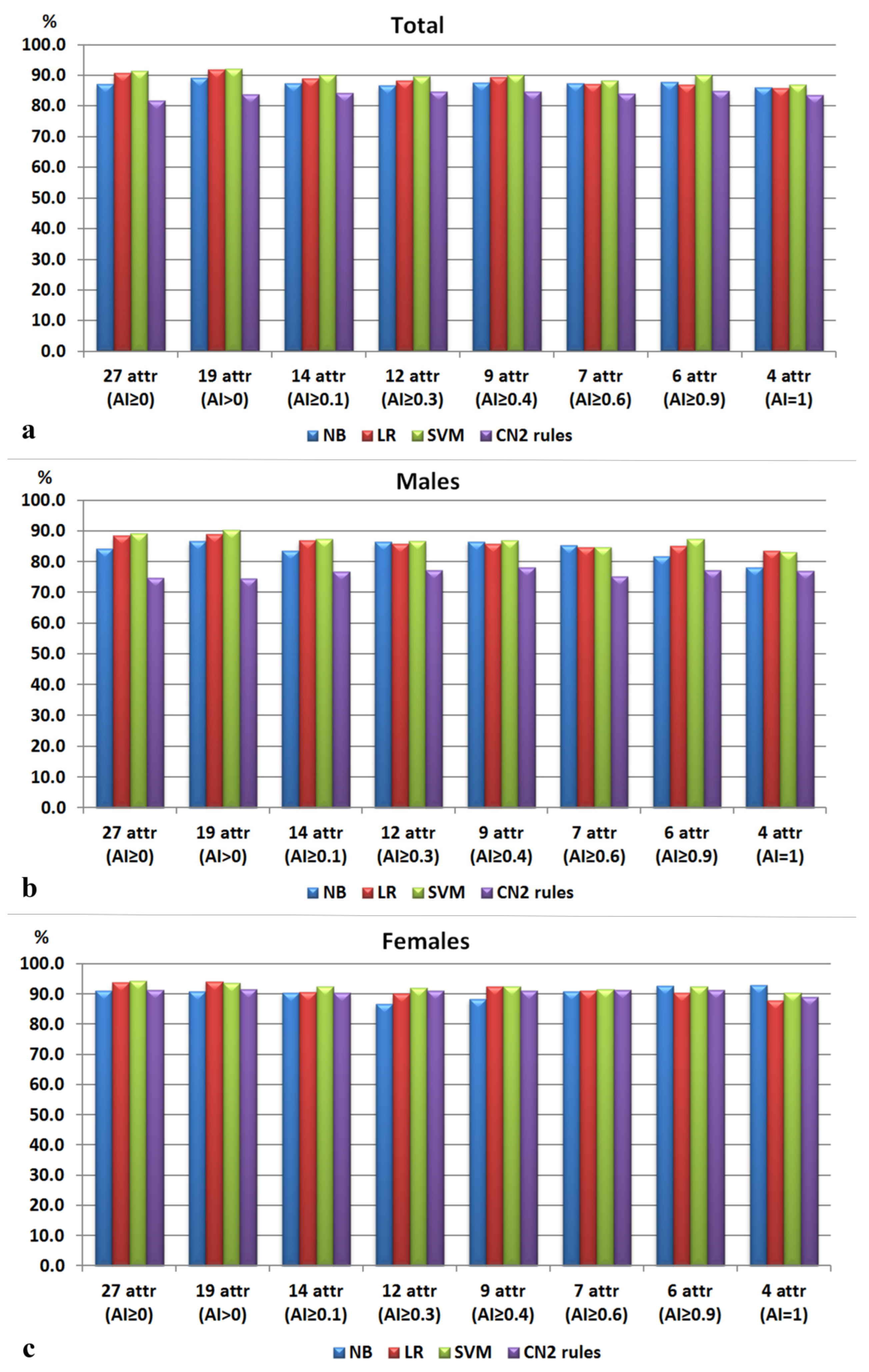

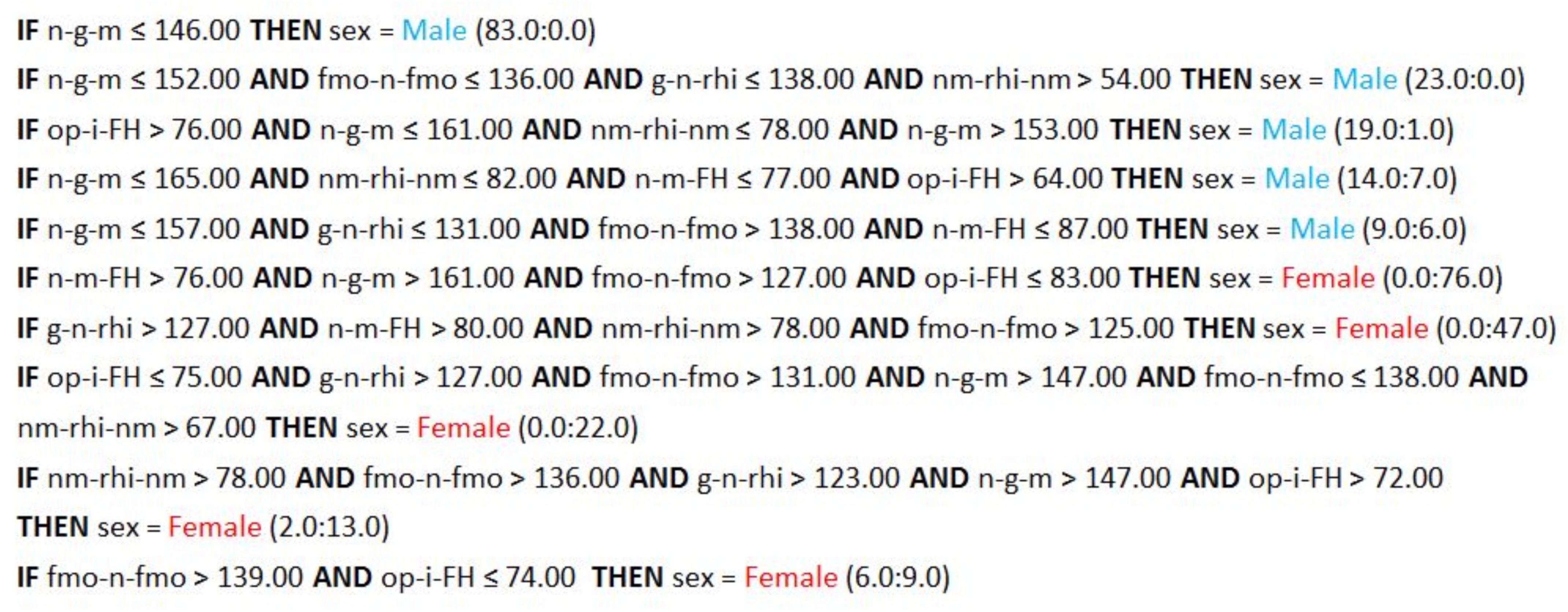

3.2. ML Classifiers

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Type | Landmark | Definition |
|---|---|---|
| Midsagittal landmarks | Nasion (n) | The point of intersection between the frontonasal suture and the midsagittal plane. |
| | Bregma (b) | The point of intersection between the coronal and sagittal sutures. |
| | Glabella (g) | The most forward-projecting point at the level of the supraorbital ridge in the midsagittal plane. |
| | Metopion (m) | The point of intersection between the horizontal line connecting the frontal eminences and the midsagittal plane. |
| | Obelion (ob) | The point of intersection between the line connecting the parietal foramina and the sagittal suture. |
| | Lambda (l) | The point of intersection between the sagittal and lambdoid sutures. |
| | Opisthocranion (op) | The most posterior point on the occipital bone in the midsagittal plane; the most distant point from the landmark glabella. |
| | Inion (i) | The point of intersection between the superior nuchal lines and the midsagittal plane. |
| | Basion (ba) | The midpoint of the anterior margin of foramen magnum. |
| | Opisthion (o) | The midpoint of the posterior margin of foramen magnum. |
| | Rhinion (rhi) | The point of intersection between the internasal suture and the margin of the piriform aperture. |
| | Subspinale (ss) | The most posterior point on the intermaxillary suture, immediately below the anterior nasal spine. |
| | Prosthion (pr) | The most anterior point on the upper alveolar process in the midsagittal plane. |
| | Midnasale (mn) | The deepest midline point on the nasal bones. |
| Bilateral landmarks | Frontomalare-orbitale (fmo) | The point of intersection between the zygomaticofrontal suture and the lateral orbital margin. |
| | Asterion (ast) | The point of intersection between the lambdoid, parietomastoid, and occipitomastoid sutures. |
| | Mastoidale (ms) | The most inferior point on the tip of the mastoid process. |
| | Maxillofrontale (mf) | The point of intersection between the frontomaxillary suture and the medial orbital margin. |
| | Ektokonchion (ek) | The point of intersection between the line beginning from the landmark maxillofrontale and crossing the orbit parallel to the superior orbital margin and the lateral orbital margin. |
| | Supraorbitale (so) | The most superior point on the superior orbital margin. |
| | Zygoorbitale (zo) | The point of intersection between the zygomaxillary suture and the inferior orbital margin. |
| | Nasolaterale (nl) | The most lateral point on the margin of the piriform aperture. |
| | Zygomaxillare (zm) | The most inferior point on the zygomaticomaxillary suture. |
| | Nasomaxillare (nm) | The point of intersection between the nasomaxillary suture and the margin of the piriform aperture. |
| | Porion (po) | The most superior point on the margin of the external auditory meatus. |
| | Orbitale # (or) | The most inferior point on the inferior margin of the orbit. |

| Type | Angle | Description |
|---|---|---|
| Angles between a line and a plane | Frontal slope angle (n-b-FH) | The angle between the line nasion-bregma and the FH *. |
| | Frontal profile angle (n-m-FH) | The angle between the line nasion-metopion and the FH. |
| | Parietal slope angle (ob-l-FH) | The angle between the line obelion-lambda and the FH. |
| | Lambda-opisthocranion angle (l-op-FH) | The angle between the line lambda-opisthocranion and the FH. |
| | Lambda-inion angle (l-i-FH) | The angle between the line lambda-inion and the FH. |
| | Opisthocranion-inion angle (op-i-FH) | The angle between the line opisthocranion-inion and the FH. |
| | Foramen magnum tilt angle (ba-o-FH) | The angle between the line basion-opisthion and the FH. |
| | Calottebasis angle (n-i-FH) | The angle between the line nasion-inion and the FH. |
| | Glabella-lambda angle (g-l-FH) | The angle between the line glabella-lambda and the FH. |
| | Glabella-inion angle (g-i-FH) | The angle between the line glabella-inion and the FH. |
| | Facial profile angle (n-pr-FH) | The angle between the line nasion-prosthion and the FH. |
| | Nasal profile angle (n-ss-FH) | The angle between the line nasion-subspinale and the FH. |
| | Alveolar profile angle (ss-pr-FH) | The angle between the line subspinale-prosthion and the FH. |
| | Nasal bones angle (n-rhi-FH) | The angle between the line nasion-rhinion and the FH. |
| | Zygomaticomaxillary suture tilt angle b (zm-zo-FH) | The angle between the line zygomaxillare-zygoorbitale and the FH. |
| | Sagittal tilt of the orbit entrance b (so-zo-FH) | The angle between the line supraorbitale-zygoorbitale and the FH. |
| | Horizontal tilt of the orbit entrance b (ek-mf-FH) | The angle between the line ektokonchion-maxillofrontale and the FH. |
| Angles between two lines | Frontal curvature angle (n-m-b) | The angle constructed between the landmarks nasion, metopion, and bregma with vertex at the metopion. |
| | Glabellar angle (n-g-m) | The angle constructed between the landmarks nasion, glabella, and metopion with vertex at the glabella. |
| | Upper occipital angle (l-op-i) | The angle constructed between the landmarks lambda, opisthocranion, and inion with vertex at the opisthocranion. |
| | Prosthion-glabella-lambda angle (pr-g-l) | The angle constructed between the lines prosthion-glabella and glabella-lambda. |
| | Facial triangle angle (n-pr-ba) | The angle at prosthion in the facial triangle nasion-prosthion-basion. |
| | Nasomalar angle (fmo-n-fmo) | The angle constructed between the right frontomalare orbitale, nasion, and the left frontomalare orbitale, with vertex at the nasion. |
| | Zygomaxillary angle (zm-ss-zm) | The angle constructed between the right zygomaxillare, subspinale, and the left zygomaxillare, with vertex at the subspinale. |
| | Nasofrontal angle (g-n-rhi) | The angle constructed between the landmarks glabella, nasion, and rhinion with vertex at the nasion. |
| | Nasal bone projection angle towards upper facial height (rhi-n-pr) | The angle constructed between the lines nasion-rhinion and nasion-prosthion. |
| | Nasal bone projection angle towards nasal height (rhi-n-ss) | The angle constructed between the lines nasion-rhinion and nasion-subspinale. |
| | Nasal bone curvature angle (n-mn-rhi) | The angle constructed between the landmarks nasion, midnasale, and rhinion with vertex at the midnasale. |
| | Nasomaxillare-rhinion angle (nm-rhi-nm) | The angle constructed between the right nasomaxillare, rhinion, and the left nasomaxillare, with vertex at the rhinion. |
| | Maxillofrontal angle (mf-n-mf) | The angle constructed between the right maxillofrontale, nasion, and the left maxillofrontale, with vertex at the nasion. |
| | Nasolateral angle (nl-ss-nl) | The angle constructed between the right nasolaterale, subspinale, and the left nasolaterale, with vertex at the subspinale. |
| | Mastoid angle b (po-ms-ast) | The angle constructed between the landmarks porion, mastoidale, and asterion, with vertex at the mastoidale. |
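
The two kinds of angle defined in the table can be illustrated with a little vector geometry. The sketch below is not the authors' measurement procedure, which this excerpt does not describe. It assumes that each landmark is available as a 3D coordinate and that FH is a reference plane fixed by three landmarks (for example both poria and one orbitale, as in the usual Frankfurt horizontal; the footnote defining FH is missing here). The function names and the toy coordinates are hypothetical.

```python
import math

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _norm(a):
    return math.sqrt(_dot(a, a))

def angle_at_vertex(a, vertex, c):
    """Angle in degrees between the rays vertex->a and vertex->c (e.g. n-m-b at m)."""
    u, w = _sub(a, vertex), _sub(c, vertex)
    cos_t = _dot(u, w) / (_norm(u) * _norm(w))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))

def plane_normal(p1, p2, p3):
    """Normal vector of the plane through three non-collinear points."""
    return _cross(_sub(p2, p1), _sub(p3, p1))

def line_plane_angle(p, q, normal):
    """Angle in degrees (0 to 90) between the line p-q and a plane given by its normal."""
    d = _sub(q, p)
    sin_t = abs(_dot(d, normal)) / (_norm(d) * _norm(normal))
    return math.degrees(math.asin(min(1.0, sin_t)))

# Toy coordinates only, not real landmark data.
fh_normal = plane_normal((0, 0, 0), (1, 0, 0), (0, 1, 0))   # the z = 0 plane
slope = line_plane_angle((0, 0, 0), (1, 0, 1), fh_normal)   # line rising at 45 degrees
corner = angle_at_vertex((1, 0, 0), (0, 0, 0), (0, 1, 0))   # right angle at the origin
```

With this definition a line-to-plane angle always falls between 0° and 90°, whereas an angle at a vertex can range from 0° to 180°.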

| Angles | Males n | Males Mean | Males SD | Males Min | Males Max | Females n | Females Mean | Females SD | Females Min | Females Max | Sex Differences p-Value |
|---|---|---|---|---|---|---|---|---|---|---|---|
| n-b-FH | 154 | 49.77 | 3.00 | 43.50 | 57.12 | 180 | 50.24 | 2.77 | 39.18 | 57.44 | 0.113 t |
| n-m-FH | 154 | 79.62 | 4.18 | 68.98 | 89.47 | 180 | 82.89 | 3.84 | 69.49 | 89.54 | <0.001 *t |
| n-m-b | 154 | 133.76 | 4.42 | 117.81 | 143.91 | 180 | 130.45 | 4.10 | 120.62 | 142.62 | <0.001 *t |
| n-g-m | 154 | 146.67 | 8.05 | 130.00 | 165.17 | 180 | 160.15 | 6.58 | 146.97 | 174.77 | <0.001 *U |
| ob-l-FH | 154 | 62.23 | 5.18 | 47.91 | 80.83 | 180 | 59.71 | 5.09 | 41.76 | 74.67 | <0.001 *t |
| l-op-FH | 154 | 77.95 | 4.07 | 67.11 | 89.13 | 180 | 79.33 | 4.07 | 67.19 | 89.93 | 0.002 *t |
| l-i-FH | 154 | 85.28 | 2.86 | 73.78 | 89.82 | 180 | 84.77 | 3.16 | 74.62 | 89.55 | 0.207 U |
| op-i-FH | 154 | 76.72 | 5.66 | 59.04 | 88.45 | 180 | 72.50 | 6.18 | 59.01 | 89.53 | <0.001 *t |
| l-op-i | 154 | 155.37 | 6.03 | 141.12 | 169.07 | 180 | 152.36 | 5.99 | 137.73 | 167.22 | <0.001 *t |
| ba-o-FH | 154 | 6.66 | 4.57 | 0.04 | 30.73 | 180 | 8.17 | 4.61 | 0.01 | 21.50 | 0.002 *U |
| n-i-FH | 154 | 11.60 | 2.82 | 4.63 | 17.99 | 180 | 10.46 | 3.09 | 1.68 | 21.41 | <0.001 *t |
| g-l-FH | 154 | 8.05 | 2.92 | 0.25 | 15.13 | 180 | 8.36 | 3.10 | 0.59 | 17.54 | 0.347 t |
| g-i-FH | 154 | 14.16 | 2.75 | 7.22 | 20.35 | 180 | 13.38 | 3.06 | 5.14 | 23.93 | 0.014 *t |
| n-pr-FH | 136 | 86.49 | 2.38 | 74.32 | 89.77 | 166 | 86.16 | 2.42 | 77.19 | 89.67 | 0.225 U |
| pr-g-l | 136 | 98.45 | 3.84 | 86.50 | 108.61 | 166 | 99.92 | 3.61 | 90.76 | 108.94 | 0.001 *U |
| n-pr-ba | 136 | 76.21 | 3.49 | 64.89 | 86.17 | 166 | 75.31 | 2.88 | 67.71 | 82.00 | 0.015 *t |
| n-ss-FH | 154 | 86.55 | 2.22 | 74.61 | 89.95 | 180 | 86.61 | 2.14 | 79.59 | 89.63 | 0.691 U |
| ss-pr-FH | 136 | 81.45 | 5.90 | 39.27 | 89.37 | 166 | 80.54 | 5.70 | 57.84 | 89.04 | 0.101 U |
| fmo-n-fmo | 154 | 133.54 | 5.35 | 121.13 | 149.32 | 180 | 137.21 | 4.13 | 128.43 | 149.04 | <0.001 *U |
| zm-ss-zm | 154 | 115.88 | 5.30 | 101.46 | 131.04 | 180 | 118.30 | 4.85 | 105.00 | 133.01 | <0.001 *t |
| g-n-rhi | 154 | 129.32 | 8.10 | 107.22 | 149.65 | 180 | 137.99 | 6.34 | 119.77 | 153.61 | <0.001 *U |
| zm-zo-FH (R) | 154 | 43.80 | 5.04 | 25.10 | 58.20 | 180 | 42.18 | 4.63 | 28.58 | 59.29 | 0.002 *t |
| zm-zo-FH (L) | 154 | 43.92 | 4.52 | 32.11 | 55.64 | 180 | 42.23 | 4.24 | 30.19 | 53.84 | <0.001 *t |
| so-zo-FH (R) | 154 | 79.30 | 3.72 | 63.28 | 87.15 | 180 | 80.88 | 3.51 | 70.44 | 89.29 | <0.001 *U |
| so-zo-FH (L) | 154 | 80.25 | 3.78 | 70.90 | 89.14 | 180 | 81.52 | 3.24 | 67.69 | 88.74 | 0.002 *U |
| ek-mf-FH (R) | 154 | 15.24 | 3.24 | 7.71 | 23.98 | 180 | 15.45 | 3.10 | 7.74 | 24.60 | 0.558 t |
| ek-mf-FH (L) | 154 | 16.51 | 3.39 | 7.87 | 23.95 | 180 | 16.40 | 3.36 | 7.42 | 26.31 | 0.771 t |
| rhi-n-ss | 154 | 32.42 | 4.67 | 20.68 | 47.95 | 180 | 31.30 | 4.58 | 21.01 | 45.39 | 0.032 *U |
| rhi-n-pr | 136 | 30.09 | 5.23 | 18.65 | 48.88 | 166 | 30.11 | 4.78 | 19.75 | 45.20 | 0.002 *U |
| n-mn-rhi | 154 | 150.28 | 8.74 | 120.12 | 174.04 | 180 | 154.79 | 7.60 | 130.97 | 175.47 | <0.001 *t |
| nm-rhi-nm | 154 | 74.53 | 9.48 | 48.49 | 108.32 | 180 | 80.91 | 10.08 | 54.07 | 104.78 | <0.001 *t |
| mf-n-mf | 154 | 87.53 | 9.01 | 68.25 | 116.56 | 180 | 90.23 | 9.62 | 64.53 | 117.97 | 0.009 *t |
| nl-ss-nl | 154 | 80.20 | 7.23 | 49.34 | 101.81 | 180 | 83.87 | 7.32 | 64.28 | 106.35 | <0.001 *U |
| n-rhi-FH | 154 | 55.82 | 5.78 | 36.23 | 71.21 | 180 | 56.79 | 5.39 | 41.53 | 70.19 | 0.112 t |
| po-ms-ast (R) | 154 | 65.40 | 6.42 | 50.73 | 81.75 | 180 | 68.02 | 6.48 | 50.78 | 89.83 | <0.001 *t |
| po-ms-ast (L) | 154 | 65.45 | 5.99 | 51.78 | 86.72 | 180 | 67.83 | 5.98 | 56.32 | 84.62 | <0.001 *U |
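
The footnote explaining the markers in the p-value column is not part of this excerpt. Assuming that "t" denotes an independent-samples t-test and "U" a Mann-Whitney U test, the size of a "t" difference can be roughly re-derived from the summary statistics alone. The sketch below uses Welch's unequal-variance form, which is an assumption; the source does not say which variant was applied, and the function name `welch_t` is hypothetical. A U test cannot be reproduced this way because it needs the raw measurements.

```python
import math

def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch t statistic and approximate degrees of freedom from summary statistics."""
    v1 = sd1 ** 2 / n1          # squared standard error of the first mean
    v2 = sd2 ** 2 / n2          # squared standard error of the second mean
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

# n-m-FH row: males 79.62 (SD 4.18, n = 154), females 82.89 (SD 3.84, n = 180)
t_stat, dof = welch_t(79.62, 4.18, 154, 82.89, 3.84, 180)
print(f"t = {t_stat:.2f}, df = {dof:.0f}")
```

For this row the statistic comes out near -7.4 with a little over 300 degrees of freedom, which is in line with the reported p < 0.001.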

| AI | Attributes | Number |
|---|---|---|
| >0 | n-m-FH *, n-g-m, ob-l-FH, l-op-FH, op-i-FH, l-op-i, ba-o-FH, n-i-FH, po-ms-ast (left), n-pr-ba, fmo-n-fmo, zm-ss-zm, g-n-rhi, zm-zo-FH (left), so-zo-FH (right), so-zo-FH (left), n-mn-rhi, nm-rhi-nm, nl-ss-nl | 19 |
| ≥0.1 | n-m-FH, n-g-m, ob-l-FH, op-i-FH, po-ms-ast (left), fmo-n-fmo, zm-ss-zm, g-n-rhi, zm-zo-FH (left), so-zo-FH (right), so-zo-FH (left), n-mn-rhi, nm-rhi-nm, nl-ss-nl | 14 |
| ≥0.3 | n-m-FH, n-g-m, ob-l-FH, op-i-FH, po-ms-ast (left), fmo-n-fmo, g-n-rhi, zm-zo-FH (left), so-zo-FH (right), n-mn-rhi, nm-rhi-nm, nl-ss-nl | 12 |
| ≥0.4 | n-m-FH, n-g-m, ob-l-FH, op-i-FH, fmo-n-fmo, g-n-rhi, n-mn-rhi, nm-rhi-nm, nl-ss-nl | 9 |
| ≥0.6 | n-m-FH, n-g-m, op-i-FH, fmo-n-fmo, g-n-rhi, nm-rhi-nm, nl-ss-nl | 7 |
| ≥0.9 | n-m-FH, n-g-m, op-i-FH, fmo-n-fmo, g-n-rhi, nm-rhi-nm | 6 |
| =1 | n-m-FH, n-g-m, fmo-n-fmo, nm-rhi-nm | 4 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Toneva, D.; Nikolova, S.; Agre, G.; Harizanov, S.; Fileva, N.; Milenov, G.; Zlatareva, D. Enhancing Sex Estimation Accuracy with Cranial Angle Measurements and Machine Learning. Biology 2024, 13, 780. https://doi.org/10.3390/biology13100780
