An Improved Cascade R-CNN-Based Fastener Detection Method for Coating Workshop Inspection

Abstract

1. Introduction

2. Fastener Detection Method for Coating Workshop Inspection

2.1. Overall System Architecture

2.2. Original Cascade R-CNN Framework
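
The core idea of Cascade R-CNN (Cai and Vasconcelos) is a sequence of detection heads. Each head is trained with a higher IoU threshold for deciding whether a proposal counts as foreground. The Python sketch below illustrates only that per-stage label assignment. It assumes the thresholds 0.5, 0.6 and 0.7 from the original Cascade R-CNN paper, because the values used in this work are not stated in this section. It also omits the box regression that each stage applies before passing proposals on. The helper names (`iou`, `assign_labels`) are hypothetical.

```python
# Sketch of per-stage label assignment in a cascade detector.
# Assumption: thresholds 0.5 / 0.6 / 0.7 (original Cascade R-CNN defaults);
# the box regression between stages is deliberately omitted.


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def assign_labels(proposals, gt_boxes, iou_thr):
    """Label a proposal foreground (1) if its best IoU with any ground-truth
    box is at least iou_thr, otherwise background (0)."""
    labels = []
    for p in proposals:
        best = max((iou(p, g) for g in gt_boxes), default=0.0)
        labels.append(1 if best >= iou_thr else 0)
    return labels


STAGE_IOU_THRESHOLDS = (0.5, 0.6, 0.7)  # assumed thresholds for stages 1, 2, 3

gt = [(0, 0, 10, 10)]
# Proposals with IoU 1.0, 0.7, 0.6 and 0.5 against the single ground-truth box.
proposals = [(0, 0, 10, 10), (3, 0, 10, 10), (4, 0, 10, 10), (5, 0, 10, 10)]
for stage, thr in enumerate(STAGE_IOU_THRESHOLDS, start=1):
    print(f"stage {stage} (IoU >= {thr}):", assign_labels(proposals, gt, thr))
```

With the regression step left out, the positive set simply shrinks as the threshold rises. In the full cascade, each stage's refined boxes are what keep enough proposals above the next, stricter threshold.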

2.3. Structure of the Improved Cascade R-CNN

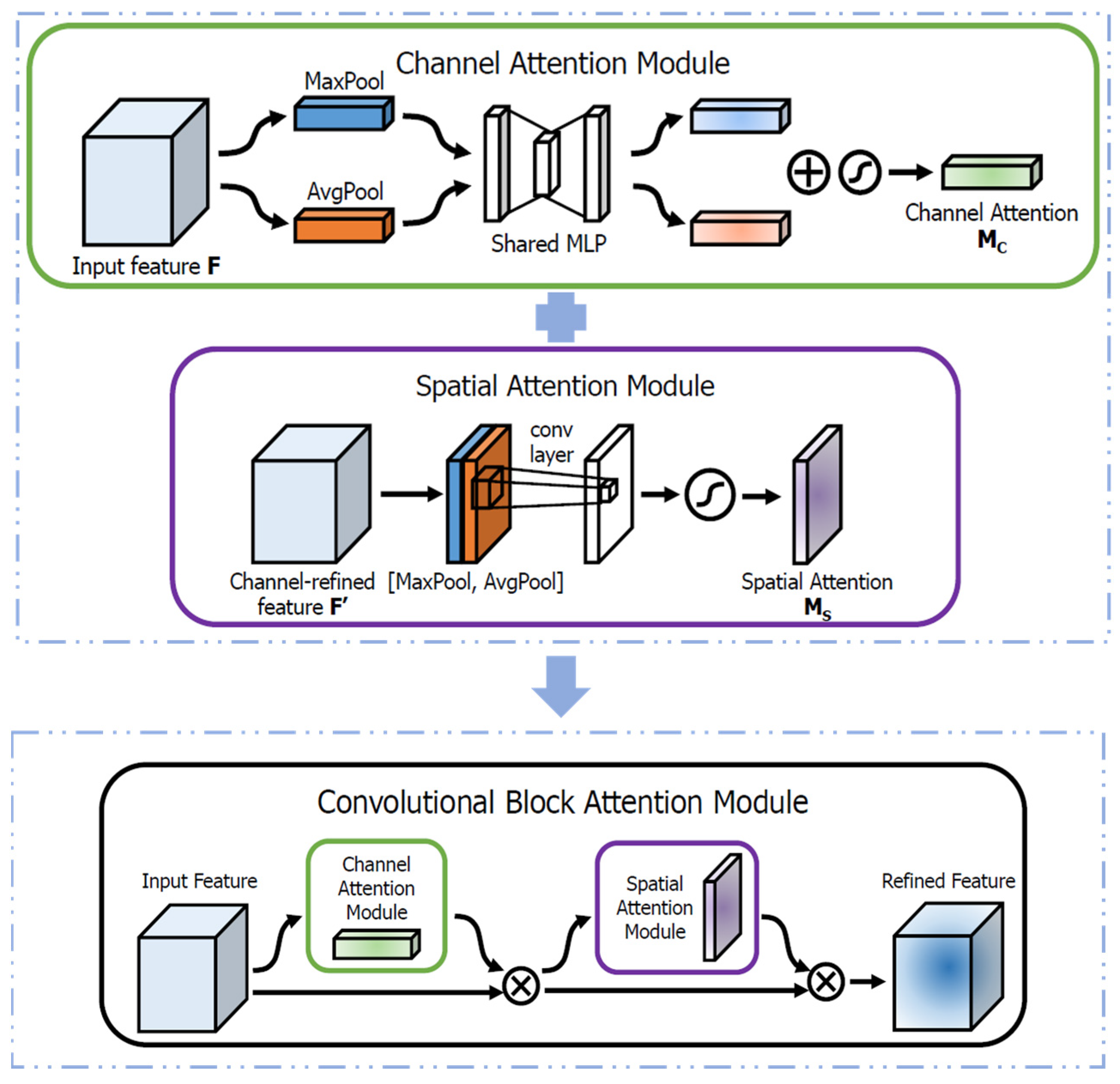

2.3.1. Integration of the CBAM Attention Mechanism
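
CBAM (Convolutional Block Attention Module, Woo et al.) refines a feature map in two steps. First, a channel attention map is computed from globally average-pooled and max-pooled descriptors passed through a shared MLP. Second, a spatial attention map is computed by a 7 × 7 convolution over the channel-wise mean and max maps. This section does not say where CBAM is inserted into the backbone or neck. The NumPy sketch below therefore shows only one forward pass of the standard module on a single (C, H, W) feature map. Random weights stand in for learned ones, and the reduction ratio r = 4 and all function names are illustrative assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w1, w2):
    """Channel attention map of shape (C,).

    feat: (C, H, W); w1: (C // r, C) and w2: (C, C // r) are the weights of
    the MLP shared by the average- and max-pooled descriptors.
    """
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU between the two layers

    avg_desc = feat.mean(axis=(1, 2))  # global average pooling -> (C,)
    max_desc = feat.max(axis=(1, 2))   # global max pooling -> (C,)
    return sigmoid(mlp(avg_desc) + mlp(max_desc))


def spatial_attention(feat, kernel):
    """Spatial attention map of shape (H, W).

    kernel: (2, k, k) weights of a single-output k x k convolution applied
    with zero 'same' padding to the stacked channel-wise mean and max maps.
    """
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))
    h, w = feat.shape[1:]
    out = np.zeros((h, w))
    for i in range(k):  # accumulate the cross-correlation one kernel tap at a time
        for j in range(k):
            out += np.einsum("c,chw->hw", kernel[:, i, j], padded[:, i:i + h, j:j + w])
    return sigmoid(out)


def cbam(feat, w1, w2, kernel):
    """Apply channel attention, then spatial attention, to a (C, H, W) map."""
    refined = feat * channel_attention(feat, w1, w2)[:, None, None]
    return refined * spatial_attention(refined, kernel)[None, :, :]


rng = np.random.default_rng(0)
C, H, W, r = 16, 8, 8, 4  # r: assumed channel reduction ratio
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
y = cbam(x, w1, w2, kernel)
print(y.shape)
```

Both attention maps lie in (0, 1), so the module only re-weights the input: channels and positions judged informative keep more of their magnitude than the rest.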

2.3.2. Anchor Size Optimization
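
Anchor size matters most for small objects. If the smallest anchor is much larger than a target, even the best-matching anchor overlaps it poorly. The sketch below makes that concrete by comparing the best anchor IoU for a small box under two size sets. `DEFAULT_SIZES` follows the 32² to 512² pixel anchor areas of the FPN paper. `SMALLER_SIZES`, the 16 × 16 box, and the function names are hypothetical, because the anchor sizes actually selected in this work are not given in this section. For simplicity, the anchors and the box share the same centre.

```python
import math


def make_anchors(cx, cy, sizes, ratios):
    """Anchors (x1, y1, x2, y2) centred at (cx, cy).

    Each anchor has area size**2 and aspect ratio = height / width.
    """
    anchors = []
    for s in sizes:
        for ratio in ratios:
            w = s / math.sqrt(ratio)
            h = s * math.sqrt(ratio)
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def best_iou(gt, anchors):
    """Highest IoU between one ground-truth box and any anchor."""
    return max(iou(gt, a) for a in anchors)


RATIOS = (0.5, 1.0, 2.0)
DEFAULT_SIZES = (32, 64, 128, 256, 512)  # FPN-style anchor sizes
SMALLER_SIZES = (16, 32, 64, 128, 256)   # hypothetical "optimized" set
small_fastener = (92, 92, 108, 108)      # hypothetical 16 x 16 px box centred at (100, 100)

print(best_iou(small_fastener, make_anchors(100, 100, DEFAULT_SIZES, RATIOS)))  # about 0.25
print(best_iou(small_fastener, make_anchors(100, 100, SMALLER_SIZES, RATIOS)))  # 1.0
```

With the default set, the best match has an IoU of about 0.25; adding a 16-pixel anchor size gives an exact match. Real detectors tile anchors over every feature-map position and pyramid level, so this single-location comparison is only illustrative.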

2.3.3. Replacing Traditional NMS with Soft-NMS
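
Traditional NMS discards every box whose IoU with a higher-scoring detection exceeds a fixed threshold. This can suppress a genuine fastener that sits close to another one. Soft-NMS (Bodla et al.) instead lowers the scores of overlapping boxes according to how much they overlap. The NumPy sketch below implements the Gaussian variant, s_i ← s_i · exp(−IoU(M, b_i)² / σ), where M is the currently selected box. This section does not state whether the Gaussian or the linear variant is used, and σ = 0.5 and the 0.001 score threshold are assumed values.

```python
import numpy as np


def iou_one_to_many(box, boxes):
    """IoU between one box (4,) and boxes (N, 4), format (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / np.maximum(area + areas - inter, 1e-12)


def soft_nms_gaussian(boxes, scores, sigma=0.5, score_thr=0.001):
    """Gaussian Soft-NMS: decay overlapping scores instead of discarding boxes.

    Returns the kept indices and their (decayed) scores, in selection order.
    """
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float).copy()
    remaining = np.arange(len(scores))
    keep, kept_scores = [], []
    while remaining.size > 0:
        top = remaining[np.argmax(scores[remaining])]  # highest-scoring box left
        keep.append(int(top))
        kept_scores.append(float(scores[top]))
        remaining = remaining[remaining != top]
        if remaining.size == 0:
            break
        overlaps = iou_one_to_many(boxes[top], boxes[remaining])
        scores[remaining] *= np.exp(-(overlaps ** 2) / sigma)  # Gaussian penalty
        remaining = remaining[scores[remaining] >= score_thr]  # drop near-zero scores
    return keep, kept_scores


# Two heavily overlapping fasteners (IoU = 2/3) and one isolated fastener.
boxes = [(0, 0, 10, 10), (2, 0, 12, 10), (30, 30, 40, 40)]
scores = [0.9, 0.8, 0.7]
print(soft_nms_gaussian(boxes, scores))
```

Here box 1 overlaps box 0 with IoU 2/3, so hard NMS with a 0.5 threshold would remove it. Soft-NMS keeps it, last in the ranking, with its score decayed from 0.8 to about 0.33.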

3. Experiments and Results Analysis

3.1. Experimental Setup and Dataset Construction

3.1.1. Experimental Environment and Parameter Settings

3.1.2. Dataset Construction

3.2. Evaluation Metrics and Comparative Methods

3.2.1. Evaluation Metrics
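
Under their usual definitions, the reported metrics are:

- precision = TP / (TP + FP);
- recall = TP / (TP + FN);
- average precision (AP), the area under one class's precision-recall curve;
- mAP, the mean AP over classes.

The sketch below computes these metrics with all-point interpolation. It assumes detections have already been matched to ground truth at a fixed IoU threshold. That threshold is commonly 0.5, but neither it nor the interpolation scheme used in this study is stated in this section. The function names and the toy detections are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP), recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(tp_flags, num_gt):
    """All-point interpolated AP for one class.

    tp_flags: one bool per detection, sorted by descending confidence, True if
    the detection matched a previously unmatched ground-truth box.
    num_gt: number of ground-truth boxes of this class.
    """
    tp = fp = 0
    precisions, recalls = [], []
    for is_tp in tp_flags:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas under the envelope wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap):
    """mAP: unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# Toy example: 5 detections of one class, 4 ground-truth boxes.
flags = [True, True, False, True, False]
print(precision_recall(tp=3, fp=2, fn=1))  # (0.6, 0.75)
print(average_precision(flags, num_gt=4))  # 0.6875
```

For the toy sequence, the precision envelope gives AP = 0.25 · 1 + 0.25 · 1 + 0.25 · 0.75 = 0.6875.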

3.2.2. Comparative Experimental Results

3.3. Ablation Study

3.4. Visualization of Detection Results

4. Summary

4.1. Discussion

4.2. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Method | mAP (%) | Precision (%) | Recall (%) |
|---|---|---|---|
| Faster R-CNN | 91.42 | 92.10 | 89.35 |
| Cascade R-CNN | 93.84 | 93.92 | 92.37 |
| Improved Cascade R-CNN (Ours) | 96.60 | 95.85 | 95.72 |

| Category | Precision (%) | Recall (%) |
|---|---|---|
| Fallen-off | 95.23 | 96.85 |
| Fastener | 96.28 | 95.41 |
| Marking-painted fastener | 96.67 | 95.72 |

| Model Variant | mAP (%) | Description |
|---|---|---|
| Cascade R-CNN | 93.84 | Original structure |
| + Anchor Size Optimization | 94.72 | Improves coverage of small targets |
| + Anchor Size Optimization + CBAM | 95.53 | Enhances feature extraction and representation |
| + Anchor Size Optimization + CBAM + Soft-NMS | 96.60 | Reduces missed detections of overlapping targets |
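
Each row of the ablation table adds one component to the row above it. The mAP gain attributable to each step, in the order tested, therefore follows by differencing consecutive rows (in percentage points):

$$
\begin{aligned}
\Delta_{\text{anchor}} &= 94.72 - 93.84 = 0.88 \\
\Delta_{\text{CBAM}} &= 95.53 - 94.72 = 0.81 \\
\Delta_{\text{Soft-NMS}} &= 96.60 - 95.53 = 1.07 \\
\Delta_{\text{total}} &= 96.60 - 93.84 = 2.76 = 0.88 + 0.81 + 1.07
\end{aligned}
$$

These increments depend on the order in which the components were added. For example, the table does not show the gain from applying Soft-NMS to the original Cascade R-CNN alone.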

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Liu, J.; Liu, S.; Chen, Y.; Zhao, J.; Fu, J. An Improved Cascade R-CNN-Based Fastener Detection Method for Coating Workshop Inspection. Coatings 2026, 16, 37. https://doi.org/10.3390/coatings16010037
