Weed Detection on Architectural Heritage Surfaces in Penang City via YOLOv11

Abstract

1. Introduction

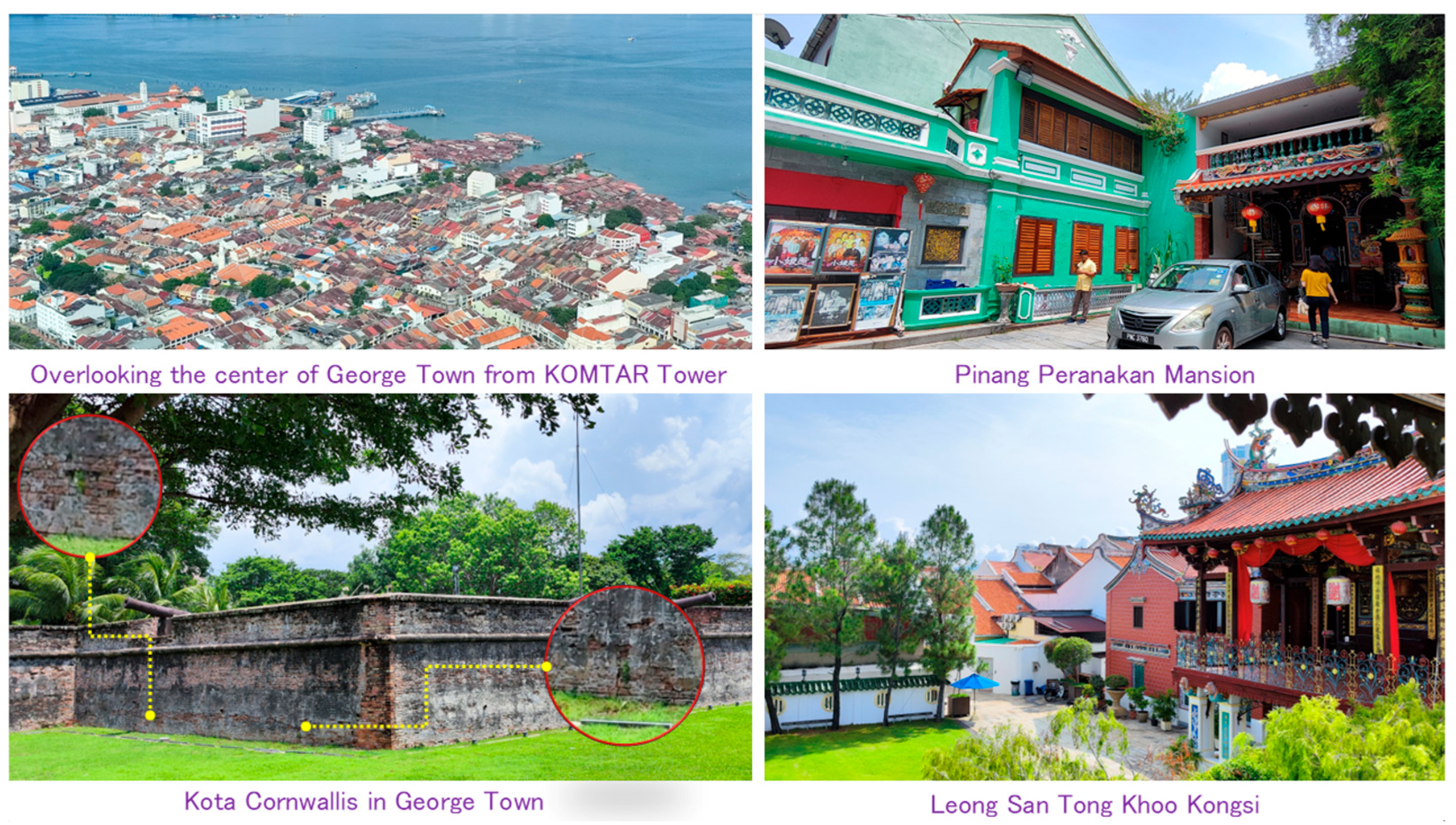

1.1. An Essential Heritage of UNESCO: George Town

1.2. Threats from Weeds

1.3. The Application of Computer Vision Technology to Architectural Heritage

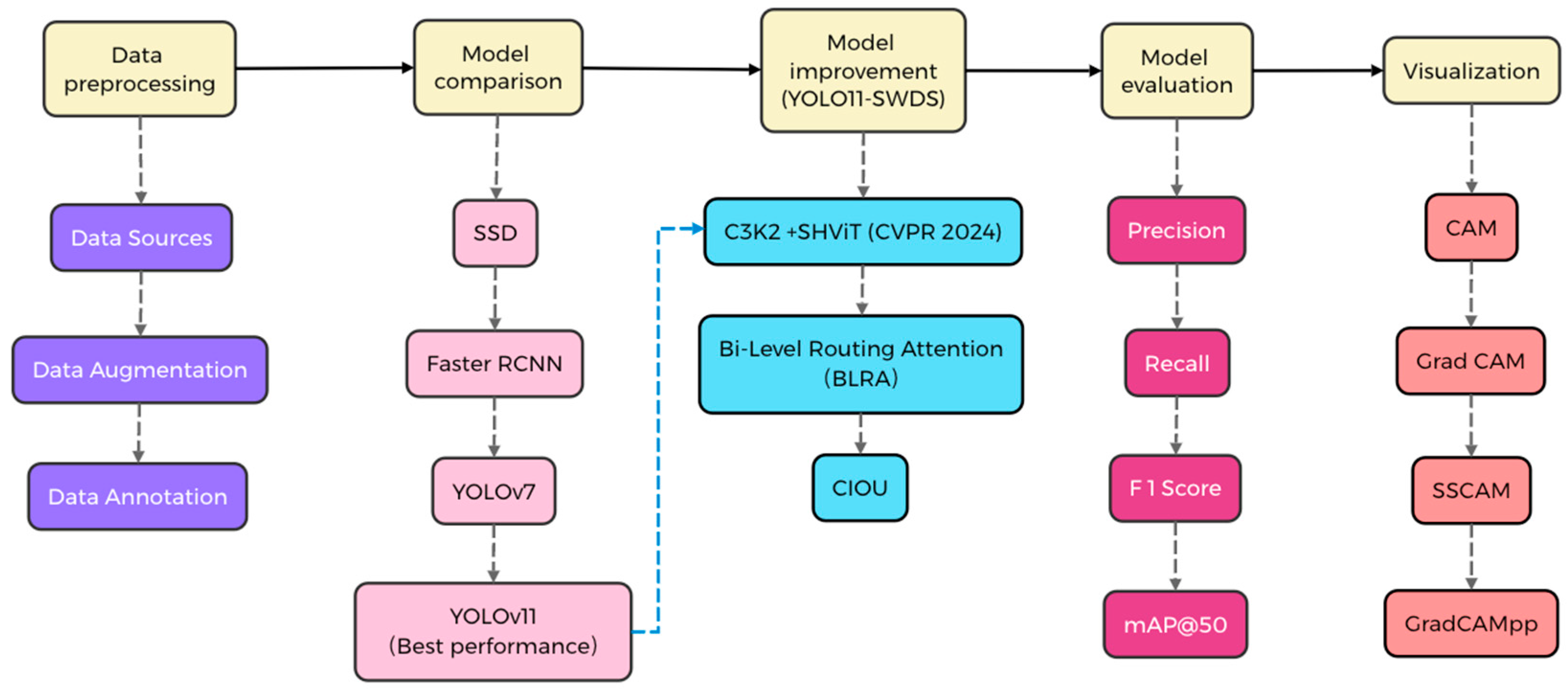

2. Materials and Methods

2.1. Data Preprocessing

2.1.1. Data Collection and Processing

2.1.2. Data Augmentation

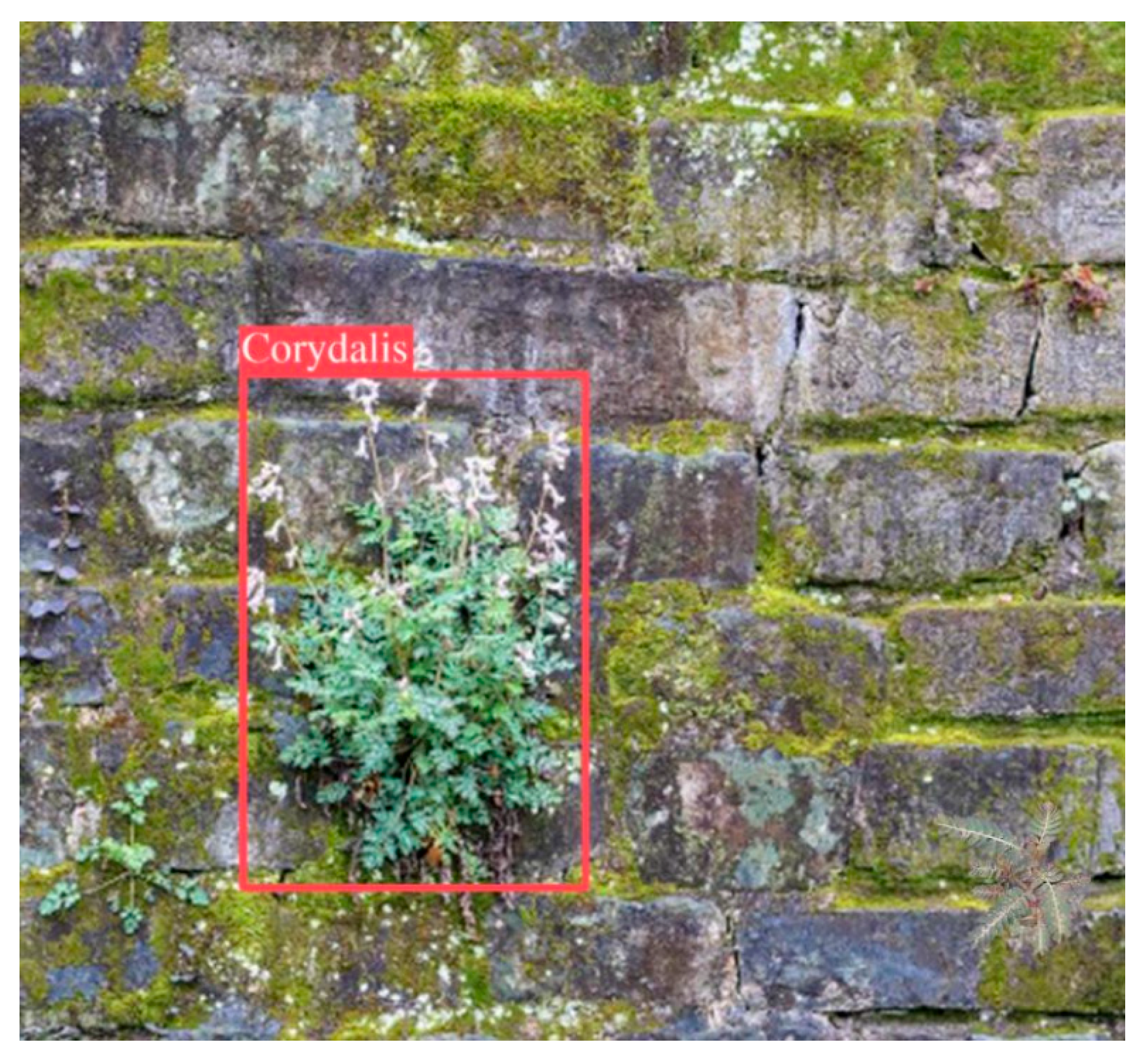

2.1.3. Data Annotation

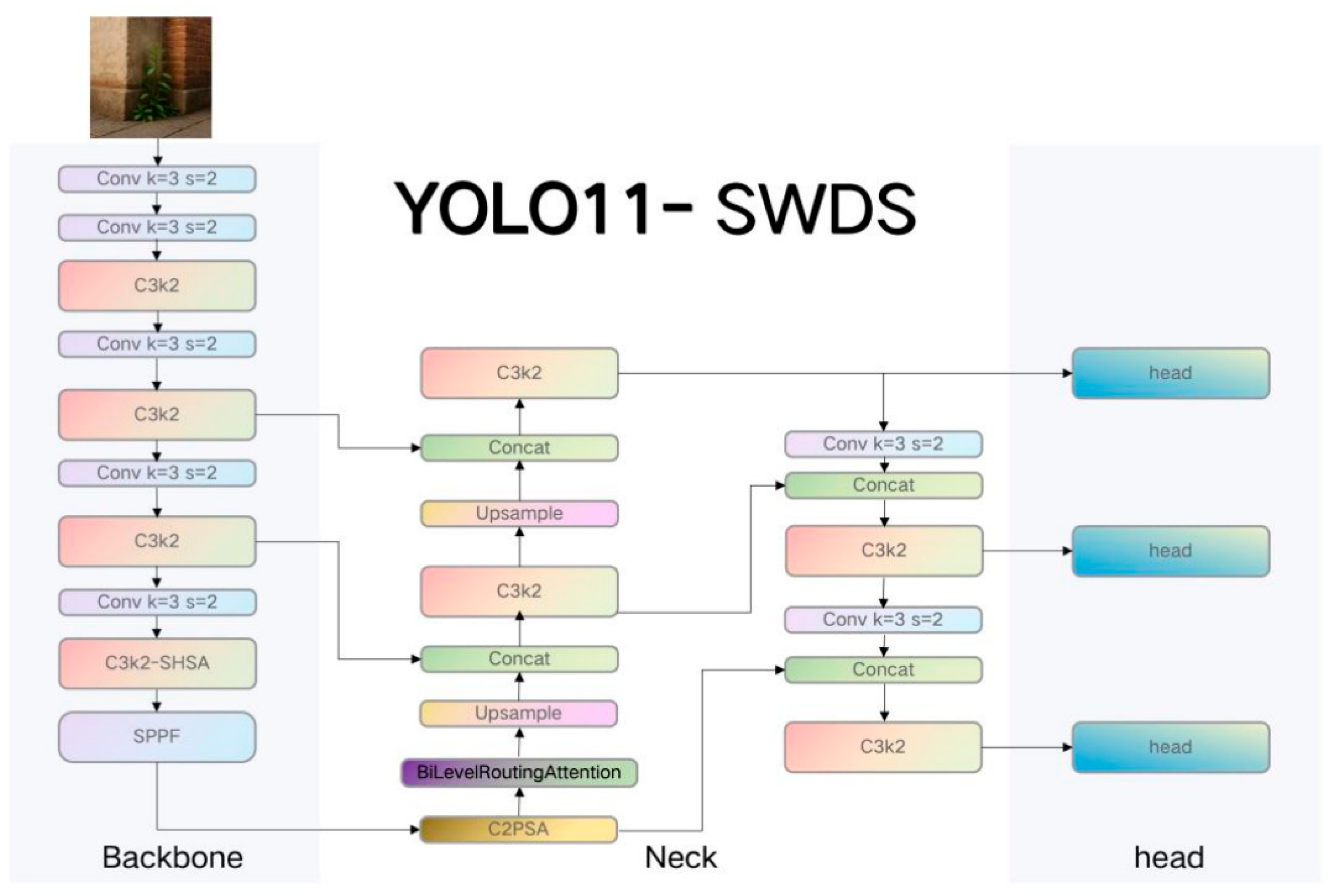

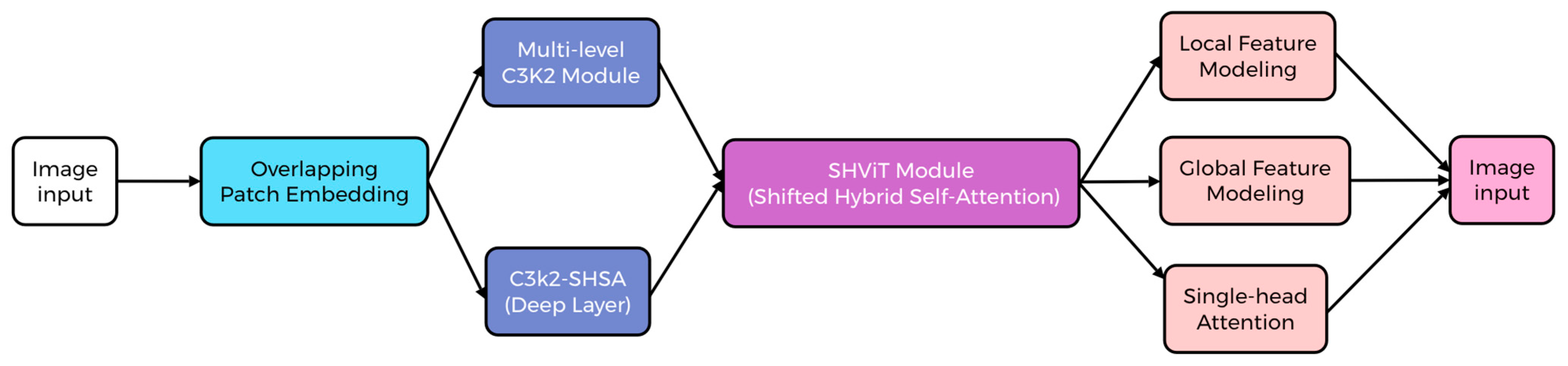

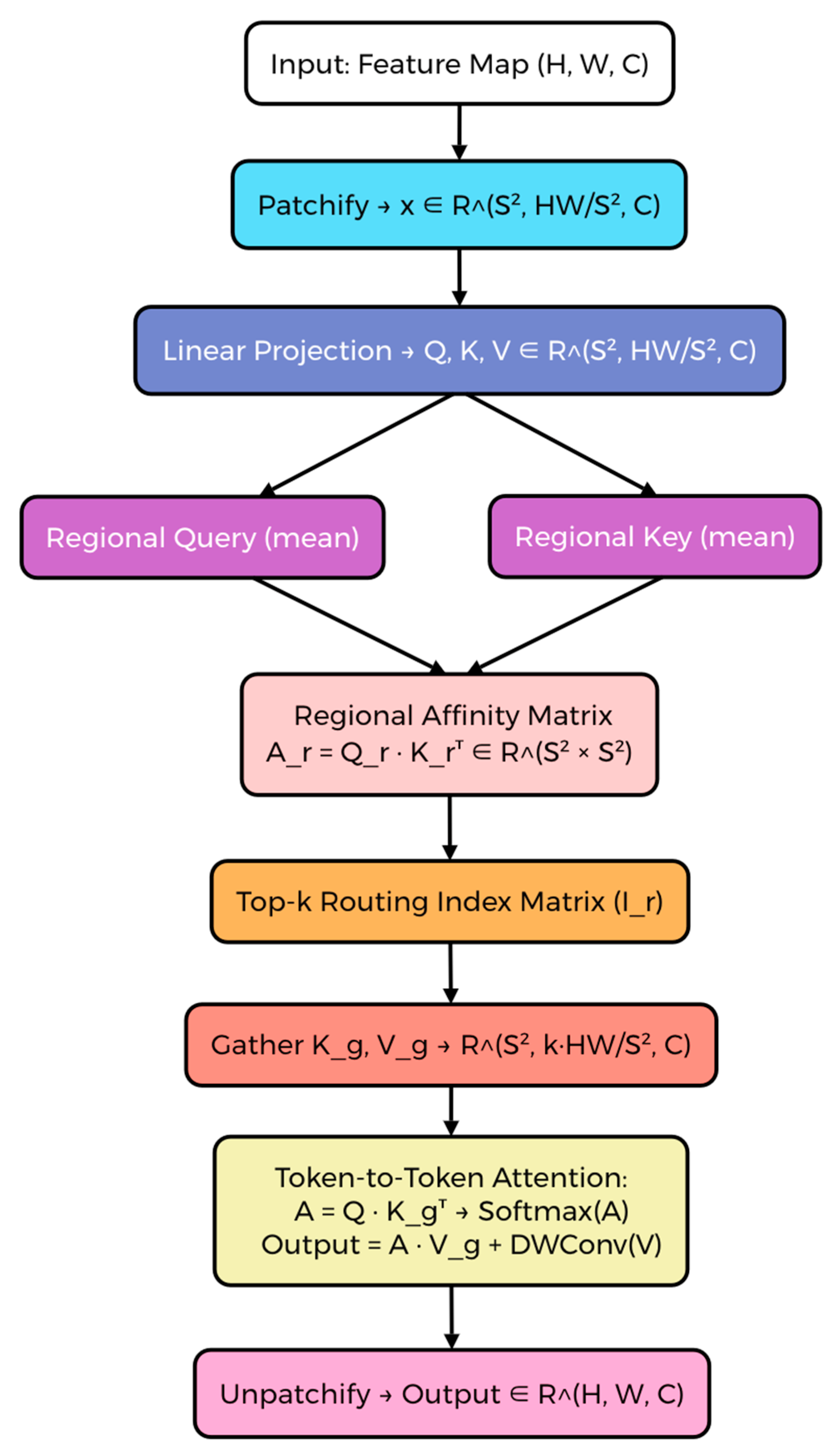

2.2. Model Comparison and Improvement

2.3. Evaluation Metrics

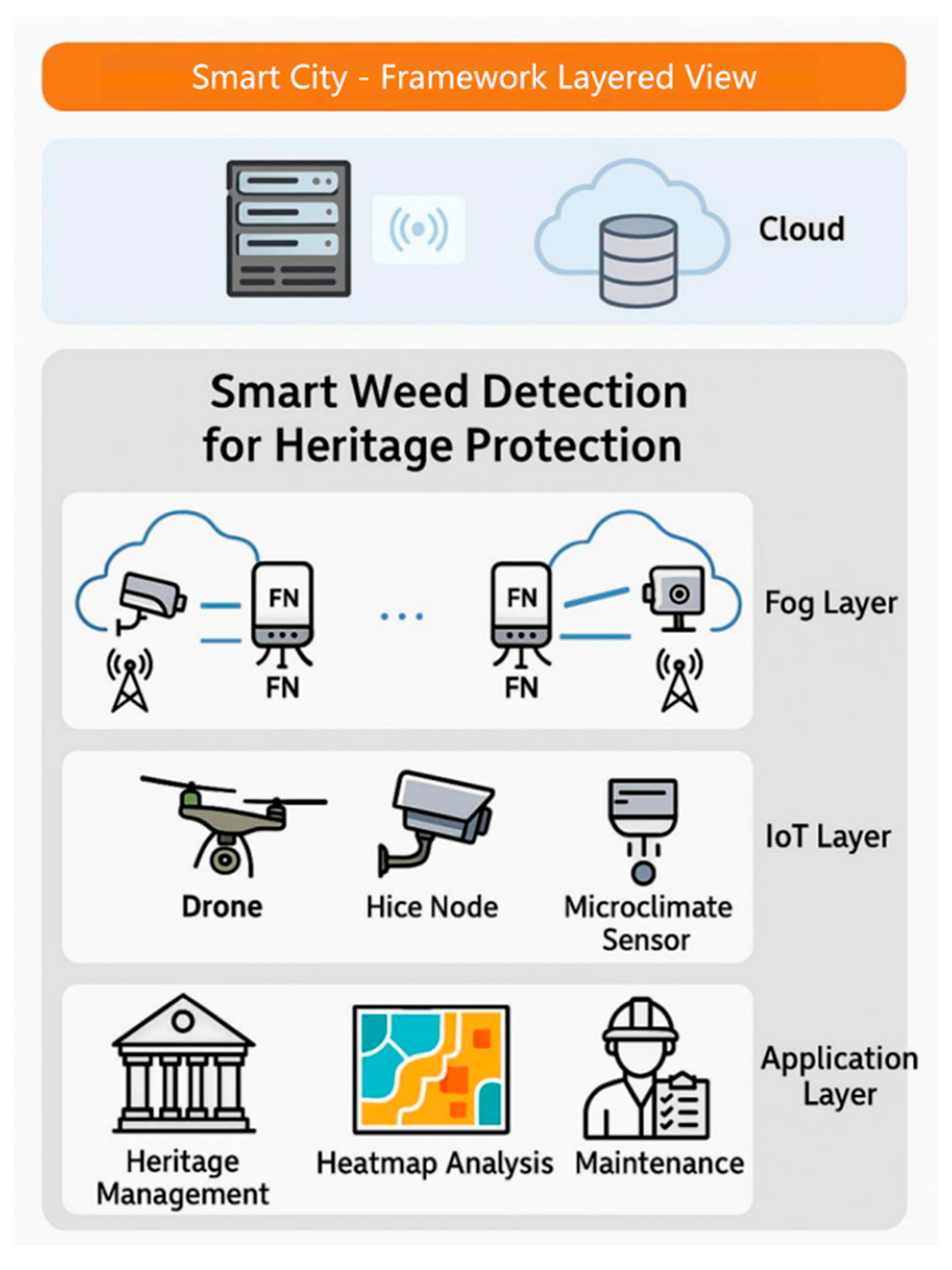

2.4. System Architecture for Weed Detection and Heritage Monitoring

2.5. Integration of Surface Protection Measures

3. Results

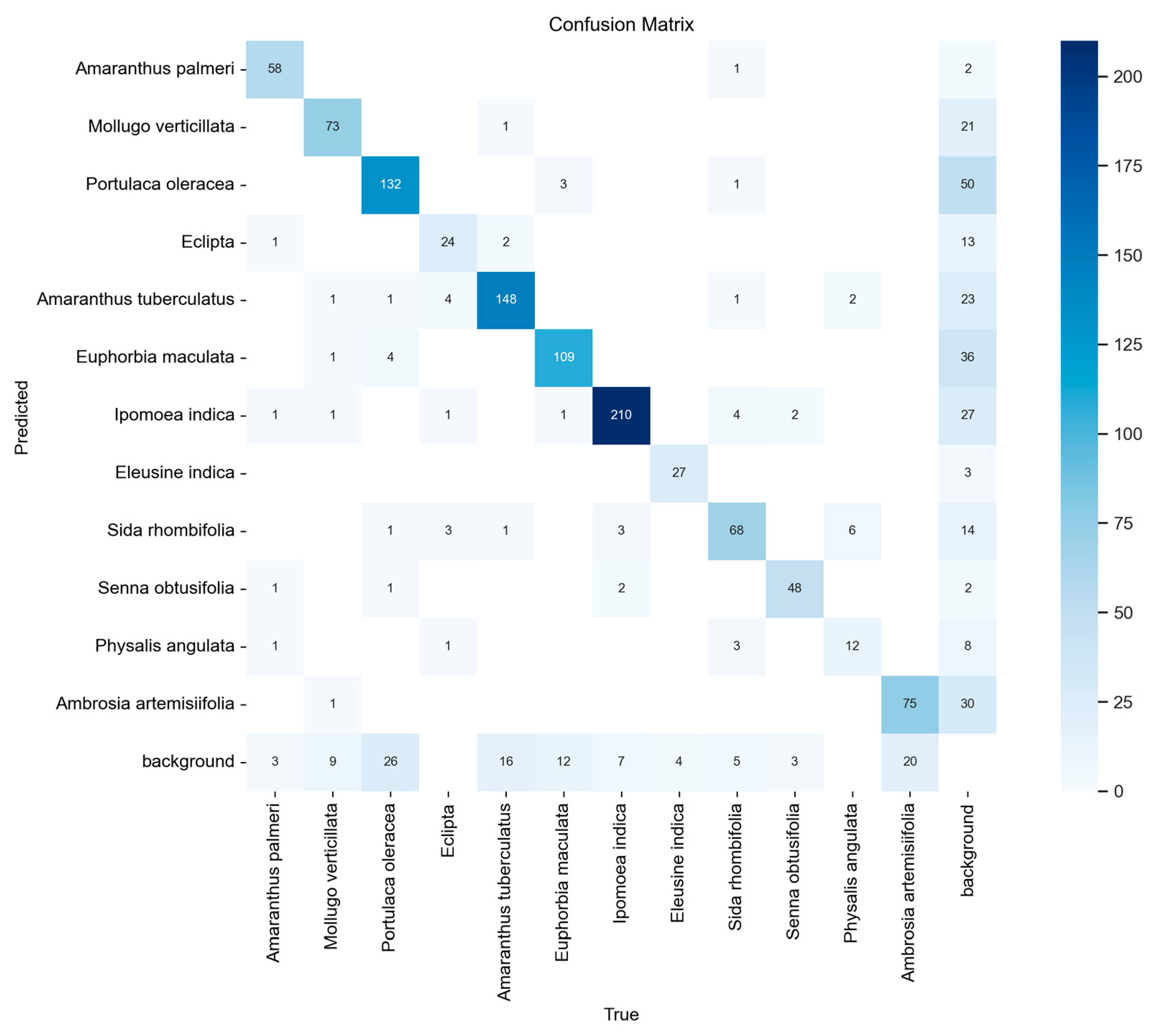

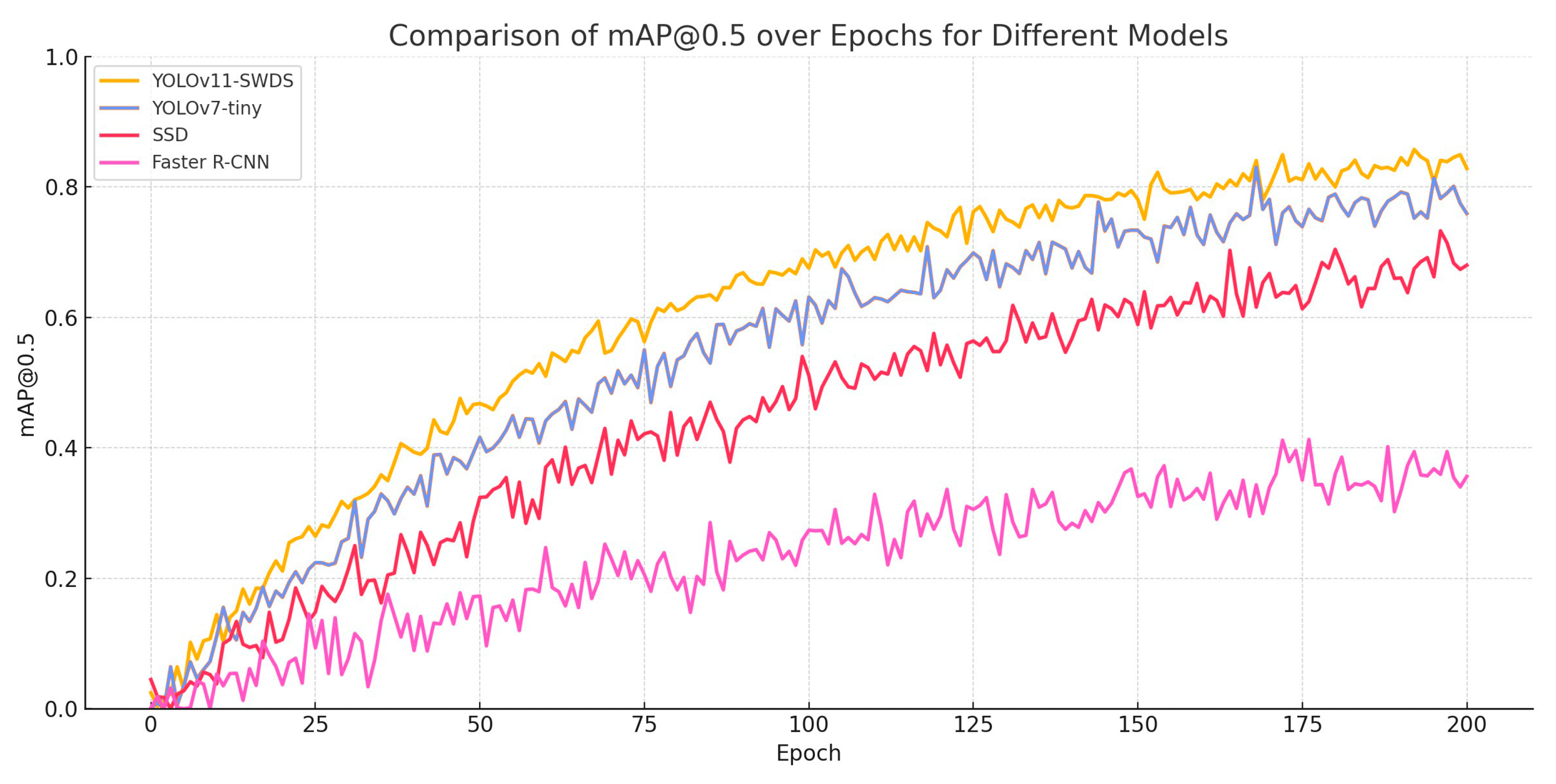

3.1. Results of Model Comparison

3.2. Results of Ablation Experiments

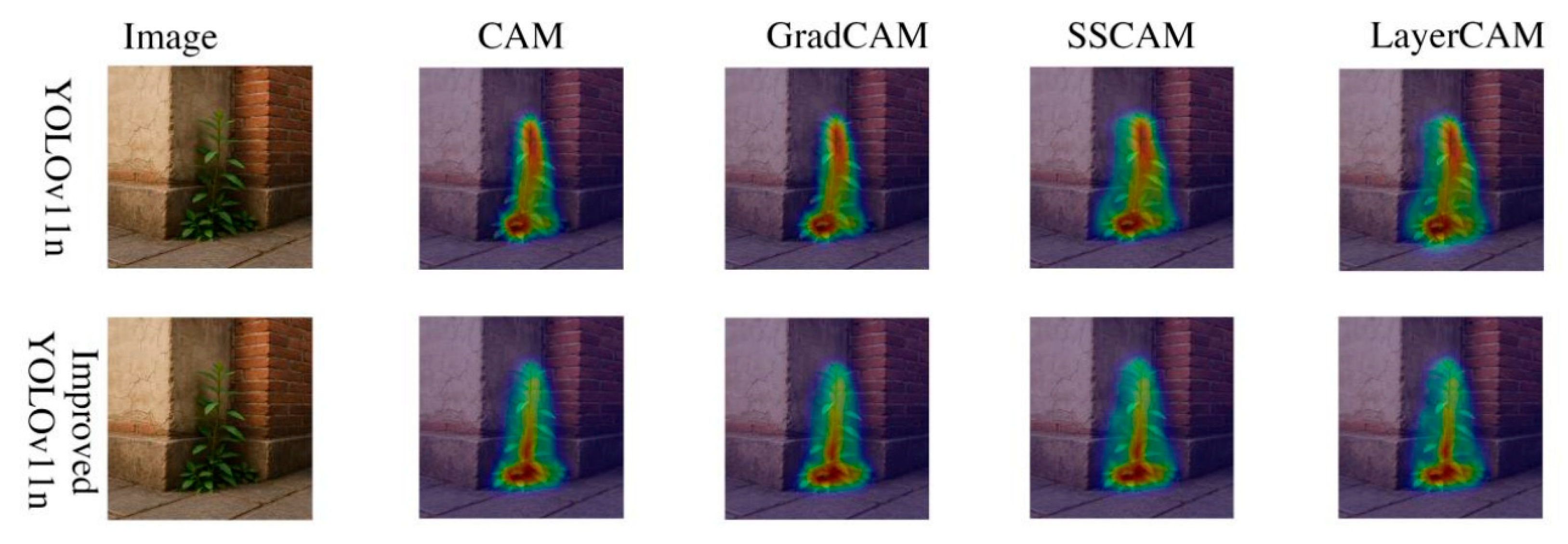

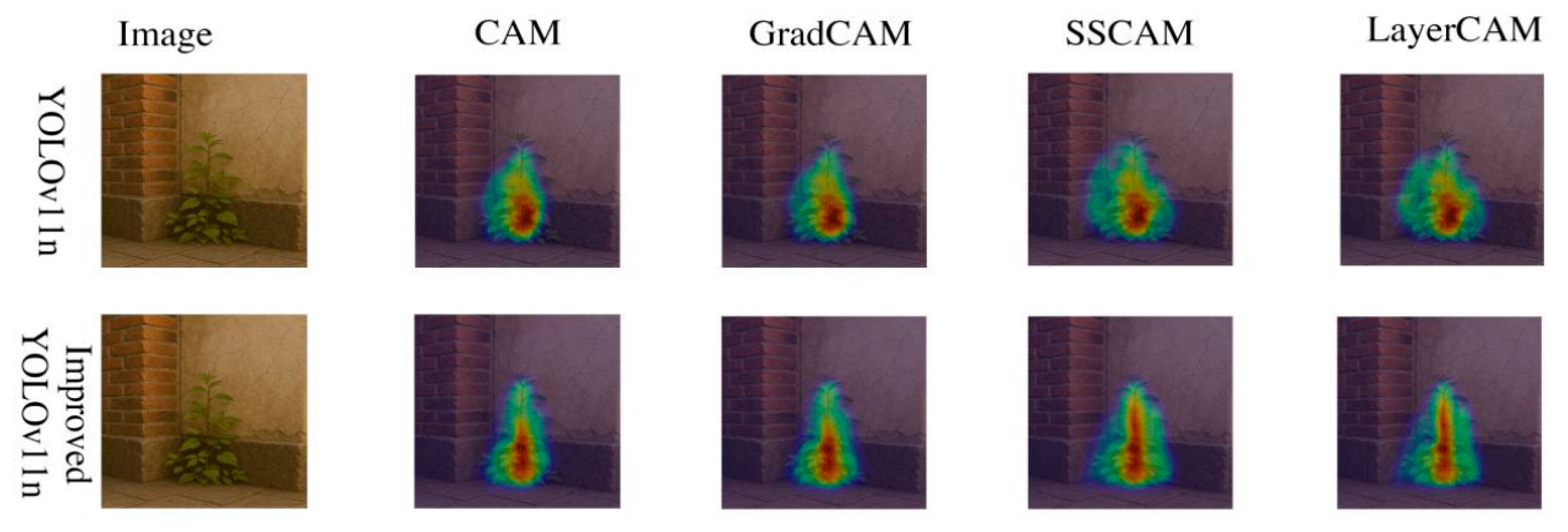

3.3. Performance Evaluation of CAM, Grad-CAM, LayerCAM, and SSCAM for the Deep Learning Model

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UNESCO | United Nations Educational, Scientific and Cultural Organization |
| YOLO | You Only Look Once |
| SWDS | Smart Weed Detection System |
| CNN | Convolutional Neural Network |
| IoU | Intersection over Union |
| mAP | Mean Average Precision |
| GFLOPs | Giga Floating-Point Operations |
| CAM | Class Activation Mapping |
| Grad-CAM | Gradient-weighted Class Activation Mapping |
| SSCAM | Smoothed Score Class Activation Mapping (SS-CAM) |
| Grad-CAM++ | Gradient-weighted Class Activation Mapping++ |
| SHViT | Single-Head Vision Transformer |
| BLRA | Bi-Level Routing Attention |
| PIOU | Pixels-IoU Loss |
| CIOU | Complete IoU Loss |
| FPN | Feature Pyramid Network |
| PAN | Path Aggregation Network |
| C2PSA | Channel–Position Spatial Attention |
| C3k2 | Cross Stage Partial with K2 blocks (network module) |
| GPU | Graphics Processing Unit |
| CPU | Central Processing Unit |
| RTX | NVIDIA GeForce RTX (Graphics Processing Unit family) |
| SGD | Stochastic Gradient Descent |
| IAA | Inter-Annotator Agreement |
| MB | Megabyte |

| Model | F1 Score (%) | P (%) | R (%) | mAP@50 (%) | GFLOPs | Params (MB) |
|---|---|---|---|---|---|---|
| Faster R-CNN | 41.17 | 32.41 | 60.2 | 47.67 | 83 | 37 |
| SSD | 81.63 | 87.03 | 77.57 | 82.41 | 31.3 | 26.14 |
| YOLOv7-tiny | 82.46 | 86.5 | 78.8 | 85.2 | 13.2 | 12.3 |
| YOLOv11n | 83.2 | 87.0 | 79.7 | 85.4 | 6.6 | 2.85 |
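The F1 score in the comparison table is conventionally the harmonic mean of precision (P) and recall (R). The following is a minimal sketch of that standard relationship, using the YOLOv11n row as a worked example. The function name `f1_score` is illustrative, and the source does not state the confidence threshold at which P, R, and F1 were read, so this shows the definition rather than the authors' evaluation script.

```python
def f1_score(precision_pct: float, recall_pct: float) -> float:
    """Harmonic mean of precision and recall, with all values in percent."""
    total = precision_pct + recall_pct
    if total == 0:
        # Degenerate case: no true positives at all.
        return 0.0
    return 2 * precision_pct * recall_pct / total


# P and R copied from the YOLOv11n row of the table above.
yolov11n_f1 = f1_score(87.0, 79.7)
print(round(yolov11n_f1, 1))  # 83.2, matching the reported F1 for YOLOv11n
```

Because the harmonic mean is pulled toward the lower of the two inputs, a model with high precision but low recall cannot reach a high F1.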

| SHViT / BLRA / PIOU (one √ per enabled module) | F1 Score (%) | P (%) | R (%) | mAP@50 (%) | GFLOPs | Params (MB) |
|---|---|---|---|---|---|---|
| √ | 82.9 | 86.8 | 79.3 | 85.8 | 6.3 | 2.58 |
| √ | 83.8 | 87.3 | 80.4 | 86.8 | 6.6 | 2.85 |
| √ | 82.7 | 86.7 | 79.1 | 86.0 | 6.3 | 2.58 |
| √ √ | 84.1 | 88.8 | 79.7 | 87.1 | 6.2 | 2.46 |
| √ √ | 82.0 | 89.9 | 75.5 | 85.5 | 6.6 | 2.85 |
| √ √ | 85.0 | 89.0 | 81.3 | 87.8 | 6.5 | 2.75 |
| √ √ √ | 85.0 | 89.0 | 81.3 | 87.8 | 6.5 | 2.75 |
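To read the ablation results against the unmodified network, the short sketch below compares the last ablation row (all three modules enabled) with the YOLOv11n row of the model comparison table. Treating that YOLOv11n row as the ablation baseline is an assumption, since the ablation table itself lists no row without modules. The `Row` class and the helper name are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    f1: float         # F1 score, percent
    map50: float      # mAP@50, percent
    gflops: float     # computational cost
    params_mb: float  # model size, MB


# Assumed baseline: YOLOv11n row of the model comparison table.
baseline = Row(f1=83.2, map50=85.4, gflops=6.6, params_mb=2.85)
# Last ablation row: SHViT, BLRA, and PIOU all enabled.
full_model = Row(f1=85.0, map50=87.8, gflops=6.5, params_mb=2.75)


def percent_reduction(new: float, old: float) -> float:
    """Relative decrease from old to new, in percent."""
    return 100.0 * (old - new) / old


summary = {
    "f1_gain_points": round(full_model.f1 - baseline.f1, 1),
    "map50_gain_points": round(full_model.map50 - baseline.map50, 1),
    "gflops_reduction_pct": round(
        percent_reduction(full_model.gflops, baseline.gflops), 1
    ),
    "params_reduction_pct": round(
        percent_reduction(full_model.params_mb, baseline.params_mb), 1
    ),
}
print(summary)
```

Accuracy metrics are compared as absolute percentage-point differences, while cost metrics are compared as relative reductions, which keeps the two kinds of change from being conflated.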

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, S.; Hu, Y.; Chen, Y.; Chen, J.; Cheng, S. Weed Detection on Architectural Heritage Surfaces in Penang City via YOLOv11. Coatings 2025, 15, 1322. https://doi.org/10.3390/coatings15111322
