Baru-Net: Surface Defects Detection of Highly Reflective Chrome-Plated Appearance Parts

Abstract

1. Introduction

- High requirements for lighting technology. The illumination must be stable and shielded from ambient light so that the acquired digital images are reliable enough to satisfy the threshold conditions.

- Image preprocessing and feature extraction algorithms are complex; these sequential algorithms usually require several thresholds, which affect the reliability and robustness of detection.
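The second point can be illustrated with a toy pipeline in the traditional style. A global gray-level threshold first marks dark candidate pixels, and a minimum-area threshold then discards small blobs. This is a minimal sketch on a hypothetical 4×5 image. The function names, threshold values and pixel values are assumptions chosen for illustration and do not come from any method surveyed in the article.

```python
from collections import deque

def binarize(image, gray_threshold):
    """Threshold 1: pixels darker than gray_threshold become defect candidates."""
    return [[1 if px < gray_threshold else 0 for px in row] for row in image]

def component_areas(mask):
    """Pixel count of each 4-connected foreground region, in scan order."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    areas = []
    for y in range(h):
        for x in range(w):
            if mask[y][x] and not seen[y][x]:
                seen[y][x] = True
                queue, area = deque([(y, x)]), 0
                while queue:  # breadth-first flood fill of one region
                    cy, cx = queue.popleft()
                    area += 1
                    for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                areas.append(area)
    return areas

def detect(image, gray_threshold, min_area):
    """Threshold 2: count only regions with at least min_area pixels."""
    areas = component_areas(binarize(image, gray_threshold))
    return sum(1 for a in areas if a >= min_area)

# Hypothetical bright surface (200) with a 2x2 dark mark (120) and one noisy pixel (90).
SAMPLE = [
    [200, 200, 200, 200, 200],
    [200, 120, 120, 200, 200],
    [200, 120, 120, 200, 90],
    [200, 200, 200, 200, 200],
]
```

On the same image, `detect(SAMPLE, 150, 1)` finds two regions, `detect(SAMPLE, 150, 2)` finds one, and `detect(SAMPLE, 100, 2)` finds none. Moving either threshold changes the result, which illustrates the sensitivity described in the second point.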

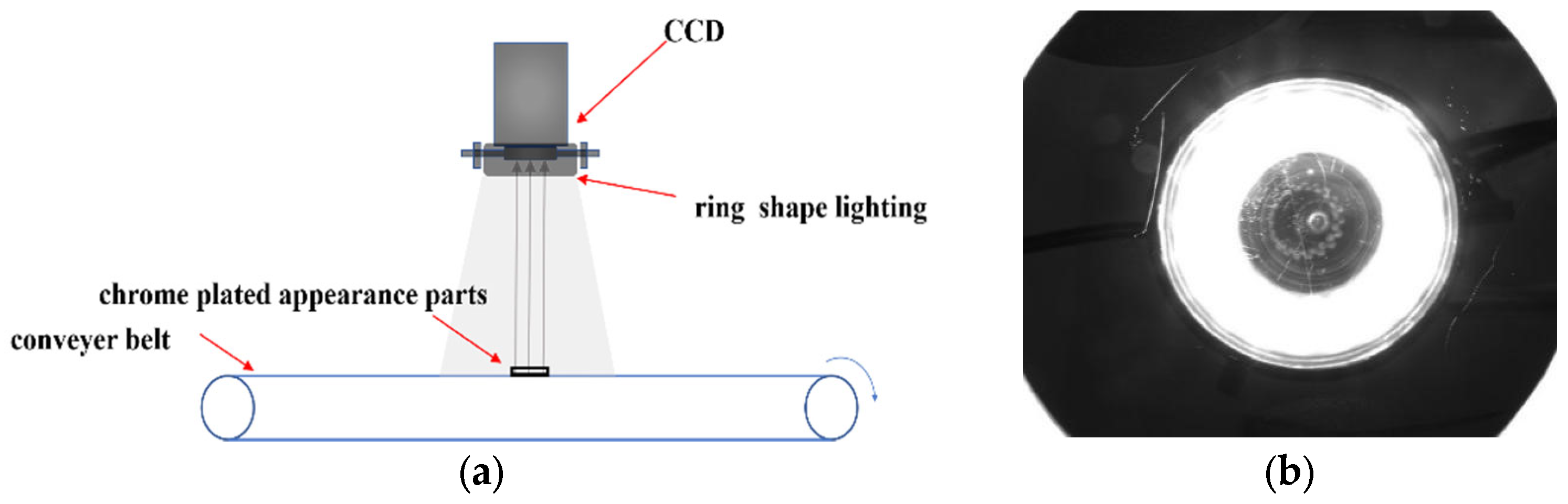

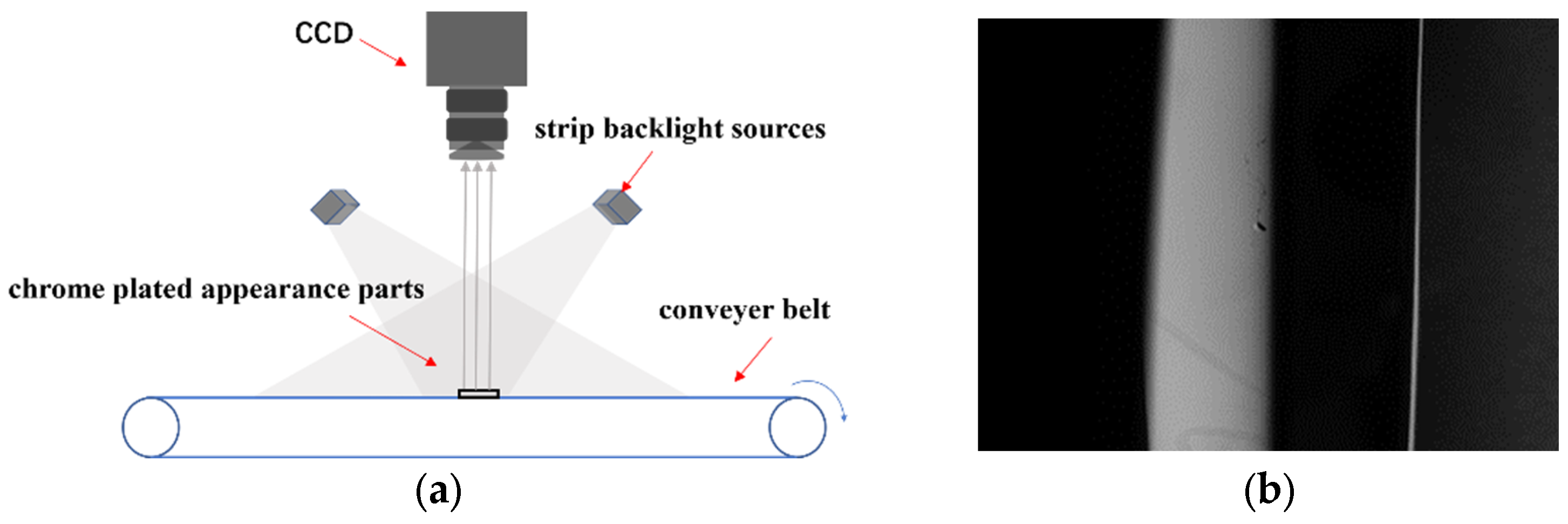

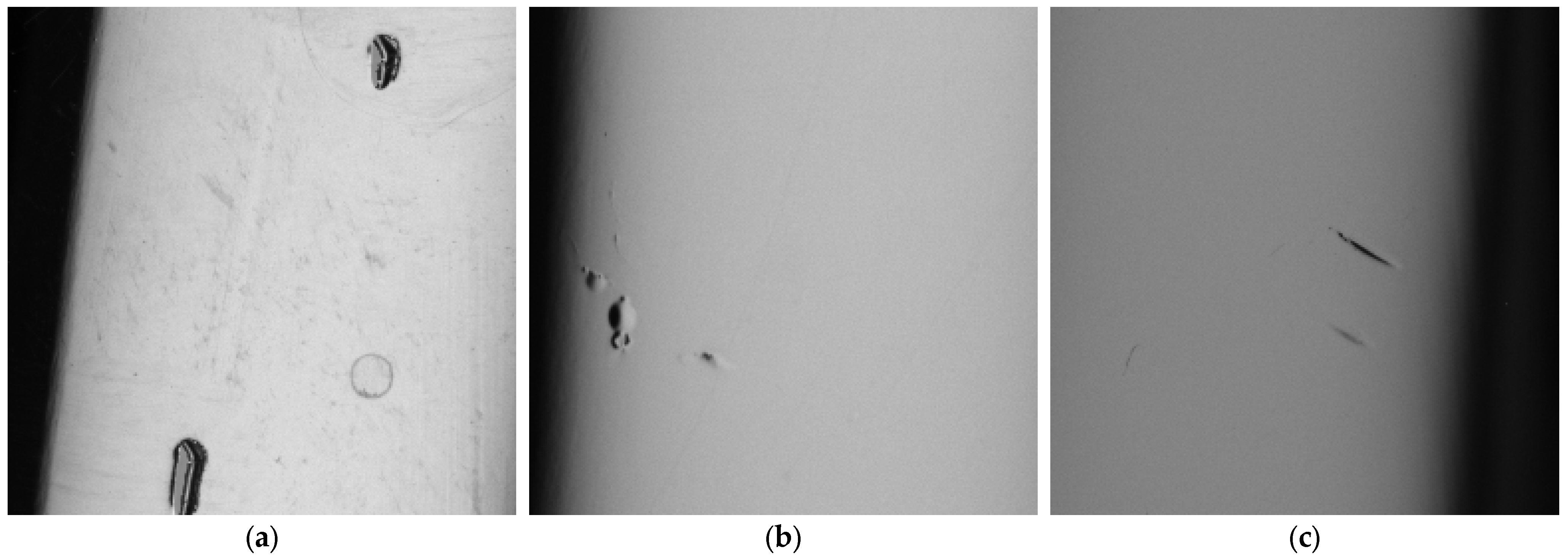

2. Highly Reflective Chrome-Plated Surface Image Acquisition
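The conclusions state that images taken under light sources at different angles were fused to avoid the effects of high reflectivity. The fusion rule itself is not given in this section. One simple rule that fits the idea is a pixel-wise minimum, which is an assumption here: a specular highlight that saturates one view usually falls elsewhere in the other view, so keeping the darker value suppresses it. The `fuse_min` and `saturated_fraction` helpers and the sample values are hypothetical.

```python
def fuse_min(img_a, img_b):
    """Pixel-wise minimum of two registered grayscale images (lists of rows).

    Assumed fusion rule: a highlight saturated in one view is replaced by the
    darker value from the other view.
    """
    if len(img_a) != len(img_b) or any(len(ra) != len(rb) for ra, rb in zip(img_a, img_b)):
        raise ValueError("images must have the same shape")
    return [[min(pa, pb) for pa, pb in zip(ra, rb)] for ra, rb in zip(img_a, img_b)]

def saturated_fraction(img, level=255):
    """Share of pixels at or above the saturation level."""
    total = sum(len(row) for row in img)
    return sum(px >= level for row in img for px in row) / total

# Hypothetical views: left light saturates the left columns, right light the right ones.
LEFT = [[255, 255, 140, 138], [255, 250, 139, 141]]
RIGHT = [[142, 140, 255, 255], [141, 139, 252, 255]]
```

Here `fuse_min(LEFT, RIGHT)` gives `[[142, 140, 140, 138], [141, 139, 139, 141]]`. The saturated fraction drops from 0.375 in each view to 0.0. A pixel-wise minimum assumes that the views are registered and that defects appear dark. A weighted or exposure-fusion scheme would be needed if either assumption fails.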

3. Identification and Location of Highly Reflective Surface Defects Based on Baru-Net

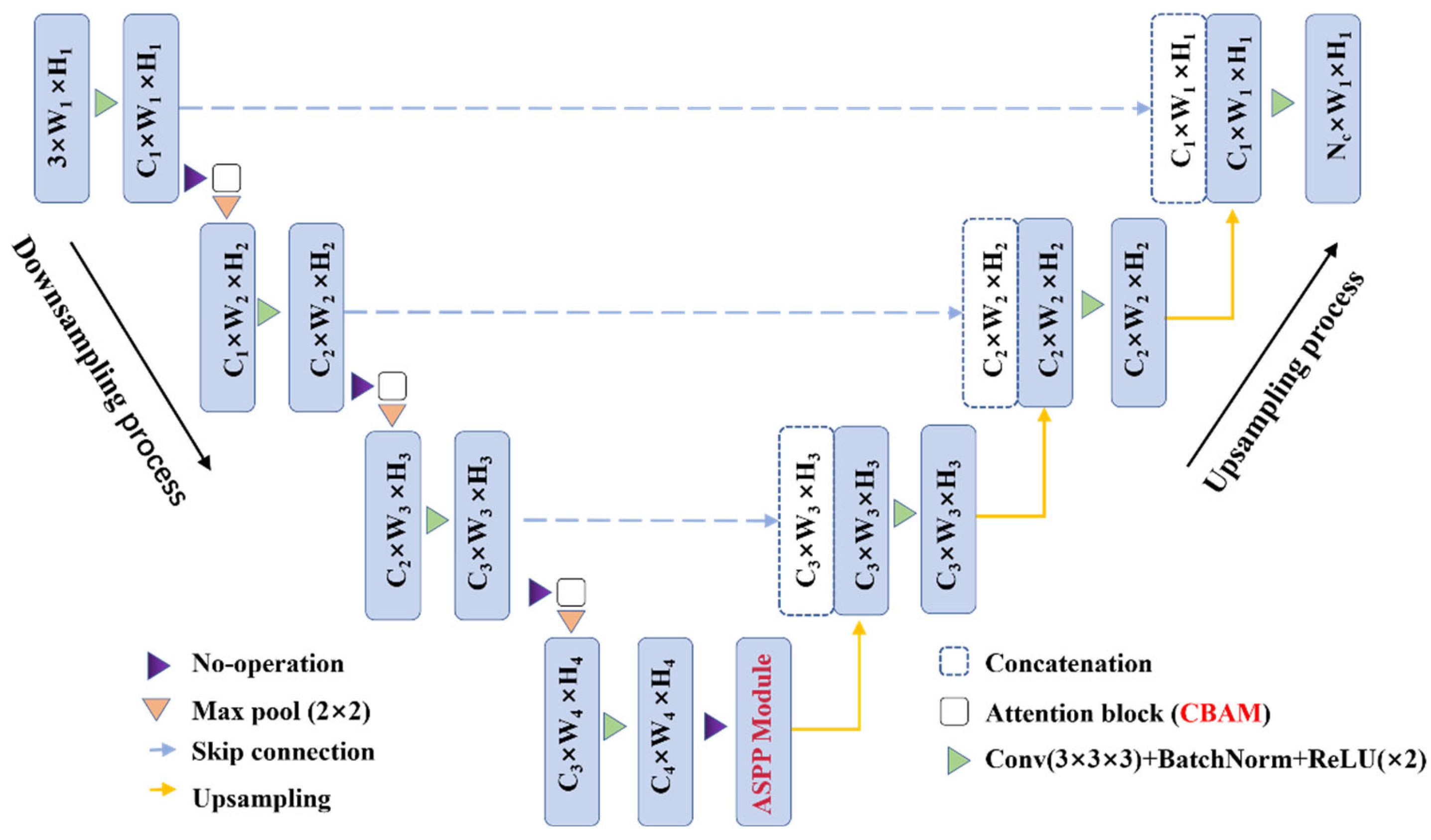

3.1. Network Structure

3.1.1. Unet Architecture

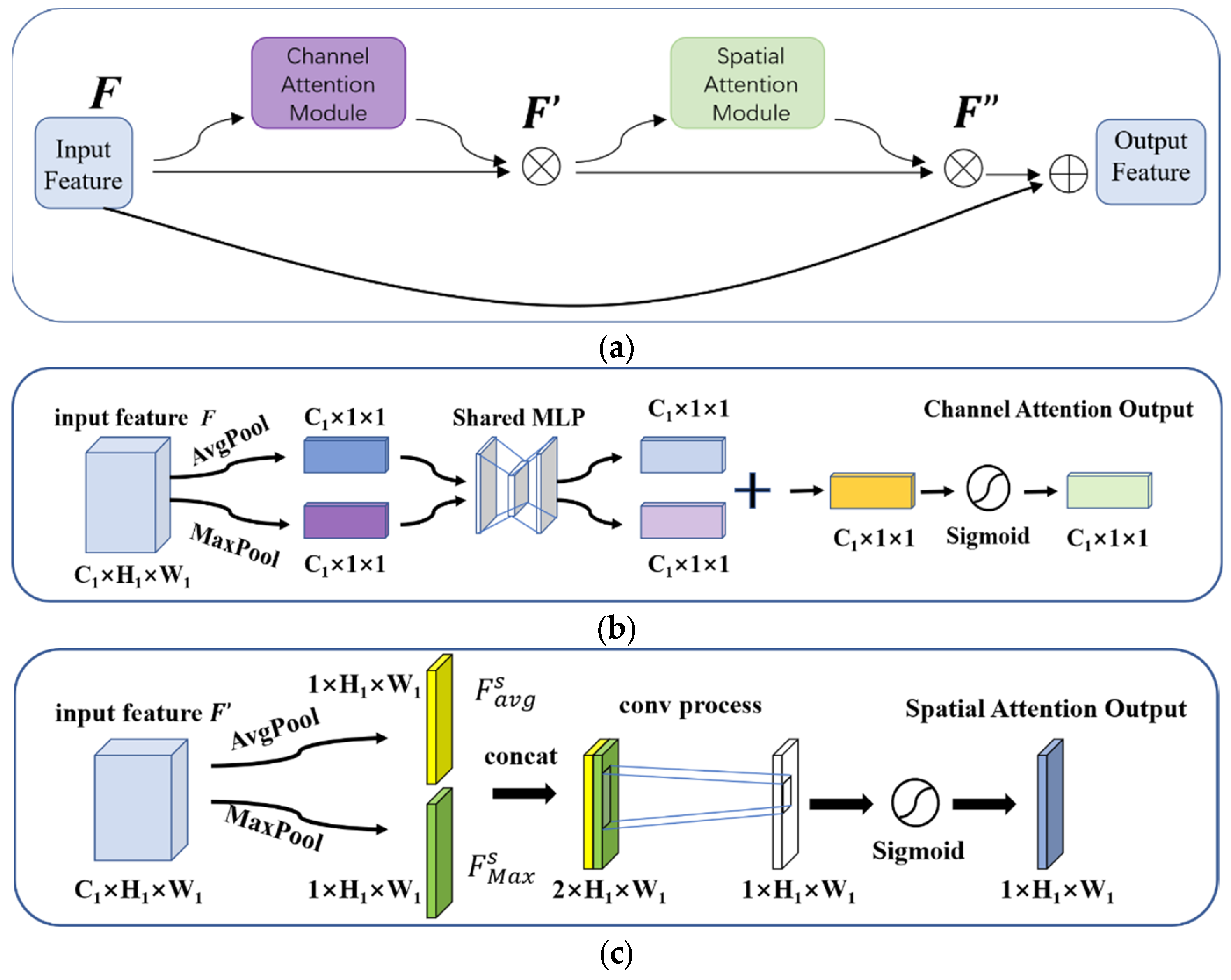
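Baru-Net builds on a U-Net encoder-decoder with skip connections. This section does not state Baru-Net's depth or channel widths. The sketch below therefore assumes the original U-Net layout: four 2×2 max-pooling steps, 64 base channels doubling per level, and padded convolutions. It traces feature-map shapes for the 96×96 input size listed in the hyperparameter table.

```python
def unet_shapes(size, base_channels=64, depth=4):
    """Trace (channels, height, width) through a U-Net with padded 3x3 convolutions.

    Returns (encoder, decoder). Each encoder entry is the output of one level;
    each decoder entry is (concat_channels, out_channels, height, width), where
    concat_channels counts the upsampled map plus the skip connection.
    """
    encoder = []
    channels, s = base_channels, size
    for level in range(depth + 1):
        encoder.append((channels, s, s))
        if level < depth:
            if s % 2:
                raise ValueError(f"feature map of size {s} cannot be halved cleanly")
            s //= 2          # 2x2 max pooling halves the resolution
            channels *= 2    # the next level doubles the feature channels
    decoder = []
    for skip_channels, h, w in reversed(encoder[:-1]):
        # A 2x2 up-convolution restores h x w and halves the channels to
        # skip_channels; concatenating the skip feature map doubles them again.
        decoder.append((2 * skip_channels, skip_channels, h, w))
    return encoder, decoder
```

With four pooling steps, the input side must be divisible by 2^4 = 16. A size of 96 satisfies this (96 = 6 × 16), and the bottleneck is 1024 × 6 × 6.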

3.1.2. CBAM Module

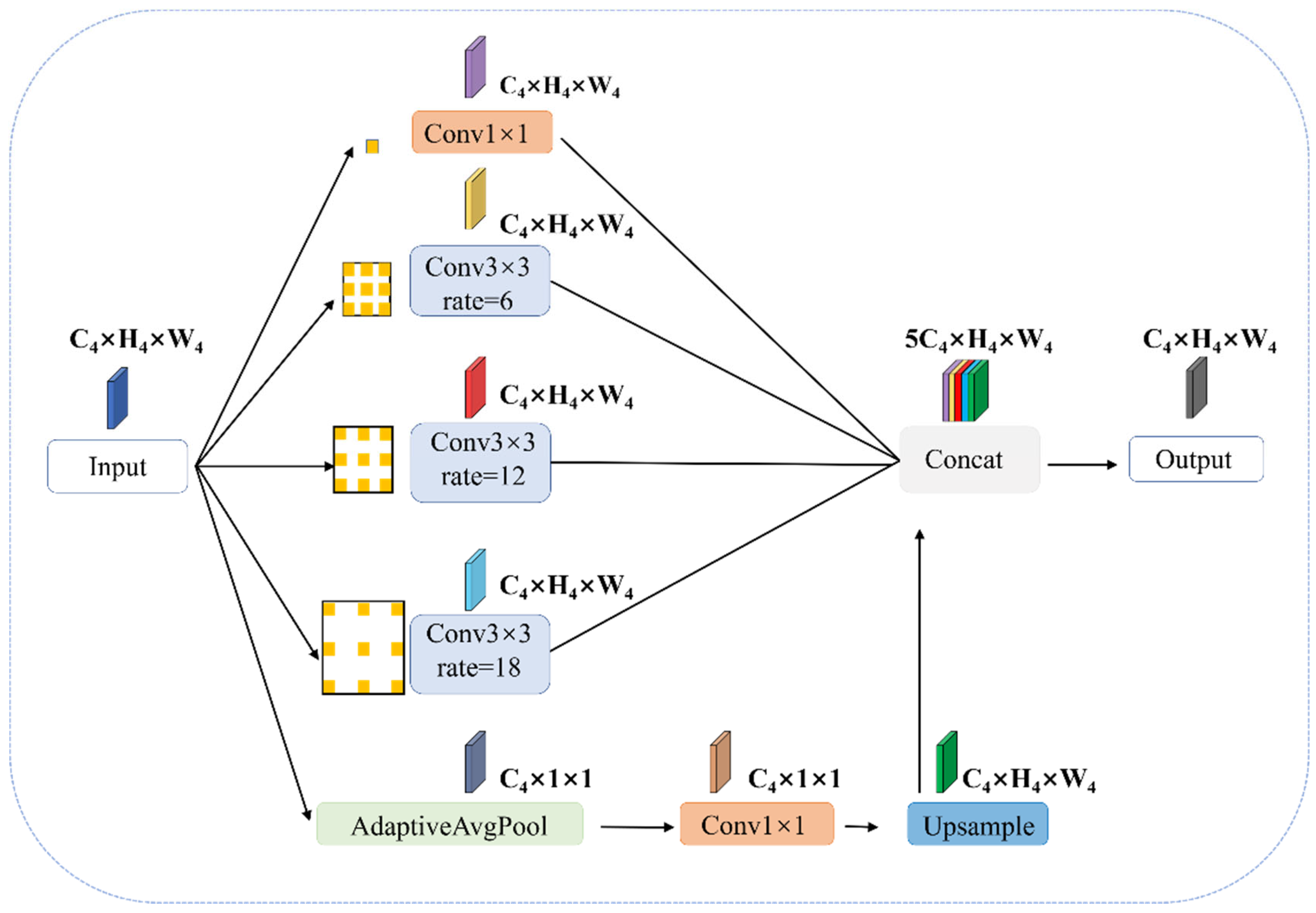
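CBAM refines a feature map with channel attention followed by spatial attention. The channel weights come from a shared MLP applied to the global average-pooled and max-pooled descriptors. The spatial weights come from a convolution over the channel-wise average and max maps. The pure-Python sketch below follows that structure on small nested lists. Weights are passed in explicitly and biases are omitted. The original CBAM uses a 7×7 spatial kernel; the example uses 3×3 to stay small. The sketch makes no assumption about where Baru-Net places CBAM inside the U-Net.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def channel_attention(x, w1, w2):
    """x: C x H x W nested lists; w1: (C//r) x C; w2: C x (C//r)."""
    avg = [sum(sum(r) for r in ch) / (len(ch) * len(ch[0])) for ch in x]
    mx = [max(max(r) for r in ch) for ch in x]
    def mlp(v):  # shared two-layer MLP with ReLU, no biases
        return matvec(w2, [max(0.0, h) for h in matvec(w1, v)])
    weights = [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]
    return [[[px * wc for px in row] for row in ch] for ch, wc in zip(x, weights)]

def spatial_attention(x, kernel):
    """kernel: 2 x k x k weights for the [avg, max] maps (k odd, zero padding)."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    avg = [[sum(x[i][y][xx] for i in range(c)) / c for xx in range(w)] for y in range(h)]
    mx = [[max(x[i][y][xx] for i in range(c)) for xx in range(w)] for y in range(h)]
    k = len(kernel[0])
    pad = k // 2
    att = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for xx in range(w):
            s = 0.0
            for m, fmap in enumerate((avg, mx)):
                for dy in range(k):
                    for dx in range(k):
                        yy, xc = y + dy - pad, xx + dx - pad
                        if 0 <= yy < h and 0 <= xc < w:
                            s += kernel[m][dy][dx] * fmap[yy][xc]
            att[y][xx] = sigmoid(s)
    return [[[ch[y][xx] * att[y][xx] for xx in range(w)] for y in range(h)] for ch in x]

def cbam(x, w1, w2, kernel):
    """Channel attention first, then spatial attention."""
    return spatial_attention(channel_attention(x, w1, w2), kernel)

# Toy input: C=2, H=W=2, reduction ratio r=2 (hidden size 1).
X = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, -1.0], [2.0, 1.0]]]
W1_ZERO, W2_ZERO = [[0.0, 0.0]], [[0.0], [0.0]]
K_ZERO = [[[0.0] * 3 for _ in range(3)] for _ in range(2)]
```

With all-zero weights, both sigmoids output 0.5, so every value is scaled by 0.25. This is a sanity check of the wiring rather than a meaningful attention map. Nonzero MLP weights, such as `w1=[[1.0, 0.0]]` and `w2=[[1.0], [-1.0]]`, keep channel 0 almost unchanged and nearly suppress channel 1.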

3.1.3. ASPP Module
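ASPP runs several atrous (dilated) convolutions with different rates in parallel, together with an image-level pooling branch, so that a single layer sees context at several scales. A k×k kernel with rate r spans k + (k - 1)(r - 1) pixels. For 3×3 kernels at rates 6, 12 and 18, as used in DeepLabv3, this gives 13, 25 and 37 pixels. The rates used in Baru-Net are not given here. The sketch below is a single-channel illustration and omits the final 1×1 fusion convolution.

```python
def effective_kernel(k, rate):
    """Span of a k x k kernel dilated by `rate`: k + (k - 1)(rate - 1)."""
    return k + (k - 1) * (rate - 1)

def atrous_conv2d(img, kernel, rate):
    """Single-channel atrous (dilated) cross-correlation, zero padding, same output size."""
    h, w, k = len(img), len(img[0]), len(kernel)
    c = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            s = 0.0
            for i in range(k):
                for j in range(k):
                    # Kernel taps are spaced `rate` pixels apart.
                    yy, xx = y + (i - c) * rate, x + (j - c) * rate
                    if 0 <= yy < h and 0 <= xx < w:
                        s += kernel[i][j] * img[yy][xx]
            out[y][x] = s
    return out

def aspp(img, branches):
    """branches: list of (kernel, rate). Adds an image-pooling branch and returns all maps."""
    outputs = [atrous_conv2d(img, kernel, rate) for kernel, rate in branches]
    mean = sum(map(sum, img)) / (len(img) * len(img[0]))
    outputs.append([[mean] * len(img[0]) for _ in img])  # global average, broadcast back
    return outputs

# Toy data: a 7x7 impulse and an all-ones 3x3 kernel.
IMPULSE = [[1.0 if (y, x) == (3, 3) else 0.0 for x in range(7)] for y in range(7)]
ONES3 = [[1.0] * 3 for _ in range(3)]
```

Convolving the impulse at rate 2 places the nine kernel taps two pixels apart, at rows and columns 1, 3 and 5. This shows how dilation enlarges the receptive field without adding weights.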

3.2. Experimental Setup

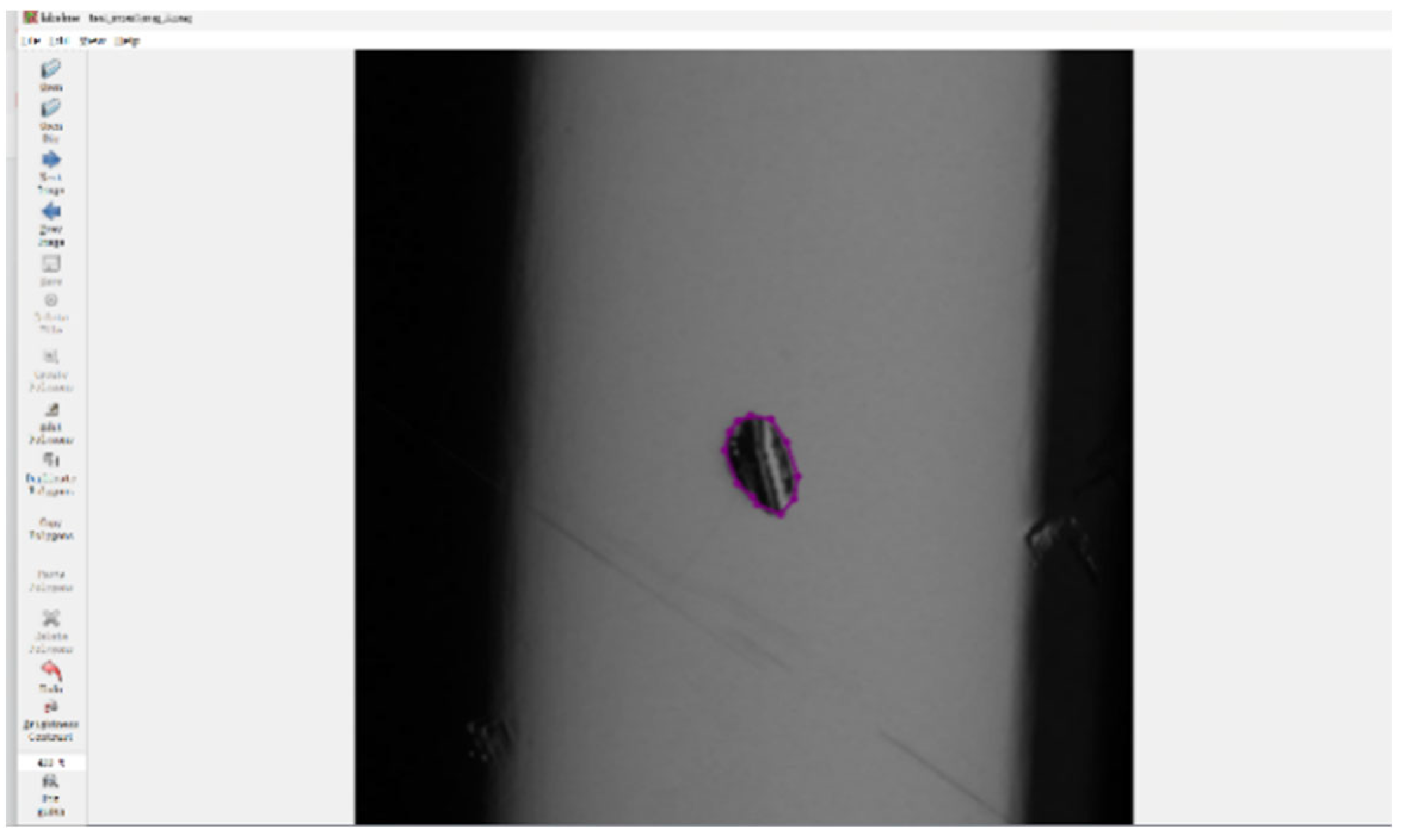

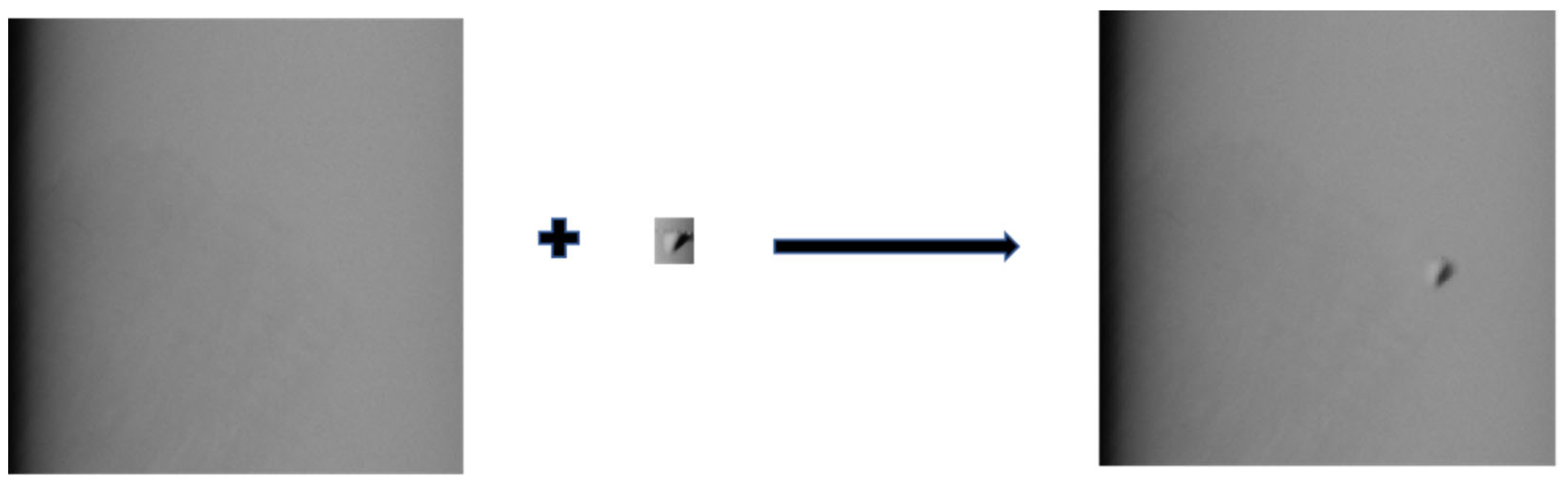

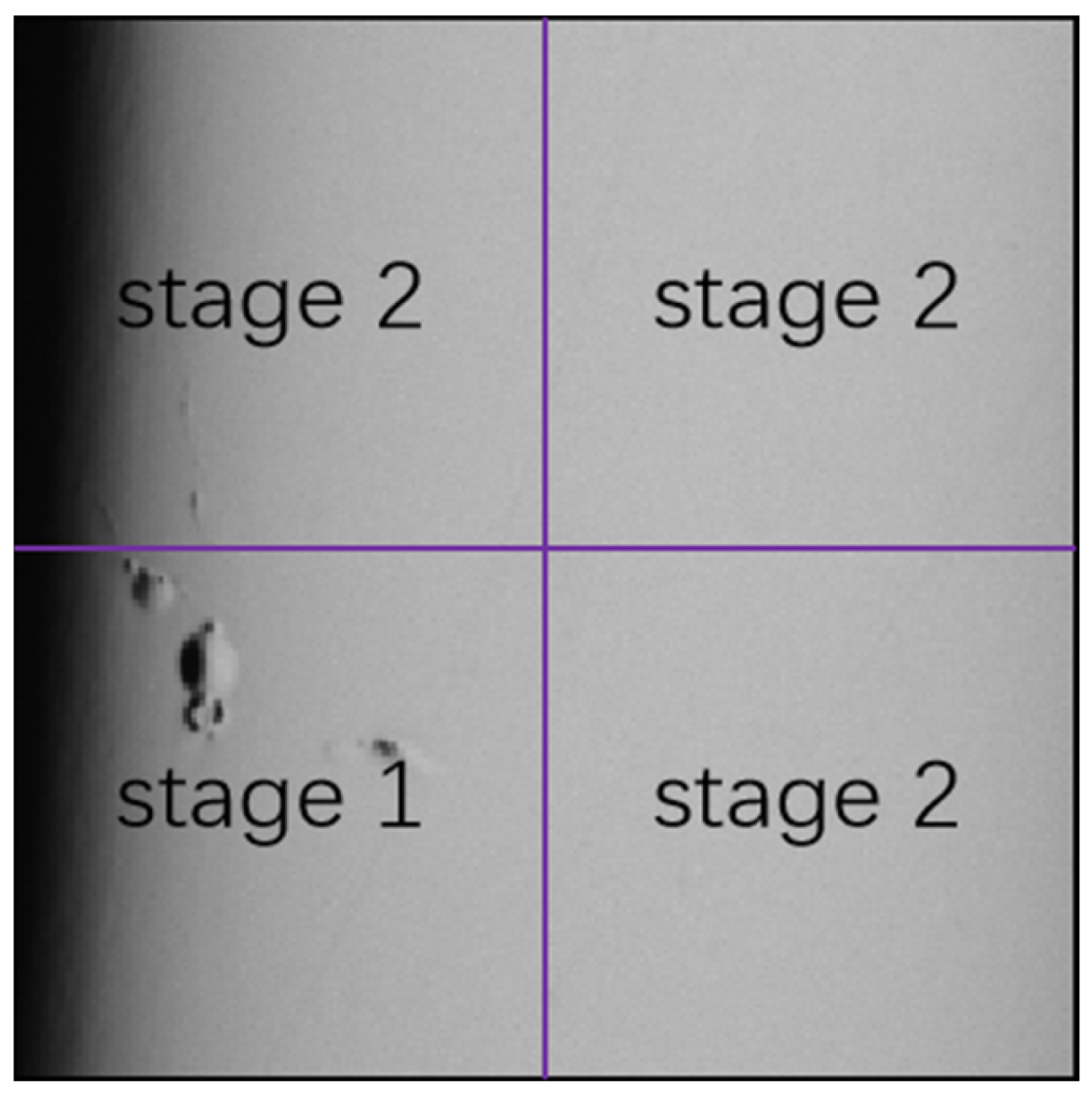

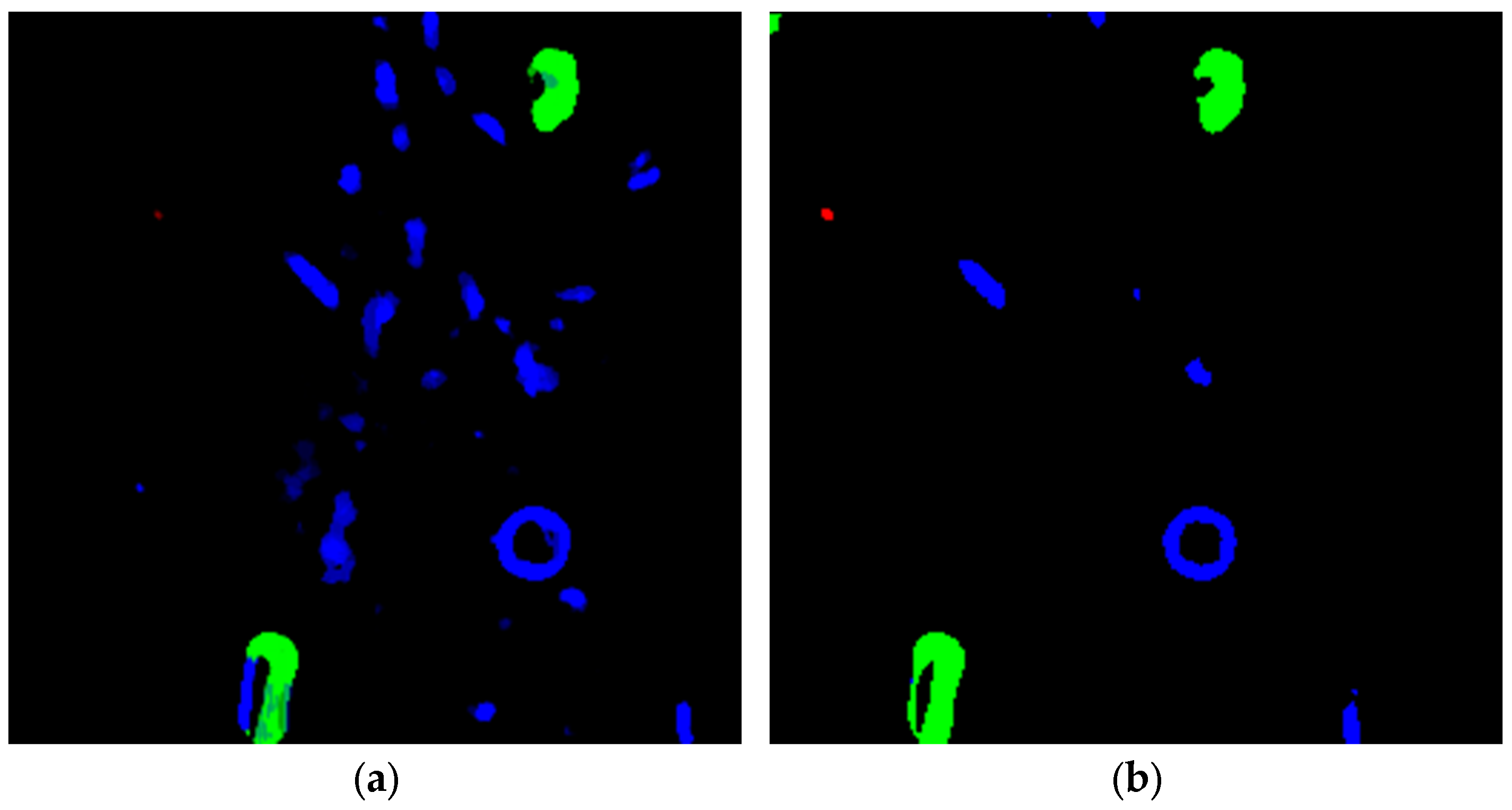

3.2.1. Dataset Construction

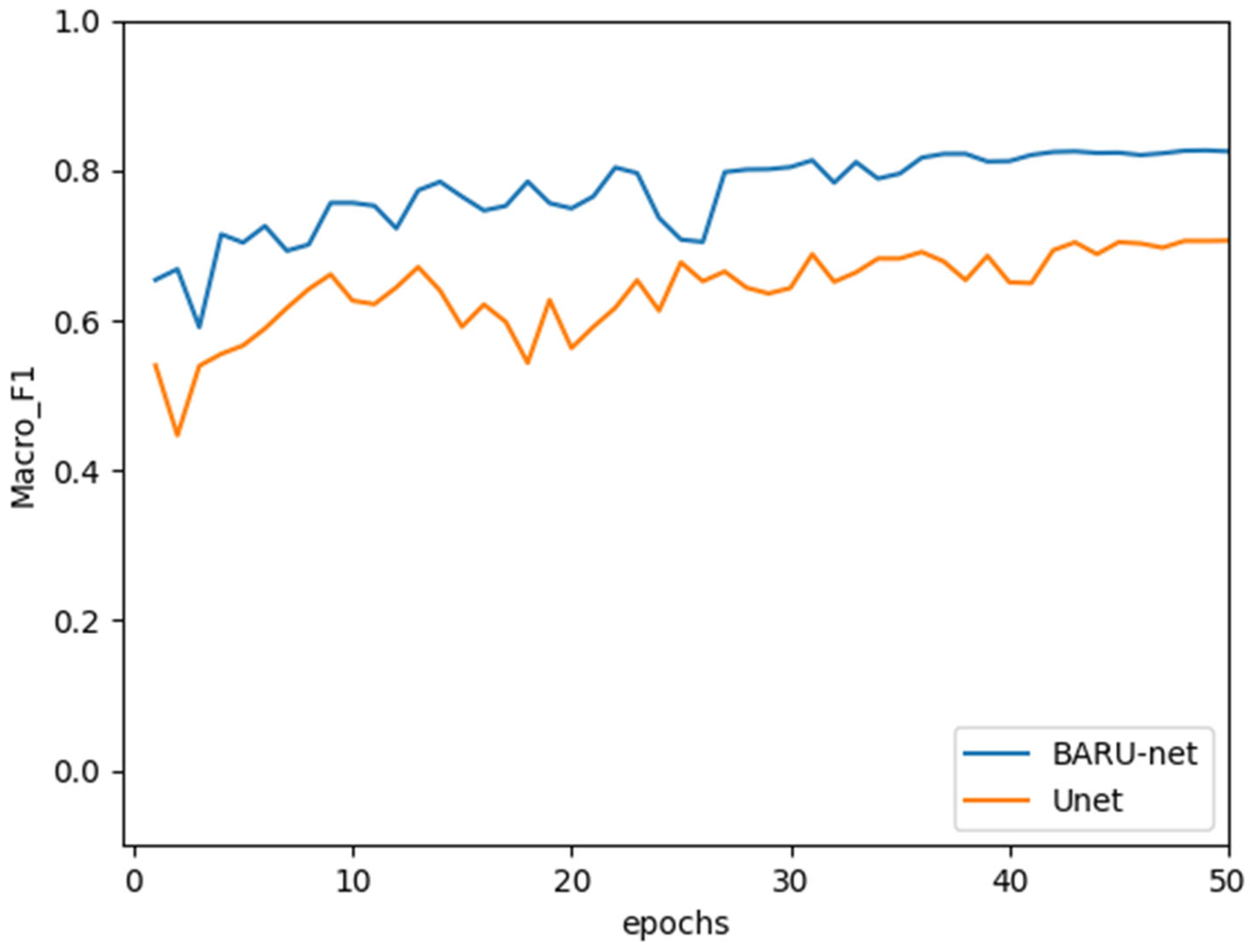

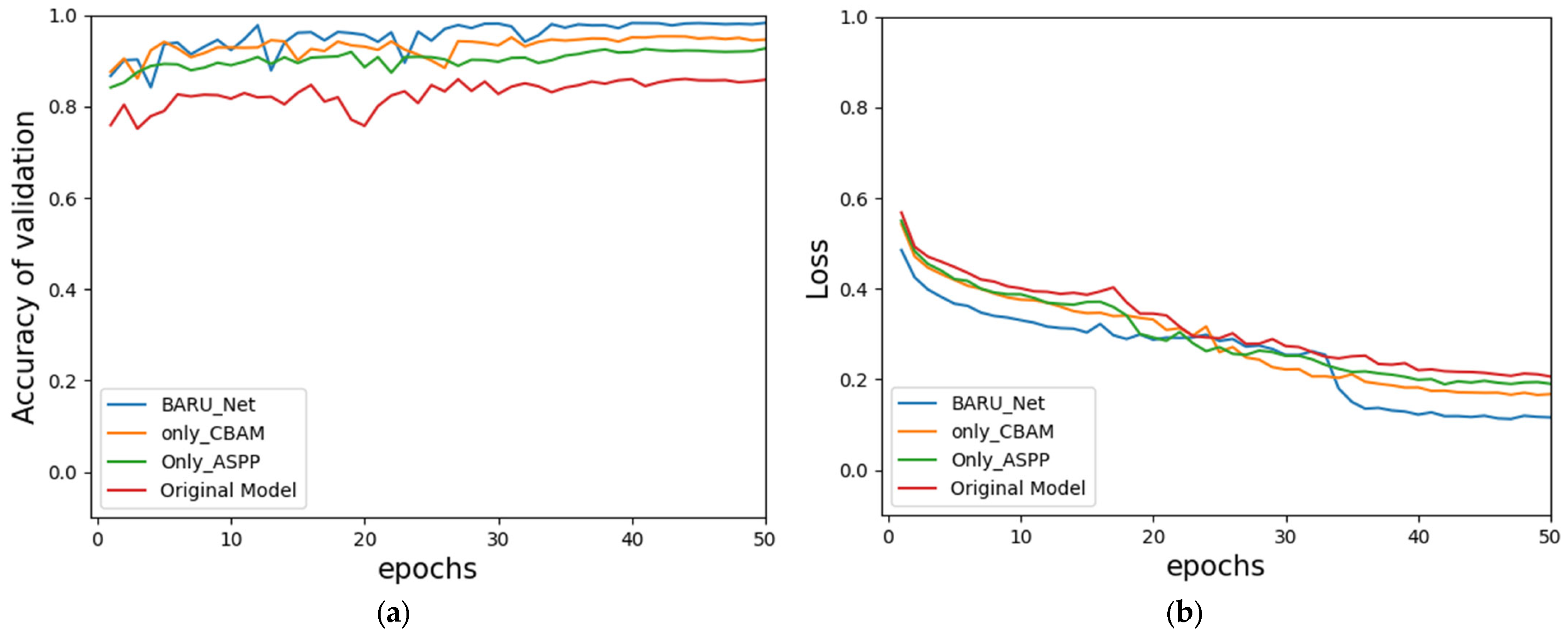
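The conclusions note that the dataset was enlarged by creating artificial defect images. This section does not describe the procedure. The sketch below assumes the simplest form: it darkens a straight horizontal run of pixels on a defect-free patch and writes the same pixels into a label mask with a chosen class id. `add_synthetic_scratch`, the 60-gray-level intensity drop and the class id are hypothetical.

```python
import random

def add_synthetic_scratch(image, class_id, length, intensity_drop=60, rng=None):
    """Draw a hypothetical horizontal scratch of `length` pixels at a random position.

    Returns (new_image, mask); mask is 0 for background and class_id on the scratch.
    The input image is not modified.
    """
    rng = rng or random.Random()
    h, w = len(image), len(image[0])
    if not 0 < length <= w:
        raise ValueError("length must be between 1 and the image width")
    y = rng.randrange(h)
    x0 = rng.randrange(w - length + 1)
    new_image = [row[:] for row in image]
    mask = [[0] * w for _ in range(h)]
    for x in range(x0, x0 + length):
        new_image[y][x] = max(0, image[y][x] - intensity_drop)  # darken, clamp at 0
        mask[y][x] = class_id
    return new_image, mask

# Usage on a hypothetical defect-free 6x8 patch with a fixed seed.
CLEAN = [[200] * 8 for _ in range(6)]
SCRATCHED, MASK = add_synthetic_scratch(CLEAN, class_id=2, length=5, rng=random.Random(0))
```

Because the image and the mask are produced together, every synthetic defect comes with a pixel-accurate label. This is the main appeal of synthesis when real defective samples are scarce.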

3.2.2. Model Training and Evaluation

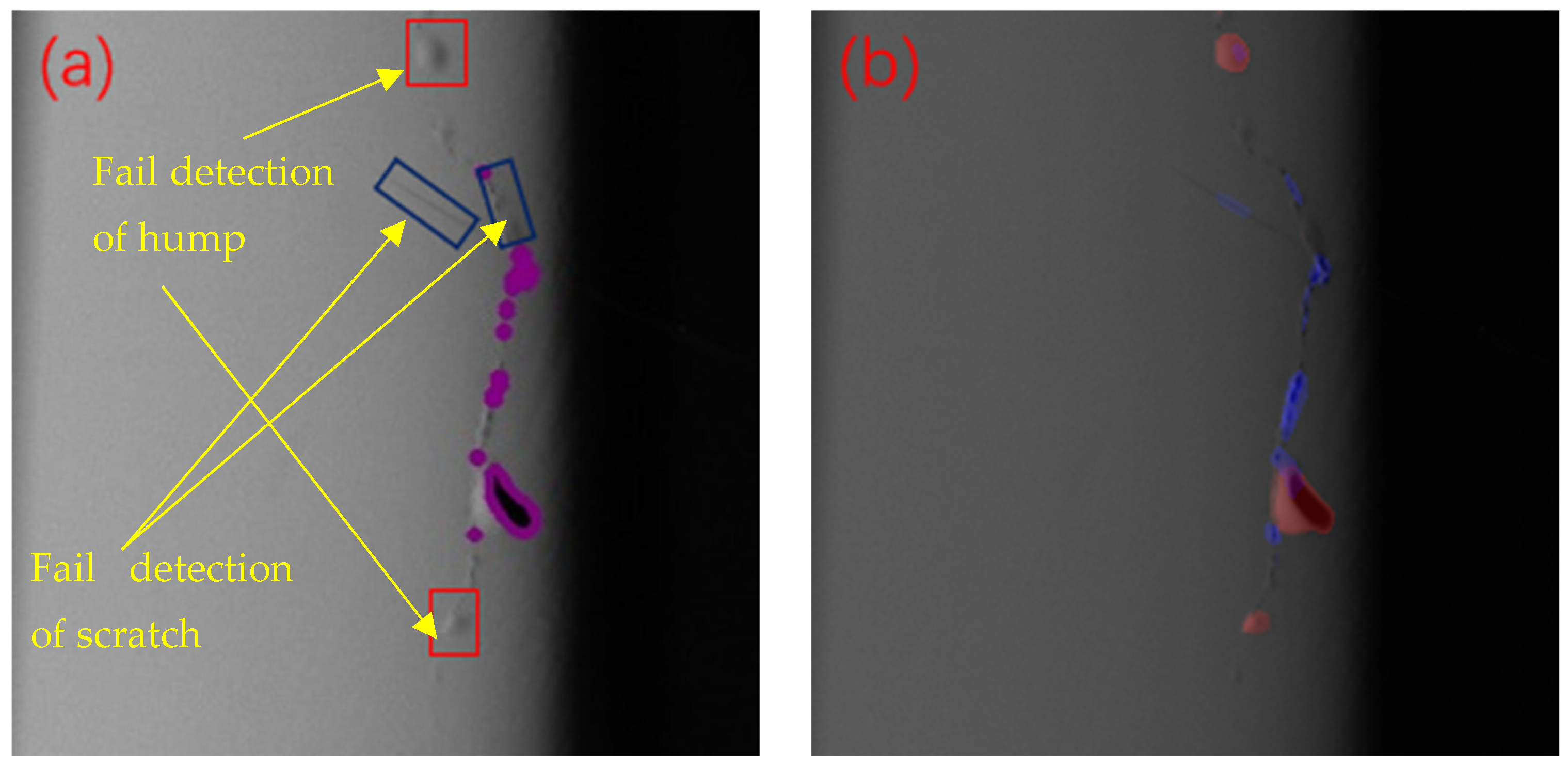

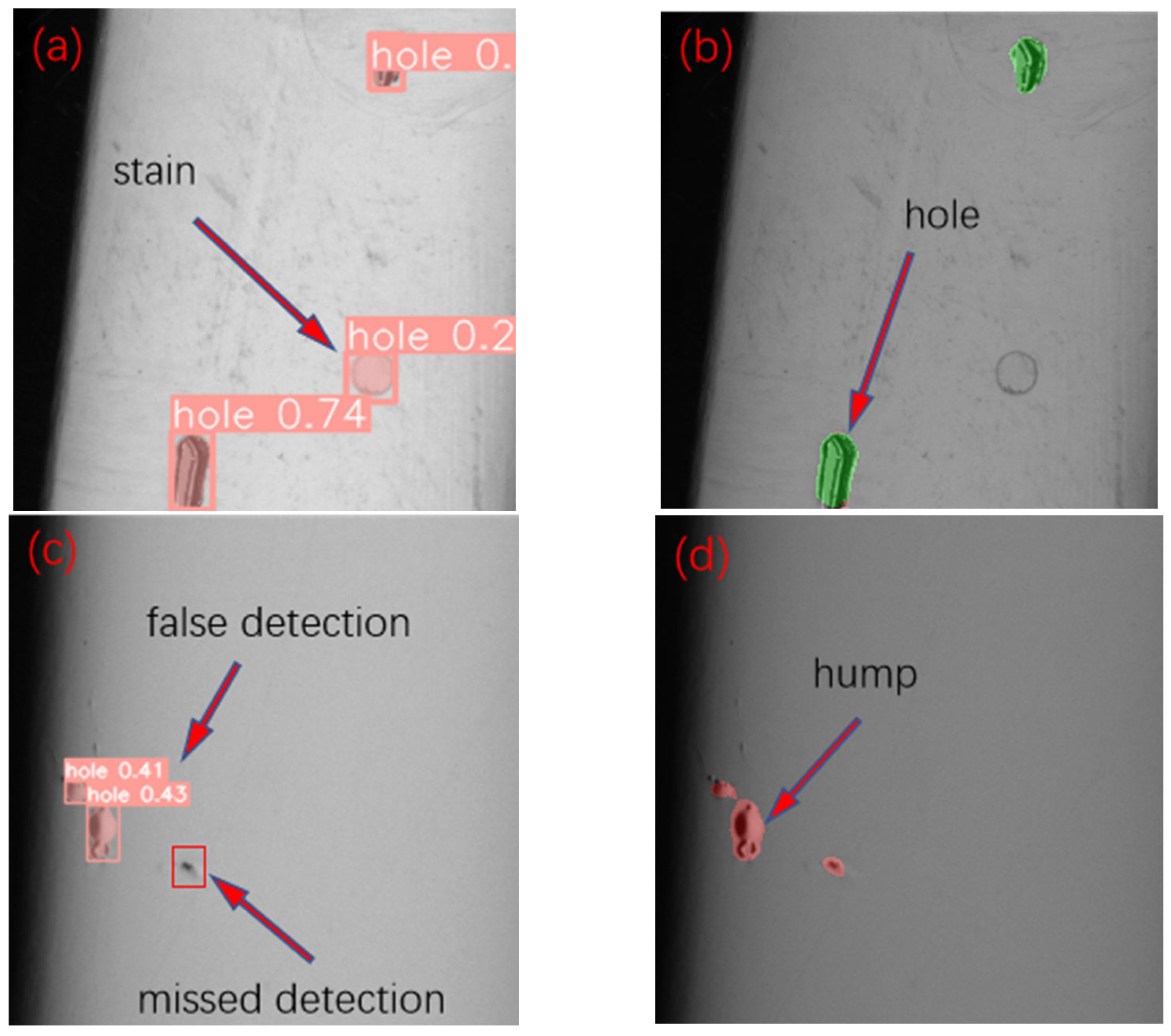
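The comparison table reports both accuracy and macro-F1. This section does not say whether these metrics were computed per pixel or per image. The sketch below computes both from a confusion matrix for any labelled samples, such as pixels. Accuracy is dominated by the majority class. Macro-F1 averages per-class F1 without weighting, so small defect classes count as much as the background. The toy labels are hypothetical.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[t][p] counts samples (e.g. pixels) of true class t predicted as class p."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm

def accuracy(cm):
    """Fraction of correctly classified samples (trace / total)."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))

def macro_f1(cm):
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    n = len(cm)
    scores = []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, true class differs
        fn = sum(cm[c]) - tp                       # true c, predicted class differs
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / n

# Toy labels: class 0 = background (majority), classes 1 and 2 = defects.
Y_TRUE = [0, 0, 0, 0, 0, 0, 1, 1, 2, 2]
Y_PRED = [0, 0, 0, 0, 0, 1, 1, 0, 2, 2]
CM = confusion_matrix(Y_TRUE, Y_PRED, 3)
```

Here accuracy is 0.80 and macro-F1 is 7/9 (about 0.78). Macro-F1 is lower because defect class 1 reaches an F1 of only 0.5. The same pattern, with accuracy above macro-F1, appears for every model in the comparison table.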

3.3. Defect Identification and Experimental Result Analysis

3.4. Model Deployment and Practical Application

- Convert the trained weight file into ONNX format and then into an OpenVINO Intermediate Representation (IR) file.

- Configure the OpenVINO, OpenCV, and libtorch environments in Qt.

- Call the PyTorch API in OpenVINO, load the converted ONNX and IR files through the OpenVINO interface, and encapsulate this process in an inference and prediction class.

- Use OpenCV to read the image to be predicted and pass it to the inference interface for prediction.

- Convert the prediction result into the image type used in Qt; the sorting mechanism is then triggered according to the defect detection result.
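The last deployment step turns the prediction into a sorting decision. The deployed system runs inside Qt with OpenVINO. The Python sketch below only illustrates the assumed post-processing logic. It takes the per-pixel argmax of the class scores, counts the pixels of each defect class, and rejects the part if any class reaches a minimum area. `sort_decision`, class 0 as background and the `min_pixels` threshold are hypothetical.

```python
def argmax_mask(scores):
    """scores: C x H x W class scores -> H x W mask of winning class indices."""
    c, h, w = len(scores), len(scores[0]), len(scores[0][0])
    return [[max(range(c), key=lambda k: scores[k][y][x]) for x in range(w)]
            for y in range(h)]

def sort_decision(mask, min_pixels=1, background=0):
    """Return ("reject" or "pass", {defect_class: pixel_count})."""
    areas = {}
    for row in mask:
        for cls in row:
            if cls != background:
                areas[cls] = areas.get(cls, 0) + 1
    verdict = "reject" if any(a >= min_pixels for a in areas.values()) else "pass"
    return verdict, areas

# Hypothetical 2-class output (background, defect) for a 2 x 3 image.
SCORES = [
    [[0.9, 0.8, 0.2], [0.9, 0.9, 0.9]],   # background scores
    [[0.1, 0.2, 0.8], [0.1, 0.1, 0.1]],   # defect scores
]
MASK = argmax_mask(SCORES)
```

With the default `min_pixels=1`, the single defect pixel rejects the part. Raising the threshold to 2 lets it pass. A minimum-area rule like this is one way to keep isolated misclassified pixels from triggering the sorter.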

4. Conclusions

- (1) First, we proposed a network for surface defect detection on highly reflective chrome-plated workpieces that combines dual attention mechanisms with semantic segmentation to detect three kinds of defects. Second, images captured under light sources at different angles were fused to avoid the effects of high reflectivity. In addition, the dataset was enlarged by creating artificial defect images to address the shortage of training data. Finally, a step-by-step training strategy was used to address the category imbalance caused by the defects being very small relative to the background.

- (2) The model achieved a detection accuracy of 98.3% with a detection time of around 800 ms on a single GPU. The Baru-Net and dual-angle light source approach could be applied to industrial scenarios with small samples and highly reflective surfaces.

- (3) In future work, the dataset will be expanded in both the number of images and the range of defect types to improve generalization. Methods based on transfer learning could also be used to improve model performance. In addition, the inspection device will be further optimized for irregularly shaped parts so that it meets practical needs.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Hyperparameter | Batch Size | Input Width (px) | Input Height (px) | Loss | Optimizer |
|---|---|---|---|---|---|
| Value | 4 | 96 | 96 | Cross-Entropy Loss | Adam |

| Model | Accuracy (%) | Macro-F1 (%) |
|---|---|---|
| UNet++ | 96.1 | 89.9 |
| UNet | 91.4 | 89.4 |
| AttentionUNet | 97.8 | 90.1 |
| Res_UNet | 89.3 | 88.4 |
| Baru-Net | 98.3 | 91.3 |
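The margins between Baru-Net and each baseline in the table above can be computed directly. The values below are copied from the table, and the helper name is illustrative.

```python
# (accuracy %, macro-F1 %) copied from the comparison table
RESULTS = {
    "UNet++": (96.1, 89.9),
    "UNet": (91.4, 89.4),
    "AttentionUNet": (97.8, 90.1),
    "Res_UNet": (89.3, 88.4),
    "Baru-Net": (98.3, 91.3),
}

def margins(results, ours="Baru-Net"):
    """Percentage-point lead of `ours` over every other model, rounded to 0.1."""
    acc0, f10 = results[ours]
    return {name: (round(acc0 - acc, 1), round(f10 - f1, 1))
            for name, (acc, f1) in results.items() if name != ours}
```

Baru-Net's smallest lead is over AttentionUNet (0.5 points in accuracy, 1.2 points in macro-F1). Its largest is over Res_UNet (9.0 and 2.9 points).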

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, J.; Zhang, B.; Jiang, Q.; Chen, X. Baru-Net: Surface Defects Detection of Highly Reflective Chrome-Plated Appearance Parts. Coatings 2023, 13, 1205. https://doi.org/10.3390/coatings13071205


Chicago/Turabian Style: Chen, Junying, Bin Zhang, Qingshan Jiang, and Xiuyu Chen. 2023. "Baru-Net: Surface Defects Detection of Highly Reflective Chrome-Plated Appearance Parts" Coatings 13, no. 7: 1205. https://doi.org/10.3390/coatings13071205
