An Image Generation Method of Unbalanced Ship Coating Defects Based on IGASEN-EMWGAN

Abstract

1. Introduction

- (1) To address the scarcity of historical labeled data and the class imbalance in ship coating defect databases, this paper proposes a novel hybrid defect image generation model, IGASEN-EMWGAN, which is used for the first time to generate new high-quality images of minority-class defects.

- (2) To alleviate the vanishing-gradient and mode-collapse problems of the original minimax mutation, and to provide smoother gradients for updating the generators and stabilizing training, we also employ a modified hinge mutation and a wasserstein_gp mutation. These inject genome mutation diversity into the training of the complementary generators and promote stable training between the generators and discriminators.

- (3) Vanilla EGANs lack a crossover mutation operator, are limited to a single specified environment (the discriminator), and easily get stuck in local optima. To remedy these deficiencies, we add a two-point crossover (TP) mutation operator to the proposed IGASEN-EMWGAN model to evolve the generators, which fosters diversity in both the generators and the discriminators.

- (4) Previously proposed methods struggle to balance the discriminative accuracy and the diversity of the base discriminators and are prone to falling into local optima. To overcome these limitations, IGASEN-EMWGAN integrates selective ensemble learning and genetic-algorithm-based simulated annealing (GA-based SA) into the training of multiple discriminators, allowing the model to escape the local optima caused by class imbalance.

- (5) Extensive experiments are conducted on a real imbalanced ship coating defect database. Compared with the baselines and several state-of-the-art GANs, the proposed model significantly increases the Inception Score (IS) and decreases the Fréchet Inception Distance (FID), which demonstrates its superior effectiveness.

2. Proposed Method

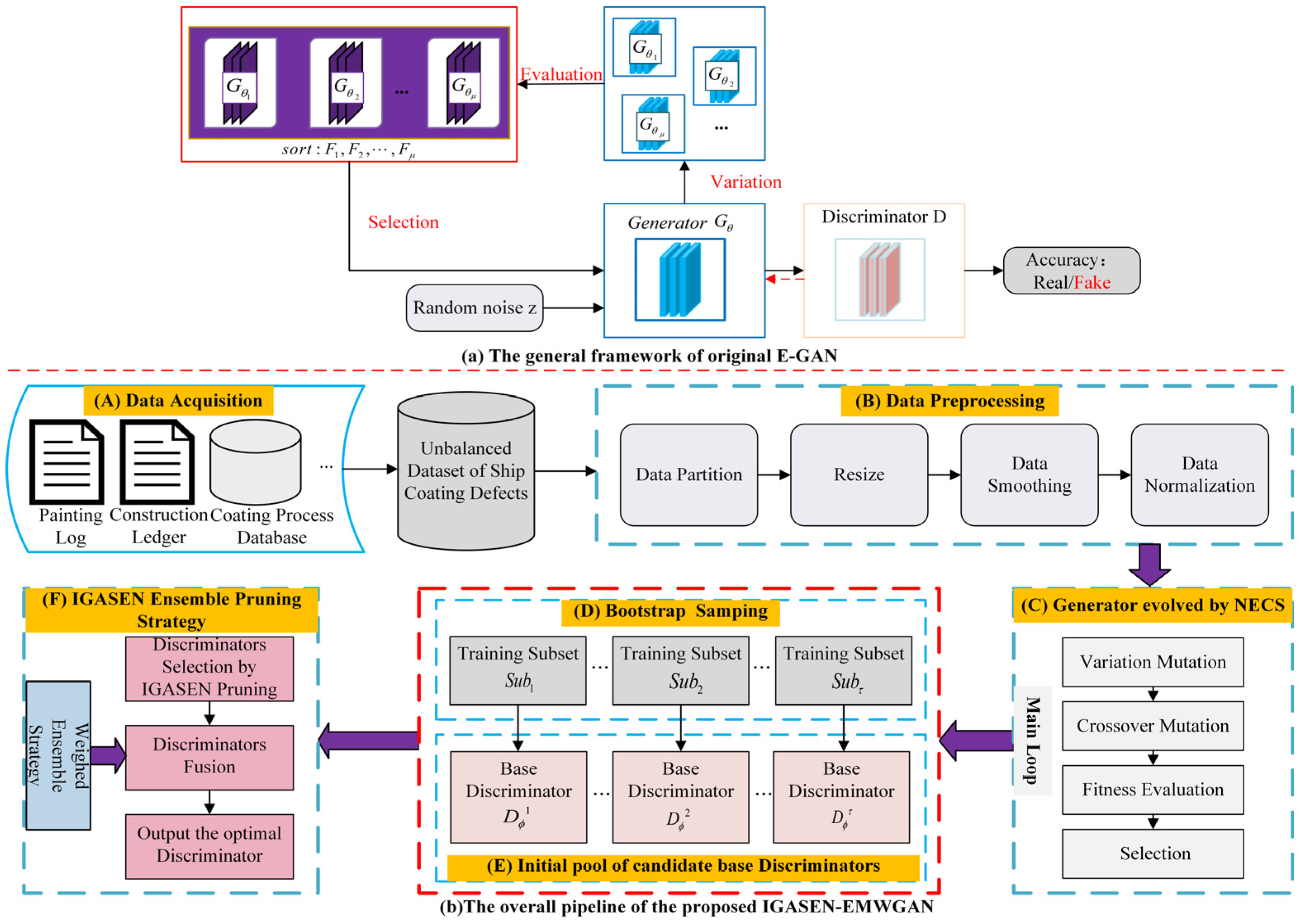

2.1. Evolutionary Generative Adversarial Networks

2.2. Generators in IGASEN-EMWGAN

2.2.1. Variation Mutations of Generators
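The motivation in contribution (2) can be made concrete with a small, hedged sketch. It compares the gradient the generator receives, with respect to the discriminator's raw output a on a generated sample, under the original minimax mutation (assuming D(G(z)) = sigmoid(a)) and under a Wasserstein-style objective −E[D(G(z))]. The paper's exact modified hinge and wasserstein_gp objectives (including the gradient-penalty term, which acts on the discriminator) are not reproduced, and all function names are illustrative.

```python
import math


def sigmoid(a: float) -> float:
    """Logistic function: maps a discriminator logit a to D = sigmoid(a)."""
    return 1.0 / (1.0 + math.exp(-a))


def minimax_generator_loss(a: float) -> float:
    """Original minimax mutation: the generator minimises log(1 - D(G(z))).

    log(1 - sigmoid(a)) is written as -log(1 + e^a), which avoids log(0)
    when sigmoid(a) would round to exactly 1.
    """
    return -math.log1p(math.exp(a))


def minimax_generator_grad(a: float) -> float:
    """d/da log(1 - sigmoid(a)) = -sigmoid(a); it vanishes when D confidently rejects the sample."""
    return -sigmoid(a)


def wasserstein_generator_loss(score: float) -> float:
    """Wasserstein-style mutation: the generator minimises -D(G(z)) for an unbounded critic score."""
    return -score


def wasserstein_generator_grad(score: float) -> float:
    """The gradient w.r.t. the critic score is constant, so it does not saturate."""
    return -1.0


if __name__ == "__main__":
    for a in (-10.0, -4.0, 0.0, 4.0):
        print(f"a={a:+5.1f}  minimax grad={minimax_generator_grad(a):+.5f}  "
              f"wasserstein grad={wasserstein_generator_grad(a):+.1f}")
```

When the discriminator confidently rejects a generated image (a much less than 0), the minimax gradient −sigmoid(a) is close to zero, which is the vanishing-gradient problem; the Wasserstein-style gradient stays at −1.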

2.2.2. Crossover Mutations of Generators
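A two-point crossover, as named in contribution (3), can be sketched on flattened genomes (for example, generator weight vectors or the binary chromosomes of base discriminators). This is the generic textbook operator under the assumption that both parents have the same length; how IGASEN-EMWGAN maps network parameters to genes is not specified here, and the helper name is hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple, TypeVar

Gene = TypeVar("Gene")


def two_point_crossover(
    parent_a: Sequence[Gene],
    parent_b: Sequence[Gene],
    rng: Optional[random.Random] = None,
) -> Tuple[List[Gene], List[Gene]]:
    """Two-point crossover: swap the segment [i, j) between two equal-length genomes.

    The cut points satisfy 1 <= i < j <= n - 1, so each child keeps its own
    parent's first and last genes and receives an interior segment from the other.
    """
    n = len(parent_a)
    if n != len(parent_b):
        raise ValueError("parents must have the same length")
    if n < 3:
        raise ValueError("two interior cut points need at least three genes")
    rng = rng or random.Random()
    i, j = sorted(rng.sample(range(1, n), 2))
    child_a = list(parent_a[:i]) + list(parent_b[i:j]) + list(parent_a[j:])
    child_b = list(parent_b[:i]) + list(parent_a[i:j]) + list(parent_b[j:])
    return child_a, child_b


if __name__ == "__main__":
    # Toy genomes standing in for flattened weights of two parent generators (hypothetical values).
    a, b = two_point_crossover([0.0] * 8, [1.0] * 8, random.Random(0))
    print(a)
    print(b)
```

Because only an interior segment is exchanged, the two children together still contain every gene of both parents, position by position.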

2.2.3. Evaluation of Generators

2.2.4. Selection of Generators
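The evaluation and selection steps (steps 28 and 29 of Algorithm 1) can be sketched with the fitness form of E-GAN [22], F = F_q + γF_d, where F_q is the mean discriminator score on generated images and F_d = −log‖∇‖ penalizes large discriminator-gradient norms. This assumes that Equation (7) follows that form: γ stands in for the generator-fitness hyperparameter listed in Algorithm 1, plain truncation selection replaces Equations (8) and (9), and the gradient norm is passed in precomputed rather than obtained from a real network.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class GeneratorOffspring:
    name: str                     # hypothetical label of a mutated generator
    fake_scores: Sequence[float]  # discriminator outputs D(G(z)) on a batch of generated images
    grad_norm: float              # norm of the discriminator-loss gradient after this update (> 0)


def fitness(child: GeneratorOffspring, gamma: float) -> float:
    """E-GAN-style fitness F = F_q + gamma * F_d (assumed form of Equation (7))."""
    f_quality = sum(child.fake_scores) / len(child.fake_scores)  # F_q = E_z[D(G(z))]
    f_diversity = -math.log(child.grad_norm)                     # F_d = -log ||grad||
    return f_quality + gamma * f_diversity


def select_parents(
    children: List[GeneratorOffspring], mu: int, gamma: float
) -> List[GeneratorOffspring]:
    """Truncation selection: keep the mu fittest offspring as the next generation's parents."""
    return sorted(children, key=lambda c: fitness(c, gamma), reverse=True)[:mu]
```

With γ = 0 only sample quality counts; increasing γ lets an offspring with slightly lower scores but a much smaller gradient norm (a sign of less mode-collapsing behaviour in E-GAN's reasoning) win selection.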

2.3. Discriminators in IGASEN-EMWGAN

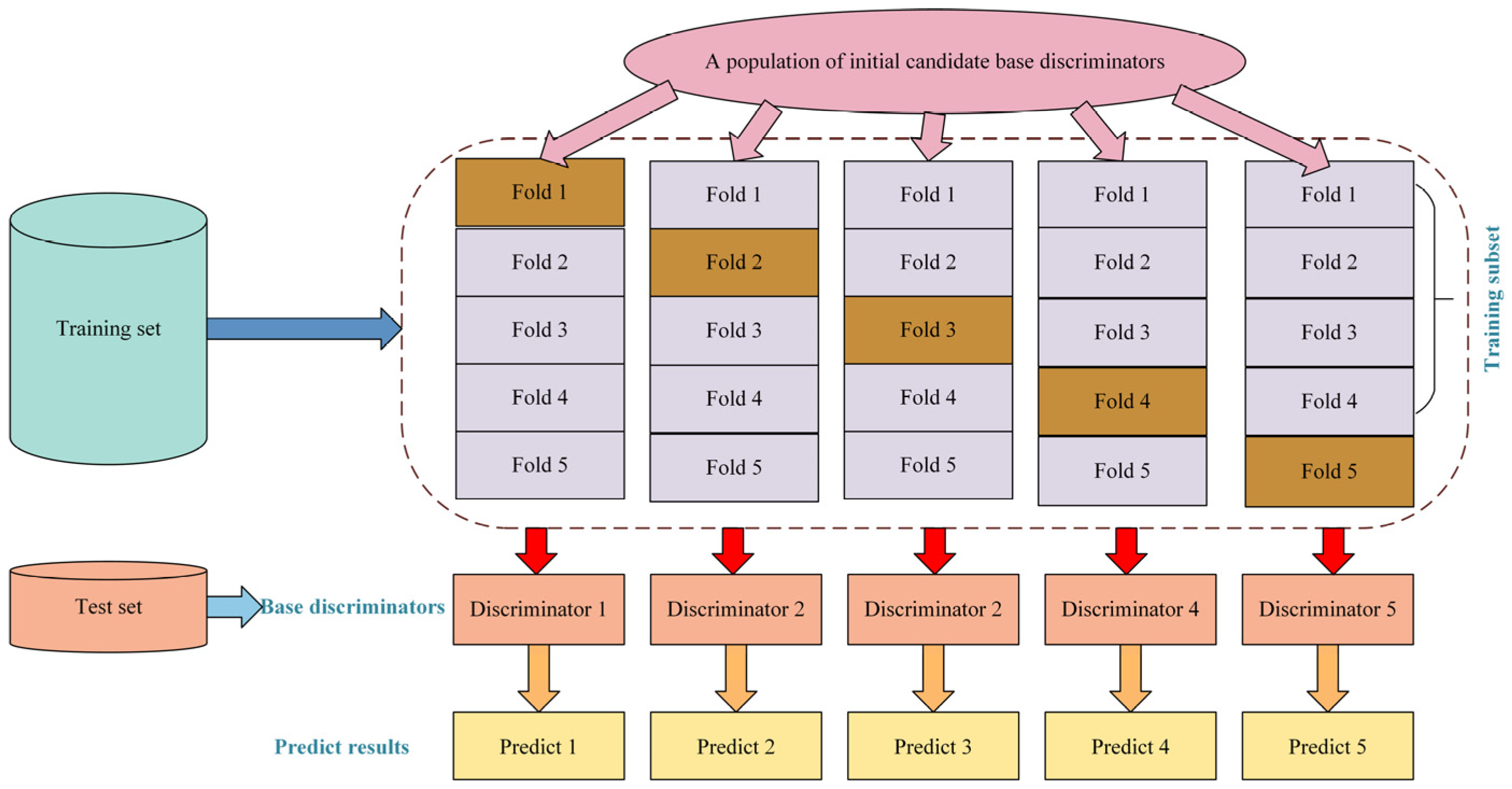

2.3.1. Generation of Base Discriminators
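Step 1 of Algorithm 1 builds diverse training subsets by bootstrap sampling. A minimal sketch, assuming each subset has the same size as the original training set and is drawn uniformly with replacement (the standard bootstrap); `n_subsets` plays the role of the number of base discriminators τ, and each subset would train one base discriminator. The function name is hypothetical.

```python
import random
from typing import List, Optional, Sequence, TypeVar

Sample = TypeVar("Sample")


def bootstrap_subsets(
    data: Sequence[Sample], n_subsets: int, rng: Optional[random.Random] = None
) -> List[List[Sample]]:
    """Draw n_subsets bootstrap replicates, each sampled with replacement and of size len(data)."""
    rng = rng or random.Random()
    return [rng.choices(data, k=len(data)) for _ in range(n_subsets)]


if __name__ == "__main__":
    subsets = bootstrap_subsets(list(range(1000)), n_subsets=4, rng=random.Random(0))
    for s in subsets:
        print(f"distinct samples: {len(set(s)) / len(s):.1%}")
```

Each bootstrap replicate contains roughly 1 − 1/e ≈ 63.2% of the distinct original samples, which is what makes the base discriminators differ from one another.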

2.3.2. Encoding of Base Discriminators
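Step 3 of Algorithm 1 encodes the candidate base discriminators as a binary chromosome, with gene k = 1 meaning that base discriminator k is an ensemble member. A hedged sketch of decoding and random initialization follows; the rule that a chromosome must select at least one member is an assumption added to avoid empty ensembles, and both helper names are hypothetical.

```python
import random
from typing import List, Optional, Sequence


def random_chromosome(tau: int, rng: Optional[random.Random] = None) -> List[int]:
    """Random binary chromosome over tau base discriminators with at least one gene set to 1."""
    rng = rng or random.Random()
    genes = [rng.randint(0, 1) for _ in range(tau)]
    if not any(genes):
        genes[rng.randrange(tau)] = 1  # assumption: an empty ensemble is not a valid combination
    return genes


def decode(chromosome: Sequence[int]) -> List[int]:
    """Indices of the base discriminators selected as ensemble members (gene == 1)."""
    return [k for k, gene in enumerate(chromosome) if gene == 1]


if __name__ == "__main__":
    c = random_chromosome(8, random.Random(1))
    print(c, "->", decode(c))
```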

2.3.3. Evolution of Base Discriminators

- ➀ Variation mutations of discriminators

- ➁ Crossover mutations of base discriminators

- ➂ Evaluation of base discriminators

- ➃ Selection of base discriminators
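For the evaluation step ➂, the fitness of a chromosome should reward both the accuracy and the diversity of the selected base discriminators, with δ as the trade-off hyperparameter. Since Equation (16) is not reproduced, the sketch below assumes fitness = mean member accuracy + δ × mean pairwise disagreement, where disagreement is computed from the 2×2 table of joint predictions of discriminator (i) and discriminator (j). The disagreement measure is one common diversity choice, not necessarily the paper's; predictions and labels are binary (1 = real, 0 = fake), and all names are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Sequence


def contingency(pred_i: Sequence[int], pred_j: Sequence[int]) -> Dict[str, int]:
    """2x2 counts of joint predictions: key 'nab' = #samples where i predicts a and j predicts b."""
    counts = {"n00": 0, "n01": 0, "n10": 0, "n11": 0}
    for a, b in zip(pred_i, pred_j):
        counts[f"n{int(a)}{int(b)}"] += 1
    return counts


def disagreement(pred_i: Sequence[int], pred_j: Sequence[int]) -> float:
    """Disagreement measure (n01 + n10) / n: share of samples the two discriminators label differently."""
    c = contingency(pred_i, pred_j)
    return (c["n01"] + c["n10"]) / sum(c.values())


def accuracy(pred: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of correct real/fake predictions."""
    return sum(int(p == y) for p, y in zip(pred, labels)) / len(labels)


def ensemble_fitness(chromosome: Sequence[int], predictions: List[Sequence[int]],
                     labels: Sequence[int], delta: float) -> float:
    """Assumed fitness: mean member accuracy + delta * mean pairwise disagreement."""
    members = [k for k, gene in enumerate(chromosome) if gene == 1]
    if not members:
        return float("-inf")  # an empty ensemble is never preferred
    acc = sum(accuracy(predictions[k], labels) for k in members) / len(members)
    pairs = list(combinations(members, 2))
    if pairs:
        div = sum(disagreement(predictions[i], predictions[j]) for i, j in pairs) / len(pairs)
    else:
        div = 0.0  # a single member has no pairwise diversity
    return acc + delta * div
```

Setting δ = 0 reduces the search to picking the most accurate members; a positive δ trades some accuracy for members that err on different samples.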

2.3.4. Update of Discriminators
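Steps 14 to 19 of Algorithm 1 accept a better base-discriminator combination outright and a worse one only with probability P. The sketch below assumes the standard Metropolis rule P = exp((F_new − F_cur)/T) for a maximized fitness F and geometric cooling T_k = T_0·α^k, using the defaults listed in the hyperparameter table (initial annealing temperature 100, annealing coefficient 0.9). The paper's exact expressions may differ, and the function names are hypothetical.

```python
import math
import random
from typing import Optional


def accept(new_fitness: float, current_fitness: float, temperature: float,
           rng: Optional[random.Random] = None) -> bool:
    """Metropolis acceptance for a maximised fitness."""
    if new_fitness >= current_fitness:
        return True  # better (or equal) candidates are always accepted
    rng = rng or random.Random()
    p = math.exp((new_fitness - current_fitness) / temperature)  # 0 <= p < 1 for a worse candidate
    return rng.random() < p


def temperature_at(step: int, t0: float = 100.0, alpha: float = 0.9) -> float:
    """Geometric cooling schedule T_k = t0 * alpha**k."""
    return t0 * alpha ** step


if __name__ == "__main__":
    rng = random.Random(0)
    for k in (0, 20, 60):
        t = temperature_at(k)
        rate = sum(accept(0.80, 0.85, t, rng) for _ in range(1000)) / 1000
        print(f"step {k:2d}: T = {t:8.4f}, acceptance of a worse candidate = {rate:.3f}")
```

At high temperature almost any candidate is accepted, which helps the search leave local optima; as T shrinks, the rule approaches greedy hill climbing.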

2.3.5. Combination of Discriminators
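For the combination step, the selected base discriminators must be fused into one output per sample. A minimal sketch, assuming simple averaging of the members' real/fake scores; majority or weighted voting would be equally plausible choices, and the helper name is hypothetical.

```python
from typing import List, Sequence


def combine_scores(member_scores: Sequence[Sequence[float]],
                   chromosome: Sequence[int]) -> List[float]:
    """Average the per-sample scores of the base discriminators whose gene is 1."""
    selected = [s for s, gene in zip(member_scores, chromosome) if gene == 1]
    if not selected:
        raise ValueError("chromosome selects no base discriminator")
    n_samples = len(selected[0])
    return [sum(s[k] for s in selected) / len(selected) for k in range(n_samples)]


if __name__ == "__main__":
    scores = [[0.9, 0.2], [0.7, 0.4], [0.1, 0.9]]  # 3 base discriminators x 2 samples
    print(combine_scores(scores, [1, 1, 0]))
```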

| Algorithm 1: IGASEN-EMWGAN |
|---|
| **Require:** mini-batch size ψ; number of iterations T; the generator; the discriminator; the number of discriminator updating steps per iteration; the number of parents for the generator; the number of parents for the base discriminators; the number of generator mutations M; the number of discriminator mutations N; the number of variation mutations; the number of crossover mutations; the spatial dimension of the noise z; the initial annealing temperature; the annealing coefficient α; the hyperparameter of the generator fitness function; the hyperparameter δ of the base-discriminator fitness function. |
| **Initialize** the base discriminators and their parameters, and the generators and their parameters. |
| 1: Construct multiple training subsets with a given degree of diversity from the training set by using the Bootstrap Sampling Algorithm. |
| 2: Train the initial homogeneous base discriminators independently on these training subsets, optimizing each one's parameters with the parallel optimization strategy. ▷ Discriminators Generation |
| 3: Encode the homogeneous candidate base discriminators as binary chromosomes in the genetic space, where 1 means that a base discriminator is selected as an ensemble member and 0 means that it is not. ▷ Discriminators Encoding |
| 4: **for** t = 1, …, T **do** |
| 5: **for** i = 1, …, **do** |
| 6: **for** n = 1, …, τ **do** ▷ Discriminators Evolution |
| 7: Randomly sample a mini-batch from the real ship coating defect training set. |
| 8: Randomly sample a mini-batch of noise and use it to generate a batch of samples. |
| 9: Generate N discriminator offspring via Equation (12) or Equation (13). ▷ D-Variation Mutation |
| 10: Generate N discriminator offspring via D-crossover mutation. ▷ D-Crossover Mutation |
| 11: Calculate the fitness of each of the N evolved discriminator offspring via Equation (16). ▷ D-Evaluation |
| 12: Sort the offspring by fitness and take the fittest one as the new candidate. ▷ D-Selection |
| 13: **end for** |
| 14: **if** the fitness of the new candidate > the fitness of the current combination **then** ▷ D-Update |
| 15: Update the current combination to the new candidate. |
| 16: **else** |
| 17: |
| 18: |
| 19: Update the current combination to the new candidate with a probability P, where 0 ≤ P ≤ 1. |
| 20: **end if** |
| 21: **end for** |
| 22: **end for** |
| 23: Output the remaining filtered combination of base discriminators. ▷ D-Combination |
| 24: **for** j = 1, …, **do** ▷ Generators Evolution |
| 25: Randomly sample a mini-batch of noise. |
| 26: Generate M generator offspring via variation mutation, updating the generator via Equations (1) and (4). ▷ G-Variation Mutation |
| 27: Generate M generator offspring via G-crossover mutation. ▷ G-Crossover Mutation |
| 28: Calculate the fitness of each of the M evolved generator offspring via Equation (7). ▷ G-Evaluation |
| 29: Sort the offspring by fitness and select the fittest as the parents of the next generation of generators via Equations (8) and (9). ▷ G-Selection |
| 30: **end for** |
| 31: **end for** |
| 32: Print (New Structure) |
| 33: **end** |

3. Experimental Results and Analysis

3.1. Dataset Setup

3.2. Evaluation Metrics
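IS and FID are standard generative-model metrics. As a sketch (not the authors' evaluation code), IS can be computed from per-image class probabilities p(y|x) as IS = exp(E_x[KL(p(y|x) ‖ p(y))]), where p(y) is the average of p(y|x) over the generated images. In practice p(y|x) comes from a pretrained Inception-v3 network, which is not included; the function below accepts any list of probability rows, and its name is hypothetical.

```python
import math
from typing import Sequence


def inception_score(probs: Sequence[Sequence[float]], eps: float = 1e-12) -> float:
    """IS = exp(mean over images of KL(p(y|x) || p(y))), with p(y) the mean of the rows."""
    n_images = len(probs)
    n_classes = len(probs[0])
    marginal = [sum(p[c] for p in probs) / n_images for c in range(n_classes)]
    kl_total = 0.0
    for p in probs:
        # Terms with p(y|x) = 0 contribute nothing to the KL divergence.
        kl_total += sum(pc * (math.log(pc + eps) - math.log(marginal[c] + eps))
                        for c, pc in enumerate(p) if pc > 0)
    return math.exp(kl_total / n_images)


if __name__ == "__main__":
    confident_and_diverse = [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]
    print(inception_score(confident_and_diverse))  # close to the number of classes
```

FID instead compares Gaussian fits to Inception features of real (r) and generated (g) images, FID = ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_rΣ_g)^{1/2}). Higher IS and lower FID indicate better samples, matching the arrows in the results table.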

3.3. Implementation Details

3.4. Experimental Results and Analysis

3.4.1. Hyperparameters Analysis

- (1) Balance weight coefficient λ

- (2) Number of base discriminators τ

- (3) Initial temperature T and annealing coefficient α

3.4.2. Comparisons with Different Existing GANs in Generative Performance

3.4.3. Ablation Study

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Salem, M.H.; Li, Y.; Liu, Z.; Abdel Tawab, A.M. A Transfer Learning and Optimized CNN Based Maritime Vessel Classification System. Appl. Sci. 2023, 13, 1912.

- Dev, A.K.; Saha, M. Analysis of Hull Coating Renewal in Ship Repairing. J. Ship Prod. Des. 2017, 33, 197–211.

- Bu, H.; Yuan, X.; Niu, J.; Yu, W.; Ji, X.; Lyu, H.; Zhou, H. Ship Painting Process Design Based on IDBSACN-RF. Coatings 2021, 11, 1458.

- Cho, D.-Y.; Swan, S.; Kim, D.; Cha, J.-H.; Ruy, W.-S.; Choi, H.-S.; Kim, T.-S. Development of paint area estimation software for ship compartments and structures. Int. J. Nav. Archit. Ocean Eng. 2016, 8, 198–208.

- Bu, H.; Ji, X.; Zhang, J.; Lyu, H.; Yuan, X.; Pang, B.; Zhou, H. A Knowledge Acquisition Method of Ship Coating Defects Based on IHQGA-RS. Coatings 2022, 12, 292.

- Xin, Y.; Henan, B.; Jianmin, N.; Wenjuan, Y.; Honggen, Z.; Xingyu, J.; Pengfei, Y. Coating matching recommendation based on improved fuzzy comprehensive evaluation and collaborative filtering algorithm. Sci. Rep. 2021, 11, 14035.

- Davies, J.; Truong-Ba, H.; Cholette, M.E.; Will, G. Optimal inspections and maintenance planning for anti-corrosion coating failure on ships using non-homogeneous Poisson Processes. Ocean Eng. 2021, 238, 109695.

- Bu, H.; Ji, X.; Yuan, X.; Han, Z.; Li, L.; Yan, Z. Calculation of coating consumption quota for ship painting: A CS-GBRT approach. J. Coat. Technol. Res. 2020, 17, 1597–1607.

- Liang, K.; Liu, F.; Zhang, Y. Household Power Consumption Prediction Method Based on Selective Ensemble Learning. IEEE Access 2020, 8, 95657–95666.

- Barua, S.; Islam, M.; Yao, X.; Murase, K. MWMOTE-Majority Weighted Minority Oversampling Technique for Imbalanced Data Set Learning. IEEE Trans. Knowl. Data Eng. 2014, 26, 405–425.

- Ji, X.; Wang, J.; Li, Y.; Sun, Q.; Jin, S.; Quek, T.Q.S. Data-Limited Modulation Classification with a CVAE-Enhanced Learning Model. IEEE Commun. Lett. 2020, 24, 2191–2195.

- Garciarena, U.; Mendiburu, A.; Santana, R. Analysis of the transferability and robustness of GANs evolved for Pareto set approximations. Neural Netw. 2020, 132, 281–296.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.C.; Bengio, Y. Generative Adversarial Networks. arXiv 2014.

- Schellenberg, M.; Gröhl, J.; Dreher, K.K.; Nölke, J.-H.; Holzwarth, N.; Tizabi, M.D.; Seitel, A.; Maier-Hein, L. Photoacoustic image synthesis with generative adversarial networks. Photoacoustics 2022, 28, 100402.

- Bharti, V.; Biswas, B. EMOCGAN: A novel evolutionary multiobjective cyclic generative adversarial network and its application to unpaired image translation. Neural Comput. Appl. 2021, 34, 21433–21447.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.P.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. arXiv 2016.

- Kavousi-Fard, A.; Dabbaghjamanesh, M.; Jin, T.; Su, W.; Roustaei, M. An Evolutionary Deep Learning-Based Anomaly Detection Model for Securing Vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4478–4486.

- Zou, L.; Zhang, H.; Wang, C.; Wu, F.; Gu, F. MW-ACGAN: Generating Multiscale High-Resolution SAR Images for Ship Detection. Sensors 2020, 20, 6673.

- Fiore, U.; De Santis, A.; Perla, F.; Zanetti, P.; Palmieri, F. Using generative adversarial networks for improving classification effectiveness in credit card fraud detection. Inf. Sci. 2017, 479, 448–455.

- Li, J.; Zhang, J.; Gong, X.; Lu, S. Evolutionary Generative Adversarial Networks with Crossover Based Knowledge Distillation. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.

- Chen, S.; Wang, W.; Xia, B.; You, X.; Peng, Q.; Cao, Z.; Ding, W. CDE-GAN: Cooperative Dual Evolution-Based Generative Adversarial Network. IEEE Trans. Evol. Comput. 2021, 25, 986–1000.

- Wang, C.; Xu, C.; Yao, X.; Tao, D. Evolutionary Generative Adversarial Networks. IEEE Trans. Evol. Comput. 2019, 23, 921–934.

- Xiao, Y.; Li, W.; Qiang, S.; Li, Q.; Xiao, H.; Liu, Y. A Rumor & Anti-Rumor Propagation Model Based on Data Enhancement and Evolutionary Game. IEEE Trans. Emerg. Top. Comput. 2022, 10, 690–703.

- Chen, M.; Yu, R.; Xu, S.; Luo, Y.; Yu, Z. An Improved Algorithm for Solving Scheduling Problems by Combining Generative Adversarial Network with Evolutionary Algorithms. In Proceedings of the Computer Science and Application Engineering, Sanya, China, 22–24 October 2019.

- Erivaldo, F.F.; Gary, G.Y. Pruning of generative adversarial neural networks for medical imaging diagnostics with evolution strategy. Inf. Sci. 2021, 558, 91–102.

- Zheng, Y.-J.; Gao, C.-C.; Huang, Y.-J.; Sheng, W.-G.; Wang, Z. Evolutionary ensemble generative adversarial learning for identifying terrorists among high-speed rail passengers. Expert Syst. Appl. 2022, 261, 118430.

- Meng, A.; Chen, S.; Ou, Z.; Xiao, J.; Zhang, J.; Zhang, Z.; Liang, R.; Zhang, Z.; Xian, Z.; Wang, C.; et al. A novel few-shot learning approach for wind power prediction applying secondary evolutionary generative adversarial network. Energy 2022, 210, 125276.

- He, J.; Zhu, Q.; Zhang, K.; Yu, P.; Tang, J. An evolvable adversarial network with gradient penalty for COVID-19 infection segmentation. Appl. Soft Comput. 2021, 113, 107947.

- Cai, X.; Lan, Y.; Zhang, Z.; Wen, J.; Cui, Z.; Zhang, W. A Many-objective Optimization based Federal Deep Generation Model for Enhancing Data Processing Capability in IOT. IEEE Trans. Ind. Inform. 2021, 19, 561–569.

- Hazra, D.; Kim, M.-R.; Byun, Y.-C. Generative Adversarial Networks for Creating Synthetic Nucleic Acid Sequences of Cat Genome. Int. J. Mol. Sci. 2022, 23, 3701.

- Talas, L.; Fennell, J.G.; Kjernsmo, K.; Cuthill, I.C.; Scott-Samuel, N.E.; Baddeley, R.J. CamoGAN: Evolving optimum camouflage with Generative Adversarial Networks. Methods Ecol. Evol. 2020, 11, 240–247.

- Chen, Y.; Wang, Y.; Kirschen, D.S.; Zhang, B. Model-Free Renewable Scenario Generation Using Generative Adversarial Networks. IEEE Trans. Power Syst. 2018, 33, 3265–3275.

- Han, C.; Wang, J. Face Image Inpainting With Evolutionary Generators. IEEE Signal Process. Lett. 2021, 28, 190–193.

- Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A.C. Improved training of wasserstein GANs. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 5769–5779.

- Yin, H.; Ou, Z.; Zhu, Z.; Xu, X.; Fan, J.; Meng, A. A novel asexual-reproduction evolutionary neural network for wind power prediction based on generative adversarial networks. Energy Convers. Manag. 2021, 247, 114714.

- Gong, X.; Jia, L.; Li, N. Research on mobile traffic data augmentation methods based on SA-ACGAN-GN. Math. Biosci. Eng. 2022, 19, 11512–11532.

- Li, Y.-H.; Aslam, M.S.; Harfiya, L.N.; Chang, C.-C. Conditional Wasserstein Generative Adversarial Networks for Rebalancing Iris Image Datasets:Regular Section. IEICE Trans. Inf. Syst. 2021, E104.D, 1450–1458.

- Liang, Z.; Xu, X.; Liu, L.; Tu, Y.; Zhu, Z. Evolutionary Many-Task Optimization Based on Multisource Knowledge Transfer. IEEE Trans. Evol. Comput. 2022, 26, 319–333.

- Hakimi, D.; Oyewola, D.O.; Yahaya, Y.; Bolarin, G. Comparative Analysis of Genetic Crossover Operators in Knapsack Problem. J. Appl. Sci. Environ. Manag. 2016, 20, 593.

- Xue, Y.; Zhu, H.; Liang, J.; Słowik, A. Adaptive crossover operator based multi-objective binary genetic algorithm for feature selection in classification. Knowl.-Based Syst. 2021, 227, 107218.

- Hu, B.; Xiao, H.; Yang, N.; Jin, H.; Wang, L. A hybrid approach based on double roulette wheel selection and quadratic programming for cardinality constrained portfolio optimization. Concurr. Comput. Pract. Exp. 2021, 34, e6818.

- Sun, Y.; Xue, B.; Zhang, M.; Yen, G.G. Completely Automated CNN Architecture Design Based on Blocks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 1242–1254.

- Kramer, O. Machine Learning for Evolution Strategies; Springer: Berlin/Heidelberg, Germany, 2016; Volume 20.

- Rezaei, M.; Näppi, J.J.; Lippert, C.; Meinel, C.; Yoshida, H. Generative multi-adversarial network for striking the right balance in abdominal image segmentation. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1847–1858.

- Durugkar, I.P.; Gemp, I.; Mahadevan, S. Generative Multi-Adversarial Networks. arXiv 2016.

- Albuquerque, I.; Monteiro, J.; Doan, T.; Considine, B.; Falk, T.; Mitliagkas, I. Multi-objective training of Generative Adversarial Networks with multiple discriminators. arXiv 2019.

- Hao, J.; Wang, C.; Yang, G.; Gao, Z.; Zhang, J.; Zhang, H. Annealing Genetic GAN for Imbalanced Web Data Learning. IEEE Trans. Multimed. 2022, 24, 1164–1174.

- Hao, J.; Wang, C.; Zhang, H.; Yang, G. Annealing genetic GAN for minority oversampling. In Proceedings of the 31st British Machine Vision (Virtual) Conference 2020, Virtual Event, 7–10 September 2020; pp. 243.1–243.12.

- Yun, J.P.; Shin, W.C.; Koo, G.; Kim, M.S.; Lee, C.; Lee, S.J. Automated defect inspection system for metal surfaces based on deep learning and data augmentation. J. Manuf. Syst. 2020, 55, 317–324.

- Yang, Y.; Hu, Y.; Zhang, X.; Wang, S. Two-Stage Selective Ensemble of CNN via Deep Tree Training for Medical Image Classification. IEEE Trans. Cybern. 2021, 52, 9194–9207.

- Xu, Y.; Yu, Z.; Cao, W.; Chen, C.L.P. Adaptive Dense Ensemble Model for Text Classification. IEEE Trans. Cybern. 2022, 52, 7513–7526.

- Ma, T.; Yu, T.; Wu, X.; Cao, J.; Al-Abdulkarim, A.; Al-Dhelaan, A.; Al-Dhelaan, M. Multiple clustering and selecting algorithms with combining strategy for selective clustering ensemble. Soft Comput. A Fusion Found. Methodol. Appl. 2020, 20, 15129–15141.

- Zhang, H.; Wu, S.; Zhang, X.; Han, L.; Zhang, Z. Slope stability prediction method based on the margin distance minimization selective ensemble. Catena 2022, 212, 106055.

- La Fé-Perdomo, I.; Ramos-Grez, J.A.; Jeria, I.; Guerra, C.; Barrionuevo, G.O. Comparative analysis and experimental validation of statistical and machine learning-based regressors for modeling the surface roughness and mechanical properties of 316L stainless steel specimens produced by selective laser melting. J. Manuf. Process. 2022, 80, 666–682.

- Naqvi, F.B.; Shad, M.Y. Seeking a balance between population diversity and premature convergence for real-coded genetic algorithms with crossover operator. Evol. Intel. 2022, 15, 2651–2666.

- Zhou, J.; Wu, Z.; Xue, Y.; Li, M.; Zhou, D. Network unknown-threat detection based on a generative adversarial network and evolutionary algorithm. Int. J. Intell. Syst. 2021, 37, 4307–4328.

- Zhang, H.; Wu, S.; Zhang, Z. Prediction of Uniaxial Compressive Strength of Rock Via Genetic Algorithm—Selective Ensemble Learning. Nat. Resour. Res. 2022, 31, 1721–1737.

- Xu, Y.; Yu, Z.; Cao, W.; Chen, C.L.P.; You, J.J. Adaptive Classifier Ensemble Method Based on Spatial Perception for High-Dimensional Data Classification. IEEE Trans. Knowl. Data Eng. 2019, 33, 2847–2862.

- Amgad, M.M.; Enrique, O. Selective ensemble of classifiers trained on selective samples. Neurocomputing 2022, 482, 197–211.

- Lin, C.; Chen, W.; Qiu, C.; Wu, Y.; Krishnan, S.; Zou, Q. LibD3C: Ensemble classifiers with a clustering and dynamic selection strategy. Neurocomputing 2014, 123, 424–435.

- Wolfe, K.; Seaman, M.A. The influence of data characteristics on interrater agreement among visual analysts. J. Appl. Behav. Anal. 2023.

- Wei, L.; Wan, S.; Guo, J.; Wong, K.K. A novel hierarchical selective ensemble classifier with bioinformatics application. Artif. Intell. Med. 2017, 83, 82–90.

- Chaofan, D.; Xiaoping, L.P. Classification of Imbalanced Electrocardiosignal Data using Convolutional Neural Network. Comput. Methods Programs Biomed. 2022, 214, 106483.

- Douzas, G.; Bacao, F. Effective data generation for imbalanced learning using conditional generative adversarial networks. Expert Syst. Appl. 2018, 91, 464–471.

- Liu, Z.; Wang, J. CatGAN: Category-Aware Generative Adversarial Networks with Hierarchical Evolutionary Learning for Category Text Generation. Proc. AAAI Conf. Artif. Intell. 2020, 34, 8425–8432.

- Wu, M. Heuristic parallel selective ensemble algorithm based on clustering and improved simulated annealing. J. Supercomput. 2018, 76, 3702–3712.

- Xue, Y.; Tong, W.; Neri, F.; Zhang, Y. PEGANs: Phased Evolutionary Generative Adversarial Networks with Self-Attention Module. Mathematics 2022, 10, 2792.

- Kim, D.; Joo, D.; Kim, J. TiVGAN: Text to Image to Video Generation with Step-by-Step Evolutionary Generator. IEEE Access 2020, 8, 153113–153122.

- Radford, A.; Metz, L. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv 2015.

- Wu, Z.; He, C.; Yang, L.; Kuang, F. Attentive evolutionary generative adversarial network. Appl. Intell. 2020, 51, 1747–1761.

- Mao, X.; Li, Q.; Xie, H.; Lau, R.Y.K.; Wang, Z.; Smolley, S.P. Least Squares Generative Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2813–2821.

- Lin, Q.; Fang, Z.; Chen, Y.; Tan, K.C.; Li, Y. Evolutionary Architectural Search for Generative Adversarial Networks. IEEE Trans. Emerg. Top. Comput. Intell. 2022, 6, 783–794.

| | Discriminator (j): Predict (0) | Discriminator (j): Predict (1) |
|---|---|---|
| Discriminator (i): Predict (0) | | |
| Discriminator (i): Predict (1) | | |

| Category of Defects | Sample Number (N) | Imbalance Ratio (IR) |
|---|---|---|
| Holiday coating | 5 | 107.2 |
| Sagging | 86 | 6.23 |
| Orange skin | 536 | 1 |
| Cracking | 77 | 6.96 |
| Exudation | 71 | 7.54 |
| Wrinkling | 63 | 8.51 |
| Bitty appearance | 13 | 41.23 |
| Blistering | 108 | 4.96 |
| Pinholing | 26 | 20.62 |
| Delamination | 8 | 67 |
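The IR column is consistent with IR = N_max / N, where N_max = 536 is the size of the majority class (orange skin). A short check that recomputes the ratios from the sample counts in the table; the dictionary and function names are illustrative.

```python
from typing import Dict

# Sample counts per defect category, copied from the dataset table.
DEFECT_COUNTS: Dict[str, int] = {
    "Holiday coating": 5,
    "Sagging": 86,
    "Orange skin": 536,
    "Cracking": 77,
    "Exudation": 71,
    "Wrinkling": 63,
    "Bitty appearance": 13,
    "Blistering": 108,
    "Pinholing": 26,
    "Delamination": 8,
}


def imbalance_ratios(counts: Dict[str, int]) -> Dict[str, float]:
    """IR of each class = size of the largest (majority) class / size of that class."""
    n_max = max(counts.values())
    return {name: n_max / n for name, n in counts.items()}


if __name__ == "__main__":
    ratios = imbalance_ratios(DEFECT_COUNTS)
    for name, ir in sorted(ratios.items(), key=lambda kv: -kv[1]):
        print(f"{name:17s} IR = {ir:.2f}")
```

Recomputing gives 7.55 for exudation (536/71 ≈ 7.549), where the table lists 7.54; every other listed ratio matches when rounded to two decimals.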

| Generator Network | Discriminator Network |
|---|---|
| Input: random noise (100 dimensions) and class label C (10 dimensions) | Input: images, (128,128,3) |
| [layer 1] Embedding, Dense, BN; Reshape to (8,8,1024) and Concatenate; ReLU | [layer 1] Conv2D (64,64,128), stride = 2; Dropout; LeakyReLU |
| [layer 2] Conv2DT (8,8,1024), stride = 2; ReLU | [layer 2] Conv2D (32,32,256), stride = 2; BN; Dropout; LeakyReLU |
| [layer 3] Conv2DT (16,16,512), stride = 2; ReLU | [layer 3] Conv2D (16,16,512), stride = 2; BN; Dropout; LeakyReLU |
| [layer 4] Conv2DT (32,32,256), stride = 2; ReLU | [layer 4] Conv2D (8,8,1024), stride = 2; BN; Dropout; LeakyReLU |
| [layer 5] Conv2DT (64,64,128), stride = 2; ReLU | [layer 5] Flatten (1,1,1), Dropout; Dense; Sigmoid/Least Squares |
| [layer 6] Conv2DT (128,128,3), stride = 2; Tanh | [layer 6] Flatten (1,1,1), Dropout; Dense; Softmax |
| Output: generated images, (128,128,3) | Output: real/fake probability; sample class label C |

| Hyperparameters | Default Values |
|---|---|
| Number of iterations | 2000 |
| Population size | 100 |
| Updating steps of discriminator per iteration | 2 |
| Number of variation mutations | 2 |
| Number of crossover mutations | 1 |
| Probability of variation mutations | 0.1 |
| Probability of crossover mutation | 0.9 |
| Mini-batch size | 64 |
| Learning rate of generator | 0.0004 |
| Learning rate of discriminator | 0.0001 |
| Dropout | 0.5 |
| Slope of LeakyReLU | 0.2 |
| Optimizer | RMSProp |
| Initial learning rate of optimizer | 0.0002 |
| Initial annealing temperature | 100 |
| Annealing coefficient | 0.9 |

| Methods | Inception Score (IS) ↑ | Fréchet Inception Distance (FID) ↓ |
|---|---|---|
| Real data | 11.68 ± 0.14 | 7.6 |
| *Standard CNN* | | |
| DCGAN [69] | 6.52 ± 0.09 | 36.3 |
| WGAN-GP [34] | 6.61 ± 0.33 | 39.59 |
| E-GAN [22] | 6.93 ± 0.08 | 35.3 |
| AEGAN [70] | 6.43 ± 0.52 | 49.68 |
| LSGAN [71] | - | 44.16 |
| EASGAN [72] | 7.48 ± 0.06 | 21.94 |
| E-GAN-GP (μ = 1) [22] | 7.18 ± 0.05 | 32.8 |
| E-GAN-GP (μ = 2) [22] | 7.25 ± 0.11 | 31.4 |
| E-GAN-GP (μ = 4) [22] | 7.33 ± 0.08 | 29.5 |
| E-GAN-GP (μ = 8) [22] | 7.35 ± 0.07 | 27.4 |
| (ours) IGASEN-EMWGAN (τ = 1) | 7.11 ± 0.06 | 32.3 |
| (ours) IGASEN-EMWGAN (τ = 2) | 7.18 ± 0.11 | 29.7 |
| (ours) IGASEN-EMWGAN (τ = 4) | 7.31 ± 0.09 | 27.8 |
| (ours) IGASEN-EMWGAN (τ = 8) | 7.56 ± 0.07 | 26.3 |
| (ours) IGASEN-EMWGAN-GP (τ = 1) | 7.21 ± 0.05 | 30.2 |
| (ours) IGASEN-EMWGAN-GP (τ = 2) | 7.33 ± 0.07 | 28.9 |
| (ours) IGASEN-EMWGAN-GP (τ = 4) | 7.68 ± 0.10 | 26.5 |
| (ours) IGASEN-EMWGAN-GP (τ = 8) | 7.73 ± 0.06 | 25.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bu, H.; Hu, C.; Yuan, X.; Ji, X.; Lyu, H.; Zhou, H. An Image Generation Method of Unbalanced Ship Coating Defects Based on IGASEN-EMWGAN. Coatings 2023, 13, 620. https://doi.org/10.3390/coatings13030620
