1. Introduction

Human health is closely affected by the environment, particularly the quality and safety of food. According to Acosta et al. [1], the global consumption of veterinary antibiotics in livestock and poultry farming was estimated to have reached 110,777 tons in 2019 and is projected to increase to 143,481 tons by 2040. This reflects the rising demand for animal-based food products and the need for effective control of infectious diseases in livestock populations. To address these needs, the use of veterinary antibiotics has been steadily increasing [2,3]. To mitigate the risk of antimicrobial resistance, many countries have restricted the use of veterinary antibiotics to therapeutic purposes only; for example, Europe and the USA have banned their use as growth promoters [4]. Veterinary antibiotics play a critical role in treating and preventing animal diseases, mitigating malnutrition, and regulating physiological, psychological, and biological functions to support animal health [5]. However, residues of these veterinary antibiotics in meat products pose considerable risks to human health. These risks include antimicrobial resistance [6], carcinogenic effects, acute toxicity, allergic reactions, and disruption of the intestinal microbiota [7,8,9,10].

Colloidal gold immunoassay (CGIA) is a rapid detection method based on antigen–antibody immune reactions and is widely used in veterinary antibiotic residue detection, food safety testing, and environmental monitoring [11,12,13,14]. Due to its fast detection speed, ease of operation, and minimal equipment requirements, CGIA has become the preferred method for on-site rapid screening. Antigen–antibody test cards, which are commonly employed in CGIA, are extensively utilized in medical diagnostics, animal disease prevention, food safety control, and environmental monitoring, owing to their portability, efficiency, and rapid response. For example, CGIA-based antigen–antibody test cards can be used to screen for residues of antibiotics such as sulfonamides, trimethoprim, and florfenicol in livestock. However, CGIA generally exhibits a relatively high detection limit, which restricts its suitability for high-precision detection scenarios [15]. Sun et al. [16] reported that the competitive indirect enzyme-linked immunosorbent assay (CI-ELISA) achieved a limit of detection (LOD) of 0.35 ng/mL for ofloxacin, whereas the corresponding CGIA method showed a higher LOD of 10 ng/mL. Moreover, the interpretation of conventional antigen–antibody test cards primarily relies on visual assessment, which is prone to subjectivity and susceptible to variations in ambient lighting conditions, leading to inconsistent results and compromised reliability. In contrast, image-based methods can keep the imaging environment and lighting consistent. With trained models, they also reduce subjectivity in visual interpretation; for example, they can minimize differences among individual readers, including those with color weakness.

In recent years, researchers worldwide have actively explored the application of image processing and artificial intelligence technologies in the interpretation of diagnostic test cards, aiming to enhance detection accuracy and efficiency. To address the challenge of missed detections caused by the difficulty of visually identifying weakly positive results, Miikki et al. [17] constructed a windowed macro-imaging system based on Raspberry Pi to collect a dataset, and analyzed the RGB images of the test regions to interpret results. However, such RGB image-based analytical approaches often fail to account for the complexity of real-world conditions such as variable lighting and camera angles, and as a result, the system’s robustness is limited. In the frameworks described in [18,19], users are required to capture test card images using a smartphone or tablet and upload them to a remote server for processing. While this architecture supports centralized computation, it suffers from significant limitations in scenarios with unstable network connectivity, potentially leading to delays or failures in result delivery and thereby compromising real-time performance and reliability. Turbé et al. [20] categorized the annotated images into two classes based on the type of HIV rapid diagnostic test (RDT), and compared the performance of support vector machines (SVMs) [21] and convolutional neural networks (CNNs). Their study identified MobileNetV2 as the most suitable model, demonstrating that deep learning can substantially improve the reliability and generalizability of on-site rapid testing. Lee et al. [22] proposed a deep learning-assisted diagnostic system named TIMESAVER. Unlike conventional binary classification methods, this study categorized test results into five classes based on real-world data, enabling more comprehensive analysis of false positives and false negatives. To achieve precise classification, Dastagir et al. [23] employed YOLOv8 to detect and localize the membrane region of the test card, which serves as the region of interest (ROI) for subsequent interpretation. The extracted ROI images were then converted to grayscale and resized before being input into a custom-designed CNN model. These studies demonstrate the value of deep learning and image processing for interpreting antigen–antibody test cards. They mainly focus on single-card analysis. As a result, they lack the capability for high-throughput interpretation, which limits their overall detection efficiency. In addition, due to the scarcity of standardized benchmark datasets for test cards, most existing studies have relied on bespoke collections.
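The ROI preprocessing step described above (crop the detected membrane region, convert it to grayscale, and resize it before classification) can be illustrated with a minimal, dependency-free sketch. This is not the implementation used in [23]: the bounding box is a hypothetical stand-in for a detector output, and the BT.601 luminance weights, nearest-neighbor resizing, and all function names are assumptions made for illustration.

```python
def crop(image, box):
    """Crop an H x W image of (r, g, b) tuples to box = (x0, y0, x1, y1), end-exclusive."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


def to_grayscale(image):
    """Convert RGB pixels to luminance (ITU-R BT.601 weights, an assumed choice)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in image]


def resize_nearest(gray, out_h, out_w):
    """Nearest-neighbor resize of a 2D grayscale image to out_h x out_w."""
    in_h, in_w = len(gray), len(gray[0])
    return [
        [gray[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def preprocess_roi(image, box, size=(4, 4)):
    """Crop -> grayscale -> resize; `box` stands in for a detector's ROI output."""
    roi = crop(image, box)
    gray = to_grayscale(roi)
    return resize_nearest(gray, size[0], size[1])
```

For instance, `preprocess_roi(image, (2, 1, 6, 5), size=(2, 2))` returns a 2 × 2 grayscale patch that a downstream classifier could consume; in a real pipeline the box would come from the detection model and the target size from the classifier's input layer.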

To enable more scalable and automated test card interpretation, a growing number of researchers have turned to object detection algorithms. Rather than classifying an entire image, object detection has the computer both locate and identify the region of interest (the result discrimination area) within an image that may contain multiple cards. These algorithms can automatically locate and classify the test regions within an image. This capability is crucial for high-throughput analysis, where many cards need to be processed quickly and consistently. Currently, object detection algorithms can be broadly categorized into two types: two-stage and one-stage approaches. Two-stage algorithms generate possible regions of interest and then analyze each region. This is similar to a clinician identifying suspicious areas on an X-ray and then examining them in detail. While this process usually achieves higher accuracy, it requires more computing time. Representative models in this category include the Region-based Convolutional Neural Network (R-CNN) series, which includes R-CNN [24], Fast R-CNN [25], and Faster R-CNN [26]. R-CNN generates many possible regions in the image and then classifies each region separately. This method is accurate, but very time-consuming. Fast R-CNN improves efficiency by extracting features from the image and classifying candidate boxes within the same network. Faster R-CNN further enhances the process by allowing the computer to automatically suggest the most likely regions, instead of checking every possible area. As a result, the algorithm becomes much faster while still maintaining high accuracy. In contrast, one-stage algorithms analyze the entire image in a single step, directly predicting the location and category of objects. This design is simpler and much faster, which makes it particularly suitable for real-time or embedded applications. Representative examples include the Single-Shot MultiBox Detector (SSD) [27] and the You Only Look Once (YOLO) series [28,29,30,31,32,33,34,35,36]. Notably, YOLOv9 introduces several improvements that make it faster and more efficient: it can better capture both large and small details in an image, while keeping the model size and computing requirements relatively low. Building on this, YOLOv10 further refines the network structure and training methods, enhancing both accuracy and speed. Xie et al. [37] proposed YOLO-ACE, an improved YOLOv10 model for vehicle and pedestrian detection in autonomous driving scenarios. They introduced a novel double distillation strategy to transfer knowledge from a stronger teacher model to a student model. In addition, they redesigned the network structure to improve both detection speed and accuracy.

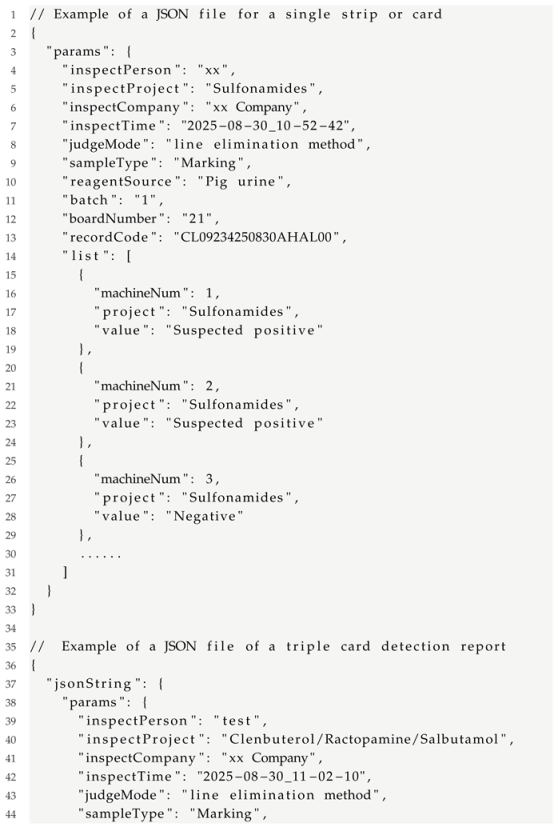

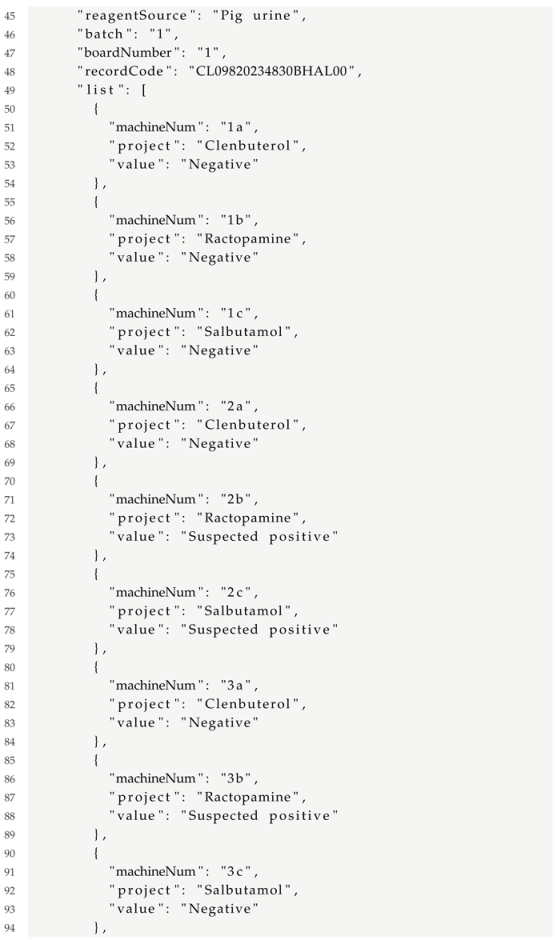

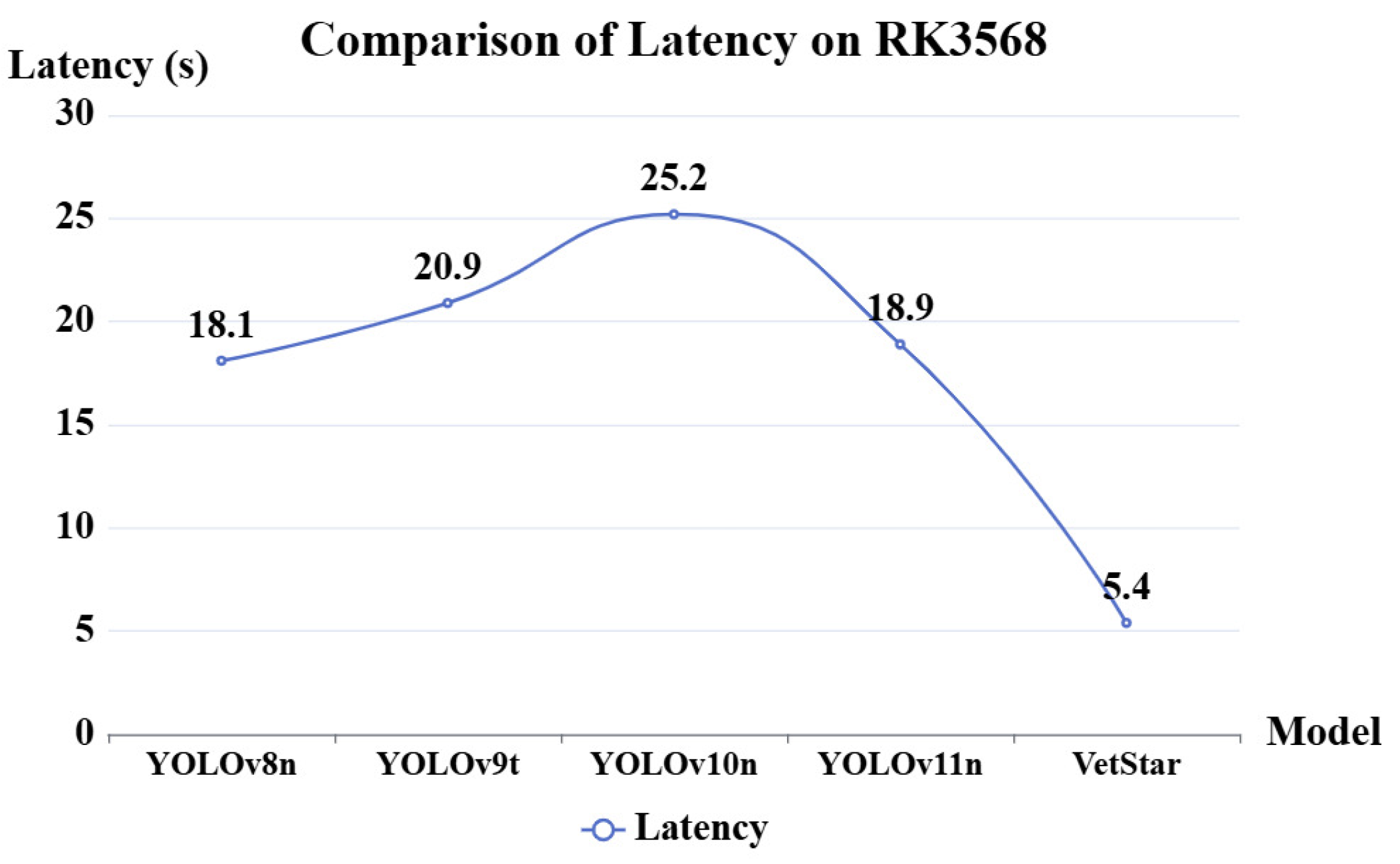

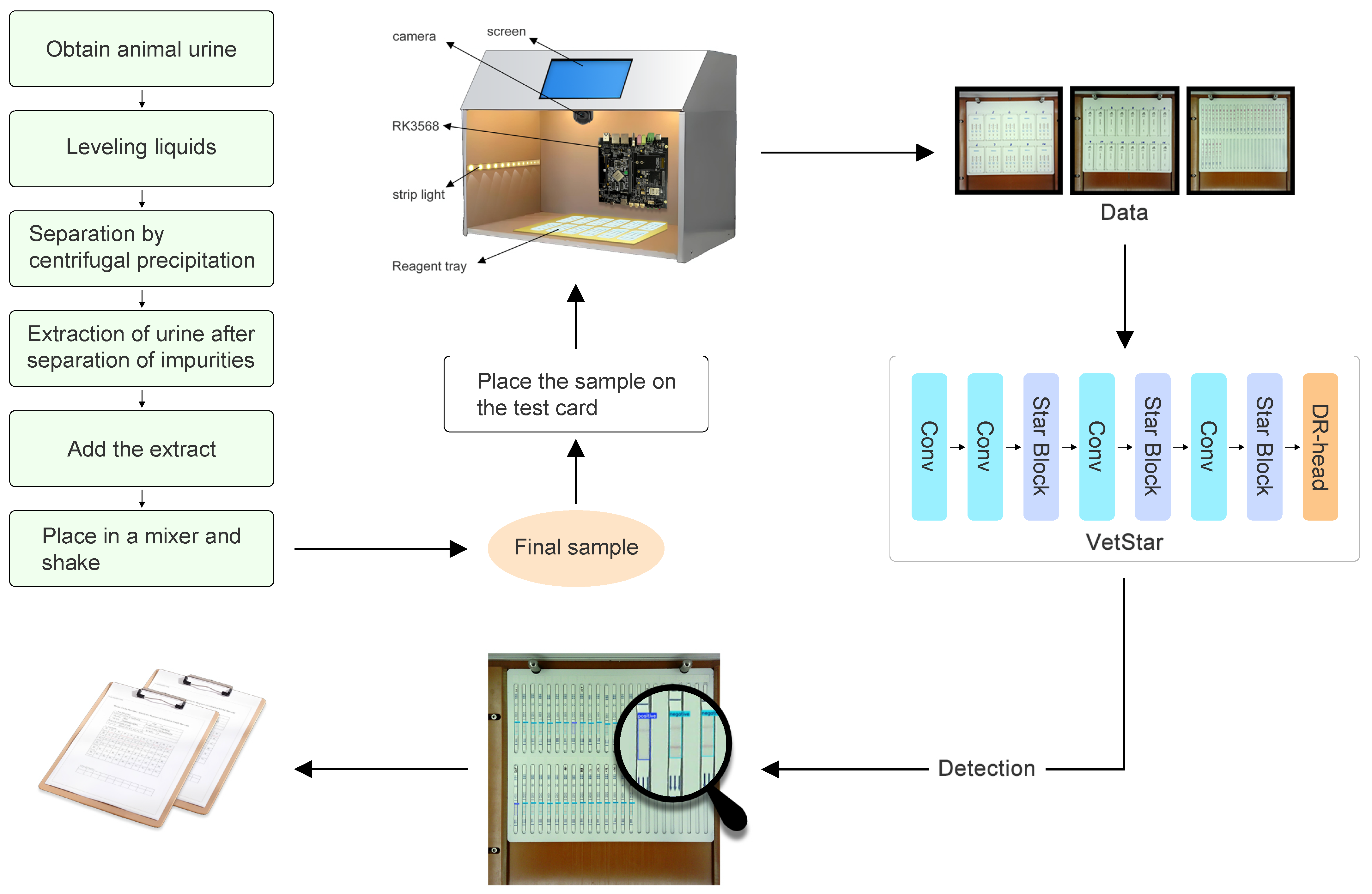

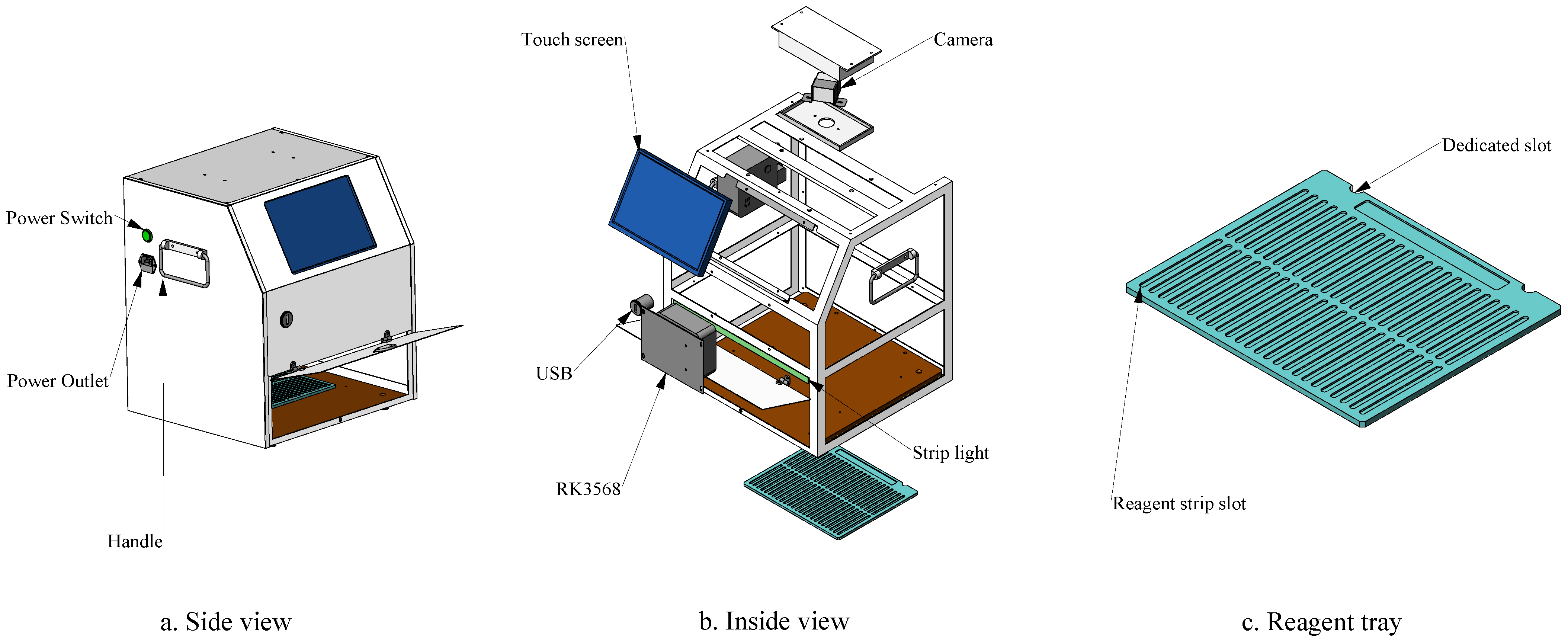

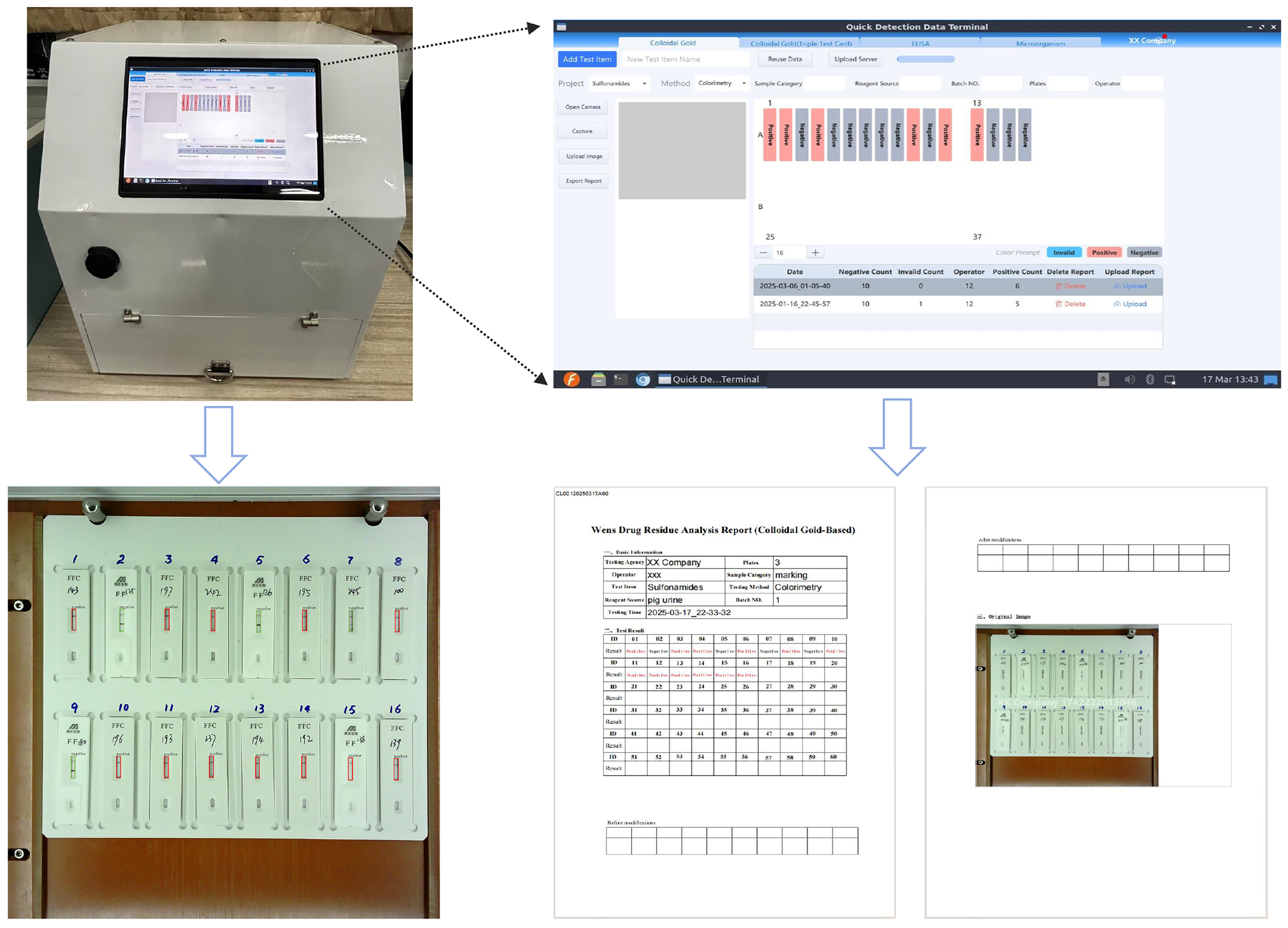

To overcome the limitations of manual interpretation and CGIA’s inherent shortcomings, we developed VetStar, a portable, cost-effective system for veterinary antibiotic residue detection designed for primary food safety laboratories. These laboratories, which are often located in remote areas with unstable network connectivity, require devices capable of offline, high-throughput analysis. Therefore, the system must support real-time image acquisition, result interpretation, and reporting without reliance on cloud-based services. The core challenge of this study is to efficiently perform object detection of antigen–antibody test cards on resource-constrained embedded devices. Because of the real-time demands of on-site processing, a high detection speed is essential. While the YOLO series of algorithms has achieved a good balance between speed and accuracy, our tests using YOLOv8n, YOLOv9t, YOLOv10n, and YOLOv11n to detect antigen–antibody test card images on the RK3568 platform showed that the average inference time exceeded 20 s, which is beyond the acceptable limit for practical use.

Lightweight deep learning models could potentially improve inference speed without significantly sacrificing accuracy. For example, Ma et al. [38] demonstrated that the star operation can map inputs to a high-dimensional nonlinear feature space and proposed the StarNet structure as a compact and effective deep learning network. Ding et al. [39] introduced the RepVGG architecture, which employs different topological structures during the training and inference stages. Through reparameterization, it simplifies into a single-branch structure at the inference stage, thus improving inference speed. Additionally, Yang et al. [40] proposed a logit-based knowledge distillation method, Bridging Cross-task Protocol Inconsistency Knowledge Distillation (BCKD); here, distillation refers to a machine learning technique and is unrelated to chemical distillation. This method enhances distillation by mapping classification logits into multiple binary classification tasks, allowing the student model to achieve higher accuracy without increasing its parameter count. Building on these prior advancements, we designed a small, lightweight, and efficient object detection model tailored for interpreting antigen–antibody test cards.
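The idea of treating classification logits as multiple binary classification tasks can be illustrated with a simplified sketch. The code below is not the BCKD loss from [40]. It only shows the general principle, under the assumption that each class logit is passed through a sigmoid and that the student is trained to match the teacher's per-class score with a binary cross-entropy term. The function names and the unweighted averaging are illustrative choices.

```python
import math


def sigmoid(z):
    """Logistic function mapping a logit to a score in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def binary_distill_loss(student_logits, teacher_logits, eps=1e-12):
    """Mean binary cross-entropy between per-class sigmoid scores.

    Each class is treated as an independent binary task: the teacher's
    sigmoid score is the soft target for the student's sigmoid score.
    """
    total = 0.0
    for s, t in zip(student_logits, teacher_logits):
        p_s, p_t = sigmoid(s), sigmoid(t)
        total += -(p_t * math.log(p_s + eps) + (1.0 - p_t) * math.log(1.0 - p_s + eps))
    return total / len(student_logits)
```

For a fixed teacher, this loss is smallest when the student reproduces the teacher's per-class scores, and it grows as the student's logits move away from them. Because the loss acts only on the outputs during training, it adds no parameters to the student model.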

The main contributions of this paper are as follows:

We develop a portable, AI-powered veterinary antibiotic residue detection system that enables rapid, high-throughput interpretation of antigen–antibody test cards, and is designed for both laboratory and field use to improve efficiency and reduce subjectivity.

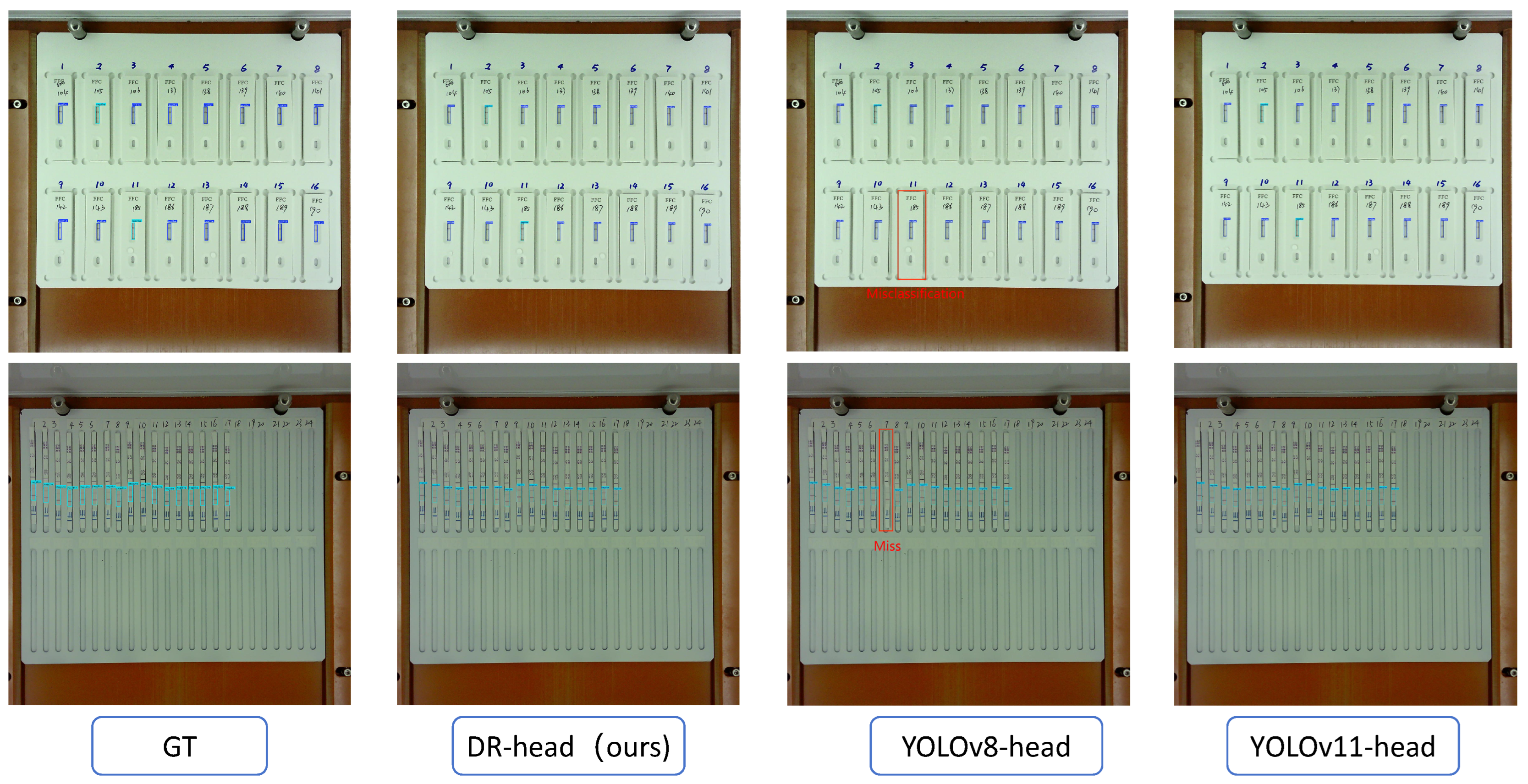

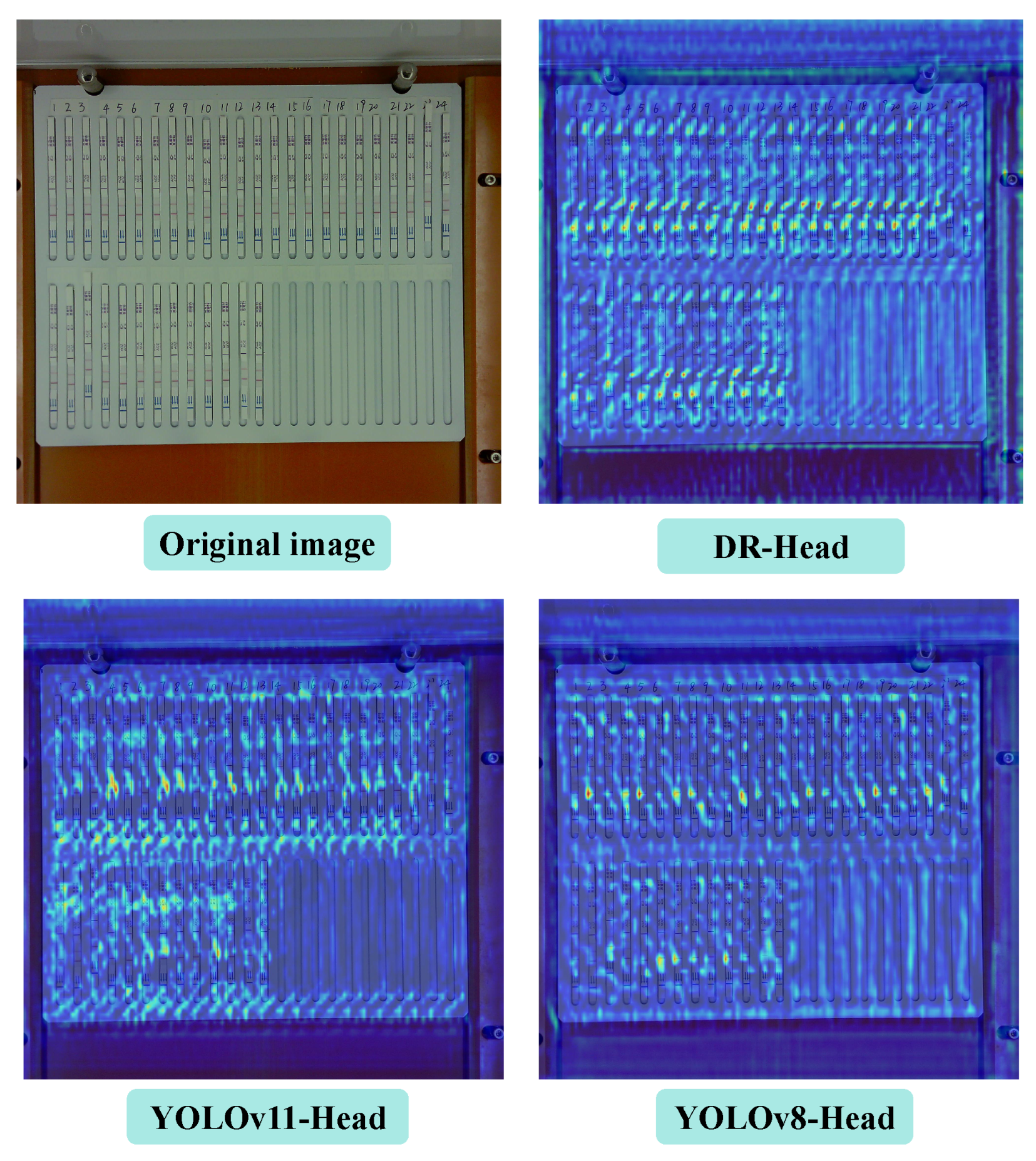

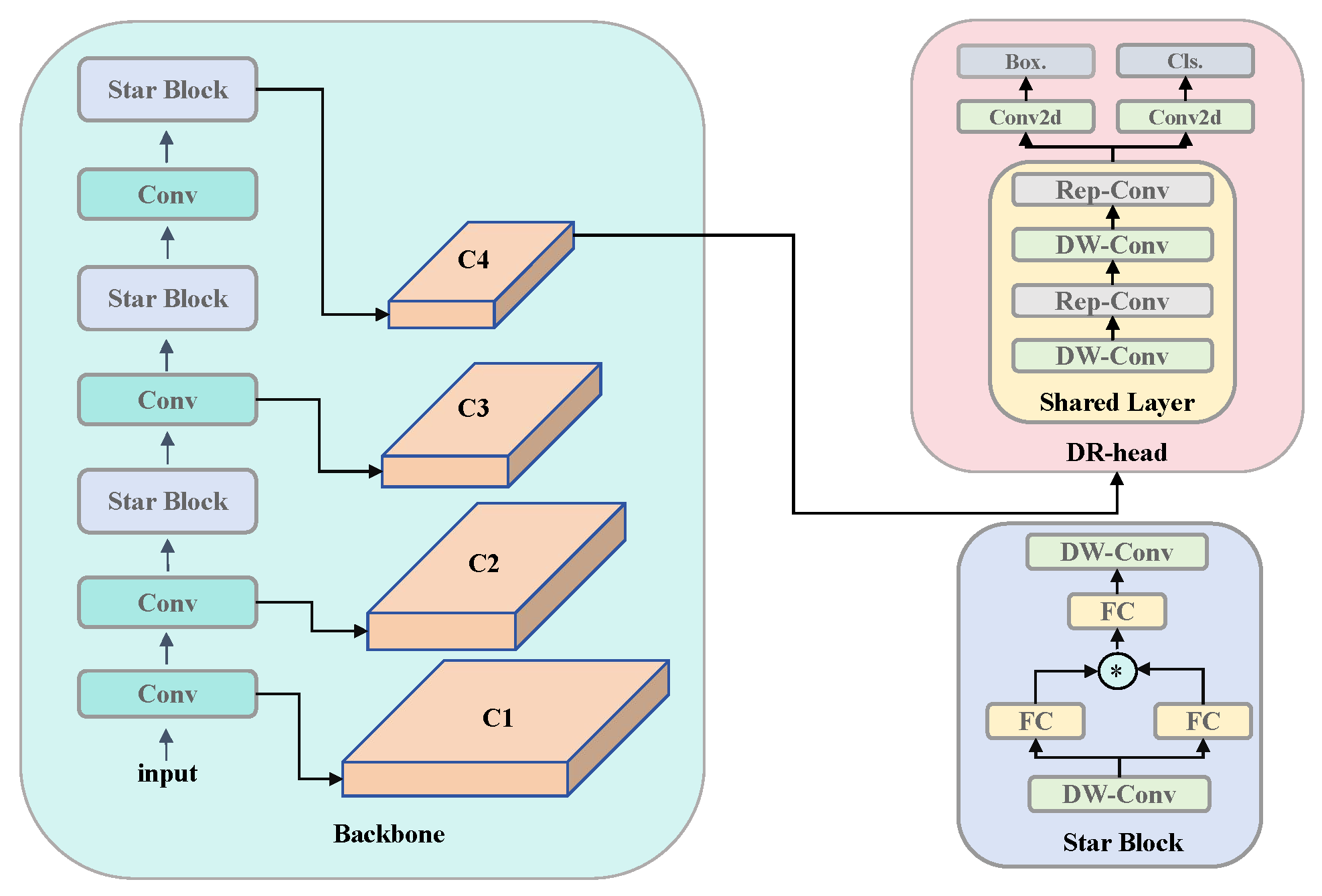

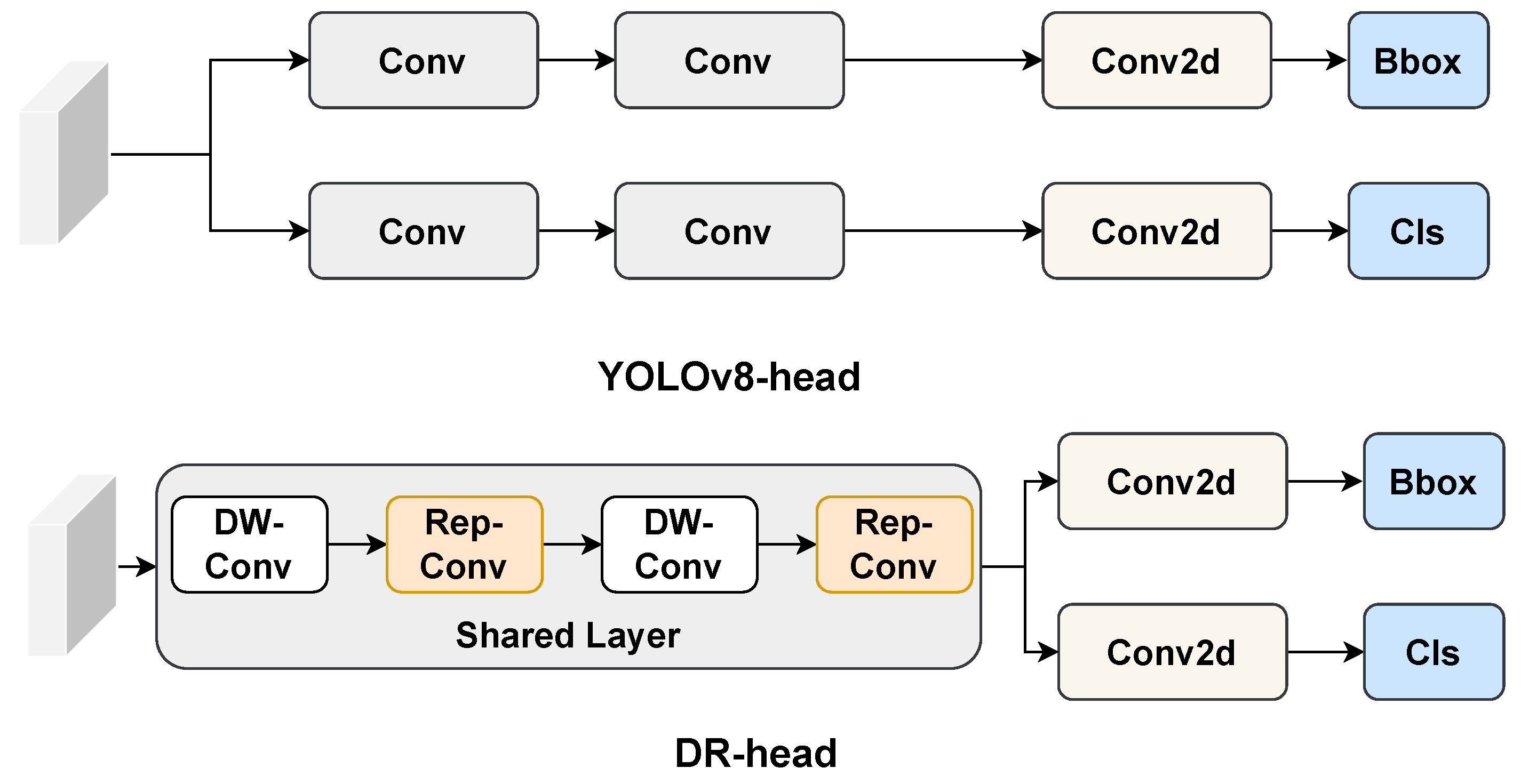

We present VetStar, a lightweight and accurate detection model tailored for embedded devices. It incorporates StarBlock for efficient feature extraction and a novel Depthwise Separable–Reparameterization Detection Head (DR-head), maintaining accuracy while reducing the parameter count compared to YOLOv8 and YOLOv11.

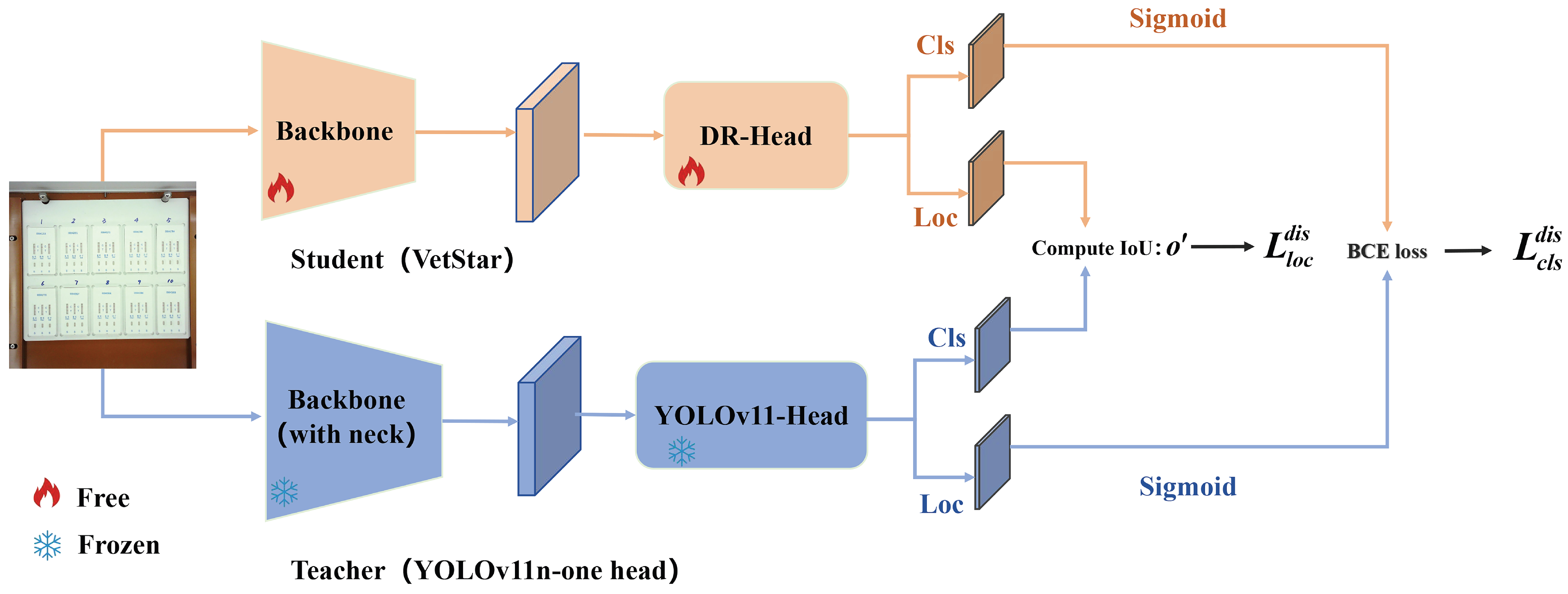

We apply BCKD to train VetStar, enabling it to distill knowledge from a larger teacher model without an increase in the parameter count, enhancing accuracy while maintaining compactness.
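Structural reparameterization, the principle behind RepVGG and the reparameterization component named in the DR-head, can be illustrated with a deliberately simplified sketch. It is not the DR-head itself: it assumes a single-channel 1D signal, omits batch normalization, and uses hypothetical function names. It shows that a training-time block with a 3-tap branch, a 1-tap branch, and an identity shortcut computes exactly the same output as one fused 3-tap convolution.

```python
def conv1d_same(x, kernel, bias=0.0):
    """Zero-padded 'same' 1D correlation with an odd-length kernel."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel)) + bias
        for i in range(len(x))
    ]


def multi_branch(x, k3, b3, k1, b1):
    """Training-time form: 3-tap branch + 1-tap branch + identity shortcut."""
    y3 = conv1d_same(x, k3, b3)
    y1 = conv1d_same(x, [k1], b1)
    return [a + b + c for a, b, c in zip(y3, y1, x)]


def reparameterize(k3, b3, k1, b1):
    """Fold the 1-tap and identity branches into the center tap of the 3-tap kernel."""
    fused = list(k3)
    fused[1] += k1 + 1.0  # 1-tap weight plus identity (weight 1) on the center tap
    return fused, b3 + b1


def single_branch(x, fused_kernel, fused_bias):
    """Inference-time form: one convolution with the fused parameters."""
    return conv1d_same(x, fused_kernel, fused_bias)
```

Because convolution is linear, the fused kernel reproduces the multi-branch output while requiring only one convolution at inference time, which is where the speed gain comes from.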

4. Limitations

This study has several limitations. First, while the system provides rapid and automated interpretation, a direct comparison with manual interpretation results was not included in this study. Such an analysis could provide additional insights into the advantages and limitations of automated detection. Second, although our system has already been deployed in 17 laboratories, where it is mainly used for detecting antibiotic residues in pig urine and chicken meat, the experiments reported in this paper were conducted only on pig urine samples. Other livestock-derived products, such as milk, meat, and poultry, could also be used for further validation to demonstrate the robustness and generalizability of the system. Future research will extend the evaluation to these sample types. Third, the evaluation of bottom plate color was limited to qualitative visual analysis. Quantitative measurements such as luminance or signal-to-noise ratio, together with detailed lighting spectrum and color-rendering index characterization, will be included in future work. Finally, the cost-effectiveness of the proposed system was not compared with conventional methodologies. A systematic cost analysis in future work would help highlight the economic advantages of our device for large-scale use.

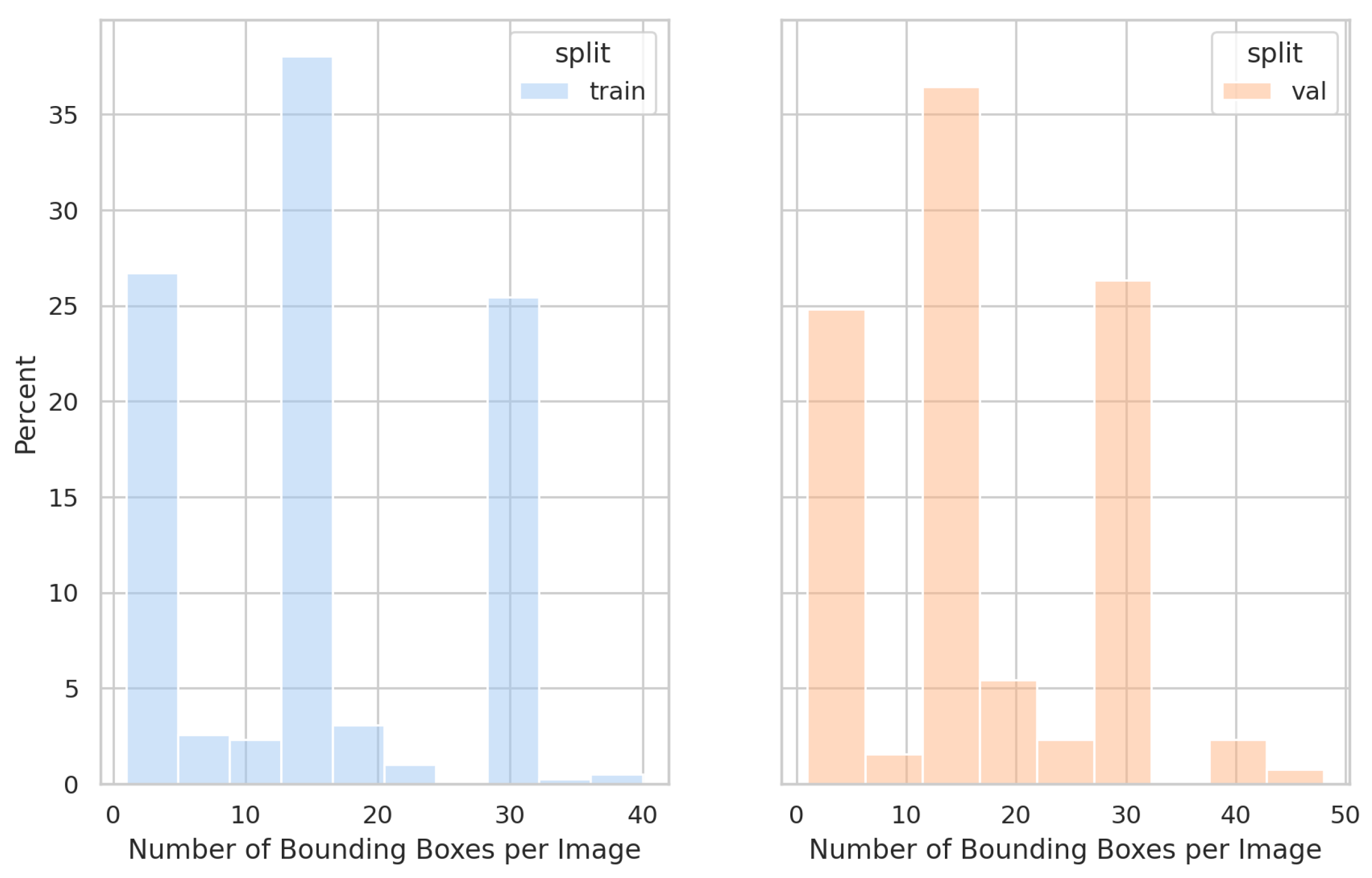

Furthermore, the data were collected under fixed lighting conditions, which resulted in a limited dataset. No external datasets were used to validate the proposed model. Additionally, due to the challenges in collecting invalid results, we did not include invalid class modeling in this study. Future research will focus on gathering data from diverse regions, incorporating invalid classes, and conducting robustness testing to evaluate the model’s generalization under various conditions.

5. Conclusions

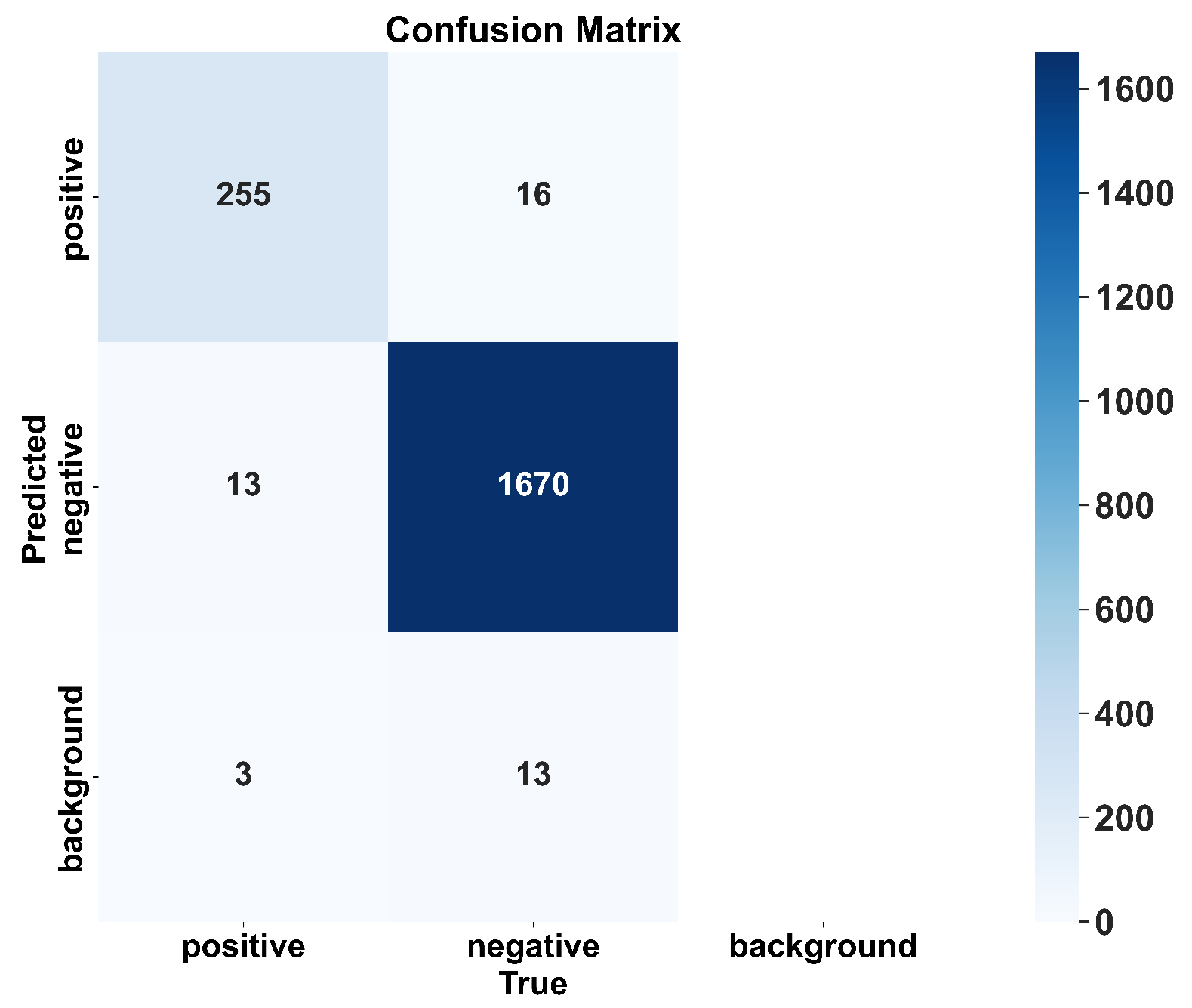

In veterinary antibiotic residue detection, colloidal gold antigen–antibody tests are often manually interpreted. However, due to variability in personnel and lighting conditions, interpretation results can be inconsistent. To accelerate the automation process in food safety laboratories, we developed a veterinary antibiotic residue detection system that operates on an embedded platform. Traditional intelligent interpretation methods for antigen–antibody test cards typically treat the task as an image classification problem. However, in order to achieve high-throughput result interpretation, we employed an object detection approach and implemented an efficient antigen–antibody test card detection system on the RK3568 chip. To improve detection efficiency, we proposed the VetStar algorithm, which is designed specifically for rapid and accurate antigen–antibody test card detection on embedded hardware. VetStar incorporates a custom feature extraction module, StarBlock, which is known for its strong representation capabilities even in shallow networks. Additionally, we developed a lightweight detection head based on the YOLOv8 architecture, tailored for the antigen–antibody test card interpretation task. Compared to the YOLO series models evaluated in this study, VetStar demonstrates significant advantages in terms of model size, although a slight gap in detection accuracy remains. To further improve accuracy, we incorporated the logit-based knowledge distillation method, BCKD, using the YOLOv11n model with a single detection head as the teacher. After distillation, VetStar’s mAP50 increased to 97.4%, narrowing the accuracy gap with YOLOv11n to just 0.4 percentage points. Deployment experiments on the RK3568 embedded device revealed that VetStar is 4.6 times faster than YOLOv10n and 13.5 s faster than YOLOv11n, which achieved the highest mAP50.
In conclusion, the veterinary antibiotic residue detection system we developed delivers both high detection accuracy and fast inference speed, making it suitable for the high-throughput result interpretation demands of colloidal gold antigen–antibody test cards in food safety laboratories.