Evaluating the Feasibility and Acceptability of a Prototype Hospital Digital Antibiotic Review Tracking Toolkit: A Qualitative Study Using the RE-AIM Framework

Abstract

1. Introduction

Study Purpose

2. Results

2.1. Key User-Reported Themes on the DARTT Intervention

2.1.1. Theme 1: Tailoring System Functionalities and Design

“There are too many data fields, and I’m not sure if having all of those questions…is worthwhile.” [P5, Infectious Diseases Consultant]

“So, in another [Health Board], antibiotics are ‘locked down’ to a much greater degree, and you need an authorisation code from the on-call microbiologist to prescribe.” [P11, Clinical Pharmacist]

“Could you have a calculator on another tab for Vancomycin and Gentamicin? Then you can click it and calculate [dosage] on the Tracker.” [P12, Advanced Nurse Practitioner]

2.1.2. Theme 2: Bridging the Technology Gap

“IT [Information Technology] in the NHS generally doesn’t work for what you need. The computer in my office is 20 years old! The problem is also Wi-Fi. We have upload speeds of like 1.” [P15, Infection Surveillance Nurse]

“There’s a massive fear around electronic prescribing. I work with a lot of practitioners who have IT issues and find it difficult to navigate online.” [P14, Advanced Nurse Practitioner]

“I recently did my [NHS prescribing] online training and found the video training really useful, especially for seeing the functionality.” [P2, Consultant Physician]

“You need a named person to contact for advice, so if I come across something on the Tracker I’m not sure about, I can call for advice and say: ‘How do I deal with this?’.” [P17, Health Service User]

2.1.3. Theme 3: Maintaining Organisational Leadership

“Don’t just put it out and expect it to be taken up—it won’t be. It needs to be engaged with and sold.” [P15, Infection Surveillance Nurse]

“If the medical director, director of nursing and director of pharmacy all say, ‘We support this’, people are more likely to use it.” [P3, Consultant Microbiologist]

“Engage patients as well—that helps build trust and endorse the value of the whole project.” [P18, Health Service User]

“Maybe having two versions, a shorter and a longer one, would work better. You could pre-record it and play it during staff meetings.” [P10, Clinical Pharmacist]

2.1.4. Theme 4: Lessons Learned and Sharing of Experiences

“Once people start to use it, get their feedback on benefits and share with others… and if something isn’t right, review and change it.” [P11, Clinical Pharmacist]

“Individual feedback has to be contextualised and fairly diplomatic, because if it’s not done carefully, it could be perceived as criticism.” [P7, Resident Physician]

“It would be good to present some data at team meetings, and compare performance to other hospitals, just to keep it fresh and maintain the benefits.” [P9, Clinical Pharmacist]

2.2. User-Reported Themes Related to the DARTT Prototype Mapped to RE-AIM Domains

2.3. Table of Changes: User-Informed Modifications to DARTT

3. Discussion

3.1. Strengths and Limitations

3.2. Future Implications

4. Materials and Methods

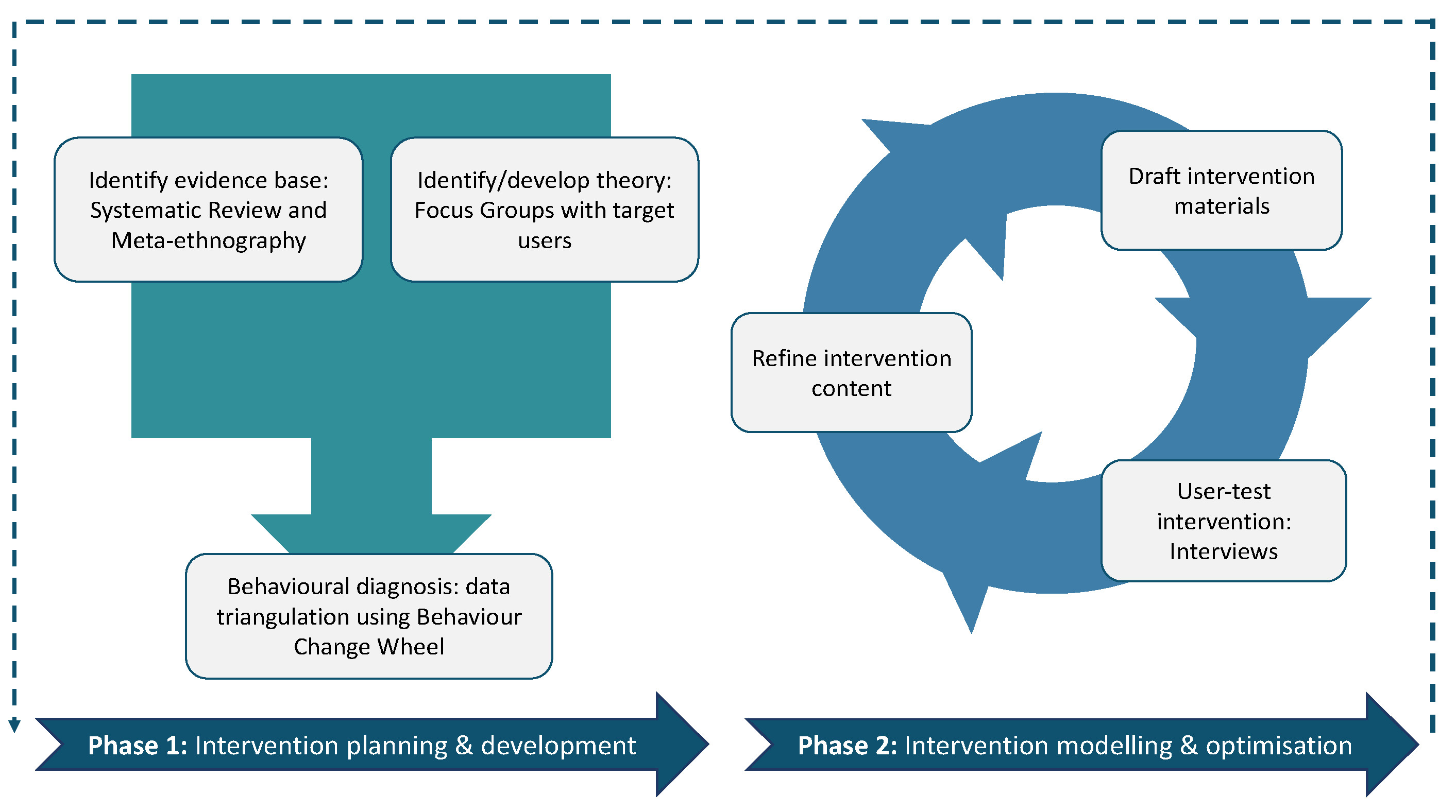

4.1. Design

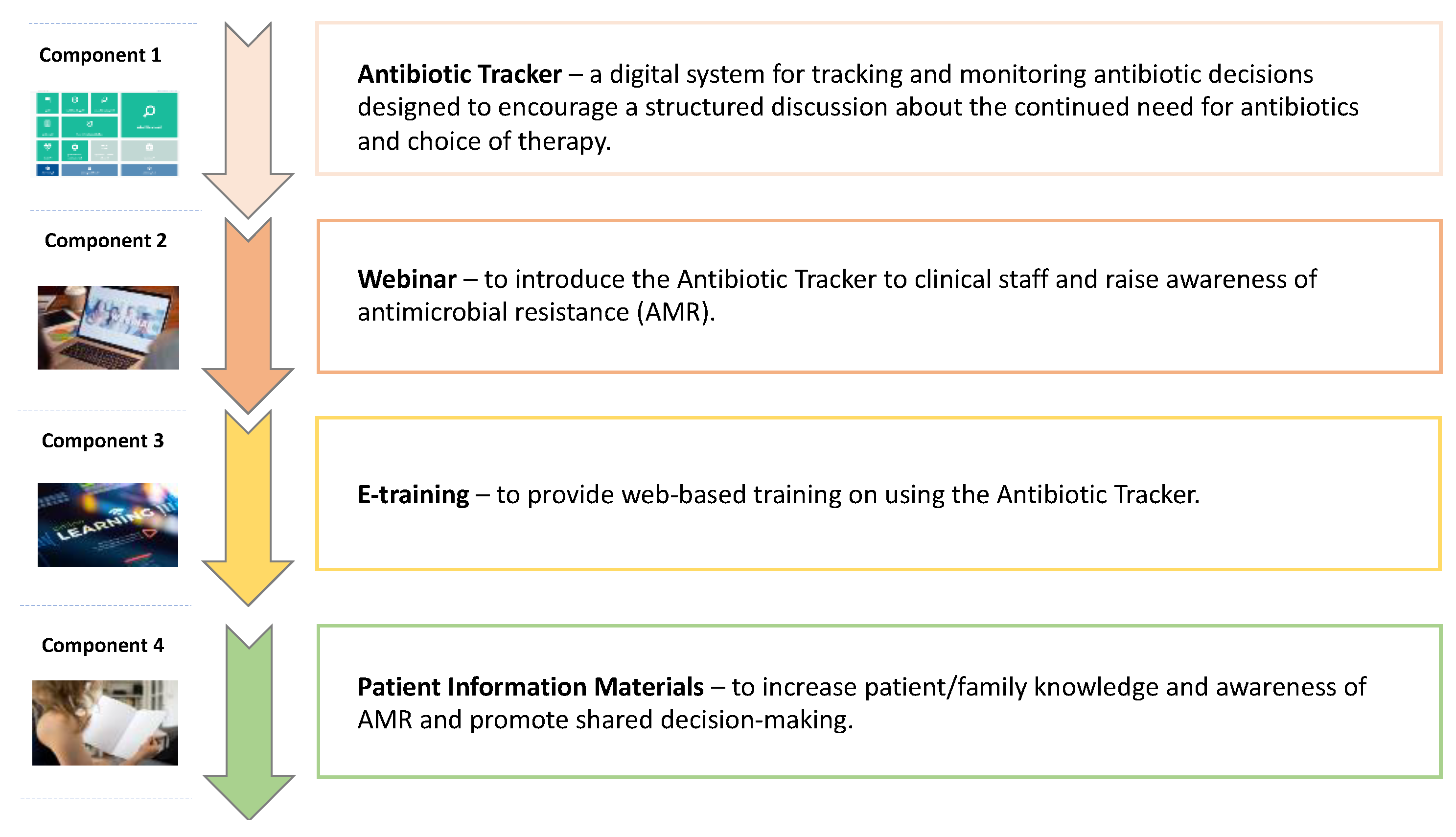

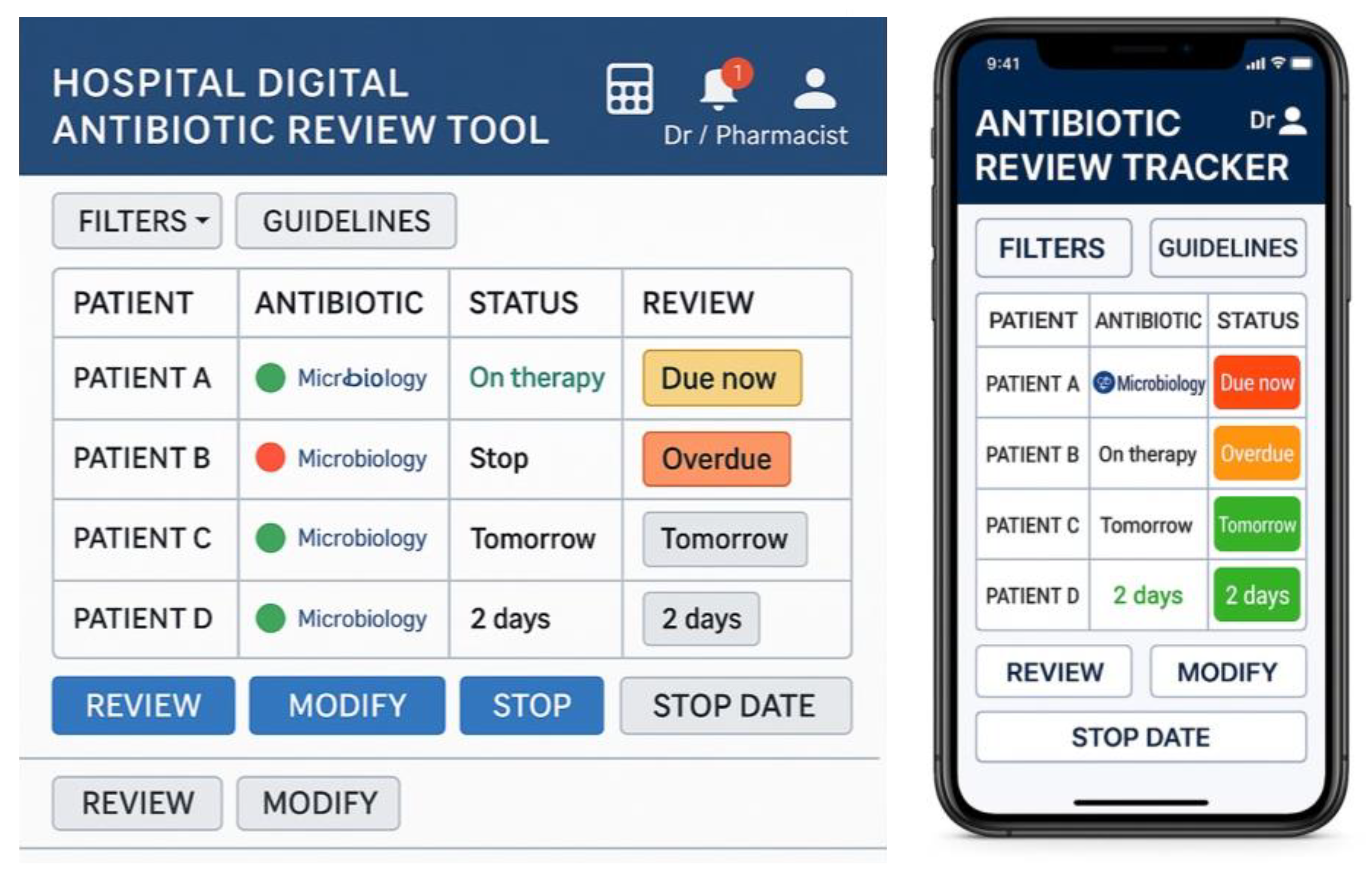

4.2. Prototype Intervention

4.3. Recruitment and Procedure

4.4. Data Analysis

4.4.1. Interview Data

4.4.2. Mapping the User-Informed Modifications to DARTT

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Correction Statement

Abbreviations

| Abbreviation | Definition |
|---|---|
| AMR | Antimicrobial Resistance |
| APEASE | Acceptability, Practicability, Effectiveness, Affordability, Side-effects, Equity |
| COREQ | Consolidated Criteria for Reporting Qualitative Research |
| DARTT | Digital Antibiotic Review Tracking Toolkit |
| DHT | Digital Health Technologies |
| eMMS | Electronic Medication Management System |
| HCPs | Healthcare Professionals |
| MRC | Medical Research Council |
| NHS | National Health Service |
| RE-AIM | Reach, Effectiveness, Adoption, Implementation, Maintenance |
| ToC | Table of Changes |

References

- Rawson, T.M.; Zhu, N.; Galiwango, R.; Cocker, D.; Islam, M.S.; Myall, A.; Vasikasin, V.; Wilson, R.; Shafiq, N.; Das, S.; et al. Using digital health technologies to optimise antimicrobial use globally. Lancet Digit. Health 2024, 6, e914–e925.

- Public Health England. English Surveillance Programme for Antimicrobial Utilisation and Resistance (ESPAUR) Report 2023 to 2024. Available online: https://assets.publishing.service.gov.uk/media/6734e208b613efc3f1823095/ESPAUR-report-2023-2024.pdf (accessed on 1 June 2025).

- Sahota, R.S.; Rajan, K.K.; Comont, J.M.; Lee, H.H.; Johnston, N.; James, M.; Patel, R.; Nariculam, J. Increasing the documentation of 48-hour antimicrobial reviews. BMJ Open Qual. 2020, 9, e000805.

- Skivington, K.; Matthews, L.; Simpson, S.A.; Craig, P.; Baird, J.; Blazeby, J.M.; Boyd, K.A.; Craig, N.; French, D.P.; McIntosh, E.; et al. A new framework for developing and evaluating complex interventions: Update of Medical Research Council guidance. Br. Med. J. 2021, 374, n2061.

- West, R.; Michie, S.; Atkins, L.; Chadwick, P.; Lorencatto, F. Achieving Behaviour Change: A Guide for Local Government and Partners. Public Health England. 2020. Available online: https://assets.publishing.service.gov.uk/media/5e7b4e85d3bf7f133c923435/PHEBI_Achieving_Behaviour_Change_Local_Government.pdf (accessed on 12 May 2024).

- Levati, S.; Campbell, P.; Frost, R.; Dougall, N.; Wells, M.; Donaldson, C.; Hagen, S. Optimisation of complex health interventions prior to a randomised controlled trial: A scoping review of strategies used. Pilot Feasibility Stud. 2016, 2, 17.

- Craig, P.; Dieppe, P.; Macintyre, S.; Michie, S.; Nazareth, I.; Petticrew, M. Developing and evaluating complex interventions: The new Medical Research Council guidance. Br. Med. J. 2008, 337, a1655.

- Wolfenden, L.; Bolsewicz, K.; Grady, A.; McCrabb, S.; Kingsland, M.; Wiggers, J.; Bauman, A.; Wyse, R.; Nathan, N.; Sutherland, R.; et al. Optimisation: Defining and exploring a concept to enhance the impact of public health initiatives. Health Res. Policy Syst. 2019, 17, 108.

- Glasgow, R.E.; Vogt, T.M.; Boles, S.M. Evaluating the public health impact of health promotion interventions: The RE-AIM framework. Am. J. Public Health 1999, 89, 1322–1327.

- Gude, W.T.; van der Veer, S.N.; de Keizer, N.F.; Coiera, E.; Peek, N. Optimising Digital Health Informatics Interventions Through Unobtrusive Quantitative Process Evaluations. Stud. Health Technol. Inform. 2016, 228, 594–598.

- Iivari, J.; Isomäki, H.; Pekkola, S. The user—The great unknown of systems development: Reasons, forms, challenges, experiences and intellectual contributions of user involvement. Inform. Syst. J. 2010, 20, 109–117.

- Rutherford, C.; Boehnke, J.R. Introduction to the special section “Reducing research waste in (health-related) quality of life research”. Qual. Life Res. 2022, 31, 2881–2887.

- Escoffery, C.; Lebow-Skelley, E.; Haardoerfer, R.; Boing, E.; Udelson, H.; Wood, R.; Hartman, M.; Fernandez, M.E.; Mullen, P.D. A systematic review of adaptations of evidence-based public health interventions globally. Implement. Sci. 2018, 13, 125.

- Baker, T.B.; Gustafson, D.H.; Shah, D. How can research keep up with eHealth? Ten strategies for increasing the timeliness and usefulness of e-health research. J. Med. Internet Res. 2014, 16, e36.

- Rawson, T.M.; Moore, L.S.P.; Hernandez, B.; Charani, E.; Castro-Sanchez, E.; Herrero, P.; Hayhoe, B.; Hope, W.; Georgiou, P.; Holmes, A. A systematic review of clinical decision support systems for antimicrobial management: Are we failing to investigate these interventions appropriately? Clin. Microbiol. Infect. 2017, 23, 524–532.

- Bradbury, K.; Watts, S.; Arden-Close, E.; Yardley, L.; Lewith, G. Developing digital interventions: A methodological guide. Evid.-Based Complement. Altern. Med. 2014, 2014, 561320.

- Yardley, L.; Morrison, L.; Bradbury, K.; Muller, I. The person-based approach to intervention development: Application to digital health-related behaviour change interventions. J. Med. Internet Res. 2015, 17, e30.

- Yardley, L.; Spring, B.J.; Riper, H.; Morrison, L.G.; Crane, D.; Curtis, K.; Merchant, G.C.; Naughton, F.; Blandford, A. Understanding and Promoting Effective Engagement with Digital Behaviour Change Interventions. Am. J. Prev. Med. 2016, 51, 833–842.

- Kim, E.D.; Kuan, K.K.; Vaghasiya, M.R.; Penm, J.; Gunja, N.; El Amrani, R.; Poon, S.K. Passive resistance to health information technology implementation: The case of electronic medication management system. Behav. Inf. Technol. 2023, 42, 2308–2329.

- Zhou, X.; Kuan, K.K.Y.; Wu, Y.; Kim, E.D.; Vaghasiya, M.R.; El Amrani, R.; Penm, J. The role of human, organization, and technology in health IS success: A configuration theory approach. In Proceedings of the Pacific Asia Conference on Information Systems (PACIS), Nanchang, China, 8–12 July 2023; p. 146. Available online: https://aisel.aisnet.org/pacis2023/146 (accessed on 9 March 2025).

- Wu, Y.; Kuan, K.K.Y.; Zhou, X.; Kim, E.D.; Vaghasiya, M.R.; El Amrani, R.; Penm, J. Temporal changes in determinants of electronic medication management systems benefits: A qualitative comparative analysis. In Proceedings of the Pacific Asia Conference on Information Systems (PACIS), Nanchang, China, 8–12 July 2023; p. 154. Available online: https://aisel.aisnet.org/pacis2023/154 (accessed on 23 July 2024).

- Kim, E.D.; Kuan, K.K.; Vaghasiya, M.R.; Gunja, N.; Poon, S.K. A repeated cross-sectional study on the implementation of electronic medication management system. Inf. Technol. Manag. 2024, 25, 33–50.

- Alohali, M.; Carton, F.; O’Connor, Y. Investigating the Antecedents of Perceived Threats and User Resistance to Health Information Technology: A Case Study of a Public Hospital. J. Dec. Syst. 2020, 29, 27–52.

- Wojcik, G.; Ring, N.; McCulloch, C.; Willis, D.S.; Williams, B.; Kydonaki, K. Understanding the complexities of antibiotic prescribing behaviour in acute hospitals: A systematic review and meta-ethnography. Arch. Public Health 2021, 79, 134.

- Wojcik, G.; Ring, N.; Willis, D.S.; Williams, B.; Kydonaki, K. Improving antibiotic use in hospitals: Development of a digital antibiotic review tracking toolkit (DARTT) using the behaviour change wheel. Psychol. Health 2023, 39, 1635–1655.

- Michie, S.; Atkins, L.; West, R. The Behaviour Change Wheel: A Guide to Designing Interventions; Silverback Publishing: London, UK, 2014.

- Cresswell, K.M.; Bates, D.W.; Sheikh, A. Ten key considerations for the successful optimisation of large-scale health information technology. J. Am. Med. Inform. Assoc. 2017, 24, 182–187.

- Greenhalgh, T.; Wherton, J.; Papoutsi, C.; Lynch, J.; Hughes, G.; A’Court, C.; Hinder, S.; Fahy, N.; Procter, R.; Shaw, S. Beyond adoption: A new framework for theorising and evaluating nonadoption, abandonment, and challenges to the scale-up, spread, and sustainability of health and care technologies. J. Med. Internet Res. 2017, 19, e367.

- Gagnon, M.P.; Ghandour, E.K.; Talla, P.K.; Simonyan, D.; Godin, G.; Labrecque, M.; Ouimet, M.; Rousseau, M. Electronic health record acceptance by physicians: Testing an integrated theoretical model. J. Biomed. Inform. 2016, 60, 138–146.

- Tripp, J.F.; Armstrong, D. Agile methodologies: Organisational adoption motives, tailoring, and performance. J. Comput. Inf. Syst. 2016, 58, 170–179.

- Ross, J.; Stevenson, F.; Dack, C.; Pal, K.; May, C.; Michie, S.; Barnard, M.; Murray, E. Developing an implementation strategy for a digital health intervention: An example in routine healthcare. BMC Health Serv. Res. 2018, 18, 794.

- Santillo, M.; Sivyer, K.; Krusche, A.; Mowbray, F.; Jones, N.; Peto, T.E.A.; Walker, A.S.; Llewelyn, M.J.; Yardley, L. Intervention planning for Antibiotic Review Kit (ARK): A digital and behavioural intervention to safely review and reduce antibiotic prescriptions in acute and general medicine. J. Antimicrob. Chemother. 2019, 74, 3362–3370.

- Bakkum, M.J.; Tichelaar, J.; Wellink, A.; Richir, M.C.; van Agtmael, M.A. Digital learning to improve safe and effective prescribing: A systematic review. Clin. Pharmacol. Ther. 2019, 106, 1236–1245.

- Jones, A.S.; Isaac, R.E.; Price, K.L.; Plunkett, A.C. Impact of Positive Feedback on Antimicrobial Stewardship in a Pediatric Intensive Care Unit: A Quality Improvement Project. Pediatr. Qual. Saf. 2019, 4, e206.

- British Medical Association. Technology, Infrastructure and Data Supporting NHS Staff. 2019. Available online: https://www.bma.org.uk/media/2080/bma-vision-for-nhs-it-report-april-2019.pdf (accessed on 17 October 2024).

- Yardley, L.; Morrison, L.G.; Andreou, P.; Joseph, J.; Little, P. Understanding reactions to an internet-delivered health-care intervention: Accommodating user preferences for information provision. BMC Med. Inform. Decis. Mak. 2010, 10, 52.

- Health Information and Quality Authority. e-Prescribing: An International Review. 2018. Available online: https://www.hiqa.ie/sites/default/files/2018-05/ePrescribing-An-Intl-Review.pdf (accessed on 3 February 2025).

- Lyng, H.B.; Macrae, C.; Guise, V.; Haraldseid-Driftland, C.; Fagerdal, B.; Schibevaag, L.; Alsvik, J.G.; Wiig, S. Balancing adaptation and innovation for resilience in healthcare—A metasynthesis of narratives. BMC Health Serv. Res. 2021, 21, 759.

- Tong, A.; Sainsbury, P.; Craig, J. Consolidated criteria for reporting qualitative research (COREQ): A 32-item checklist for interviews and focus groups. Int. J. Qual. Health Care 2007, 19, 349–357.

- Lincoln, Y.S.; Guba, E.G. Naturalistic Inquiry; Sage Publications: Beverly Hills, CA, USA, 1985.

- Connell, L.E.; Carey, R.N.; de Bruin, M.; Rothman, A.J.; Johnston, M.; Kelly, M.P.; Michie, S. Links between behaviour change techniques and mechanisms of action: An expert consensus study. Ann. Behav. Med. 2019, 53, 708–720.

- Holtrop, J.S.; Rabin, B.A.; Glasgow, R.E. Qualitative approaches to use of the RE-AIM framework: Rationale and methods. BMC Health Serv. Res. 2018, 18, 177.

- Kirby, E.; Broom, A.; Overton, K.; Kenny, K.; Post, J.J.; Broom, J. Reconsidering the nursing role in antimicrobial stewardship: A multisite qualitative interview study. BMJ Open 2020, 10, e042321.

- Wiklund, M.E.; Kendler, J.; Strochlic, A. Usability Testing of Medical Devices, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2016.

- Ritchie, J.; Lewis, J. Qualitative Research Practice: A Guide for Social Science Students and Researchers, 2nd ed.; SAGE Publications Ltd.: London, UK, 2013.

- Moore, G.F.; Audrey, S.; Barker, M.; Bond, L.; Bonell, C.; Hardeman, W.; Moore, L.; O’Cathain, A.; Tinati, T.; Wight, D.; et al. Process evaluation of complex interventions: Medical Research Council guidance. Br. Med. J. 2015, 350, h1258.

- Bradbury, K.; Morton, K.; Band, R.; van Woezik, A.; Grist, R.; McManus, R.J.; Little, P.; Yardley, L.; Aslani, P. Using the person-based approach to optimise a digital intervention for the management of hypertension. PLoS ONE 2018, 13, e0196868.

- Smith, R.C.; Loi, D.; Winschiers-Theophilus, H.; Huybrechts, L.; Simonsen, J. (Eds.) Routledge International Handbook of Contemporary Participatory Design, 1st ed.; Routledge: London, UK, 2025.

| Themes | RE-AIM Domains | ||||

|---|---|---|---|---|---|

| Reach | Effectiveness | Adoption | Implementation | Maintenance | |

| Tailoring System Functionalities and Design | ✓ | ✓ | |||

| Bridging the Technology Gap | ✓ | ✓ | |||

| Maintaining Organisational Leadership | ✓ | ✓ | |||

| Lessons Learned and Sharing of Experiences | ✓ | ✓ | |||

| Purpose of Change | Issues Targeted | Modifications Made | Incorporated BCTs * | Mechanisms of Action (↑ COM-B) * |
|---|---|---|---|---|
| Component 1: Antibiotic Tracker | | | | |
| Improve design and functionality. | Tracker perceived as onerous; too many alerts; risk of bypassing reviews; limited integration with other systems. | | Environmental restructuring (dashboard, streamlined process); Prompts/cues (prioritised alerts); Adding objects to the environment (medical calculator); Feedback on behaviour (user tracking); Instruction on how to perform the behaviour (links to guidelines); Behavioural regulation (mandatory fields, authorisation codes). | Physical Opportunity (easier to interact with the system); Psychological Capability (support decision-making with summaries/tools); Reflective Motivation (reduced burden increases intention to comply). |
| Support and feedback for healthcare professionals. | Lack of technical support and feedback mechanisms. | | Instruction on how to perform the behaviour (manuals, help tools); Social support (practical) (IT support, peer roles); Feedback on behaviour (emails); Social support (emotional) (champion roles). | Psychological Capability (through guidance and help tools); Social Opportunity (via peer and IT support); Reflective Motivation (through feedback and recognition). |
| Component 2: Webinar | | | | |
| Improve engagement and integration. | Webinar too long; unclear rationale for DARTT. | | Restructuring the social environment (changing delivery format); Information about health consequences (clarifying rationale); Instruction on how to perform the behaviour (engaging formats). | Physical and Social Opportunity (making engagement easier and more accessible); Reflective Motivation (clarifying purpose to enhance motivation). |
| Component 3: E-training | | | | |
| Improve accessibility and practicality. | Difficulty accessing training; lack of practical elements. | | Instruction on how to perform the behaviour (training content); Adding objects to the environment (access links); Habit formation (embedded in induction); Behavioural practice/rehearsal (interactive elements). | Physical Opportunity (easier access); Psychological Capability (hands-on learning); Automatic Motivation (routine through induction). |
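For the Tracker, the Table of Changes pairs the issue "too many alerts" with the BCT "Prompts/cues (prioritised alerts)". One way such prioritisation could work is to collapse duplicate alerts and surface only the most urgent few. The Python below is a minimal, hypothetical sketch, not the DARTT implementation: the alert categories follow the Tracker's Reminders & Alerts functionality (due/overdue reviews, updated lab results, non-guideline prescriptions), but their ranking, the `hours_waiting` field and the default cap of five visible alerts are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class AlertType(IntEnum):
    # ASSUMPTION: lower value = shown first; this ranking is illustrative only.
    OVERDUE_REVIEW = 0
    NEW_LAB_RESULT = 1
    NON_GUIDELINE_PRESCRIPTION = 2
    REVIEW_DUE_SOON = 3


@dataclass(frozen=True)
class Alert:
    patient_id: str
    kind: AlertType
    hours_waiting: float  # hypothetical: hours since the alert was raised and not acknowledged


def prioritise(alerts: list[Alert], max_shown: int = 5) -> list[Alert]:
    """Collapse duplicates, rank by type then waiting time, and cap the list."""
    # Keep one alert per (patient, type) pair: the one that has waited longest.
    kept: dict[tuple[str, AlertType], Alert] = {}
    for alert in alerts:
        key = (alert.patient_id, alert.kind)
        if key not in kept or alert.hours_waiting > kept[key].hours_waiting:
            kept[key] = alert
    # Most urgent type first; within a type, the longest-waiting alert first.
    ranked = sorted(kept.values(), key=lambda a: (a.kind, -a.hours_waiting))
    return ranked[:max_shown]
```

With two overdue-review alerts for the same patient, only the longer-waiting one is kept, and overdue reviews are listed ahead of new lab results. Capping the list addresses alert fatigue directly, at the cost of hiding lower-priority prompts until the urgent ones are cleared.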

| Functionality | Description |
|---|---|
| Login and Role Recognition | Secure login with role-based access for doctors and pharmacists. |
| Dashboard Overview | Displays patient list, antibiotic data, review due dates, and traffic light status. |
| Traffic Light System | Colour-coded indicators (Red = overdue, Amber = due within 24 h, Green = reviewed and up to date) for triage. |
| Dosing Calculator | Supports accurate, guideline-based antibiotic prescribing and reduces clinician workload. |
| Reminders & Alerts | Real-time prompts for due/overdue reviews, updated lab results, and non-guideline prescriptions. |
| Microbiology Integration | Links lab results to prescriptions; updates automatically with new sensitivities. |
| Guideline Access | Contextual links to local/national antibiotic prescribing guidelines. |
| Review Actions | Prescribers can view details, document decisions (continue, stop, change), and mark as reviewed. |
| Communication Tools | Shared notes and alerts notify pharmacy or microbiology teams of updates or issues. |
| Audit & Feedback | Personal dashboards with review compliance, decision history, and performance metrics. |
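The Traffic Light System, Review Actions and Audit & Feedback rows describe simple rules that can be expressed in a few functions. The Python below is a minimal, hypothetical sketch rather than the DARTT code: the field names (`next_review_due`, `reviews`), the 48 h interval scheduled after a "continue" or "change" decision, and the definition of compliance as the share of reviews documented by their due time are all assumptions. "Green" is read here as "next review more than 24 h away", which is one interpretation of "reviewed and up to date".

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from enum import Enum
from typing import Optional


class Status(Enum):
    RED = "overdue"
    AMBER = "due within 24 h"
    GREEN = "reviewed and up to date"


class Decision(Enum):
    CONTINUE = "continue"
    STOP = "stop"
    CHANGE = "change"


AMBER_WINDOW = timedelta(hours=24)     # from the Traffic Light System description
REVIEW_INTERVAL = timedelta(hours=48)  # ASSUMPTION: hypothetical interval between reviews


@dataclass
class Prescription:
    patient_id: str
    antibiotic: str
    next_review_due: Optional[datetime]  # hypothetical field; None once the course is stopped
    # Each entry pairs a review's due time with when it was documented (None = missed).
    reviews: list[tuple[datetime, Optional[datetime]]] = field(default_factory=list)


def traffic_light(rx: Prescription, now: datetime) -> Optional[Status]:
    """Return the triage colour, or None if no review is pending (course stopped)."""
    if rx.next_review_due is None:
        return None
    if now > rx.next_review_due:
        return Status.RED
    if rx.next_review_due - now <= AMBER_WINDOW:
        return Status.AMBER
    return Status.GREEN


def record_review(rx: Prescription, decision: Decision, now: datetime) -> None:
    """Document a review decision and mark the pending review as done."""
    if rx.next_review_due is None:
        raise ValueError("no review is pending for this prescription")
    rx.reviews.append((rx.next_review_due, now))
    # Simplification: STOP ends the course; CONTINUE or CHANGE schedules the next review.
    rx.next_review_due = None if decision is Decision.STOP else now + REVIEW_INTERVAL


def review_compliance(reviews: list[tuple[datetime, Optional[datetime]]]) -> float:
    """Share of reviews documented at or before their due time (hypothetical metric)."""
    if not reviews:
        raise ValueError("no reviews to assess")
    on_time = sum(1 for due, done in reviews if done is not None and done <= due)
    return on_time / len(reviews)
```

For example, at 12:00 a prescription due for review at 09:00 the same day is Red; documenting a "continue" decision moves its next review 48 h ahead, turning it Green, while the late review lowers that prescriber's compliance figure on the Audit & Feedback dashboard.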

| Characteristics | Total Number of Participants (n = 18) |
|---|---|
| Healthcare professionals | 15 |
| Health service users | 3 |
| Gender | |
| Male | 9 |
| Female | 9 |
| Age range | |
| 21–30 | 3 |
| 31–40 | 4 |
| 41–50 | 5 |
| 51–60 | 5 |
| >60 | 1 |
| Ethnicity | |
| White British | 17 |
| Black African | 1 |
| Current clinical position and pseudonyms (HCPs only) | |
| Consultant physician—* Robert [P1], Tom [P2] | 2 |
| Microbiology & infectious diseases consultant—Daniel [P3], Peter [P4] | 2 |
| Infectious diseases consultant—Miles [P5] | 1 |
| Medical trainees (FY1/2/Registrar)—Maya [P6], Wesley [P7], Matthew [P8] | 3 |
| Clinical pharmacist—Alex [P9], Kirstin [P10], Jane [P11] | 3 |
| Advanced nurse practitioner—Anna [P12], Caroline [P13], Hannah [P14] | 3 |
| Nurse (infection surveillance)—Mary [P15] | 1 |
| Years of clinical experience | |
| <5 | 3 |
| 5–10 | 2 |
| 21–30 | 8 |
| >30 | 2 |
| Health service users’ occupation and pseudonyms | |
| National Health policy officer—Sophie [P16] | 1 |
| Secondary school teacher—Veronica [P17] | 1 |
| Retired engineer—James [P18] | 1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Colquhoun, G.; Ring, N.; Smith, J.; Willis, D.; Williams, B.; Kydonaki, K. Evaluating the Feasibility and Acceptability of a Prototype Hospital Digital Antibiotic Review Tracking Toolkit: A Qualitative Study Using the RE-AIM Framework. Antibiotics 2025, 14, 660. https://doi.org/10.3390/antibiotics14070660


Chicago/Turabian Style: Colquhoun, Gosha, Nicola Ring, Jamie Smith, Diane Willis, Brian Williams, and Kalliopi Kydonaki. 2025. "Evaluating the Feasibility and Acceptability of a Prototype Hospital Digital Antibiotic Review Tracking Toolkit: A Qualitative Study Using the RE-AIM Framework" Antibiotics 14, no. 7: 660. https://doi.org/10.3390/antibiotics14070660
