Machine Learning-Based Framework for Pre-Impact Same-Level Fall and Fall-from-Height Detection in Construction Sites Using a Single Wearable Inertial Measurement Unit

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

2.1.1. Subjects

2.1.2. Apparatus

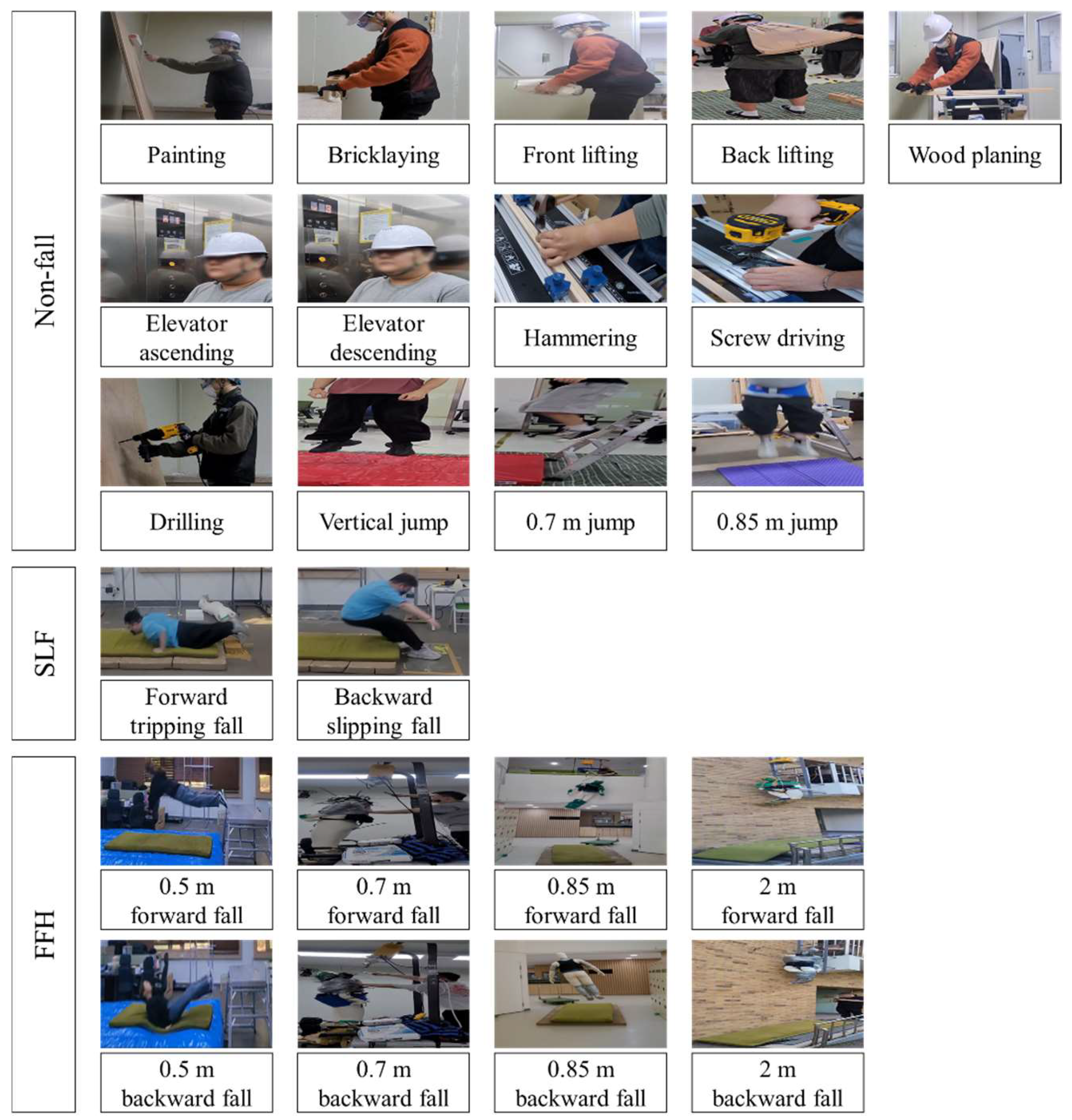

2.1.3. Experimental Protocol

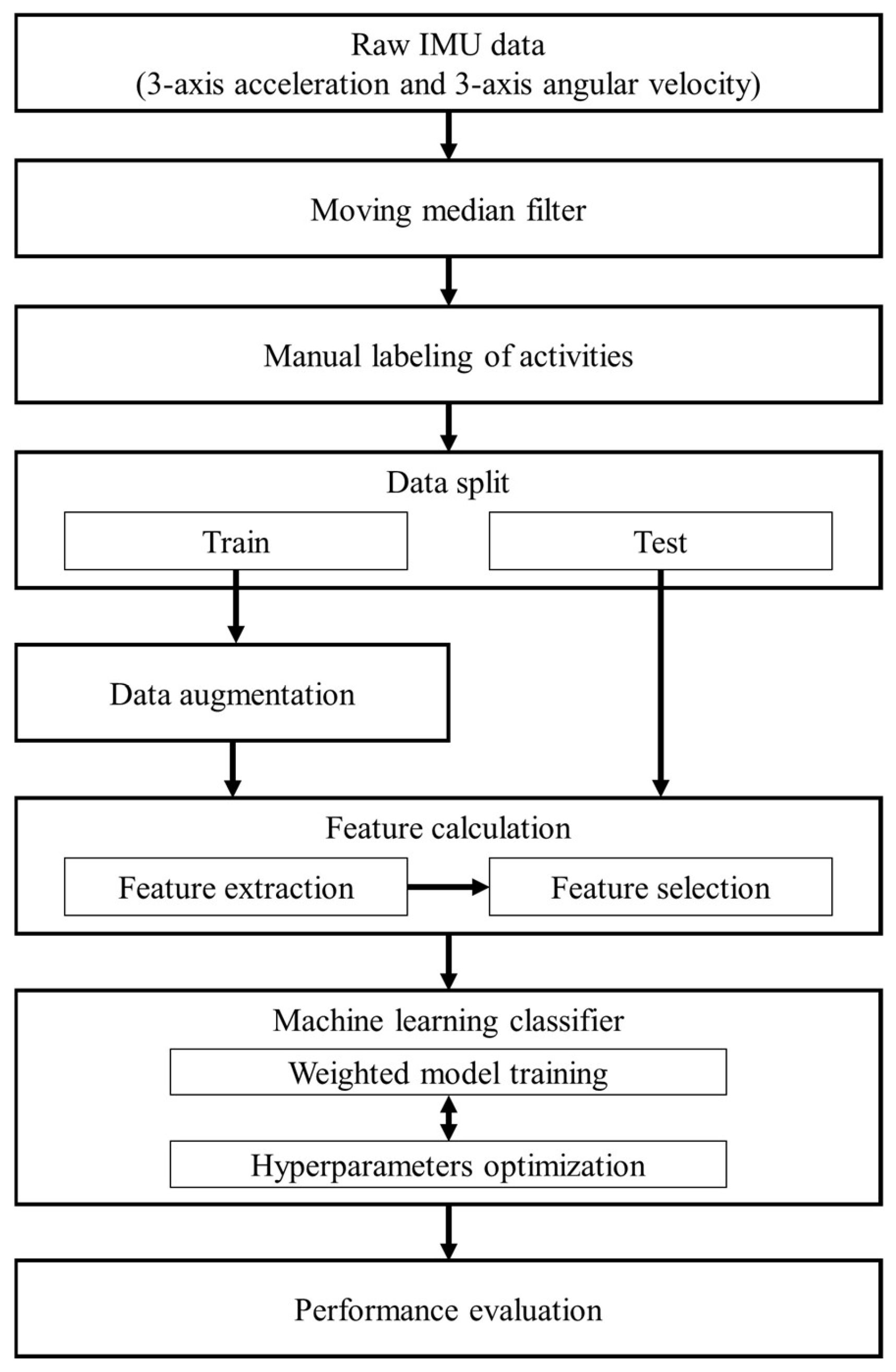

2.2. Proposed Prediction Method for Non-Fall, SLF, and FFH Events

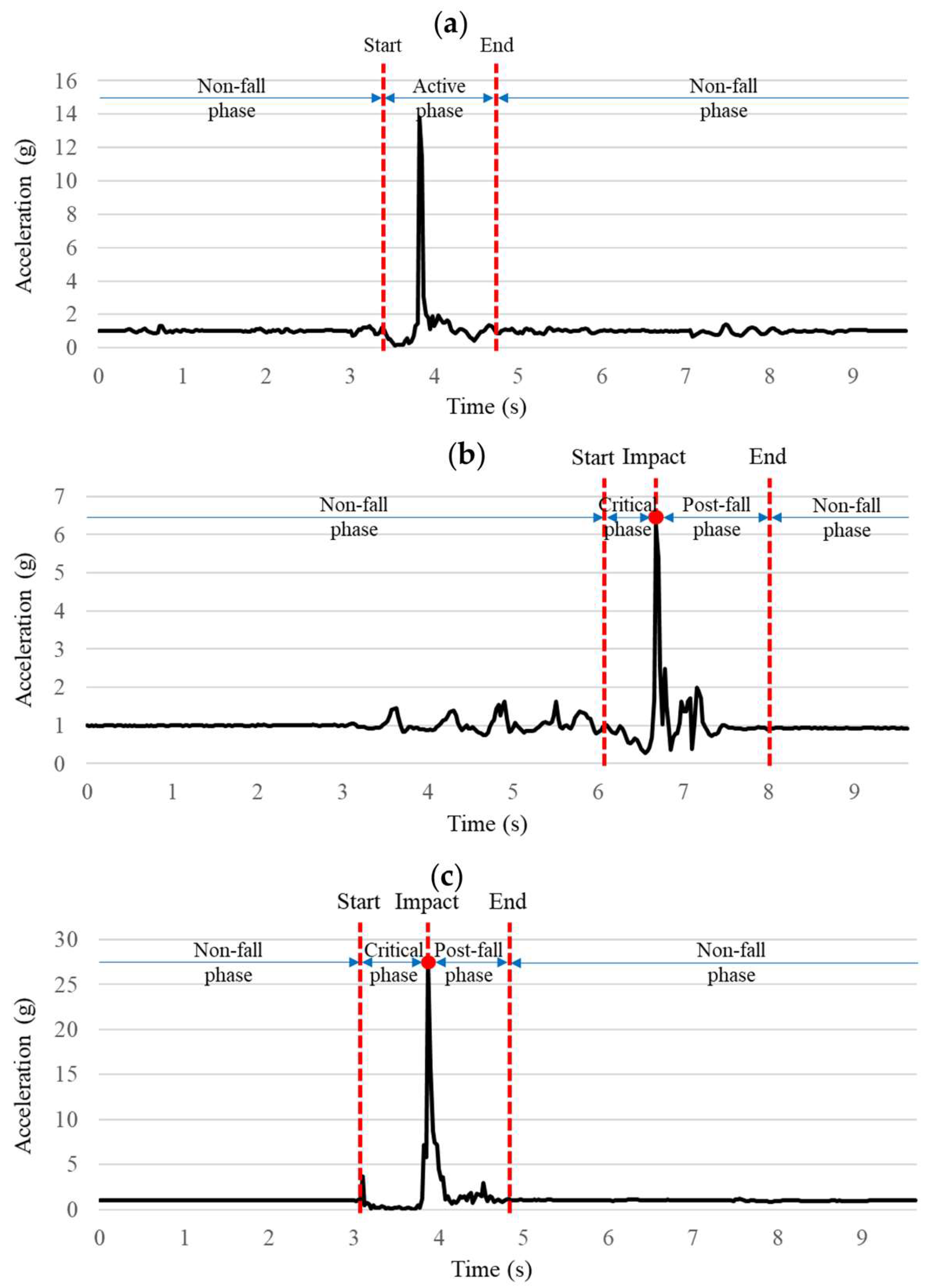

2.2.1. Data Pre-Processing and Labeling

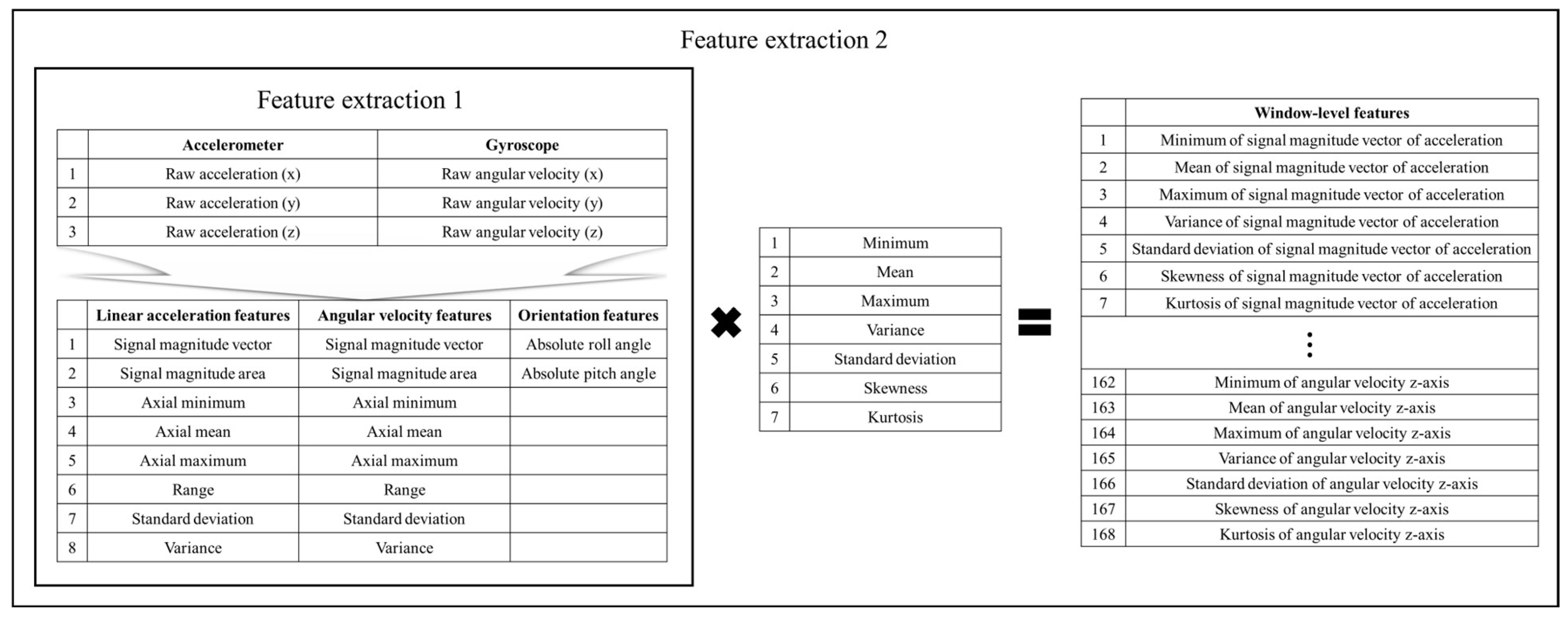

2.2.2. Two-Stage Feature Extraction

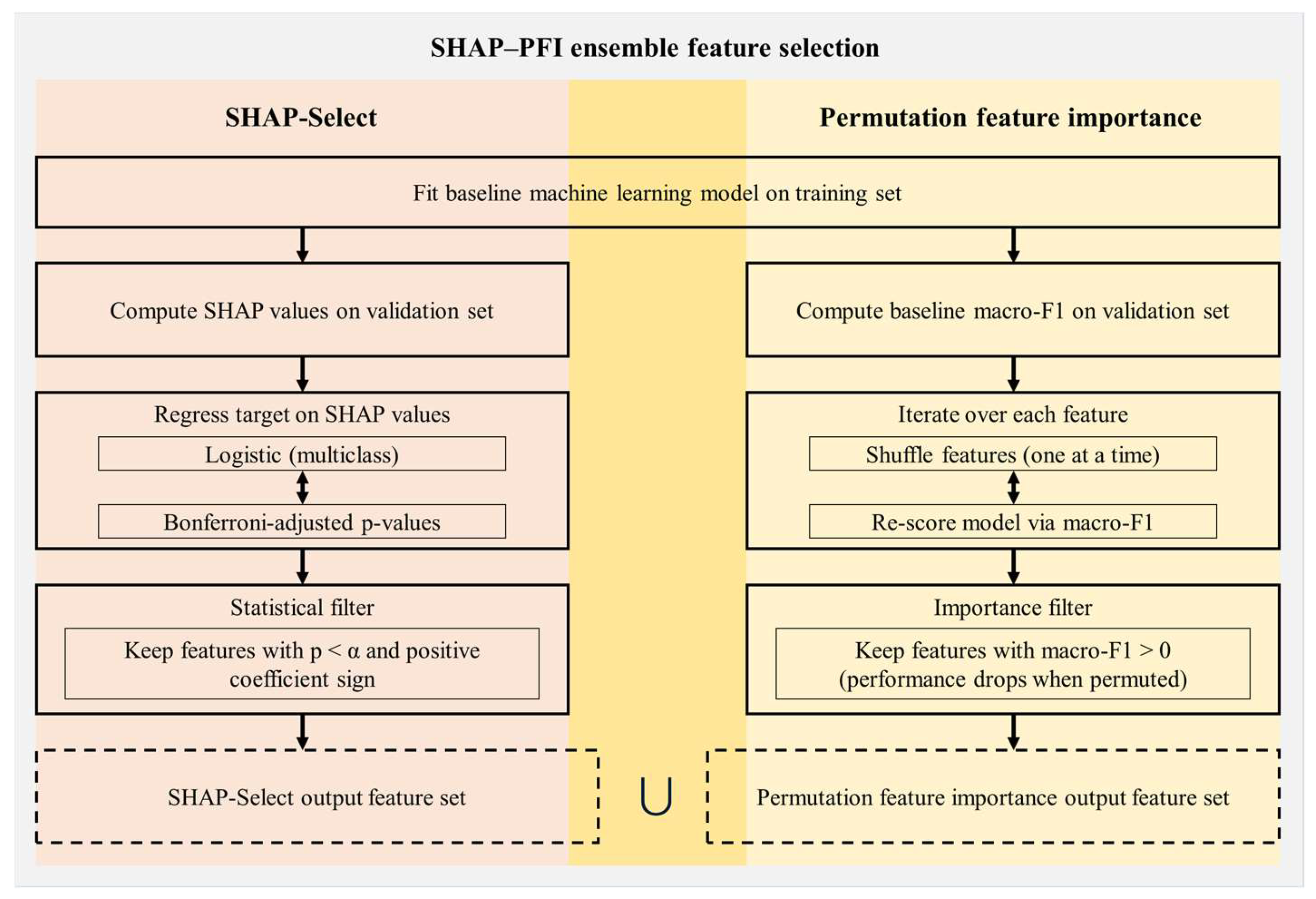

2.2.3. Ensemble Feature Selection

2.2.4. Weighted Machine Learning Models

2.2.5. Performance Measures

(1) Macro accuracy, the classification accuracy averaged across all classes.

(2) Macro sensitivity, which quantifies the model's ability to correctly detect the positive cases of each class.

(3) Macro specificity, which quantifies the model's ability to correctly reject the negative samples of each class.

(4) Macro Matthews correlation coefficient (MCC), selected because it remains informative under class imbalance and integrates all four confusion-matrix terms (TP, TN, FP, FN).

(5) Per-class area under the precision-recall curve (PR-AUC), which summarizes the precision-recall trade-off for each class and therefore gives a robust assessment of classification performance under class imbalance.
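The measures listed above can all be derived from a multiclass confusion matrix plus per-class scores. The sketch below is one possible implementation, not the authors' code. It assumes one-vs-rest counts per class averaged with equal weight (the text does not spell out its averaging scheme), estimates PR-AUC as average precision from continuous class scores, and uses toy counts; the class indices (0 = non-fall, 1 = SLF, 2 = FFH) are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def one_vs_rest_counts(cm: Sequence[Sequence[int]], k: int) -> Tuple[int, int, int, int]:
    """TP, FP, FN, TN for class k; rows = true class, columns = predicted class."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k][j] for j in range(n)) - tp  # truly k, predicted as another class
    fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, truly another class
    tn = total - tp - fn - fp
    return tp, fp, fn, tn


def _ratio(num: float, den: float) -> float:
    # Treat 0/0 as 0 so that an empty class does not raise ZeroDivisionError.
    return num / den if den else 0.0


def macro_metrics(cm: Sequence[Sequence[int]]) -> Dict[str, float]:
    """Equal-weight average of one-vs-rest accuracy, sensitivity, specificity and MCC."""
    per_class: Dict[str, List[float]] = {
        "accuracy": [], "sensitivity": [], "specificity": [], "mcc": []
    }
    for k in range(len(cm)):
        tp, fp, fn, tn = one_vs_rest_counts(cm, k)
        per_class["accuracy"].append(_ratio(tp + tn, tp + fp + fn + tn))
        per_class["sensitivity"].append(_ratio(tp, tp + fn))
        per_class["specificity"].append(_ratio(tn, tn + fp))
        denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
        per_class["mcc"].append(_ratio(tp * tn - fp * fn, denom))
    return {name: sum(vals) / len(vals) for name, vals in per_class.items()}


def average_precision(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Per-class PR-AUC estimated as average precision (y_true: 1 = positive).

    Assumes distinct scores; tied scores are ordered arbitrarily.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits, total = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if y_true[i]:
            hits += 1
            total += hits / rank  # precision at each step where recall increases
    return _ratio(total, sum(y_true))


# Toy 3-class confusion matrix (hypothetical counts, not study data).
toy_cm = [[90, 3, 2], [4, 40, 6], [1, 5, 29]]
print(macro_metrics(toy_cm))
print(average_precision([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1]))
```

Averaging per-class values with equal weight keeps minority classes from being swamped by the majority class, which is the usual motivation for macro metrics on imbalanced fall data; a frequency-weighted average would behave differently.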

3. Results and Discussion

3.1. Hyperparameter Optimization Results

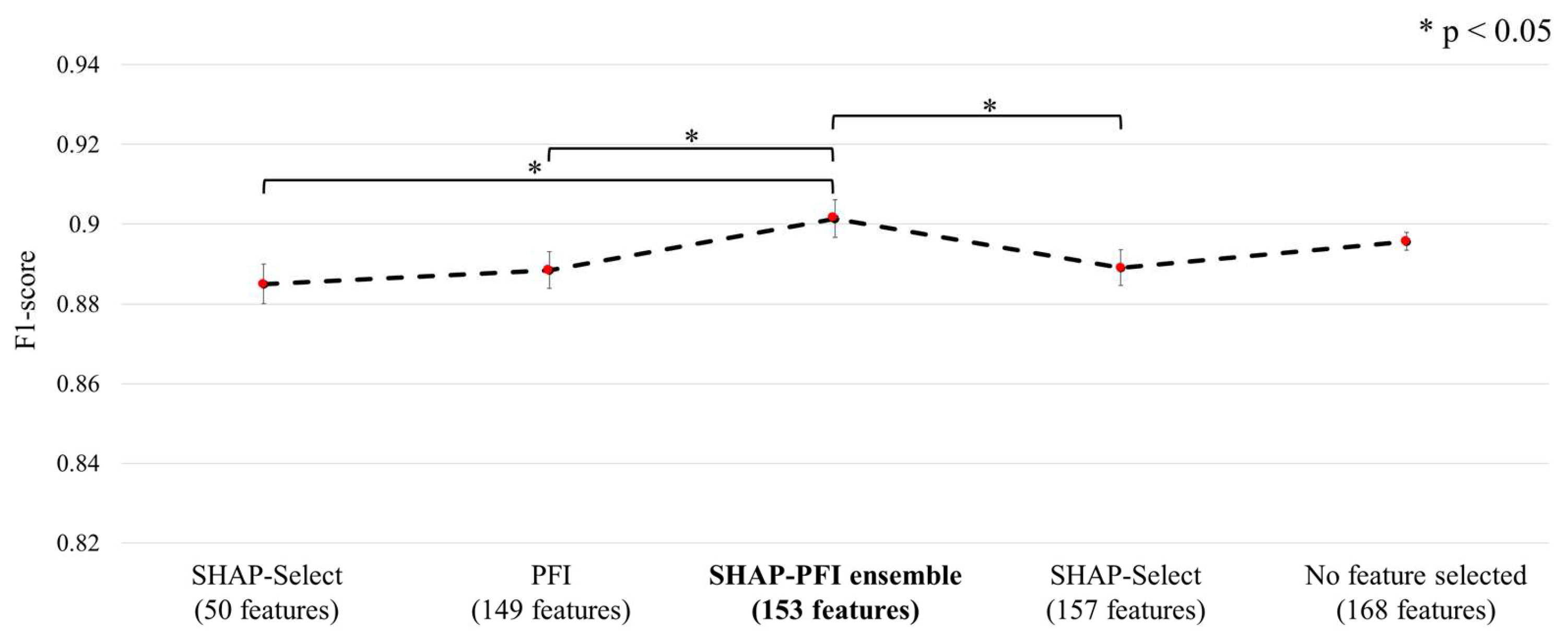

3.2. Comparative Analysis of Feature Selection Methods

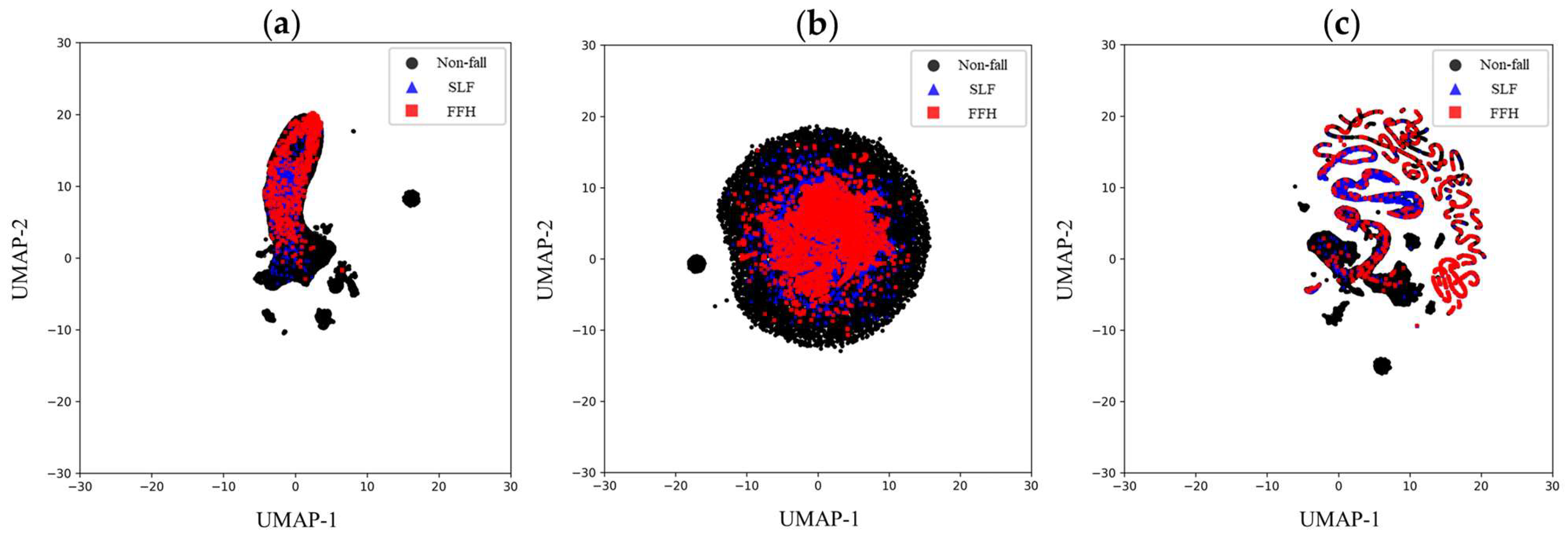

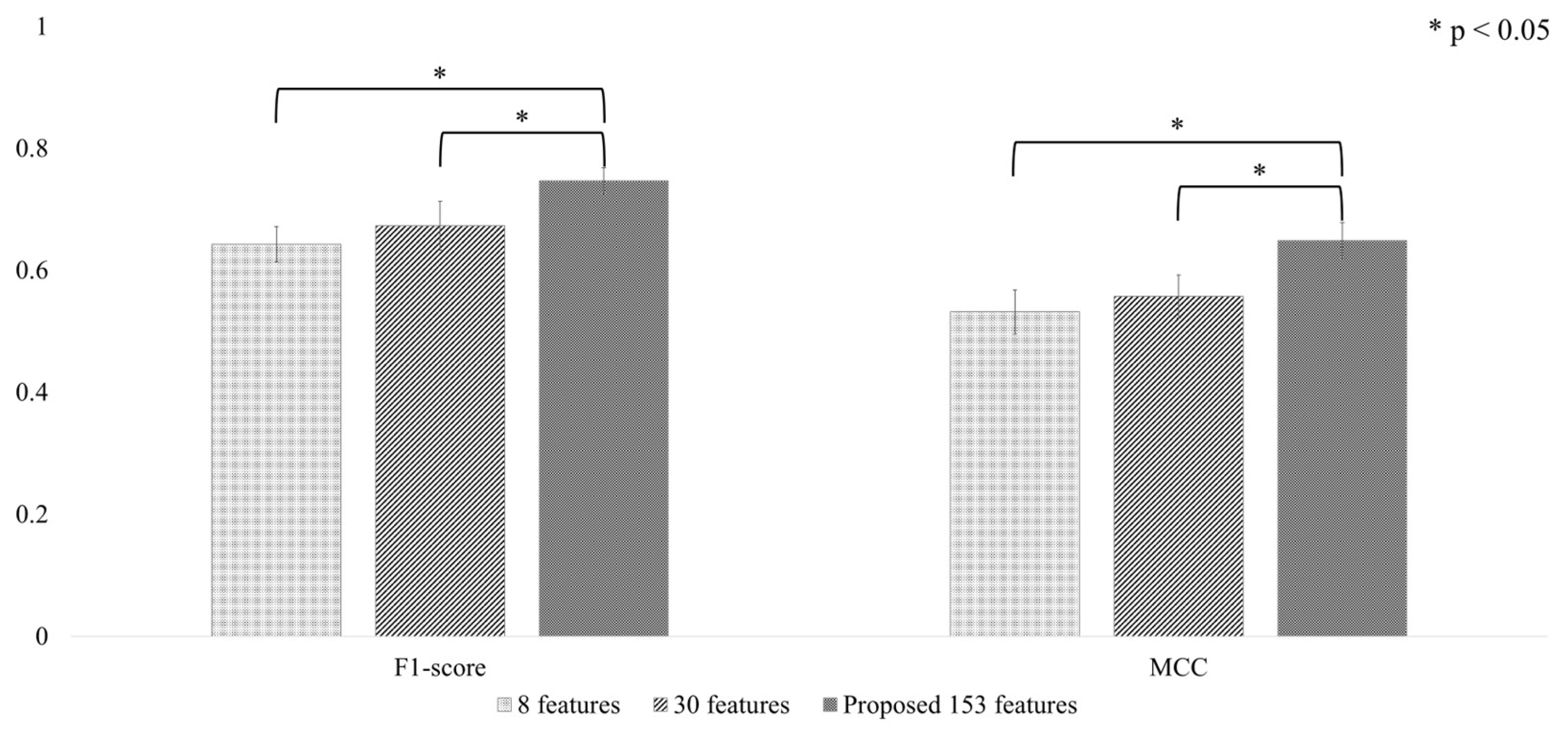

3.3. Comparative Analysis of the Discriminative Capability of Proposed and Previous IMU Feature Sets

3.4. Comparison of Boosting Model Performance

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Hyperparameter | Data Type | Search Range | XGBoost | LightGBM | CatBoost |
|---|---|---|---|---|---|
| Learning rate | float, log-uniform | [0.005–0.30] | 0.298 | 0.020 | 0.092 |
| Number of boosting iterations | integer, uniform | [100–800] | 254 | 760 | 539 |
| Maximum tree depth | integer, uniform | [3–10] | 9 | 10 | 8 |
| Sub-sample ratio of training rows | float, uniform | [0.50–1.00] | 0.959 | 0.894 | — |
| Column sampling ratio per tree | float, uniform | [0.30–1.00] | 0.948 | 0.382 | 0.892 |
| Minimum loss reduction to split | float, uniform | [0–5] | 0.036 | 0.919 | — |
| Minimum child weight (XGBoost) | integer, uniform | [1–10] | 4 | — | — |
| Minimum child weight (LightGBM) | float, log-uniform | [1 × 10⁻³–10] | — | 0.038 | — |
| Maximum number of leaves | integer, uniform | [15–255] | — | 203 | — |
| Bagging temperature | float, uniform | [0–1] | — | — | 0.657 |
| L2 leaf regularization | float, log-uniform | [1 × 10⁻³–10] | — | — | 1.146 |
| L1 regularization coefficient | float, log-uniform | [1 × 10⁻⁶–10] | 0.006 | 0.030 | — |
| L2 regularization coefficient | float, log-uniform | [1 × 10⁻⁶–10] | 1.13 × 10⁻⁵ | 7.47 × 10⁻⁴ | — |
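The search ranges in the hyperparameter table mix uniform and log-uniform distributions. The sketch below draws random configurations from the XGBoost column to make that distinction concrete. It is plain random sampling, not necessarily the optimizer used in the study, and the mapping of table rows to XGBoost scikit-learn argument names (`gamma`, `reg_alpha`, `reg_lambda`, and so on) is our assumption.

```python
import math
import random
from typing import Dict, Tuple

# (distribution, low, high) transcribed from the XGBoost rows of the table.
# ASSUMPTION: keys follow XGBoost's scikit-learn argument names.
XGB_SPACE: Dict[str, Tuple[str, float, float]] = {
    "learning_rate": ("log_float", 0.005, 0.30),
    "n_estimators": ("int", 100, 800),
    "max_depth": ("int", 3, 10),
    "subsample": ("float", 0.50, 1.00),
    "colsample_bytree": ("float", 0.30, 1.00),
    "gamma": ("float", 0.0, 5.0),           # minimum loss reduction to split
    "min_child_weight": ("int", 1, 10),
    "reg_alpha": ("log_float", 1e-6, 10.0),  # L1 regularization
    "reg_lambda": ("log_float", 1e-6, 10.0), # L2 regularization
}


def sample_params(space: Dict[str, Tuple[str, float, float]],
                  rng: random.Random) -> Dict[str, float]:
    """Draw one configuration, honouring uniform vs log-uniform ranges."""
    params: Dict[str, float] = {}
    for name, (kind, low, high) in space.items():
        if kind == "int":
            params[name] = rng.randint(int(low), int(high))  # inclusive bounds
        elif kind == "float":
            params[name] = rng.uniform(low, high)
        elif kind == "log_float":
            # Uniform in log space, so every decade of the range is equally likely.
            value = math.exp(rng.uniform(math.log(low), math.log(high)))
            params[name] = min(high, max(low, value))  # guard against round-off
        else:
            raise ValueError(f"unknown distribution: {kind}")
    return params


rng = random.Random(42)
for params in (sample_params(XGB_SPACE, rng) for _ in range(3)):
    print(params)
```

A log-uniform prior matters for parameters such as the regularization coefficients, whose range spans seven orders of magnitude: sampled uniformly, almost every draw would land above 1 and the small values the optimizer actually selected (for example 1.13 × 10⁻⁵ for XGBoost) would rarely be explored. LightGBM and CatBoost spaces could be added as further dictionaries in the same format.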

| Metric | XGBoost (1) | LightGBM (2) | CatBoost (3) | ANOVA Results | Post Hoc Test |
|---|---|---|---|---|---|
| Accuracy | 0.985 ± 0.001 | 0.984 ± 0.001 | 0.976 ± 0.001 | F = 139.317, p < 0.001 | (1), (2) > (3) |
| Sensitivity | 0.881 ± 0.005 | 0.890 ± 0.007 | 0.907 ± 0.008 | F = 20.732, p < 0.001 | (3) > (2), (1) |
| Specificity | 0.949 ± 0.001 | 0.954 ± 0.003 | 0.962 ± 0.002 | F = 39.958, p < 0.001 | (3) > (2) > (1) |
| F1-Score | 0.901 ± 0.005 | 0.897 ± 0.005 | 0.860 ± 0.007 | F = 82.651, p < 0.001 | (1), (2) > (3) |
| MCC | 0.869 ± 0.005 | 0.864 ± 0.006 | 0.811 ± 0.009 | F = 118.898, p < 0.001 | (1), (2) > (3) |
| PR-AUC (Non-fall) | 0.999 ± 0.000 | 0.999 ± 0.000 | 0.999 ± 0.000 | F = 0.784, p = 0.478 | (1), (2), (3) |
| PR-AUC (SLFs) | 0.953 ± 0.003 | 0.951 ± 0.002 | 0.937 ± 0.005 | F = 30.585, p < 0.001 | (1), (2) > (3) |
| PR-AUC (FFHs) | 0.871 ± 0.004 | 0.872 ± 0.006 | 0.856 ± 0.010 | F = 8.633, p = 0.005 | (2), (1) > (3) |
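The ANOVA column compares the three boosting models on each metric. The sketch below computes a one-way ANOVA F statistic from per-model score lists and, for the three-group case only, its p-value via the closed form for the F(2, d) distribution. ASSUMPTION: a one-way design with five scores per model, giving df = (2, 12); the source does not state this, but it is the design under which the reported F values map onto the reported p-values.

```python
from statistics import fmean
from typing import Sequence, Tuple


def one_way_anova_f(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA across groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean([x for g in groups for x in g])
    group_means = [fmean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def f_pvalue_two_numerator_df(f_stat: float, df_within: int) -> float:
    """Upper-tail p-value of F(2, d): (1 + 2F/d)^(-d/2).

    Exact only when df_between == 2, i.e. exactly three groups (three models).
    """
    return (1.0 + 2.0 * f_stat / df_within) ** (-df_within / 2.0)


# ASSUMED design: 3 models x 5 scores each -> df = (2, 12).
for f_reported in (0.784, 8.633):
    print(f_reported, round(f_pvalue_two_numerator_df(f_reported, 12), 4))
```

Under that assumed design, F = 0.784 gives p ≈ 0.48 and F = 8.633 gives p ≈ 0.005, in line with the table. The pairwise post hoc comparisons (and any multiple-comparison correction) are not reproduced here.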

| Model | Train Time [ms] | Inference Time [ms per Fold] | System Latency [ms per Window] |
|---|---|---|---|
| XGBoost | 43,621 | 22.061 | 1.51 × 10⁻³ |
| LightGBM | 136,783 | 218.965 | 1.50 × 10⁻² |
| CatBoost | 208,539 | 29.288 | 2.01 × 10⁻³ |
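The last two timing columns are related if, as we assume here, per-window latency is the inference time for a fold divided by the number of windows in that fold. The short check below (a hypothetical consistency test, not part of the original analysis) computes the window count each row implies.

```python
# (inference time per fold [ms], system latency per window [ms]) from the timing table.
TIMINGS = {
    "XGBoost": (22.061, 1.51e-3),
    "LightGBM": (218.965, 1.50e-2),
    "CatBoost": (29.288, 2.01e-3),
}


def implied_windows_per_fold(inference_ms_per_fold: float,
                             latency_ms_per_window: float) -> float:
    """Windows per fold implied if latency = inference time per fold / windows per fold."""
    return inference_ms_per_fold / latency_ms_per_window


for model, (per_fold, per_window) in TIMINGS.items():
    n_windows = implied_windows_per_fold(per_fold, per_window)
    print(f"{model}: about {n_windows:,.0f} windows per fold")
```

All three rows imply roughly 14,600 windows per fold (about 14,570 to 14,610, the spread coming from rounding the latency to three significant figures), which is consistent with the models being timed on test folds of the same size. On that reading, LightGBM's per-window latency is about ten times that of XGBoost.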

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yuhai, O.; Cho, Y.; Mun, J.H. Machine Learning-Based Framework for Pre-Impact Same-Level Fall and Fall-from-Height Detection in Construction Sites Using a Single Wearable Inertial Measurement Unit. Biosensors 2025, 15, 618. https://doi.org/10.3390/bios15090618

Yuhai O, Cho Y, Mun JH. Machine Learning-Based Framework for Pre-Impact Same-Level Fall and Fall-from-Height Detection in Construction Sites Using a Single Wearable Inertial Measurement Unit. Biosensors. 2025; 15(9):618. https://doi.org/10.3390/bios15090618

Chicago/Turabian Style: Yuhai, Oleksandr, Yubin Cho, and Joung Hwan Mun. 2025. "Machine Learning-Based Framework for Pre-Impact Same-Level Fall and Fall-from-Height Detection in Construction Sites Using a Single Wearable Inertial Measurement Unit" Biosensors 15, no. 9: 618. https://doi.org/10.3390/bios15090618

APA Style: Yuhai, O., Cho, Y., & Mun, J. H. (2025). Machine Learning-Based Framework for Pre-Impact Same-Level Fall and Fall-from-Height Detection in Construction Sites Using a Single Wearable Inertial Measurement Unit. Biosensors, 15(9), 618. https://doi.org/10.3390/bios15090618