Abstract

To help patients with restricted mobility control a wheelchair freely, this paper presents an eye-movement-controlled wheelchair prototype based on a flexible hydrogel biosensor and a Wavelet Transform-Support Vector Machine (WT-SVM) algorithm. Because rigid metal electrodes have poor deformability and biocompatibility, we propose a flexible hydrogel biosensor made of a conductive HPC/PVA (hydroxypropyl cellulose/polyvinyl alcohol) hydrogel on a flexible PDMS (polydimethylsiloxane) substrate. The biosensor is affixed to the wheelchair user’s forehead to collect electrooculogram (EOG) and strain signals, from which eye movements are recognized. Its low Young’s modulus (286 kPa) and high breathability (water vapor transmission rate of 18 g m−2 h−1) ensure conformal, unobtrusive adhesion to the epidermis. To improve the recognition accuracy of eye movements (straight, upward, downward, left, and right), the WT-SVM algorithm classifies the EOG and strain signals according to their features (amplitude, duration, and interval). The average recognition accuracy reaches 96.3%, enabling precise control of the wheelchair.

1. Introduction

Data from the World Health Organization (WHO) indicate that 75 million people, about 1% of the world’s population, depend on wheelchairs in daily life [1]. Severely disabled people (e.g., ALS patients) cannot operate their wheelchairs manually. It is therefore important to develop new methods that help them control a wheelchair.

Hand gesture control [2,3,4,5] and voice control [6,7,8] have been explored by several researchers. However, the main challenges for hand gesture control are laborious operation and gesture misrecognition [9]; moreover, it is not applicable to people with limited limb movement. Voice commands are susceptible to ambient noise, which greatly reduces their practicality in noisy environments [10]. Multiple studies have demonstrated video-based eye-tracking systems [11,12,13], but these require specific illumination conditions and are expensive, which makes them unsuitable for large-scale deployment [14]. Brain–computer interface (BCI) technology has also been used for human–wheelchair interaction [15,16,17,18]. However, electroencephalogram (EEG) signals are usually acquired with invasive electrodes, which makes implementation difficult and may affect the user’s physical health [19].

Eye movement is a conscious, voluntary behavior, and its direction can be identified by analyzing the electrooculogram (EOG). Several studies have investigated eye-movement control of wheelchairs [20,21,22,23,24,25,26]. EOG is an electrophysiological technique that records the potential difference between the cornea and the retina [27,28,29]. A wireless human–wheelchair interface was demonstrated in [20], in which the EOG signal is measured by a soft electrode prepared by depositing Au on a membrane and transferring it into a specific pattern. Huang et al. placed three metal electrodes on the left eyebrow, left mastoid, and right mastoid, respectively, to record vertical EOG signals [22]. In most of these studies, the electrodes are rigid and have poor biocompatibility, which may cause allergies or irritation during direct contact with the skin.

Signal classification is the basis for identifying the different movement states of the eyeballs. Various algorithms have emerged over the past decade, including the hidden Markov model (HMM) [30,31,32,33], transfer learning [34,35,36,37], and linear classifiers [38,39,40,41]. A hierarchical HMM [33] was used to classify three types of eye movement (fixations, saccades, and smooth pursuits), and the classification result was rated as “good”. However, this model cannot label multiple features simultaneously, which limits classification efficiency. Abdollahpour et al. reported a transfer learning convolutional neural network (TLCNN) and applied it to the classification of an EEG feature set [34]. Because it trains a general classifier rather than an optimal one, its classification accuracy is relatively low.

Linear discriminant analysis (LDA) and a threefold support vector machine (SVM) were used to classify six natural facial expressions; the framework reduced the generation of false labels and increased classification accuracy [38]. Nevertheless, the traditional SVM suffers from complex computation and long running times when handling superimposed, linearly inseparable signals [42,43,44]. On its own, therefore, it is poorly suited to real-time classification of electrophysiological signals.

In this paper, we present an eye-movement-controlled wheelchair prototype based on a flexible hydrogel biosensor with high deformability and excellent biocompatibility. Kalman filtering and a Wavelet Transform-Support Vector Machine (WT-SVM) algorithm are applied to process and classify the EOG and strain signals and thereby distinguish the eye movement states. The algorithm’s eye movement recognition rate reaches 96.3%, which enables high-precision control of the wheelchair.

2. Design and Method

2.1. Human–Wheelchair Interaction

To achieve eye-movement control of a wheelchair, the work comprises three parts: fabrication of a flexible hydrogel biosensor, signal classification, and wheelchair manipulation. The biosensor collects the wheelchair user’s EOG and strain signals. After processing by the peripheral circuit, the signals are fed in digital form to a laptop (Surface Pro 7), where the classification algorithm identifies the eye movement state. Finally, the laptop generates instructions that drive the stepper motors and thus control the wheelchair.

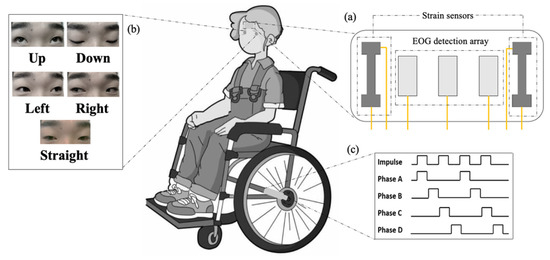

Five eye movement states (up, down, left, right, and straight) correspond to the different mobile modes of the wheelchair: ‘up’ to move forward, ‘down’ to move back, ‘left’ to turn left, ‘right’ to turn right, and ‘straight’ to stay still. The overall framework of the study is presented in Figure 1.
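The correspondence between eye movement states and wheelchair motions amounts to a lookup table. The sketch below is a hypothetical illustration rather than the prototype’s control code: it assumes a two-wheel differential drive in which each stepper motor receives a direction of +1 (forward), -1 (reverse), or 0 (stopped), and the names `COMMANDS` and `wheel_directions` are ours.

```python
from typing import Dict, Tuple

# Hypothetical mapping for a two-wheel differential drive:
# (left motor direction, right motor direction), +1 forward, -1 reverse, 0 stopped.
COMMANDS: Dict[str, Tuple[int, int]] = {
    "up": (1, 1),        # move forward
    "down": (-1, -1),    # move back
    "left": (-1, 1),     # turn left in place
    "right": (1, -1),    # turn right in place
    "straight": (0, 0),  # stay still
}


def wheel_directions(state: str) -> Tuple[int, int]:
    """Return motor directions for a recognized eye movement state.

    Any unrecognized state falls back to 'straight' (stop).
    """
    return COMMANDS.get(state, COMMANDS["straight"])
```

Falling back to a stop command for unrecognized input is a deliberate, conservative choice in this sketch: a misclassification then halts the wheelchair instead of moving it.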

Figure 1.

Eye-movement-controlled wheelchair prototype based on a flexible biosensor. (a) Structure of flexible biosensor. (b) Diverse movement states of eyeballs. (c) Pulse signal for driving stepper motors.

2.2. Fabrication of a Flexible Biosensor

The flexible biosensor consists of three layers: an HPC/PVA (hydroxypropyl cellulose/polyvinyl alcohol) layer sandwiched between two PDMS (polydimethylsiloxane) layers. Because PDMS is dielectric and biocompatible, the PDMS substrate is in direct contact with the epidermis and insulates against electrical interference [45,46]. The HPC/PVA hydrogel membrane serves as the sensing layer that collects the electrophysiological signals.

The PDMS substrate is fabricated as follows: mix the PDMS aqueous dispersion (Shanghai Macklin Biochemical Technology Co., Ltd., Shanghai, China) with a curing agent at a ratio of 10:1 in a flask and stir evenly; let it stand until all bubbles disappear; coat the mixture onto a glass slide; place the glass slide on a heating plate (IKA, C-MAG HP 4); and heat it at 75 °C. After about half an hour, the PDMS film can be detached from the glass slide.

The conductive hydrogel membrane is manufactured as follows. Add 5 mL DMSO (dimethyl sulfoxide, Aladdin Co., Shanghai, China) and 0.5 g HPC (Aladdin Co., Shanghai, China) to 20 mL DI water, and heat the mixture in a 70 °C water bath with constant stirring. After 15 min, add 3 g PVA (Sigma-Aldrich Co., Saint Louis, MO, USA) to the solution, raise the temperature to 85 °C, and continue heating for two hours. Pour the solution into a metal groove and let it cool naturally. Transfer the cooled solution to a refrigerator at −20 °C and take it out after half an hour; once it has warmed to room temperature, return it to the refrigerator. After three such freeze–thaw cycles, the HPC/PVA hydrogel membrane can be peeled off the bottom of the metal groove.

A smaller biosensor is less obtrusive, but it has limited accuracy and sensitivity. To obtain suitable dimensions for the PDMS substrate and hydrogel membrane, we designed an elastic cantilever device according to Equation (1):

$$K = \frac{E W T^{3}}{4 L^{3}} \qquad (1)$$

where K is the elastic constant of the cantilever, E is the tensile modulus, and W, T, and L are the width, thickness, and length of the fabricated film, respectively.
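As a worked example, the sketch below evaluates the spring constant of an end-loaded rectangular cantilever, assuming the standard beam-theory form K = E·W·T³/(4·L³). The inputs are illustrative only: they borrow the substrate footprint and thickness given in the next paragraph (60 × 25 mm², 0.3 mm) and the biosensor’s reported Young’s modulus (286 kPa), which is a simplification because the PDMS substrate and the hydrogel are different materials.

```python
def cantilever_stiffness(e_pa: float, width_m: float, thickness_m: float, length_m: float) -> float:
    """Spring constant (N/m) of an end-loaded rectangular cantilever: K = E*W*T^3 / (4*L^3)."""
    return e_pa * width_m * thickness_m ** 3 / (4.0 * length_m ** 3)


# Illustrative SI inputs: E = 286 kPa, W = 25 mm, T = 0.3 mm, L = 60 mm.
k = cantilever_stiffness(286e3, 25e-3, 0.3e-3, 60e-3)
print(f"K = {k:.3e} N/m")  # K = 2.234e-04 N/m
```

Because K scales with T³ and 1/L³, halving the length raises the stiffness eightfold, so thickness and length dominate the design far more than width does.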

Cut the hydrogel membrane (0.2 mm thick) into rectangular (10 × 10 mm²) and I-shaped (10 × 20 mm²) pieces. Then attach the I-shaped membrane to the left and right sides of the fabricated PDMS substrate (60 × 25 mm², 0.3 mm thick), and affix three rectangular pieces, equally spaced, to its middle. Finally, encapsulate the PDMS substrate and the HPC/PVA membrane with epoxy resin to enhance adhesion [47].

To determine whether the biosensor with these dimensions can adapt to deformation of the epidermis, we studied its load–deflection characteristic (Equation (2)).

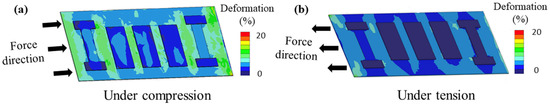

Here, P is the pressure exerted on the biosensor, ν is Poisson’s ratio, and c is the deflection. When strain caused by skin deformation is applied to the biosensor, keeping the deflection within an appropriate range preserves its structural integrity. Using finite element analysis (FEA), we studied the strain distribution of this structure in compression (Figure 2a) and tension (Figure 2b) to verify its high deformability.

Figure 2.

Finite element analysis of flexible biosensor. (a) Compression state. (b) Tensile state.

2.3. Signal Acquisition and Classification

Due to the self-adhesive property and flexibility of the PDMS substrate, the hydrogel biosensor can maintain conformal contact with the dimpled epidermis. In order to collect the horizontal EOG signal and the strain signal generated from the epidermis deformation, the sensor is affixed to the middle of the user’s forehead.

When the eyes move to the left, the right eyeball approaches the inner canthus and transmits a positive potential (500–600 μV) to it, which appears as an upward spike in the EOG waveform [48]. Moving the eyes to the right transmits a negative potential, producing a downward spike in the EOG waveform. When the eyes move upward or downward, the forehead epidermis is compressed or stretched accordingly: when stretched, the strain sensor elongates and its resistance increases; when compressed, its resistance decreases. The strain signal thus complements the EOG measurement, and eye movements can be identified by analyzing the changes in potential difference and resistance [49].
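The sign logic above can be condensed into a simple decision rule. The sketch below is a hypothetical, threshold-based illustration and not the WT-SVM classifier used in this work. It assumes a baseline-corrected EOG deflection in microvolts and a relative resistance change ΔR/R0 from the strain channel, assumes that upward gaze compresses the forehead and downward gaze stretches it, and uses threshold values chosen purely for illustration.

```python
def rule_based_state(eog_uv: float, delta_r_rel: float,
                     eog_thr_uv: float = 250.0, strain_thr: float = 0.02) -> str:
    """Map one EOG deflection and one strain reading to a gaze state.

    eog_uv: signed EOG deflection in microvolts (positive = upward spike).
    delta_r_rel: relative resistance change of the strain channel, dR/R0.
    Both thresholds are hypothetical placeholders, not values from the paper.
    """
    # Horizontal gaze: an upward (positive) EOG spike means a leftward movement,
    # a downward (negative) spike means a rightward movement.
    if eog_uv >= eog_thr_uv:
        return "left"
    if eog_uv <= -eog_thr_uv:
        return "right"
    # Vertical gaze (assumed direction): compression lowers resistance (up),
    # stretching raises it (down).
    if delta_r_rel <= -strain_thr:
        return "up"
    if delta_r_rel >= strain_thr:
        return "down"
    return "straight"
```

Fixed thresholds like these are fragile across users and sessions, which is one motivation for the learned classifier in Section 2.3.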

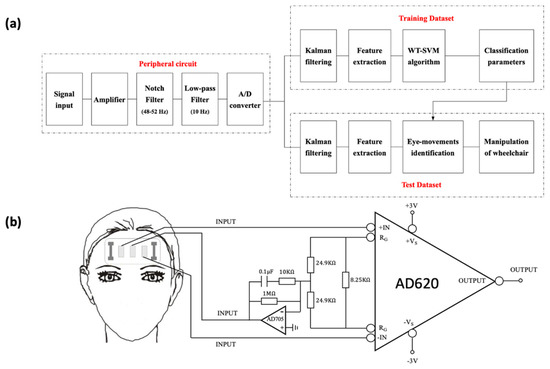

The flow chart of signal acquisition and classification is shown in Figure 3a. Because electrophysiological signals are weak and heavily contaminated by interference [50], we designed a processing circuit composed of an instrumentation amplifier (Figure 3b), a notch filter, a low-pass filter, and an A/D conversion module. The instrumentation amplifier amplifies the signal without reducing the SNR (signal-to-noise ratio) [51]. Since the mains frequency is 50 Hz, a notch filter with a 48–52 Hz stop band removes power-line interference. The energy of the EOG signal is concentrated below 10 Hz, so a low-pass filter removes higher-frequency noise. Finally, the A/D conversion module converts the analog signal into a digital signal before it is input to the laptop.
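The analog notch and low-pass stages have simple digital counterparts, which can be handy for checking recorded data in software. The following sketch is not the circuit used here. It assumes a sampling rate `fs`, implements the notch as a second-order IIR section using the widely used RBJ audio-EQ cookbook formulas with Q = 50/4 = 12.5 to approximate the 48–52 Hz stop band, and follows it with a single-pole low-pass at 10 Hz. Only NumPy is used, so the filter equations stay visible.

```python
import numpy as np


def notch_coeffs(f0, q, fs):
    """Second-order IIR notch (RBJ cookbook form), normalized so that a[0] == 1."""
    w0 = 2.0 * np.pi * f0 / fs
    alpha = np.sin(w0) / (2.0 * q)
    b = np.array([1.0, -2.0 * np.cos(w0), 1.0])
    a = np.array([1.0 + alpha, -2.0 * np.cos(w0), 1.0 - alpha])
    return b / a[0], a / a[0]


def biquad(x, b, a):
    """Apply a second-order IIR section (direct form I) sample by sample."""
    y = np.zeros(len(x))
    x1 = x2 = y1 = y2 = 0.0
    for n, xn in enumerate(x):
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y[n] = yn
    return y


def lowpass_1st(x, fc, fs):
    """Single-pole IIR low-pass, the discrete counterpart of an RC filter."""
    dt = 1.0 / fs
    rc = 1.0 / (2.0 * np.pi * fc)
    alpha = dt / (rc + dt)
    y = np.zeros(len(x))
    state = 0.0
    for n, xn in enumerate(x):
        state += alpha * (xn - state)
        y[n] = state
    return y


def condition(x, fs, mains_hz=50.0, stop_bw_hz=4.0, cutoff_hz=10.0):
    """Notch out mains interference, then low-pass at the EOG band edge."""
    b, a = notch_coeffs(mains_hz, mains_hz / stop_bw_hz, fs)
    return lowpass_1st(biquad(x, b, a), cutoff_hz, fs)
```

A first-order low-pass rolls off gently (about 0.2× gain at 50 Hz for a 10 Hz cutoff), so the notch still does most of the work against mains pickup. A steeper analog or digital low-pass may be preferable in practice.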

Figure 3.

Processing of EOG and strain signals. (a) Flow chart of signal acquisition and classification. (b) Instrumentation amplifier.

To further improve the SNR, a Kalman filtering algorithm is applied; its principle is as follows. The predicted value of the signal (Equation (3)) is obtained from the estimate at the previous time step. The error covariance matrix of the prediction (Equation (4)) describes the expected difference between the predicted value and the true value. To reduce this error, the Kalman gain (Equation (5)) is computed and continuously corrected during iteration. Using the predicted error covariance and the Kalman gain, the estimate of the true signal value (Equation (6)) is calculated. Finally, the error covariance between the estimated and true values (Equation (7)) is updated and used in the next iteration, progressively improving the filtering.
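For a single signal channel, the five steps reduce to a scalar recursion. The sketch below assumes a random-walk state model (state transition and observation gains both equal to 1). The process-noise variance `q` and measurement-noise variance `r` are hypothetical tuning parameters, not values reported in this work.

```python
import numpy as np


def kalman_1d(z, q=1e-4, r=1e-2, x0=0.0, p0=1.0):
    """Scalar Kalman filter with a random-walk state model.

    z: sequence of noisy measurements.
    q: process-noise variance (how fast the underlying signal may drift).
    r: measurement-noise variance.
    x0, p0: initial estimate and its error variance.
    """
    x, p = x0, p0
    out = np.empty(len(z))
    for k, zk in enumerate(z):
        x_pred = x                          # predict from the previous estimate (cf. Eq. (3))
        p_pred = p + q                      # predicted error covariance (cf. Eq. (4))
        gain = p_pred / (p_pred + r)        # Kalman gain (cf. Eq. (5))
        x = x_pred + gain * (zk - x_pred)   # correct with the measurement residual (cf. Eq. (6))
        p = (1.0 - gain) * p_pred           # updated error covariance for the next step (cf. Eq. (7))
        out[k] = x
    return out
```

Raising `q` relative to `r` lets the filter track fast EOG transitions more closely, at the cost of weaker smoothing.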

After Kalman filtering, the WT-SVM algorithm classifies the signals based on their features (amplitude, duration, and interval). The filtered signal can be regarded as a superposition of the strain signal and the EOG signal. Because the two kinds of signals have distinct characteristics, they can be separated by thresholding the wavelet decomposition coefficients [52,53]. In wavelet decomposition, the signal is split into low-frequency and high-frequency parts, and at each subsequent level the low-frequency part is further split into a higher-order low-frequency part and a high-frequency part [54]. The N-order and (N + 1)-order low-frequency signals are combined to form the wavelet basis function, and the signals are then reconstructed.
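The decompose, threshold, and reconstruct procedure can be sketched with the Haar wavelet, the simplest orthogonal wavelet. The wavelet family, number of levels, and threshold used in this work are not stated, so `levels` and `thr` below are hypothetical parameters. At each level only the current low-frequency (approximation) part is split again. Detail coefficients whose magnitude reaches the threshold are reconstructed as one component, and everything else forms the other. Because the transform is linear and orthogonal, the two components sum exactly to the input.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)


def haar_step(x):
    """One Haar analysis step: split x into approximation (low) and detail (high) halves."""
    x = np.asarray(x, dtype=float)
    return (x[0::2] + x[1::2]) / SQRT2, (x[0::2] - x[1::2]) / SQRT2


def haar_inverse_step(approx, detail):
    """Exact inverse of haar_step."""
    x = np.empty(2 * len(approx))
    x[0::2] = (approx + detail) / SQRT2
    x[1::2] = (approx - detail) / SQRT2
    return x


def wavedec(x, levels):
    """Multi-level decomposition; only the low-frequency part is split again at each level."""
    if len(x) % (2 ** levels):
        raise ValueError("signal length must be divisible by 2**levels")
    approx, details = np.asarray(x, dtype=float), []
    for _ in range(levels):
        approx, d = haar_step(approx)
        details.append(d)  # details[0] is the finest (highest-frequency) band
    return approx, details


def waverec(approx, details):
    """Reconstruct from the coarsest approximation and the list of detail bands."""
    for d in reversed(details):
        approx = haar_inverse_step(approx, d)
    return approx


def split_by_threshold(x, levels, thr):
    """Hard-threshold detail coefficients to split x into two additive components.

    'fast' is rebuilt only from detail coefficients with |c| >= thr;
    'slow' is the remainder (approximation plus small details).
    """
    approx, details = wavedec(x, levels)
    large = [np.where(np.abs(d) >= thr, d, 0.0) for d in details]
    fast = waverec(np.zeros_like(approx), large)
    slow = np.asarray(x, dtype=float) - fast
    return slow, fast
```

Libraries such as PyWavelets provide smoother wavelet families and soft thresholding. The Haar version is used here only because each step is a single line.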

The reconstructed signal data are divided into a training set and a test set. The five typical eye movement states (up, down, left, right, and straight) correspond to five types of signals. Since the SVM is a binary classifier, we adopt the “one-against-one” strategy and train ten classifiers, one for each pair of states. Because the signals are not linearly separable, the SVM classifiers use the Gaussian kernel function (Equation (8)) to map the five types of signals into a high-dimensional space in which they can be distinguished.
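The one-against-one scheme and the Gaussian kernel can be illustrated without a full SVM solver. In the sketch below the kernel takes the standard form K(x_i, x_j) = exp(-||x_i - x_j||² / (2σ²)), `itertools.combinations` enumerates the C(5, 2) = 10 class pairs, and the predicted state is the one that wins the most pairwise contests. To keep the example short, each pairwise decision is a hypothetical stand-in (`kernel_mean_decision`) that compares mean kernel similarity to the two classes’ training samples. It is not an SVM. In practice each pair would be a trained binary SVM; for example, scikit-learn’s `SVC(kernel="rbf")` handles multiclass problems with one-vs-one internally.

```python
import itertools

import numpy as np

STATES = ["straight", "up", "down", "left", "right"]

# One binary decision per unordered pair of classes: 10 pairs for 5 classes.
PAIRS = list(itertools.combinations(range(len(STATES)), 2))


def gaussian_kernel(xi, xj, sigma=1.0):
    """Gaussian (RBF) kernel exp(-||xi - xj||^2 / (2 sigma^2))."""
    diff = np.asarray(xi, dtype=float) - np.asarray(xj, dtype=float)
    return float(np.exp(-np.dot(diff, diff) / (2.0 * sigma ** 2)))


def kernel_mean_decision(x, pos, neg, sigma):
    """Hypothetical stand-in for a trained binary SVM (no support vectors are learned).

    Positive when x is, on average, more kernel-similar to `pos` than to `neg`.
    """
    s_pos = np.mean([gaussian_kernel(x, p, sigma) for p in pos])
    s_neg = np.mean([gaussian_kernel(x, n, sigma) for n in neg])
    return s_pos - s_neg


def ovo_predict(x, train, sigma=1.0):
    """train maps class index -> list of feature vectors; returns the majority-vote state."""
    votes = np.zeros(len(STATES), dtype=int)
    for i, j in PAIRS:
        winner = i if kernel_mean_decision(x, train[i], train[j], sigma) >= 0 else j
        votes[winner] += 1
    return STATES[int(np.argmax(votes))]  # ties go to the lower class index
```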

Each signal is described by its characteristic (feature) values, each with a corresponding weight. The SVM classifier finds appropriate weights and constrains the weighted feature values of each signal (Equation (9)) to a specific interval, so that the four directional states (up, down, left, and right) can be distinguished. If the weighted value falls exactly on the boundary of the interval, the gaze direction is straight.

3. Experimental Results Analysis

3.1. Performance of Flexible Hydrogel Biosensor

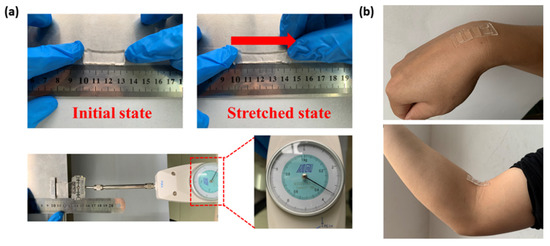

We conducted a series of experiments to verify that the biosensor is suitable for continuous electrophysiological signal monitoring. The mechanical performance of the biosensor is reflected in its structural flexibility, so we studied the relationship between the tensile force applied to the biosensor and its deformation. Metal clips clamped the two ends of the membrane: one end was fixed and aligned to a mark on a steel ruler, and the other was connected to a tension gauge (NK-10, Hongkong Aigu Instrument Co., Ltd., Hong Kong, China) (Figure 4a). The biosensor’s mechanical properties allow it to follow the deformation of the epidermis without structural damage (Figure 4b).

Figure 4.

Performance test of the flexible hydrogel biosensor. (a) Tensile test. (b) Biosensor–epidermis adhesion.

In further experiments, Young’s modulus was measured on a tensile tester (DHY-2, Minsks Testing Equipment Co., Ltd., Xi'an, China). The biosensor was clamped to the platform of the tester and a tensile force was applied through clips. From the measured force, the Young’s modulus of the biosensor was obtained using Equation (10).
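Equation (10) is presumably the usual ratio of tensile stress to tensile strain, E = (F/A)/(ΔL/L0). The sketch below assumes that form. Rather than using a single reading, it fits the slope of stress against strain by least squares over several force readings, which makes it less sensitive to one noisy reading. The specimen dimensions and readings in the test are synthetic.

```python
import numpy as np


def youngs_modulus(forces_n, elongations_m, width_m, thickness_m, gauge_length_m):
    """Estimate Young's modulus (Pa) as the slope of stress versus strain.

    Assumes the readings lie in the initial, approximately linear region.
    """
    area = width_m * thickness_m                                       # cross-section A (m^2)
    stress = np.asarray(forces_n, dtype=float) / area                  # F / A (Pa)
    strain = np.asarray(elongations_m, dtype=float) / gauge_length_m   # dL / L0 (dimensionless)
    slope, _intercept = np.polyfit(strain, stress, 1)                  # least-squares line
    return float(slope)
```

Because the force-deformation curve in Figure 5a becomes nonlinear at larger forces, only the initial segment should be passed to such a fit.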

To characterize the electrical behavior of the biosensor, the voltammetric (voltage–current) method was used to determine its resistance and conductivity [50]. Both ends of the biosensor were fixed, and a voltage was applied through a power supply (UTP1306S, Unitech Technology Co., Ltd., Dongguan, China). The biosensor was then stretched to different lengths, and the corresponding current and voltage were recorded. From the measured width and thickness of the biosensor, the conductivity was calculated using Equation (11).
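Assuming Equation (11) combines Ohm’s law with the specimen geometry, σ = L/(R·W·T) with R = U/I, the conductivity at each stretch length can be computed as in the sketch below. Length, width, and thickness should be the values measured at that stretch. The numbers in the test are illustrative only.

```python
def conductivity(voltage_v, current_a, length_m, width_m, thickness_m):
    """Conductivity (S/m) of a rectangular strip: sigma = L / (R * W * T), with R = U / I."""
    resistance = voltage_v / current_a                      # Ohm's law (ohm)
    return length_m / (resistance * width_m * thickness_m)  # S/m
```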

To ensure that the biosensor is comfortable to wear, we investigated its breathability. Three glass bottles were filled with the same amount of water: one was left open, one had its mouth covered with the biosensor, and one was covered with a traditional dry electrode. Evaporation of water through the biosensor mimics the evaporation of sweat. After several hours, the breathability of the biosensor was evaluated from the water loss.
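Water loss is typically converted to a water vapor transmission rate (WVTR) by normalizing it by the exposed area and the test duration, WVTR = Δm/(A·t). The minimal sketch below assumes a circular bottle mouth. The helper names and test values are hypothetical.

```python
import math


def mouth_area(diameter_m):
    """Area (m^2) of a circular bottle mouth."""
    return math.pi * diameter_m ** 2 / 4.0


def wvtr(mass_loss_g, area_m2, duration_h):
    """Water vapor transmission rate (g m^-2 h^-1): mass lost per unit area per unit time."""
    return mass_loss_g / (area_m2 * duration_h)
```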

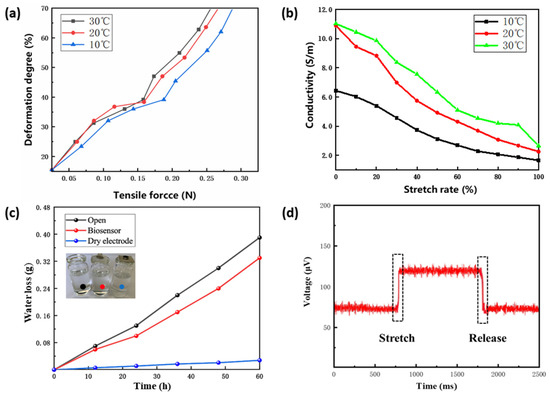

Figure 5a presents the relationship between tensile force and deformation. At first, the deformation increases slowly with tensile force; once the force reaches a certain value, the biosensor deforms markedly owing to changes in its internal structure. Furthermore, at lower temperatures, the same tensile force causes less deformation. At 30 °C, a tensile force of 0.07 N produced a deformation of approximately 20%, close to the maximum deformation human skin can sustain without tearing [55]. Inspection of the biosensor surface under a microscope (LIOO, S600T) revealed no obvious structural fractures. The measured Young’s modulus of the biosensor is 286 kPa, low enough to accommodate skin deformation.

Figure 5.

Characterization measurements. (a) Mechanical behavior. (b) Electrical behavior. (c) Comparison of breathability. (d) Response time.

Regarding electrical properties, Figure 5b shows the conductivity of the biosensor at different tensile strains. Stretching the biosensor increases its resistance and reduces its conductivity, and the decrease is most pronounced for strains of 0–20%. Higher temperature intensifies ion movement inside the hydrogel membrane, which explains why the conductivity at 20 °C and 30 °C is significantly greater than at 10 °C.

Conformal contact between the biosensor and the epidermis reduces contact resistance. We measured the skin contact resistance of the proposed biosensor and of an Ag/AgCl electrode separately (26 °C, 60% humidity). Two electrodes were attached to the forehead, connected to the same AC signal source, and led out to the input of an instrumentation amplifier. The AC signal had an amplitude of 100 μA and a fixed frequency of 100 Hz, so the skin contact resistance could be obtained from the voltage difference. The skin contact resistance of the flexible biosensor is 39.6 kΩ, lower than that of the Ag/AgCl electrode (43.2 kΩ).

In the breathability experiment, the flexible biosensor showed a water vapor transmission rate (WVTR) of 18 g m−2 h−1 (Figure 5c), close to that of the epidermis (20 g m−2 h−1). This indicates that the biosensor is highly breathable and will not cause discomfort when laminated on the epidermis. In addition, the biosensor exhibits a high sensitivity of 7.8 mV·N−1 and a fast response time of 103 ms (Figure 5d).

The ductility of the sensor is key to maintaining conformal contact with the skin during long-term monitoring. Table 1 compares the performance of the flexible biosensor with that of sensors made from other materials, which explains our choice of HPC/PVA for the flexible biosensor.

Table 1.

A summary of sensor’s characterization. CNT—carbon nanotube; PEDOT:PSS—poly(3,4-ethylenedioxythiophene):poly(styrenesulfonate).

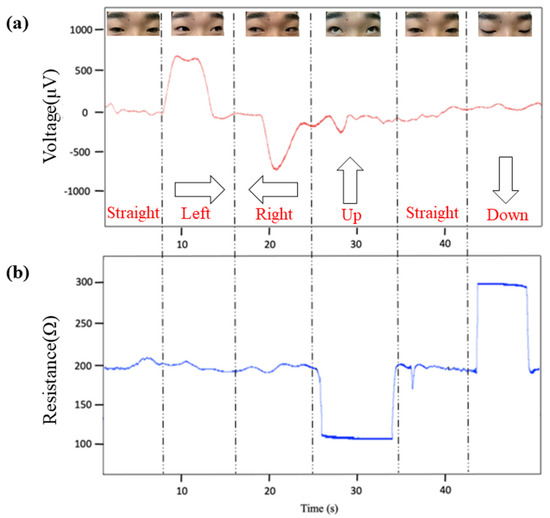

3.2. Eye Movements Identification

The waveforms of EOG and strain signals corresponding to various eye movements are depicted in Figure 6. To determine the recognition accuracy of the WT-SVM algorithm for eye movements, we collected 600 sets of eye movement signals from 30 volunteers with normal vision.

Figure 6.

Presentation of the electrophysiological signals in diverse eye movement states. (a) EOG signal. (b) Strain signal.

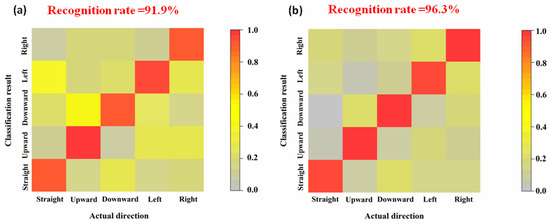

We recorded the actual states and the states identified by the algorithm and constructed a 5 × 5 confusion matrix. Each entry is the number of times a particular actual state was recognized as a given state, so the diagonal entries count correct recognitions. The correct recognition rate of each state (straight, upward, downward, left, and right) and the overall accuracy are calculated using Equations (12) and (13), respectively.
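Assuming rows index the actual state and columns the recognized state, Equations (12) and (13) presumably correspond to the per-state rate a_ii / Σ_j a_ij and the overall accuracy Σ_i a_ii / Σ_ij a_ij. The sketch below computes both. The matrix in the test is made up and is not the data behind Figure 7.

```python
import numpy as np

STATES = ("straight", "up", "down", "left", "right")


def recognition_rates(confusion):
    """confusion[i, j] = number of trials of actual state i recognized as state j."""
    m = np.asarray(confusion, dtype=float)
    per_state = np.diag(m) / m.sum(axis=1)  # correct / total, for each actual state
    overall = np.trace(m) / m.sum()         # all correct / all trials
    return dict(zip(STATES, per_state)), overall
```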

The classification and recognition results for the signals collected by the Ag/AgCl electrode and the flexible hydrogel biosensor are presented in Figure 7.

Figure 7.

Recognition accuracy of eye movement states. (a) Ag/AgCl electrode. (b) Flexible hydrogel biosensor.

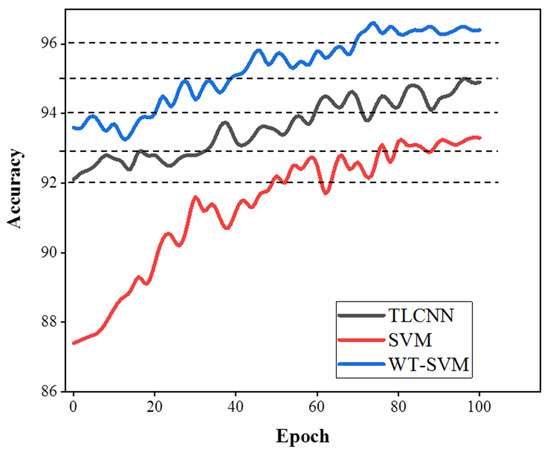

As shown in Figure 8, the accuracy of the WT-SVM algorithm reached 93.6% in the first epoch. Compared with TLCNN and the traditional SVM method, it is fully trained and reaches a stable accuracy in fewer epochs, indicating that the WT-SVM results for eye movement recognition are reproducible.

Figure 8.

Accuracy comparison of classification algorithms (TLCNN, SVM, WT-SVM).

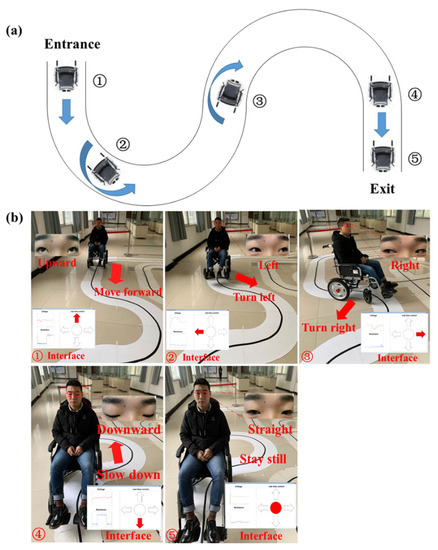

3.3. On-Site Experiment

To examine the practicability of the eye-movement-controlled wheelchair prototype, we built a bend (Figure 9a) in an open field. Ten volunteers took part in the test after being told how to control the wheelchair through eye movements. After an average of half an hour of practice, they could maneuver the wheelchair as intended.

Figure 9.

On-site experiment of the eye-movement-controlled wheelchair prototype. (a) Route map of the bend. (b) Diverse eye movement states and corresponding wheelchair movement modes.

To pass through the bend without collision, the volunteer first looks upward to drive the wheelchair forward. At the turning point, gazing left or right turns the wheelchair. Finally, at the exit, the volunteer looks downward to slow the wheelchair gradually until it stops (Figure 9b). Nine of the ten volunteers successfully maneuvered the wheelchair through the bend, which demonstrates a degree of usability of the bioelectronic system.

4. Conclusions

This work presents a new approach to eye-movement control of wheelchairs based on the combined analysis of EOG and strain signals. Owing to its structural flexibility, the hydrogel biosensor adheres well to the dimpled epidermis, which allows it to collect electrophysiological signals accurately. Compared with the rigid Ag/AgCl electrode, the flexible biosensor is more biocompatible and less likely to cause skin irritation. Kalman filtering and WT-SVM algorithms are used to process and classify the signal data. This soft bioelectronic system achieves 96.3% accuracy in eye movement recognition, higher than the traditional rigid system (91.9%). The proposed eye movement control method may also find application in areas such as industrial control and AR/VR.

Author Contributions

Conceptualization, X.W. and F.D.; methodology, X.W. and Y.X.; writing—original draft preparation, Y.X. and F.D.; investigation, Y.C.; data curation, H.Z. and Y.C.; writing—review and editing, Y.X., X.W. and Y.C.; project administration, H.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant number: 52005182) and the Science and Technology Research Project of Jiangxi Provincial Department of Education (grant number: 180308).

Institutional Review Board Statement

Ethical review and approval were waived for this study because the experiment was non-invasive and had no impact on participants’ physical or mental health.

Informed Consent Statement

Informed consent was obtained from all participants involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Disabled People in the World in 2019: Facts and Figures. Available online: https://www.inclusivecitymaker.com/disabled-people-in-the-world-in-2019-facts-and-figures/ (accessed on 25 February 2020).

- Kundu, A.S.; Mazumder, O.; Lenka, P.K.; Bhaumik, S. Hand Gesture Recognition Based Omnidirectional Wheelchair Control Using IMU and EMG Sensors. J. Intell. Robot. Syst. 2018, 91, 1–13.

- Jha, P.; Khurana, P. Hand Gesture Controlled Wheelchair. Int. J. Sci. Technol. Res. 2016, 9, 243–249.

- Yassine, R.; Makrem, M.; Farhat, F. Intelligent Control Wheelchair Using a New Visual Joystick. J. Healthc. Eng. 2018, 2018, 1–20.

- Lopes, J.; Sim, O.M.; Mendes, N.; Safeea, M.; Afonso, J.; Neto, P. Hand/arm Gesture Segmentation by Motion Using IMU and EMG Sensing. Procedia Manuf. 2017, 11, 107–113.

- Neubert, S.; Thurow, K.; Stoll, N.; Ruzaij, M.F. Hybrid Voice Controller for Intelligent Wheelchair and Rehabilitation Robot Using Voice Recognition and Embedded Technologies. J. Adv. Comput. Intell. Intell. Inform. 2016, 20, 615–622.

- Voznenko, T.I.; Chepin, E.V.; Urvanov, G.A. The Control System Based on Extended BCI for a Robotic Wheelchair. Procedia Comput. Sci. 2018, 123, 522–527.

- Nishimori, M.; Saitoh, T.; Konishi, R. Voice controlled intelligent wheelchair. In Proceedings of the SICE Annual Conference, Takamatsu, Japan, 17–20 September 2007.

- Chahal, B.M. Microcontoller Based Gesture Controlled Wheelchair Using Accelerometer. Int. J. Eng. Sci. Res. Technol. 2014, 3, 1065–1070.

- Srinivasan, A.; Vinoth, T.R.; Ravinder, R.; Mosses, S.P.; Kumar, Y. Voice Controlled Wheel Chair with Intelligent Stability. J. Comput. Theor. Nanosci. 2020, 17, 3689–3693.

- Eid, M.A.; Giakoumidis, N.; Saddik, A.E. A Novel Eye-Gaze-Controlled Wheelchair System for Navigating Unknown Environments: Case Study With a Person With ALS. IEEE Access 2016, 4, 558–573.

- Meena, Y.K.; Cecotti, H.; Wong-Lin, K.F.; Prasad, G. A multimodal interface to resolve the Midas-Touch problem in gaze controlled wheelchair. In Proceedings of the Engineering in Medicine & Biology Society, Jeju, Korea, 11–15 July 2017.

- Dahmani, M.; Chowdhury, M.; Khandakar, A.; Rahman, T.; Kiranyaz, S. An Intelligent and Low-Cost Eye-Tracking System for Motorized Wheelchair Control. Sensors 2020, 20, 3936.

- Larrazabal, A.J.; Cena, C.; Martínez, C. Video-oculography eye tracking towards clinical applications: A review. Comput. Biol. Med. 2019, 108, 57–66.

- Nobuaki, K.; Masahiro, N. BCI-based control of electric wheelchair using fractal characteristics of EEG. IEEJ Trans. Electr. Electron. Eng. 2018, 13, 1795–1803.

- Shahin, M.K.; Tharwat, A.; Gaber, T.; Hassanien, A.E. A Wheelchair Control System Using Human-Machine Interaction: Single-Modal and Multimodal Approaches. J. Intell. Syst. 2017, 28, 115–132.

- Liu, R.; Wang, Y.; Newman, G.I.; Thakor, N.V.; Ying, S. EEG Classification with a Sequential Decision-Making Method in Motor Imagery BCI. Int. J. Neural Syst. 2017, 27, 1750046.

- Antoniou, E.; Bozios, P.; Christou, V.; Tzimourta, K.D.; Tzallas, A.T. EEG-Based Eye Movement Recognition Using the Brain–Computer Interface and Random Forests. Sensors 2021, 21, 2339.

- Zaydoon, T.; Zaidan, B.B.; Zaidan, A.A.; Suzani, M.S. A Review of Disability EEG based Wheelchair Control System: Coherent Taxonomy, Open Challenges and Recommendations. Comput. Methods Programs Biomed. 2018, 164, 221–237.

- Mishra, S.; Norton, J.; Lee, Y.; Lee, D.S.; Agee, N.; Chen, Y.; Chun, Y. Soft, Conformal Bioelectronics for a Wireless Human-Wheelchair Interface. Biosens. Bioelectron. 2017, 91.

- Qiyun, H.; Yang, C.; Zhijun, Z.; Shenghong, H.; Rui, Z.; Jun, L.; Yuandong, Z.; Ming, S.; Yuanqing, L. An EOG-based wheelchair robotic arm system for assisting patients with severe spinal cord injuries. J. Neural Eng. 2019, 16, 026021.

- Huang, Q.; He, S.; Wang, Q.; Gu, Z.; Peng, N.; Li, K.; Zhang, Y.; Shao, M.; Li, Y. An EOG-Based Human-Machine Interface for Wheelchair Control. IEEE Trans. Biomed. Eng. 2017, 65, 2023–2032.

- Huang, Q.; Zhang, Z.; Yu, T.; He, S.; Li, Y. An EEG-/EOG-Based Hybrid Brain-Computer Interface: Application on Controlling an Integrated Wheelchair Robotic Arm System. Front. Neurosci. 2019, 13, 1243.

- Choudhari, A.M.; Porwal, P.; Jonnalagedda, V.; Mériaudeau, F. An Electrooculography based Human Machine Interface for wheelchair control. Biocybern. Biomed. Eng. 2019, 39, 673–685.

- Rui, Z.; He, S.; Yang, X.; Wang, X.; Kai, L.; Huang, Q.; Gu, Z.; Yu, Z.; Zhang, X.; Tang, D. An EOG-Based Human-Machine Interface to Control a Smart Home Environment for Patients With Severe Spinal Cord Injuries. IEEE Trans. Biomed. Eng. 2018, 66, 89–100.

- Kaur, A. Wheelchair control for disabled patients using EMG/EOG based human machine interface: A review. J. Med. Eng. Technol. 2020, 45, 1–22.

- Martínez-Cerveró, J.; Ardali, M.K.; Jaramillo-Gonzalez, A.; Wu, S.; Chaudhary, U. Open Software/Hardware Platform for Human-Computer Interface Based on Electrooculography (EOG) Signal Classification. Sensors 2020, 20, 2443.

- Paul, G.M.; Cao, F.; Torah, R.; Yang, K. A Smart Textile Based Facial EMG and EOG Computer Interface. IEEE Sens. J. 2013, 14, 393–400.

- Zeng, H.; Song, A.; Yan, R.; Qin, H. EOG Artifact Correction from EEG Recording Using Stationary Subspace Analysis and Empirical Mode Decomposition. Sensors 2013, 13, 14839–14859.

- Aziz, F.; Arof, H.; Mokhtar, N.; Mubin, M. HMM based automated wheelchair navigation using EOG traces in EEG. J. Neural Eng. 2014, 11, 056018.

- Fang, F.; Takahiro, S.; Stefano, F. Electrooculography-based continuous eye-writing recognition system for efficient assistive communication systems. PLoS ONE 2018, 13, e0192684.

- Rastjoo, A.; Arabalibeik, H. Evaluation of Hidden Markov Model for p300 detection in EEG signal. Stud. Health Technol. Inform. 2009, 142, 265–267.

- Zhu, Y.; Yan, Y.; Komogortsev, O. Hierarchical HMM for Eye Movement Classification; Springer: Cham, Switzerland, 2020.

- Abdollahpour, M.; Rezaii, T.Y.; Farzamnia, A.; Saad, I. Transfer Learning Convolutional Neural Network for Sleep Stage Classification Using Two-Stage Data Fusion Framework. IEEE Access 2020, 8, 180618–180632. [Google Scholar] [CrossRef]

- Jadhav, P.; Rajguru, G.; Datta, D.; Mukhopadhyay, S. Automatic sleep stage classification using time–frequency images of CWT and transfer learning using convolution neural network. Biocybern. Biomed. Eng. 2020, 40, 494–504. [Google Scholar] [CrossRef]

- Andreotti, F.; Phan, H.; Cooray, N.; Lo, C.; Hu, M.T.; De Vos, M. Multichannel Sleep Stage Classification and Transfer Learning using Convolutional Neural Networks. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Haque, R.U.; Pongos, A.L.; Manzaneres, C.M.; Lah, J.J.; Levey, A.I.; Clifford, G.D. Deep Convolutional Neural Networks and Transfer Learning for Measuring Cognitive Impairment Using Eye-Tracking in a Distributed Tablet-Based Environment. IEEE Trans. Biomed. Eng. 2021, 68, 11–18.

- Shah, J.H.; Sharif, M.; Yasmin, M.; Fernandes, S.L. Facial Expressions Classification and False Label Reduction Using LDA and Threefold SVM. Pattern Recognit. Lett. 2017.

- Zheng-Hua, M.A.; Qiao, Y.T.; Lei, L.I.; Rong, H.L. Classification of surface EMG signals based on LDA. Comput. Eng. Sci. 2016.

- He, S.; Li, Y. A Single-channel EOG-based Speller. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1978–1987.

- Tang, H.; Yue, Z.; Wei, H.; Wei, F. An anti-interference EEG-EOG hybrid detection approach for motor image identification and eye track recognition. In Proceedings of the 2015 34th Chinese Control Conference (CCC), Hangzhou, China, 28–30 July 2015.

- Basha, A.J.; Balaji, B.S.; Poornima, S.; Prathilothamai, M.; Venkatachalam, K. Support vector machine and simple recurrent network based automatic sleep stage classification of fuzzy kernel. J. Ambient Intell. Humaniz. Comput. 2020, 1–9.

- Zhang, Z.; Wei, S.; Zhu, G.; Liu, F.; Li, Y.; Dong, X.; Liu, C.; Liu, F. Efficient sleep classification based on entropy features and support vector machine classifier. Physiol. Meas. 2018, 39, 115005.

- Lajnef, T.; Chaibi, S.; Ruby, P.; Aguera, P.E.; Eichenlaub, J.B.; Samet, M.; Kachouri, A.; Jerbi, K. Learning machines and sleeping brains: Automatic sleep stage classification using decision-tree multi-class support vector machines. J. Neurosci. Methods 2015.

- Andrea, R.; Alessio, T.; Marco, F.; Maria, S. A Flexible and Highly Sensitive Pressure Sensor Based on a PDMS Foam Coated with Graphene Nanoplatelets. Sensors 2016, 16, 2148.

- Wang, L.; Liu, J.; Yang, B.; Yang, C. PDMS-based low cost flexible dry electrode for long-term EEG measurement. IEEE Sens. J. 2012, 12, 2898–2904.

- Yu, B.; Long, N.; Moussy, Y.; Moussy, F. A long-term flexible minimally-invasive implantable glucose biosensor based on an epoxy-enhanced polyurethane membrane. Biosens. Bioelectron. 2006, 21, 2275–2282.

- Bescond, Y.L.; Lebeau, J.; Delgove, L.; Sadek, H.; Raphael, B. Smooth eye movement interaction using EOG glasses. Rev. Stomatol. Chir. Maxillo Faciale 2016, 93, 185.

- Ning, H.; Karube, Y.; Arai, M.; Watanabe, T.; Cheng, Y.; Yuan, L.; Liu, Y.; Fukunaga, H. Investigation on sensitivity of a polymer/carbon nanotube composite strain sensor. Carbon 2010, 48, 680–687.

- Aungsakul, S.; Phinyomark, A.; Phukpattaranont, P.; Limsakul, C. Evaluating Feature Extraction Methods of Electrooculography (EOG) Signal for Human-Computer Interface. Procedia Eng. 2012, 32, 246–252.

- Wang, W.S.; Wu, Z.C.; Huang, H.Y.; Luo, C.H. Low-Power Instrumental Amplifier for Portable ECG. In Proceedings of the IEEE Circuits & Systems International Conference on Testing & Diagnosis, Chengdu, China, 28–29 April 2009.

- Naga, R.; Chandralingam, S.; Anjaneyulu, T.; Satyanarayana, K. Denoising EOG Signal using Stationary Wavelet Transform. Meas. Sci. Rev. 2012, 12, 46–51.

- Agarwal, S.; Singh, V.; Rani, A.; Mittal, A.P. Hardware efficient denoising system for real EOG signal processing. J. Intell. Fuzzy Syst. Appl. Eng. Technol. 2017, 32, 2857–2862.

- Singh, B.; Wagatsuma, H. Two-stage wavelet shrinkage and EEG-EOG signal contamination model to realize quantitative validations for the artifact removal from multiresource biosignals. Biomed. Signal Process. Control 2018, 47, 96–114.

- Kim, Y.-S.; Lee, J.; Ameen, A.; Shi, L.; Li, M.; Wang, S.; Ma, R.; Jin, S.H.; Kang, Z.; Huang, Y.; et al. Multifunctional Epidermal Electronics Printed Directly Onto the Skin. Adv. Mater. 2013, 25.

- Lu, N.; Chi, L.; Yang, S.; Rogers, J. Highly Sensitive Skin-Mountable Strain Gauges Based Entirely on Elastomers. Adv. Funct. Mater. 2012, 22, 4044–4050.

- Lee, Y.Y.; Lee, J.H.; Cho, J.Y.; Kim, N.R.; Nam, D.H.; Choi, I.S.; Nam, K.T.; Joo, Y.C. Stretching-Induced Growth of PEDOT-Rich Cores: A New Mechanism for Strain-Dependent Resistivity Change in PEDOT:PSS Films. Adv. Funct. Mater. 2013, 23, 4020–4027.

- Memarian, F.; Fereidoon, A.; Ganji, M. Graphene Young’s modulus: Molecular mechanics and DFT treatments. Superlattices Microstruct. 2015, 85, 348–356.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).