Chronic Study on Brainwave Authentication in a Real-Life Setting: An LSTM-Based Bagging Approach

Abstract

1. Introduction

2. Materials and Methods

2.1. Ethics

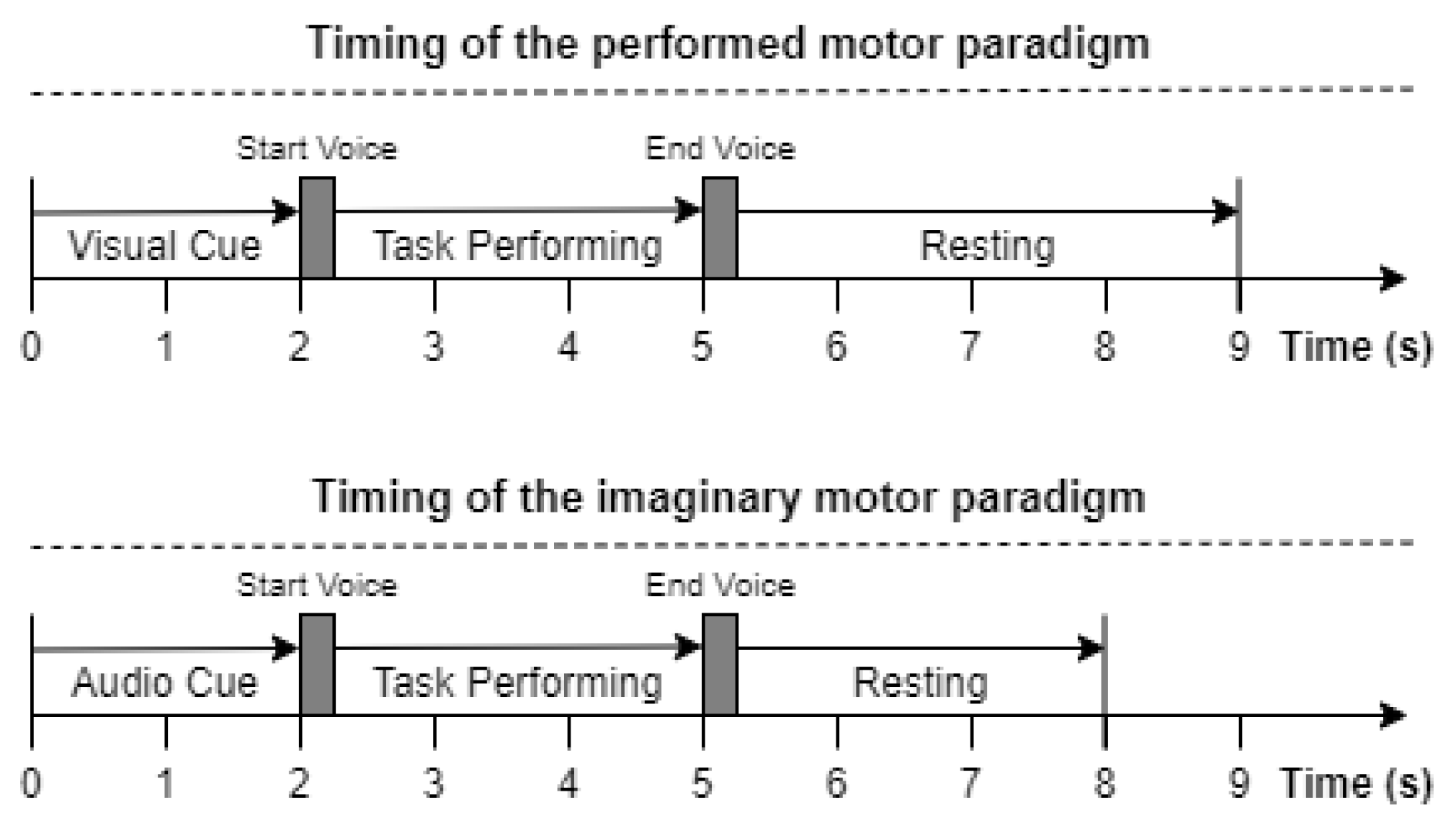

2.2. Experimental Setup

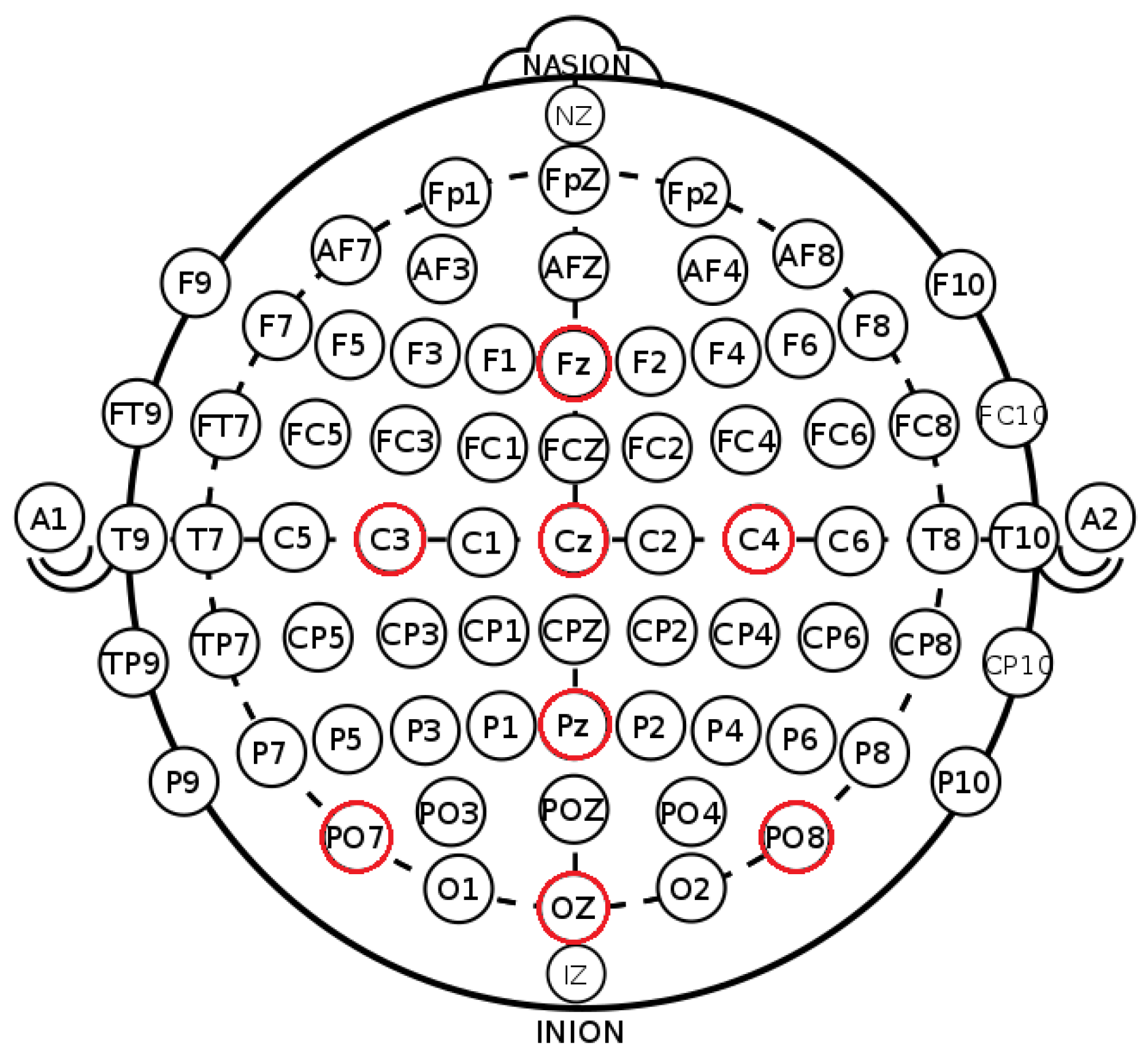

2.3. Preprocessing and Feature Extraction

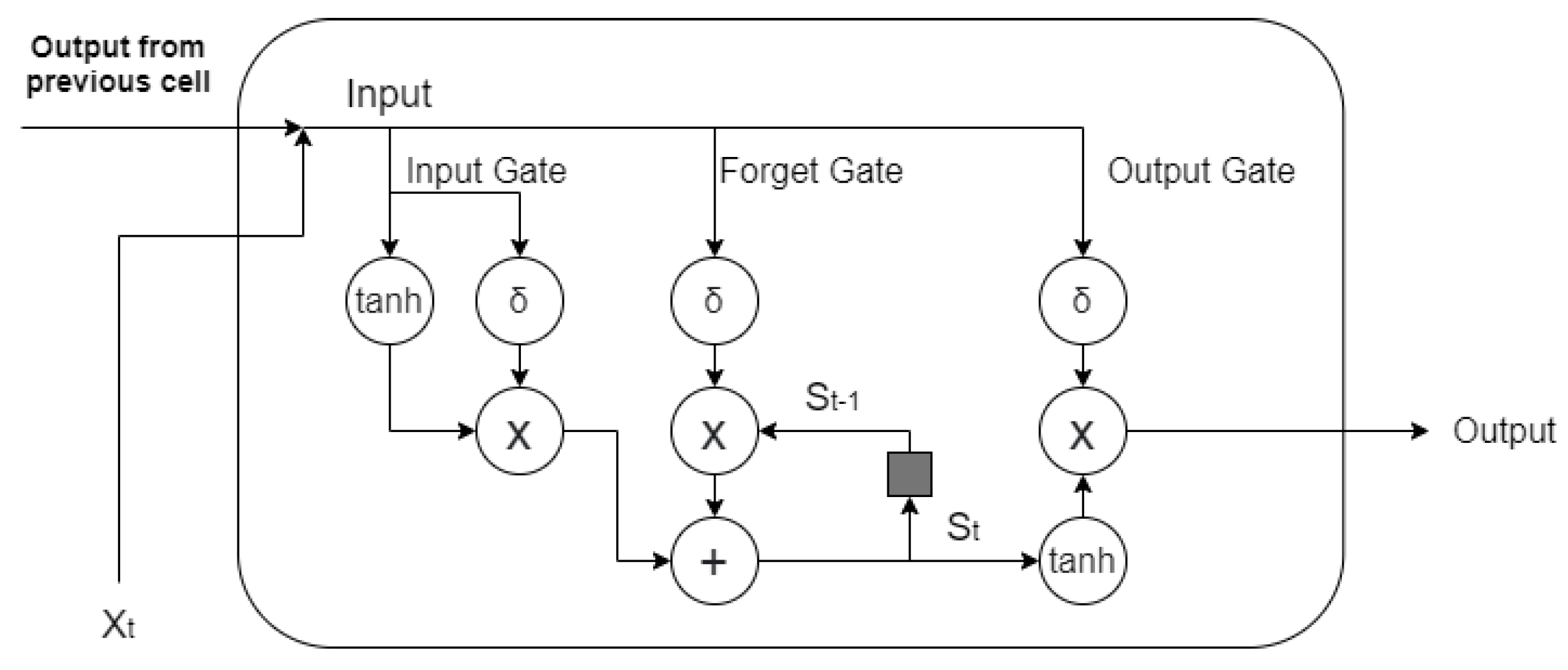

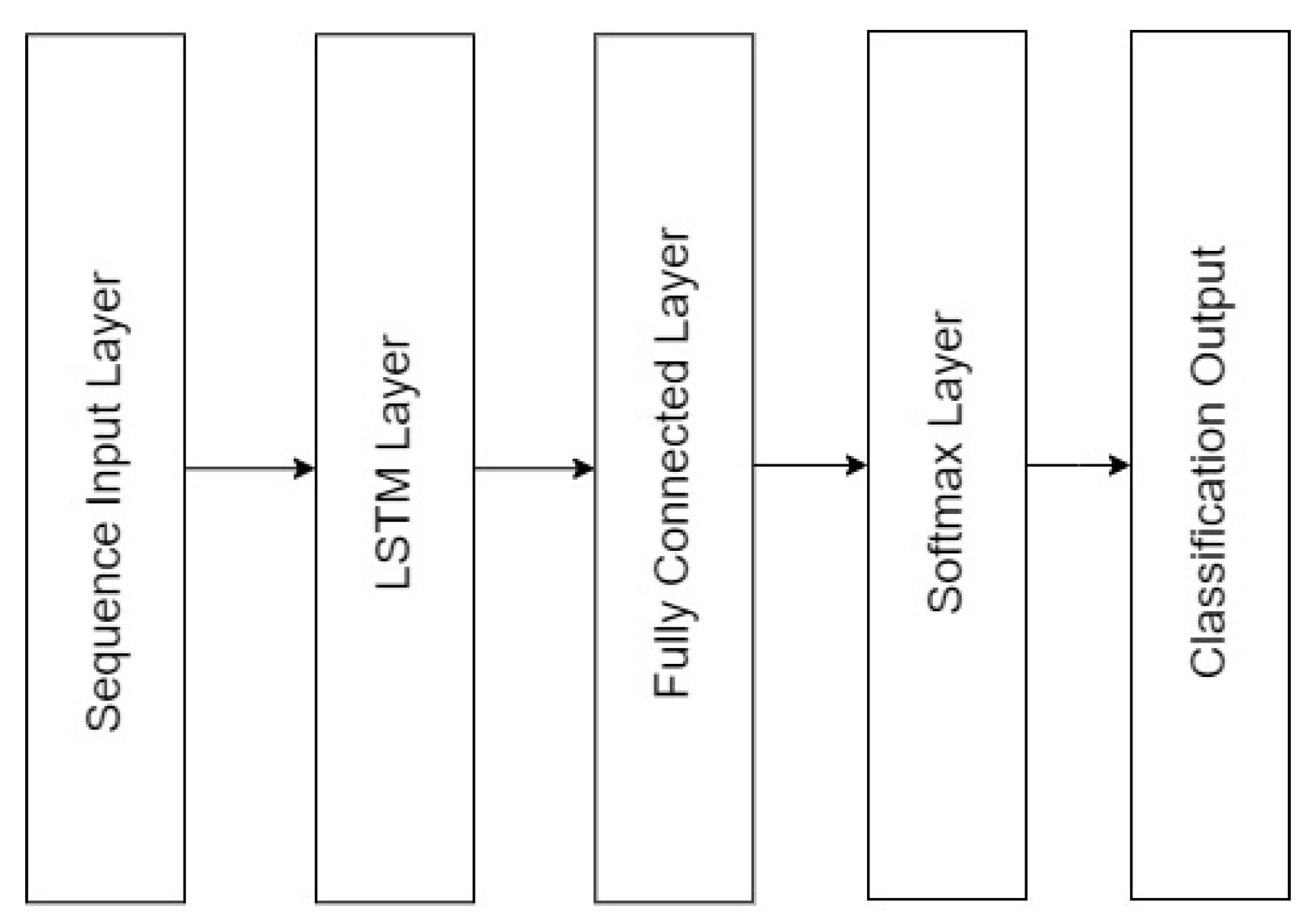
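The Discussion refers to eight electrodes and five sub-bands, and the references include work on window functions for EEG power density estimation [19]. Below is a minimal sketch of Hann-windowed band-power features. It assumes a 250 Hz sampling rate and hypothetical band edges, and this section specifies neither, so treat it as an illustration rather than the authors' preprocessing. The names `FS`, `BANDS`, `band_powers` and `trial_features` are invented for the example.

```python
import math

FS = 250.0  # assumed sampling rate (Hz); hypothetical for this sketch
# Hypothetical sub-band edges (Hz); the paper's five sub-bands may differ.
BANDS = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def hann(n):
    """Symmetric Hann window of length n (n > 1)."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * k / (n - 1)) for k in range(n)]


def periodogram(x, fs=FS):
    """One-sided Hann-windowed periodogram computed with a direct O(n^2) DFT."""
    n = len(x)
    w = hann(n)
    mean = sum(x) / n
    xw = [(v - mean) * wk for v, wk in zip(x, w)]  # remove DC offset, then taper
    scale = fs * sum(wk * wk for wk in w)          # PSD normalization for the window
    freqs, psd = [], []
    for k in range(n // 2 + 1):
        re = sum(v * math.cos(2.0 * math.pi * k * t / n) for t, v in enumerate(xw))
        im = -sum(v * math.sin(2.0 * math.pi * k * t / n) for t, v in enumerate(xw))
        p = (re * re + im * im) / scale
        if 0 < k < n / 2:
            p *= 2.0  # fold negative-frequency power into the one-sided spectrum
        freqs.append(k * fs / n)
        psd.append(p)
    return freqs, psd


def band_powers(x, fs=FS, bands=BANDS):
    """Integrate the PSD over each band [lo, hi) with a rectangle rule."""
    freqs, psd = periodogram(x, fs)
    df = fs / len(x)
    return {name: df * sum(p for f, p in zip(freqs, psd) if lo <= f < hi)
            for name, (lo, hi) in bands.items()}


def trial_features(trial, fs=FS):
    """Flatten band powers of every channel into one vector (n_channels * n_bands)."""
    return [bp for channel in trial for bp in band_powers(channel, fs).values()]
```

With eight channels and five bands, `trial_features` returns a 40-element vector per trial. In practice, an FFT-based estimator (for example, Welch's method) would replace the direct DFT, which is used here only to keep the example dependency-free.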

2.4. LSTM-Based Recurrent Neural Network

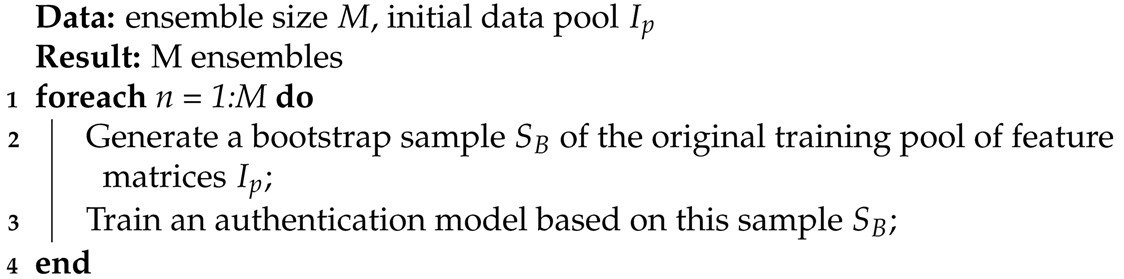

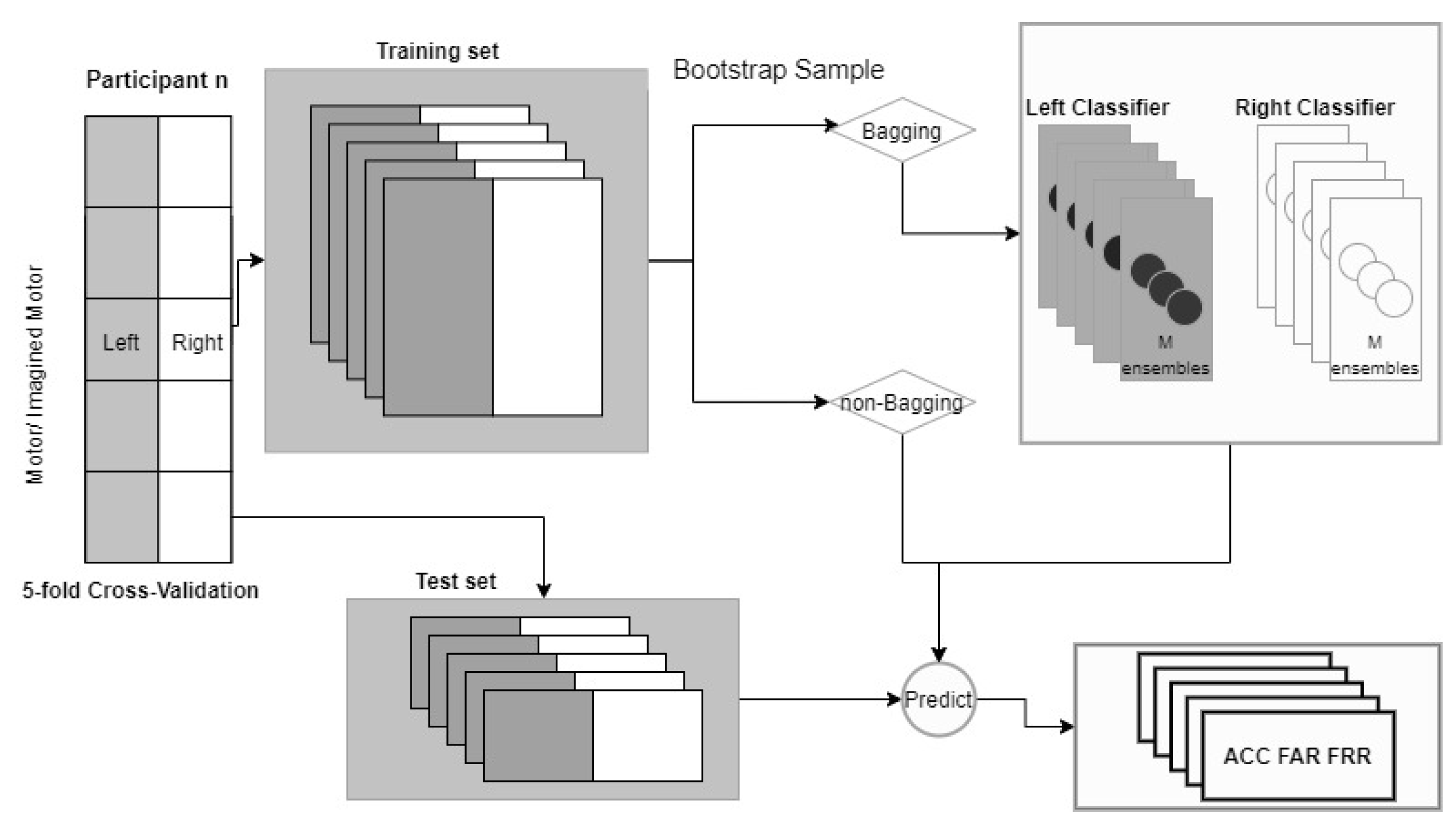
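The LSTM [20] uses a gated cell state to mitigate the vanishing-gradient problem of plain recurrent networks [21]. Below is a minimal forward-pass sketch of a standard LSTM cell (no training, no peephole connections). The layer sizes, the random initialization and the class name `LSTMCell` are illustrative assumptions, not the configuration used in the paper.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """Compute W @ v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


class LSTMCell:
    """Standard LSTM cell: forget (f), input (i), output (o) gates and candidate (g)."""

    GATES = ("f", "i", "o", "g")

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        n_cat = n_hidden + n_in  # gates act on the concatenation [h_{t-1}, x_t]
        self.n_hidden = n_hidden
        self.W = {k: [[rng.uniform(-0.1, 0.1) for _ in range(n_cat)]
                      for _ in range(n_hidden)] for k in self.GATES}
        self.b = {k: [0.0] * n_hidden for k in self.GATES}

    def step(self, x, h, c):
        z = list(h) + list(x)
        pre = {k: affine(self.W[k], self.b[k], z) for k in self.GATES}
        f = [sigmoid(v) for v in pre["f"]]    # how much of c_{t-1} to keep
        i = [sigmoid(v) for v in pre["i"]]    # how much of the candidate to write
        o = [sigmoid(v) for v in pre["o"]]    # how much of the cell to expose
        g = [math.tanh(v) for v in pre["g"]]  # candidate cell values
        c_new = [ft * ct + it * gt for ft, ct, it, gt in zip(f, c, i, g)]
        h_new = [ot * math.tanh(ct) for ot, ct in zip(o, c_new)]
        return h_new, c_new

    def run(self, sequence):
        """Feed a sequence of feature vectors; return the final hidden state h_T."""
        h = [0.0] * self.n_hidden
        c = [0.0] * self.n_hidden
        for x in sequence:
            h, c = self.step(x, h, c)
        return h
```

For authentication, the final hidden state would typically feed a small dense layer that outputs an accept/reject score. That readout and the training loop are omitted here.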

2.5. Bagging

Algorithm 1: Bagging.
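Bagging, as introduced by Breiman [24], trains M base classifiers on bootstrap resamples of the training set (drawn with replacement) and combines their predictions. The following is a minimal sketch under stated assumptions. The nearest-centroid learner is a hypothetical stand-in for the paper's LSTM, chosen only to keep the example self-contained, and the majority-vote combination rule is an assumption as well.

```python
import random
from collections import Counter


class NearestCentroid:
    """Hypothetical stand-in base learner (the paper's base classifier is an LSTM)."""

    def fit(self, X, y):
        sums, counts = {}, Counter(y)
        for x, label in zip(X, y):
            acc = sums.setdefault(label, [0.0] * len(x))
            for j, v in enumerate(x):
                acc[j] += v
        self.centroids = {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}
        return self

    def predict(self, x):
        def dist2(c):
            return sum((a - b) ** 2 for a, b in zip(x, c))
        return min(self.centroids, key=lambda lab: dist2(self.centroids[lab]))


def bagging_fit(X, y, M, make_learner, seed=0):
    """Train M base learners, each on a bootstrap sample of size n drawn with replacement."""
    rng = random.Random(seed)
    n = len(X)
    models = []
    for _ in range(M):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(make_learner().fit([X[i] for i in idx], [y[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Majority vote; Counter.most_common orders tied labels by first occurrence."""
    votes = Counter(m.predict(x) for m in models)
    return votes.most_common(1)[0][0]
```

Here `M` plays the role of the ensemble size. An ensemble mixing several classifier types, as raised in the Discussion, could be approximated by cycling through different `make_learner` factories instead of a single one.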

2.6. Authentication

2.7. Statistics

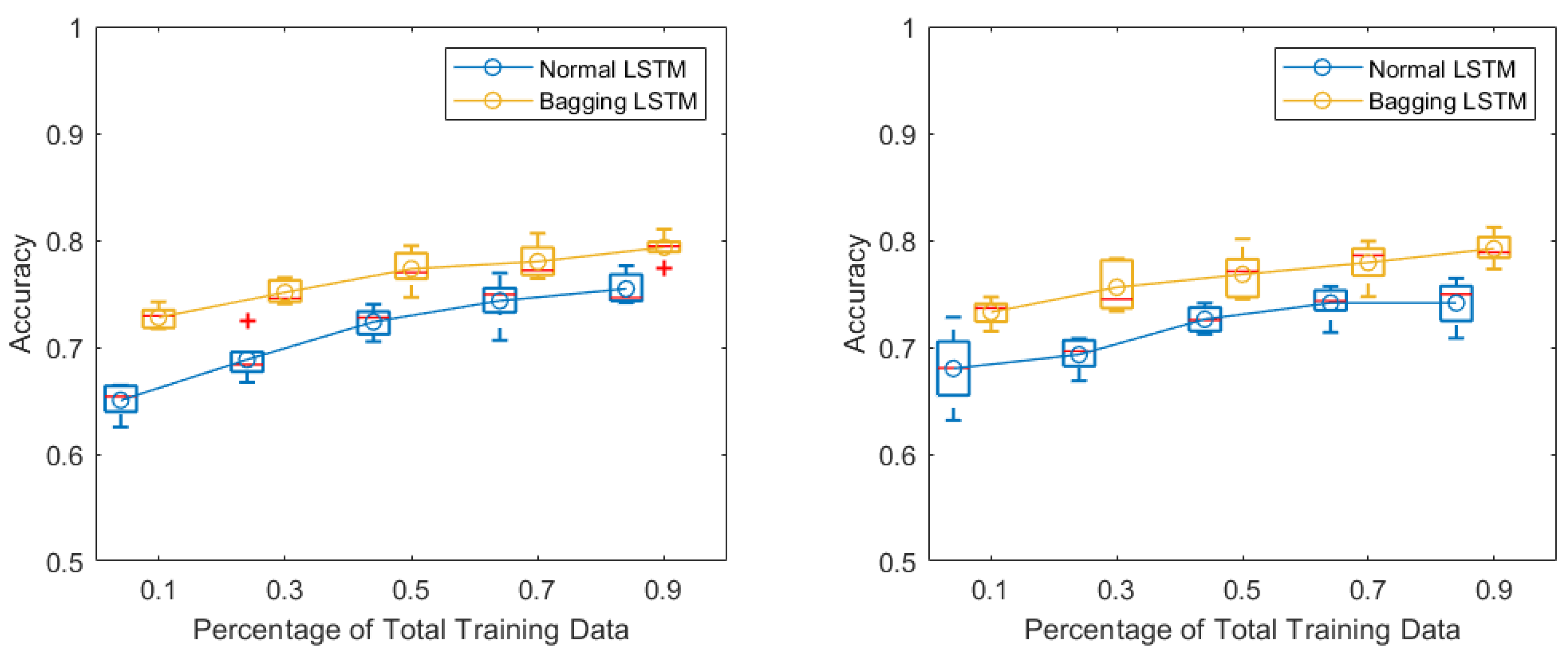

3. Results

4. Discussion

- Specialized decoders/features: Unlike [13], where the LSTM was combined with a CNN, or [23], where advanced data-handling methods such as cross-correlation and transient removal were applied to obtain higher-quality temporal features, we used only basic techniques. It would therefore be worthwhile to compare different decoders and features and to investigate which are best suited to real-life chronic EEG authentication.

- Electrode/sub-band selection: We used eight electrodes and five sub-bands, yet the results improved when some electrodes or sub-bands were eliminated, which suggests that a systematic selection procedure could further increase performance. Moreover, resting-state signals could be used to guide this optimization, as shown in [13], where eye blinking was used, albeit for user identification rather than authentication.

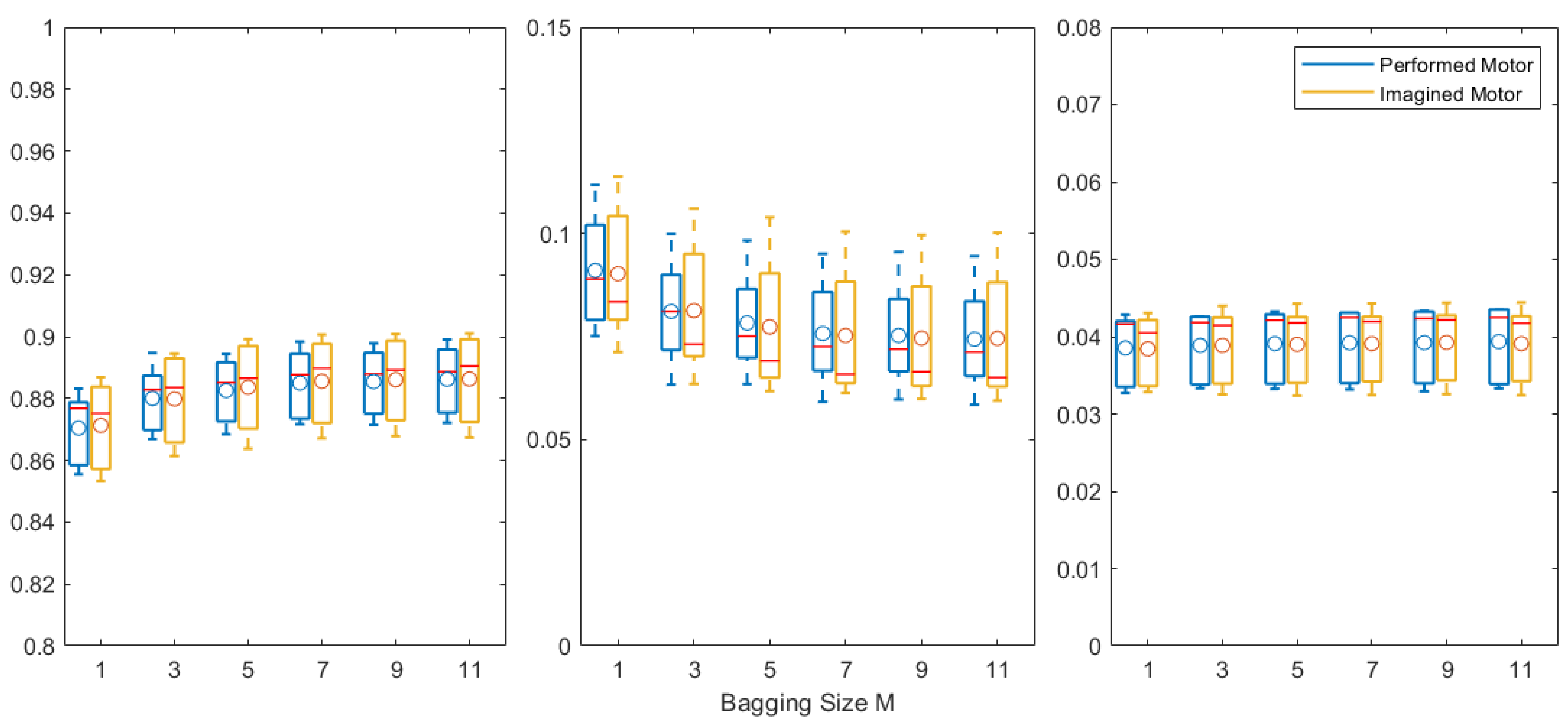

- Ensemble techniques: Although it yielded plausible results, the proposed bagging approach uses only one type of base classifier and could be improved. According to Figure 7, further increasing the ensemble size M brought no significant improvement. This may be due to either insufficient training data or the limited decoding power of the LSTM-based model. Collecting more data or introducing other ensemble techniques could therefore be investigated, for example, the mix-boost ensemble method proposed in [8], where better performance could be achieved by using multiple types of base classifiers rather than by varying the hyperparameters of a single type of base classifier.
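The electrode/sub-band selection point lends itself to sequential backward elimination, the backward counterpart of the sequential forward selection applied to frequency bands in [16]. The sketch below is a minimal, hypothetical implementation. `evaluate` is an assumed callback that would retrain and score the authenticator (for example, validation accuracy) on a given subset of electrodes or sub-bands, and this section does not describe such a procedure.

```python
from typing import Callable, FrozenSet, Iterable, Tuple


def backward_elimination(groups: Iterable[str],
                         evaluate: Callable[[FrozenSet[str]], float],
                         min_size: int = 1) -> Tuple[FrozenSet[str], float]:
    """Greedily drop the group whose removal most improves the score.

    Stops when no single removal improves on the current best score
    or when only `min_size` groups remain.
    """
    current = frozenset(groups)
    best_score = evaluate(current)
    while len(current) > min_size:
        # Score every subset that is one group smaller than the current one.
        candidates = [(evaluate(current - {g}), g) for g in sorted(current)]
        score, drop = max(candidates)
        if score <= best_score:
            break  # no single removal improves the validation score
        current, best_score = current - {drop}, score
    return current, best_score
```

Each pass costs one `evaluate` call per remaining group, so eight electrodes need at most 36 calls. Because scores from a single validation split can be noisy, a cross-validated `evaluate` would make the elimination decisions more reliable.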

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zviran, M.; Erlich, Z. Identification and Authentication: Technology and Implementation Issues. Commun. Assoc. Inf. Syst. 2006, 17, 90–105.

2. Menkus, B. Understanding the use of passwords. Comput. Secur. 1988, 7, 132–136.

3. Ashby, C.; Bhatia, A.; Tenore, F.; Vogelstein, J. Low-cost electroencephalogram (EEG) based authentication. In Proceedings of the 2011 5th International IEEE/EMBS Conference on Neural Engineering, Cancun, Mexico, 27 April–1 May 2011; pp. 442–445.

4. Hu, J.F. New biometric approach based on motor imagery EEG signals. In Proceedings of the 2009 International Conference on Future BioMedical Information Engineering (FBIE), Sanya, China, 13–14 December 2009; pp. 94–97.

5. Del Pozo-Banos, M.; Alonso, J.B.; Ticay-Rivas, J.R.; Travieso, C.M. Electroencephalogram subject identification: A review. Expert Syst. Appl. 2014, 41, 6537–6554.

6. Paranjape, R.; Mahovsky, J.; Benedicenti, L.; Koles, Z. The electroencephalogram as a biometric. In Proceedings of the Canadian Conference on Electrical and Computer Engineering 2001, Conference Proceedings (Cat. No.01TH8555), Toronto, ON, Canada, 13–16 May 2001; Volume 2, pp. 1363–1366.

7. Barayeu, U.; Horlava, N.; Libert, A.; Van Hulle, M. Robust Single-Trial EEG-Based Authentication Achieved with a 2-Stage Classifier. Biosensors 2020, 10, 124.

8. Chatterjee, R.; Datta, A.; Sanyal, D. Ensemble Learning Approach to Motor Imagery EEG Signal Classification; Academic Press: London, UK, 2018; pp. 183–208.

9. Das, R.; Maiorana, E.; Campisi, P. Motor Imagery for EEG Biometrics Using Convolutional Neural Network; IEEE: Calgary, AB, Canada, 2018; pp. 2062–2066.

10. Yeom, S.K.; Suk, H.I.; Lee, S.W. Person authentication from neural activity of face-specific visual self-representation. Pattern Recognit. 2013, 46, 1159–1169.

11. Bidgoly, A.J.; Bidgoly, H.J.; Arezoumand, Z. A survey on methods and challenges in EEG based authentication. Comput. Secur. 2020, 93, 101788.

12. Zeng, Y.; Wu, Q.; Yang, K.; Tong, L.; Yan, B.; Shu, J.; Yao, D. EEG-Based Identity Authentication Framework Using Face Rapid Serial Visual Presentation with Optimized Channels. Sensors 2019, 19, 6.

13. Sun, Y.; Lo, F.P.; Lo, B.P.L. EEG-based user identification system using 1D-convolutional long short-term memory neural networks. Expert Syst. Appl. 2019, 125, 259–267.

14. Klonovs, J.; Petersen, C.K.; Olesen, H.; Hammershøj, A. ID Proof on the Go: Development of a Mobile EEG-Based Biometric Authentication System. IEEE Veh. Technol. Mag. 2013, 8, 81–89.

15. Guger, C. Unicorn Brain Interface 2020. Available online: https://www.researchgate.net/publication/340940556_Unicorn_Brain_Interface (accessed on 20 September 2020).

16. Kirar, J.; Agrawal, R. Relevant Frequency Band Selection using Sequential Forward Feature Selection for Motor Imagery Brain Computer Interfaces. In Proceedings of the 2018 IEEE Symposium Series on Computational Intelligence (SSCI), Bangalore, India, 18–21 November 2018; pp. 52–59.

17. Pfurtscheller, G.; Silva, F.L.D. Event-related EEG/MEG synchronization and desynchronization: Basic principles. Clin. Neurophysiol. 1999, 110, 1842–1857.

18. Essenwanger, O. Elements of Statistical Analysis; Elsevier: Amsterdam, The Netherlands, 1986.

19. Huang, G.; Meng, J.; Zhang, D.; Zhu, X. Window Function for EEG Power Density Estimation and Its Application in SSVEP Based BCIs; ICIRA; Springer: Berlin/Heidelberg, Germany, 2011.

20. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

21. Hochreiter, S. The Vanishing Gradient Problem During Learning Recurrent Neural Nets and Problem Solutions. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 1998, 6, 107–116.

22. Olah, C. Understanding LSTM Networks. Available online: https://colah.github.io/posts/2015-08-Understanding-LSTMs/ (accessed on 20 September 2020).

23. Puengdang, S.; Tuarob, S.; Sattabongkot, T.; Sakboonyarat, B. EEG-Based Person Authentication Method Using Deep Learning with Visual Stimulation. In Proceedings of the 2019 11th International Conference on Knowledge and Smart Technology (KST), Phuket, Thailand, 23–26 January 2019; pp. 6–10.

24. Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

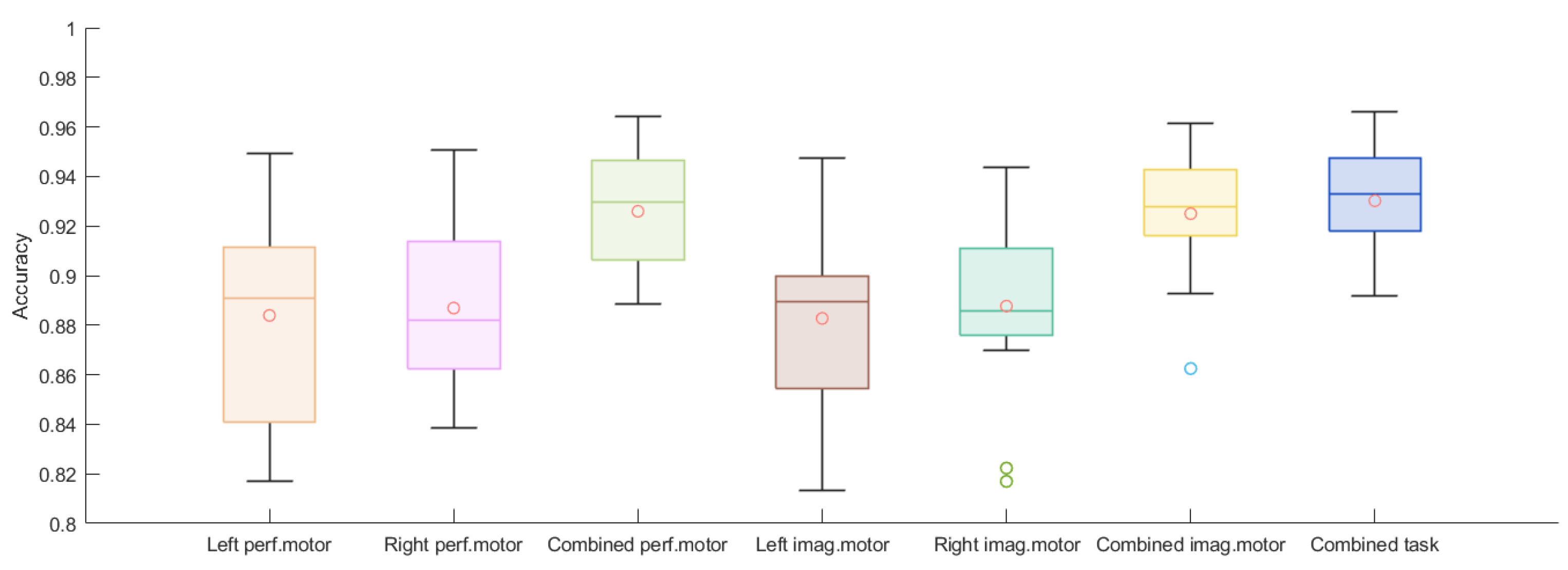

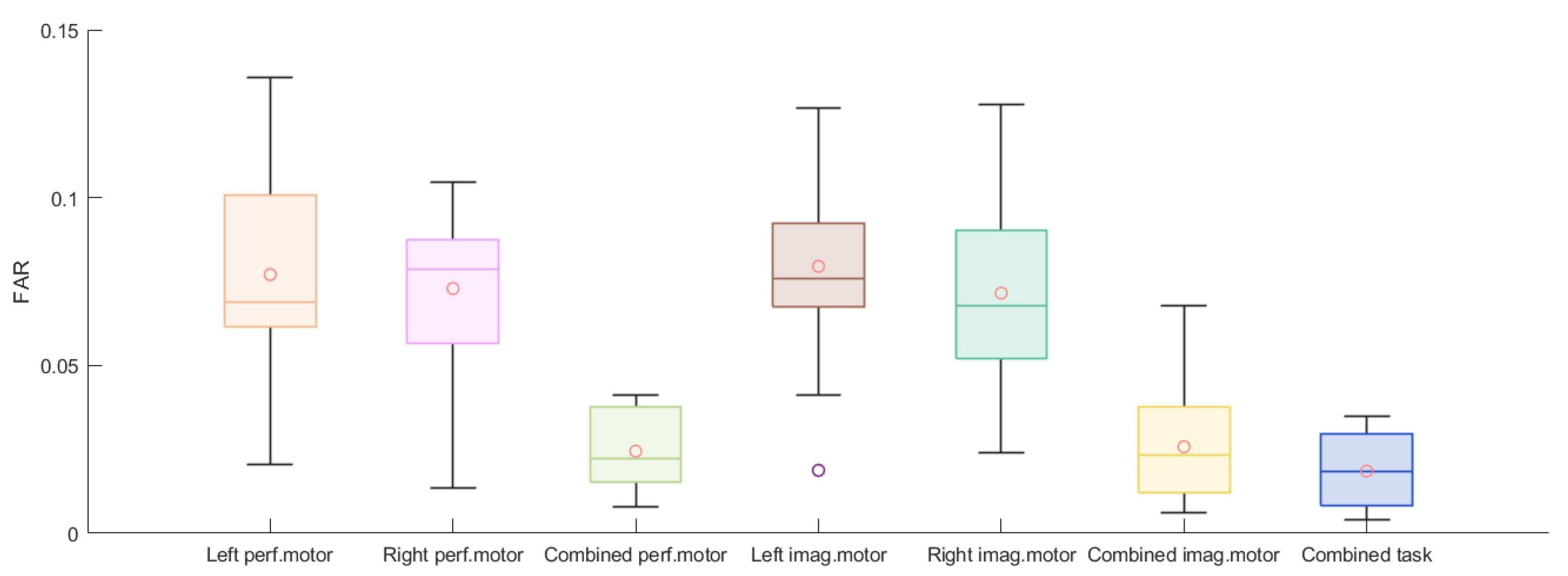

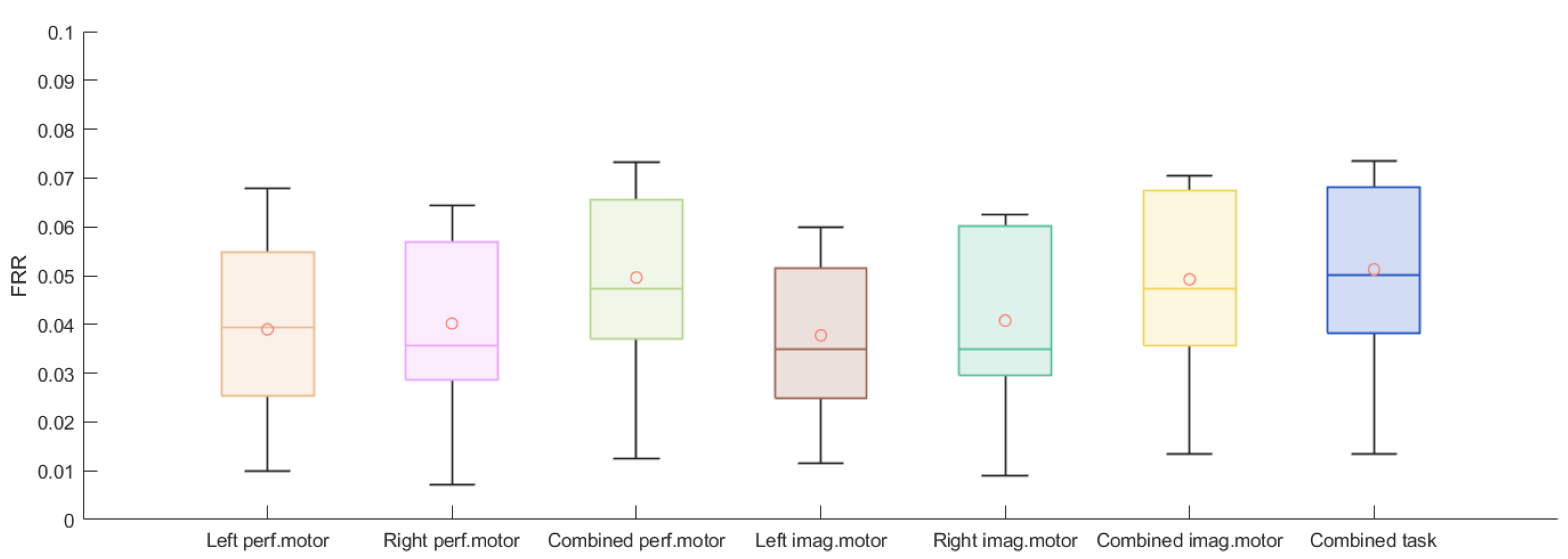

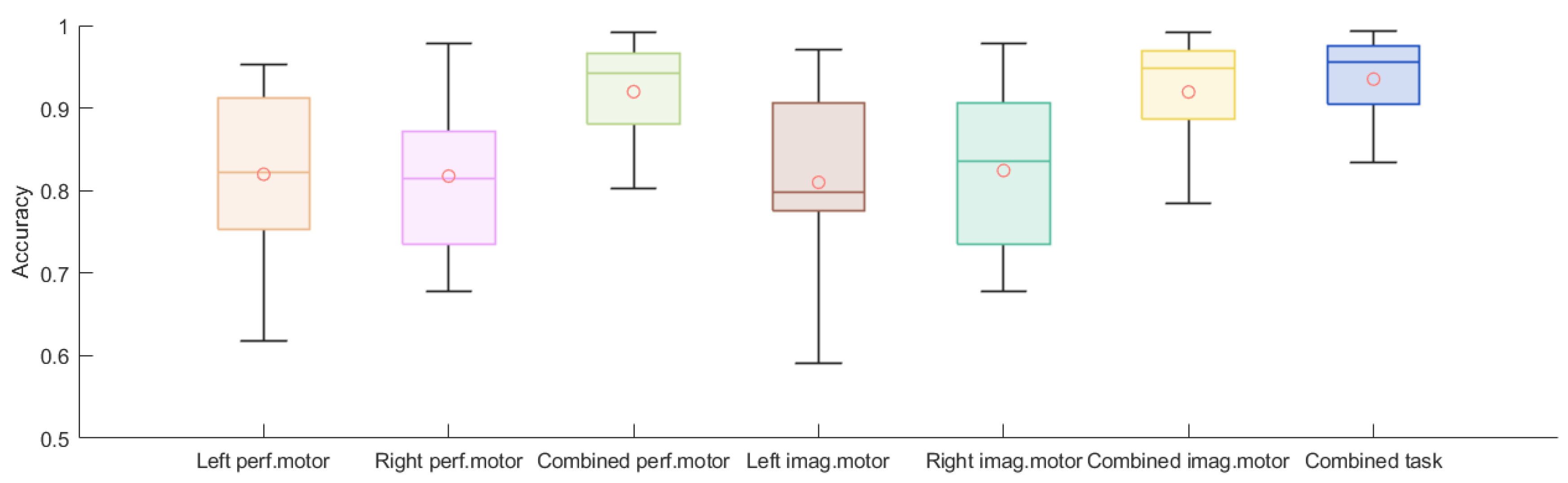

| Scheme | Left Performed Motor | Right Performed Motor | Combined Performed Motor | Left Imagined Motor | Right Imagined Motor | Combined Imagined Motor | Combined Task |
|---|---|---|---|---|---|---|---|
| Averaged accuracy | 0.884 | 0.887 | 0.926 | 0.883 | 0.888 | 0.925 | 0.930 |
| Averaged FAR (false acceptance rate) | 0.077 | 0.073 | 0.025 | 0.080 | 0.072 | 0.026 | 0.019 |
| Averaged FRR (false rejection rate) | 0.039 | 0.040 | 0.050 | 0.038 | 0.041 | 0.049 | 0.051 |
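The table does not define its metrics. In every column, however, accuracy + FAR + FRR equals 1 to within rounding (e.g., 0.884 + 0.077 + 0.039 = 1.000). This pattern is consistent with all three quantities being normalized by the total number of test trials, rather than FAR by impostor trials and FRR by genuine trials. The sketch below assumes that inferred convention (it is not a definition stated in this section) and computes the three values from per-trial decisions. The function name `auth_metrics` is hypothetical.

```python
from typing import Sequence, Tuple


def auth_metrics(is_genuine: Sequence[bool],
                 accepted: Sequence[bool]) -> Tuple[float, float, float]:
    """Return (accuracy, FAR, FRR), each divided by the total number of trials.

    Assumed convention, inferred from the table where Acc + FAR + FRR ~ 1;
    the conventional FAR divides by impostor trials and FRR by genuine trials.
    """
    if len(is_genuine) != len(accepted) or not is_genuine:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(is_genuine)
    correct = sum(1 for g, a in zip(is_genuine, accepted) if g == a)
    false_accepts = sum(1 for g, a in zip(is_genuine, accepted) if a and not g)
    false_rejects = sum(1 for g, a in zip(is_genuine, accepted) if g and not a)
    # Every trial falls into exactly one of the three counts, so the rates sum to 1.
    return correct / n, false_accepts / n, false_rejects / n
```

Under the conventional per-class definitions, the three numbers would generally not sum to 1, which is why the assumption above matters when comparing these values with other studies.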

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, L.; Libert, A.; Van Hulle, M.M. Chronic Study on Brainwave Authentication in a Real-Life Setting: An LSTM-Based Bagging Approach. Biosensors 2021, 11, 404. https://doi.org/10.3390/bios11100404
