Machine Learning-Assisted Identification of Single-Layer Graphene via Color Variation Analysis

Abstract

1. Introduction

2. Modeling and Methods

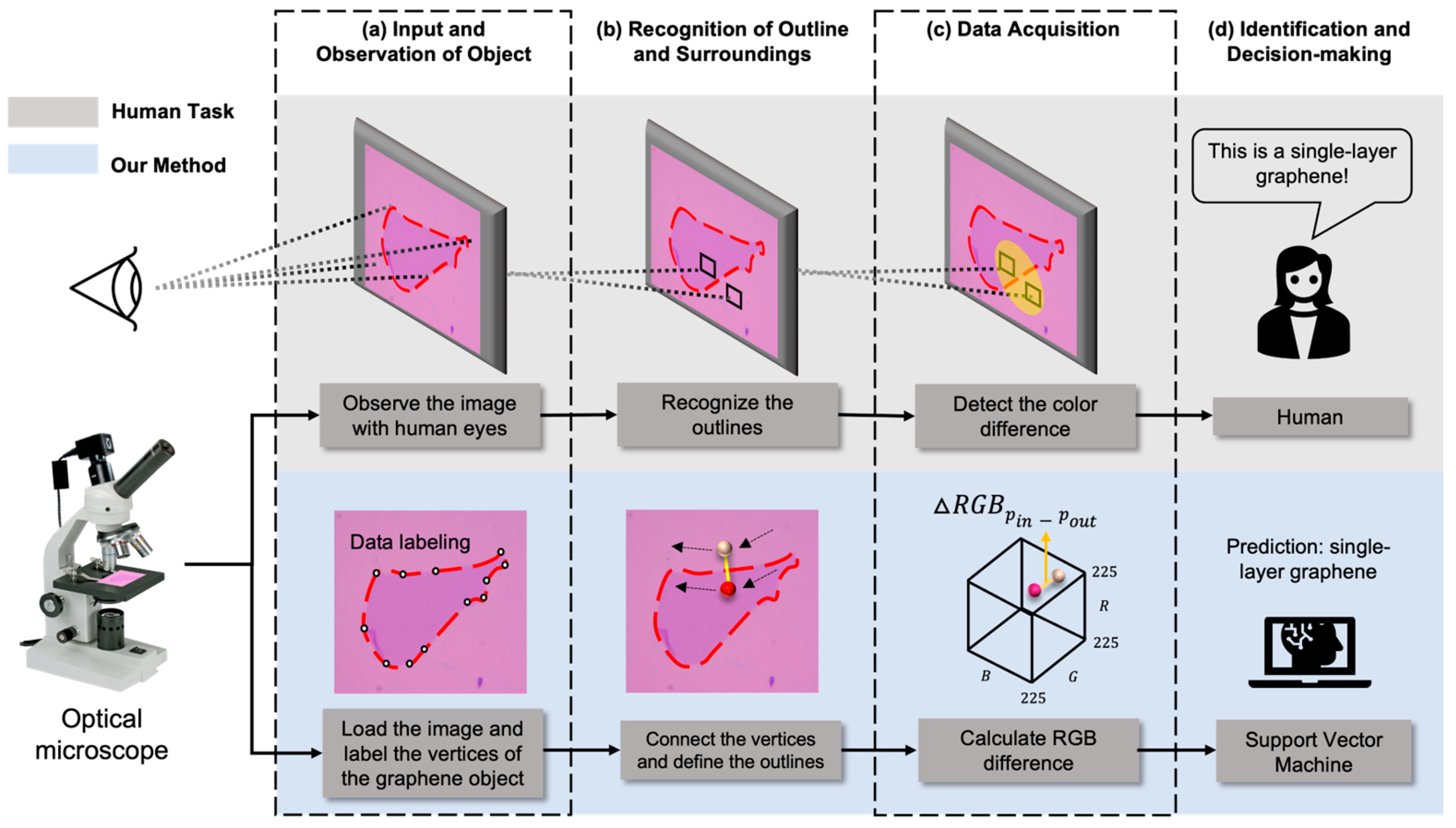

2.1. Our Approach to Identifying Single-Layer Graphene

2.2. Methods

2.2.1. Generating Graphene Image Datasets and Data Labeling

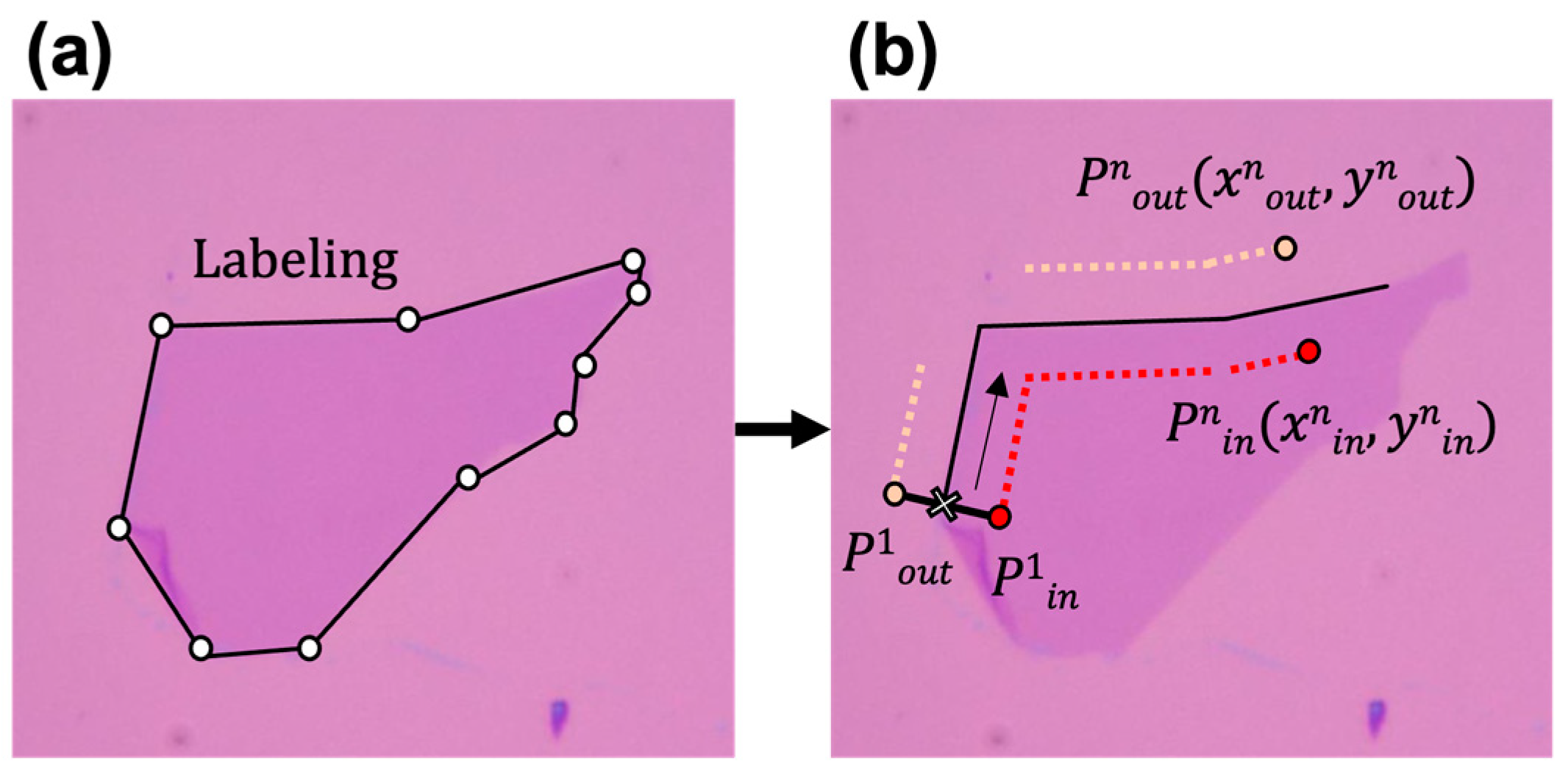

2.2.2. Recognition of Graphene Outline and Its Surroundings

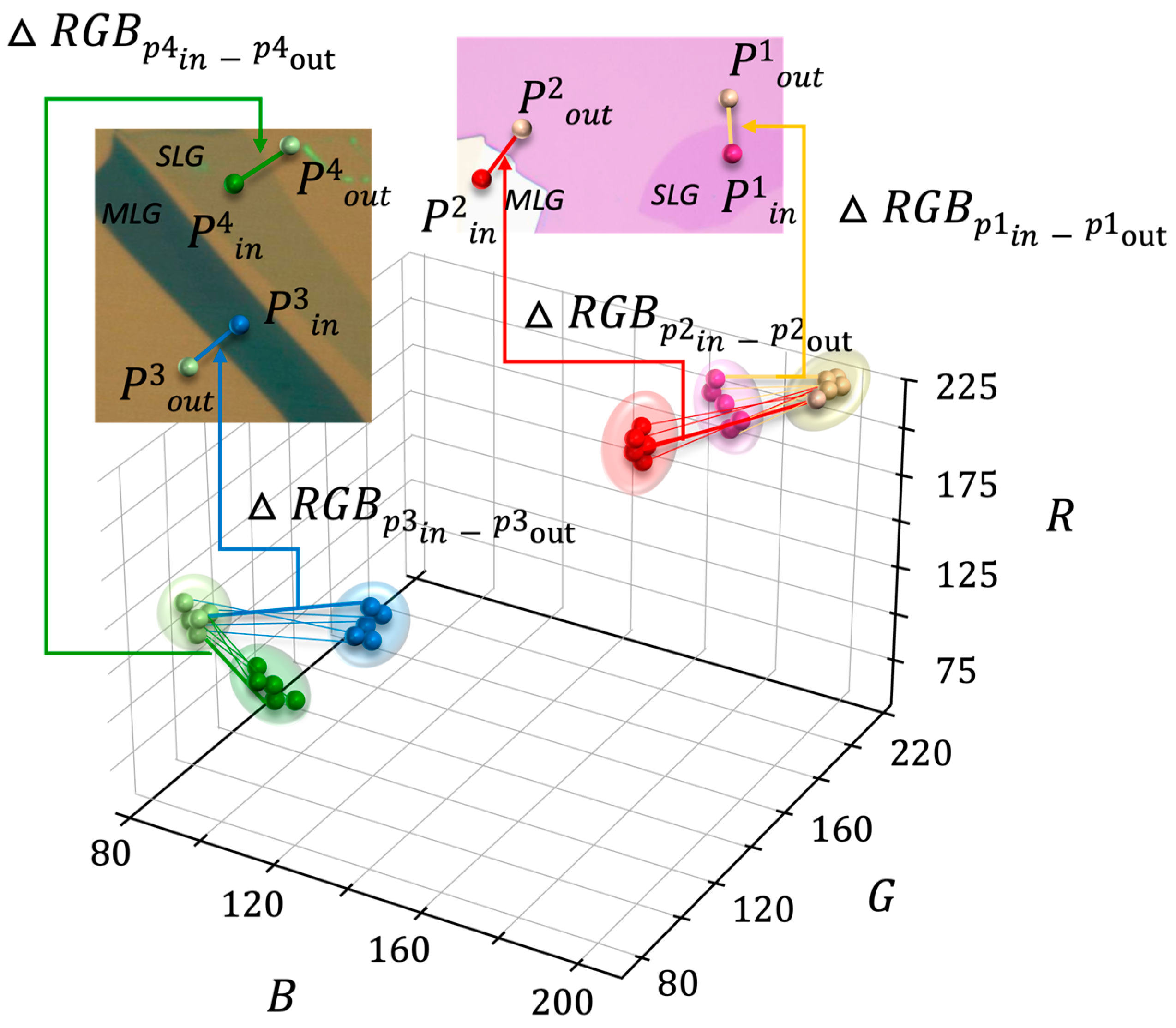

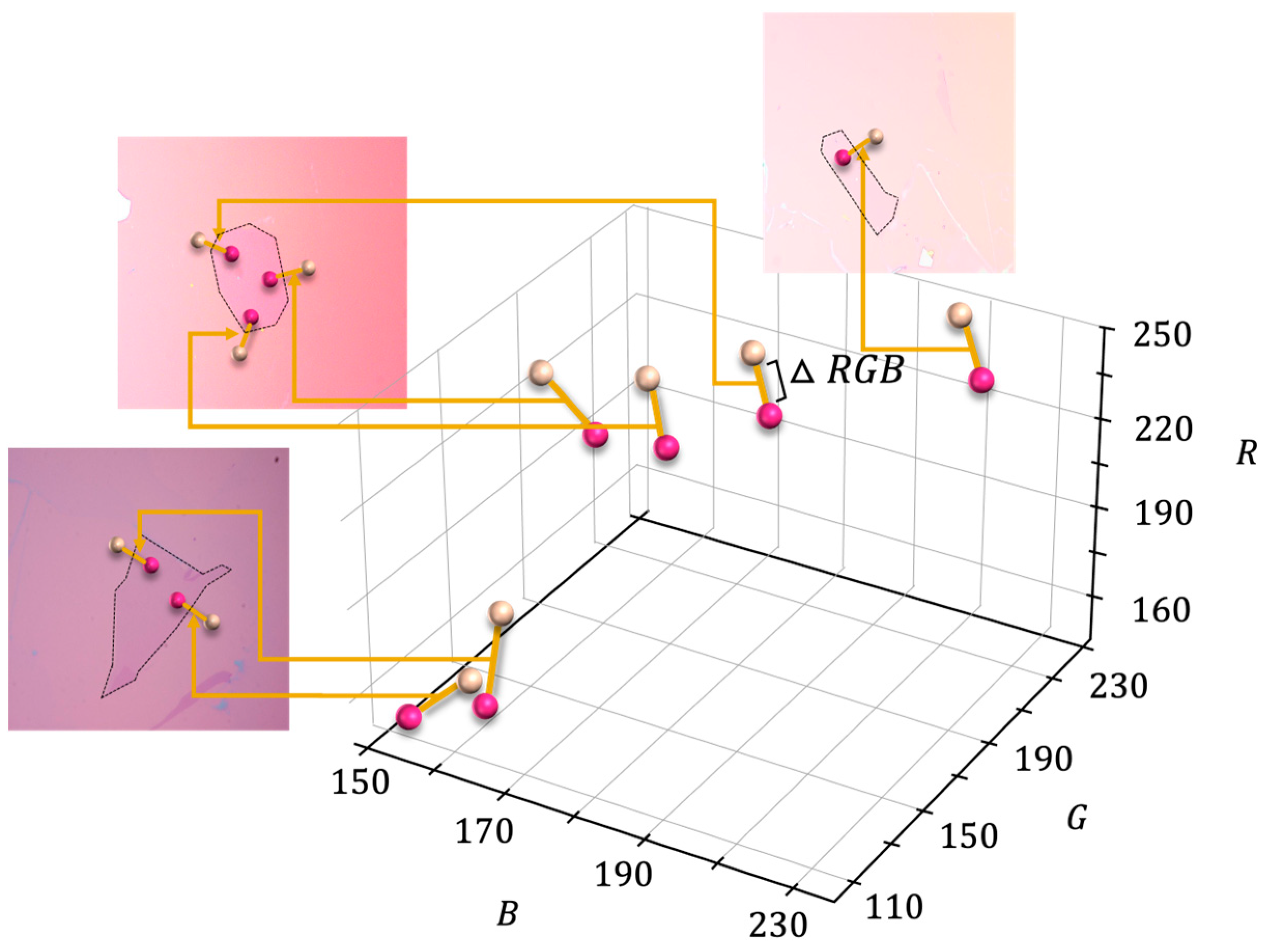

2.2.3. Data Acquisition

2.2.4. Application of Machine Learning Model

3. Results and Discussion

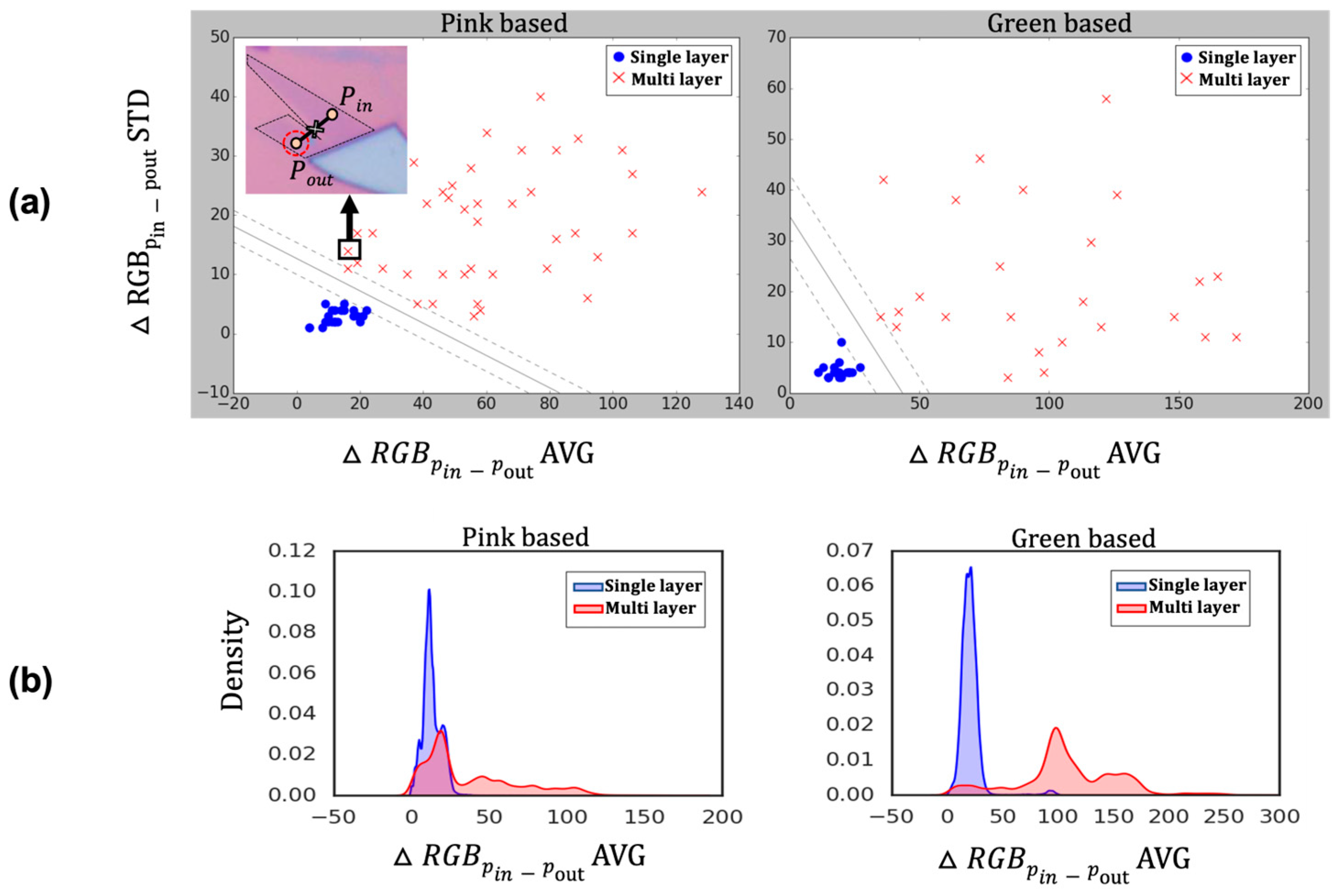

3.1. Training Data Distribution and Decision Boundary

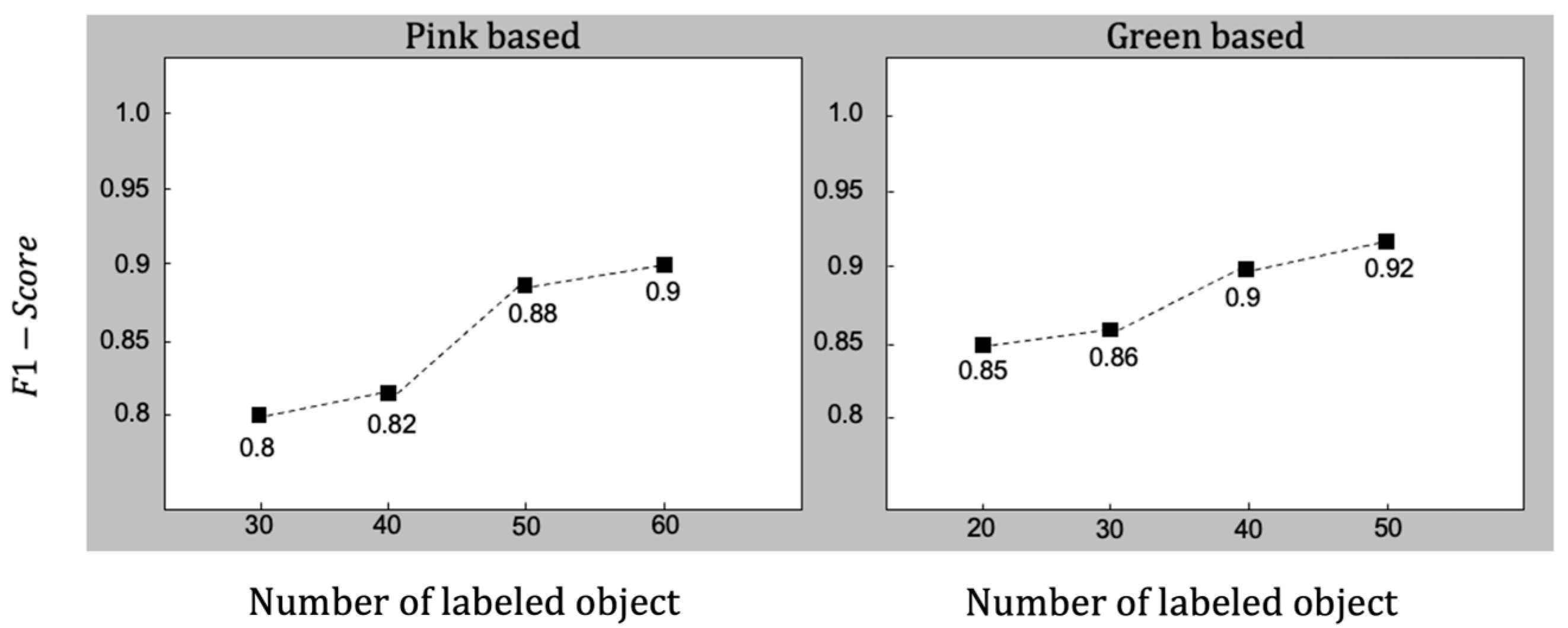

3.2. Model Performance

4. Conclusions

5. Patents

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, E.; Seo, M.; Rhee, H.; Je, Y.; Jeong, H.; Lee, S.W. Machine Learning-Assisted Identification of Single-Layer Graphene via Color Variation Analysis. Nanomaterials 2024, 14, 183. https://doi.org/10.3390/nano14020183

Yang E, Seo M, Rhee H, Je Y, Jeong H, Lee SW. Machine Learning-Assisted Identification of Single-Layer Graphene via Color Variation Analysis. Nanomaterials. 2024; 14(2):183. https://doi.org/10.3390/nano14020183

Chicago/Turabian Style: Yang, Eunseo, Miri Seo, Hanee Rhee, Yugyeong Je, Hyunjeong Jeong, and Sang Wook Lee. 2024. "Machine Learning-Assisted Identification of Single-Layer Graphene via Color Variation Analysis" Nanomaterials 14, no. 2: 183. https://doi.org/10.3390/nano14020183

APA Style: Yang, E., Seo, M., Rhee, H., Je, Y., Jeong, H., & Lee, S. W. (2024). Machine Learning-Assisted Identification of Single-Layer Graphene via Color Variation Analysis. Nanomaterials, 14(2), 183. https://doi.org/10.3390/nano14020183