Lightweight Machine-Learning Model for Efficient Design of Graphene-Based Microwave Metasurfaces for Versatile Absorption Performance

Abstract

1. Introduction

2. Method

2.1. Graphene-Based Metasurface Absorber Model

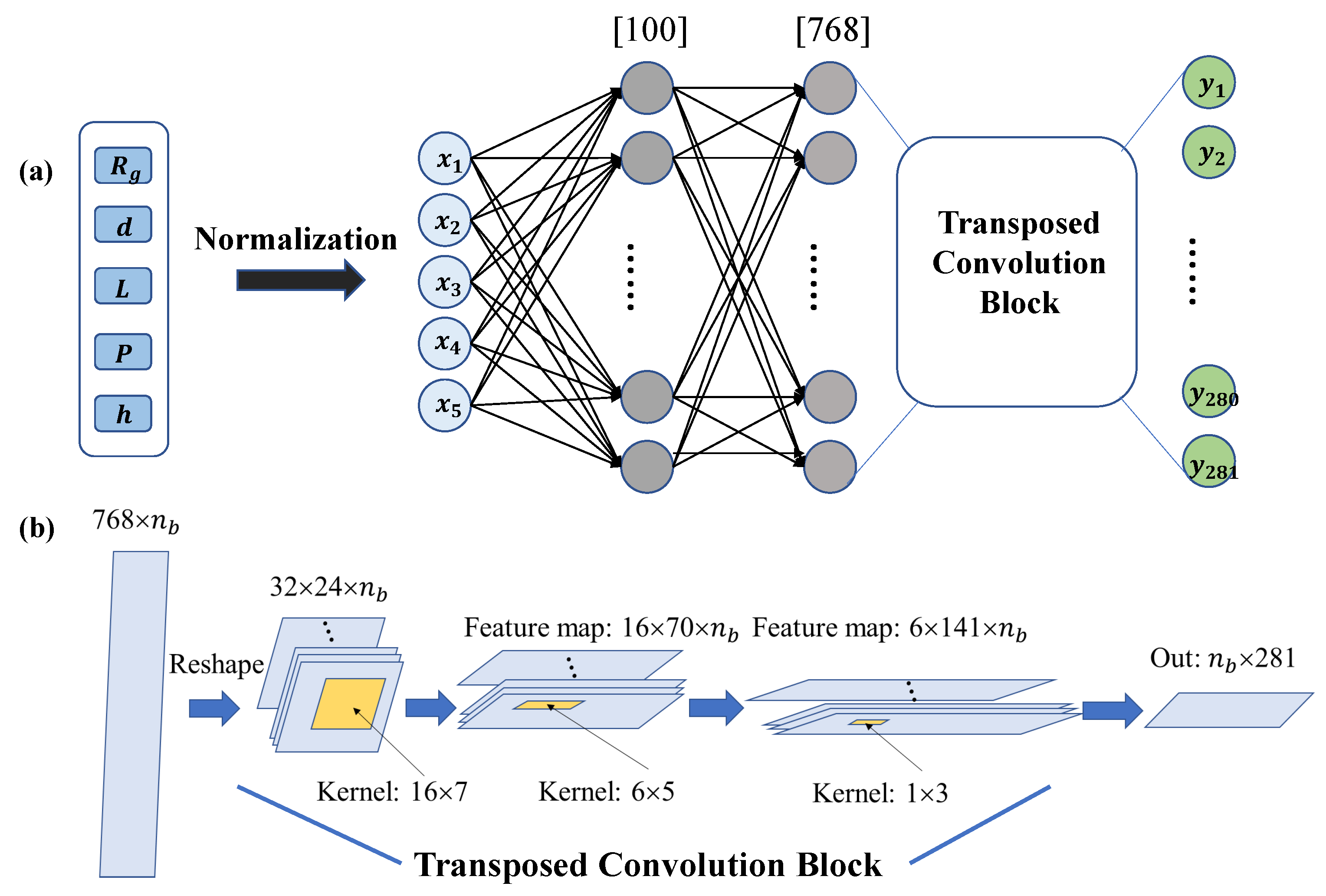

2.2. Machine-Learning Model

2.3. Inverse Design System

3. Experiments and Results

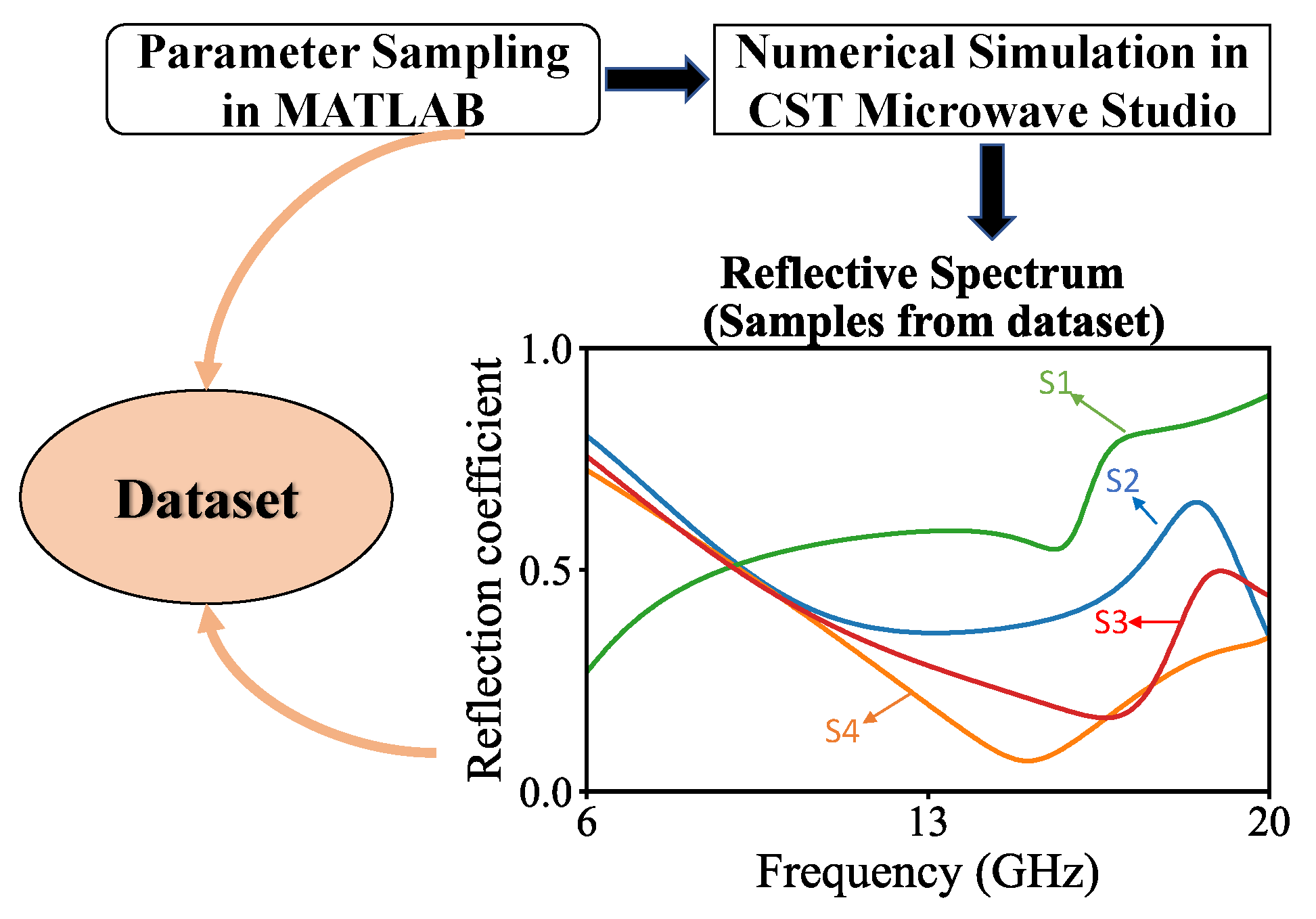

3.1. Dataset Collection

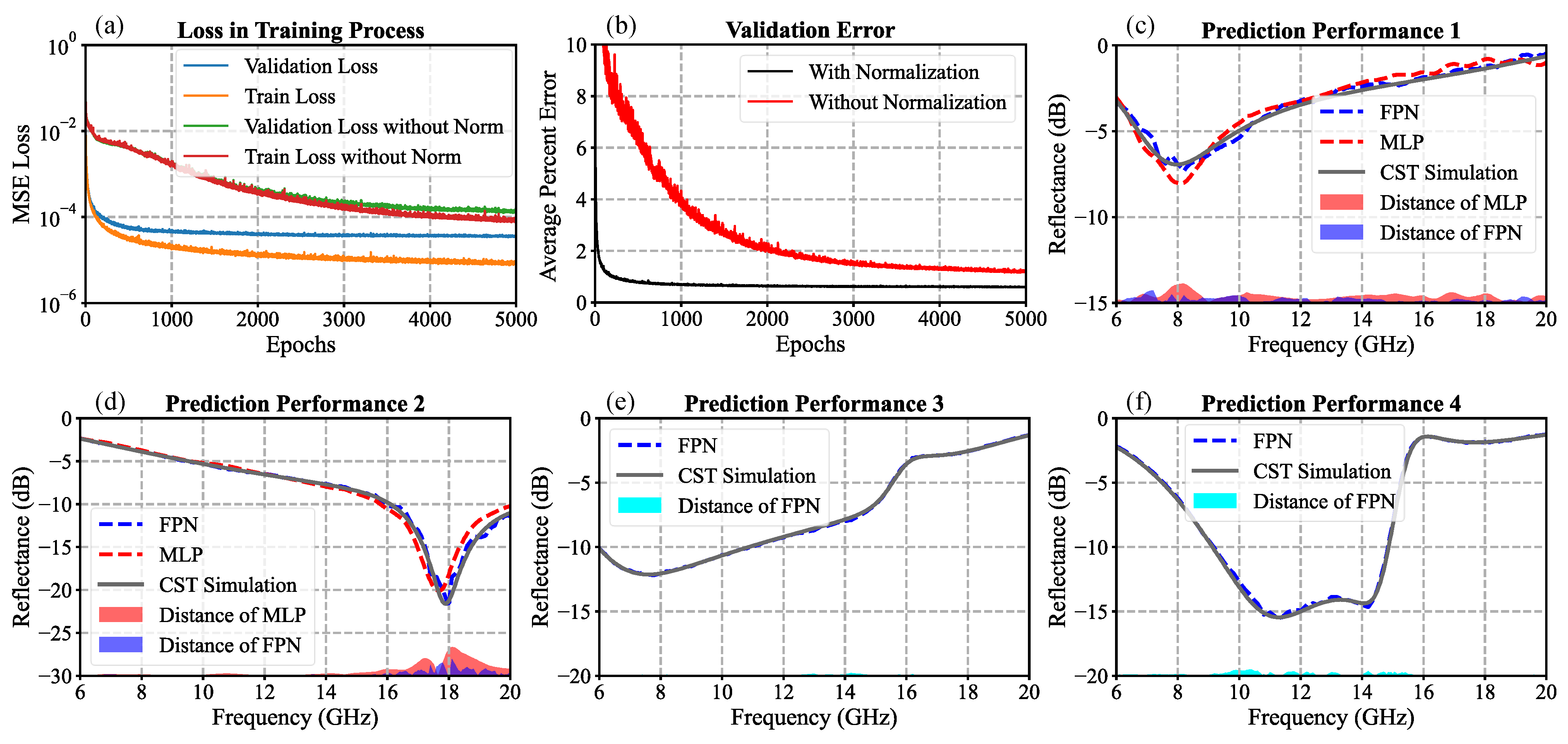

3.2. Performance of Forward Prediction

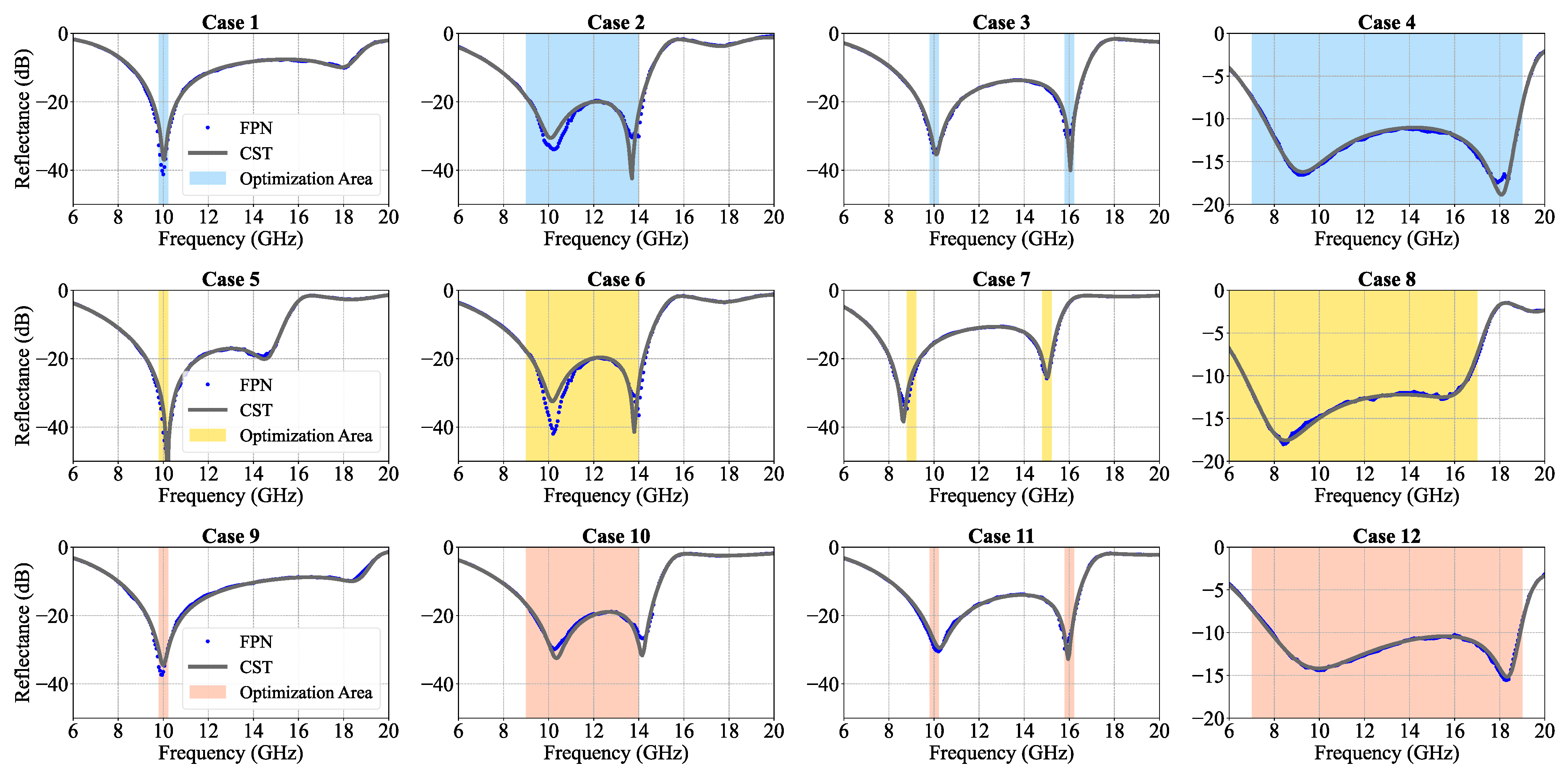

3.3. Results of Inverse Design System

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Design Parameters | Start | End |
|---|---|---|
| () | 132 | 300 |
| d (mm) | 1 | 6 |
| l (mm) | 5 | 11 |
| p (mm) | 8 | 14 |
| h (mm) | 2 | 4 |
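The ranges in the table above bound the design space. As a minimal sketch (not the authors' code), the snippet below encodes those ranges and applies min-max scaling to map a design vector into [0, 1], a common preprocessing step before passing geometric parameters to a neural network. Whether the paper scales its inputs this way is an assumption, and the key `x0` is a hypothetical stand-in for the first parameter, whose symbol and unit are missing from the table.

```python
# Design-space bounds copied from the table above.
# "x0" is a placeholder name (assumption): the first parameter's symbol
# and unit did not survive in the source table.
BOUNDS = {
    "x0": (132.0, 300.0),
    "d": (1.0, 6.0),    # mm
    "l": (5.0, 11.0),   # mm
    "p": (8.0, 14.0),   # mm
    "h": (2.0, 4.0),    # mm
}


def normalize(design):
    """Min-max scale each parameter to [0, 1] using BOUNDS."""
    scaled = {}
    for name, (lo, hi) in BOUNDS.items():
        value = design[name]
        if not lo <= value <= hi:
            raise ValueError(f"{name}={value} outside [{lo}, {hi}]")
        scaled[name] = (value - lo) / (hi - lo)
    return scaled


def denormalize(scaled):
    """Invert normalize(): map values in [0, 1] back to physical units."""
    return {
        name: lo + scaled[name] * (hi - lo)
        for name, (lo, hi) in BOUNDS.items()
    }


if __name__ == "__main__":
    # Midpoint of every range, used only as an illustrative input.
    midpoint = {"x0": 216.0, "d": 3.5, "l": 8.0, "p": 11.0, "h": 3.0}
    print(normalize(midpoint))
```

Rejecting out-of-range inputs, rather than clipping them, keeps a design that falls outside the sampled space from being silently treated as valid.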

| Hyperparameters | Values |
|---|---|
| Learning rate | 3 × 10 |
| Optimization method | Adam |
| Learning-rate decay | 5 × 10 |
| Loss function | MSELoss |

| Parameters | () | d (mm) | l (mm) | p (mm) | h (mm) |
|---|---|---|---|---|---|
| Case 1 | 136.42 | 1 | 6.36 | 11.63 | 2.6 |
| Case 2 | 149.48 | 5.29 | 7.23 | 14 | 3.57 |
| Case 3 | 153.8 | 3.43 | 6.49 | 12.26 | 3.14 |
| Case 4 | 132 | 3.73 | 7 | 10.62 | 2.91 |
| Case 5 | 171.48 | 4.42 | 7.58 | 13.17 | 3.5 |
| Case 6 | 154.39 | 4.85 | 7.04 | 14 | 3.5 |
| Case 7 | 179.47 | 2.38 | 7.35 | 13.18 | 3.5 |
| Case 8 | 132 | 4.75 | 7.58 | 11.38 | 3.5 |
| Case 9 | 250 | 1 | 6.28 | 10.43 | 3.16 |
| Case 10 | 250 | 2.3 | 7.3 | 14 | 3.41 |
| Case 11 | 250 | 1 | 6.86 | 12.5 | 3.16 |
| Case 12 | 250 | 1.11 | 7.72 | 10.84 | 2.83 |
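A quick consistency check on the twelve cases above is to confirm that each value lies inside the stated design ranges and to flag parameters that sit exactly on a limit. The snippet below is a minimal sketch of such a check, not part of the paper's method; the name `x0` is a hypothetical label for the first column, whose symbol and unit are missing from the source.

```python
# Column order follows the table; "x0" is a placeholder name (assumption)
# for the first parameter, whose symbol is missing in the source.
NAMES = ["x0", "d", "l", "p", "h"]
BOUNDS = [(132.0, 300.0), (1.0, 6.0), (5.0, 11.0), (8.0, 14.0), (2.0, 4.0)]

# Values copied from the table of cases.
CASES = {
    1: (136.42, 1, 6.36, 11.63, 2.6),
    2: (149.48, 5.29, 7.23, 14, 3.57),
    3: (153.8, 3.43, 6.49, 12.26, 3.14),
    4: (132, 3.73, 7, 10.62, 2.91),
    5: (171.48, 4.42, 7.58, 13.17, 3.5),
    6: (154.39, 4.85, 7.04, 14, 3.5),
    7: (179.47, 2.38, 7.35, 13.18, 3.5),
    8: (132, 4.75, 7.58, 11.38, 3.5),
    9: (250, 1, 6.28, 10.43, 3.16),
    10: (250, 2.3, 7.3, 14, 3.41),
    11: (250, 1, 6.86, 12.5, 3.16),
    12: (250, 1.11, 7.72, 10.84, 2.83),
}


def check_case(values):
    """Return (all_in_range, names of parameters exactly on a bound)."""
    in_range = all(lo <= v <= hi for v, (lo, hi) in zip(values, BOUNDS))
    on_bound = [
        name
        for name, v, (lo, hi) in zip(NAMES, values, BOUNDS)
        if v == lo or v == hi
    ]
    return in_range, on_bound


if __name__ == "__main__":
    for case_id, values in CASES.items():
        ok, edge = check_case(values)
        print(f"Case {case_id}: in range={ok}, on bound={edge}")
```

For the tabulated values, every case passes the range check, and several sit exactly on a limit (for example d = 1 mm in Case 1 and p = 14 mm in Case 2), which may indicate that the search was constrained by the chosen design space.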

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, N.; He, C.; Zhu, W. Lightweight Machine-Learning Model for Efficient Design of Graphene-Based Microwave Metasurfaces for Versatile Absorption Performance. Nanomaterials 2023, 13, 329. https://doi.org/10.3390/nano13020329
