A Reappraisal of the Threshold Hypothesis of Creativity and Intelligence

Abstract

1. Introduction

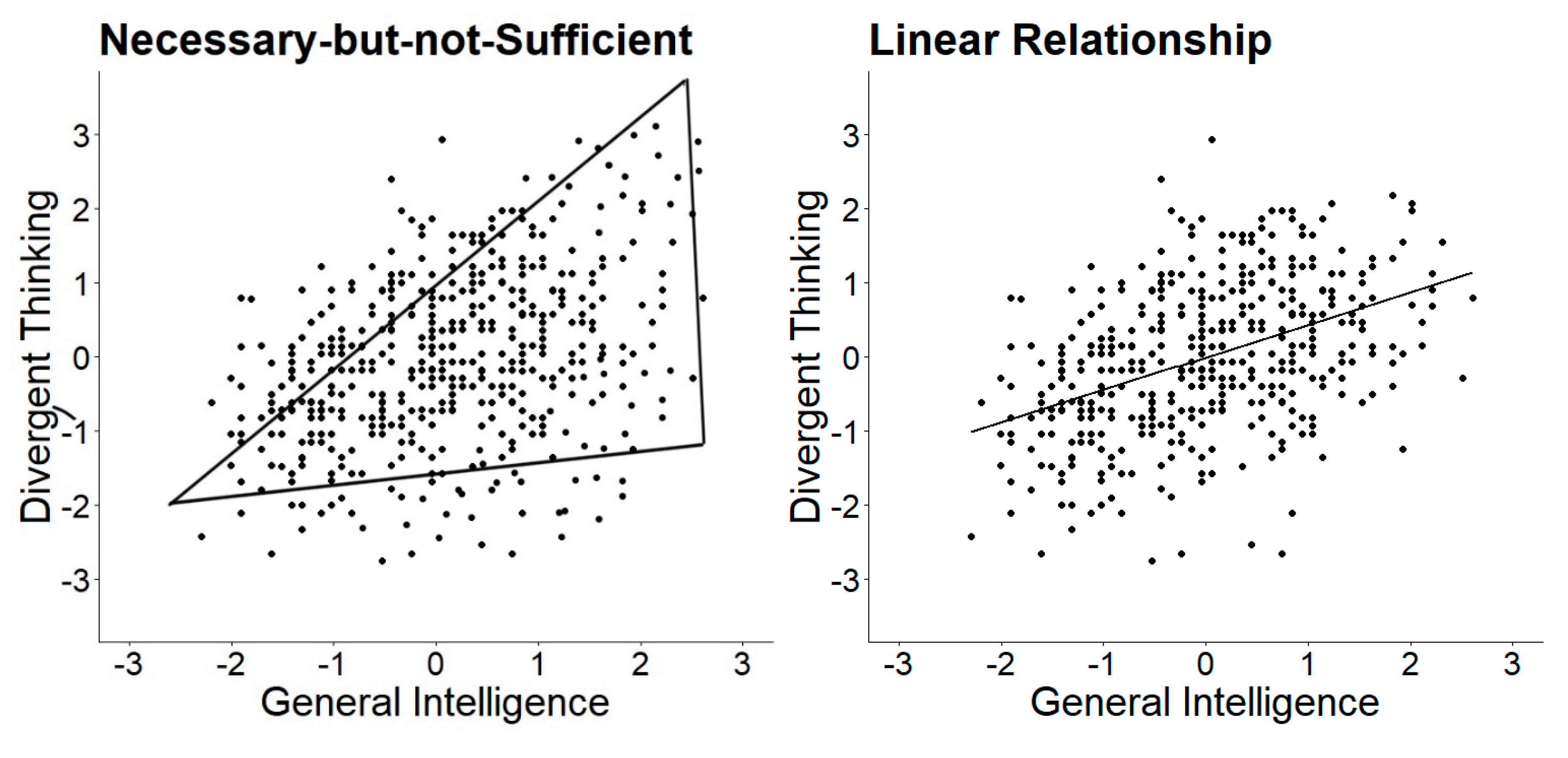

2. The Threshold Hypothesis of Creativity and Intelligence

3. Theoretical Underpinnings of the Threshold Hypothesis

4. Empirical Evaluation of the Threshold Hypothesis

5. Analytical Strategies in the Investigation of the Threshold Hypothesis

5.1. Correlational Analysis in Split Sample

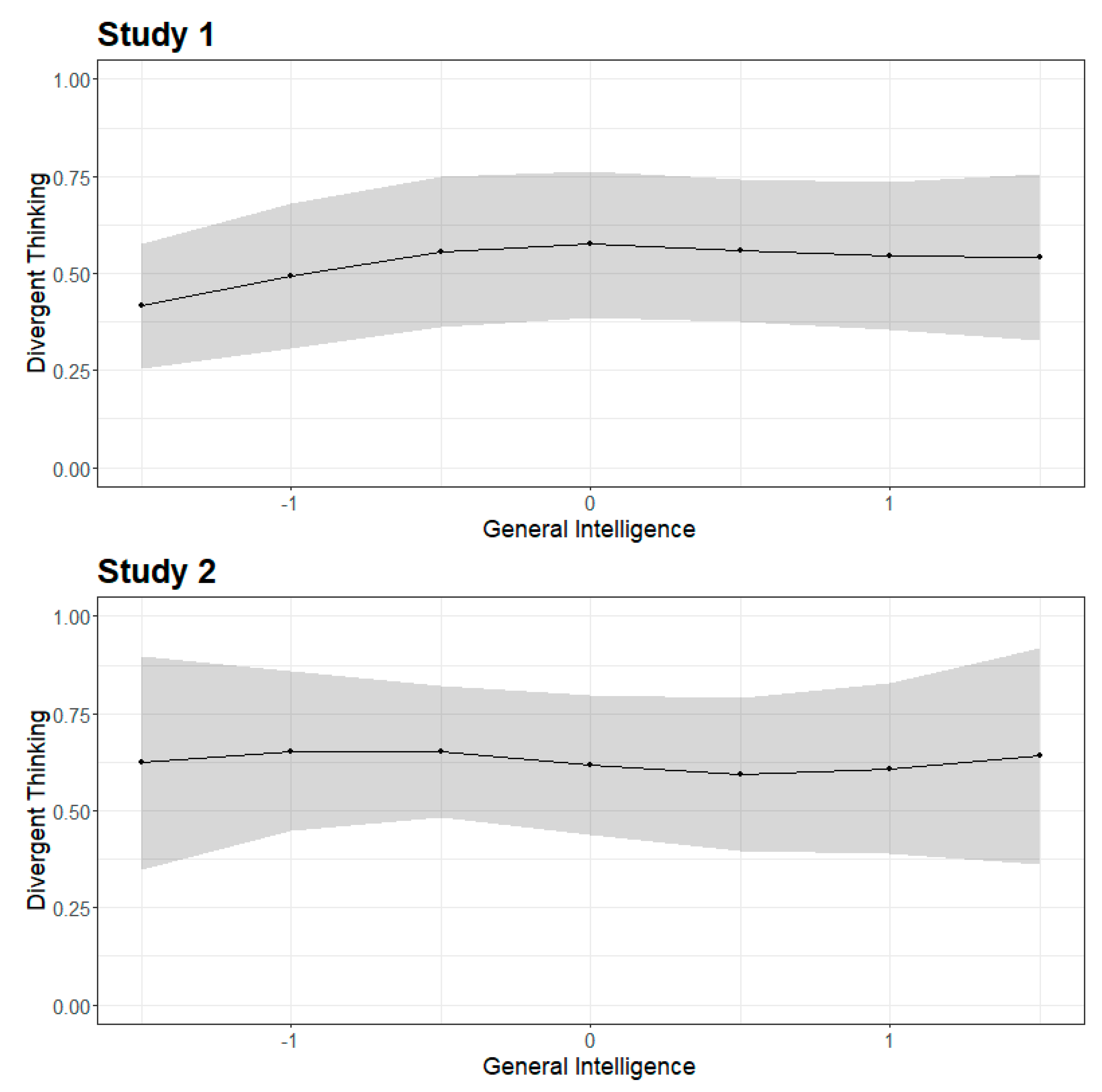

5.2. Segmented Regression Analysis

5.3. Local Structural Equation Modeling

6. The Present Studies

7. Method

7.1. Samples and Design

7.1.1. Study 1

7.1.2. Study 2

7.2. Measures and Scoring

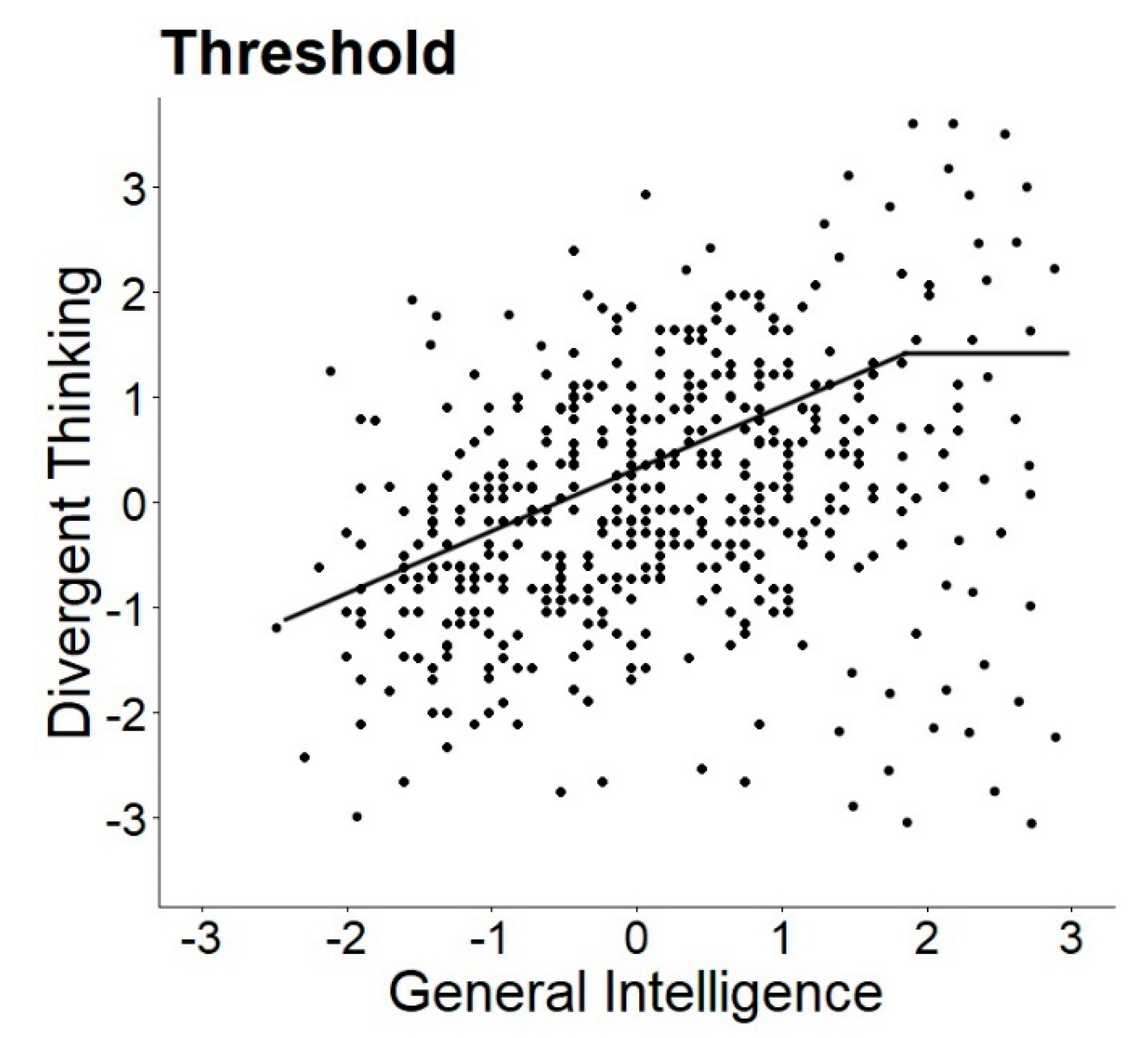

7.2.1. Study 1

7.2.2. Study 2

7.3. Statistical Analyses

7.3.1. Scatterplots and Heteroscedasticity

7.3.2. Segmented Regression Analysis

7.3.3. Local Structural Equation Modeling

7.4. Open Science

8. Results

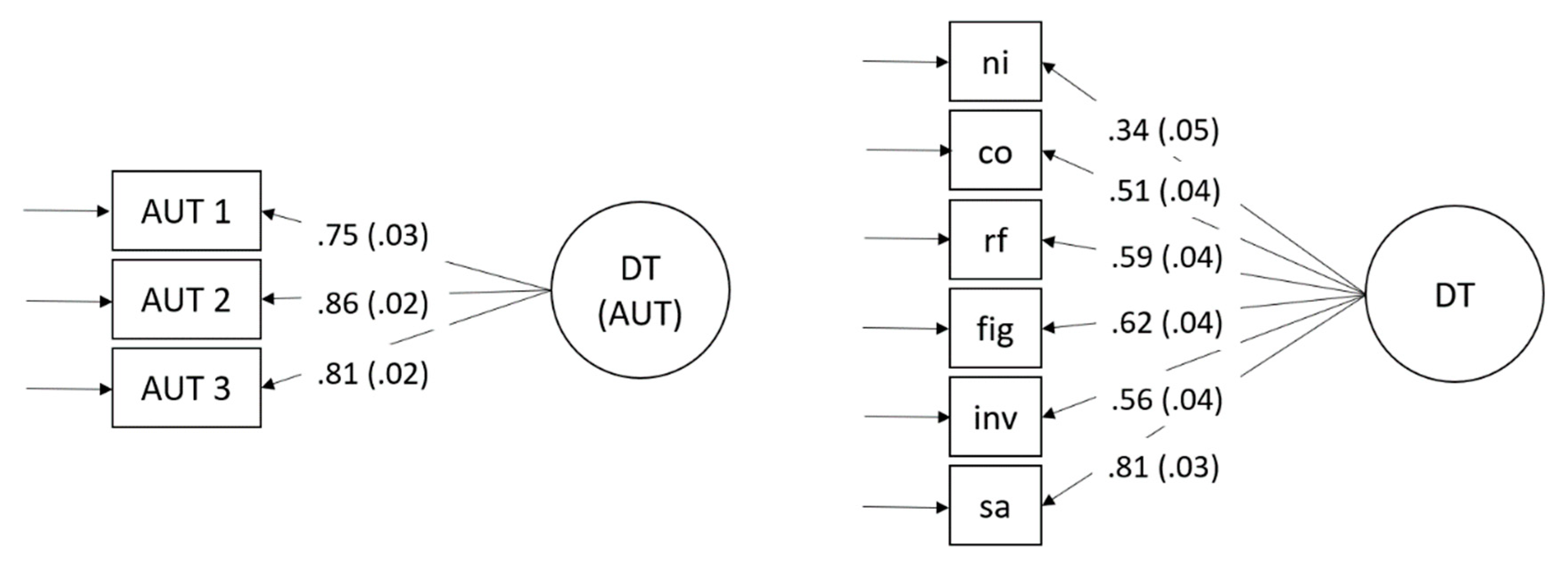

8.1. Scatterplots and Heteroscedasticity

8.2. Segmented Regression Analysis

8.3. Local Structural Equation Models

9. Discussion

9.1. Does a Threshold Exist?

9.2. Why Do Researchers Keep on Finding Evidence Anyway?

9.3. How Should We Approach the Threshold Hypothesis?

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Amabile, Teresa M. 1982. Social psychology of creativity: A consensual assessment technique. Journal of Personality and Social Psychology 43: 997–1013.

- Arendasy, M., L. F. Hornke, M. Sommer, J. Häusler, M. Wagner-Menghin, G. Gittler, and M. Wenzl. 2004. Manual Intelligence-Structure-Battery (INSBAT). Mödling: Schuhfried GmbH.

- Bakker, Marjan, Annette van Dijk, and Jelte M. Wicherts. 2012. The rules of the game called psychological science. Perspectives on Psychological Science 7: 543–54.

- Benedek, Mathias, Fabiola Franz, Moritz Heene, and Aljoscha C. Neubauer. 2012. Differential effects of cognitive inhibition and intelligence on creativity. Personality and Individual Differences 53: 480–85.

- Breit, Moritz, Martin Brunner, and Franzis Preckel. 2020. General intelligence and specific cognitive abilities in adolescence: Tests of age differentiation, ability differentiation, and their interaction in two large samples. Developmental Psychology 56: 364–84.

- Breusch, Trevor S., and Adrian R. Pagan. 1979. A simple test for heteroscedasticity and random coefficient variation. Econometrica 47: 1287–94.

- Byrne, Barbara M. 2010. Structural Equation Modeling with AMOS: Basic Concepts, Applications, and Programming, 2nd ed. London: Routledge.

- Carroll, John B. 1993. Human Cognitive Abilities: A Survey of Factor-Analytic Studies. Cambridge: Cambridge University Press.

- Cattell, Raymond B., and A. K. S. Cattell. 1960. Culture Fair Intelligence Test: Scale 2. Champaign: Institute for Personality and Ability Testing.

- Cho, Sun Hee, Jan Te Nijenhuis, Annelies E. M. Vianen, Heui-Baik Kim, and Kun Ho Lee. 2010. The relationship between diverse components of intelligence and creativity. The Journal of Creative Behavior 44: 125–37.

- Cropley, Arthur. 2006. In praise of convergent thinking. Creativity Research Journal 18: 391–404.

- Cummins, James. 1979. Linguistic interdependence and the educational development of bilingual children. Review of Educational Research 49: 222–51.

- Davies, Robert B. 2002. Hypothesis testing when a nuisance parameter is present only under the alternative: Linear model case. Biometrika 89: 484–89.

- Diedrich, Jennifer, Emanuel Jauk, Paul J. Silvia, Jeffrey M. Gredlein, Aljoscha C. Neubauer, and Mathias Benedek. 2018. Assessment of real-life creativity: The Inventory of Creative Activities and Achievements (ICAA). Psychology of Aesthetics, Creativity, and the Arts 12: 304–16.

- Dul, Jan. 2016. Necessary condition analysis (NCA): Logic and methodology of “necessary but not sufficient” causality. Organizational Research Methods 19: 10–52.

- Dumas, Denis. 2018. Relational reasoning and divergent thinking: An examination of the threshold hypothesis with quantile regression. Contemporary Educational Psychology 53: 1–14.

- Fay, E., G. Trost, and G. Gittler. 2001. Intelligenz-Struktur-Analyse (ISA). Frankfurt: Swets Test Services.

- Finke, Ronald A. 1990. Creative Imagery: Discoveries and Inventions in Visualization. Mahwah: Lawrence Erlbaum Associates, Inc.

- Forthmann, Boris, David Jendryczko, Jana Scharfen, Ruben Kleinkorres, Mathias Benedek, and Heinz Holling. 2019. Creative ideation, broad retrieval ability, and processing speed: A confirmatory study of nested cognitive abilities. Intelligence 75: 59–72.

- French, John W., Ruth B. Ekstrom, and Leighton A. Price. 1963. Manual for Kit of Reference Tests for Cognitive Factors (Revised 1963). Princeton: Educational Testing Service.

- Fuchs-Beauchamp, Karen D., Merle B. Karnes, and Lawrence J. Johnson. 1993. Creativity and intelligence in preschoolers. Gifted Child Quarterly 37: 113–17.

- Garrett, Henry E. 1946. A developmental theory of intelligence. American Psychologist 1: 372–78.

- Gelman, Andrew, and Jennifer Hill. 2006. Data Analysis Using Regression and Multilevel/Hierarchical Models. Cambridge: Cambridge University Press.

- Getzels, Jacob W., and Philip W. Jackson. 1962. Creativity and Intelligence: Explorations with Gifted Students. Hoboken: Wiley.

- Gilhooly, K. J., Evie Fioratou, S. H. Anthony, and V. Wynn. 2007. Divergent thinking: Strategies and executive involvement in generating novel uses for familiar objects. British Journal of Psychology 98: 611–25.

- Goecke, Benjamin, Selina Weiss, Diana Steger, Ulrich Schroeders, and Oliver Wilhelm. 2020. It’s more about what you don’t know than what you do know: Perspectives on overclaiming. Intelligence 81.

- Guilford, J. P., and Paul R. Christensen. 1973. The one-way relation between creative potential and IQ. The Journal of Creative Behavior 7: 247–52.

- Guilford, Joy Paul. 1967. The Nature of Human Intelligence. New York: McGraw-Hill.

- Harris, Alexandra M., Rachel L. Williamson, and Nathan T. Carter. 2019. A conditional threshold hypothesis for creative achievement: On the interaction between intelligence and openness. Psychology of Aesthetics, Creativity, and the Arts 13: 322–37.

- Hartung, Johanna, Laura E. Engelhardt, Megan L. Thibodeaux, K. Paige Harden, and Elliot M. Tucker-Drob. 2020. Developmental transformations in the structure of executive functions. Journal of Experimental Child Psychology 189: 104681.

- Hartung, Johanna, Philipp Doebler, Ulrich Schroeders, and Oliver Wilhelm. 2018. Dedifferentiation and differentiation of intelligence in adults across age and years of education. Intelligence 69: 37–49.

- Heitz, Richard P., Nash Unsworth, and Randall W. Engle. 2005. Working memory capacity, attention control, and fluid intelligence. In Handbook of Understanding and Measuring Intelligence. Edited by O. Wilhelm and R. W. Engle. Thousand Oaks: Sage Publications, pp. 61–78.

- Hildebrandt, Andrea, Oliver Lüdtke, Alexander Robitzsch, Christopher Sommer, and Oliver Wilhelm. 2016. Exploring factor model parameters across continuous variables with local structural equation models. Multivariate Behavioral Research 51: 257–58.

- Hildebrandt, Andrea, Oliver Wilhelm, and Alexander Robitzsch. 2009. Complementary and competing factor analytic approaches for the investigation of measurement invariance. Review of Psychology 16: 87–102.

- Holling, Heinz, and Jörg-Tobias Kuhn. 2008. Does intellectual giftedness affect the factor structure of divergent thinking? Evidence from a MG-MACS analysis. Psychology Science Quarterly 50: 283–94.

- Hülür, Gizem, Oliver Wilhelm, and Alexander Robitzsch. 2011. Intelligence differentiation in early childhood. Journal of Individual Differences 32: 170–79.

- Ilagan, Michael John, and Welfredo Patungan. 2018. The relationship between intelligence and creativity: On methodology for necessity and sufficiency. Archives of Scientific Psychology 6: 193–204.

- Ioannidis, John P. A. 2005. Why most published research findings are false. PLoS Medicine 2: e124.

- Jäger, A. O., Heinz Martin Süß, and A. Beauducel. 1997. Berliner Intelligenzstruktur-Test: BIS-Test. Göttingen: Hogrefe.

- Jauk, Emanuel, Mathias Benedek, Beate Dunst, and Aljoscha C. Neubauer. 2013. The relationship between intelligence and creativity: New support for the threshold hypothesis by means of empirical breakpoint detection. Intelligence 41: 212–21.

- Karwowski, Maciej, and Jacek Gralewski. 2013. Threshold hypothesis: Fact or artifact? Thinking Skills and Creativity 8: 25–33.

- Karwowski, Maciej, Dorota M. Jankowska, Arkadiusz Brzeski, Marta Czerwonka, Aleksandra Gajda, Izabela Lebuda, and Ronald A. Beghetto. 2020. Delving into creativity and learning. Creativity Research Journal 32: 4–16.

- Karwowski, Maciej, Jan Dul, Jacek Gralewski, Emanuel Jauk, Dorota M. Jankowska, Aleksandra Gajda, Michael H. Chruszczewski, and Mathias Benedek. 2016. Is creativity without intelligence possible? A Necessary Condition Analysis. Intelligence 57: 105–17.

- Karwowski, Maciej, Marta Czerwonka, and James C. Kaufman. 2018. Does intelligence strengthen creative metacognition? Psychology of Aesthetics, Creativity, and the Arts 14: 353–60.

- Kaufman, Alan S., and Nadeen L. Kaufman. 1993. The Kaufman Adolescent and Adult Intelligence Test Manual. Circle Pines: American Guidance Service.

- Kim, Kyung Hee. 2005. Can only intelligent people be creative? A meta-analysis. Journal of Secondary Gifted Education 16: 57–66.

- Legree, Peter J., Mark E. Pifer, and Frances C. Grafton. 1996. Correlations among cognitive abilities are lower for higher ability groups. Intelligence 23: 45–57.

- MacCallum, Robert C., Shaobo Zhang, Kristopher J. Preacher, and Derek D. Rucker. 2002. On the practice of dichotomization of quantitative variables. Psychological Methods 7: 19–40.

- McGrew, Kevin S. 2009. CHC theory and the human cognitive abilities project: Standing on the shoulders of the giants of psychometric intelligence research. Intelligence 37: 1–10.

- Meade, Adam W., and S. Bartholomew Craig. 2012. Identifying careless responses in survey data. Psychological Methods 17: 437–55.

- Molenaar, Dylan, Conor V. Dolan, Jelte M. Wicherts, and Han L. J. van der Maas. 2010. Modeling differentiation of cognitive abilities within the higher-order factor model using moderated factor analysis. Intelligence 38: 611–24.

- Muggeo, Vito M. R. 2008. Segmented: An R package to fit regression models with broken-line relationships. R News 8: 20–25.

- Mumford, Michael D., and Tristan McIntosh. 2017. Creative thinking processes: The past and the future. The Journal of Creative Behavior 51: 317–22.

- Muthén, Linda K., and Bengt O. Muthén. 2002. How to use a Monte Carlo study to decide on sample size and determine power. Structural Equation Modeling 9: 599–620.

- Neubauer, Aljoscha C., Anna Pribil, Alexandra Wallner, and Gabriela Hofer. 2018. The self–other knowledge asymmetry in cognitive intelligence, emotional intelligence, and creativity. Heliyon 4: e01061.

- Nusbaum, Emily C., and Paul J. Silvia. 2011. Are intelligence and creativity really so different? Fluid intelligence, executive processes, and strategy use in divergent thinking. Intelligence 39: 36–45.

- Olaru, Gabriel, Ulrich Schroeders, Johanna Hartung, and Oliver Wilhelm. 2019. Ant colony optimization and local weighted structural equation modeling. A tutorial on novel item and person sampling procedures for personality research. European Journal of Personality 33: 400–19.

- Preacher, Kristopher J., Derek D. Rucker, Robert C. MacCallum, and W. Alan Nicewander. 2005. Use of the extreme groups approach: A critical reexamination and new recommendations. Psychological Methods 10: 178–92.

- Preckel, Franzis, Heinz Holling, and Michaela Wiese. 2006. Relationship of intelligence and creativity in gifted and non-gifted students: An investigation of threshold theory. Personality and Individual Differences 40: 159–70.

- Raven, John, John C. Raven, and John H. Court. 2003. Manual for Raven’s Progressive Matrices and Vocabulary Scales. Section 1: General Overview. San Antonio: Harcourt Assessment.

- Robitzsch, Alexander. 2020. sirt: Supplementary Item Response Theory Models. Available online: https://rdrr.io/github/alexanderrobitzsch/sirt/man/sirt-package (accessed on 20 January 2020).

- Rosseel, Yves. 2012. Lavaan: An R package for structural equation modeling and more. Version 0.5–12 (BETA). Journal of Statistical Software 48: 1–36.

- Runco, Mark A. 2008. Commentary: Divergent thinking is not synonymous with creativity. Psychology of Aesthetics, Creativity, and the Arts 2: 93–96.

- Ryan, Sandra E., and Laurie S. Porth. 2007. A Tutorial on the Piecewise Regression Approach Applied to Bedload Transport Data. Washington: US Department of Agriculture, Forest Service, Rocky Mountain Research Station.

- Schoppe, Karl-Josef. 1975. Verbaler Kreativitäts-Test-VKT: ein Verfahren zur Erfassung verbal-produktiver Kreativitätsmerkmale. Göttingen: Verlag für Psychologie CJ Hogrefe.

- Shi, Baoguo, Lijing Wang, Jiahui Yang, Mengpin Zhang, and Li Xu. 2017. Relationship between divergent thinking and intelligence: An empirical study of the threshold hypothesis with Chinese children. Frontiers in Psychology 8: 254.

- Silvia, Paul J. 2015. Intelligence and creativity are pretty similar after all. Educational Psychology Review 27: 599–606.

- Silvia, Paul J., Beate P. Winterstein, John T. Willse, Christopher M. Barona, Joshua T. Cram, Karl I. Hess, Jenna L. Martinez, and Crystal A. Richard. 2008. Assessing creativity with divergent thinking tasks: Exploring the reliability and validity of new subjective scoring methods. Psychology of Aesthetics, Creativity, and the Arts 2: 68–85.

- Silvia, Paul J., Roger E. Beaty, and Emily C. Nusbaum. 2013. Verbal fluency and creativity: General and specific contributions of broad retrieval ability (Gr) factors to divergent thinking. Intelligence 41: 328–40.

- Simmons, Joseph P., Leif D. Nelson, and Uri Simonsohn. 2011. False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science 22: 1359–66.

- Simonsohn, Uri. 2018. Two lines: A valid alternative to the invalid testing of U-shaped relationships with quadratic regressions. Advances in Methods and Practices in Psychological Science 1: 538–55.

- Sligh, Allison C., Frances A. Conners, and Beverly Roskos-Ewoldsen. 2005. Relation of creativity to fluid and crystallized intelligence. The Journal of Creative Behavior 39: 123–36.

- Spearman, Charles. 1927. The Abilities of Man. New York: MacMillan.

- Steger, Diana, Ulrich Schroeders, and Oliver Wilhelm. 2020. Caught in the act: Predicting cheating in unproctored knowledge assessment. Assessment.

- Süß, Heinz Martin, and André Beauducel. 2005. Faceted models of intelligence. In Handbook of Understanding and Measuring Intelligence. Edited by Oliver Wilhelm and Randall W. Engle. Thousand Oaks: Sage Publications.

- Takakuwa, Mitsunori. 2003. Lessons from a paradoxical hypothesis: A methodological critique of the threshold hypothesis. Paper presented at the 4th International Symposium on Bilingualism. Tempe: Arizona State University, p. 12.

- Terman, Lewis, and Maud Merrill. 1973. Manual for the Third Revision (Form LM) of the Stanford-Binet Intelligence Scale. Boston: Houghton Mifflin.

- Torrance, E. Paul. 1981. Thinking Creatively in Action and Movement. Earth City: Scholastic Testing Service.

- Torrance, E. Paul. 1999. Torrance Tests of Creative Thinking: Thinking Creatively with Pictures, Form A. Earth City: Scholastic Testing Service.

- Tucker-Drob, Elliot M. 2009. Differentiation of cognitive abilities across the life span. Developmental Psychology 45: 1097–118.

- Urban, Klaus K. 2005. Assessing creativity: The test for creative thinking—drawing production (TCT-DP). International Education Journal 6: 272–80.

- Van Der Maas, Han L., Conor V. Dolan, Raoul P. Grasman, Jelte M. Wicherts, Hild M. Huizenga, and Maartje E. Raijmakers. 2006. A dynamical model of general intelligence: The positive manifold of intelligence by mutualism. Psychological Review 113: 842–61.

- Vandenberg, Robert J., and Charles E. Lance. 2000. A review and synthesis of the measurement invariance literature: Suggestions, practices, and recommendations for organizational research. Organizational Research Methods 3: 4–70.

- Wechsler, David. 1981. WAIS Manual; Wechsler Adult Intelligence Scale-Revised. New York: The Psychological Corporation.

- Weiss, Selina, Diana Steger, Yadwinder Kaur, Andrea Hildebrandt, Ulrich Schroeders, and Oliver Wilhelm. 2020a. On the trail of creativity: Dimensionality of divergent thinking and its relation with cognitive abilities and personality. European Journal of Personality.

- Weiss, Selina, Oliver Wilhelm, and Patrick Kyllonen. 2020b. A review and taxonomy of creativity measures. Psychology of Aesthetics, Creativity, and the Arts. Submitted.

- Whitehead, Nedra, Holly A. Hill, Donna J. Brogan, and Cheryl Blackmore-Prince. 2002. Exploration of threshold analysis in the relation between stressful life events and preterm delivery. American Journal of Epidemiology 155: 117–24.

- Wicherts, Jelte M., Coosje L. S. Veldkamp, Hilde E. M. Augusteijn, Marjan Bakker, Robbie C. M. van Aert, and Marcel A. L. M. van Assen. 2016. Degrees of freedom in planning, running, analyzing, and reporting psychological studies: A checklist to avoid p-hacking. Frontiers in Psychology 7: 1832.

- Wilhelm, Oliver, Ulrich Schroeders, and Stefan Schipolowski. 2014. Berliner Test zur Erfassung fluider und kristalliner Intelligenz für die 8. bis 10. Jahrgangsstufe [Berlin Test of Fluid and Crystallized Intelligence for Grades 8–10]. Göttingen: Hogrefe.

- Wilson, Robert C., J. P. Guilford, Paul R. Christensen, and Donald J. Lewis. 1954. A factor-analytic study of creative-thinking abilities. Psychometrika 19: 297–311.

- Zhang, Feipeng, and Qunhua Li. 2017. Robust bent line regression. Journal of Statistical Planning and Inference 185: 41–55.

Footnote 1: We understand creativity as the ability to produce divergent ideas; for the purposes of this paper, we therefore do not distinguish between creativity and divergent thinking and use the two terms interchangeably.

| Study | Sample | Analytical Method | Measures of Creative Ability (DT) | Measures of Intelligence | Results | Threshold (z-Standardized) |
|---|---|---|---|---|---|---|
| Guilford and Christensen (1973) | 360 (students) | Scatterplots | 10 verbal and figural DT tests 1 | e.g., Stanford Achievement Test | No Threshold | - |
| Fuchs-Beauchamp et al. (1993) | 496 (pre-schoolers) | Correlations in two IQ groups | Thinking Creatively in Action and Movement 2 | e.g., Stanford-Binet Intelligence Scale 8 | Threshold | 1.33 |
| Sligh et al. (2005) | 88 (college students) | Correlations in two IQ groups | Finke Creative Invention Task 3 | KAIT 9 | No Threshold | - |
| Preckel et al. (2006) | 1328 (students) | Correlations and Multigroup CFA | BIS-HB 4 | BIS-HB 4 | No Threshold | - |
| Holling and Kuhn (2008) | 1070 (students) | Multigroup CFA | BIS-HB 4 | Culture Fair Test 10 | No Threshold | - |
| Cho et al. (2010) | 352 (young adults) | Correlations in two IQ groups | Torrance Test 5 | e.g., WAIS 11 | Threshold | 1.33 |
| Jauk et al. (2013) | 297 (adults) | SRA | Alternate Uses and Instances 6 | Intelligence-Structure-Battery 12 | Threshold | −1.00 to 1.33 |
| Karwowski and Gralewski (2013) | 921 (students) | Regression analysis and CFA | Test for Creative Thinking-Drawing Production 7 | Raven’s Progressive Matrices 13 | Threshold | 1.00 to 1.33 |
| Shi et al. (2017) | 568 (students) | SRA, among others | Torrance Test 5 | Raven’s Progressive Matrices 13 | Threshold | 0.61 to 1.12 |
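The thresholds in the last column are reported in z-units. As a rough reading aid, the sketch below converts them to an IQ-style metric. It assumes a scale with mean 100 and standard deviation 15, which the table itself does not state; the function names `z_to_iq` and `iq_to_z` are hypothetical helpers introduced only for this illustration.

```python
# Illustrative sketch only: assumes an IQ metric with mean 100 and SD 15.
# The individual studies may have used differently normed instruments.
IQ_MEAN = 100.0
IQ_SD = 15.0


def z_to_iq(z, mean=IQ_MEAN, sd=IQ_SD):
    """Map a z-standardized score onto the assumed IQ metric."""
    return mean + sd * z


def iq_to_z(iq, mean=IQ_MEAN, sd=IQ_SD):
    """Map a score on the assumed IQ metric back onto z-units."""
    return (iq - mean) / sd


# z-standardized threshold values that appear in the table
reported_thresholds = [-1.00, 0.61, 1.00, 1.12, 1.33]

for z in reported_thresholds:
    print(f"z = {z:+.2f} -> IQ of about {z_to_iq(z):.0f}")
```

Under these assumptions, the recurring value of z = 1.33 corresponds to an IQ of roughly 120, and z = −1.00 to an IQ of 85.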

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Weiss, S.; Steger, D.; Schroeders, U.; Wilhelm, O. A Reappraisal of the Threshold Hypothesis of Creativity and Intelligence. J. Intell. 2020, 8, 38. https://doi.org/10.3390/jintelligence8040038
