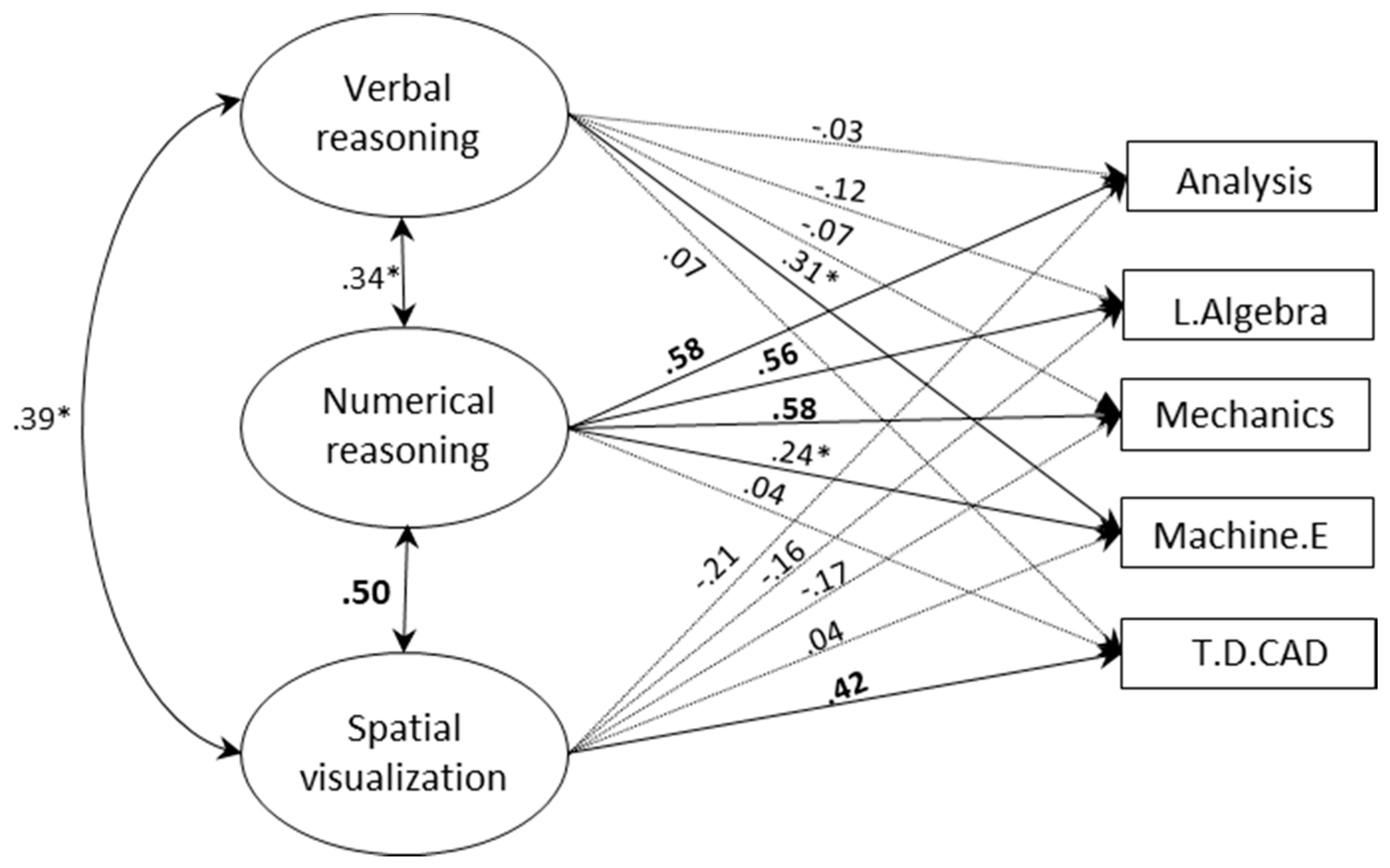

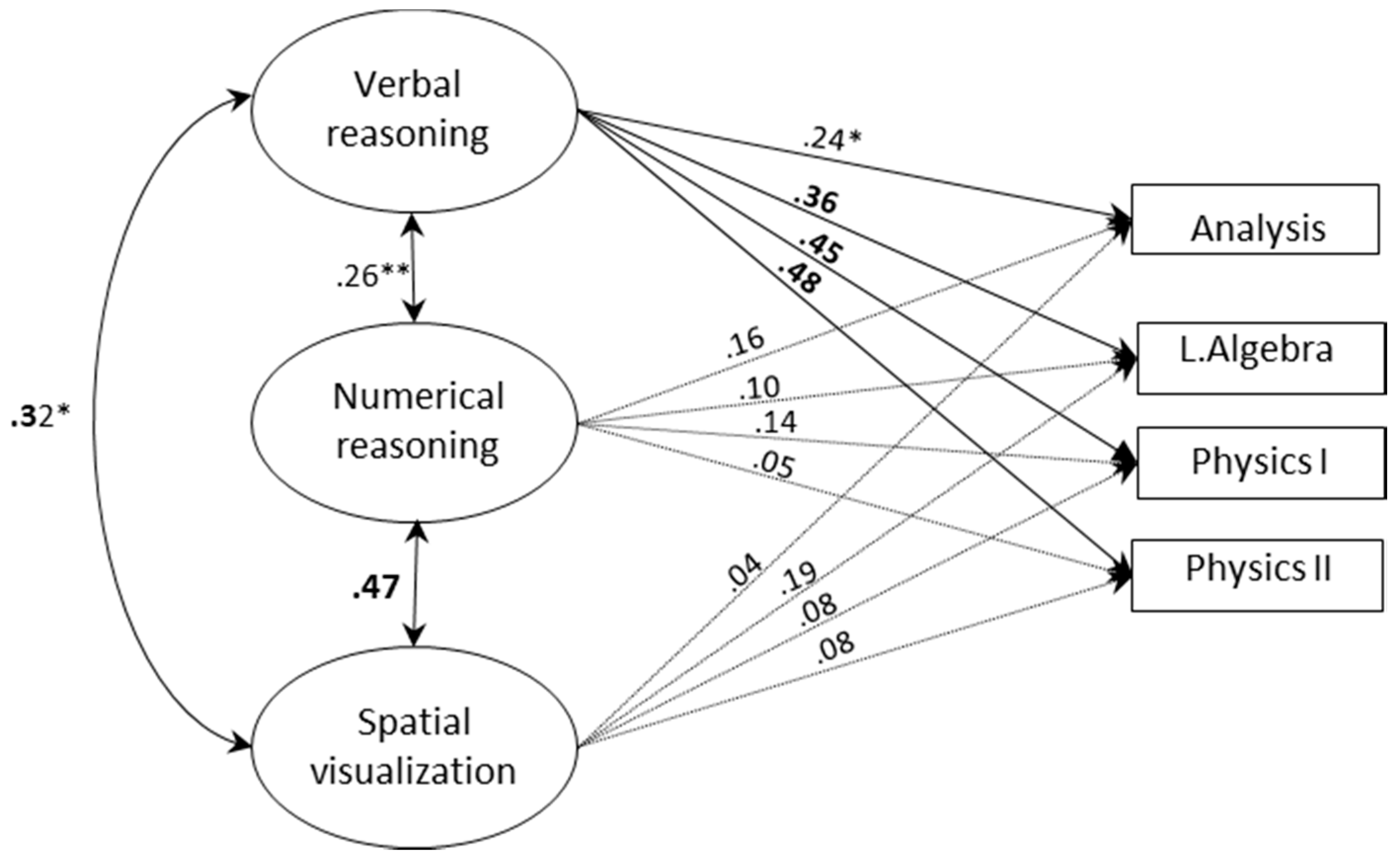

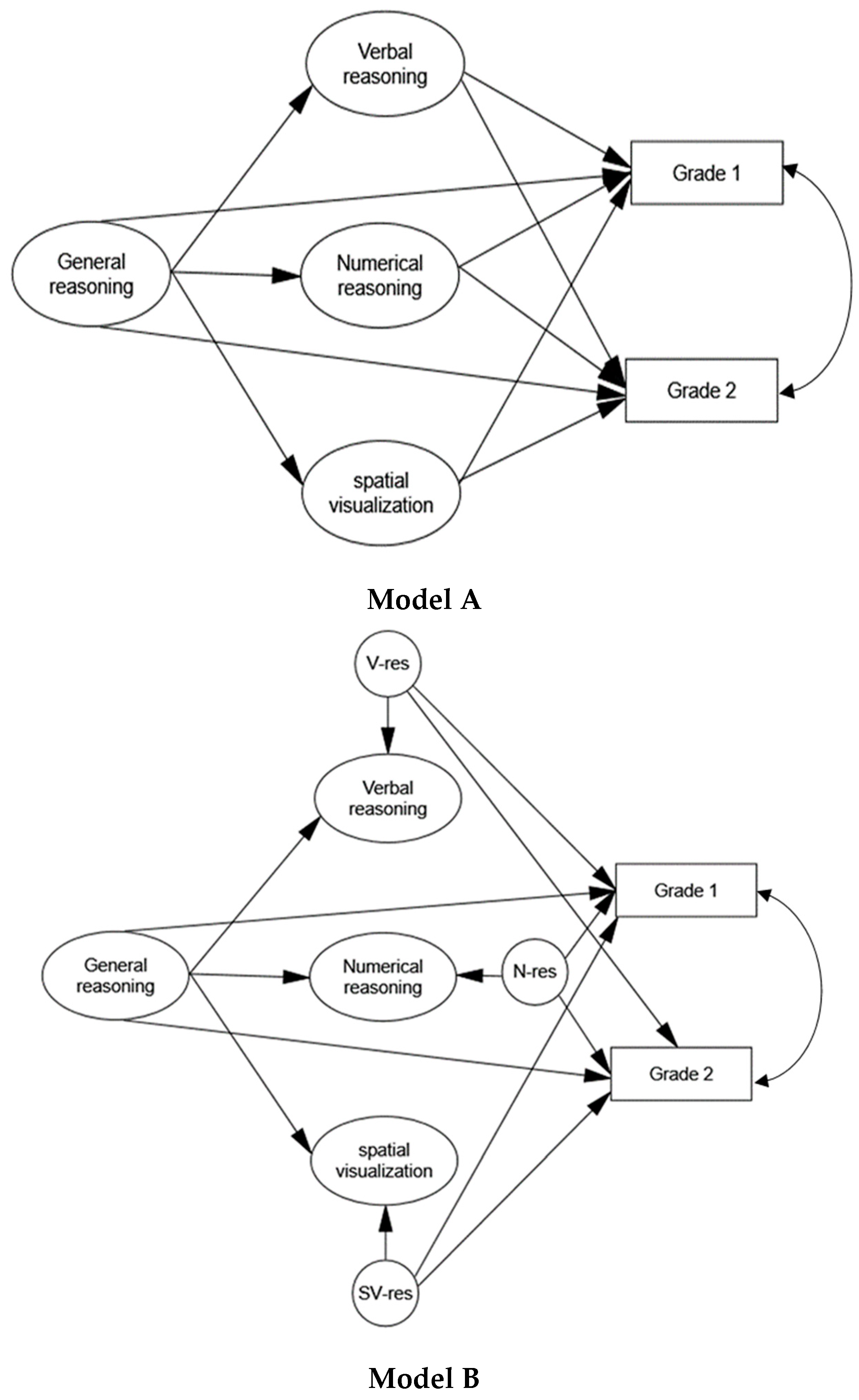

While previous research showed the unique predictive validity of early-assessed SA for long-term STEM achievements, we investigated whether ability differences among students who entered higher STEM education are linked with their achievements during the first undergraduate year. Our primary focus was on the particular role of SV ability for different STEM topics. Additionally, because we were dealing with a high-ability sample, our investigation also challenged the view that cognitive abilities forfeit their predictive power in highly selected samples. Unlike other studies on advanced STEM learning, we systematically differentiated between ability domains, achievement domains, study programs, and ability measures. Overall, our data clearly showed that ability differences in this high-ability population are relevant to achievement prediction, corroborating previous findings against a ‘threshold’ view of cognitive abilities [34,44,48,49]. The results were also clear with respect to our two research questions, namely whether SV predicts achievements in STEM courses beyond numerical and verbal reasoning abilities, and whether the predictive validity of SV differs between achievement domains. Across ability measures and study programs, SV did not uniquely predict achievements in math-intensive courses, which constitute a major part of the curriculum. In fact, SV was unrelated to the math and physics grades of engineering students even before controlling for other abilities. Among math-physics students, two cross-sectioning tests correlated significantly with math and physics grades, but without incremental effects over other abilities or a domain-general ability factor. In contrast, SV had unique predictive validity for achievements in an engineering technical drawing course. Moreover, numerical and verbal reasoning abilities uniquely predicted achievements in most math and physics courses. Thus, the predictions seem highly domain specific: differences in SV, even among the spatially talented, mattered when complex spatial tasks were in focus (technical drawing), whereas differences in numerical or verbal reasoning abilities among the mathematically talented mattered when math was in focus. Nonetheless, at least for math-physics students, the effects on math and physics grades were partly driven by domain-general factors, and when a general factor was controlled for, the effect of SV on technical drawing grades decreased. It therefore appears that, rather than broadly determining STEM success during the novice phase, as assumptions in prior research would suggest, SV emerged as a narrower STEM predictor than the other abilities, which in turn predicted success in a broader range of core STEM subjects.
When it comes to predicting mathematics achievements, SV thus seems to have a lower threshold than numerical and verbal abilities (i.e., a lower level is sufficient for success). We next discuss further explanations and implications of these results.
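The distinction drawn above between a simple correlation and unique (incremental) prediction can be illustrated with the standard formula for the squared multiple correlation of standardized variables, R² = r′R⁻¹r, where R holds the predictor intercorrelations and r the predictor–criterion correlations. The Python sketch below is purely illustrative: the function names and all correlation values are hypothetical and are not taken from the present data. In the example, SV correlates .20 with a math grade, but only because .20 = .40 × .50, that is, entirely through its overlap with numerical ability, so its incremental contribution (ΔR²) over numerical ability is zero.

```python
import numpy as np


def r_squared(R_xx, r_xy):
    """Squared multiple correlation from predictor intercorrelations (R_xx)
    and predictor-criterion correlations (r_xy), assuming standardized variables."""
    R_xx = np.atleast_2d(np.asarray(R_xx, dtype=float))
    r_xy = np.asarray(r_xy, dtype=float).ravel()
    beta = np.linalg.solve(R_xx, r_xy)  # standardized regression weights
    return float(r_xy @ beta)


def incremental_r2(R_xx, r_xy, base_idx, added_idx):
    """Delta R^2 when the predictors in added_idx enter after those in base_idx."""
    R_xx = np.asarray(R_xx, dtype=float)
    r_xy = np.asarray(r_xy, dtype=float)
    full = list(base_idx) + list(added_idx)
    r2_full = r_squared(R_xx[np.ix_(full, full)], r_xy[full])
    r2_base = r_squared(R_xx[np.ix_(base_idx, base_idx)], r_xy[base_idx])
    return r2_full - r2_base


# Hypothetical correlations (NOT from the study): index 0 = numerical, 1 = SV.
R_xx = [[1.0, 0.5],
        [0.5, 1.0]]
r_xy = [0.40, 0.20]  # correlation of each ability with a math grade

print(f"SV alone:          R^2 = {r_squared([[1.0]], [0.20]):.3f}")
print(f"SV over numerical: dR^2 = {incremental_r2(R_xx, r_xy, [0], [1]):.3f}")
```

Under these assumptions, a nonzero zero-order correlation and a null incremental effect are fully compatible, which mirrors the pattern described above for the cross-sectioning tests; the study's own analyses may have used a different statistical model.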

4.1. Predictions at a High-Ability Range

One may suspect that the lack of correlations between SV and math-based achievements results from a restriction of range in SV, and that positive correlations would emerge in more heterogeneous samples. We cannot rule out this possibility. However, our goal was to study predictors of achievement among students who select advanced STEM programs rather than among students in general. The different patterns of ability–achievement relations that we found indicate that, despite the high ability range in this group, there was sufficient variability to detect effects. For this reason, we find it unlikely that the weak correlations between SV and math achievements found here are entirely due to restriction of range; rather, we assume they indicate that SV is less relevant to some achievement domains. It is also noteworthy that the highly challenging test ‘Schnitte’, which was specifically designed for individuals with high SA, yielded the same pattern of links with grades as the other tests, even though its score range was broader.

Nonetheless, to elaborate on the more general case, it should be noted that all of the abilities were in the high range in this sample, numerical ability even more so than SV. Consequently, all of the effects are potentially underestimated if the findings are generalized to a broader population. In a less selective sample, the effects would thus be expected to be magnified accordingly, so that numerical and verbal abilities would still have stronger effects on math than SV. If a higher frequency of low SV scores were indeed necessary to find effects on math achievements, one possible implication is that poor SV ability is a stronger marker than exceptional SV ability of success or failure in advanced math learning. This would, in fact, be in line with findings on SV–math relations among students who performed poorly on SV tests (e.g., [35]). Finally, to the extent that SV ability predicts STEM achievements more strongly in a lower ability range, it remains to be determined whether this stems from spatial-visual factors or from domain-general ones. This point may be even more important in less selective samples, because the overlap between cognitive abilities (i.e., domain generality) tends to be stronger in lower ability ranges [67]. With higher variability in general ability, its contribution to achievement prediction is likely to be stronger.
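The argument that effects are underestimated in a highly selected sample can be made concrete with the classical Thorndike Case II correction for direct range restriction, r_c = r·u / √(1 − r² + r²u²), where u is the ratio of the unrestricted to the restricted predictor SD. This sketch assumes direct selection on the predictor, which is a simplification here because students were not selected on the tests themselves, and the correlations and SD ratios below are hypothetical values chosen for illustration, not estimates from the study. The correction is not strictly proportional; however, for a common u it preserves the rank order of correlations, and a more restricted predictor (larger u, as assumed here for numerical ability) receives a larger boost.

```python
import math


def correct_range_restriction(r, u):
    """Thorndike Case II correction for direct range restriction on the predictor.

    r: correlation observed in the restricted (selected) sample
    u: assumed ratio of unrestricted to restricted predictor SD (u >= 1)
    """
    if u < 1:
        raise ValueError("u should be >= 1 for a range-restricted sample")
    return r * u / math.sqrt(1 - r**2 + r**2 * u**2)


# Hypothetical values (NOT from the study): observed correlation with a math
# grade and an assumed SD ratio; numerical ability is assumed to be more
# range-restricted than SV, mirroring the description in the text.
examples = {"numerical": (0.35, 1.6), "SV": (0.10, 1.4)}

for name, (r_obs, u) in examples.items():
    r_corr = correct_range_restriction(r_obs, u)
    print(f"{name:>9}: observed r = {r_obs:.2f}, corrected r = {r_corr:.3f}")
```

With u = 1 (no restriction) the function returns the observed r unchanged, which is a quick sanity check on the formula.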

4.2. Correlates of Advanced Mathematics

It appears that for students who are not particularly poor in SV, being more talented spatially might not further contribute to achievements in math-intensive courses, at least in those that form the basis for even more advanced courses. That SV nonetheless predicted technical drawing grades in this sample suggests that the courses differ substantially in their spatial demands. It is possible that advanced math courses, although highly demanding, do not draw on exceptional spatial skills in the way that more specialized engineering courses do. Math courses may focus more on translating complex spatial structures into abstract mathematical formalism than on superb visualization of such structures. Furthermore, ‘space’ in advanced math is often not entirely familiar from “real world” experience, for example when dealing with more than three dimensions. Although students may use mental images to visualize abstract math concepts, these do not necessarily require particularly high spatial skills, nor is it clear whether they are crucial for better learning. This does not mean that SV ability is unimportant to advanced math, but rather points to a possible limitation of SV as a cognitive resource for learning higher math. Additionally, our finding that positive correlations between one type of SV test (visualization of cross-sections) and some math achievements were mediated by factors common to other domains of reasoning suggests that factors other than visualization (which may be strongly present in some spatial tests) play a role when it comes to mathematics.

The results additionally suggest differential cognitive demands across math-intensive courses, since cognitive abilities related differently to math courses across study programs. As mentioned in the method section, mathematics is taught in essentially different ways in each of the study programs we investigated. An informal inquiry among math faculty (who teach these courses in both programs) informed us that for math-physics students, math is typically highly theoretical and centered on learning proofs. It has also been described as requiring an extreme change in the ways students are used to thinking about mathematics at the Gymnasium, as it introduces concepts that sometimes contradict their previously acquired knowledge and intuitions. Mathematics for mechanical engineers, although also highly abstract compared with Gymnasium classes, is typically more strongly linked with real-world applications and relies more on calculation than on proof. Although these observations are merely descriptive, we speculate that such differences in teaching approach have implications for the kinds of mental processes and abilities required for successful learning. For example, application-oriented mathematics may require high efficiency in using domain-specific skills and knowledge, which may explain the almost exclusive relation between numerical reasoning ability and math in the engineering group. Math that is more theoretical and proof-based may require additional reasoning abilities and rely less critically on calculation efficiency. The stronger domain-general ability–grade links in the math-physics group and the unique link with verbal reasoning may be seen as indications in this direction. Obviously, a more systematic investigation would be needed to confirm these observations. Nevertheless, the data provide initial evidence that even within the same mathematical areas (i.e., calculus and algebra), basic cognitive abilities can play different roles.

4.3. The Present Results in Light of Previous Research

Our results seem inconsistent with findings on the unique predictive validity of SA among students of extremely high ability (e.g., the top 0.01% in Lubinski and Benbow, 2006). There are some obvious differences between the present study and these earlier ones, primarily in scale and in the kinds of outcomes considered, and, more broadly, in that they were conducted in educational systems that may differ substantially. Such factors likely limit the comparability of results. Nevertheless, we assume some invariance in the cognitive aspects of STEM learning across sociocultural systems, and find additional explanations noteworthy. First, the timing of ability assessment might be critical, as ability differences during early adolescence are likely to be influenced by ongoing cognitive development. Thus, differences at an earlier age might reflect not only ability level per se, but also, for example, differences in developmental rates, which may considerably influence the prediction of future outcomes. Additionally, the studies on gifted adolescents used above-level testing (i.e., tests designed for college admission rather than for 13-year-olds), which ensured large score ranges within a highly selected group. It is plausible that more challenging tests would have yielded different results in our sample, though it is difficult to predict in what way. In particular, it is unclear whether higher variance further up the SV scale (i.e., due to more difficult tests) would have revealed effects where variance at lower levels did not. Also noteworthy is that, in predicting long-term achievements, early ability differences may be markers of general potential for learning and creativity more than they indicate which abilities are engaged in particular learning tasks.
Moreover, while the above studies predicted general achievement criteria (e.g., choices, degrees, publications), we focused on the interplay between abilities and specific achievements, which we assumed would more closely indicate which abilities are relevant to STEM learning. The differences in prediction patterns may therefore have stemmed, in part, from different choices of outcome criteria, and in this respect the two lines of research should be seen as complementary. Finally, measures of SA in previous studies might have involved more non-spatial factors than in the present one, and these might have influenced predictions. For example, in Wai et al. (2009), SA included a figural matrices test, which is a strong indicator of fluid reasoning, and a mechanical reasoning test, which is highly influenced by prior experience with mechanical rules. These factors may in themselves be important to STEM, but they do not necessarily reflect SV ability.

Studies that examined SA and advanced mathematics among STEM students are scarce. Miller and Halpern [44] found positive effects of SA training on physics but not math grades of high-ability STEM students, and concluded that SA training was not relevant to the content of math courses, which is consistent with our own conclusion. Some studies with younger populations also found small or no effects of SA on math performance [3], but others found more positive relations between SA and math, mostly among non-STEM students or with simpler forms of math [35,64]. Further research on the role of SA in advanced math learning therefore seems warranted.

Finally, we were surprised by the weak correlations between SV and physics grades in both groups, particularly for mechanics, which has previously been positively related to SV [10,68]. One possible explanation for this discrepancy is that students in our sample may have had higher levels of prior knowledge in science: first at entry, due to self-selection, and again at the time of achievement assessment (i.e., course exams), which reflected learning during an entire academic year. As suggested by Uttal and Cohen [16], SA may be particularly important when domain knowledge is low. Consistent with this, SV was related to mechanics problem solving [10,68] and to solving advanced math problems [36] among non-STEM students who had no formal knowledge of these topics. It follows that if students who select STEM programs have enough prior knowledge that SV ability is not ‘needed’ for many topics, then the connection between SV ability and STEM is more relevant before than after entry into undergraduate STEM. This is, in fact, consistent with findings that SA was particularly predictive of later choice of STEM compared with other domains [4]. Nonetheless, students in our sample can by no means be regarded as ‘experts’. Although they may have acquired STEM knowledge in high school, they are novices with respect to many new and challenging knowledge domains at the undergraduate level.