Abstract

The goal of this paper was to describe the context within which the PASS theory of intelligence was conceived and the reasons why this theory was used to guide the construction of the Cognitive Assessment System and the several versions of the Cognitive Assessment System, 2nd Edition. We also discuss validity issues such as equitable assessment of intelligence, using PASS scores to examine a pattern of strengths and weaknesses related to academic variability and diagnosis, and the utility of PASS scores for intervention. We provide summaries of the research that informs our suggestions that intelligence testing should be theory-based, not constrained by the seminal work of test developers in the early 1900s, and neurocognitive processes should be measured based on brain function.

1. Introduction

During most of the 1900s and the first quarter of the 2000s, group and individually administered intelligence tests played a key role in education and psychology. Since () built upon the work of Binet and Simon to create the 1916 Stanford–Binet, the scores his test provided have changed the course of countless people’s lives, for some in good ways and for others in bad ways (e.g., the eugenics movement). Intelligence tests have played an important part in a comprehensive assessment, as recognized by (), who wrote, “Investigation of the mental capacities of human beings may rationally be considered a matter of prime importance for the individual and for civilization” (p. v). Today, intelligence tests are among the tools most widely used by psychologists, and the scores these tests yield are the foundation of important decisions about children and adults ().

Despite the widespread use and the enormous impact intelligence tests have had, there has been and continues to be considerable controversy over their value, test fairness, interpretation, and even how to define and measure intelligence (e.g., ; ; ; ). Of all the issues surrounding intelligence tests, perhaps the two most important questions are: What kind of questions should be used to measure intelligence? And do these questions measure constructs that are defined by a theory of intelligence?

With reference to the Binet–Simon Scale, () stated that: “The scale… is not a theoretical work; it is the result of long investigations…in the primary schools of Paris, with both normal and sub normal children” (p. 41). They criticized “authors who have made a specialty of organizing new tests according to theoretical views, but who have made no effort patiently try them out in the schools” (p. 41). Binet and Simon’s examination of their tests helped them identify children in need of special education because of intellectual disability, and they made a distinction between the measure of intelligence and their method of classifying degrees of subnormal intelligence (). The tests they created were designed to be “simple, rapid, convenient, precise, heterogeneous, holding the subject in continued contact with the experimenter, and bearing principally upon the faculty of judgement” (p. 41). They did notice that “there are tests which require knowledge outside the intelligence of the child… that he has learned… from his parents or friends…and there are tests too exclusively scholastic, we have thought well to suppress” (). They continued, “This verbal superiority must certainly come from family life; the children of the rich are in a superior environment from the point of view of language; they hear a more correct language and one that is more expressive” (p. 320). In contrast, Terman included items dependent upon school learning in the 1916 version of the Stanford–Binet. He believed that “intelligence at the verbal and abstract levels is the highest form, the sine qua non, of mental ability” (). Subsequently, the Stanford–Binet was criticized because it relied “much too heavily with verbal and abstract material, thus penalizing the individual who for whatever reason, had been handicapped… by lack of opportunity to acquire and develop the use of the English language” (). 
Terman’s perspective on intelligence testing influenced the content of the US Army Alpha and Beta Tests (; ) via his student Arthur Otis.

Arthur Otis was instrumental in the development of the Army Mental Tests, which, in turn, influenced David Wechsler, who was trained in the School of Military Psychology () and aware of the Army Mental Tests as well as the Binet–Simon and other scales. The intelligence test Wechsler ultimately published in 1939 included subtests that were similar to those found in the Army Alpha and Beta (; ). Decades later, () noted that despite different titles and authors, intelligence tests developed to that point were “little more than tests of general intelligence, and thus are direct descendants of the Alpha and Beta which, in turn, were descendants of the Binet-Simon” (; ).

() noted that “Theoretical considerations have lagged behind the practical application of mental tests. We have been measuring intelligence long before we have decided as to what intelligence really is” (p. 1). The notion of general ability enunciated by () was widely accepted, yet as () explained, “We did not start with a clear definition of general intelligence… psychologists borrowed from every-day life a vague term implying all-round ability… and has been and still is attempting to define it more sharply and endow it with a stricter scientific connotation” (p. 53). Pintner foresaw the considerable efforts psychologists would make over the next 100 years to better understand and interpret what could be described as traditional intelligence tests. Perhaps an evolutionary change is needed.

It seems reasonable that the first question that should confront any intelligence test developer has been and should be, “What theory of intelligence will the test be built upon?” The question of test content would then be guided by the theory of intelligence. It also seems reasonable that the theory of intelligence should be based on an understanding of neurocognitive brain functions. We will provide a description of how neurocognitive abilities have been used to define intelligence and how test questions could be devised to represent those abilities. We will align those neurocognitive abilities to recent research on neural networks and examine various aspects of the validity of one such approach.

2. The PASS Neurocognitive Theory

The PASS theory () is rooted in the conceptualization of brain function as described by Alexander Luria (, , ). Das and Naglieri utilized Luria’s description of the basic neurocognitive processes to define intelligence (; ; ). Luria hypothesized that human cognitive functions can be conceptualized within a framework of three separate but interrelated brain systems that provide four basic psychological processes. These brain systems are referred to as functional units because the neurocognitive mechanisms associated with each work as separate but interrelated components. The four basic psychological processes they provide are Planning, Attention, Simultaneous, and Successive. Each of these neurocognitive abilities will be described in the sections that follow.

2.1. Planning

Planning is a neurocognitive ability used when a person decides how to complete a task using a strategy, self-monitoring, and self-correction, especially in novel situations (). Planning provides for the generation of new ways to solve problems, especially in situations where no method or solution is immediately apparent. Planning ability is also used when individuals reflect on events following a task that was completed, recognizing what worked and what did not work and anticipating other viable options to consider in the future.

CAS2 and CAS2: Brief subtests on the Planning scale vary in their content, but they all present the examinee with novel problems to solve. The examinee who creates a strategy completes the task more efficiently and, therefore, obtains a higher score. The Planned Codes subtest is a good example of a task that can be solved using a strategy. The subtest requires the child to write a specific letter code under the corresponding letter (e.g., XO for A, OX for B, etc.). Children who use a strategy, such as completing all the As and then the Bs, obtain higher scores than those who do not (). All three subtests on the Planning scale are more efficiently completed using a strategy.

Teacher observations of a student’s classroom behavior included on the CAS2: Rating Scale () can provide insight into a student’s ability to use Planning. For example, the teacher’s observations about how well the student solves novel tasks and how well a student can think of several ways to solve a problem can illustrate if and how a student is using Planning. It is important to consider, however, that if the classroom instruction is very structured and each student is taught to use the same method of solving problems, then the behavior in the class will reflect how well the student is following directions rather than how well the student could develop a variety of solutions.

2.2. Attention

Attention is a neurocognitive ability used to selectively focus on a specific stimulus while inhibiting responses to other stimuli. Attention is an essential component of intelligent behavior because it provides cortical arousal and higher forms of attention and is required for the recruitment of other neurocognitive processes. Optimal conditions of arousal are needed for the more complex forms of attention involving “selective recognition of a particular stimulus and inhibition of responses to irrelevant stimuli” (). Higher forms of attention include focused and selective cognitive activity, shifting attention based on salience, and resistance to distraction. The longer attention is needed, the more the activity requires effort.

As an example, the Expressive Attention subtest in the CAS2 requires the student to identify one aspect of a target stimulus (e.g., the color blue) and resist responding to distractions (e.g., the word “red” written in blue ink) as in the Stroop test (). This task requires resistance to distraction and focused, selective, sustained, and effortful activity (). Focused attention allows for the identification of a specific stimulus, selective attention provides the inhibition of responses to distracting stimuli, and sustained attention provides continued effort over time.

Classroom behaviors observed by the teacher on the CAS2: Rating Scale can reflect a student’s ability to attend and resist distractions over time. For example, behavioral evidence of good attention can be noted when the teacher observes how well a student can stay focused on their work despite distractions in the classroom as well as distracting thoughts. Similarly, solving a math problem such as 2 + 6 − 1 =? involves careful attention to the numbers and the signs in addition to knowing the math facts.

2.3. Simultaneous

Simultaneous processing is a neurocognitive ability used to integrate separate stimuli into a single whole or interrelated group. This ability is used when separate elements must be combined into a conceptual whole. This may involve visual–spatial as well as linguistic stimuli that require comprehensive grammatical structures. The spatial aspect of Simultaneous ability involves the perception of stimuli and their interrelationships as a whole and the use of visual images. The grammatical dimension of Simultaneous processing provides a way to integrate words into ideas through the comprehension of word relationships, prepositions, and inflections so the person can obtain meaning. It is important to recognize that Simultaneous processes can involve nonverbal as well as verbal content.

The Verbal Spatial Relations subtest is a good example of a task that demands Simultaneous processing. The test requires that the examinee understand the interrelationships of objects presented in six different scenes. The task is to identify which scene corresponds to a verbal statement (e.g., “which picture shows a ball under the table?”) provided by the examiner. The other two subtests on the Simultaneous scale (i.e., Matrices and Figure Memory) require understanding relationships, for example, among shapes.

Classroom behaviors can reflect the use of Simultaneous processing as measured by the CAS2: Rating Scale. Students who prefer hands-on materials and visual–spatial tasks like drawing designs, especially three-dimensional ones, and those who are good at patterns and complex shapes are usually good at Simultaneous processing. Simultaneous neurocognitive ability is also essential for identifying words as a whole (e.g., sight words), understanding grammar () and patterns in the spelling of words, verbal concepts, and reading comprehension dependent on getting the big picture.

2.4. Successive

Successive processing is a neurocognitive ability used when information is arranged in a specific sequence in which each part follows the other in a strictly defined order. Successive processing is used to manage any activity that is arranged in a sequence, for example, the formation of sounds and movements into a specific order. This ability is necessary for recalling information in order and understanding a statement based on the syntax of the language, as well as phonological analysis (; ). Successive processing is important for the initial acquisition of reading, decoding, remembering the sequence of motor movements, speech articulation, listening comprehension, and other tasks that require following sequential order.

The CAS2 and CAS2: Brief subtests that are used to measure Successive processing vary in their content, but all assess how well a student can manage a sequence of stimuli. The tasks demand repeating a sentence using the correct series of words as well as comprehension of sentences that are understood only by appreciating the sequence of words. The CAS2 also measures Successive processing across auditory and visual modalities using the Word Recall and Visual Digit Span subtests, respectively.

Classroom behaviors, including those on the CAS2: Rating Scale, can reflect a student’s facility working with information in order. For example, following a series of directions given by the teacher demands Successive processing. Successive processing is similarly involved in spelling and in reading, especially initial reading, when unfamiliar words must be decoded in sequence. It is critical when a student is presented with confusing words and must focus carefully on the pronunciation of sounds in order.

3. Measurement of PASS Theory Using CAS2

3.1. CAS2, CAS2: Brief & CAS2: Rating Scale

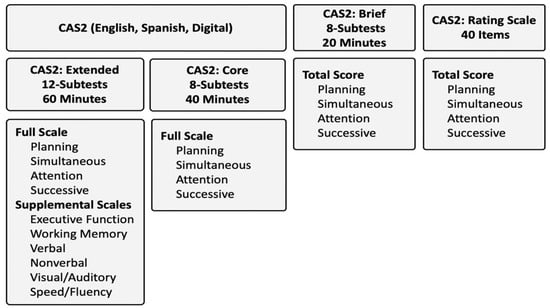

There are several ways to measure PASS neurocognitive abilities using the Cognitive Assessment System, Second Edition (CAS2; ). Practitioners have the option to use the CAS2 12-subtest Extended version, which yields standard scores for all subtests; the Full Scale; the Planning, Attention, Simultaneous, and Successive scales; and six Supplemental scores (see Figure 1). The CAS2 8-subtest Core version yields scores for all eight subtests and five standard scores (the four PASS scales and the Full Scale). Both versions, as well as the English, Spanish (), and Digital () formats, are scored using CAS2: Online Scoring and Report System (), which generates all scores and an interpretive report. The CAS2: Brief comprises four subtests that yield five standard scores (PASS and a Total Score). The eight subtests on the CAS2 Core are the same as those on the CAS2 Extended, whereas the subtests on the CAS2: Brief are similar to but not the same as those on the CAS2. Finally, the CAS2: Rating Scale comprises 10 items per PASS scale completed by a teacher and yields PASS and Total scores. Standard scores are set at a mean of 100 and a standard deviation of 15.
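To illustrate the score metric only, the following minimal sketch converts between z-scores and standard scores with a mean of 100 and a standard deviation of 15. It is a hypothetical illustration: the function names are assumptions and are not part of any CAS2 software, and the CAS2 itself is scored with the Online Scoring and Report System described above rather than with this linear formula.

```python
# Hypothetical helpers illustrating the standard-score metric (mean 100, SD 15).
# These are NOT the CAS2 scoring procedure; they only show what the metric means.

MEAN = 100.0  # mean of the standard-score metric stated in the text
SD = 15.0     # standard deviation of the standard-score metric stated in the text


def standard_score(z, mean=MEAN, sd=SD):
    """Express a z-score (distance from the mean in SD units) as a standard score."""
    return mean + sd * z


def z_score(score, mean=MEAN, sd=SD):
    """Express a standard score as a z-score."""
    return (score - mean) / sd
```

Under these assumptions, a standard score of 85 lies one standard deviation below the normative mean, and a standard score of 130 lies two standard deviations above it.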

Figure 1.

Cognitive Assessment System—2nd Edition.

One unique feature of the CAS2 and CAS2: Brief is that once the standard administration directions are provided, the examiner is allowed to use alternative means to ensure the examinee clearly understands what is required to complete the task. If the child does not seem ready or appears in any way confused or uncertain, the examiner may provide a brief explanation. The intent is to give the examiner full discretion to explain the demands of the subtest in whatever way is necessary, including gestures, verbal statements, or communication in any language, so that the child understands what to do. To date, we are not aware of any other measure of cognitive ability that allows this additional method. For more information about the different versions of the CAS2, including, for example, PASS scale variability, psychometric analysis of differential item functioning, and score interpretations, see the respective Manuals.

The development and cultural adaptation of the CAS2 Español began with an initial translation of the CAS into Spanish, undertaken in 2000 by a group at the University of Puerto Rico led by Wanda Rodriquez. These researchers used a method called “back translation”, in which the test is translated from English to Spanish and then it is translated back from Spanish to English. The administration and scoring manual, the test’s written materials, and the test scoring sheet were translated using this method. The twelve CAS subtests were divided into two equal groups, and each group was assigned to a pair of translators. Each translator on the team worked independently on six subtests, and once the subtests were translated, the two translators on the same team compared their translations. Any disagreements were discussed, and when necessary, teams consulted a translator on the other team. When agreement was reached on the translation of their six subtests, one translator from each team joined to determine the consistency of the vocabulary used in the whole test. Once these processes were completed, the product was presented to two psychologists with broad experience in instrument translation, and they, in turn, checked for coherence between the English and Spanish versions. A similar approach was used for the CAS2—Spanish, but with a larger group of experts that included psychologists and educators from different geographical locations with knowledge of different Spanish dialects.

Within the normative sample of the CAS2, Hispanic males and females were proportionally well represented, consistent with the 2011 US census. According to the US census, Hispanics ages 5 to 21 years constituted 21% of the population, and the CAS2 matched this within its normative sample. The validity of using PASS scores from the CAS with Hispanic examinees has been examined in several studies of racial and ethnic group differences. () found that CAS Full Scale scores for Hispanic and White children differed by 4.8 points when demographic differences were statistically controlled. They also reported that the correlations between CAS scores and achievement did not differ significantly for the Hispanic and White samples. () compared PASS scores obtained on the CAS when administered in English and Spanish to bilingual children referred for reading problems. The children earned similar Full Scale scores on the English (mean of 84.6) and Spanish (mean of 87.6) versions of the CAS, and the scores from the two versions were highly correlated (r = 0.96). Additionally, () studied the performance of referred Hispanic ELLs on the English and Spanish versions of the CAS and reported that the Full Scale scores on the English (mean of 86.4) and Spanish (mean of 87.1) versions were very similar and highly correlated (r = 0.99, corrected for range restriction). These findings for the CAS suggest that ability may be more fairly assessed across racial and ethnic groups with the PASS neurocognitive approach.

3.2. CAS2 Versions

The rationale behind the development of the various versions of the CAS2 was driven by the ways different practitioners could obtain and use PASS scores. For example, the CAS2 8- and 12-subtest versions offer a comprehensive examination of a person’s neurocognitive abilities, which can be used for diagnostic decision-making and instructional planning. The CAS2: Brief can be used as a screening tool for possible learning problems and decisions related to instructional planning, as well as for re-evaluations and gifted identification. The CAS2: Rating Scale can be used with the CAS2 and CAS2: Brief to determine the similarity of the scores across these measures. For a full discussion of these versions of the CAS2, see Essentials of CAS2 Assessment () and the respective test Manuals.

3.3. Neuropsychological Underpinnings

The CAS and the CAS2 were created using the PASS Theory as a guide; the theory itself was based on Luria’s understanding of how the brain operates. Although sophisticated neuroscientific resources that exist today did not exist in the times of Luria, his understanding of how the brain works still stands as valid (e.g., ). The functional units of the brain he described can today be understood as functional networks. These networks involve several cortical and subcortical structures that are in constant flux of neural activity based on environmental demands. For example, studies using functional imaging technology (; ; ) have shown that each area of the brain participates in numerous large and small-scale functional systems within and across cortical and subcortical brain structures. Supportive research in neuroscience literature has shown that functional systems combine and dissolve at different times and on fast timescales across tasks (; , ). These networks have a profound impact on constructs such as attention, executive function, learning and memory, and information processing. () clearly stated that cognitive activity is the result of an interplay of complex functional systems, yet each system makes unique contributions. His assertion remains true today.

No part of the brain functions in isolation, and any given cortical region has a degree of information-processing specificity for a cognitive ability or part of cognitive operations (; ; ; ). This specificity is referred to as functional specialization. As originally put forth by the work of Luria, effective performance on any given task is characterized by the functional integration of distal brain regions. This union represents the transitory, dynamic, context-specific communications that transfer information via subsets of anatomical connections among a limited number of brain regions engaged by a cognitive process ().

Luria’s work on developing cognitive constructs and corresponding behaviors as manifestations of the operations of brain systems became known as functional units of the brain. In more recent terminology, this is equivalent to the well-recognized concept of brain networks. Table 1 represents a conceptualization of how PASS processes relate to functional units and how these relate to large-scale neural networks. For a detailed discussion on PASS processes, functional units, and their relationship to neural networks, see ().

Table 1.

PASS, functional units, and Neuro-networks.

The basic neurocognitive processes (PASS) responsible for the cognitive activity underlying intelligence and behavior represent a “working constellation” () of networks. Just as a variety of neural networks operate in a dynamic manner for a particular task, a person may execute the same task using any combination of the PASS processes, along with the application of the person’s knowledge and skills. Although completing almost any task is accomplished through the integration of all processes, not every process is equally involved in every task. In addition, a task may be approached using varying combinations of processes, depending on how the task was initially taught or learned. For example, tasks like math calculation may be dominated by a single process (e.g., Planning), while tasks such as reading decoding may be strongly related to another process (e.g., Successive) while also recruiting other neurocognitive processes. Reading comprehension of familiar text may, for example, recruit both Simultaneous and Successive processes, while reading something composed of unfamiliar content may require an additional process to be recruited. The dynamic way PASS abilities intersect provides a way of using a person’s neurocognitive processing strengths to address the PASS weaknesses involved in the learning process.

3.4. Test Content and Equitable Assessment

Now that the PASS theory and its operationalization in the CAS2 have been presented, we can begin to cover the practical implications of having a theoretical basis for a test of intelligence. Recall that two interrelated issues raised at the start of this paper are closely related to the equitable assessment of intelligence: (a) the need for a theory of intelligence and (b) the need for test content that is aligned with that theory. A theory of intelligence should provide the vision for the cognitive structure of the tasks used to measure intelligence. For example, the PASS theory provided a description of what kind of thinking the subtests should evoke. From our theory, this means the following:

- Planning subtests should measure how well a person creates and uses strategies to complete a task.

- Attention subtests should measure how well a person can focus and resist distractions.

- Simultaneous subtests should measure how well a person can understand relationships among things.

- Successive subtests should measure how well a person can manage the sequence of a task.

When the Cognitive Assessment System was initially built, the measurement of the PASS basic psychological processes could have been achieved using tasks that demand knowledge. For example, written composition was used as a measure of Planning by (), but a subtest like that would have reflected knowledge as well as Planning and, therefore, was not deemed appropriate. This issue is important because the concept of fairness described in the Standards for Educational and Psychological Testing () has two components: psychometric test bias and test content. Psychometric test bias has been examined in several studies. For example, () reported similar correlations between PASS scores on the CAS and academic achievement test scores across racial groups. () found similar correlations with achievement for Hispanic and White students. () reported that none of the dichotomously scored items on the CAS2 were found to be biased across gender, race, and ethnicity using differential item functioning (DIF) analysis. Factorial invariance of PASS scores was reported by (). They reported multigroup confirmatory factor analysis results, which supported the configural invariance of the CAS factor structure (i.e., the PASS scales) between Italian (N = 809) and American children in 5- to 7-year-old and 8- to 18-year-old groups. These analyses are informative, but in addition to psychometric test bias, test content is also related to test fairness.

The Standards for Educational and Psychological Testing state that “opportunity to learn…can influence the fair and valid interpretations of test scores” (p. 56). “Opportunity to learn is a fairness issue when [there is] differential access to opportunity to learn for some individuals and then holds those individuals who have not been provided that opportunity accountable for their test performance… [even if the test] may not be biased” (p. 57). Equitable assessment can be maximized when all examinees have an equal opportunity to display their ability to answer the questions on a test, and fairness can be thwarted by the inclusion of questions that demand knowledge some may not have had the opportunity to acquire. The Standards also state that “Test users should be alert to potential misinterpretations of test scores… [and] take steps to minimize or avoid foreseeable misinterpretations and inappropriate uses of test scores” (p. 143). However, there is a history of using tests that demand knowledge (e.g., vocabulary, word analogies, arithmetic word problems) to measure intelligence, and in some instances, very similar test questions appear on intelligence and achievement tests (; ).

The similarity in content across intelligence and achievement tests was noted by () when he wrote, “inspection of the contents of most IQ tests reveals that many test items could be repurposed as items in an achievement test (e.g., vocabulary, general knowledge, and mental arithmetic items)” (p. 287). () suggested that differences in knowledge between African Americans and Whites were related to differences in intelligence test scores, which could be eliminated when there is equal opportunity for exposure to the information to be tested. Other researchers (e.g., ) have suggested that intelligence tests that do not rely on knowledge would be more equitable. Despite these cautions, intelligence tests continue to include items that demand verbal knowledge and general information and use arithmetic word problems (). The continued use of questions that demand knowledge in a cognitive test has historical precedent but warrants justification.

Terman defended the use of verbal tests in the 1916 Stanford–Binet because he believed responses to verbal questions represented the highest form of mental ability. More recently, () argued that “verbal and quantitative abilities add importantly to the prediction of academic success” (p. 276). Some might consider the logic behind this position circular. That is, verbal and arithmetic questions are considered good measures of intelligence because they correlate with verbal and math achievement test scores. Similarly, () wrote, “the SAT-Mathematics and SAT-Verbal composite is an excellent measure of IQ or general intelligence” (p. 4). () asserted that “Scores on [reading comprehension and mathematics can be] used as a proxy for IQ [because a] reading test is a measure of verbal comprehension and [a] mathematics test is a measure of ‘quantitative reasoning’, and both of these are major components of general intelligence (e.g., ; )” (p. 95).

() used the 2007 PISA reading and math scores as a measure of intelligence (IQ) to compare children across regions in Italy. He concluded, “The lower IQ in southern Italy may be attributable to genetic admixture with populations from the Near East and North Africa” (p. 9). Lynn’s conclusion was challenged by (), who found little difference between southern and northern Italian children on Raven’s Progressive Matrices () and PASS scores from the Italian version of the Cognitive Assessment System (). D’Amico et al. argued that the differences in the PISA verbal and math scores reflected differences in children’s educational opportunities, not intelligence, and their results suggested that measuring intelligence with tests that are not dependent upon knowledge was more valid and equitable. Regardless of the rationale for the use of intelligence tests that demand knowledge, test content has considerable implications for fair assessment.

The correspondence between test questions that demand knowledge and test fairness across intelligence tests can be understood by examining average test score differences across racial and ethnic groups. () explored this question for group and individually administered intelligence tests and found larger racial and ethnic differences on tests that include knowledge than on tests with minimal knowledge. Table 2 provides a broader summary of the available research. The results suggest that tests that require knowledge yield larger differences in total standard scores by race (Mn = 9.4 standard score points) and ethnicity (Mn = 6.6). In contrast, tests that require minimal knowledge yield smaller average score differences by race (Mn = 4.3) and ethnicity (Mn = 2.9). These findings suggest a relationship between intelligence test content and test equity.
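As a rough illustration of scale, the average differences reported above can be re-expressed in standard deviation units by dividing each by the standard deviation of the standard-score metric. The following is a minimal sketch rather than an analysis from the source research; the dictionary and function names are hypothetical, and the assumption that every test summarized reports standard scores with an SD of 15 is made here for illustration only.

```python
# Re-express the average standard-score differences from the text in SD units.
# ASSUMPTION (for illustration): all summarized tests use an SD of 15.

SD = 15.0

# Average differences in standard score points, as reported in the text.
mean_differences = {
    ("knowledge", "race"): 9.4,
    ("knowledge", "ethnicity"): 6.6,
    ("minimal knowledge", "race"): 4.3,
    ("minimal knowledge", "ethnicity"): 2.9,
}


def to_sd_units(points, sd=SD):
    """Convert a difference in standard score points to SD units."""
    return points / sd


sd_units = {key: round(to_sd_units(value), 2) for key, value in mean_differences.items()}

for (content, grouping), value in sd_units.items():
    print(f"{content:>17} | {grouping:<9} | {value:.2f} SD")
```

Under this assumption, the average difference by race is roughly 0.63 SD for tests that require knowledge and roughly 0.29 SD for tests that require minimal knowledge, which conveys the size of the contrast without changing the reported values.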

Table 2.

Standard score differences by race and ethnicity across intelligence tests.

Perhaps the best test of the hypothesis that knowledge demands lead to equity problems on group-administered IQ tests such as the CogAT and OLSAT, which provide verbal, nonverbal, and quantitative scores, comes from the results reported for the Naglieri General Ability Tests: Verbal, Nonverbal and Quantitative (; ). Race, ethnicity, gender, and parental education level differences on the Verbal, Nonverbal, and Quantitative tests of the Naglieri General Ability Tests were examined. These tests were explicitly designed to measure general ability without the knowledge demands found in traditional intelligence tests. That is, they have features that the authors suggested make them appropriate for diverse populations of students, which include the following: (a) each test’s directions are delivered using an animated scene like that experienced by the student being tested, so no verbal instructions are used; (b) no verbal response is required of the student; (c) the verbal test requires the student to identify a verbal concept represented in pictures and determine which image does not represent the concept; (d) the quantitative test uses questions that require close examination of the relationships among numbers and/or symbols, numerical sequences, and patterns involving only basic math; and (e) the nonverbal test uses questions that require examination of shapes presented in a pattern, sequence, spatial orientation, and other distinguishing characteristics to arrive at the correct answer in a manner similar to the Naglieri Nonverbal Ability Test, 3rd Edition (). These three tests have different content, but factor analytic results provide support for their validity as measures of a broad general ability factor (). The results for these three tests, presented in Table 2, support the view that the academic knowledge required in traditional intelligence tests likely contributes to differences across race and ethnicity.

It is important to recall that many psychologists have cautioned against including questions that demand knowledge in intelligence tests. These voices were largely ignored, and the early development of intelligence tests has had a lasting impact on the content of intelligence tests used today. We suggest that a fair assessment of intelligence must be achieved, and this is more likely to occur if a neurocognitive approach to test development and test content is followed. Perhaps intelligence tests should be conceived and developed on a theory of intelligence, and the test’s questions should measure the kind of thinking and problem-solving that is defined by the theory. To ensure that all students have an equal opportunity to do as well as they can on a measure of intelligence, test questions should measure how well students can answer the questions by thinking in a way that is not confounded by how much they know. This is the approach that was used when the Cognitive Assessment System was initially created in 1984.

4. Empirical Support for the PASS Theory as Measured Using the CAS2

The effort that led to the PASS theory was initiated by Das and colleagues (, , ) and included an extensive analysis of the methods used by Luria and related measures used in neuropsychology, as well as cognitive and educational psychology. The possible methods that could be used to measure Luria’s conceptualization of basic psychological processes and ultimate operationalization using the CAS were summarized in several books (e.g., ; ; ; ; ; ; , ). The publication of the CAS2 () and the CAS2: Brief () test Manuals provided additional evidence for PASS theory and were further described in Essentials of CAS2 Assessment (). We summarize additional validity research in the sections that follow.

4.1. PASS Correlations with Achievement

Psychologists often rely on the examination of intelligence test scores to understand academic strengths and weaknesses and to anticipate future academic achievement. This makes understanding the correlation between intelligence and achievement an important validity issue. Some (e.g., ) argue that school grades should be used to examine the relationship between intelligence and achievement. Others (e.g., ) noted that grades are “more influenced by the teacher’s idiosyncratic perceptions of the child’s apparent effort” (p. 278). We will present evidence of the relationship between intelligence and standardized achievement tests because these tests have demonstrated reliability. There is, however, a methodological limitation to this kind of research.

Studying the relationship between intelligence test scores and achievement is complicated by the similarity in the items on traditional intelligence tests and achievement tests (e.g., vocabulary, arithmetic word problems) (). The similarity in content gives some intelligence tests an advantage over those such as the CAS2, which does not include verbal and quantitative test items (). The first large-scale study of the relationship between PASS scores and achievement was reported by (). They examined the relationships between the PASS scores from the CAS and achievement scores from the Woodcock–Johnson Tests of Achievement–Revised (WJ-R; ) for a nationally representative sample of 1559 students and found an average correlation of 0.70.

() reported the correlations between several intelligence tests and achievement tests using two methods. First, the average correlation among all the scales on each intelligence test with an achievement test was computed. Second, the average of the scales on the intelligence tests that clearly did not demand knowledge was obtained. This enabled an understanding of how each intelligence test was correlated with achievement when the most achievement-like scale on the intelligence test was excluded. This procedure was conducted for the WISC-V and WIAT-III using data from the WISC-V manual (), Woodcock–Johnson IV (), and the K-ABC-II (). The findings showed that the correlation between each of these tests and achievement was higher when the scales that demand verbal knowledge were included. For example, the best explanation for why the Wechsler Verbal Comprehension scale and the WIAT-III were so highly correlated is the similarity in content across the two tests. Some (e.g., ) argue that this is evidence of validity. However, others may suggest that correlations between achievement and intelligence tests that contain questions that demand, for example, knowledge of words and arithmetic may be inflated because of the shared content. The correlations between intelligence tests that do not require knowledge and achievement tests may provide a more accurate estimate of the relationship between cognitive ability and achievement. What was most important was the correlation between the CAS and achievement; it was the highest of any of the correlations obtained with tests that demanded knowledge. A recent meta-analysis of the relationship between PASS scores on the CAS and achievement revealed the same findings.

() examined the relationships between PASS scores from the CAS with reading and math in 93 independent samples. They found that (a) PASS “cognitive processes (operationalized with CAS) can produce correlations that are stronger than those derived from popular IQ batteries (e.g., WISC) that include tasks (e.g., Arithmetic, Vocabulary) whose content is often confounded by school learning;” (p. 10) (b) PASS “processes have direct implications for instruction and intervention programming. For example, cognitive strategy instruction based on PASS processes has been found to improve children’s math calculation () and PASS Reading Enhancement Program (PREP) has been found to improve children’s decoding () and reading comprehension” () and (c) “the present meta-analysis adds to a growing body of research examining the role of intelligence in academic achievement (e.g., ; ) suggesting that there are significant benefits if we conceptualize intelligence as a constellation of cognitive processes that are linked to the functional organization of the brain” (p. 10).

4.2. Intelligence Test Profiles

There has been and continues to be considerable controversy about which scores on the various intelligence tests should and should not be interpreted when practitioners examine a profile of scores. The issue is centered around the amount of support that has been found for subtest, scale, or full-scale level interpretation. For example, Kaufman advocated for interpretation at many levels (). Other researchers argue that valid interpretation of the many scores typically provided “is dependent on how precisely each score reflects its intended construct and whether it provides unique information independent of other constructs” (). These researchers have found that the most valid score on, for example, the Wechsler Intelligence Scale for Children Fifth Edition (; ), Stanford–Binet Fifth Edition (), Differential Abilities Scales (), and the Woodcock–Johnson Fourth Edition () is the total score that estimates general ability, or g. Moreover, the reanalysis of John Carroll’s () survey of factor-analytic studies conducted by () came to the same conclusion. They wrote that nearly all the specified abilities presented by Carroll “have little-to-no interpretive relevance above and beyond that of general intelligence” (p. 1028). These researchers have published many studies and have consistently found that practitioners should only report the total score, which represents general ability, and not the subtests or scales that are provided. There has been only one exception: the PASS scales of the CAS.

() concluded that sufficient variance was attributed to the PASS scales on the Cognitive Assessment System, supporting their interpretation. The factorial structure of the CAS2 has also been examined. () conducted a series of analyses using the standardization sample of the CAS2. Their study included an analysis of four cognitive factors (i.e., correlated model), a general g factor (i.e., one- and second-order factor models), or a combination of the two (i.e., bi-factor models). The results revealed that the correlated PASS model accounted for the inter-subtest covariation of the PASS neurocognitive abilities better than the unitary g factor or the bifactor models. Furthermore, factorial invariance analysis provided evidence that the PASS model, as a measure of cognitive processing or intelligence, was the same between genders. The factor analytic research provides important information about the structure of intelligence tests and gives direction to practitioners about which scores to interpret, but it is equally important to examine intelligence test profiles across disabilities.

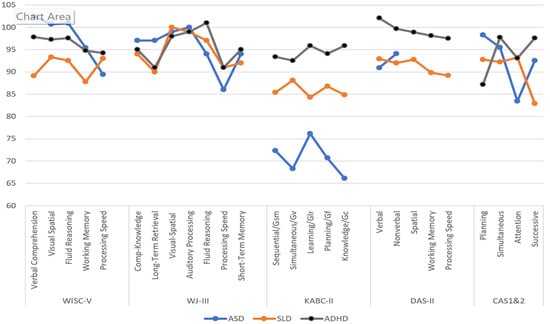

() addressed the utility of scale variability by examining profiles for individuals with ADHD, SLD, and ASD. Rather than an examination of subtest scores, they reported the scores on the scales provided in each test. They chose this approach because scales have higher reliability than subtests, and scales typically correspond to some intellectual construct identified by the authors. This level may also provide information that could be used to identify a specific pattern of strengths and weaknesses relevant to a student’s learning difficulty and may have diagnostic value. The data provided in Figure 2, largely obtained from the respective tests’ technical manuals, must be considered with recognition that the samples were not matched on demographic variables across the various studies, the accuracy of the diagnoses may not have been verified, and some of the sample sizes were small. Notwithstanding these limitations, the findings provide insights into the extent to which these tests are likely to yield profiles that could offer insight into the groups’ cognitive variability.

Figure 2.

Scale profiles on various intelligence tests for samples with ASD, SLD, and ADHD. Note: DAS-II scores for individuals with autism were only available for the Verbal and Nonverbal scales.

The profiles for students with SLD in reading decoding (dyslexia) across the WISC-V, KABC-II, and DAS-II scales show little variability (4–6 points). The WJ-III scores were all within the average range (90+), with a range of 10 points between the Visual-Spatial and Long-Term Retrieval scales. (More recent data for the WJ-IV are not provided in the test Manual.) The PASS scores also varied by 10 points, but the lowest score of 83 was on the Successive processing scale, and the other three scales were in the average range. The patterns for students with ADHD were also provided.

There was little variability in scores for the ADHD samples on the WISC-V, KABC-II, and DAS-II (3–5 points). Although the WJ-III scores varied by 10 points, all the scales’ scores were within the average range. The PASS scores varied by 11 points; the highest scores were on the Successive and Simultaneous scales (98), and the lowest score was on the Planning scale (87). The results for the CAS included the CAS2, as well as values reported by (, ) and ().

The results for students with ASD showed a small difference between the Verbal and Nonverbal scales’ scores on the DAS-II. The KABC-II scores varied by 10 points, with all scores between 66 and 76. The WISC-V and WJ-III scores varied by 13 and 14 points, respectively, but nearly all the scores were in the average to low average ranges, with Processing Speed the lowest. The PASS scales showed the most variation, from a high of 98 on Planning to a low of 83 on Attention. The examination of these profiles provides a preliminary picture of the extent to which samples with different diagnoses are associated with different intelligence test results.

() examined PASS scores from the CAS standardization sample, referred to as the general education group (N = 1692), and a collection of students identified as having a learning disability (N = 367) from research by () and (). They used a cluster analysis methodology to identify unique groups based on their PASS scores. Ten distinct groups were found for the general education sample, and 12 different groups were identified for the sample with learning disabilities, as shown in Table 3 and Table 4, respectively. The profiles that were found provide some indication of the relationship between PASS score variability and different diagnostic groups.

Table 3.

PASS profiles for the general education sample.

Table 4.

PASS profiles for the learning-disabled sample.

The 10 groups of students identified in the general education sample vary from those with consistently high PASS scores (clusters 1 and 2) to those with all low PASS scores (cluster 10). The clusters at these extremes have PASS scores that could have implications for instruction and eligibility determination. For example, cluster 1 in the general education (GE) sample would likely include students with scores high enough to qualify for a gifted education program. There is also the possibility that students within this cluster with overall high scores might also show significant variability in PASS scores that has instructional implications and may even suggest a learning disability, as shown by (). They found that 54% of their sample had a PASS score that was significantly lower than each student’s average PASS score; 8% had a PASS score that was low in relation to the student’s average and less than 90 (which suggests a disorder in a basic psychological process); and 4% had both a PASS disorder and a similarly low academic score, which could support the presence of a specific learning disability. Clusters 2–5 in the GE sample show variability of 14–21 points, whereas the smallest range was found in cluster 10. This group’s scores ranged from 79 to 81, which suggests a sample that likely includes students with intellectual disabilities.

Huang concluded that the 10 profiles in the general education sample suggest that there were groups of students with different PASS patterns reflecting different learning strengths and weaknesses, which could have implications for instruction. Similarly, the 12 profiles for the sample of students with different kinds of learning disorders support the idea of associating PASS scores with different learning disabilities. They stated that: “the presence of various patterns of PASS cognitive processes provides initial, yet promising evidence that interpretation at the composite level using the CAS is useful for the cognitive assessment approach for identifying LD in children” (p. 27).

4.3. Diagnostic Implications

An essential step in understanding whether a neurocognitive processing strength corresponds to an academic strength, and a neurocognitive processing weakness to an academic weakness, is comparing PASS and achievement test scores. Comparisons between ability (PASS neurocognitive) and achievement (reading, math, etc.) can be efficiently accomplished using the CAS2 because the PASS test items do not rely heavily on knowledge. That is, there are no vocabulary, general information, or arithmetic questions on the CAS2 (see for more discussion), which makes the analysis of the pattern of strengths and weaknesses across intelligence and achievement measures free from content overlap. It may be useful for practitioners to use the PASS scores when considering the identification of a specific learning disability, described in the IDEA as a disorder in one or more of the basic psychological processes that are associated with academic failure.

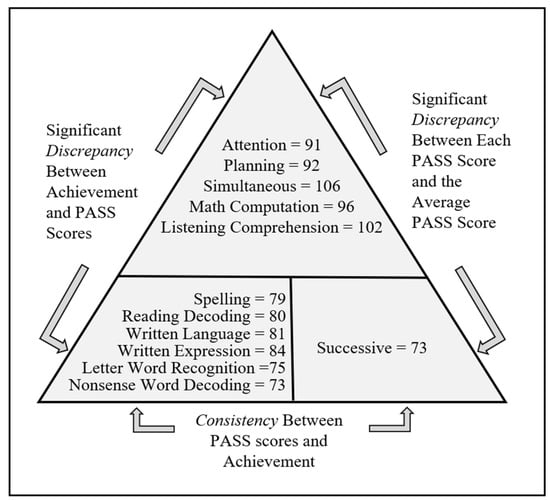

There are several methods for detecting a pattern of strengths and weaknesses (PSW) that could be used as part of the process of identifying a student with, for example, a specific learning disability (SLD). (), (), and () put forth a method for finding a combination of differences as well as similarities in scores across academic and cognitive tests to establish the presence of a disorder in one or more cognitive processes and its correspondence to deficits in academic skills. The approach used to operationalize a PSW using PASS scores from the CAS2 is called the Discrepancy Consistency Method (DCM). The method involves an examination of the variability of PASS and academic achievement test scores, which has three parts: two discrepancies and one consistency that form a pattern of strengths and weaknesses. A PASS scale discrepancy is found if there is a significant difference among the four scales relative to the child’s overall performance, with one or two PASS scores that are substantially below what would be considered typical (the normal range). A second discrepancy could be found between the PASS strengths and academic weaknesses. The consistency portion of the DCM is found when achievement scores are consistent with the low PASS scores. Such a finding suggests that a child may have a disorder in the basic psychological processes necessary for SLD identification (, ; ; ).

Figure 3 provides an illustration of the Discrepancy Consistency Method. In this example, the PASS and achievement test scores fall into three groups. First, we notice the student has strengths in Simultaneous processing with average scores in Attention and Planning. The Successive processing score of 73 is significantly lower than the average of the four PASS scores. It is important to note that the top of the triangle provides strengths in cognition and achievement, which may have relevance to intervention design. There is evidence of weaknesses in academic skills, ranging from a score of 73 on reading nonsense words to a score of 84 in written expression. These weaknesses are consistent with the Successive processing score of 73, according to the values required for significance provided by () using the PASS Score Analyzers for comparing PASS and achievement test scores ().

Figure 3.

Example of the Discrepancy Consistency Method for communicating findings across PASS and achievement test scores.
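The three parts of the Discrepancy Consistency Method (two discrepancies and one consistency) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the published procedure: the function name and the three numeric thresholds are hypothetical, and in practice the values required for significance come from the published tables and the PASS Score Analyzers rather than from fixed constants. Only the Successive score of 73 and the achievement scores of 73 and 84 are taken from the example above; the remaining PASS scores are invented to match the description of a Simultaneous strength with average Planning and Attention.

```python
from statistics import mean

# Hypothetical thresholds for illustration only; real decisions rely on
# the significance values published for the test being used.
IPSATIVE_DIFF = 10.0     # assumed gap below the student's own PASS mean
NORMATIVE_CUTOFF = 90.0  # "less than 90", the normative criterion cited in the text
CONSISTENCY_DIFF = 12.0  # assumed maximum gap for scores to count as consistent


def discrepancy_consistency(pass_scores, achievement_scores):
    """Check the two discrepancies and one consistency of a PSW pattern."""
    pass_mean = mean(pass_scores.values())

    # A PASS weakness is low relative to the student's own mean
    # and also below the normative cutoff.
    weaknesses = {
        name: score
        for name, score in pass_scores.items()
        if pass_mean - score >= IPSATIVE_DIFF and score < NORMATIVE_CUTOFF
    }
    intact = {n: s for n, s in pass_scores.items() if n not in weaknesses}
    low_achievement = {
        n: s for n, s in achievement_scores.items() if s < NORMATIVE_CUTOFF
    }

    # Discrepancy 1: variability among the four PASS scales.
    pass_discrepancy = bool(weaknesses)

    # Discrepancy 2: intact PASS scales versus low achievement scores.
    ability_achievement_discrepancy = (
        bool(low_achievement)
        and bool(intact)
        and all(
            mean(intact.values()) - s >= IPSATIVE_DIFF
            for s in low_achievement.values()
        )
    )

    # Consistency: low achievement scores sit close to the lowest PASS score.
    consistency = (
        pass_discrepancy
        and bool(low_achievement)
        and all(
            abs(s - min(weaknesses.values())) <= CONSISTENCY_DIFF
            for s in low_achievement.values()
        )
    )

    return {
        "weaknesses": weaknesses,
        "pass_discrepancy": pass_discrepancy,
        "ability_achievement_discrepancy": ability_achievement_discrepancy,
        "consistency": consistency,
        "psw_pattern": pass_discrepancy
        and ability_achievement_discrepancy
        and consistency,
    }


# Successive = 73 and the two achievement scores come from the example in
# the text; the other three PASS scores are illustrative assumptions.
student_pass = {
    "Planning": 98,
    "Attention": 100,
    "Simultaneous": 112,
    "Successive": 73,
}
student_achievement = {"Reading nonsense words": 73, "Written expression": 84}

result = discrepancy_consistency(student_pass, student_achievement)
print(result["weaknesses"], result["psw_pattern"])
```

Keeping the three checks as separate flags, rather than returning a single yes/no answer, mirrors the way the method is described: a practitioner can see which part of the pattern is present and which is not before drawing any conclusion about eligibility.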

4.4. Intervention

One of the most important tasks associated with a comprehensive assessment is explaining how a student learns best, what obstacles to learning may exist, and how this information may inform instruction. Intellectual abilities that can be easily explained to teachers, parents, and, most importantly, the students can make this task more informative. The PASS theory provides the practitioner with ways to explain how a person learns best (i.e., PASS strength), what obstacles to learning may exist (i.e., PASS weakness), and what can be done to maximize learning (). Interpretation of the PASS scales (not subtests) is based on the definitions of the constructs and the following descriptions that are easy to explain to a teacher, parent, and student:

Planning is a kind of thinking used when you think about how to do something.

Attention is used when you focus your thinking on something and resist distractions.

Simultaneous processing is used when you think about how ideas or things go together.

Successive processing is used when you think about the sequence of actions or sounds.

These PASS scores can form a profile of an individual student’s learning strengths and weaknesses that can help determine which kinds of instruction should be considered (). () provide resources for interventions that are aligned with the PASS theory, rendered in brief handouts for teachers, parents, and students. There are also other resources for applying the PASS theory to academic instruction and remediation—for example, the PASS Remedial Program (PREP; ) and Planning Facilitation ().

PREP was developed as a remedial program based on the PASS theory of cognitive functioning (). The program is designed to encourage the use of Simultaneous and Successive processes that underlie reading for students aged 7–10 years. The program avoids the direct teaching of word-reading skills such as phoneme segmentation or blending because it is based on the premise that the transfer of learning is best facilitated through inductive rather than deductive inference (). PREP is structured so that strategies used to solve nonacademic tasks are generalized to tasks that demand academic content. Students are provided the opportunity to develop strategies in their own way to use Simultaneous and Successive neurocognitive processes () within the context of reading and spelling (). Several studies have demonstrated the efficacy of PREP for the enhancement of reading and reading comprehension (; ; ; ).

Another intervention approach based on PASS is Planning Facilitation, an instructional method first studied by (), which encourages students to be strategic (use Planning) when they complete reading and math tasks. The initial concept for Planning Facilitation was inspired by the work of () and (). () found that overt verbalization improved scores on a complex task and that the intervention was particularly effective in improving scores for children low in Planning. () examined the degree to which students with poor or good Planning scores benefited differently from a verbalization intervention like the one used by (). They found that students who had low Planning scores benefited more from the verbalizations of strategies than those with high Planning. These studies suggested that an intervention that encourages verbalizations about how to complete a task, the value of noting the important parts of a problem, and increased awareness of new ways to achieve the goal was differentially effective based on a student’s Planning score.

These studies did not, however, involve academic tasks such as math or reading, a limitation addressed by (, ). () provided one-on-one sessions to students with learning disabilities using the Planning Facilitation method and math taken from the school curriculum. Students were given ten minutes to complete math worksheets, followed by five minutes of self-reflection guided by a tutor, and then ten more minutes to complete another math worksheet. The tutor gave prompts such as, “What did you notice about how you did the work?” and “What could you have done to get more correct?” The results showed that the intervention helped all the students, especially those low in Planning. The second study by () also included students with learning disabilities. The teachers facilitated group discussion in seven baseline sessions and 21 intervention sessions, during which questions were presented to help students reflect on how they completed the math worksheets. The teachers asked questions such as, “What could you have done to get more correct?” and “What will you do next time?” The intervention designed to facilitate a planful approach to math given by teachers to their classes had differential effects depending upon the PASS profile. That is, students with low Planning scores improved more than those with high Planning scores because this instruction met their need to be more strategic when completing math computation problems.

() conducted a study to determine if the Planning Facilitation method given by regular classroom teachers would have differential effects depending on the PASS profiles of the students with learning disabilities and mild mental impairments. The students completed math worksheets during baseline and intervention phases, and PASS scores were obtained using the CAS. The findings confirmed previous research. Students with a cognitive weakness in Planning improved considerably (effect size of 1.4) compared to those with an Attention weakness (effect size of 0.3), Simultaneous weakness (effect size of −0.2), or Successive processing weakness (effect size of 0.4) and those without a weakness (effect size of 0.2). The authors concluded that the Planning Facilitation method, “which does not use teacher scripts or rigidly formatted procedures, can be replicated” (p. 595) and that the cognitive strategy instruction is especially helpful for the students who need it the most—those with low Planning scores. The next study on this method involved reading comprehension.

The purpose of a study by () was to determine if the Planning Facilitation method would have a different impact on reading comprehension for students with different PASS profiles from the CAS. The students’ pre- and post-reading comprehension scores were compared for those with a PASS weakness. The results showed that students with a weakness in Planning benefited from the Planning Facilitation method (effect size = 0.52). Students with no weakness and those with a Successive processing weakness (effect size = 0.06) did not benefit from the intervention. This study showed that helping students utilize Planning while completing a reading comprehension task had beneficial results, similar to the findings for math and nonacademic tasks.

() examined the effectiveness of the Planning Facilitation method for students with learning disabilities and ADHD randomly assigned to a control or experimental group. The students in the experimental group were given the Planning Facilitation method, and the control group received additional math instruction from the regular teacher. The results showed that students in the experimental group benefited (effect size = 0.85) from this instructional method, which encourages students to reflect on how they complete the work (i.e., use executive function). The comparison group who received math instruction from the regular teacher did not do as well (effect size = 0.26). The intervention helped students in the experimental group develop and use more effective planning strategies when completing the math worksheets. In addition, students in the experimental group showed significantly greater improvement on the Math Fluency subtest of the Woodcock–Johnson Achievement test and the WIAT-II Numerical Operations subtest. The authors concluded, “These results indicate not only did those students with ADHD benefit from planning strategy instruction in classroom math, as shown by their improvement on the worksheets, but also that they were able to transfer learned strategies to other measures of mathematics, suggesting far transfer of skills” (p. 191). In addition, the experimental group’s math scores were significantly greater than the control group’s one year later.

The results from this study support the previous studies on the Planning Facilitation instructional method. The method was designed to avoid the direct teaching of strategies because transfer of learning is best achieved through inductive rather than deductive inference, as described in the section above about PREP (). The study by () is especially important because it used a randomized design and showed transfer from classroom math to norm-referenced tests of math achievement. In addition, the improvement found for students with ADHD is particularly important because researchers have found only small effect-size improvements in academic skills for students with ADHD (; ). Collectively, these intervention studies illustrate a relationship between PASS test scores and classroom instruction and suggest a connection between intervention effectiveness and PASS profiles.

5. Conclusions

We have provided a short historical perspective on the state of intelligence testing in the 2020s and emphasized that the tests most widely used since the early 1900s have two critical limitations. Traditional intelligence tests were not built on a theory of intelligence, and they include content that is indistinguishable from questions on achievement tests (), which distorts the test scores for those with limited opportunity to learn. This appears to be a factor in the differences observed across race and ethnicity. The possible consequences of these limitations were anticipated by () when they wrote: “inaccuracy of psychological diagnosis [may result] in positive harm to the individual and hinders the development of scientific psychology” (p. v). This caution foretold the American Psychological Association’s Apology to People of Color for APA’s Role in Promoting, Perpetuating, and Failing to Challenge Racism, Racial Discrimination, and Human Hierarchy in the U.S. (). We have presented summaries of research that suggest that a theory of intelligence that focuses on basic psychological processes defined by brain function and explicitly developed to minimize formal knowledge may offer the potential for greater validity and equity and thereby provide a possible remedy to address APA’s Apology.

Change in any field is not always easy. We hope that the information summarized here provides some evidence to support a consideration of a significant change. It is also important to recognize that Standards for the practice of psychology inform us of our professional obligations, which, according to the American Psychological Association, “are intended to facilitate the continued systematic development of the profession and to help facilitate a high level of practice by psychologists.” (https://www.apa.org/practice/guidelines/child-protection, accessed on 3 July 2024). () described the National Association of School Psychologists (NASP) Ethical Standards related to the practice of intellectual assessment, especially as it relates to equitable assessment. He noted that the NASP standards state that school psychologists should promote fairness and social justice (Guiding Principle 1.3), that they work as change agents to correct school practices that are unjustly discriminatory, and they do not engage in or condone actions or policies that discriminate (Standard 3.2). It is, therefore, important for all professionals who use cognitive measures to carefully examine all aspects of the validity of intelligence tests, especially as it relates to fairness, when making test selection decisions.

It is easy to rely on tests that are popular and already familiar to us. However, as we have shown, after a century of use, intelligence tests built without a firm basis in a theory of intelligence to guide test content have limitations. We suggest that researchers and practitioners recognize that an evolutionary step in the field of intelligence testing is needed, considering all we have learned in the past 100 years. The research presented here suggests that the PASS theory may provide a viable alternative to traditional intelligence tests. “To change our legacy [especially] with regard to systematic racism, we need to further heed the call and strongly pursue with the utmost urgency [new] streams of research and quickly leverage the findings to put into practice the mechanisms needed to drive real change” ().

Author Contributions

Conceptualization, original draft preparation, writing, and review and editing were shared between the two authors. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

The authors extend thanks to the reviewers for their suggestions regarding text content and presentation.

Conflicts of Interest

The authors recognize that they are royalty-accruing authors of some of the published tests discussed in this work.

References

- Ackerman, Phillip L. 2022. Intelligence Process vs. Content and Academic Performance: A Trip through a House of Mirrors. Journal of Intelligence 10: 128.

- American Educational Research Association, American Psychological Association, and National Council on Measurement in Education. 2014. Standards for Educational and Psychological Testing. Washington, DC: American Educational Research Association.

- American Psychological Association. 2021. Apology to People of Color for APA’s Role in Promoting, Perpetuating, and Failing to Challenge Racism, Racial Discrimination, and Human Hierarchy in U.S. Washington, DC: American Psychological Association. Available online: https://www.apa.org/about/policy/racism-apology (accessed on 17 March 2023).

- Avant, Anna, and Marcia O’Neal. 1986. Investigation of the Otis-Lennon School Ability Test to Predict WISC-R Full Scale IQ for Referred Children. Paper presented at the 15th Annual Meeting of the Mid-South Educational Research Association, Memphis, TN, USA, November 19–21; Available online: https://files.eric.ed.gov/fulltext/ED286883.pdf (accessed on 14 March 2023).

- Avram, Mihai, Evgeny Gutyrchik, Yan Bao, Ernst Pöppel, Maximilian Reiser, and Janusch Blautzik. 2013. Neurofunctional correlates of esthetic and moral judgments. Neuroscience Letters 534: 128–32.

- Benson, Nicholas F., A. Alexander Beaujean, Ryan J. McGill, and Stefan C. Dombrowski. 2018. Revisiting Carroll’s survey of factor-analytic studies: Implications for the clinical assessment of intelligence. Psychological Assessment 30: 1028–38.

- Benson, Nicholas F., Randy G. Floyd, John H. Kranzler, Tanya L. Eckert, Sarah A. Fefer, and Grant B. Morgan. 2019. Test use and assessment practices of school psychologists in the United States: Findings from the 2017 National Survey. Journal of School Psychology 72: 29–48.

- Binet, Alfred, and Theodore Simon. 1916. The Development of Intelligence in Children (The Binet-Simon Scale). Translated by Elizabeth S. Kite. Baltimore: Williams & Wilkins Co.

- Boden, Carrie, and John R. Kirby. 1995. Successive processing, phonological coding, and the remediation of reading. Journal of Cognitive Education 4: 19–32.

- Brams, Andrew G. 1999. Utility of Das-Naglieri: Cognitive Assessment System in the discrimination of elementary school children with learning disabilities and speech impairments. (Doctoral dissertation, University of Northern Colorado, 1999). Dissertation Abstracts International 60: 1878.

- Bronner, Augusta F., William Healy, Gladys M. Lowe, and Myra E. Shimberg. 1927. A Manual of Individual Mental Tests and Testing. Boston: Little Brown and Company.

- Brulles, Dina, Kimberly Lansdowne, and Jack A. Naglieri. 2022. Understanding and Using the Naglieri General Ability Tests: A Call for Equity in Gifted Education. Minneapolis: Free Spirit Publishing.

- Canivez, Gary L. 2008. Orthogonal higher order factor structure of the Stanford-Binet Intelligence Scales–Fifth Edition for children and adolescents. School Psychology Quarterly 23: 533–41.

- Canivez, Gary L. 2011. Hierarchical factor structure of the Cognitive Assessment System: Variance partitions from the Schmid-Leiman 1957 procedure. School Psychology Quarterly 26: 305–17.

- Canivez, Gary L., and Ryan J. McGill. 2016. Factor structure of the Differential Ability Scales–Second Edition: Exploratory and hierarchical factor analyses with the core subtests. Psychological Assessment 28: 1475–88.

- Canivez, Gary L., Marley W. Watkins, and Stefan C. Dombrowski. 2017. Structural Validity of the Wechsler Intelligence Scale for Children-Fifth Edition: Confirmatory Factor Analyses with the 16 Primary and Secondary Subtests. Psychological Assessment 29: 458–72.

- Carlson, Jerry S., and Jagannath P. Das. 1997. A process approach to remediating word decoding deficiencies in Chapter 1 Children. Learning Disability Quarterly 20: 93–102.

- Carman, Carol A., Christine A. Walther, and Robert A. Bartsch. 2018. Using the Cognitive Abilities Test (CogAT) 7 Nonverbal Battery to Identify the Gifted/Talented: An Investigation of Demographic Effects and Norming Plans. The Gifted Child Quarterly 62: 193–209.

- Carroll, John B. 1993. Human Cognitive Abilities: A Survey of Factor-Analytic Studies. Cambridge: Cambridge University Press.

- Cormier, Pierre, Jerry S. Carlson, and Jagannath P. Das. 1990. Planning ability and cognitive performance: The compensatory effects of a dynamic assessment approach. Learning and Individual Differences 2: 437–49.

- D’Amico, Antonella, Maurizio Cardaci, Santo Di Nuovo, and Jack A. Naglieri. 2012. Differences in achievement not in intelligence in the north and south of Italy: Comments on Lynn (2010a, 2010b). Learning and Individual Differences 22: 128–32.

- Das, Jagannath P. 2000. PREP: A cognitive remediation program in theory and practice. Developmental Disabilities Bulletin 28: 83–96.

- Das, Jagannath P. 2009. Reading Difficulties and Dyslexia, Rev. ed. New Delhi: Sage.

- Das, Jagannath P., Jack A. Naglieri, and John R. Kirby. 1994. Assessment of Cognitive Processes: The PASS Theory of Intelligence. Boston: Allyn & Bacon.

- Das, Jagannath P., John Cummins, John R. Kirby, and Ronald F. Jarman. 1979a. Simultaneous and successive processes, language and mental abilities. Canadian Psychological Review/Psychologie Canadienne 20: 1–11.

- Das, Jagannath P., John R. Kirby, and Ronald F. Jarman. 1975. Simultaneous and successive syntheses: An alternative model for cognitive abilities. Psychological Bulletin 82: 87–103.

- Das, Jagannath P., John R. Kirby, and Ronald F. Jarman. 1979b. Simultaneous and Successive Cognitive Processes. New York: Academic Press.

- Das, Jagannath P., Rama K. Mishra, and Judith E. Pool. 1995. An Experiment on Cognitive Remediation of Word-Reading Difficulty. Journal of Learning Disabilities 28: 66–79.

- Dombrowski, Stefan C., Ryan J. McGill, and Gary L. Canivez. 2017. Exploratory and hierarchical factor analysis of the WJ-IV Cognitive at school age. Psychological Assessment 29: 394–407.

- DuPaul, George J., Tanya L. Eckert, and Brigid Vilardo. 2012. The Effects of School-Based Interventions for Attention Deficit Hyperactivity Disorder: A Meta-Analysis 1996–2010. School Psychology Review 41: 387–412.

- Edwards, Oliver, and Tom Oakland. 2006. Factorial Invariance of Woodcock-Johnson III Scores for African Americans and Caucasian Americans. Journal of Psychoeducational Assessment 24: 358–66.

- Fagan, Joseph F., and Cynthia R. Holland. 2006. Racial equality in intelligence: Predictions from a theory of intelligence as processing. Intelligence 35: 361–387.

- Flanagan, Dawn P., Vincent C. Alfonso, and Sam O. Ortiz. 2007. Essentials of Cross-Battery Assessment, 2nd ed. Hoboken: John Wiley & Sons.

- Freeman, Frank S. 1955. Theory and Practice of Psychological Testing. New York: Henry Holt and Company.

- Friston, Karl. 2002. Beyond phrenology: What can neuroimaging tell us about distributed circuitry? Annual Review of Neuroscience 25: 221–50.

- Georgiou, George K., Kan Guo, Nithya Naveenkumar, Ana Paula Alves Vieira, and Jagannath P. Das. 2020. PASS theory of intelligence and academic achievement: A meta-analytic review. Intelligence 79: 101431.

- Goldberg, Elkhonon. 2009. The New Executive Brain: Frontal Lobes in a Complex World. New York: Oxford University Press.

- Goldstein, Harold W., Kenneth P. Yusko, Charles A. Scherbaum, and Elliott C. Larson. 2023. Reducing black-white racial differences on intelligence tests used in hiring for public safety jobs. Journal of Intelligence 11: 62.

- Haddad, Fred A., Yolanda E. Garcia, Jack A. Naglieri, Mark Grimditch, Andy McAndrews, and Julie Eubanks. 2003. Planning Facilitation and Reading Comprehension: Instructional Relevance of the PASS Theory. Journal of Psychoeducational Assessment 21: 282–89.

- Hale, James B., and Catherine A. Fiorello. 2004. School Neuropsychology: A Practitioner’s Handbook. New York: Guilford Press.

- Huang, Leesa V., Achilles N. Bardos, and Rick C. D’Amato. 2010. Identifying students with learning disabilities: Composite profile analysis using the Cognitive Assessment System. Journal of Psychoeducational Assessment 28: 19–30.

- Iseman, Jacqueline, and Jack A. Naglieri. 2011. A cognitive strategy instruction to improve math calculation for children with ADHD: A randomized controlled study. Journal of Learning Disabilities 44: 184–95.

- Jensen, Arthur R. 1998. The G Factor: The Science of Mental Ability. Santa Barbara: Greenwood.

- Johnson, Jack A. 2001. The Planning-Attention-Simultaneous-Successive model of cognitive processing in youth with and without written expression disabilities. Dissertation Abstracts International 62: 5988.

- Johnson, Mark H. 2005. Subcortical face processing. Nature Reviews Neuroscience 6: 766–74.

- Kar, Bimal C., Upendra N. Dash, Jagannath P. Das, and Jerry S. Carlson. 1992. Two experiments on the dynamic assessment of planning. Learning and Individual Differences 5: 13–29.

- Kaufman, Alan S., and Nadine L. Kaufman. 2004. Kaufman Assessment Battery for Children, 2nd ed. Circle Pines: American Guidance Service.

- Kaufman, Alan S., Susie Raiford, and Diane Coalson. 2016. Intelligent Testing with the WISC-V. Hoboken: John Wiley.

- Kelly, John. 2023. Historically Discriminatory Assessment Practices and Changes We Need to Make. Paper presented at the National Association of School Psychologists (NASP) Annual Convention, Denver, CO, USA, February 9.

- Kirby, John R. 1984. Cognitive Strategies and Educational Performance. New York: Academic Press.

- Kirby, John R., and Noel H. Williams. 1991. Learning Problems: A Cognitive Approach. Toronto: Kagan & Woo.

- Koziol, Leonard F., and Michael C. Stevens. 2012. Neuropsychological assessment and the paradox of ADHD. Applied Neuropsychology: Child 12: 79–89.

- Koziol, Leonard F., Lauren A. Barker, Arthur W. Joyce, and Skip Hrin. 2014. The small-world organization of large-scale brain systems and relationships with subcortical structures. Applied Neuropsychology: Child 3: 245–52.

- Koziol, Leonard F., Paul Beljan, Kate Bree, John Mather, and Lauren Barker. 2016. Large-Scale Brain Systems and Neuropsychological Testing: An Effort to Move Forward. Cham: Springer.

- Lezak, Muriel D. 1995. Neuropsychological Assessment, 3rd ed. New York: Oxford University Press.

- Lichtenberger, Elizabeth O., Mary A. Volker, Alan S. Kaufman, and Nadine L. Kaufman. 2006. Assessing Gifted Children with the Kaufman Assessment Battery for Children-Second Edition (KABC-II). Gifted Education International 21: 99–126.

- Lohman, David F. 2012. Cognitive Abilities Tests, form 7: Research and Development Guide. Rolling Meadows: Riverside Publishing.

- Lohman, David F., and Elizabeth P. Hagen. 2001. Cognitive Abilities Test. Itasca: Riverside.

- Lohman, David F., Katrina A. Korb, and Joni M. Lakin. 2008. Identifying Academically Gifted English-Language Learners Using Nonverbal Tests. Gifted Child Quarterly 52: 275–96.

- Lubinski, David, and Camilla P. Benbow. 2021. Intellectual Precocity: What Have We Learned Since Terman? Gifted Child Quarterly 65: 3–28.

- Luria, Alexander R. 1966. Human Brain and Psychological Processes. New York: Harper & Row.

- Luria, Alexander R. 1973a. The origin and cerebral organization of man’s conscious action. In Children with Learning Problems: Readings in a Developmental-Interaction Approach. Edited by Selma G. Sapir and Ann C. Nitzburg. London: Brunner/Mazel.

- Luria, Alexander R. 1973b. The Working Brain: An Introduction to Neuropsychology. New York: Basic Books.

- Luria, Alexander R. 1980. Higher Cortical Functions in Man, 2nd ed. New York: Basic Books.

- Luria, Alexander R. 1982. Language and Cognition. New York: Wiley.