Abstract

Like receptive emotional abilities, productive emotional abilities are essential for social communication. Although individual differences in receptive emotional abilities, such as perceiving and recognizing emotions, are well-investigated, individual differences in productive emotional abilities, such as the ability to express emotions in the face, are largely neglected. Consequently, little is known about how emotion expression abilities fit in a nomological network of related abilities and typical behavior. We developed a multitask battery for measuring the ability to pose emotional expressions scored with facial expression recognition software. With three multivariate studies (n1 = 237; n2 = 141; n3 = 123), we test competing measurement models of emotion posing and relate this construct to other socio-emotional traits and cognitive abilities. We replicate a measurement model that includes a general factor of emotion posing, a nested task-specific factor, and emotion-specific factors. The emotion-posing ability factor is moderately to strongly related to receptive socio-emotional abilities, weakly related to general cognitive abilities, and weakly related to extraversion. This is strong evidence that emotion posing is a cognitive interpersonal ability. This new understanding of abilities in emotion communication opens a gateway for studying individual differences in social interaction.

1. Introduction

If, in fact, homo est animal rationale sociale1 (“humans are rational and social animals”), then it is no surprise that emotion is among the most researched topics in psychology. Emotions are expressed in humans and other animals (Darwin 1872; De Waal 2019). They interfere with rational choices but facilitate decision making when resources are short (Kahneman 2003), and they have a communicative function crucial for any social interaction (Scherer 2005). In this paper, we investigate individual differences in facial emotion expression ability, a crucial aspect in socio-emotional communication, and embed this ability in a nomological network of personality, intelligence, and socio-emotional abilities.

1.1. Individual Differences in Emotion Communication

The component process definition of emotion (Scherer 2005) defines five components of emotions (appraisal, bodily symptoms, motivation, motor expression, and feeling) and links them with specific functions. The motor expression component is crucial for human communication. It triggers and drives the somatic nervous system to exhibit automatic communication of an emotional state (Scherer 2005); for example, a person in fear might automatically express a fearful facial expression to warn others or to trigger them to help. The idea of emotions serving a communicative function is proposed by most theories of emotion. Throughout this paper, we will focus on the facial domain of emotion communication, because this is the only expressive domain in which objective systems to evaluate facial expressions currently exist (Geiger and Wilhelm 2019).

Emotions as a fundamental form of communication are far from flawless. For example, in movies, we see actors expressing emotions at their best, and only because of the context do we know that the expressed emotion was just acted. We typically have a concept of good and bad emotion posing and will judge an actor’s performance accordingly. In other words, we assume individual differences in the ability to express emotions. In emotion perception and recognition research, the receiving end of this communicative path (Scherer 2013), individual differences are well-documented (Hildebrandt et al. 2015; Schlegel et al. 2012, 2017; Wilhelm et al. 2014a). Hence, the idea of individual differences in the ability to express emotions is plausible and has been proposed earlier (e.g., Snyder 1979). Given that we expect individual differences in the ability to express emotions, it is a promising ability to examine when striving to extend models of socio-emotional abilities. Furthermore, given that perceptive socio-emotional abilities have been embedded in models of intelligence, too (Hildebrandt et al. 2011, 2015; MacCann et al. 2014; Olderbak et al. 2019a; Schlegel et al. 2020), emotion expression abilities might also be a promising addition to models of intelligence.

1.2. Earlier Approaches to Emotion Expression Ability

The idea to study individual differences in emotion expression ability is not new (Côté et al. 2010; Gross and John 1998; Larrance and Zuckerman 1981; Riggio and Friedman 1986). Yet, existing measures of emotion expression ability do not adhere to standards of aptitude testing (Elfenbein and MacCann 2017). Consequently, our understanding of this construct is insufficient. Criteria for maximal effort tests include maximal performance instructions, an understanding or reasonable assumption of test-takers’ emotional states, multiple independent items/multiple tests that allow for latent factor models, a standardized assessment of emotion expression behavior, an evaluation of behavior with regard to veridicality, and scores that allow one to capture individual differences, i.e., continuous scores (Cronbach 1949).

Most emotion expression research uses self-report questionnaires in which participants respond to items such as “I think of myself as emotionally expressive” (Kring et al. 1994) or “I’m usually able to express my emotions when I want to” (Petrides 2017). Self-report questionnaires mostly assess typical behavior rather than maximal performance, and the few self-report measures intended to capture an ability hardly correlate with maximal performance measures of the same ability (Jacobs and Roodenburg 2014; Olderbak and Wilhelm 2020; Paulhus et al. 1998). Thus, we do not consider these approaches viable for measuring emotion expression ability.

Another established approach in emotion expression research is the expressive accuracy approach (e.g., Riggio et al. 1987; Riggio and Friedman 1986), in which participants are asked to express certain emotions that are then evaluated by a group of raters. Similarly, in the slide-viewing technique, individuals are instructed to express emotions based on colored slides and then their emotional expressions are rated by another group of judges (Buck 2005). If these groups of raters were sufficiently large and a representative sample of the general population, they might qualify as a viable option for measuring emotion expression abilities. However, usually, rater samples are small and not representative, thus largely diminishing the objectivity of this approach.

Finally, there are approaches in which participants experience a form of emotion induction and their expression behavior is judged by more objective means such as the Facial Action Coding System (Ekman and Friesen 1978). For example, researchers startle participants or emotionally trigger them with videos while instructing them to regulate their facial expressions. Then, participants’ facial responses are evaluated via objective facial action coding (Côté et al. 2010). Although these approaches fulfill most criteria of aptitude testing, they do not fulfill all. Even the established methods of emotion induction used in studies with this approach, such as pictures (e.g., Lang et al. 1997) or videos (e.g., Rottenberg et al. 2007), do not permit individual standardization of the intensity of the induced emotion. Thus, the test taker’s real emotional state is unknown. Furthermore, prior work with this approach was not multivariate enough to allow for estimating latent factors. And, lastly, human raters, even Facial Action Coding-trained ones, are not as objective in their rating as computer software would be (Geiger and Wilhelm 2019).

We conclude that none of the prior approaches to assess emotion expression ability fulfills all criteria of aptitude testing. Consequently, any understanding of the constructs, such as how they relate to other socio-emotional abilities, how they can help to enhance models of intelligence (Elfenbein and Eisenkraft 2010; Elfenbein and MacCann 2017; MacCann et al. 2014), or how emotion expression ability among other socio-emotional abilities relates to personality, is still limited.

In this paper, we strive to fill this gap. We follow three steps. First, we develop multiple emotion expression ability tests that adhere to all criteria of aptitude testing. Second, we define competing measurement models based on attributes of the constructs reflected in the items of our tests and test them in empirical studies. Third, we use these tests and embed them in a nomological network to learn more about overarching models of socio-emotional abilities and traits and about models of intelligence.

1.3. Step I: Objective Emotion Expression Measurement

To develop new maximal effort emotion expression tasks, we focused on the two criteria that not even the most advanced approaches to measuring emotion expression match: (1) we established an understanding of the emotional state of the test taker and (2) we developed a standardized system involving computer software to record and objectively evaluate facial expressions with regard to veridicality (see “Scoring Facial Expressions” below) that is time-efficient and allows for more items and tests. We address the evaluation of expressions by extending prior research (Olderbak et al. 2014), which we describe below.

To answer the first criterion, we first considered which types of emotion expression behavior exist and which qualify for tests of emotion expression ability. Emotion expression behavior can be categorized into three sets based on the situation from which they arise: (1) an emotion is felt, and a congruent emotion is expressed; (2) an emotion is felt, and an incongruent expression is produced; or (3) no emotion is felt, but emotion is expressed. All expression behaviors in these categories are driven by push factors (automatic behavior), by pull factors (controlled behavior), or by an interaction of both (cf. Scherer 2013).

In the first category, there are two types of expression: (1a) We show a genuine facial expression when we lack (cognitive) resources or reasons to regulate automatically triggered facial expression and simply let automatic emotion expression emerge and fade according to the underlying emotional state—behavior solely driven by push factors. (1b) We enhance an emotion expression to increase the intensity of our communicative signals, for example, to increase the probability of being correctly understood. When enhancing, the push factors of emotion expression are supported by pull factors.

Within the second category, there are also two types of expression: (2a) We suppress our emotional expression and instead present a “poker face” (i.e., a neutral facial expression) or reduced emotional expression (toward neutral) despite our emotional state triggering an emotional expression. When neutralizing, the push factor of emotion expression is inhibited. (2b) We mask how we feel by expressing another emotion. For example, we might be disgusted by a certain dish but in order to not insult the cook, we keep smiling. Thus, a pull factor overrides the push factor of emotion expression.

Finally, the third category contains two types of expression: (3a) Facial muscles are activated just by seeing a corresponding facial expression of another person; however, no emotion is felt (Dimberg 1982; Künecke et al. 2014), which is also called mimicry—a pure push-factor driven behavior. (3b) We pose an emotion, while initially feeling no emotion, to help communication (e.g., signaling empathy)—a pure pull-factor driven behavior. For example, someone frowns and clenches teeth in a painful expression to signal empathy toward a person who is actually in pain, without personally feeling pain.

Genuine expression and mimicry are automatic behaviors and can be classified as typical behavior constructs, defined as behaviors individuals are likely to display in everyday life and on a regular basis (cf. Cronbach 1949). Enhancing, neutralizing, masking, and posing can be described as abilities, or maximal effort constructs, defined as behaviors exhibited when one is motivated to try to achieve their best in a given task (Cronbach 1949) and, thus, define emotion expression abilities.
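The six expression behaviors and their classification can be summarized as a small lookup structure. The following Python sketch is illustrative only: the class and field names are hypothetical, and coding neutralizing as involving a pull factor is an assumption made here (the text only states that the push factor is inhibited).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExpressionBehavior:
    label: str
    category: int  # 1: congruent, 2: incongruent, 3: no emotion felt
    push: bool     # automatic (push) factors involved
    pull: bool     # controlled (pull) factors involved

    @property
    def construct(self) -> str:
        # Behaviors without any controlled component are treated as typical
        # behavior; all others as maximal effort constructs (abilities).
        return "maximal effort" if self.pull else "typical behavior"


BEHAVIORS = [
    ExpressionBehavior("genuine expression", 1, push=True, pull=False),
    ExpressionBehavior("enhancing", 1, push=True, pull=True),
    # Assumption: inhibiting the push factor is itself a controlled process.
    ExpressionBehavior("neutralizing", 2, push=True, pull=True),
    ExpressionBehavior("masking", 2, push=True, pull=True),
    ExpressionBehavior("mimicry", 3, push=True, pull=False),
    ExpressionBehavior("posing", 3, push=False, pull=True),
]

abilities = [b.label for b in BEHAVIORS if b.construct == "maximal effort"]
print(abilities)
```

Under this coding, posing is the only ability that involves no push factor, which matches the argument that it is the only one that can start from a non-emotional state.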

Among these four, only posing requires a non-emotional state to begin with, which can be assumed to be the typical state of participants in laboratory studies (e.g., see the neutral group in Polivy 1981 or reactions to neutral films in the laboratory in Philippot 1993). Obviously, without objective measures of emotional states, we cannot know the emotional state of participants in a laboratory study and, thus, the other three expression behaviors are not a viable option, either. Although it is also unclear whether all participants are equally unemotional in a lab setting—the requirement for posing—assuming a neutral state in lab studies presumably introduces less error than assuming emotional states after unstandardized emotion induction methods. Furthermore, although the process of posing itself might induce emotions, we assume these effects cancel each other out by asking participants to express sequences of different emotions with only short inter-item intervals. Consequently, we chose to assess individual differences in the ability to pose facial expressions of emotion as a first step toward measuring individual differences in emotion expression ability. For the sake of brevity, throughout this manuscript, the term expression ability will always refer to the ability to pose facial expressions.

Posing facial expression items can differ by the target emotion to be posed (e.g., disgust) and the stimulus type instructing target emotions (e.g., emotion words or emotional faces). Emotions to be posed should be aligned with expression scoring systems. So, if a scoring system is based on the six basic emotions (Ekman 1992), tests should contain items for all six basic emotions. The standard way to instruct which emotion is to be posed is to use emotion words, such as “happy” or “surprised”, and to ask participants to produce expressions based on their individual understanding of the expression. However, in everyday life, we also imitate others’ expressions. For a posing facial emotion expression test, this means a test in which participants see facial emotion expressions and imitate them is also a viable option.

To answer the second criterion required for improved emotion expression ability tests, the standardized recording and time-efficient objective evaluation of facial expressions, it is vital to rely on recent technological advances. Although well-trained human raters (e.g., FACS [Facial Action Coding System; Ekman and Friesen 1978]-trained) can achieve high interrater reliabilities (e.g., Zhang et al. 2014), their ratings are less objective than perfectly replicable ratings from a machine, and machines achieve equal or higher accuracy scores compared with human raters (Krumhuber et al. 2019, 2021). Additionally, machine ratings are cheaper and quicker than human raters, allowing for analyses in higher time resolution and for more recordings in large samples (Geiger and Wilhelm 2019). Therefore, we use state-of-the-art emotion recognition software to score the posed facial expressions of test takers.

1.4. Step II: Measurement Model of Emotion Expression Ability

To better understand emotion expression ability, we must demonstrate the construct’s validity by establishing a measurement model of emotion expression ability. Measurement models and respective confirmatory factor models in prior work exist for some self-report questionnaires, but they cannot be extended to emotion expression abilities for the reasons discussed earlier.

As introduced in Step I, emotion expression ability tests can vary by the emotion to be displayed (six basic emotions) and the task type (word vs. image stimuli, i.e., production tests vs. imitation tests) used to instruct the target expression. Whether these distinctions result in specific factors or whether individual differences in emotion expression ability tests are best explained by a general factor is an empirical question that can be solved by comparing competing measurement models. That is, measurement models may include a general factor of emotion expression or correlated task or emotion factors. Models can also vary by having no specific factors or specific emotion and task factors.

A general factor would represent the broad ability to express emotions. Task-specific factors and emotion-specific factors would represent either method variation or variation due to specific abilities. For example, the computer software and scoring algorithm may systematically contribute to stable individual differences in performance (e.g., software-derived emotion scores differ in range; see Calvo et al. 2016, 2018) or, because posing different emotions to a camera might be perceived as differently unusual, common variation may arise. By comparing models with regard to fit and parsimony, we can choose the best model to continue with research questions about the factor(s) of emotion expression ability.
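As a sketch in notation introduced here for illustration (the symbols are not taken from the source), the most general of these competing models can be written for person p and indicator j, where t(j) and e(j) denote the task and the target emotion of indicator j:

```latex
y_{pj} = \nu_{j}
       + \lambda^{G}_{j}\, G_{p}
       + \lambda^{T}_{j}\, T_{p,\,t(j)}
       + \lambda^{E}_{j}\, E_{p,\,e(j)}
       + \varepsilon_{pj}
```

Here G is the general factor of emotion expression, T and E are task-specific and emotion-specific factors, the lambdas are the corresponding loadings, nu is an intercept, and epsilon is a residual. Fixing all task and emotion loadings to zero yields a model with a general factor only, whereas dropping G and letting the task factors (or the emotion factors) correlate yields a correlated-factor model. Whether the specific factors are kept orthogonal to G is a modelling decision that this sketch leaves open.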

1.5. Step III: Nomological Network of Emotion Expression Ability

After settling on a measurement model of emotion expression ability, we can test how this ability relates to other socio-emotional abilities, intelligence, and extraversion. We can embed emotion expression ability in a nomological network of convergent and divergent constructs (Cronbach and Meehl 1955) to test whether this construct is a viable addition to models of socio-emotional traits and intelligence. Below, we present a hypothesized nomological network of why and how emotion expression ability should relate to established constructs. The constructs are sorted by nomological proximity to emotion expression ability.

1.5.1. Non-Emotional Expression

All forms of facial expression, emotional or not, serve a communicative purpose. Similarly, all facial expressions are controlled via the volitional circuit of the n. facialis (for a comparison of volitional and emotional circuits, see Hopf et al. 1992; Töpper et al. 1995). Thus, the ability to express non-emotional expressions (e.g., lowering mouth corners; for details, see below) should be strongly related to the ability to express emotions. From a psychometric perspective, both emotional and non-emotional expression tasks can share the same type of productive responding, the same scoring approach, and consequently, any possible artifacts due to the specificities of expression coding methodology. Nevertheless, they differ by specific (emotional vs. non-emotional) context, so their relation can be expected to be strong but below unity.

The overlap of emotional and non-emotional expression abilities is similar to the overlap of facial identity and emotion perception and recognition. Facial identity perception and recognition refers to the common variation in individual differences in perceiving, learning, and recognizing information about facial identity. This construct was demonstrated to be a specific ability distinct from general cognitive ability (e.g., Hildebrandt et al. 2011). Similarly, facial emotion perception and recognition refers to the individual difference ability to perceive, learn, and recognize emotion in unfamiliar faces. Both facial identity and emotion perception and recognition correlate strongly (Hildebrandt et al. 2015). This is no surprise given that both abilities rely on the same neural circuits of facial information processing (Haxby et al. 2000; Haxby and Gobbini 2011), require the processing of the structural code of facial information (Bruce and Young 1986), and are receptive, basic socio-emotional abilities (Hildebrandt et al. 2015). Furthermore, the tasks for both constructs share the same basic methodology (i.e., nature of stimuli and their presentation, type of response behavior, and performance appraisal). Consequently, we expect a similarly high correlation between non-emotional and emotional expression abilities as reported for facial identity and emotion perception and recognition.

1.5.2. Receptive Socio-Emotional Abilities

Theories of language acquisition (e.g., Long 1996) propose that productive and receptive abilities develop together over the course of the lifespan. This is partially supported for socio-emotional abilities, where researchers found a small correlation (r = .19) between tests of expressing and perceiving non-verbal information in studies with communicative intent (Elfenbein and Eisenkraft 2010). This positive correlation is also no surprise given that producing and perceiving emotion expressions are fundamental in communication. As abilities representing receptive socio-emotional abilities, we selected prominently studied socio-emotional constructs including facial identity and emotion perception and recognition, recognition of emotional postures, emotion management, emotion understanding, and faking ability.

We chose facial emotion and identity perception and recognition because they focus on the facial domain and thus share the same expressive channel with our emotion expression assessment. Correlations between emotion perception and emotion expression were stronger between tasks from facial or body channels compared with the vocal channel (Elfenbein and Eisenkraft 2010). Similarly, tests of emotion recognition with different channels correlated more weakly (Schlegel et al. 2012) than tests with the same channel (Hildebrandt et al. 2015). Thus, we expect medium to strong correlations between tests of facial emotion expression and facial emotion perception and recognition, and slightly lower correlations with facial identity perception and recognition because the latter do not share the emotional component. Similarly, we expect lower correlations, i.e., of medium effect size only, with emotional posture recognition because the latter does not share the same expressive channel.

Following models of emotional intelligence, emotion understanding and management should be positively related to emotion expression abilities (e.g., Elfenbein and MacCann 2017). Both abilities involve handling emotional expressions (by understanding or managing them) but they are also heavily situationally dependent (Mayer et al. 2016), whereas emotion expression abilities can be assessed as situationally independent. Emotion understanding and management would best be assessed with behavioral observation in different emotional situations. However, because this is hardly achievable while still adhering to standards of psychometric testing, in research, emotion understanding and management are typically assessed with situational judgment tests (SJTs). SJTs of emotion understanding and management resemble receptive ability tests, but oftentimes their veridicality is questionable, thus not fully qualifying as ability tests (Wilhelm 2005). Furthermore, due to their test design that involves reading complex vignettes and response options, SJTs involve more verbal literacy than any of the previously mentioned tests, and they do not solely focus on communicating via the facial channel. In sum, although conceptually closely related to emotion expression, emotion management and understanding are assessed with limited measurement approaches. Consequently, we expect them to only have small correlations with emotion expression ability.

With its communicative function, emotion expression also plays a role in deception. A good lie means aligning content and behavior, including emotional expressions (Vrij 2002), and the capacity to express non-felt emotions should be positively related to deception skills. We chose to focus on faking ability, a socio-emotional ability based on the ATIC (Ability To Identify Criteria) model (König et al. 2006). It represents the ability to identify what psychological tests or interviewers in assessment situations “ask for” or “want to hear” and successfully respond accordingly. Faking ability correlates substantially with facial emotion perception and recognition and general cognition (Geiger et al. 2018). We studied faking ability as the ability to fake a desired personality profile on a questionnaire. Given that tasks share neither the facial nor the emotional component, we expect a small correlation between faking ability and emotion expression.

1.5.3. Non-Socio-Emotional Abilities

The most consensual model of individual differences in intelligence, the Cattell–Horn–Carroll model (CHC model; McGrew 2009), is based on a positive manifold among tests of cognitive abilities. In other words, tests of cognitive abilities all correlate positively, implying a general factor of intelligence (Spearman 1904a). Recent work with ability tests of emotional intelligence demonstrated that this positive manifold might be expanded to emotional abilities and that these can be included as stratum I or II abilities/factors in the CHC model (MacCann et al. 2014). In this study, the authors only used the Mayer–Salovey–Caruso Test of Emotional Intelligence (Mayer et al. 2003) to measure socio-emotional abilities, so their study lacked tests of emotion expression. Currently, no systematic investigation of relations between emotion expression and the general factor of intelligence g exists. However, as emotion expression has been demonstrated to correlate with other emotional abilities (Elfenbein and Eisenkraft 2010) and, thus, can be assumed at stratum I under the stratum II factor of socio-emotional abilities, we hypothesize that emotion expression abilities are subject to a positive manifold and thus relate positively to g. In our studies, g will be indicated by measures of fluid intelligence, working memory capacity, and immediate and delayed memory. From a psychometric perspective, these tests only share the attribute of a maximal performance test with emotion expression. Consequently, we expect the correlation between emotion expression and the g factor and its underlying abilities to be weak.

Following prior work correlating general cognitive abilities and emotional abilities (Olderbak et al. 2019a), we differentiate crystallized intelligence (accumulated skills and knowledge, and their use) from general cognitive abilities (g). For successfully posed emotion expressions, knowledge about emotions and their typical expressions is required. Although such emotional knowledge might be considered highly specific, recent research on the dimensionality of factual knowledge demonstrated how closely even the most diverse knowledge domains are related (Steger et al. 2019). Consequently, we expect small correlations between emotion expression and general knowledge, a marker variable for crystallized intelligence.

1.5.4. Typical Behavior

Finally, assuming that higher levels of socio-emotional personality traits, such as extraversion, result in more social interaction and therefore more situations in which one needs to communicate one’s emotional states, we assume a weak correlation between emotion expression and self-reported extraversion. We only expect a weak correlation because (a) self-report is prone to response biases that presumably distort validity and (b) extraversion is a typical behavior construct, which is usually weakly related or unrelated to cognitive abilities (Olderbak and Wilhelm 2017; Wilhelm 2005).

Similarly, it could be argued that self-report measures of mixed model emotional intelligence (mixed model EI) such as the Trait Emotional Intelligence Questionnaire (TEIQ; Freudenthaler et al. 2008) and the Trait Meta Mood Scale (TMMS; Salovey et al. 1995), which assess socio-emotional personality traits, too, should correlate with emotion expression ability. In prior work, they have already been shown to correlate weakly with receptive emotional abilities (e.g., Davis and Humphrey 2014; Saklofske et al. 2003; Zeidner and Olnick-Shemesh 2010) and, thus, a similar relation with emotion expression ability could be expected. However, given that measures of mixed model emotional intelligence have been shown to be largely overlapping with the Big Five, including major correlations with extraversion (van der Linden et al. 2012, 2017), any relations between mixed model EI and socio-emotional abilities might vanish when variance in mixed model EI is controlled for by the underlying socio-emotional personality trait extraversion. In fact, two of our studies included measures of mixed model EI, which allowed us to test this idea. These analyses are reported in the Supplementary Materials.

1.6. Current Study

The purpose of this paper is to show that facial emotion expression abilities can be measured according to established psychometric standards and that the construct measured is a valuable addition to research on socio-emotional traits and intelligence by testing correlations in a nomological network of constructs. We try to accomplish these goals in three steps:

I. Task development: Before reporting the results from our three consecutive studies, we introduce our expression tasks that adhere to standards of maximal performance testing. With experimental control of the presentation of task instructions and items, along with the computerized recording, coding, and scoring of responses, we maximize the objectivity of our measurement. Although the tasks we introduce have been used in other already published studies as covariate constructs (Geiger et al. 2021; Olderbak et al. 2021), this manuscript is the first to extensively describe the construction rationale of the test, as well as construct validation efforts with regard to the measurement model and the position of emotion expression abilities in a nomological network of related abilities and typical behavior traits.

II. Psychometric evaluation: To evaluate factorial validity, we test whether individual differences in the indicators derived from our newly developed test are accounted for by sound measurement models (Borsboom et al. 2004). A sound measurement model is a confirmatory factor model designed in accordance with theoretical considerations to explain individual differences in indicators of a psychological test. Such models offer the opportunity to test whether the assumed factorial structure of a test matches empirical reality. For our emotion expression ability tests, we establish a general ability to pose emotional expressions as a latent variable and test competing measurement models in three studies.

III. The nomological network: We validate the general ability to pose emotional expressions by investigating its position in the nomological network of socio-emotional and general cognitive abilities, as well as self-reported socio-emotional traits. Across our three studies, we test correlations between the general ability to pose emotional expressions and non-emotional expression ability, receptive socio-emotional abilities (perceiving and recognizing identity and emotion in unfamiliar faces, emotion management, emotion understanding, emotional posture recognition, faking ability), general cognitive abilities, and the personality factor extraversion (see Table 1 for a summary). Following the critique of frequentist significance testing (Cohen 1990; Kline 2013), we report both effect sizes and p-values, but we focus on effect sizes when interpreting the results. Because effects that replicate would become significant if tested in bigger samples, instead of discussing significance, we focus on the consistency and replicability of our results across the three studies.
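The dependence of significance on sample size can be illustrated with a short calculation. The Python sketch below is an approximation under stated assumptions: it uses the Fisher z transformation with a normal reference distribution for a plain Pearson correlation (not the latent correlations estimated in the studies), and the effect size r = .15 is a hypothetical value chosen for illustration, not a reported result. The sample sizes are those of the three studies.

```python
import math


def approx_two_sided_p(r: float, n: int) -> float:
    """Approximate two-sided p-value for a Pearson correlation r in a sample
    of size n, using the Fisher z transformation and a normal reference."""
    z = math.atanh(r) * math.sqrt(n - 3)
    # 2 * (1 - Phi(|z|)) expressed with the complementary error function
    return math.erfc(abs(z) / math.sqrt(2))


R_HYPOTHETICAL = 0.15  # illustrative effect size, not a reported result

for n in (123, 141, 237):  # sample sizes of studies 3, 2, and 1
    print(n, round(approx_two_sided_p(R_HYPOTHETICAL, n), 3))
```

With the effect size held constant, the approximate p-value shrinks as n grows, which is why the interpretation rests on the size and consistency of effects rather than on whether a single test crosses a significance threshold.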

Table 1.

A summary of covariates across our studies, their methodological overlap with facial emotion expression ability, and their expected correlation with the latter.

1.7. Step I: Facial Expression Ability Task Development

1.7.1. Task Design

First, we developed two emotion expression tasks in which participants were instructed to pose emotional expressions with their faces to the best of their ability while being videotaped. In the first task, called production, participants received a written prompt naming the target that should be expressed, i.e., one of six basic emotion words (anger, disgust, fear, happiness, sadness, or surprise), and were asked to produce the respective facial expression. In the second task, called imitation, pictures of faces expressing target expressions were presented, and participants were asked to imitate the target face.

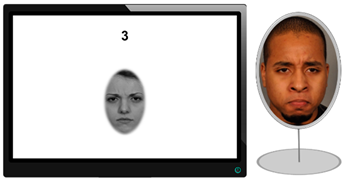

The trial presentation followed a uniform schedule. Participants had 10 s (study 1) or 7 s (studies 2 and 3) to read the written prompt (production) or view the target expression (imitation) and prepare their expression (preparation time). Then, participants had 5 s (study 1) or 3 s (studies 2 and 3) to produce or imitate the intended emotion to the best of their ability (expression time). Expression time immediately followed preparation time, and trials followed each other with an inter-trial interval of 200 ms. Preparation time and expression time were shortened for studies 2 and 3 after many participants in study 1 reported that the preparation and expression phases felt somewhat lengthy.

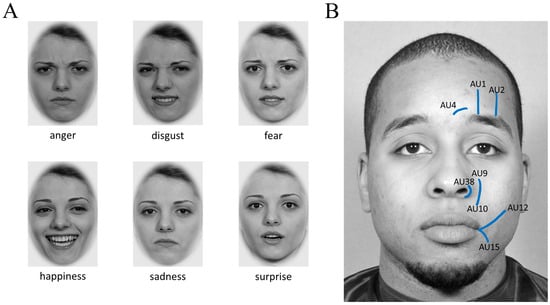

For the target emotions in the production and imitation tasks, we chose the six basic emotions (Ekman 1992). We additionally included neutral trials for baseline expression recordings (see below for details). As shown in Table 2, a timer in the top center of the screen, just below the camera, gave participants temporal orientation. The preparation phase instructions (emotion label or emotional face) were presented in the center of the screen. In the production task, we repeated each target once to increase task reliability. In the imitation task, target expressions were presented four times, but each trial included a new identity, and each emotion was expressed by two females and two males. Items were presented in fixed pre-randomized orders. Faces for the imitation task were sampled from previously unused (grey-scaled and ellipsed) faces of the BeEmo stimulus database that was also used to develop a task battery to measure facial emotion recognition and memory (Wilhelm et al. 2014a). Exemplary pictures of all six basic emotion expressions are presented in Figure 1A. We created two variants of the imitation task, namely, with and without feedback. In the feedback condition, participants saw their face next to the target face via a mirror, whereas there was no mirror in the without-feedback condition. Example items are shown in Table 2.

Table 2.

A summary of facial expression-posing ability tasks used throughout all studies.

Figure 1.

Target facial expressions that had to be shown during the posing tasks. (A): Six basic emotional expressions for the emotion-posing tasks. (B): Action units related to the movements in the non-emotional-posing task.

To evaluate the nomological network, we additionally developed one non-emotional production task as a closely related covariate measure, which was always presented as the first expression task. The goal of this task was to assess the ability to pose facial expressions independent of emotional expressions. To sample expressive abilities in the whole face, keep the testing time short, and create items that were not too difficult, we selected two distinctly executable facial movements per major facial region (eyes, nose, mouth; for a compendium of facial movements, see Ekman and Friesen 1978) as targets. Participants were instructed to display their facial movements as best as possible. Around the eyes, we asked participants to raise their eyebrows (AU1 and AU2; originate from m. frontalis) or to furrow them (AU4; originates from m. corrugator). Around the nose, we asked participants to widen their nostrils (AU38; originates from m. nasalis) or wrinkle their nose (AU9 and AU10; originate from m. levator). Around the mouth, we asked participants to move the corners of their mouth as high (AU12; originates from m. zygomaticus) or as low (AU15; originates from m. depressor) as possible. The position and line of movement of these AUs are shown in Figure 1B, and an example item is presented in Table 2. Each trial was presented twice. Additionally, for the very first item, participants were asked to present a neutral, relaxed expression (i.e., do nothing), so they could get used to the timed task design.

The production expression tasks are available on the project’s OSF page (https://osf.io/9kfnu/ (accessed on 2 February 2024)). Due to privacy restrictions of the facial stimuli, the imitation task is only available upon request from the corresponding author and after signing a user agreement. All other measurement instruments used in the studies are available from the authors upon request.

1.7.2. Scoring Facial Expressions

A defining characteristic of ability tasks is that they need a veridical response for every item (Cronbach 1949). For the non-emotional production task, the veridical response was the maximal value that could be achieved for the AUs underlying the requested facial movement. Defining a veridical facial emotion expression is more difficult, but the communicative function of emotion (Scherer 2005) offers a solution: communication can be successful or not. Research on the universality of emotion by Ekman (1992) demonstrated that what he calls universal or prototypical expressions are those expressions understood globally. We can conclude that the more prototypical an emotion expression, the higher the probability that it is identified correctly by another person, thereby maximizing its communicative success. Given that communication is maximally stressed in posing expressions, we argue that maximizing the communicative success of expressions is the goal of an expression task and, therefore, a maximally prototypical expression is the veridical response to an item of such a task.

Scoring both facial movements and prototypical emotional expression can be achieved with standardized facial expression coding systems, such as the Facial Action Coding System (FACS; Ekman and Friesen 1978). Emotional expressions are transient and dynamic phenomena and should therefore be recorded on video for thorough evaluation. Throughout all studies, we recorded high-definition videos with a framerate of 25 frames per second (fps), producing several thousand frames per participant. To satisfy the dynamic nature, every single frame had to be rated. Human raters, as typically used in FACS, are neither efficient nor objective enough to evaluate millions of frames of facial expressions. However, FACS has inspired the development of numerous facial expression recognition software programs (for a review, see Corneanu et al. 2016) that solve the problems of human ratings and have been shown to be valid and reliable tools for research (Dupré et al. 2020; Geiger and Wilhelm 2019; Krumhuber et al. 2019; Kulke et al. 2020).

Typically, such software provides both emotion scores (basic emotions and/or positive and negative valence) and scores for a set of AUs. We use Facet with the Emotient SDK 4.1 (Emotient 2016a), a tool with reportedly the highest accuracy rates in scoring emotion expressions and AUs among different software tools (Dupré et al. 2020; Emotient 2016b; please note that Facet cannot score AU38 activity, which is why this item from the non-emotional expression task was excluded in our analyses). We only score behavior during the expression time of every item and, following our earlier argument on veridical responses to expression items, we only consider the emotion score of the item target emotion. For example, if the target emotion of an item with a 5 s expression time is an angry facial expression in an imitation task and the video is recorded at 25 fps, we extract the respective 5 × 25 = 125 anger values in a time series, similar to data from physiological responses. We then loess-smooth the data (quadratic degree and a smoothing parameter α = .22; Olderbak et al. 2014), extract the maximum scores, and regress them on their respective baseline emotion expressions extracted from neutral expression trials to continue with the residual value. More details regarding this scoring approach are reported in Olderbak et al. (2014).
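The scoring steps described above (smoothing, maximum extraction, baseline residualization) can be illustrated with a minimal Python sketch. It is not the SAS or R code used in the studies: the function names `loess_quadratic` and `residualize` are hypothetical, the neighbourhood selection is a simplified nearest-frame rule, and the toy values are invented for illustration. The sketch assumes an evenly sampled series of at least four frames without missing values.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def loess_quadratic(y, span=0.22):
    """Smooth an evenly sampled series with local quadratic fits (tricube weights)."""
    n = len(y)
    k = min(n, max(4, math.ceil(span * n)))  # frames used in each local fit
    smoothed = []
    for i in range(n):
        neighbours = sorted(range(n), key=lambda j: abs(j - i))[:k]
        d_max = max(abs(j - i) for j in neighbours)
        s = [0.0] * 5  # weighted sums of u**0 .. u**4
        t = [0.0] * 3  # weighted sums of y * u**0 .. y * u**2
        for j in neighbours:
            u = j - i  # centre on frame i, so the intercept is the fitted value
            w = (1 - (abs(u) / d_max) ** 3) ** 3
            for p in range(5):
                s[p] += w * u ** p
            for p in range(3):
                t[p] += w * y[j] * u ** p
        normal = [[s[0], s[1], s[2]], [s[1], s[2], s[3]], [s[2], s[3], s[4]]]
        numer = [[t[0], s[1], s[2]], [t[1], s[2], s[3]], [t[2], s[3], s[4]]]
        smoothed.append(_det3(numer) / _det3(normal))  # Cramer's rule for the intercept
    return smoothed


def residualize(peaks, baselines):
    """Residuals of an ordinary least squares regression of peaks on baselines."""
    n = len(peaks)
    mean_x = sum(baselines) / n
    mean_y = sum(peaks) / n
    sxx = sum((x - mean_x) ** 2 for x in baselines)
    sxy = sum((x - mean_x) * (v - mean_y) for x, v in zip(baselines, peaks))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return [v - (intercept + slope * x) for x, v in zip(baselines, peaks)]


# Toy data: 125 frames (5 s at 25 fps) of hypothetical target-emotion scores.
frames = [math.sin(t / 40) for t in range(125)]
peak = max(loess_quadratic(frames))

# Hypothetical peak and neutral-trial baseline scores for four participants.
residuals = residualize([1.2, 0.8, 1.9, 1.4], [0.1, -0.2, 0.4, 0.3])
```

Because each local fit is quadratic, a series that is itself quadratic is reproduced exactly, which is a convenient sanity check for any implementation of this step.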

This process results in one ability score per trial indicating how well participants pose the target emotion. We analyze Facet evidence scores, which are log-transformed odds ratios with higher values indicating stronger target expression. Thus, the higher a participant’s score, the better their performance. Facet produces missing data when, for example, participants move their heads at too extreme angles (yaw, pitch, or roll deviation of |45|° or more; Emotient 2016b) and, therefore, the software’s face recognition fails. Participants were instructed to always face the camera and remove obstructions to their faces. Thus, other things being equal, the proportion of missing data in Facet indicates the proportion of non-adherence to instructions. Therefore, we set a person’s item scores to missing if their respective time series had more than 20% missing data points.
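The missingness rule can be sketched as follows. This is a minimal illustration rather than the authors' implementation; the function name `item_score_or_missing` and the use of `None` to mark frames that the software could not score are assumptions.

```python
def item_score_or_missing(frame_scores, item_score, max_missing=0.20):
    """Return item_score, or None if more than max_missing of the frames are missing.

    frame_scores: per-frame target-emotion scores, with None for unscored frames.
    """
    share_missing = sum(v is None for v in frame_scores) / len(frame_scores)
    return None if share_missing > max_missing else item_score


# Toy example: 125 frames, 26 of which could not be scored (20.8% missing).
frames = [None] * 26 + [0.5] * 99
score = item_score_or_missing(frames, item_score=0.5)  # set to missing
```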

1.7.3. Data Processing and Analyses

After evaluating the expression videos with Facet, the output data processing (smoothing, max-score extraction, baseline treatment, missingness treatment) was conducted with SAS (version 9.4; SAS Institute Inc. 2013). To facilitate open science, we also wrote functions for all these steps in R and uploaded them to the OSF (https://osf.io/9kfnu/ (accessed on 2 February 2024)). All consecutive data processing and analyses were conducted in R (version 3.6.2; R Core Team 2023), with the packages psych (version 1.9.12.; Revelle 2018), lavaan (version 0.6-5; Rosseel 2012), and semTools (version 0.5-3; Jorgensen et al. 2020).

1.7.4. Summary Step I

We developed two tasks that meet our requirements for developing a maximal effort test of emotion expression ability: (1) we can reasonably assume what test takers feel during the test, and (2) expressive behavior is evaluated in a standardized, efficient, and objective way and scored with regard to a veridical response. That is, we chose to focus on emotion posing only because the initial emotional state (neutral) of this behavior is assumed to be the default state during lab experiments. Next, we introduce measurement models of these tasks before we present the empirical studies in which the measurement models are tested and the nomological networks for Step III are analyzed.

1.8. Step II: The Measurement Models of Emotion Expression Ability

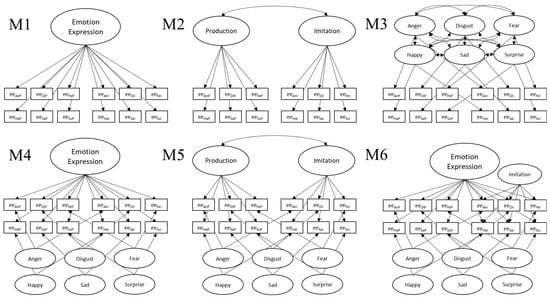

To address Step II, identifying a measurement model of emotion expression ability, we first defined a set of six plausible measurement models (M1 to M6; see schematic depictions in Figure 2). They were designed to reflect and test three major sources of between-subject variation in emotion expression: (1) general emotion expression ability, modeled as one factor loading on all items, (2) task-specific variance, modeled with factors indicated by production or imitation trials, and (3) emotion-specific variance, modeled with six factors indicated by the relevant emotion trials or as correlations between the residuals of same-emotion trials. We also tested variations in the models depicted in Figure 2, though none offered a better fit to the data. The data and analysis syntax for these additional model candidates are available in the Supplementary Materials.

Figure 2.

Measurement models of emotion expression-posing ability. EE = emotion expression; An = anger; Di = disgust; Fe = fear; Ha = happiness; Sa = sadness; Su = surprise; P = production; I = imitation.

M1 models a single general emotion expression ability factor, M2 has two correlated task-specific emotion expression ability factors, and M3 has six correlated emotion-specific factors. In models M4 to M6, we combine these sources of variation in bifactor models to separate general and specific variance. M4 combines the general factor with the six orthogonal emotion-specific factors. M5 combines the two correlated task-specific factors with the six orthogonal emotion-specific factors. M6 combines all three sources of variation with a general factor, an orthogonal imitation factor, and six orthogonal emotion-specific factors.

We tested M1 to M6 on item-level and parcel-level data, but for parsimony, the item-level analyses are only reported in the Supplementary Materials. Parcels were preferred to reduce the complexity of our models (Little et al. 2002). All parcels were calculated as mean values across sets of items. For the emotion (and non-emotional) expression tasks, parcels were estimated from trials that had similar item types. For example, all anger imitation items were combined to an average anger imitation score (or all AU9/10 [m. levator] items in the non-emotional expression task were combined to an average levator score). This resulted in six emotional production parcels, six emotional imitation parcels, and five non-emotional production parcels.
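Parcel construction amounts to averaging item scores within each combination of task and target. The following minimal sketch illustrates this; the function name `build_parcels`, the tuple layout, and the choice to skip missing item scores when averaging are assumptions made for illustration, not details taken from the studies.

```python
from collections import defaultdict


def build_parcels(trial_scores):
    """Average item scores within each (task, target) combination.

    trial_scores: iterable of (task, target, score); score may be None (missing).
    Missing scores are skipped here, which is an assumption of this sketch.
    """
    groups = defaultdict(list)
    for task, target, score in trial_scores:
        if score is not None:
            groups[(task, target)].append(score)
    return {key: sum(values) / len(values) for key, values in groups.items()}


# Hypothetical item scores for one participant.
trials = [
    ("imitation", "anger", 1.0),
    ("imitation", "anger", 2.0),
    ("imitation", "anger", None),
    ("production", "anger", 0.5),
    ("production", "anger", 1.5),
]
parcels = build_parcels(trials)
```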

All factors were identified with effects coding (Little et al. 2006; please note that whenever a factor was based on two indicators only, the respective loadings were set to equality for local identification), and correlations were tested with a likelihood ratio test (Gonzalez and Griffin 2001) and an adjusted χ2-distribution (Stoel et al. 2006). Model fit was deemed acceptable with CFI and TLI ≥ .90, RMSEA < .08, and SRMR < .11; the fit was deemed good with CFI and TLI ≥ .95, RMSEA < .05, and SRMR < .08 (Bentler 1990; Hu and Bentler 1999; Steiger 1990). Missing data were handled with full information maximum likelihood estimation.
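The fit criteria can be written as a small helper that labels each index separately, which makes mixed verdicts such as "acceptable to good" easy to read off. The function name `classify_fit` and the label "poor" for values below the acceptable thresholds are assumptions of this sketch.

```python
def classify_fit(cfi, tli, rmsea, srmr):
    """Label each fit index as 'good', 'acceptable', or 'poor'."""

    def higher_is_better(value, acceptable, good):
        if value >= good:
            return "good"
        return "acceptable" if value >= acceptable else "poor"

    def lower_is_better(value, acceptable, good):
        if value < good:
            return "good"
        return "acceptable" if value < acceptable else "poor"

    return {
        "CFI": higher_is_better(cfi, 0.90, 0.95),
        "TLI": higher_is_better(tli, 0.90, 0.95),
        "RMSEA": lower_is_better(rmsea, 0.08, 0.05),
        "SRMR": lower_is_better(srmr, 0.11, 0.08),
    }


# Hypothetical fit indices for illustration.
labels = classify_fit(cfi=0.96, tli=0.93, rmsea=0.06, srmr=0.04)
```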

2. Study 1

2.1. Methods

2.1.1. Sample

We recruited 273 healthy adults between the ages of 18 and 35 living in the Berlin area. Due to technical problems and/or dropouts between testing sessions, 36 participants were removed because they either had: (1) no data for the emotion expression tasks or (2) missing data for more than five covariate tasks. The final sample of n = 237 participants (51.1% females) had a mean age of 26.24 years (SD = 6.15) and a reasonably heterogeneous educational background: 20.7% without a high school degree, including degrees that qualify for occupational education; 46.4% with a high school degree; and 32.9% with academic degrees. Within the largest SEM in this study, including 21 indicators, the sample size is sufficient to fulfill the five observations per indicator rule of thumb (Bentler and Chou 1987). With this sample, a significance threshold α = .05, and a moderate power of 1 − β = .80, we have enough sensitivity to detect bivariate manifest correlations as small as r = |.16| (calculated with G*Power; Faul et al. 2009).
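The sensitivity analysis can be approximated without G*Power by using the Fisher z transformation. The sketch below is an approximation, not the exact routine G*Power applies, and the function name `min_detectable_r` is hypothetical. Whether the test was one- or two-tailed is not stated here; assuming a one-tailed test, the approximation gives a value close to the reported r = |.16| for n = 237, whereas a two-tailed test would give roughly .18.

```python
import math
from statistics import NormalDist


def min_detectable_r(n, alpha=0.05, power=0.80, tails=1):
    """Smallest correlation detectable with the given power (Fisher z approximation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / tails)  # critical value of the test
    z_power = z.inv_cdf(power)              # quantile matching the desired power
    return math.tanh((z_alpha + z_power) / math.sqrt(n - 3))


r_one_tailed = min_detectable_r(237)            # one-tailed test (assumed)
r_two_tailed = min_detectable_r(237, tails=2)   # two-tailed alternative
```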

Data from this sample were used in earlier publications on facial emotion perception and recognition (Hildebrandt et al. 2015; Wilhelm et al. 2014a) and expression scoring (Olderbak et al. 2014). However, these data have not been used to study measurement models of emotion expression ability or the nomological network of this ability.

2.1.2. Procedure, Constructs, and Measures

Data were collected in three test sessions, each about three hours in length (including two breaks), using Inquisit 3.2 (Draine 2018), and participants were tested with five to seven days in between sessions. We will only describe measures that are relevant to our research questions. The presentation of measures is organized around the construct they assess. In terms of the order of tasks, emotional and non-emotional tasks were alternated, if possible. For practical reasons, i.e., the camera recording, the expression tasks were presented consecutively. Facial emotional stimuli used across different tasks never had an identity overlap across tasks. This means no two tasks with such stimuli contain stimuli from the same person. The tasks were selected because of their strong psychometric properties demonstrated in previous work, which we briefly highlight below. We report the reliability of the constructs using the factor saturation estimate ω, along with their measurement model, in the Results Section. For the sake of brevity, we will not include detailed task descriptions when constructs are indicated by more than two different tasks, for example, the construct facial emotion perception and recognition.

Facial Emotion Expression Ability. We used two tasks to measure emotion expression abilities. For imitation, we tried two variants, one with and the other without feedback (via a mirror). Although the two imitation variants were designed to have 24 trials each, due to a programming error, only the first 12 trials in each task were presented. Therefore, the two imitation tasks together comprised 24 trials, which were unevenly distributed across emotions: four anger, four disgust, four fear, two happiness, five surprise, and five sadness trials.

Non-Emotional Facial Expression Ability. The non-emotional expression task (as described in Step I) was presented twice, before and after the facial emotion expression ability tasks. The trial order was pre-randomized within tasks.

Facial Emotion Perception and Recognition (FEPR). Participants completed seven tasks from the BeEmo test battery (Wilhelm et al. 2014a): three perception tasks (“identification of emotion expressions from composite faces”, “identification of emotion expressions of different intensities from upright and inverted dynamic face stimuli”, and “visual search for faces with corresponding emotion expressions of different intensities”) and four recognition tasks (“learning and recognition of emotion expressions of different intensities”, “learning and recognition of emotional expressions from different viewpoints”, “cued emotional expressions span”, “memory game for facial expressions of emotions”). All tasks are reliable (ω = .59–.87; Wilhelm et al. 2014a) and valid (see e.g., Hildebrandt et al. 2015). The tasks were scored according to recommendations from the original authors, preferring unbiased hit rates (Wagner 1993) when recommended.
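For readers unfamiliar with unbiased hit rates (Wagner 1993), the index for a category multiplies the proportion of stimuli of that category that were identified correctly by the proportion of uses of that response label that were correct. The sketch below illustrates the computation on an invented confusion matrix; the function name `unbiased_hit_rates` and the data layout are assumptions, and task-specific scoring details from the original battery are not reproduced.

```python
def unbiased_hit_rates(confusion):
    """Unbiased hit rate per category from confusion[stimulus][response] counts."""
    categories = list(confusion)
    rates = {}
    for cat in categories:
        hits = confusion[cat].get(cat, 0)
        n_stimuli = sum(confusion[cat].values())                          # row total
        n_responses = sum(confusion[s].get(cat, 0) for s in categories)  # column total
        if n_stimuli == 0 or n_responses == 0:
            rates[cat] = 0.0
        else:
            rates[cat] = hits ** 2 / (n_stimuli * n_responses)
    return rates


# Invented counts for two emotion categories, for illustration only.
toy = {
    "anger": {"anger": 8, "fear": 2},
    "fear": {"anger": 4, "fear": 6},
}
rates = unbiased_hit_rates(toy)
```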

Facial Identity Perception and Recognition (FIPR). This construct was measured with six tasks from the BeFat test battery (Herzmann et al. 2008): three perception tasks (“facial resemblance”, “sequential matching of part-whole faces”, and “simultaneous matching of spatially manipulated faces”) and three recognition tasks (“acquisition curve”, “decay rate of learned faces”, and “eyewitness testimony”). All tasks are reliable (ω = .54–.90; Herzmann et al. 2008) and valid (see e.g., Hildebrandt et al. 2011). They were scored according to recommendations from the original authors.

Posture Emotion Recognition (PER). This ability was measured with the Diagnostic Analysis of Nonverbal Accuracy 2 (DANVA-2) posture task. Participants saw a picture of a person’s body with a blacked-out face who was making emotional postures and selected which among four response options (angry, fearful, happy, sad) best described the bodily expression. The task consists of 24 items and is reliable (α = .68–.78) and valid (Nowicki and Duke 2008).

Emotion Management (EM). To assess this ability, we used a situational judgment test (Situational Judgment Test of Emotion Management; STEM). In this task, participants completed multiple-choice questions and selected the best reaction to an emotional situation. The task is reliable with α = .61–.72 (MacCann and Roberts 2008). We used a short version (20 items) translated into German (Hilger et al. 2012).

Emotion Understanding (EU). This ability was assessed with the situational judgment test of emotion understanding (STEU). In this task, participants select which among four options best describes how they or others would feel in an emotional situation. The task is reliable with α = .43–.71 (MacCann and Roberts 2008). We used a short version (25 items) translated into German (Hilger et al. 2012).

General Mental Ability (g). As indicators of g, we assessed fluid intelligence, working memory capacity, and immediate and delayed memory. Fluid intelligence was assessed with 16 items of the Raven’s advanced progressive matrices task (Raven et al. 1979). In this figural task, participants use reasoning to fill the lower right cell of a 3 × 3 matrix containing symbols. The task is reliable with a retest reliability of rtt = .76–.91 (Raven et al. 1979).

We used well-established binding (number-position) and complex span (rotation span) tasks to assess participants’ working memory capacity, programmed according to Wilhelm et al. (2013). The rotation span (ω = .84) and binding task have good reliability (ω = .80; Wilhelm et al. 2013).

Memory without facial stimuli was assessed using six tests of immediate or delayed memory with either purely verbal, verbal–numerical, or visual stimuli (symbols). These tasks were adapted from the Wechsler Memory Scale (Härting et al. 2000; for details see Hildebrandt et al. 2011, 2015; Wilhelm et al. 2010).

Extraversion. We assessed extraversion (E) using the respective subscales from the NEO-PI-R (Costa and McCrae 2008). The extraversion scale of the NEO-PI-R has 48 items from six facet scales each with eight items, and it is reliable with α = .89 (Costa and McCrae 2008).

2.2. Results

2.2.1. Step II: Measurement Models of Facial Emotion Expression Ability

To address Step II, establishing a measurement model of emotion expression ability, the 12 indicators of emotion expression ability were modeled, as shown in Figure 2. Model fit for all six models is summarized in the left-hand part of Table 3. Only M6 reached acceptable fit levels across the four fit indices. Consequently, we determined that M6 was the best-fitting measurement model. M6 models all three earlier-introduced sources of variation (general ability factor, task-specific variation, and emotion-specific variation). Please note that due to the large number of factors relative to only 12 manifest indicators, the models often had estimation problems, such as non-positive definite Ψ-matrices, when M6 was estimated jointly with other constructs. Because our focus lies on the general factor, and to avoid these estimation problems, we also defined a model structurally equivalent to M6, in which emotion-specific variation is represented by correlated residuals of indicators of the same emotion instead of factors. This model differs by one df because when identifying a bifactor model without a reference factor via effects coding, the intercept constraint for the general factor is redundant with the intercept constraints of the bifactors. This structurally equivalent model, which we call M6b, also had acceptable to good model fit (χ2(42) = 91, p < .001; CFI = .947; TLI = .916; RMSEA = .070; SRMR = .050). The general factor (ω = .663) and the task-specific imitation factor (ω = .520) were reliable.
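As a plausibility check on reported fit statistics, the RMSEA point estimate can be recomputed from the χ2 value, the degrees of freedom, and the sample size. The sketch below uses the common formula with N − 1 in the denominator; some software uses N instead, and the χ2 values are reported here as rounded integers, so small deviations in the third decimal are expected. The function name `rmsea` is chosen for illustration.

```python
import math


def rmsea(chi2, df, n):
    """RMSEA point estimate from chi-square, degrees of freedom, and sample size."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Values reported for M6b in study 1 (n = 237).
m6b_rmsea = rmsea(chi2=91, df=42, n=237)
```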

Table 3.

A comparison of measurement models of facial emotion expression-posing ability across studies 1 to 3.

2.2.2. Step III: Nomological Network

Before estimating correlations with other constructs, we first evaluated the factorial validity of the covariate constructs. Then, to understand correlations between emotion expression ability and each construct, we constructed separate models relating emotion expression ability with individual covariate constructs. Please note we additionally examined relations with the receptive ability covariates using a bifactor structure (cf. Brunner et al. 2012), which takes the overlap of covariate constructs into consideration. For the sake of parsimony, these results are only reported in the Supplementary Materials.

The measurement models of the covariate constructs used composite task scores as indicators (all models are depicted in the Supplementary Materials). We clustered the nomological network results in three steps: 1. emotion expression ability with non-emotional expression ability; 2. emotion expression ability with receptive performance measures; and 3. emotion expression ability with extraversion. All correlations are summarized in Table 4.

Table 4.

Study 1: Correlations between facial emotion expression-posing ability and other abilities and traits in the socio-emotional nomological network.

Correlation with Non-Emotional Facial Expression Ability. Non-emotional expression ability was indicated by five indicators (corrugator, levator, depressor, frontalis, and zygomaticus, as described earlier) and had a good fit (χ2(5) = 2, p = .789; CFI = 1; TLI = 1; RMSEA < .001; SRMR = .019). The factor was reliable with ω = .589.

Next, we modeled M6b and non-emotional expression ability jointly and let the factors correlate. We expected and confirmed a large correlation between the general factors (r = .722, p < .001).

Correlations with Receptive Ability Constructs. The receptive abilities were modeled in line with previously established measurement models (Hildebrandt et al. 2015; including the residual correlations added there).

Facial emotion perception and recognition was indicated by seven variables, one for each task, had good fit (FEC model: χ2(14) = 16, p = .297; CFI = .994; TLI = .991; RMSEA = .026; SRMR = .028), and was reliable (ω = .781). When modeled with emotion expression ability, we found a medium correlation between the two abilities (r = .305, p < .001), supporting our hypothesis.

For facial identity perception and recognition, we estimated one to two indicators per task (e.g., “sequential matching” was split into a “part” and a “whole” parcel), resulting in nine indicators total. The model had mostly acceptable fit to the data (χ2(26) = 76, p < .001; CFI = .942; TLI = .919; RMSEA = .090; SRMR = .063) and was reliable (ω = .766). Contrary to our expectations, facial identity perception and recognition had only a very small correlation with emotion expression ability (r = .150, p = .032).

Posture emotion recognition was modeled with parceled indicators built by combining DANVA-2 posture items with emotion-specific parcels (i.e., items with the same target emotion were pooled into a parcel). To achieve acceptable model fit (χ2(1) = 2, p = .163; CFI = .977; TLI = .860; RMSEA = .063; SRMR = .022), we added one residual correlation based on modification indices. The posture emotion recognition factor had a small factor saturation: ω = .401. As expected, we found a small correlation between emotion expression ability and posture emotion recognition (r = .273, p = .010).

To model emotion management, we built four parcels from the STEM items. The model had a good fit, and the factor was reliable (χ2(2) = 3, p = .207; CFI = .993; TLI = .978; RMSEA = .049; SRMR = .020; ω = .705). In line with our hypotheses, emotion management correlated weakly with emotion expression ability (r = .196, p = .015).

Emotion understanding was indicated by five parcels, each composed of responses to the STEU items. The model had a mostly good fit and reliability (χ2(5) = 11, p = .047; CFI = .952; TLI = .904; RMSEA = .073; SRMR = .040; ω = .634). Emotion expression ability correlated weakly with emotion understanding (r = .184, p = .024).

G was indicated by nine variables, namely, performance on fluid intelligence, working memory capacity, and immediate and delayed memory tests. The general mental ability model had a mostly acceptable fit (χ2(24) = 95, p < .001; CFI = .929; TLI = .893; RMSEA = .112; SRMR = .062), and the factor was very reliable (ω = .801). General mental ability had a small correlation with emotion expression ability (r = .224, p = .005).

Correlations with Extraversion. Extraversion was indicated by six variables, one for each facet of extraversion (Costa and McCrae 2008). The measurement model had bad model fit (χ2(9) = 76, p < .001; CFI = .847; TLI = .745; RMSEA = .177; SRMR = .074), but reliability was high with ω = .791. Insufficient fit is a common problem in modeling self-report questionnaires (see e.g., Olaru et al. 2015). However, as extraversion is only a covariate in this study, we did not strive to optimize its model and instead followed through with a broad factor that is comparable with but superior to computing and using a manifest mean score. The extraversion factor had a weak correlation with emotion expression ability: r = .165, p = .025.

Joint Evaluation of Hypotheses. In the last step, we evaluated how closely the empirical correlations matched our predicted rank order of correlations, based on the expected effect sizes reported in Table 1. To evaluate this match, we estimated Spearman’s ρ. The correlation was ρ = .627, indicating a strong match of the predicted and empirical correlations.
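The rank-order comparison can be illustrated with a small pure-Python implementation of Spearman's ρ that assigns average ranks to ties, which matters when several covariates share the same expected effect size. The function names and all values below are hypothetical; they are not the predictions from Table 1 or the correlations from Table 4.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values receiving their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i..j, converted to 1-based ranks
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the (tie-corrected) ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical example: expected effect size classes (3 = large, 1 = small)
# and hypothetical empirical correlations for five covariates.
expected = [3, 2, 2, 1, 1]
observed = [0.70, 0.30, 0.15, 0.20, 0.18]
rho = spearman_rho(expected, observed)
```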

2.3. Conclusions

In our first study, we introduced new tests of emotion expression ability, tested competing measurement models of expression ability, and positioned this ability in a nomological network of related constructs. We found that three sources of variation are required to describe individual differences in performance on our emotion expression tasks. These are (1) a general factor of emotion expression ability, (2) task-specific variation, and (3) emotion-specific variation.

The general factor of emotion expression ability correlated mostly as expected. We found medium to strong correlations with closely related socio-emotional abilities, weak to small correlations with more distantly related socio-emotional abilities, a small correlation with non-emotional cognitive abilities, and a weak correlation with extraversion.

The correlational pattern hints toward an overarching socio-emotional abilities construct incorporating receptive and productive abilities. Furthermore, the correlations with general cognitive abilities extend the idea of a positive manifold. Overall, this is evidence that a broad socio-emotional abilities factor, including emotion expression ability, might be considered an additional second stratum factor in models of intelligence. We additionally learned that extraversion plays a role in successful emotion expression.

In study 2, we strive to replicate these results with optimized emotion expression tasks (i.e., only imitation with feedback, with a complete trial list) and a reduced set of covariate tasks. In study 3, we will again replicate these findings and extend them to other abilities, including faking ability and crystallized intelligence.

3. Study 2

3.1. Methods

3.1.1. Sample

For study 2, we recruited n = 159 healthy participants from a university student participant database in Ulm, Germany. After excluding participants with incomplete data in the emotion expression task, the sample size was n = 141. Approximately half of the participants (51%) were female. Due to matching issues and incomplete responses, age and education data were only available for n = 109 participants. For these, the mean age was 23.42 years (SD = 4.23), and 26% already had an academic degree.

The largest SEM in this study had 25 indicators, so the sample size is sufficient to fulfill the five observations per indicator rule of thumb (Bentler and Chou 1987). With the same significance threshold (α = .05) and power (1 − β = .80) as in study 1, we have enough sensitivity to detect bivariate manifest correlations as small as r = |.21| in study 2 (calculated with G*Power; Faul et al. 2009). No data from this study used in this manuscript have been published elsewhere.

3.1.2. Procedure and Measures

Study 2 consisted of two parts: (1) a lab study and (2) online questionnaires and a demographic survey. Data were matched anonymously with individual participant codes, but from the n = 141 participants, 22 participants did not report the same code in both parts and could not be matched. Consequently, part 2 data are only available for n = 119 participants. We will only discuss the tasks relevant to this paper. In terms of the order of tasks, emotional and non-emotional tasks were alternated, if possible. For practical reasons, i.e., the camera recording, the expression tasks were presented consecutively. The facial emotional stimuli used across different tasks never had an identity overlap across tasks. This means no two tasks with such stimuli contain stimuli from the same person. Please note that several tasks in part 1 were about pain sensation and regulation, which are not reported in this paper. All tasks in part 1 were programmed in and presented through Inquisit 4 (Draine 2018).

Expression Tasks. The lab study included two emotional expression ability tasks (production and imitation with feedback) and the non-emotional expression ability task, as described in Step I.

Facial Emotion Perception and Recognition (FEPR). Facial emotion perception was measured with the “identification of emotion expressions from composite faces” task from the BeEmo test battery by Wilhelm et al. (2014a). In this task, participants must identify facial emotion expressions with six basic emotion labels in either the top or bottom halves of composite faces expressing different emotions in the top and bottom halves. The task consists of 72 items and is highly reliable (ω = .81). According to the recommendations of the original authors, we scored the task with unbiased hit rates (Wagner 1993). Facial emotion recognition was measured with the “cued emotional expressions span” from the emotion perception and recognition task battery by Wilhelm et al. (2014a). In this task, participants learn three to six expressions of one person with varying intensities in one block and then recall them by identifying them among distractors. The task consists of seven such blocks and is reliable (ω = .59, Wilhelm et al. 2014a). We calculated the percentage of correctly remembered faces per block.
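For readers unfamiliar with the scoring, the unbiased hit rate (Wagner 1993) for a category is the squared number of hits divided by the product of the number of stimuli of that category and the number of times that response was chosen. The following is a minimal sketch of that formula; the confusion matrix is a hypothetical toy example, not data from this study, and the function name is illustrative.

```python
def unbiased_hit_rates(confusion):
    """Unbiased hit rate Hu per category (Wagner 1993).

    confusion[i][j] = number of trials on which a stimulus of category i
    received response j. Hu_i = hits_i**2 / (row_total_i * col_total_i).
    """
    k = len(confusion)
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    rates = []
    for i in range(k):
        denom = row_totals[i] * col_totals[i]
        # If a response was never used, there are no hits; score it as 0.
        rates.append(confusion[i][i] ** 2 / denom if denom else 0.0)
    return rates


# Hypothetical 3-category example (rows: shown emotion, columns: response).
toy = [
    [8, 2, 0],
    [1, 9, 0],
    [3, 0, 7],
]
print(unbiased_hit_rates(toy))
```

Compared with a raw hit rate, this score penalizes overusing a response label: in the toy matrix, the first category has 8 of 10 hits, but its label was chosen 12 times overall, which lowers its score.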

General Mental Ability. As a proxy for g, fluid intelligence was measured with the figural task of the Berlin Test of Figural and Crystallized Intelligence (Berliner Test zur Erfassung von Fluider und Kristalliner Intelligenz, BEFKI-gff; Wilhelm et al. 2014b), version 8-10. In this task, participants must complete sixteen sequences of figural images via logical deduction of rules explaining the change in the displayed sequence. Items are ordered by difficulty from lowest to highest. The task is well-established and very reliable with ω = .87.

Extraversion. Extraversion was assessed with the NEO-FFI (Borkenau and Ostendorf 2008), a well-established and reliable (α = .81) questionnaire to measure the Big Five personality factors on a factor level.

3.2. Results

3.2.1. Step II: Measurement Models of Facial Emotion Expression Ability

As in study 1, parcels for the expression tasks were calculated based on the respective muscle origin for the non-emotional expression task (e.g., one m.zygomaticus parcel) or based on emotion sets (e.g., one anger in production parcel). This resulted in the same 12 emotional expression indicators, which were used to test the measurement models M1 to M6, as displayed in Figure 2, and five non-emotional expression parcels.

We tested models M1 to M6 (see Figure 2) to determine the best-fitting measurement model of emotion expression ability (summarized in Table 3). Models M5 and M6 both had a good fit, with M6 fitting best. We therefore again concluded that all three sources of variation, namely, a general factor, task-specific variation, and emotion-specific variation, need to be distinguished to account for the data. Because model M6 again had convergence problems when related to the covariates, we used model M6b, which replaces the emotion-specific factors with residual correlations between indicators of the same emotion. M6b had good model fit (χ2(42) = 46, p = .327; CFI = .992; TLI = .987; RMSEA = .024; SRMR = .053). The general emotion expression factor was reliable (ω = .639), and the imitation factor had low reliability (ω = .374).
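As a reading aid for the fit statistics, the RMSEA point estimate can be approximated from the model χ2, its degrees of freedom, and the sample size. The sketch below uses the common (N − 1) convention, which is an assumption here because the estimation software and its settings are not stated in this section; the function name is illustrative.

```python
from math import sqrt


def rmsea(chi2, df, n):
    """Point estimate of RMSEA from a model chi-square.

    Assumption: the (n - 1) convention; some programs divide by n instead.
    The estimate is set to 0 whenever chi2 does not exceed df.
    """
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Rounded values reported for M6b, with the study 2 sample size.
print(round(rmsea(46, 42, 141), 3))
```

Because the reported χ2 values are rounded to integers, a recomputation like this can only approximate the published RMSEA. The formula also shows why models with χ2 below their degrees of freedom are reported with RMSEA < .001.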

3.2.2. Step III: Nomological Network

Before correlating emotion expression ability with its covariates, we first developed measurement models of the covariates to guarantee factorial validity and ensure sufficient factor saturation of covariates. As in study 1, each covariate in the nomological network was modeled and correlated with emotion expression ability independently. We additionally modeled the receptive abilities using a bifactor approach; those results are available in the Supplementary Materials.

As in study 1, we established emotion expression in a nomological network of socio-emotional traits through three steps: 1. correlation with non-emotional expression; 2. correlation with receptive abilities; and 3. correlations with extraversion (see Table 5).

Table 5.

Study 2: Correlations between facial emotion expression-posing ability and other abilities and traits in the socio-emotional nomological network.

Correlation with Non-Emotional Facial Expression Ability. Non-emotional expression ability was modeled as in study 1 with the same strategy for parceling. After allowing one residual correlation between the indicators of two mouth-related muscles (the zygomaticus and the depressor), the model had a (mostly) good fit to the data (χ2(4) = 7, p = .158; CFI = .956; TLI = .891; RMSEA = .068; SRMR = .038), and the factor was reliable (ω = .609). When correlating general emotion expression with non-emotional expression ability, we found a large correlation (r = .816, p < .001), thus supporting our hypothesis.

Correlations with Receptive Abilities. Facial emotion perception and recognition was modeled based on the six unbiased hit rate scores (one per basic emotion) from the facial emotion perception task and the seven average scores per block from the facial emotion recognition task. Modification indices indicated that, to achieve a good model fit, the model required an orthogonal factor loading on the seven facial emotion memory indicators from the “cued emotional expressions span” task in addition to a general factor loading on all indicators. This model had a good fit (χ2(58) = 36, p = .989; CFI = 1; TLI = 1; RMSEA < .001; SRMR = .045), but the reliability of the general facial emotion perception and recognition factor was low (ωFEC = .16). This factor had a medium correlation with emotion expression ability (r = .347, p = .017).

g was modeled based on four sequential parcels by averaging items 1, 5, 9, and 13; items 2, 6, 10, and 14; etc., from the BEFKI-gff. The model had a good fit (χ2(2) = 1, p = .492; CFI = 1; TLI = 1; RMSEA < .001; SRMR = .012) and high reliability (ω = .828). Fluid intelligence correlated weakly and not significantly with emotion expression ability (r = .107, p = .177).
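The sequential parceling scheme described here assigns every fourth item to the same parcel. A minimal sketch follows, assuming items are already scored and ordered as administered; the item scores are hypothetical and the function name is illustrative.

```python
def sequential_parcels(item_scores, n_parcels=4):
    """Average every n_parcels-th item into one parcel.

    With 16 items and 4 parcels, parcel 1 uses items 1, 5, 9, 13,
    parcel 2 uses items 2, 6, 10, 14, and so on (1-based numbering).
    """
    parcels = []
    for start in range(n_parcels):
        members = item_scores[start::n_parcels]
        parcels.append(sum(members) / len(members))
    return parcels


# Hypothetical 0/1 item scores for one participant (not study data).
scores = [1, 1, 1, 1, 1, 1, 0, 1, 1, 0, 0, 1, 0, 0, 0, 0]
print(sequential_parcels(scores))
```

Because the items are ordered by difficulty, distributing them in this interleaved way gives each parcel a similar mix of easy and hard items.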

Correlations with Extraversion. Extraversion was modeled as a general factor loading on all 13 NEO-FFI extraversion items. The model had a (mostly) bad fit (χ2(65) = 181, p < .001; CFI = .766; TLI = .719; RMSEA = .122; SRMR = .096) but high reliability (ω = .830). As discussed in study 1, because self-report measures are often prone to bad model fit, we did not try to optimize the model. Extraversion had a small correlation with emotion expression ability (r = .205, p = .046).

Joint Evaluation of Hypotheses. We calculated Spearman’s ρ to evaluate how closely the rank order of empirical correlations resembled the expected rank order of correlations in the nomological network, as described in Table 1. We found a correlation of ρ = .800, indicating a very good match of rank order.
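The rank-order comparison can be illustrated with a small sketch. The empirical values are the four latent correlations reported above; the expected ranks are HYPOTHETICAL and chosen only for illustration, since the actual expectations are those listed in Table 1. The helper names are likewise illustrative.

```python
def ranks(values):
    """Rank values from largest (rank 1) to smallest; assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    result = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        result[idx] = rank
    return result


def spearman_rho(expected_ranks, observed_values):
    """Spearman's rho via 1 - 6 * sum(d^2) / (n * (n^2 - 1)); no ties."""
    observed_ranks = ranks(observed_values)
    n = len(observed_values)
    d2 = sum((e - o) ** 2 for e, o in zip(expected_ranks, observed_ranks))
    return 1 - 6 * d2 / (n * (n ** 2 - 1))


# Reported correlations: non-emotional expression, FEPR,
# fluid intelligence, extraversion.
observed = [0.816, 0.347, 0.107, 0.205]
# HYPOTHETICAL expected ranks (1 = largest expected correlation).
expected = [1, 2, 3, 4]
print(spearman_rho(expected, observed))
```

With only four correlations, ρ can take few distinct values, so a single swap of two adjacent ranks already moves it noticeably away from 1.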

3.3. Conclusions

For our second study, we updated the emotion expression ability test used in study 1 with complete trial lists and restricted our assessment of imitation to the imitation with feedback task. Based on this adjusted item set, we again calculated 12 emotion- and task-specific parcels (as in study 1) and tested the same measurement models. We found that the same model (M6) representing three sources of variation (general ability, task-specific, and emotion-specific) fit the data best.

Next, we estimated correlations between emotion expression ability and non-emotional expression ability, facial emotion perception and recognition, non-emotional cognitive abilities, and extraversion. We replicated the very strong correlation with non-emotional expression ability found in study 1. Furthermore, we found that emotion expression ability was moderately related to receptive emotional abilities, which supports the prediction of a positive manifold among socio-emotional ability constructs. This effect must be interpreted with caution because the facial emotion perception ability factor had very low reliability. Emotion expression ability was also weakly related to indicators of general intelligence, supporting the idea that the construct our tasks assess can be considered a socio-emotional ability and that socio-emotional abilities can be considered intelligence. Additionally, we again found a small relation to extraversion, stressing the latter’s importance in successful emotion expression.

We conclude that the findings from study 1, namely, that emotion expression ability is a socio-emotional ability that can be measured via maximal performance tests and that fits well in a nomological network of socio-emotional traits, could conceptually be replicated in this study. In our last study, we will again strive to replicate the measurement model of emotion expression ability in a more applied sample and extend the nomological network with new abilities, namely, faking ability and crystallized intelligence.

4. Study 3

4.1. Methods

4.1.1. Sample

The n = 123 participants from Ulm, Germany, recruited for this study were all about to finish an academic degree or had recently finished one. Demographic data were missing for two participants. The rest of the sample was 57% female and had a mean age of AM = 25.15 (SD = 3.54); 36% were at their Bachelor’s level, 51% at their Master’s, 5% at their PhD level, and 8% did not report their current educational level.

As the largest SEM in this study consisted of 21 indicators, the sample size is sufficient to fulfill the five observations per indicator rule of thumb (Bentler and Chou 1987). With the same significance threshold (α = .05) and power (1 − β = .80) as in both prior studies, we have enough sensitivity to detect bivariate manifest correlations as small as r = |.22| in study 3 (calculated with G*Power; Faul et al. 2009). No data from this study used in this manuscript have been published elsewhere.

4.1.2. Procedure and Measures

While in studies 1 and 2, we collected data in regular lab settings, in our third study, we wanted to measure emotion expression ability in a more applied setting. Thus, the data were collected in a study about assessment interviews. All measures were presented within a simulated job assessment situation for students about to apply for their first job outside of university. This study involved three parts: (1) online questionnaires, (2) a cognitive testing portion, and (3) a simulated job interview, for which participants received personalized feedback as compensation for their participation. Data from the interview are not reported in this manuscript but are reported elsewhere (Melchers et al. 2020).

Participants completed the emotional expression battery (production and imitation with feedback) and non-emotional expression task, as described earlier, in addition to measures of facial emotion perception and recognition, faking ability, general mental ability, and crystallized intelligence. Faking ability tasks were administered in part 1, whereas all other tasks were administered in part 2 using Inquisit 4 (Draine 2018). In terms of the order of tasks, emotional and non-emotional tasks were alternated, if possible. For practical reasons, i.e., the camera recording, the expression tasks were presented consecutively. Facial emotional stimuli used across different tasks never had an identity overlap across tasks. This means no two tasks with such stimuli contain stimuli from the same person.

Facial Emotion Perception and Recognition (FEPR). Participants completed one test of facial emotion perception (“identification of emotion expressions of different intensities from upright and inverted dynamic face stimuli”) and one test of facial emotion recognition (“memory game for facial expressions of emotions”) from the BeEmo battery (Wilhelm et al. 2014a). In the “upright-inverted” task, participants see short dynamic facial emotional expressions presented either upright or inverted and must label them with one of the six basic emotions. We scored the task as recommended with six unbiased hit rates (Wagner 1993), one per emotion. The task is reliable with ω = .62. In the “memory game”, participants play the well-known game “memory”, in which they must find matching pairs of pictures of facial emotion expressions. The game consists of four blocks with three, six, nine, and nine pairs, respectively. We calculated the mean correct responses per block but excluded the first block due to extreme ceiling effects. The task is reliable with ω = .60. We chose different tasks than in study 2 to allow us to further generalize the findings in the case of a conceptual replication.

Faking (Good) Ability (FGA). Faking ability refers to the socio-emotional ability to adjust responses in self-report questionnaires to present a response profile that matches a certain goal, such as the goal to get hired in a job assessment (Geiger et al. 2018). Participants were asked to fake six job profiles using the Work Style Questionnaire (WSQ, Borman et al. 1999), namely, commercial airplane pilot, TV/radio announcer, tour guide, software developer, security guard, and insurance policy processing clerk (the first three were also used in Geiger et al. 2018). The responses for each job were each scored with the profile similarity index shape, essentially a correlation between a participant’s response profile and the optimum response profile for a given job (see Geiger et al. 2018, for details on scoring). While a version of this task design had low reliability (ω = .33), it had strong validity indicated by significant correlations with general cognitive abilities, crystallized intelligence, and facial emotion perception and recognition reported elsewhere (Geiger et al. 2018).
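Since the shape index is described as essentially a correlation between two profiles, its logic can be sketched as a plain Pearson correlation. This is an illustration of that description only, not the exact scoring procedure of Geiger et al. (2018); the profiles below are hypothetical ratings, not WSQ data, and the function name is illustrative.

```python
from math import sqrt


def profile_shape_similarity(responses, optimum):
    """Pearson correlation between a response profile and a target profile."""
    n = len(responses)
    mean_r = sum(responses) / n
    mean_o = sum(optimum) / n
    cov = sum((r - mean_r) * (o - mean_o) for r, o in zip(responses, optimum))
    var_r = sum((r - mean_r) ** 2 for r in responses)
    var_o = sum((o - mean_o) ** 2 for o in optimum)
    return cov / sqrt(var_r * var_o)


# Hypothetical ratings on five scales.
optimum = [5, 4, 2, 1, 3]
faked = [4, 3, 1, 0, 2]  # same pattern of highs and lows, lower overall level
print(profile_shape_similarity(faked, optimum))
```

A correlation-based index is sensitive to the pattern of highs and lows across scales but not to the overall level of the ratings, which is why the shifted profile in the example still matches the target shape.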

General Mental Ability (g). In this study, g was indicated by fluid intelligence assessed with a verbal deduction test, the verbal task of the BEFKI (BEFKI-gfv; Schipolowski et al. 2020), version 11-12+. Participants solved 16 relational reasoning items presented as short verbal vignettes. Items are ordered by difficulty from lowest to highest. The task is well-established and very reliable (ω = .76).