AI for Psychometrics: Validating Machine Learning Models in Measuring Emotional Intelligence with Eye-Tracking Techniques

Abstract

1. Introduction

1.1. AI and Psychometrics

1.2. Psychometric AI

“The field devoted to building information-processing entities capable of at least solid performance on all established, validated tests of intelligence and mental ability, a class of tests that includes not just the rather restrictive IQ tests…but also tests of artistic and literary creativity, mechanical ability, and so on” (p. 273).

1.3. Emotional Intelligence

1.4. Eye-Tracking-Based Psychometric AI for EI Measurement

1.4.1. The Eye-Tracking Technique

1.4.2. Applications of Eye Tracking in Psychometrics, Affective Processing, and EI

1.4.3. The Eye-Tracking-Based AI Model for EI and the Effect of Data Quantity on Model Performance

- RQ1: What level of accuracy can ML models achieve in measuring emotional intelligence, and which ML model is the most effective in this endeavor?

- RQ2: Does ML accuracy differ across facets or measures of emotional intelligence, that is, do some facets/measures yield higher accuracy than others?

- RQ3: How much data do ML models require to achieve high accuracy in measuring emotional intelligence?

- RQ4: If ML models can accurately measure emotional intelligence with eye-tracking data, which eye-tracking features used in the ML models are most predictive of the emotional intelligence measures?

2. Method

2.1. Design and Participants

2.2. Emotional Intelligence Measures

2.2.1. WLEIS

2.2.2. TEIQue-SF

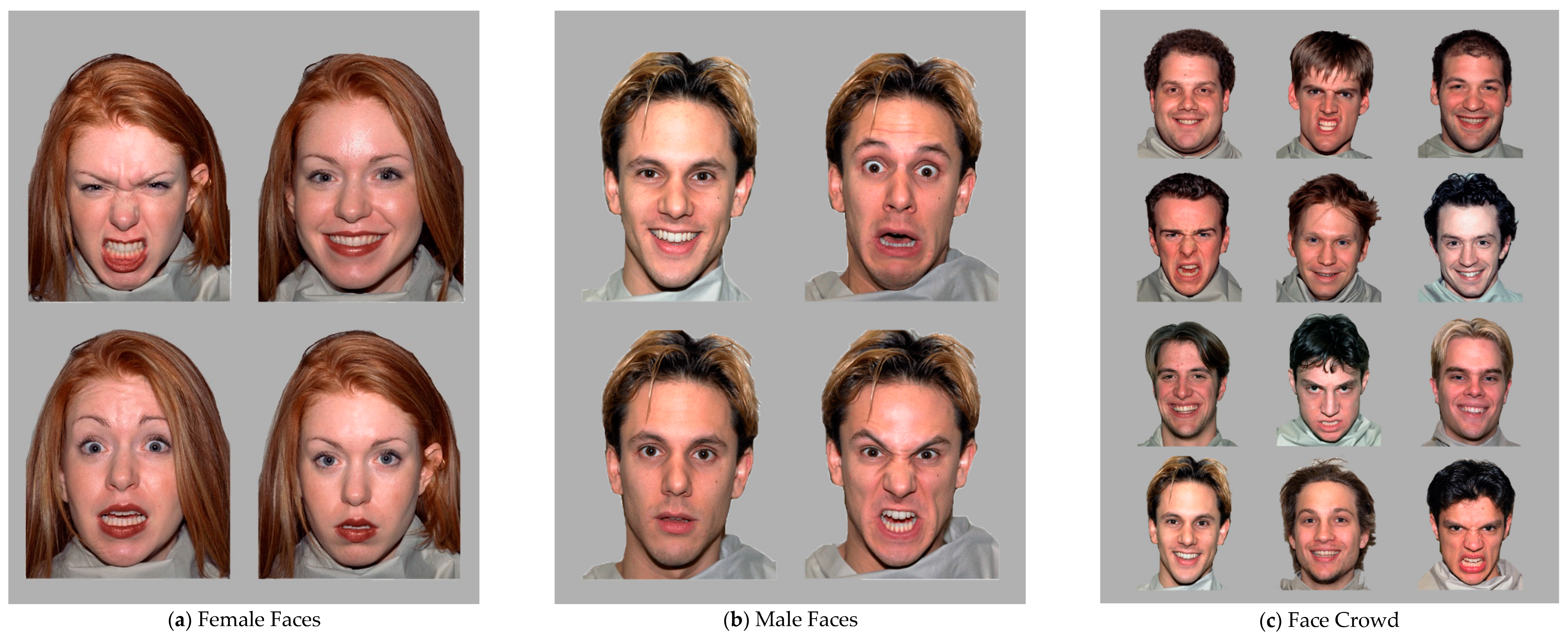

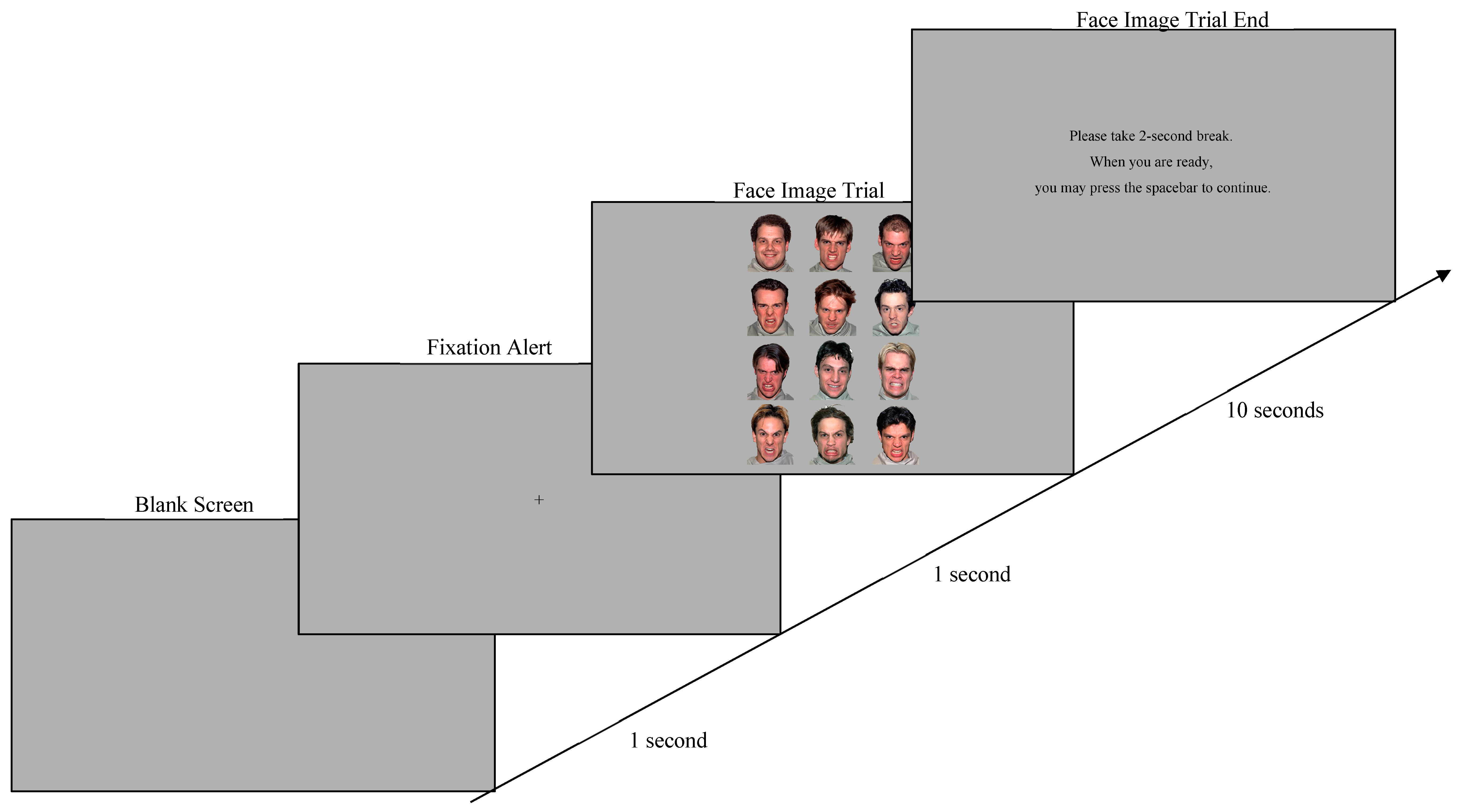

2.3. Visual Stimuli and Experimental Tasks

2.4. Eye-Tracker Device

2.5. Analytic Strategy

| Measure | Description |
|---|---|
| Area in focus: The entire image in a trial | |
| Blink Count | The total number of blinks in the trial within an interest period |
| Average Saccade Amplitude | Average size (in degrees of visual angle) of all saccades in the trial within an interest period |
| Average Blink Duration | Average duration (in milliseconds) of all blinks in the trial within an interest period |
| Fixation Count | The total number of fixations in the trial within an interest period |
| Area in focus: Each interest area | |
| Average Fix Pupil Size | Average pupil size across all fixations in the interest area within an interest period |
| Dwell Time | The sum of the durations of all fixations on the current interest area within an interest period |
| Fixation Count | The total number of fixations falling in the interest area within an interest period |
| First Fixation Time | Start time of the first fixation to enter the current interest area within an interest period |
| FSA Count | The number of fixations on the current interest area that arrive from a specified other interest area within an interest period, as counted in the fixation sequence analysis (FSA); e.g., how many times a participant switches fixation from the female angry interest area to the female happy interest area, or vice versa |
| Run Count | The number of times the interest area was entered and left (runs) within an interest period |
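The interest-area measures defined in the table can be illustrated with a minimal Python sketch. It is not the eye-tracker software's implementation: the `Fixation` record, the function names, and the sample trial are hypothetical, fixations are assumed to be sorted by start time, and a fixation is assumed to belong to an interest period if it starts within the first `period_ms` milliseconds of the trial. Run Count is simplified to the number of entries into the area, so a run that has not yet been left when the period ends is still counted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Fixation:
    start_ms: float       # onset relative to trial start (hypothetical field)
    duration_ms: float    # fixation duration in milliseconds
    area: Optional[str]   # interest-area label, or None if outside every area


def in_period(fixations, period_ms):
    """Keep fixations that start within the first `period_ms` of the trial (assumption)."""
    return [f for f in fixations if 0 <= f.start_ms < period_ms]


def area_features(fixations, area, period_ms):
    """Fixation Count, Dwell Time, First Fixation Time, and Run Count for one area."""
    fx = in_period(fixations, period_ms)
    on_area = [f for f in fx if f.area == area]
    # A run begins when a fixation lands on the area and the previous one did not.
    runs = sum(
        1
        for prev, cur in zip([None] + fx[:-1], fx)
        if cur.area == area and (prev is None or prev.area != area)
    )
    return {
        "fixation_count": len(on_area),
        "dwell_time_ms": sum(f.duration_ms for f in on_area),
        "first_fixation_time_ms": on_area[0].start_ms if on_area else None,
        "run_count": runs,
    }


def fsa_count(fixations, source, target, period_ms):
    """Number of consecutive fixation pairs that move from `source` to `target`."""
    fx = in_period(fixations, period_ms)
    return sum(
        1 for prev, cur in zip(fx, fx[1:])
        if prev.area == source and cur.area == target
    )


# Hypothetical trial with two interest areas.
trial = [
    Fixation(0, 200, "female_angry"),
    Fixation(250, 300, "female_happy"),
    Fixation(600, 150, "female_happy"),
    Fixation(800, 400, "female_angry"),
    Fixation(1300, 250, None),
    Fixation(2100, 300, "female_happy"),
]

short = area_features(trial, "female_happy", period_ms=2000)
full = area_features(trial, "female_happy", period_ms=10000)
switches = fsa_count(trial, "female_angry", "female_happy", period_ms=2000)
```

Shortening `period_ms` drops the later fixations, which is one way to see how a shorter interest period leaves fewer observations behind each feature.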

3. Results

3.1. Descriptive Statistics of EI Measures

3.2. The Machine Learning Identification Accuracy

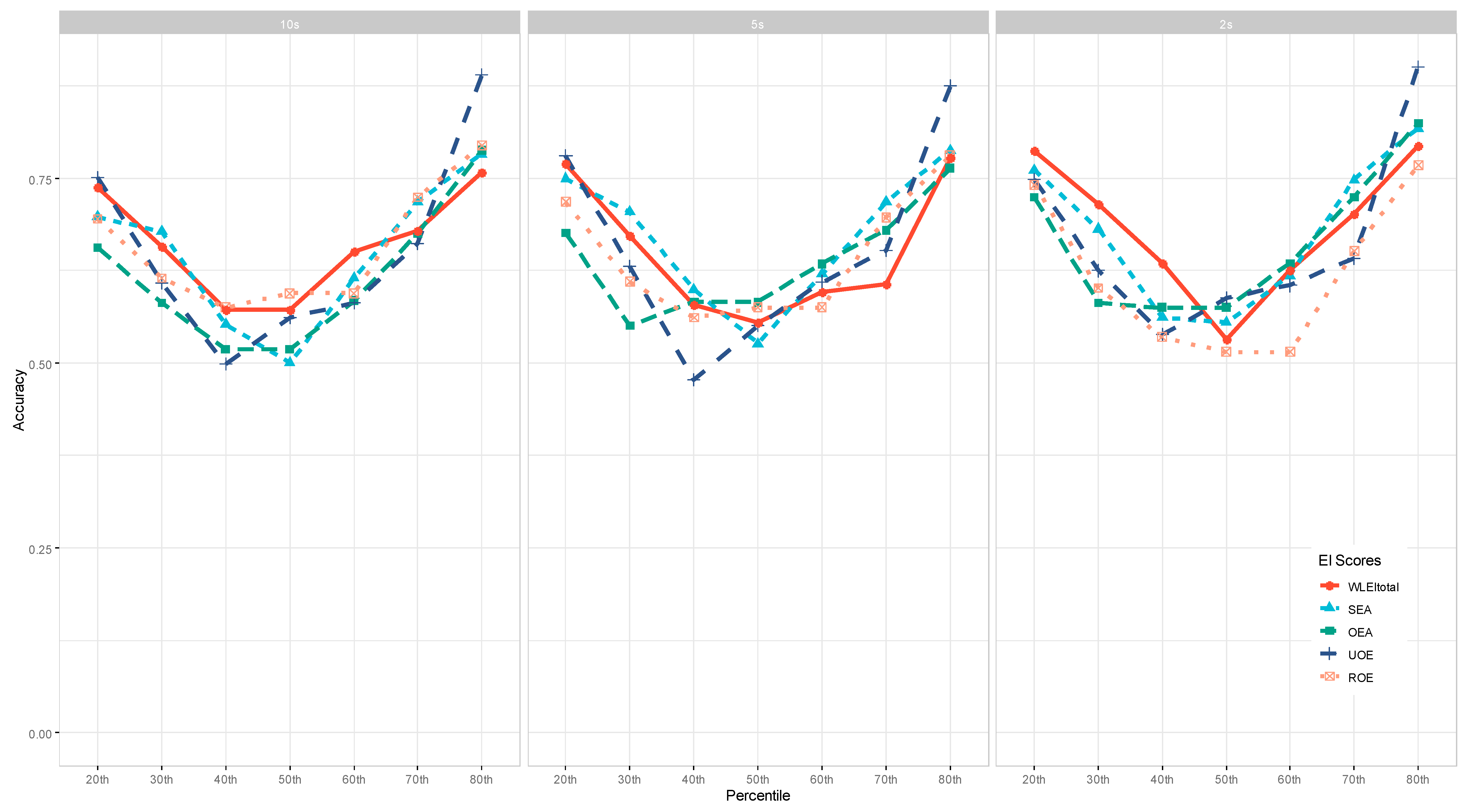

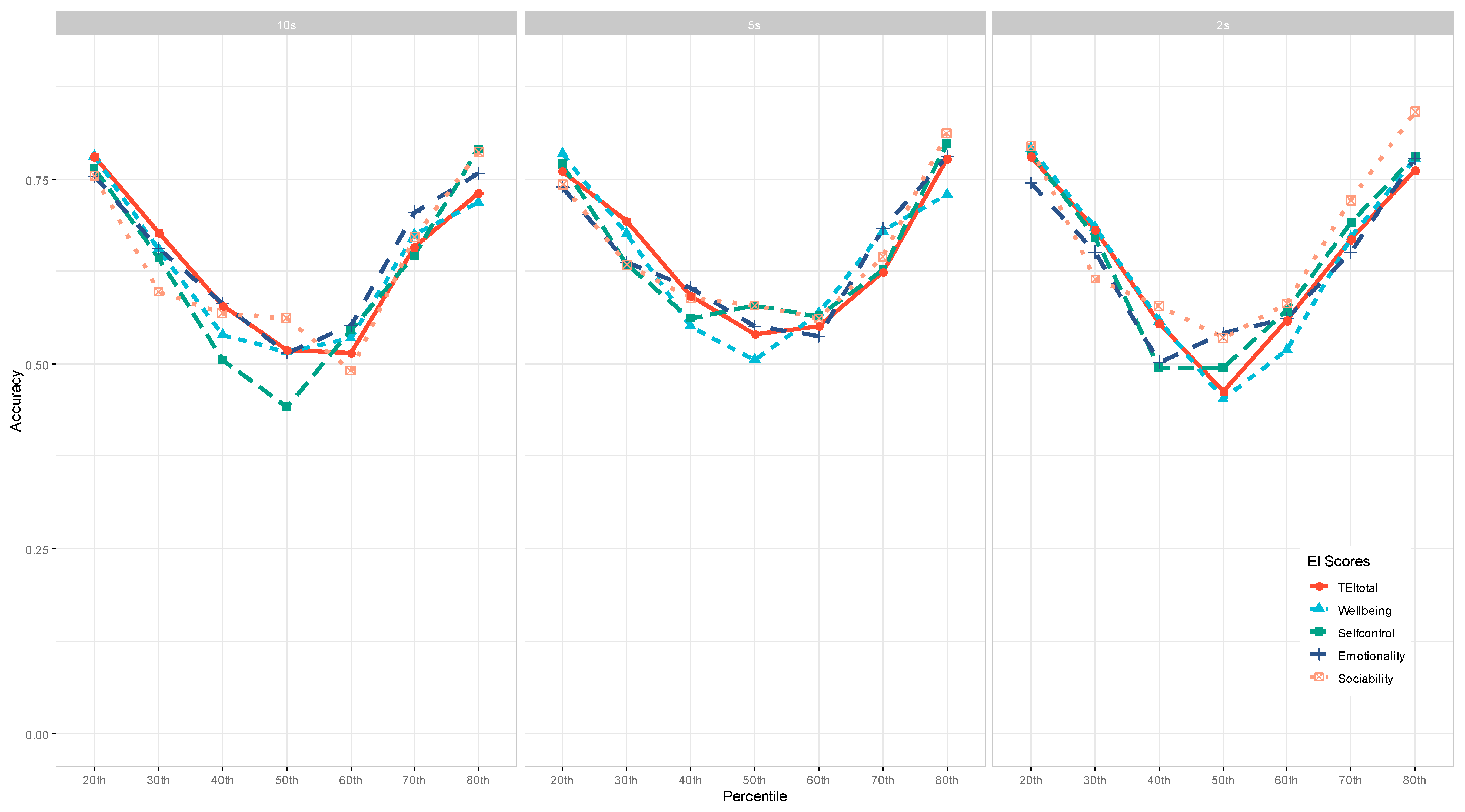

3.3. The Effect of EI Facets/Measures on Machine Learning Identification Accuracy

| EI facet/measure | NB (10 s) | SVML (10 s) | SVMR (10 s) | SVMP (10 s) | KNN (10 s) | DT (10 s) | RF (10 s) | Mean (10 s) | NB (5 s) | SVML (5 s) | SVMR (5 s) | SVMP (5 s) | KNN (5 s) | DT (5 s) | RF (5 s) | Mean (5 s) | NB (2 s) | SVML (2 s) | SVMR (2 s) | SVMP (2 s) | KNN (2 s) | DT (2 s) | RF (2 s) | Mean (2 s) | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 20th Percentile | ||||||||||||||||||||||||||

| SEA | .605 | .535 | .767 | .721 | .767 | .767 | .721 | .698 | .683 | .537 | .805 | .805 | .805 | .805 | .805 | .749 | .767 | .721 | .767 | .767 | .767 | .767 | .767 | .761 | ||

| OEA | .636 | .432 | .705 | .705 | .705 | .705 | .705 | .656 | .585 | .512 | .732 | .732 | .732 | .732 | .707 | .676 | .721 | .698 | .721 | .721 | .721 | .767 | .721 | .724 | ||

| UOE | .674 | .628 | .791 | .791 | .791 | .791 | .791 | .751 | .659 | .780 | .805 | .805 | .805 | .805 | .805 | .780 | .744 | .535 | .791 | .791 | .791 | .791 | .791 | .748 | ||

| ROE | .500 | .614 | .750 | .750 | .750 | .750 | .750 | .695 | .585 | .659 | .756 | .756 | .756 | .756 | .756 | .718 | .721 | .674 | .767 | .721 | .767 | .767 | .767 | .741 | ||

| WLEIS Total | .581 | .535 | .814 | .814 | .814 | .791 | .814 | .738 | .634 | .610 | .829 | .829 | .829 | .829 | .829 | .770 | .837 | .628 | .814 | .791 | .814 | .814 | .814 | .787 | ||

| Well-being | .674 | .721 | .814 | .814 | .814 | .814 | .814 | .781 | .732 | .610 | .829 | .829 | .829 | .829 | .829 | .784 | .767 | .721 | .814 | .814 | .814 | .814 | .791 | .791 | ||

| Self-control | .674 | .605 | .814 | .814 | .814 | .814 | .814 | .764 | .634 | .780 | .805 | .805 | .805 | .805 | .756 | .770 | .814 | .605 | .814 | .814 | .814 | .814 | .814 | .784 | ||

| Emotionality | .659 | .705 | .795 | .795 | .773 | .795 | .750 | .753 | .634 | .683 | .805 | .805 | .805 | .805 | .634 | .739 | .791 | .628 | .791 | .698 | .791 | .744 | .767 | .744 | ||

| Sociability | .721 | .535 | .814 | .814 | .814 | .767 | .814 | .754 | .634 | .585 | .805 | .756 | .805 | .805 | .805 | .742 | .814 | .651 | .814 | .814 | .814 | .814 | .837 | .794 | ||

| TEI Total | .651 | .744 | .814 | .814 | .814 | .814 | .814 | .781 | .585 | .659 | .829 | .829 | .829 | .756 | .829 | .760 | .837 | .581 | .814 | .814 | .791 | .814 | .814 | .781 | ||

| Sub-Mean | .638 | .605 | .788 | .783 | .786 | .781 | .779 | .737 | .637 | .641 | .800 | .795 | .800 | .793 | .776 | .749 | .781 | .644 | .791 | .774 | .788 | .791 | .788 | .765 | ||

| 30th Percentile | ||||||||||||||||||||||||||

| SEA | .674 | .605 | .698 | .698 | .698 | .698 | .674 | .678 | .659 | .634 | .732 | .732 | .732 | .732 | .707 | .704 | .674 | .605 | .698 | .698 | .698 | .698 | .698 | .681 | ||

| OEA | .535 | .535 | .605 | .605 | .605 | .651 | .535 | .581 | .512 | .439 | .610 | .561 | .610 | .585 | .537 | .551 | .651 | .419 | .605 | .605 | .581 | .605 | .605 | .581 | ||

| UOE | .581 | .512 | .651 | .651 | .651 | .581 | .628 | .608 | .683 | .463 | .659 | .634 | .659 | .659 | .659 | .631 | .674 | .512 | .651 | .651 | .651 | .535 | .698 | .625 | ||

| ROE | .581 | .558 | .651 | .651 | .581 | .651 | .628 | .615 | .659 | .537 | .659 | .634 | .659 | .488 | .634 | .610 | .535 | .488 | .651 | .651 | .581 | .651 | .651 | .601 | ||

| WLEIS Total | .535 | .512 | .721 | .721 | .721 | .721 | .674 | .658 | .488 | .561 | .732 | .732 | .732 | .732 | .732 | .672 | .698 | .698 | .721 | .721 | .721 | .721 | .721 | .714 | ||

| Well-being | .395 | .605 | .721 | .721 | .721 | .721 | .698 | .654 | .585 | .537 | .732 | .732 | .732 | .683 | .732 | .676 | .628 | .558 | .721 | .721 | .721 | .721 | .721 | .684 | ||

| Self-control | .614 | .477 | .682 | .682 | .682 | .682 | .682 | .643 | .537 | .512 | .707 | .707 | .634 | .634 | .707 | .634 | .628 | .488 | .698 | .698 | .767 | .698 | .721 | .671 | ||

| Emotionality | .591 | .545 | .705 | .705 | .659 | .705 | .682 | .656 | .512 | .512 | .707 | .634 | .707 | .707 | .683 | .638 | .651 | .488 | .698 | .628 | .698 | .698 | .698 | .651 | ||

| Sociability | .545 | .614 | .682 | .545 | .682 | .568 | .545 | .597 | .561 | .488 | .683 | .683 | .683 | .683 | .659 | .634 | .558 | .558 | .674 | .605 | .698 | .581 | .628 | .615 | ||

| TEI Total | .488 | .651 | .721 | .721 | .721 | .721 | .721 | .678 | .659 | .537 | .732 | .732 | .732 | .732 | .732 | .693 | .674 | .628 | .721 | .721 | .721 | .558 | .744 | .681 | ||

| Sub-Mean | .554 | .561 | .684 | .670 | .672 | .670 | .647 | .637 | .585 | .522 | .695 | .678 | .688 | .663 | .678 | .644 | .637 | .544 | .684 | .670 | .684 | .647 | .688 | .650 | ||

| 40th Percentile | ||||||||||||||||||||||||||

| SEA | .477 | .477 | .568 | .545 | .545 | .636 | .614 | .552 | .512 | .659 | .610 | .610 | .585 | .634 | .585 | .599 | .535 | .605 | .581 | .581 | .535 | .628 | .465 | .561 | ||

| OEA | .605 | .442 | .535 | .419 | .628 | .465 | .535 | .518 | .561 | .488 | .585 | .537 | .659 | .707 | .537 | .582 | .698 | .442 | .512 | .558 | .674 | .488 | .651 | .575 | ||

| UOE | .372 | .558 | .581 | .488 | .442 | .535 | .512 | .498 | .537 | .488 | .561 | .341 | .463 | .610 | .341 | .477 | .442 | .535 | .581 | .581 | .442 | .651 | .535 | .538 | ||

| ROE | .442 | .581 | .581 | .581 | .628 | .674 | .535 | .575 | .463 | .634 | .610 | .610 | .512 | .537 | .561 | .561 | .512 | .512 | .512 | .488 | .558 | .651 | .512 | .535 | ||

| WLEIS Total | .488 | .605 | .605 | .628 | .605 | .535 | .535 | .571 | .463 | .585 | .610 | .707 | .488 | .659 | .537 | .578 | .628 | .674 | .605 | .605 | .605 | .674 | .651 | .635 | ||

| Well-being | .455 | .591 | .591 | .591 | .591 | .455 | .500 | .539 | .537 | .463 | .610 | .561 | .537 | .585 | .561 | .551 | .558 | .465 | .581 | .581 | .535 | .581 | .605 | .558 | ||

| Self-control | .488 | .442 | .581 | .512 | .465 | .558 | .488 | .505 | .512 | .585 | .585 | .512 | .634 | .561 | .537 | .561 | .488 | .512 | .558 | .465 | .395 | .558 | .488 | .495 | ||

| Emotionality | .568 | .568 | .568 | .568 | .636 | .636 | .523 | .581 | .610 | .634 | .610 | .561 | .610 | .610 | .585 | .603 | .465 | .465 | .512 | .535 | .512 | .442 | .581 | .502 | ||

| Sociability | .535 | .558 | .605 | .605 | .581 | .488 | .605 | .568 | .585 | .512 | .610 | .610 | .610 | .585 | .610 | .589 | .512 | .512 | .605 | .605 | .535 | .651 | .628 | .578 | ||

| TEI Total | .591 | .568 | .591 | .614 | .636 | .500 | .545 | .578 | .634 | .537 | .610 | .634 | .537 | .585 | .610 | .592 | .512 | .535 | .605 | .581 | .581 | .581 | .488 | .555 | ||

| Sub-Mean | .502 | .539 | .581 | .555 | .576 | .548 | .539 | .549 | .541 | .559 | .600 | .568 | .563 | .607 | .546 | .569 | .535 | .526 | .565 | .558 | .537 | .591 | .560 | .553 | ||

| 50th Percentile | ||||||||||||||||||||||||||

| SEA | .523 | .614 | .500 | .455 | .477 | .477 | .455 | .500 | .610 | .537 | .512 | .463 | .488 | .537 | .537 | .526 | .605 | .535 | .605 | .535 | .488 | .488 | .628 | .555 | ||

| OEA | .605 | .442 | .535 | .419 | .628 | .465 | .535 | .518 | .561 | .488 | .585 | .537 | .659 | .707 | .537 | .582 | .698 | .442 | .512 | .558 | .674 | .488 | .651 | .575 | ||

| UOE | .535 | .535 | .535 | .535 | .651 | .558 | .581 | .561 | .634 | .415 | .537 | .585 | .488 | .610 | .585 | .551 | .581 | .605 | .581 | .558 | .581 | .605 | .605 | .588 | ||

| ROE | .659 | .591 | .591 | .591 | .545 | .591 | .591 | .594 | .634 | .512 | .585 | .537 | .610 | .585 | .561 | .575 | .628 | .535 | .488 | .465 | .488 | .512 | .488 | .515 | ||

| WLEIS Total | .512 | .628 | .512 | .744 | .512 | .535 | .558 | .571 | .634 | .561 | .488 | .537 | .512 | .537 | .610 | .554 | .535 | .535 | .535 | .512 | .581 | .512 | .512 | .532 | ||

| Well-being | .568 | .545 | .477 | .523 | .523 | .477 | .500 | .516 | .488 | .463 | .561 | .512 | .512 | .488 | .512 | .505 | .535 | .465 | .488 | .465 | .442 | .372 | .395 | .452 | ||

| Self-control | .386 | .318 | .455 | .432 | .500 | .568 | .432 | .442 | .537 | .439 | .585 | .683 | .585 | .634 | .585 | .578 | .535 | .558 | .419 | .465 | .558 | .442 | .488 | .495 | ||

| Emotionality | .488 | .558 | .535 | .535 | .488 | .488 | .512 | .515 | .537 | .610 | .488 | .561 | .537 | .512 | .610 | .551 | .605 | .558 | .535 | .442 | .488 | .558 | .605 | .542 | ||

| Sociability | .523 | .636 | .568 | .705 | .432 | .500 | .568 | .562 | .634 | .463 | .561 | .610 | .585 | .610 | .585 | .578 | .535 | .395 | .581 | .581 | .512 | .581 | .558 | .535 | ||

| TEI Total | .512 | .488 | .512 | .512 | .581 | .535 | .488 | .518 | .634 | .463 | .488 | .512 | .634 | .537 | .512 | .540 | .465 | .512 | .442 | .512 | .442 | .442 | .419 | .462 | ||

| Sub-Mean | .531 | .536 | .522 | .545 | .534 | .520 | .522 | .530 | .590 | .495 | .539 | .554 | .561 | .576 | .563 | .554 | .572 | .514 | .519 | .509 | .526 | .500 | .535 | .525 | ||

| 60th Percentile | ||||||||||||||||||||||||||

| SEA | .488 | .605 | .651 | .651 | .651 | .651 | .605 | .615 | .512 | .707 | .634 | .634 | .634 | .634 | .585 | .620 | .558 | .628 | .651 | .651 | .605 | .651 | .581 | .618 | ||

| OEA | .455 | .591 | .659 | .591 | .636 | .636 | .523 | .584 | .610 | .732 | .610 | .610 | .634 | .610 | .634 | .634 | .581 | .721 | .628 | .628 | .651 | .628 | .605 | .635 | ||

| UOE | .636 | .568 | .614 | .568 | .500 | .636 | .545 | .581 | .561 | .659 | .634 | .634 | .610 | .634 | .537 | .610 | .581 | .581 | .605 | .605 | .581 | .628 | .651 | .605 | ||

| ROE | .659 | .591 | .591 | .591 | .545 | .591 | .591 | .594 | .634 | .512 | .585 | .537 | .610 | .585 | .561 | .575 | .628 | .535 | .488 | .465 | .488 | .512 | .488 | .515 | ||

| WLEIS Total | .512 | .674 | .651 | .744 | .674 | .651 | .651 | .651 | .585 | .561 | .634 | .585 | .634 | .634 | .537 | .596 | .581 | .605 | .651 | .558 | .651 | .721 | .605 | .625 | ||

| Well-being | .442 | .419 | .605 | .488 | .581 | .605 | .605 | .535 | .512 | .561 | .585 | .585 | .585 | .585 | .561 | .568 | .442 | .488 | .605 | .535 | .512 | .535 | .512 | .518 | ||

| Self-control | .477 | .455 | .591 | .659 | .568 | .500 | .568 | .545 | .561 | .585 | .585 | .585 | .610 | .415 | .610 | .564 | .558 | .535 | .581 | .581 | .535 | .605 | .605 | .571 | ||

| Emotionality | .500 | .591 | .591 | .568 | .545 | .523 | .545 | .552 | .585 | .512 | .585 | .561 | .439 | .585 | .488 | .537 | .581 | .558 | .605 | .605 | .488 | .581 | .512 | .561 | ||

| Sociability | .500 | .409 | .477 | .500 | .455 | .568 | .523 | .490 | .512 | .585 | .585 | .585 | .561 | .585 | .512 | .561 | .535 | .395 | .628 | .628 | .581 | .698 | .605 | .581 | ||

| TEI Total | .512 | .442 | .605 | .535 | .512 | .465 | .535 | .515 | .610 | .439 | .585 | .585 | .585 | .561 | .488 | .551 | .488 | .581 | .605 | .605 | .581 | .512 | .535 | .558 | ||

| Sub-Mean | .518 | .534 | .603 | .590 | .567 | .583 | .569 | .566 | .568 | .585 | .602 | .590 | .590 | .583 | .551 | .582 | .553 | .563 | .605 | .586 | .567 | .607 | .570 | .579 | ||

| 70th Percentile | ||||||||||||||||||||||||||

| SEA | .523 | .750 | .773 | .773 | .773 | .727 | .705 | .718 | .537 | .707 | .756 | .756 | .756 | .756 | .756 | .718 | .721 | .674 | .767 | .767 | .767 | .767 | .767 | .748 | ||

| OEA | .535 | .512 | .744 | .698 | .744 | .744 | .744 | .674 | .561 | .537 | .732 | .732 | .732 | .732 | .732 | .679 | .721 | .628 | .744 | .744 | .744 | .744 | .744 | .724 | ||

| UOE | .605 | .628 | .698 | .628 | .698 | .698 | .674 | .661 | .585 | .610 | .683 | .683 | .683 | .634 | .683 | .652 | .581 | .535 | .698 | .651 | .698 | .674 | .651 | .641 | ||

| ROE | .659 | .659 | .750 | .750 | .750 | .750 | .750 | .724 | .634 | .585 | .732 | .732 | .732 | .732 | .732 | .697 | .605 | .512 | .744 | .651 | .698 | .628 | .721 | .651 | ||

| WLEIS Total | .545 | .682 | .705 | .705 | .705 | .705 | .705 | .679 | .512 | .561 | .683 | .585 | .610 | .659 | .634 | .606 | .651 | .744 | .698 | .698 | .721 | .698 | .698 | .701 | ||

| Well-being | .628 | .674 | .721 | .674 | .651 | .651 | .721 | .674 | .659 | .561 | .707 | .707 | .707 | .707 | .707 | .679 | .628 | .558 | .721 | .721 | .721 | .628 | .721 | .671 | ||

| Self-control | .500 | .455 | .727 | .727 | .705 | .705 | .705 | .646 | .585 | .415 | .732 | .732 | .707 | .488 | .732 | .627 | .674 | .558 | .721 | .744 | .721 | .721 | .698 | .691 | ||

| Emotionality | .614 | .705 | .727 | .727 | .727 | .727 | .705 | .705 | .634 | .610 | .732 | .732 | .683 | .659 | .732 | .683 | .535 | .605 | .721 | .721 | .721 | .558 | .698 | .651 | ||

| Sociability | .535 | .581 | .721 | .721 | .721 | .721 | .698 | .671 | .561 | .610 | .707 | .683 | .683 | .610 | .659 | .645 | .767 | .558 | .744 | .744 | .744 | .744 | .744 | .721 | ||

| TEI Total | .535 | .535 | .698 | .698 | .698 | .698 | .744 | .658 | .561 | .463 | .683 | .683 | .683 | .683 | .610 | .624 | .651 | .558 | .698 | .698 | .721 | .674 | .674 | .668 | ||

| Sub-Mean | .568 | .618 | .726 | .710 | .717 | .713 | .715 | .681 | .583 | .566 | .715 | .702 | .698 | .666 | .698 | .661 | .653 | .593 | .726 | .714 | .726 | .684 | .712 | .687 | ||

| 80th Percentile | ||||||||||||||||||||||||||

| SEA | .614 | .682 | .841 | .841 | .841 | .818 | .841 | .782 | .732 | .634 | .829 | .829 | .829 | .829 | .829 | .787 | .814 | .721 | .837 | .837 | .837 | .837 | .837 | .817 | ||

| OEA | .814 | .535 | .837 | .814 | .837 | .837 | .837 | .787 | .659 | .561 | .829 | .829 | .805 | .829 | .829 | .763 | .860 | .791 | .837 | .837 | .837 | .767 | .837 | .824 | ||

| UOE | .818 | .864 | .909 | .909 | .909 | .909 | .909 | .890 | .756 | .854 | .902 | .902 | .902 | .902 | .902 | .875 | .907 | .860 | .907 | .907 | .907 | .907 | .907 | .900 | ||

| ROE | .674 | .744 | .837 | .837 | .837 | .791 | .837 | .794 | .683 | .732 | .829 | .829 | .829 | .732 | .829 | .780 | .744 | .558 | .814 | .814 | .814 | .814 | .814 | .767 | ||

| WLEIS Total | .628 | .767 | .814 | .814 | .814 | .698 | .767 | .757 | .756 | .659 | .805 | .805 | .805 | .805 | .805 | .777 | .791 | .698 | .814 | .814 | .814 | .814 | .814 | .794 | ||

| Well-being | .636 | .591 | .795 | .795 | .795 | .614 | .795 | .718 | .610 | .585 | .780 | .780 | .780 | .780 | .780 | .728 | .767 | .721 | .791 | .791 | .791 | .791 | .791 | .777 | ||

| Self-control | .605 | .744 | .837 | .837 | .837 | .837 | .837 | .791 | .756 | .829 | .829 | .829 | .829 | .683 | .829 | .798 | .721 | .558 | .837 | .837 | .837 | .837 | .837 | .781 | ||

| Emotionality | .605 | .698 | .814 | .791 | .814 | .814 | .767 | .757 | .707 | .732 | .805 | .805 | .805 | .805 | .805 | .780 | .721 | .651 | .814 | .814 | .814 | .814 | .814 | .777 | ||

| Sociability | .727 | .750 | .841 | .818 | .841 | .705 | .818 | .786 | .780 | .756 | .829 | .829 | .829 | .829 | .829 | .812 | .814 | .791 | .860 | .837 | .860 | .860 | .860 | .841 | ||

| TEI Total | .591 | .636 | .795 | .705 | .795 | .795 | .795 | .731 | .732 | .683 | .805 | .805 | .805 | .805 | .805 | .777 | .721 | .674 | .791 | .791 | .791 | .767 | .791 | .761 | ||

| Sub-Mean | .671 | .701 | .832 | .816 | .832 | .782 | .821 | .779 | .717 | .702 | .824 | .824 | .822 | .800 | .824 | .788 | .786 | .702 | .830 | .828 | .830 | .821 | .830 | .804 | ||
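As a reading aid for the table, the short Python sketch below recomputes two of its summary cells from the printed values. It assumes, based only on the arithmetic, that each Mean cell is the average of the seven classifier accuracies in its row for that duration, and that each Sub-Mean cell is the average of the ten facet/measure rows in its column. Because the inputs are already rounded to three decimals, agreement is checked to within 0.001; the function and variable names are illustrative.

```python
# Accuracies copied from the SEA row of the 20th-percentile block, in the
# column order NB, SVML, SVMR, SVMP, KNN, DT, RF, paired with the printed Mean.
sea_20th = {
    "10 s": ([.605, .535, .767, .721, .767, .767, .721], .698),
    "5 s": ([.683, .537, .805, .805, .805, .805, .805], .749),
    "2 s": ([.767, .721, .767, .767, .767, .767, .767], .761),
}

# NB accuracies at 10 s for the ten facets/measures of the 20th-percentile
# block (SEA ... TEI Total), paired with the printed Sub-Mean.
nb_10s_20th = ([.605, .636, .674, .500, .581, .674, .674, .659, .721, .651], .638)


def mean(values):
    """Plain arithmetic mean."""
    return sum(values) / len(values)


def matches(values, reported, tolerance=0.001):
    """True if the recomputed mean agrees with the printed cell within rounding."""
    return abs(mean(values) - reported) <= tolerance


row_checks = {duration: matches(accs, reported)
              for duration, (accs, reported) in sea_20th.items()}
column_check = matches(*nb_10s_20th)

for duration, (accs, reported) in sea_20th.items():
    print(f"SEA, {duration}: recomputed {mean(accs):.4f}, printed {reported:.3f}")
print(f"NB Sub-Mean, 10 s: recomputed {mean(nb_10s_20th[0]):.4f}, printed {nb_10s_20th[1]:.3f}")
```

The same two helpers can be pointed at any other row or column of the table to spot transcription errors.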

3.4. The Amount of Data Needed to Identify EI with ML Models

3.5. The Most Predictive Eye-Tracking Features

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Allen, Mary J., and Wendy M. Yen. 2001. Introduction to Measurement Theory. Long Grove: Waveland Press. [Google Scholar]

- Al-Samarraie, Hosam, Samer Muthana Sarsam, Ahmed Ibrahim Alzahrani, and Nasser Alalwan. 2018. Personality and individual differences: The potential of using preferences for visual stimuli to predict the Big Five traits. Cognition, Technology & Work 20: 337–49. [Google Scholar] [CrossRef]

- Al-Samarraie, Hosam, Samer Muthana Sarsam, Maria Lonsdale, and Ahmed Ibrahim Alzahrani. 2022. Emotional Intelligence and Individual Visual Preferences: A Predictive Machine Learning Approach. International Journal of Human–Computer Interaction 39: 2392–400. [Google Scholar] [CrossRef]

- Altemeyer, Boris. 2019. Making the business case for AI in HR: Two case studies. Strategic HR Review 18: 66–70. [Google Scholar] [CrossRef]

- Berkovsky, Shlomo, Ronnie Taib, Irena Koprinska, Eileen Wang, Yucheng Zeng, Jingjie Li, and Sabina Kleitman. 2019. Detecting Personality Traits Using Eye-Tracking Data. Paper presented at the 2019 CHI Conference on Human Factors in Computing Systems, Scotland, UK, May 4–9; pp. 1–12. [Google Scholar] [CrossRef]

- Birkeland, Scott A., Todd M. Manson, Jennifer L. Kisamore, Michael T. Brannick, and Mark A. Smith. 2006. A meta-analytic investigation of job applicant faking on personality measures: Job applicant faking on personality measures. International Journal of Selection and Assessment 14: 317–35. [Google Scholar] [CrossRef]

- Bringsjord, Selmer. 2011. Psychometric artificial intelligence. Journal of Experimental & Theoretical Artificial Intelligence 23: 271–77. [Google Scholar] [CrossRef]

- Bringsjord, Selmer, and Bettina Schimanski. 2003. What is artificial intelligence? Psychometric AI as an answer. Paper presented at the 18th International Joint Conference on Artificial Intelligence IJCAI2003, San Francisco, CA, USA, August 9–15. [Google Scholar]

- Brownlee, Jason. 2020. A Gentle Introduction to Imbalanced Classification. Available online: https://machinelearningmastery.com/what-is-imbalanced-classification/ (accessed on 1 June 2023).

- Cao, Mengyang, and Fritz Drasgow. 2019. Does forcing reduce faking? A meta-analytic review of forced-choice personality measures in high-stakes situations. Journal of Applied Psychology 104: 1347–68. [Google Scholar] [CrossRef]

- Chen, Yen-Yu, Chien-Liang Liu, Tao-Hsing Chang, and Chia-Hoang Lee. 2010. An unsupervised automated essay scoring system. IEEE Intelligent Systems 25: 61–67. [Google Scholar] [CrossRef]

- Cuve, Helio Clemente, Jelka Stojanov, Xavier Roberts-Gaal, Caroline Catmur, and Geoffrey Bird. 2022. Validation of Gazepoint low-cost eye-tracking and psychophysiology bundle. Behavior Research Methods 54: 1027–49. [Google Scholar] [CrossRef]

- Dalmaso, Mario, Luigi Castelli, and Giovanni Galfano. 2020. Social modulators of gaze-mediated orienting of attention: A review. Psychonomic Bulletin & Review 27: 833–55. [Google Scholar] [CrossRef]

- Davis, Sarah K. 2018. Emotional intelligence and attentional bias for threat-related emotion under stress. Scandinavian Journal of Psychology 59: 328–39. [Google Scholar] [CrossRef]

- Eckstein, Maria K., Belen Guerra-Carrillo, Alison T. Miller Singley, and Silvia A. Bunge. 2017. Beyond eye gaze: What else can eyetracking reveal about cognition and cognitive development? Developmental Cognitive Neuroscience 25: 69–91. [Google Scholar] [CrossRef]

- Goldberg, Lewis R., John. A. Johnson, Herbert W. Eber, Robert Hogan, Michael C. Ashton, C. Robert Cloninger, and Harrison G. Gough. 2006. The international personality item pool and the future of public-domain personality measures. Journal of Research in Personality 40: 84–96. [Google Scholar] [CrossRef]

- Grobelny, Jaroslaw, Patrycja Radke, and Daria P. Maczka. 2021. Emotional intelligence and job performance: A meta-analysis. International Journal of Work Organisation and Emotion 12: 1. [Google Scholar] [CrossRef]

- Hoppe, Sabrina, Tobias Loetscher, Stephanie A. Morey, and Andreas Bulling. 2018. Eye movements during everyday behavior predict personality traits. Frontiers in Human Neuroscience 12: 105. [Google Scholar] [CrossRef] [PubMed]

- Huang, Yuchi, and Saad M. Khan. 2021. Advances in AI and Machine Learning for Education Research. In Computational Psychometrics: New Methodologies for a New Generation of Digital Learning and Assessment: With Examples in R and Python. Edited by Alina A. von Davier, Robert J. Mislevy and Jiangang Hao. Berlin and Heidelberg: Springer International Publishing, pp. 195–208. [Google Scholar] [CrossRef]

- Hussein, Mohamed Abdellatif, Hesham Hassan, and Mohammad Nassef. 2019. Automated language essay scoring systems: A literature review. PeerJ Computer Science 5: e208. [Google Scholar] [CrossRef] [PubMed]

- Joseph, Dana L., Jing Jin, Daniel A. Newman, and Ernest H. O’Boyle. 2015. Why does self-reported emotional intelligence predict job performance? A meta-analytic investigation of mixed EI. Journal of Applied Psychology 100: 298–342. [Google Scholar] [CrossRef] [PubMed]

- Kawakami, Kerry, Justin P. Friesen, and Xia Fang. 2022. Perceiving ingroup and outgroup faces within and across nations. British Journal of Psychology 113: 551–74. [Google Scholar] [CrossRef]

- Kerr, Robert, John Garvin, Norma Heaton, and Emily Boyle. 2006. Emotional intelligence and leadership effectiveness. Leadership & Organization Development Journal 27: 265–79. [Google Scholar] [CrossRef]

- Konovalova, Irma, Jastine V. Antolin, Helen Bolderston, and Nicola J. Gregory. 2021. Adults with higher social anxiety show avoidant gaze behaviour in a real-world social setting: A mobile eye tracking study. PLoS ONE 16: e0259007. [Google Scholar] [CrossRef]

- Lange, Wolf-Gero, Kathrin Heuer, Oliver Langner, Ger P. J. Keijsers, Eni S. Becker, and Mike Rinck. 2011. Face value: Eye movements and the evaluation of facial crowds in social anxiety. Journal of Behavior Therapy and Experimental Psychiatry 42: 355–63. [Google Scholar] [CrossRef]

- Laue, Cameron, Marcus Griffey, Ping-I. Lin, Kirk Wallace, Menno Van Der Schoot, Paul Horn, Ernest Pedapati, and Drew Barzman. 2018. Eye gaze patterns associated with aggressive tendencies in adolescence. Psychiatric Quarterly 89: 747–56. [Google Scholar] [CrossRef] [PubMed]

- Lea, Rosanna G., Pamela Qualter, Sarah K. Davis, Juan-Carlos Pérez-González, and Munirah Bangee. 2018. Trait emotional intelligence and attentional bias for positive emotion: An eye-tracking study. Personality and Individual Differences 128: 88–93. [Google Scholar] [CrossRef]

- Luna, Beatriz, Katerina Velanova, and Charles F. Geier. 2008. Development of eye-movement control. Brain and Cognition 68: 293–308. [Google Scholar] [CrossRef] [PubMed]

- MacCann, Carolyn, and Richard D. Roberts. 2008. New paradigms for assessing emotional intelligence: Theory and data. Emotion 8: 540–51. [Google Scholar] [CrossRef]

- MacCann, Carolyn, Dana L. Joseph, Daniel A. Newman, and Richard D. Roberts. 2014. Emotional intelligence is a second-stratum factor of intelligence: Evidence from hierarchical and bifactor models. Emotion 14: 358–74. [Google Scholar] [CrossRef]

- Madera, Juan M., and Michelle R. Hebl. 2012. Discrimination against facially stigmatized applicants in interviews: An eye-tracking and face-to-face investigation. Journal of Applied Psychology 97: 317–30. [Google Scholar] [CrossRef]

- Malouff, John M., Nicola S. Schutte, and Einar B. Thorsteinsson. 2014. Trait emotional intelligence and romantic relationship satisfaction: A meta-analysis. The American Journal of Family Therapy 42: 53–66. [Google Scholar] [CrossRef]

- McCarthy, John. 2007. What is Artificial Intelligence? Stanford: Stanford University. Available online: http://jmc.stanford.edu/articles/whatisai/whatisai.pdf (accessed on 23 June 2023).

- Miao, Chao, Ronald H. Humphrey, and Shanshan Qian. 2017. A meta-analysis of emotional intelligence and work attitudes. Journal of Occupational and Organizational Psychology 90: 177–202. [Google Scholar] [CrossRef]

- Mujtaba, Dena F., and Nihar R. Mahapatra. 2020. Artificial Intelligence in Computerized Adaptive Testing. Paper presented at the 2020 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, December 16–18. [Google Scholar]

- O’Connor, Peter J., Andrew Hill, Maria Kaya, and Brett Martin. 2019. The measurement of emotional intelligence: A critical review of the literature and recommendations for researchers and practitioners. Frontiers in Psychology 10: 1116. [Google Scholar] [CrossRef]

- Petrides, Konstantinos Vassilis. 2009. Psychometric Properties of the Trait Emotional Intelligence Questionnaire (TEIQue). Berlin and Heidelberg: Springer US, pp. 85–101. [Google Scholar] [CrossRef]

- Petrides, Konstantinos Vassilis, and Adrian Furnham. 2000. On the dimensional structure of emotional intelligence. Personality and Individual Differences 29: 313–20. [Google Scholar] [CrossRef]

- Petrides, Konstantinos Vassilis, Juan Carlos Pérez-González, and Adrian Furnham. 2007a. On the criterion and incremental validity of trait emotional intelligence. Cognition & Emotion 21: 26–55. [Google Scholar] [CrossRef]

- Petrides, Konstantinos Vassilis, Ria Pita, and Flora Kokkinaki. 2007b. The location of trait emotional intelligence in personality factor space. British Journal of Psychology 98: 273–89. [Google Scholar] [CrossRef] [PubMed]

- Potthoff, Jonas, and Anne Schienle. 2021. Effects of self-esteem on self-viewing: An eye-tracking investigation on mirror gazing. Behavioral Sciences 11: 164. [Google Scholar] [CrossRef] [PubMed]

- Rasmussen, Stig Hebbelstrup Rye, Steven G. Ludeke, and Robert Klemmensen. 2023. Using deep learning to predict ideology from facial photographs: Expressions, beauty, and extra-facial information. Scientific Reports 13: 5257. [Google Scholar] [CrossRef]

- Rauthmann, John F., Christian T. Seubert, Pierre Sachse, and Marco R. Furtner. 2012. Eyes as windows to the soul: Gazing behavior is related to personality. Journal of Research in Personality 46: 147–56. [Google Scholar] [CrossRef]

- Risko, Evan F., Nicola C. Anderson, Sophie Lanthier, and Alan Kingstone. 2012. Curious eyes: Individual differences in personality predict eye movement behavior in scene-viewing. Cognition 122: 86–90. [Google Scholar] [CrossRef]

- Roberts, Richard D., Moshe Zeidner, and Gerald Matthews. 2001. Does emotional intelligence meet traditional standards for an intelligence? Some new data and conclusions. Emotion 1: 196–231. [Google Scholar] [CrossRef]

- Salovey, Peter, and John D. Mayer. 1990. Emotional intelligence. Imagination, Cognition and Personality 9: 185–211. [Google Scholar] [CrossRef]

- Scherbaum, Charles A., Jennifer Sabet, Michael J. Kern, and Paul Agnello. 2013. Examining Faking on Personality Inventories Using Unfolding Item Response Theory Models. Journal of Personality Assessment 95: 207–16. [Google Scholar] [CrossRef]

- Shasteen, Jonathon R., Noah J. Sasson, and Amy E. Pinkham. 2014. Eye Tracking the Face in the Crowd Task: Why Are Angry Faces Found More Quickly? PLoS ONE 9: e93914. [Google Scholar] [CrossRef]

- Shishido, Emiko, Shiori Ogawa, Seiko Miyata, Maeri Yamamoto, Toshiya Inada, and Norio Ozaki. 2019. Application of eye trackers for understanding mental disorders: Cases for schizophrenia and autism spectrum disorder. Neuropsychopharmacology Reports 39: 72–77. [Google Scholar] [CrossRef] [PubMed]

- Singh, Navjot, and Amarjot Kaur. 2020. Role of Artificial Intelligence in Modeling Psychometrics to Detect Neurodevelopmental Disorders: Use of AI to Understand Human Behavioral Aspects. In Interdisciplinary Approaches to Altering Neurodevelopmental Disorders. Edited by Wadhera Tanu and Kakkar Deepti. Hershey: IGI Global, pp. 213–27. [Google Scholar] [CrossRef]

- SR Research Ltd. 2022. EyeLink® 1000 Plus User Manual, Version 1.0.2. Oakville: SR Research Ltd. [Google Scholar]

- Suslow, Thomas, Dennis Hoepfel, Vivien Günther, Anette Kersting, and Charlott Maria Bodenschatz. 2022. Positive attentional bias mediates the relationship between trait emotional intelligence and trait affect. Scientific Reports 12: 20733. [Google Scholar] [CrossRef] [PubMed]

- Tableau. n.d. What Is the History of Artificial Intelligence (AI)? Available online: https://www.tableau.com/data-insights/ai/history (accessed on 25 June 2023).

- Thielmann, Isabel, Benjamin E. Hilbig, Ingo Zettler, and Morten Moshagen. 2017. On measuring the sixth basic personality dimension: A comparison between hexaco honesty-humility and big six honesty-propriety. Assessment 24: 1024–36. [Google Scholar] [CrossRef]

- Tottenham, Nim, James W. Tanaka, Andrew C. Leon, Thomas McCarry, Marcella Nurse, Todd A. Hare, David J. Marcus, Alissa Westerlund, BJ Casey, and Charles Nelson. 2009. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research 168: 242–49. [Google Scholar] [CrossRef] [PubMed]

- Valtakari, Niilo V, Ignace T. C. Hooge, Charlotte Viktorsson, Par Nyström, Terje Falck-Ytter, and Roy S. Hessels. 2021. Eye tracking in human interaction: Possibilities and limitations. Behavior Research Methods 53: 1592–608. [Google Scholar] [CrossRef] [PubMed]

- Wang, Wei, Julie V. Dinh, Kisha S. Jones, Siddharth Upadhyay, and Jun Yang. 2022. Corporate Diversity Statements and Employees’ Online DEI Ratings: An Unsupervised Machine-Learning Text-Mining Analysis. Journal of Business and Psychology 38: 45–61. [Google Scholar] [CrossRef]

- Wisiecka, Katarzyna, Krzysztof Krejtz, Izabela Krejtz, Damian Sromek, Adam Cellary, Beata Lewandowska, and Andrew Duchowski. 2022. Comparison of Webcam and Remote Eye Tracking. Paper presented at the 2022 Symposium on Eye Tracking Research and Applications, Seattle, WA, USA, June 8–11.

- Wong, Chi-Sum, and Kenneth S. Law. 2002. The effects of leader and follower emotional intelligence on performance and attitude. The Leadership Quarterly 13: 243–74.

- Wu, Youyou, Michal Kosinski, and David Stillwell. 2015. Computer-based personality judgments are more accurate than those made by humans. Proceedings of the National Academy of Sciences of the United States of America 112: 1036–40.

- Yan, Wen-Jing, Qian-Nan Ruan, and Ke Jiang. 2022. Challenges for artificial intelligence in recognizing mental disorders. Diagnostics 13: 2.

- Zeidner, Moshe, Gerald Matthews, and Richard D. Roberts. 2012. The emotional intelligence, health, and well-being nexus: What have we learned and what have we missed? Applied Psychology: Health and Well-Being 4: 1–30.

| Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Wong and Law Emotional Intelligence Scale (WLEIS): Facets and Measure | | | | | | | | | | | | |
| 1. SEA | 5.39 | 1.00 | .72 | | | | | | | | | |
| 2. OEA | 5.49 | 0.93 | .57 ** | .66 | | | | | | | | |
| 3. UOE | 5.36 | 1.16 | .49 ** | .46 ** | .80 | | | | | | | |
| 4. ROE | 5.04 | 1.19 | .54 ** | .41 ** | .65 ** | .75 | | | | | | |
| 5. WLEIS Total | 5.32 | 0.86 | .80 ** | .73 ** | .83 ** | .83 ** | .88 | | | | | |
| Trait Emotional Intelligence Questionnaire (TEIQue-SF): Facets and Measure | | | | | | | | | | | | |
| 6. Well-being | 5.33 | 1.07 | .44 ** | .30 ** | .66 ** | .46 ** | .59 ** | .81 | | | | |
| 7. Self-control | 4.49 | 1.04 | .48 ** | .22 ** | .50 ** | .65 ** | .59 ** | .51 ** | .70 | | | |
| 8. Emotionality | 5.01 | 0.89 | .48 ** | .39 ** | .36 ** | .45 ** | .52 ** | .46 ** | .46 ** | .61 | | |
| 9. Sociability | 4.58 | 1.03 | .32 ** | .26 ** | .44 ** | .33 ** | .43 ** | .53 ** | .35 ** | .43 ** | .70 | |
| 10. TEIQue-SF Total | 4.89 | 0.78 | .56 ** | .39 ** | .67 ** | .61 ** | .70 ** | .81 ** | .74 ** | .76 ** | .73 ** | .89 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, W.; Kofler, L.; Lindgren, C.; Lobel, M.; Murphy, A.; Tong, Q.; Pickering, K. AI for Psychometrics: Validating Machine Learning Models in Measuring Emotional Intelligence with Eye-Tracking Techniques. J. Intell. 2023, 11, 170. https://doi.org/10.3390/jintelligence11090170
