Does Using None-of-the-Above (NOTA) Hurt Students’ Confidence?

Abstract

1. Introduction

1.1. Multiple-Choice Testing: The Pros and Cons of Using NOTA

1.2. Metacognition

1.3. The Present Experiments

2. Experiment 1a

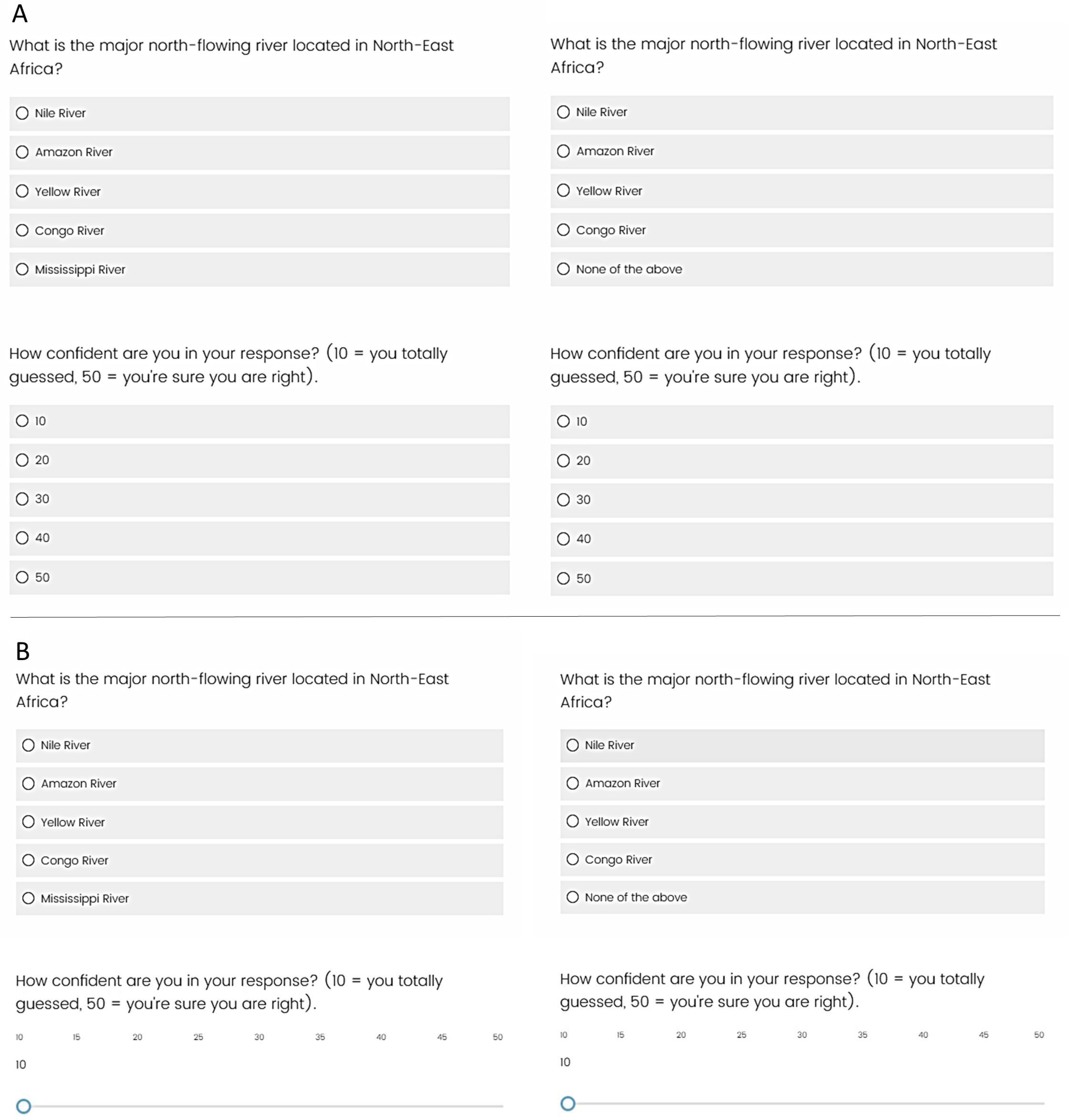

2.1. Method

2.1.1. Participants

2.1.2. Design

2.1.3. Materials

2.1.4. Procedure

2.2. Results

2.3. Discussion

3. Experiment 1b

3.1. Method

3.1.1. Participants

3.1.2. Design, Materials, and Procedure

3.2. Results

3.3. Discussion

4. Experiment 2a

4.1. Method

4.1.1. Participants

4.1.2. Design

4.1.3. Materials

4.1.4. Procedure

4.2. Results

4.3. Discussion

5. Experiment 2b

5.1. Method

5.1.1. Participants

5.1.2. Design, Materials, and Procedure

5.2. Results

5.3. Discussion

6. General Discussion

6.1. Metacognitive Beliefs about NOTA

6.2. Performance and the Implications of Using NOTA as a Desirable Difficulty

6.3. Additional Limitations and Future Directions

6.4. Applications and Conclusion

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

1. Because Blendermann et al. were interested in comparing NOTA (answer correct) to NOTA (answer incorrect) questions, their design included equal numbers of these two item types. Although this was a valid design decision for their purposes, having equal numbers of questions for which NOTA is and is not correct is not educationally realistic because NOTA-correct answers are overrepresented; this design feature may be one reason that participants avoided choosing NOTA (Odegard and Koen 2007 made a similar design decision).

2. Four participants failed to provide aggregate ratings.

References

- Ackerman, Rakefet. 2019. Heuristic cues for meta-reasoning judgments: Review and methodology. Psihologijske Teme 28: 219801.

- Ackerman, Rakefet, and Hagar Zalmanov. 2012. The persistence of the fluency–confidence association in problem solving. Psychonomic Bulletin & Review 19: 1187–92.

- Begg, Ian, Susanna Duft, Paul Lalonde, Richard Melnick, and Josephine Sanvito. 1989. Memory predictions are based on ease of processing. Journal of Memory and Language 28: 610–32.

- Benjamin, Aaron S., Robert A. Bjork, and Bennett L. Schwartz. 1998. The mismeasure of memory: When retrieval fluency is misleading as a metamnemonic index. Journal of Experimental Psychology: General 127: 55–68.

- Bjork, Elizabeth L., and Robert A. Bjork. 2011. Making things hard on yourself, but in a good way: Creating desirable difficulties to enhance learning. In Psychology and the Real World: Essays Illustrating Fundamental Contributions to Society. New York: Worth Publishing, vol. 2.

- Bjork, Elizabeth Ligon, Nicholas C. Soderstrom, and Jeri L. Little. 2015. Can multiple-choice testing induce desirable difficulties? Evidence from the laboratory and the classroom. The American Journal of Psychology 128: 229–39.

- Bjork, Robert A., and Elizabeth L. Bjork. 2020. Desirable difficulties in theory and practice. Journal of Applied Research in Memory and Cognition 9: 475–79.

- Blendermann, Mary F., Jeri L. Little, and Kayla M. Gray. 2020. How “none of the above” (NOTA) affects the accessibility of tested and related information in multiple-choice questions. Memory 28: 473–80.

- Butler, Andrew C. 2018. Multiple-choice testing in education: Are the best practices for assessment also good for learning? Journal of Applied Research in Memory and Cognition 7: 323–31.

- DiBattista, David, Jo-Anne Sinnige-Egger, and Glenda Fortuna. 2014. The “none of the above” option in multiple-choice testing: An experimental study. The Journal of Experimental Education 82: 168–83.

- Dunlosky, John, and Sarah K. Tauber. 2014. Understanding people’s metacognitive judgments: An isomechanism framework and its implications for applied and theoretical research. In Handbook of Applied Memory. Edited by Timothy T. Perfect and D. Stephen Lindsay. Thousand Oaks: Sage, pp. 444–64.

- Dunlosky, John, and Thomas O. Nelson. 1992. Importance of the kind of cue for judgments of learning (JOL) and the delayed-JOL effect. Memory & Cognition 20: 374–80.

- Frary, Robert B. 1991. The none-of-the-above option: An empirical study. Applied Measurement in Education 4: 115–24.

- Haladyna, Thomas M., Steven M. Downing, and Michael C. Rodriguez. 2002. A review of multiple-choice item-writing guidelines for classroom assessment. Applied Measurement in Education 15: 309–33.

- Hanson, Whitney. 2015. Test Bank for Cognition: Exploring the Science of the Mind, 6th ed. New York: W.W. Norton & Co.

- Hertwig, Ralph, Stefan M. Herzog, Lael J. Schooler, and Torsten Reimer. 2008. Fluency heuristic: A model of how the mind exploits a by-product of information retrieval. Journal of Experimental Psychology: Learning, Memory, and Cognition 34: 1191–206.

- Koriat, Asher. 1997. Monitoring one’s own knowledge during study: A cue-utilization approach to judgments of learning. Journal of Experimental Psychology: General 126: 349–70.

- Koriat, Asher. 2008a. Subjective confidence in one’s answers: The consensuality principle. Journal of Experimental Psychology: Learning, Memory, and Cognition 34: 945–59.

- Koriat, Asher. 2008b. Easy comes, easy goes? The link between learning and remembering and its exploitation in metacognition. Memory & Cognition 36: 416–28.

- Koriat, Asher. 2019. Confidence judgments: The monitoring of object-level and same-level performance. Metacognition and Learning 14: 463–78.

- Koriat, Asher, Ravit Nussinson, Herbert Bless, and Nira Shaked. 2008. Information-based and experience-based metacognitive judgments: Evidence from subjective confidence. In Handbook of Metamemory and Memory. London: Psychology Press, pp. 117–35.

- Koriat, Asher, Robert A. Bjork, Limor Sheffer, and Sarah K. Bar. 2004. Predicting one’s own forgetting: The role of experience-based and theory-based processes. Journal of Experimental Psychology: General 133: 643–56.

- Koriat, Asher, Sarah Lichtenstein, and Baruch Fischhoff. 1980. Reasons for confidence. Journal of Experimental Psychology: Human Learning and Memory 6: 107–18.

- Kornell, Nate, and Robert A. Bjork. 2008. Learning concepts and categories: Is spacing the “enemy of induction”? Psychological Science 19: 585–92.

- Little, Jeri L. 2018. The role of multiple-choice tests in increasing access to difficult-to-retrieve information. Journal of Cognitive Psychology 30: 520–31.

- Little, Jeri L., and Elizabeth Ligon Bjork. 2015. Optimizing multiple-choice tests as tools for learning. Memory & Cognition 43: 14–26.

- Little, Jeri L., Elise A. Frickey, and Alexandra K. Fung. 2019. The role of retrieval in answering multiple-choice questions. Journal of Experimental Psychology: Learning, Memory, and Cognition 45: 1473–85.

- Little, Jeri L., Elizabeth Ligon Bjork, Robert A. Bjork, and Genna Angello. 2012. Multiple-choice tests exonerated, at least of some charges: Fostering test-induced learning and avoiding test-induced forgetting. Psychological Science 23: 1337–44.

- Marsh, Elizabeth J., Henry L. Roediger, Robert A. Bjork, and Elizabeth L. Bjork. 2007. The memorial consequences of multiple-choice testing. Psychonomic Bulletin & Review 14: 194–99.

- Mazzoni, Giuliana, and Thomas O. Nelson. 1995. Judgments of learning are affected by the kind of encoding in ways that cannot be attributed to the level of recall. Journal of Experimental Psychology: Learning, Memory, and Cognition 21: 1263–74.

- Nelson, Thomas O. 1984. A comparison of current measures of the accuracy of feeling-of-knowing predictions. Psychological Bulletin 95: 109–33.

- Nelson, Thomas O., and John Dunlosky. 1991. When people’s judgments of learning (JOLs) are extremely accurate at predicting subsequent recall: The “delayed-JOL effect”. Psychological Science 2: 267–71.

- Odegard, Timothy N., and Joshua D. Koen. 2007. “None of the above” as a correct and incorrect alternative on a multiple-choice test: Implications for the testing effect. Memory 15: 873–85.

- Rhodes, Matthew G., and Alan D. Castel. 2008. Memory predictions are influenced by perceptual information: Evidence for metacognitive illusions. Journal of Experimental Psychology: General 137: 615–25.

- Roediger, Henry L., III, and Jeffrey D. Karpicke. 2006. Test-enhanced learning: Taking memory tests improves long-term retention. Psychological Science 17: 249–55.

- Serra, Michael J., and Lindzi L. Shanks. 2023. Methodological factors lead participants to overutilize domain familiarity as a cue for judgments of learning. Journal of Intelligence 11: 142.

- Shaw, Raymond J., and Fergus I. M. Craik. 1989. Age differences in predictions and performance on a cued recall task. Psychology and Aging 4: 131–35.

- Sniezek, Janet A., and Timothy Buckley. 1991. Confidence depends on level of aggregation. Journal of Behavioral Decision Making 4: 263–72.

- Tullis, Jonathan G., Jason R. Finley, and Aaron S. Benjamin. 2013. Metacognition of the testing effect: Guiding learners to predict the benefits of retrieval. Memory & Cognition 41: 429–42.

- Wang, Xun, Luyao Chen, Xinyue Liu, Cai Wang, Zhenxin Zhang, and Qun Ye. 2023. The screen inferiority depends on test format in reasoning and meta-reasoning tasks. Frontiers in Psychology 14: 1067577.

| Experiment | Performance (NOTA) | Performance (Basic) | Item-by-Item Confidence (NOTA) | Item-by-Item Confidence (Basic) | Aggregate Confidence (NOTA) | Aggregate Confidence (Basic) |
|---|---|---|---|---|---|---|
| Exp. 1a | 53% (3%) | 56% (3%) | 27.9 (1.1) | 27.8 (1.1) | 26.5 (1.5) | 31.7 (1.7) |
| Exp. 1b | 64% (3%) | 68% (3%) | 32.0 (1.1) | 33.0 (1.0) | 28.7 (1.3) | 33.6 (1.3) |
| Exp. 2a | 68% (4%) | 68% (4%) | 34.9 (1.3) | 34.3 (1.2) | 26.7 (1.6) | 36.6 (1.5) |
| Exp. 2b | 66% (4%) | 66% (4%) | 33.3 (1.0) | 33.2 (1.0) | 26.9 (1.4) | 33.4 (1.6) |

| Experiment | Performance | Item-by-Item Confidence |
|---|---|---|
| Exp. 1a | 48% (5%) | 29.5 (1.6) |
| Exp. 1b | 59% (4%) | 35.7 (1.3) |
| Exp. 2a | 22% (7%) | 37.8 (1.8) |
| Exp. 2b | 7% (4%) | 31.2 (1.9) |


© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Little, J.L. Does Using None-of-the-Above (NOTA) Hurt Students’ Confidence? J. Intell. 2023, 11, 157. https://doi.org/10.3390/jintelligence11080157
