The Impact of Prompts and Feedback on the Performance during Multi-Session Self-Regulated Learning in the Hypermedia Environment

Abstract

1. Introduction

2. Experiment 1: The Impact of Prompts and Feedback within Hypermedia Environments on the Performance of Self-Regulated Learning

2.1. Experimental Purpose

2.2. Experimental Method

2.2.1. Participants

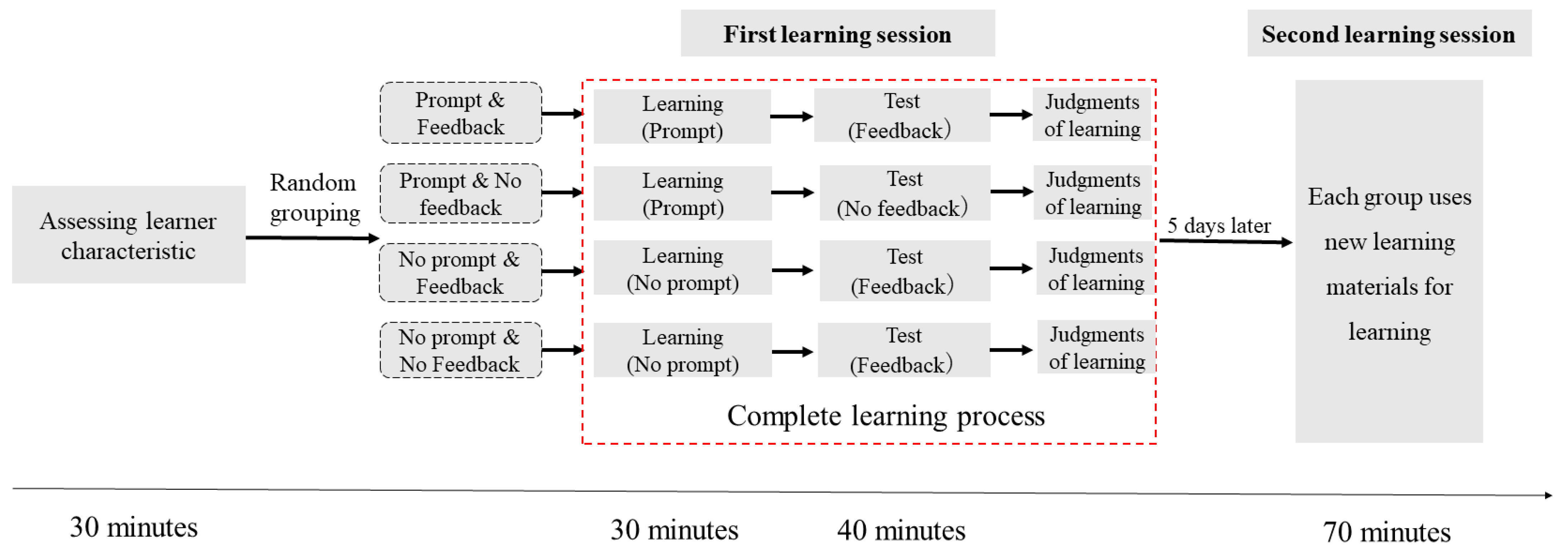

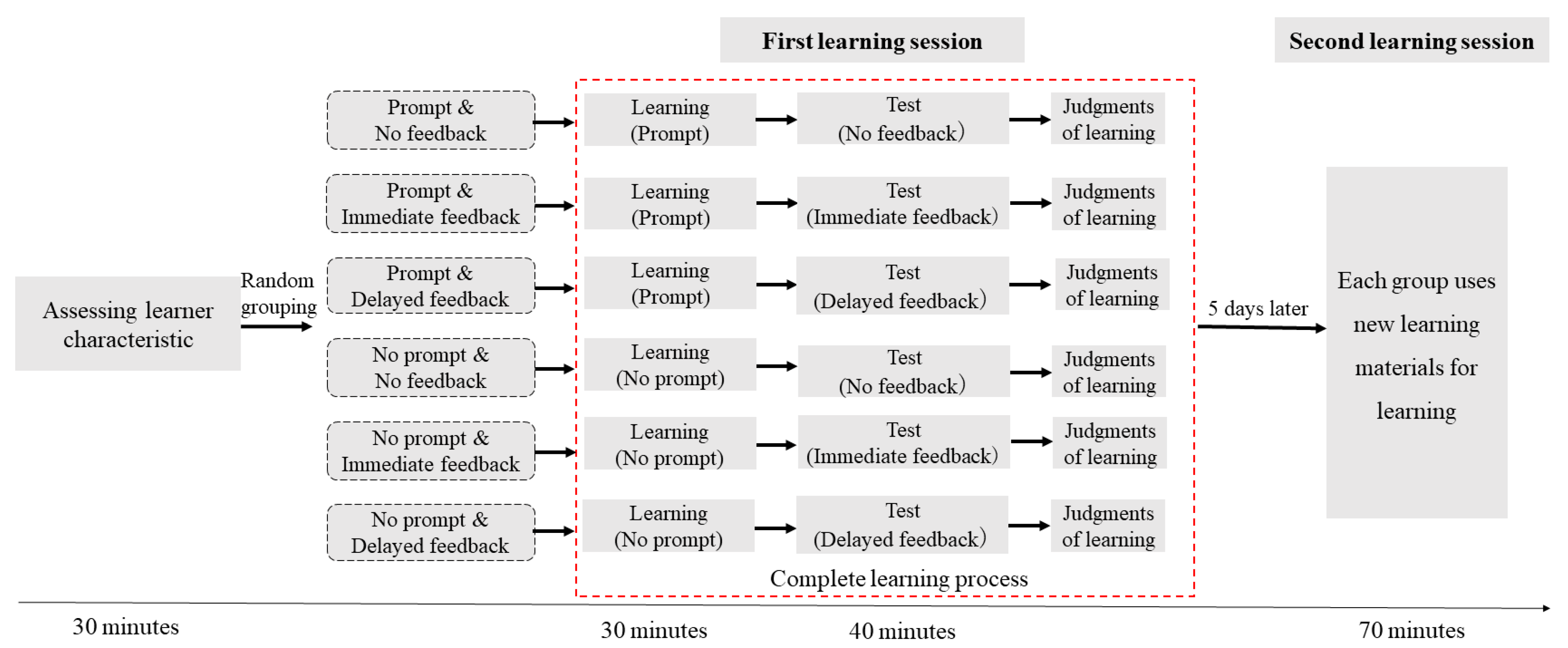

2.2.2. Experiment Design

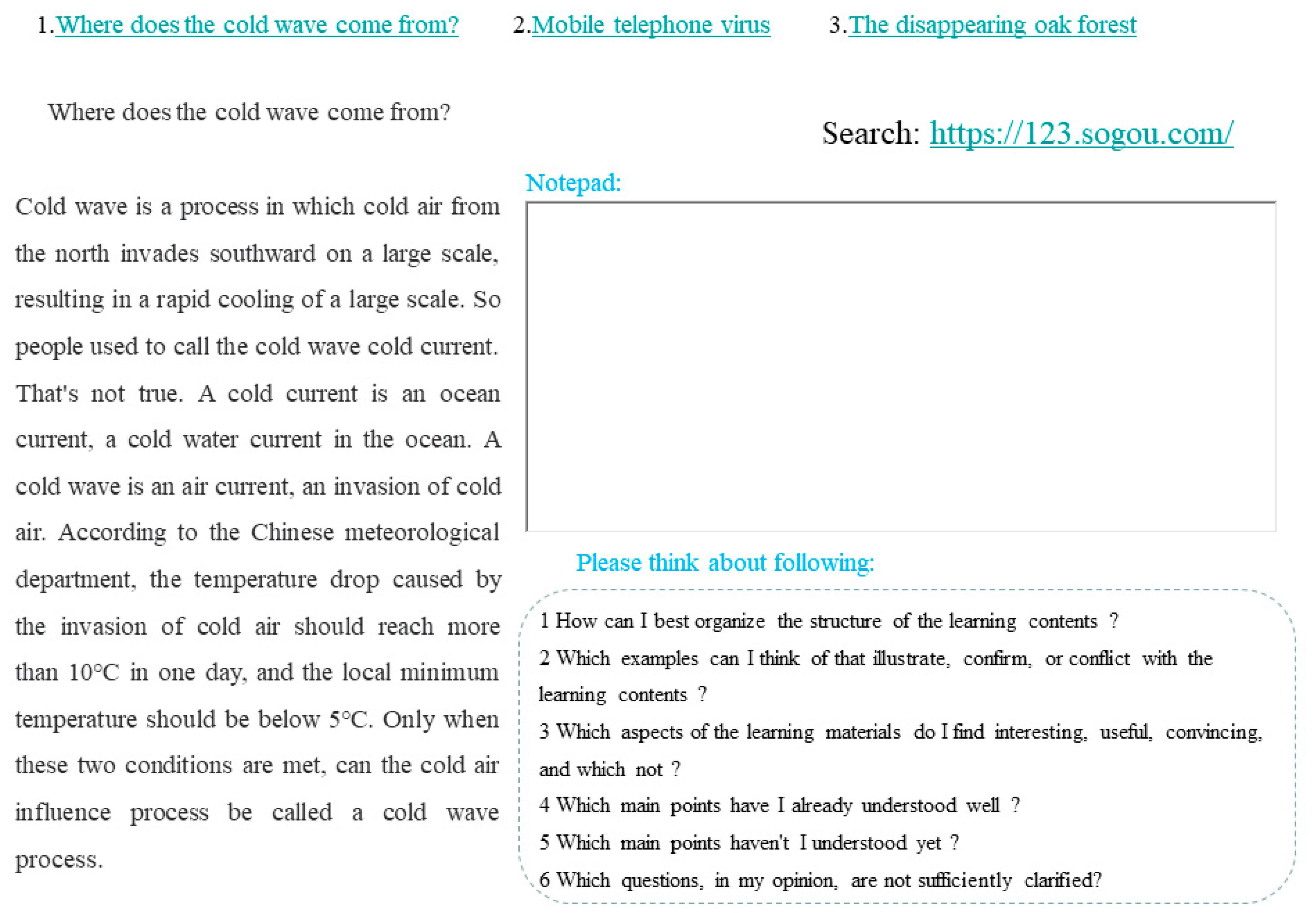

2.2.3. Learning Materials and Environments

2.2.4. Prompt Setting

2.2.5. Feedback Setting

2.2.6. Learner Characteristics Assessment

2.2.7. Learning Performance Assessment

2.2.8. Absolute Accuracy of Meta-Cognitive Monitoring

2.2.9. Experiment Procedure

2.3. Results

2.3.1. Comparison of Controlled Variables between Groups

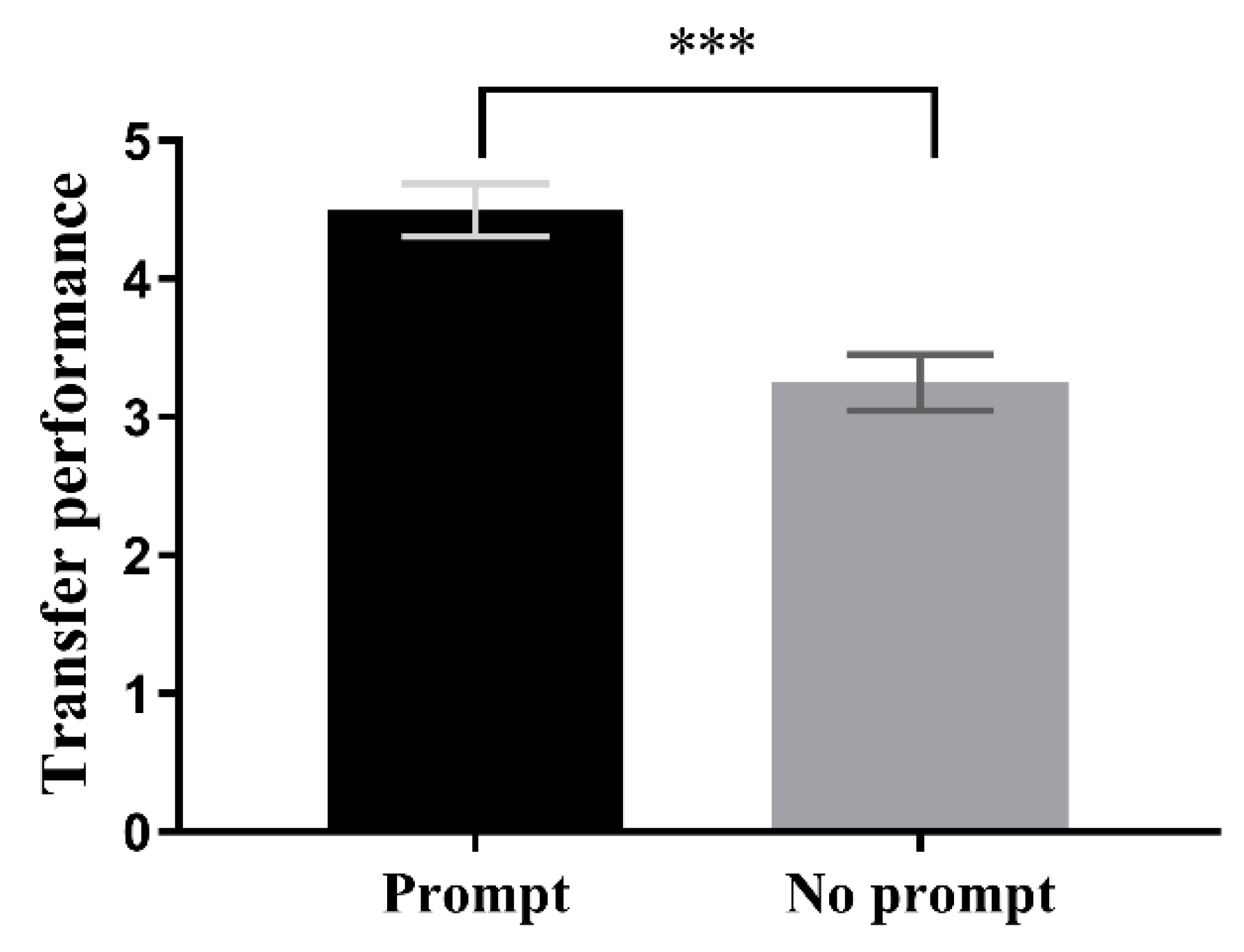

2.3.2. Three-Factor ANOVA of Different Learning Metrics

2.3.3. Three-Factor ANOVA of Predicted Actual (PA)

2.4. Discussion

3. Experiment 2: The Impact of Prompts and Different Types of Feedback on Self-Regulated Learning Outcomes in a Hypermedia Environment

3.1. Experiment Purpose

3.2. Experiment Method

3.2.1. Participants

3.2.2. Experiment Design

3.2.3. Learning Materials and Environments

3.2.4. Prompt Setting

3.2.5. Feedback Setting

3.2.6. Learner Characteristics Assessment

3.2.7. Learning Performance Assessment

3.2.8. Absolute Accuracy of Meta-Cognitive Monitoring

3.2.9. Experimental Procedure

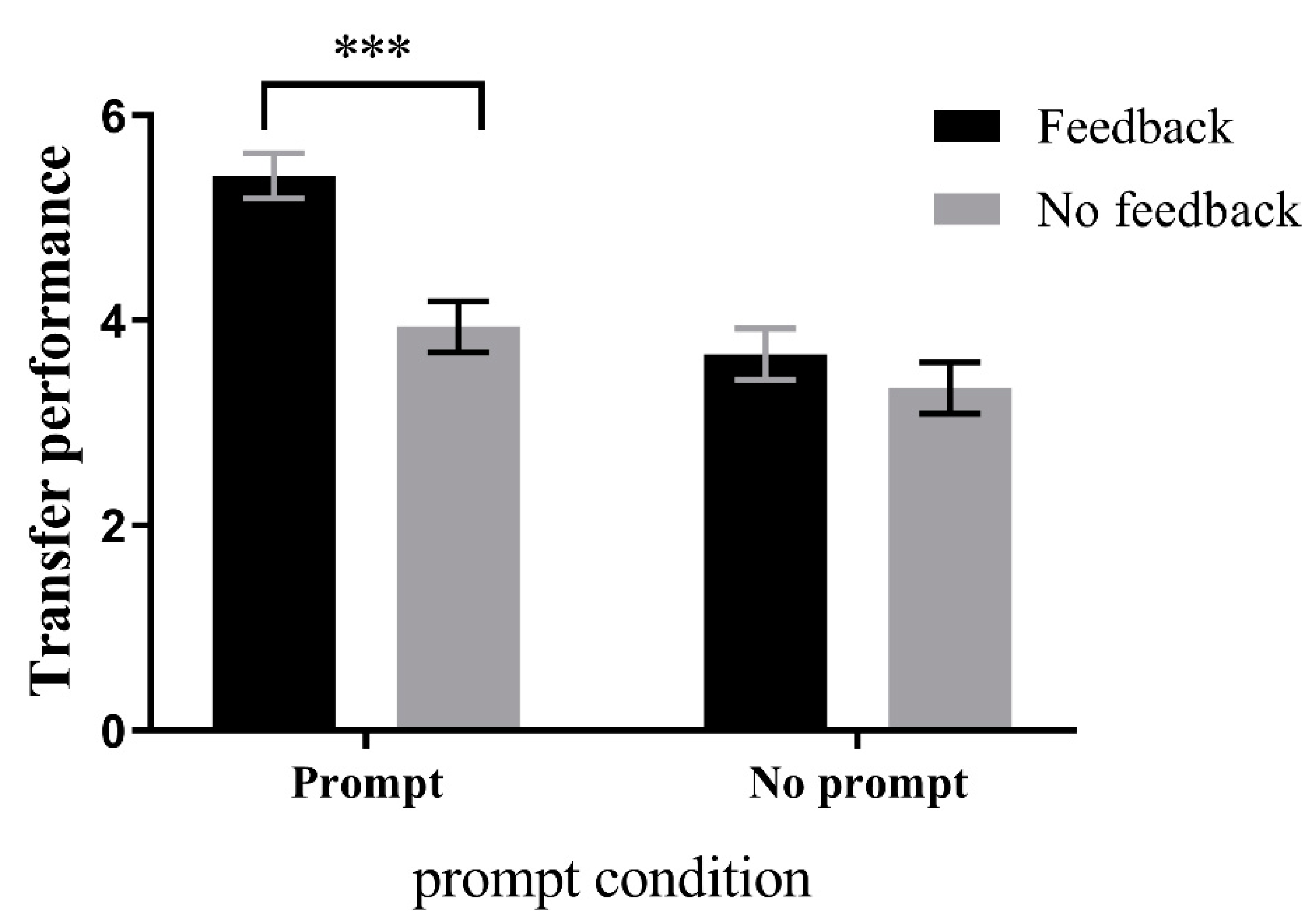

3.3. Results

3.3.1. Comparison of Controlled Variables between Groups

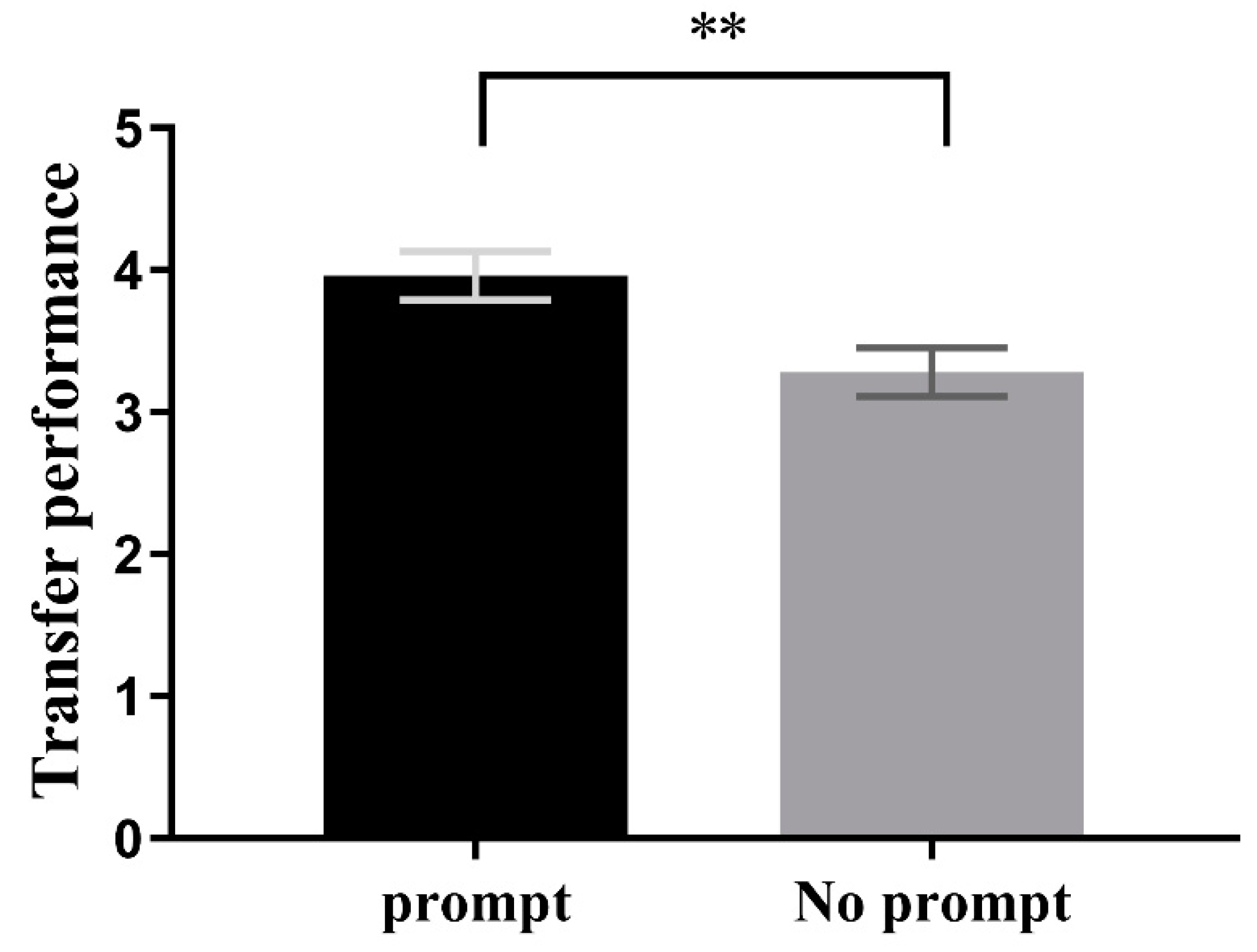

3.3.2. Three-Factor ANOVA of Different Learning Metrics

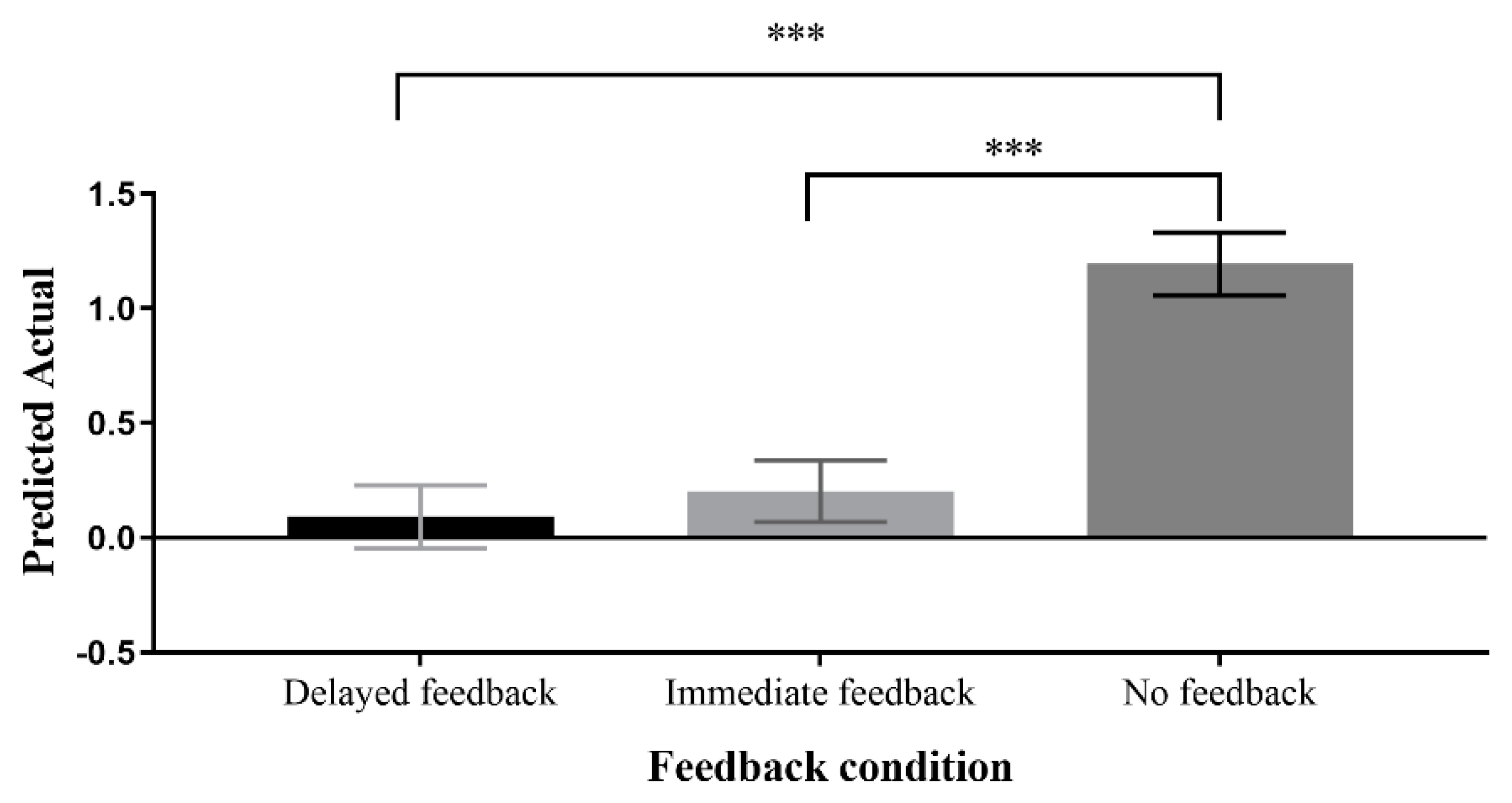

3.3.3. Three-Factor ANOVA of PA

3.4. Discussion

4. General Discussion

4.1. Prompts and Feedback Can Continuously Improve Self-Regulated Learning Performance in Multi-Session Learning

4.2. Prompts and Delayed Feedback Can Consistently Enhance Self-Regulated Learning Performance in Multi-Session Learning

4.3. Limitations and Prospects

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| | Prompt and Feedback | Prompt and No Feedback | No Prompt and Feedback | No Prompt and No Feedback | p |
|---|---|---|---|---|---|
| Male | 9 (41%) | 9 (50%) | 7 (39%) | 8 (44%) | 0.912 a |
| Age | 16.09 (0.43) | 16.22 (0.43) | 16.06 (0.42) | 16.17 (0.8) | 0.616 b |
| Learning strategies | 196.18 (5.80) | 199.33 (6.51) | 197.50 (10.18) | 196.00 (10.53) | 0.626 b |
| Motivation for learning | 73.50 (2.54) | 75.83 (3.05) | 75.44 (2.99) | 74.50 (3.87) | 0.091 b |
| Prior knowledge (Session 1) | 0.68 (1.29) | 0.33 (0.97) | 0.33 (0.97) | 0.33 (0.97) | 0.649 b |
| Prior knowledge (Session 2) | 1.64 (2.36) | 0.44 (1.29) | 1.11 (1.84) | 0.67 (1.86) | 0.186 b |

| | Session 1: Prompt, Feedback (n = 22) | Session 1: Prompt, No Feedback (n = 18) | Session 1: No Prompt, Feedback (n = 18) | Session 1: No Prompt, No Feedback (n = 18) | Session 2: Prompt, Feedback (n = 22) | Session 2: Prompt, No Feedback (n = 18) | Session 2: No Prompt, Feedback (n = 18) | Session 2: No Prompt, No Feedback (n = 18) |
|---|---|---|---|---|---|---|---|---|
| Recall | 2.41 (1.17) | 2.17 (0.84) | 2.17 (0.77) | 2.44 (0.51) | 2.41 (1.00) | 2.19 (0.77) | 2.39 (0.83) | 2.44 (0.48) |
| Comprehension | 2.55 (1.53) | 2.67 (1.94) | 2.00 (0.97) | 2.33 (1.03) | 2.55 (1.26) | 2.78 (1.56) | 2.28 (0.83) | 2.22 (0.94) |
| Transfer | 4.77 (1.50) | 4.22 (1.33) | 3.39 (1.02) | 3.11 (0.76) | 5.41 (1.34) | 3.94 (1.01) | 3.67 (0.77) | 3.34 (0.90) |
| JOL | 11.18 (3.35) | 10.56 (3.15) | 7.22 (2.21) | 8.81 (2.32) | 10.34 (2.74) | 10.17 (1.95) | 8.33 (1.56) | 9.53 (2.25) |
| PA | 0.23 (0.46) | 1.50 (1.85) | 0.42 (1.27) | 0.33 (1.29) | −0.11 (0.49) | 1.36 (0.76) | 0.22 (0.81) | 1.13 (0.94) |

| | Prompt | Feedback | Learning Session | Prompt × Feedback | Prompt × Learning Session | Feedback × Learning Session | Prompt × Feedback × Learning Session |
|---|---|---|---|---|---|---|---|
| Recall | n.s. | n.s. | n.s. | F(1,72) = 1.182, p = 0.281, η² = 0.16 | n.s. | n.s. | n.s. |
| Comprehension | F(1,72) = 2.396, p = 0.126, η² = 0.03 | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. |
| Transfer | F(1,72) = 22.617, p < 0.001, η² = 0.24 | F(1,72) = 6.623, p < 0.05, η² = 0.08 | F(1,72) = 15.56, p < 0.001, η² = 0.18 | F(1,72) = 1.916, p = 0.171, η² = 0.03 | n.s. | F(1,72) = 19.37, p < 0.001, η² = 0.21 | F(1,72) = 15.56, p < 0.001, η² = 0.18 |
| PA | F(1,72) = 1.719, p = 0.194, η² = 0.02 | F(1,72) = 29.078, p < 0.001, η² = 0.29 | n.s. | F(1,72) = 8.687, p < 0.01, η² = 0.10 | F(1,72) = 2.272, p = 0.136, η² = 0.03 | F(1,72) = 2.761, p = 0.101, η² = 0.04 | F(1,72) = 1.211, p = 0.275, η² = 0.017 |
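As a rough cross-check on the effect sizes in the table above, the sketch below recovers partial eta squared from an F statistic and its degrees of freedom using the standard identity η²p = (F × df1) / (F × df1 + df2). It assumes that the reported η2 values are partial eta squared, which the table itself does not state; the function name `partial_eta_squared` is illustrative and not taken from the article.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    # Identity: eta_p^2 = (F * df_effect) / (F * df_effect + df_error)
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error, reported eta squared), copied from the Transfer row above.
# Treating the reported values as partial eta squared is an assumption.
transfer_effects = {
    "Prompt": (22.617, 1, 72, 0.24),
    "Feedback": (6.623, 1, 72, 0.08),
    "Learning Session": (15.56, 1, 72, 0.18),
}

for effect, (f_value, df_effect, df_error, reported) in transfer_effects.items():
    computed = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{effect}: computed {computed:.3f}, reported {reported:.2f}")
```

For these three main effects the recomputed values round to the reported ones, which is consistent with the partial eta squared reading. The same function can be applied to any other cell of the table.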

| | Prompt, Delayed Feedback | Prompt, Immediate Feedback | Prompt, No Feedback | No Prompt, Delayed Feedback | No Prompt, Immediate Feedback | No Prompt, No Feedback | p |
|---|---|---|---|---|---|---|---|
| Male | 7 (40%) | 8 (50%) | 6 (40%) | 6 (41%) | 8 (38%) | 8 (38%) | 0.950 a |
| Age | 16.07 (0.46) | 16.25 (0.45) | 16.07 (0.46) | 16.19 (0.40) | 16.13 (0.34) | 16.19 (0.40) | 0.790 b |
| Learning strategies | 183.67 (4.86) | 185.81 (6.10) | 181.93 (3.15) | 183.50 (4.52) | 184.31 (4.95) | 184.56 (5.01) | 0.371 b |
| Motivation for learning | 69.00 (1.77) | 70.19 (2.17) | 69.53 (3.00) | 68.75 (1.44) | 69.19 (1.64) | 70.25 (2.77) | 0.282 b |
| Prior knowledge (Session 1) | 1.4 (1.55) | 1.31 (1.54) | 0.80 (1.37) | 2.06 (1.44) | 1.69 (1.54) | 1.31 (1.54) | 0.299 b |
| Prior knowledge (Session 2) | 1.07 (1.83) | 0.00 (0.00) | 0.80 (1.66) | 1.50 (2.00) | 0.75 (1.61) | 0.50 (1.37) | 0.143 b |

| | Session 1: Prompt, Delayed Feedback (n = 15) | Session 1: Prompt, Immediate Feedback (n = 16) | Session 1: Prompt, No Feedback (n = 15) | Session 1: No Prompt, Delayed Feedback (n = 16) | Session 1: No Prompt, Immediate Feedback (n = 16) | Session 1: No Prompt, No Feedback (n = 16) | Session 2: Prompt, Delayed Feedback (n = 15) | Session 2: Prompt, Immediate Feedback (n = 16) | Session 2: Prompt, No Feedback (n = 15) | Session 2: No Prompt, Delayed Feedback (n = 16) | Session 2: No Prompt, Immediate Feedback (n = 16) | Session 2: No Prompt, No Feedback (n = 16) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Recall | 2.20 (1.16) | 2.28 (1.06) | 1.90 (0.83) | 2.13 (1.15) | 1.84 (0.87) | 1.69 (0.68) | 2.33 (0.92) | 2.46 (0.88) | 2.27 (0.82) | 2.19 (1.03) | 1.88 (0.83) | 1.81 (0.75) |
| Comprehension | 1.60 (1.12) | 2.13 (1.36) | 2.13 (0.92) | 1.75 (0.68) | 2.13 (0.89) | 1.50 (0.89) | 1.73 (0.70) | 1.88 (0.50) | 2.00 (0.76) | 2.13 (0.89) | 2.13 (1.15) | 1.88 (0.89) |
| Transfer | 3.93 (1.08) | 4.16 (1.15) | 3.80 (1.46) | 3.47 (1.06) | 3.19 (1.22) | 3.19 (0.98) | 5.53 (0.83) | 4.59 (0.88) | 3.40 (0.83) | 3.81 (0.83) | 3.25 (0.77) | 3.00 (0.82) |
| JOL | 9.23 (2.48) | 9.13 (2.82) | 9.73 (2.64) | 7.94 (3.29) | 7.44 (3.31) | 7.44 (2.37) | 10.30 (1.54) | 9.84 (2.28) | 9.33 (1.80) | 7.91 (1.65) | 7.50 (1.83) | 7.88 (1.87) |
| PA | 0.43 (0.53) | 0.22 (0.41) | 1.57 (0.82) | 0.28 (1.40) | 0.31 (1.67) | 0.78 (1.37) | −0.07 (0.42) | 0.16 (0.57) | 1.37 (1.30) | −0.28 (0.77) | 0.13 (1.15) | 1.06 (1.09) |

| | Prompt | Feedback | Learning Session | Prompt × Feedback | Prompt × Learning Session | Feedback × Learning Session | Prompt × Feedback × Learning Session |
|---|---|---|---|---|---|---|---|
| Recall | F(1,88) = 3.024, p = 0.086, η² = 0.03 | n.s. | F(1,88) = 8.260, p < 0.05, η² = 0.09 | n.s. | F(1,88) = 2.210, p = 0.141, η² = 0.02 | n.s. | n.s. |
| Comprehension | n.s. | F(1,88) = 1.179, p = 0.313, η² = 0.03 | n.s. | F(1,88) = 1.879, p = 0.160, η² = 0.04 | F(1,88) = 1.766, p = 0.187, η² = 0.02 | n.s. | n.s. |
| Transfer | F(1,88) = 25.441, p < 0.001, η² = 0.22 | F(1,88) = 7.032, p < 0.01, η² = 0.14 | F(1,88) = 9.158, p < 0.01, η² = 0.09 | F(1,88) = 1.286, p = 0.281, η² = 0.03 | F(1,88) = 5.350, p < 0.05, η² = 0.06 | F(1,88) = 12.720, p < 0.001, η² = 0.22 | F(1,88) = 4.312, p < 0.05, η² = 0.09 |
| PA | F(1,88) = 2.194, p = 0.142, η² = 0.02 | F(1,88) = 19.839, p < 0.001, η² = 0.31 | F(1,88) = 1.905, p = 0.171, η² = 0.02 | F(1,88) = 1.152, p = 0.321, η² = 0.03 | n.s. | F(1,88) = 1.293, p = 0.280, η² = 0.03 | n.s. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Zhang, H.; Wang, J.; Ma, X. The Impact of Prompts and Feedback on the Performance during Multi-Session Self-Regulated Learning in the Hypermedia Environment. J. Intell. 2023, 11, 131. https://doi.org/10.3390/jintelligence11070131