Do Judgments of Learning Impair Recall When Uninformative Cues Are Salient?

Abstract

1. Introduction

2. JOL Reactivity Research

3. Cue-Strengthening Hypothesis

4. Cue-Processing Account of Reactivity

5. Current Study

6. EXPERIMENT 1

6.1. Method

Participants

6.2. Materials and Procedure

6.3. Results and Discussion

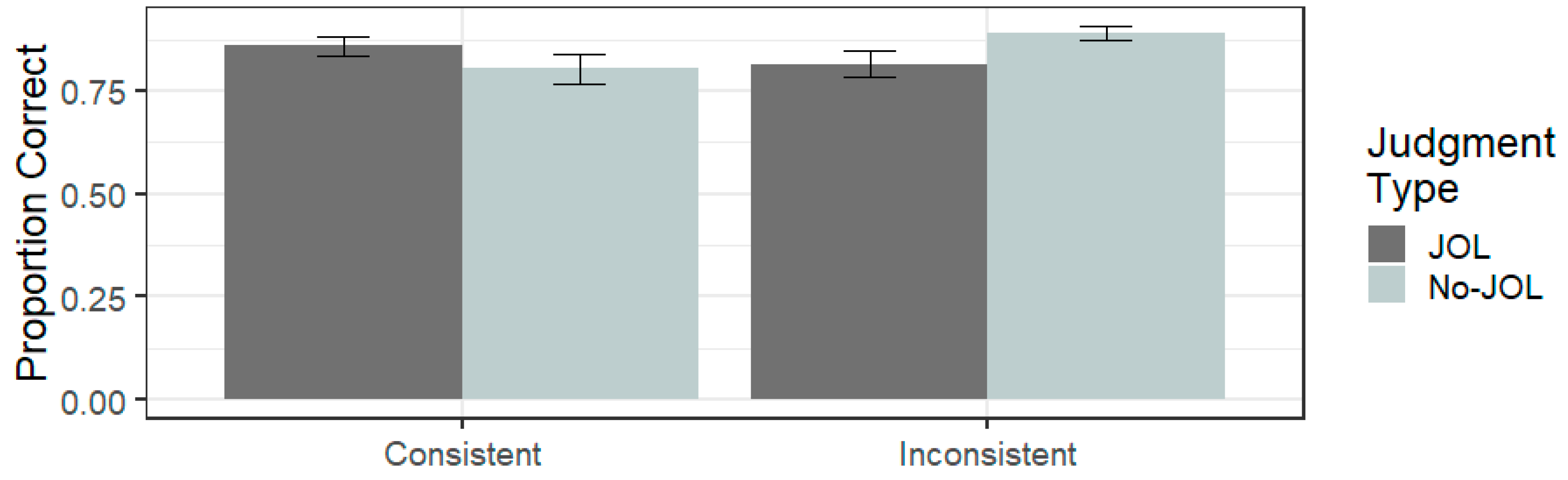

6.3.1. Judgement Type and Font Consistency

6.3.2. Font Size Effects on Recall and JOLs

7. EXPERIMENT 2

7.1. Method

Participants

7.2. Materials and Procedure

7.3. Results and Discussion

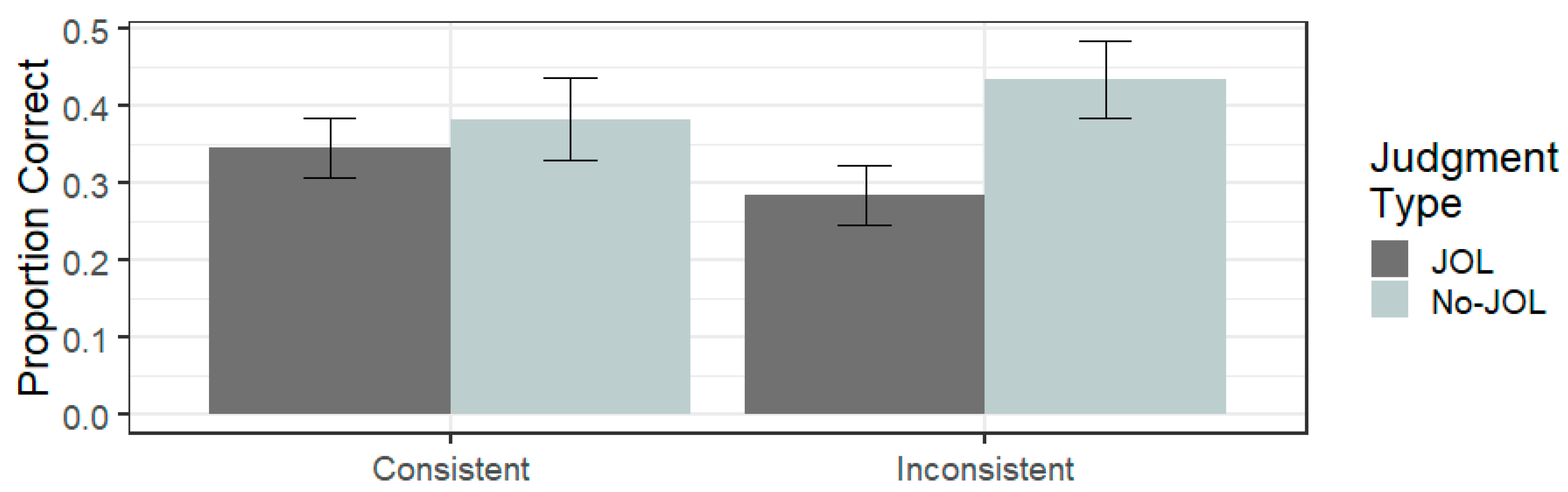

7.3.1. Judgement Type and Font Consistency

7.3.2. Font Size Effects on Recall and JOLs

8. EXPERIMENT 3

8.1. Participants

8.2. Materials and Procedure

8.3. Results and Discussion

8.3.1. Recall

8.3.2. Study Time

8.3.3. JOLs

8.3.4. Mini Meta-Analysis

8.3.5. General Discussion

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ariel, Robert, Jeffrey D. Karpicke, Amber E. Witherby, and Sarah K. Tauber. 2021. Do judgments of learning directly enhance learning of educational materials? Educational Psychology Review 33: 693–712.

- Begg, Ian, Susanna Duft, Paul Lalonde, Richard Melnick, and Josephine Sanvito. 1989. Memory predictions are based on ease of processing. Journal of Memory and Language 28: 610.

- Benjamin, Aaron S., Robert A. Bjork, and Bennett L. Schwartz. 1998. The mismeasure of memory: When retrieval fluency is misleading as a metamnemonic index. Journal of Experimental Psychology: General 127: 55.

- Birney, Damian P., Jens F. Beckmann, Nadin Beckmann, Kit S. Double, and Karen Whittingham. 2018. Moderators of learning and performance trajectories in microworld simulations: Too soon to give up on intellect!? Intelligence 68: 128–40.

- Chang, Minyu, and Charles J. Brainerd. 2022. Association and dissociation between judgments of learning and memory: A meta-analysis of the font size effect. Metacognition and Learning 17: 443–76.

- Double, Kit S., and Damian P. Birney. 2017a. Are you sure about that? Eliciting confidence ratings may influence performance on Raven’s progressive matrices. Thinking and Reasoning 23: 190–206.

- Double, Kit S., and Damian P. Birney. 2017b. The interplay between self-evaluation, goal orientation, and self-efficacy on performance and learning. Paper presented at the 39th Annual Conference of the Cognitive Science Society, London, UK, July 26–29.

- Double, Kit S., and Damian P. Birney. 2018. Reactivity to confidence ratings in older individuals performing the Latin square task. Metacognition and Learning 13: 309–26.

- Double, Kit S., and Damian P. Birney. 2019a. Do confidence ratings prime confidence? Psychonomic Bulletin and Review 26: 1035–42.

- Double, Kit S., and Damian P. Birney. 2019b. Reactivity to measures of metacognition. Frontiers in Psychology 10: 2755.

- Double, Kit S., Damian P. Birney, and Sarah A. Walker. 2018. A meta-analysis and systematic review of reactivity to judgements of learning. Memory 26: 741–50.

- Dougherty, Michael R., Alison M. Robey, and Daniel Buttaccio. 2018. Do metacognitive judgments alter memory performance beyond the benefits of retrieval practice? A comment on and replication attempt of Dougherty, Scheck, Nelson, and Narens (2005). Memory and Cognition 46: 558–65.

- Dougherty, Michael R., Petra Scheck, Thomas O. Nelson, and Louis Narens. 2005. Using the past to predict the future. Memory and Cognition 33: 1096–115.

- Fox, Mark C., and Neil Charness. 2010. How to gain eleven IQ points in ten minutes: Thinking aloud improves Raven’s Matrices performance in older adults. Aging, Neuropsychology, and Cognition 17: 191–204.

- Garcia, Mikey, and Nate Kornell. 2014. Collector [Software]. Available online: https://github.com/gikeymarcia/Collector (accessed on 21 January 2019).

- Goh, Jin X., Judith A. Hall, and Robert Rosenthal. 2016. Mini meta-analysis of your own studies: Some arguments on why and a primer on how. Social and Personality Psychology Compass 10: 535–49.

- Halamish, Vered. 2018. Can very small font size enhance memory? Memory and Cognition 46: 979–93.

- Halamish, Vered, and Monika Undorf. 2023. Why do judgments of learning modify memory? Evidence from identical pairs and relatedness judgments. Journal of Experimental Psychology: Learning, Memory, and Cognition 49: 547.

- Janes, Jessica L., Michelle L. Rivers, and John Dunlosky. 2018. The influence of making judgments of learning on memory performance: Positive, negative, or both? Psychonomic Bulletin and Review 25: 2356–64.

- Kelemen, William L., and Charles A. Weaver III. 1997. Enhanced memory at delays: Why do judgments of learning improve over time? Journal of Experimental Psychology: Learning, Memory, and Cognition 23: 1394.

- Koriat, Asher. 1997. Monitoring one’s own knowledge during study: A cue-utilization approach to judgments of learning. Journal of Experimental Psychology: General 126: 349.

- Kornell, Nate, Matthew G. Rhodes, Alan D. Castel, and Sarah K. Tauber. 2011. The ease-of-processing heuristic and the stability bias: Dissociating memory, memory beliefs, and memory judgments. Psychological Science 22: 787–94.

- Lei, Wei, Jing Chen, Chunliang Yang, Yiqun Guo, Pan Feng, Tingyong Feng, and Hong Li. 2020. Metacognition-related regions modulate the reactivity effect of confidence ratings on perceptual decision-making. Neuropsychologia 144: 107502.

- Luna, Karlos, Beatriz Martín-Luengo, and Pedro B. Albuquerque. 2018. Do delayed judgements of learning reduce metamemory illusions? A meta-analysis. Quarterly Journal of Experimental Psychology 71: 1626–36.

- Maxwell, Nicholas P., and Mark J. Huff. 2022. Reactivity from judgments of learning is not only due to memory forecasting: Evidence from associative memory and frequency judgments. Metacognition and Learning 17: 589–625.

- Maxwell, Nicholas P., and Mark J. Huff. 2023. Is discriminability a requirement for reactivity? Comparing the effects of mixed vs. pure list presentations on judgment of learning reactivity. Memory and Cognition 51: 1198–213.

- Mitchum, Ainsley L., Colleen M. Kelley, and Mark C. Fox. 2016. When asking the question changes the ultimate answer: Metamemory judgments change memory. Journal of Experimental Psychology: General 145: 200.

- Murphy, Dillon H., Vered Halamish, Matthew G. Rhodes, and Alan D. Castel. 2023. How evaluating memorability can lead to unintended consequences. Metacognition and Learning 18: 375–403.

- Nelson, Douglas L., Cathy L. McEvoy, and Thomas A. Schreiber. 2004. The University of South Florida free association, rhyme, and word fragment norms. Behavior Research Methods, Instruments, and Computers 36: 402–7.

- R Core Team. 2017. R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing.

- Rhodes, Matthew G., and Alan D. Castel. 2008. Memory predictions are influenced by perceptual information: Evidence for metacognitive illusions. Journal of Experimental Psychology: General 137: 615.

- Rhodes, Matthew G., and Sarah K. Tauber. 2011. The influence of delaying judgments of learning on metacognitive accuracy: A meta-analytic review. Psychological Bulletin 137: 131.

- Rivers, Michelle L., Jessica L. Janes, and John Dunlosky. 2021. Investigating memory reactivity with a within-participant manipulation of judgments of learning: Support for the cue-strengthening hypothesis. Memory 29: 1342–53.

- Shi, Aike, Chenyuqi Xu, Wenbo Zhao, David R. Shanks, Xiao Hu, Liang Luo, and Chunliang Yang. 2023. Judgments of learning reactively facilitate visual memory by enhancing learning engagement. Psychonomic Bulletin and Review 30: 676–87.

- Soderstrom, Nicholas C., Colin T. Clark, Vered Halamish, and Elizabeth Ligon Bjork. 2015. Judgments of learning as memory modifiers. Journal of Experimental Psychology: Learning, Memory, and Cognition 41: 553.

- Su, Ningxin, Tongtong Li, Jun Zheng, Xiao Hu, Tian Fan, and Liang Luo. 2018. How font size affects judgments of learning: Simultaneous mediating effect of item-specific beliefs about fluency and moderating effect of beliefs about font size and memory. PLoS ONE 13: e0200888.

- Tauber, Sarah K., and Matthew G. Rhodes. 2012. Measuring memory monitoring with judgements of retention (JORs). The Quarterly Journal of Experimental Psychology 65: 1376–96.

- Tauber, Sarah K., John Dunlosky, and Katherine A. Rawson. 2015. The influence of retrieval practice versus delayed judgments of learning on memory: Resolving a memory-metamemory paradox. Experimental Psychology 62: 254.

- Undorf, Monika, and Arndt Bröder. 2020. Cue integration in metamemory judgements is strategic. Quarterly Journal of Experimental Psychology 73: 629–42.

- Undorf, Monika, Anke Söllner, and Arndt Bröder. 2018. Simultaneous utilization of multiple cues in judgments of learning. Memory and Cognition 46: 507–19.

- Undorf, Monika, and Malte F. Zimdahl. 2019. Metamemory and memory for a wide range of font sizes: What is the contribution of perceptual fluency? Journal of Experimental Psychology: Learning, Memory, and Cognition 45: 97.

- Witherby, Amber E., and Sarah K. Tauber. 2017. The influence of judgments of learning on long-term learning and short-term performance. Journal of Applied Research in Memory and Cognition 6: 496–503. Available online: http://www.sciencedirect.com/science/article/pii/S2211368117301195 (accessed on 21 January 2019).

- Yang, Haiyan, Ying Cai, Qi Liu, Xiao Zhao, Qiang Wang, Chuansheng Chen, and Gui Xue. 2015. Differential neural correlates underlie judgment of learning and subsequent memory performance. Frontiers in Psychology 6: 1699.

- Zechmeister, Eugene B., and John J. Shaughnessy. 1980. When you know that you know and when you think that you know but you don’t. Bulletin of the Psychonomic Society 15: 41–44.

- Zhao, Wenbo, Jiaojiao Li, David R. Shanks, Baike Li, Xiao Hu, Chunliang Yang, and Liang Luo. 2023. Metamemory judgments have dissociable reactivity effects on item and interitem relational memory. Journal of Experimental Psychology: Learning, Memory, and Cognition 49: 557–74.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Double, K.S. Do Judgments of Learning Impair Recall When Uninformative Cues Are Salient? J. Intell. 2023, 11, 203. https://doi.org/10.3390/jintelligence11100203
