Executive functions are the cognitive abilities responsible for planning, controlling, and guiding thoughts, feelings, and actions. They act as the central executive of the cognitive system, i.e., they transform intentions and purposes into practical actions. People with more developed executive functions are more likely to achieve their goals, as they can plan their tasks adequately. There is ample evidence of the impact of executive functions on various areas of human development, especially academic performance (Diamond 2016). Next, we define the theoretical model on which the Yellow-Red test was built, describe the relationship between children and technology use, and summarize previous international contributions regarding the assessment of executive functions. Subsequently, a general description of the test is presented, allowing a better understanding of the results and reflections derived from the standardization process of the Yellow-Red test.

1.1. The Three-Component Model of Executive Functions

There are various models for conceptualizing executive functions; one of the most widely accepted is the one that defines the presence of three basic components of executive functions. These basic components develop interdependently during childhood and early adolescence and serve as the basis for developing higher-order executive functions such as planning and problem-solving.

Inhibitory control involves inhibiting thoughts, actions, or behaviors in the face of competing internal or external stimuli. It is, therefore, an ability that allows the inhibition of cognitive, emotional, and behavioral factors. Cognitive and emotional inhibition are related to thinking and memory, while behavioral inhibition is related, for example, to the delay of gratification.

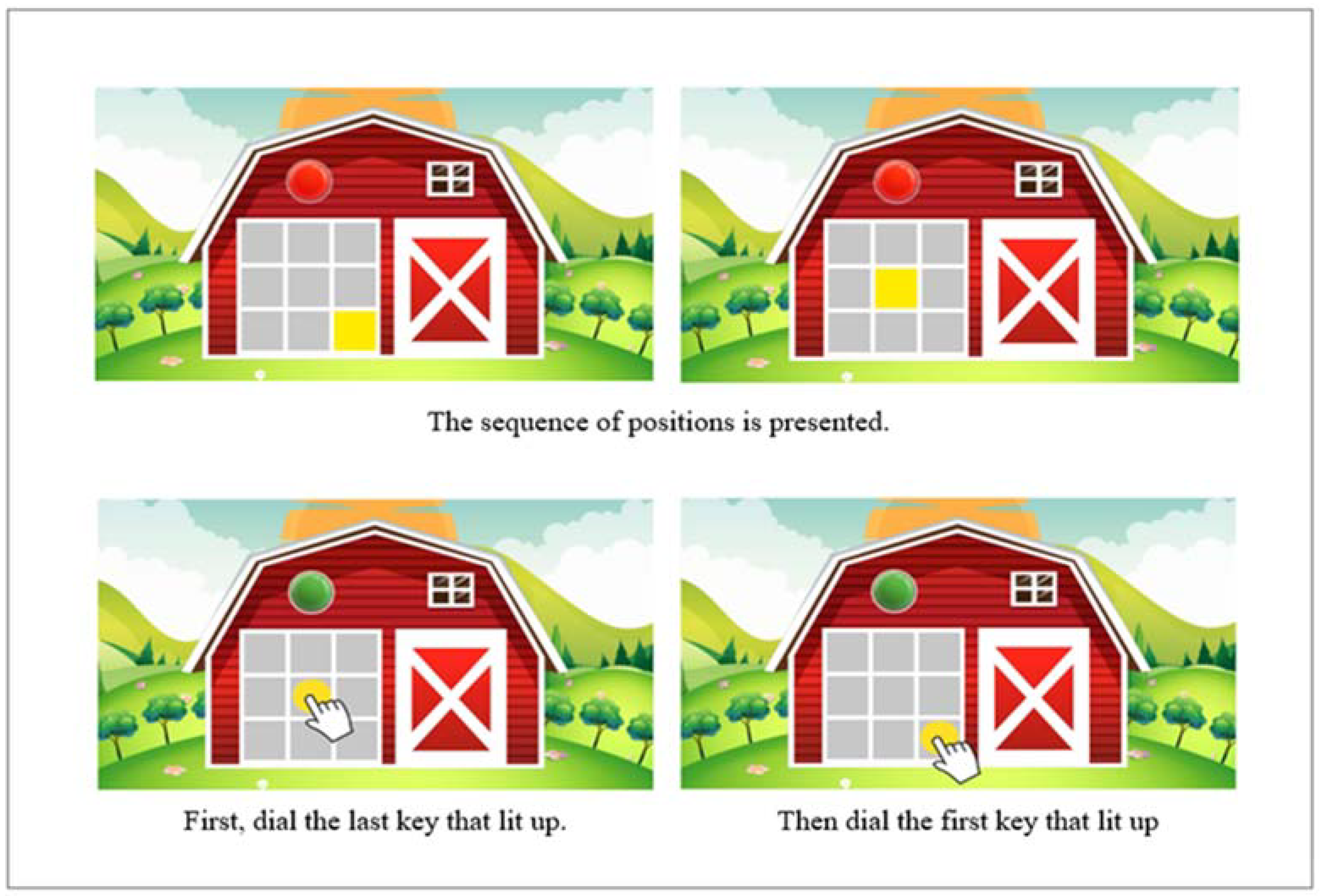

Working memory is the ability to operate with mental representations, whether visual, auditory, or episodic. According to Cowan (2017), it is a set of components of the mind that hold a limited amount of information temporarily available for processing the task at hand. It is an ability of limited capacity, although it progressively expands with age, reaching its maximum capacity around age 12.

Finally, cognitive flexibility is the ability to provide alternative solutions to the same problem. It is closely related to creativity, and of the three functions, it is the last to emerge (Diamond 2013).

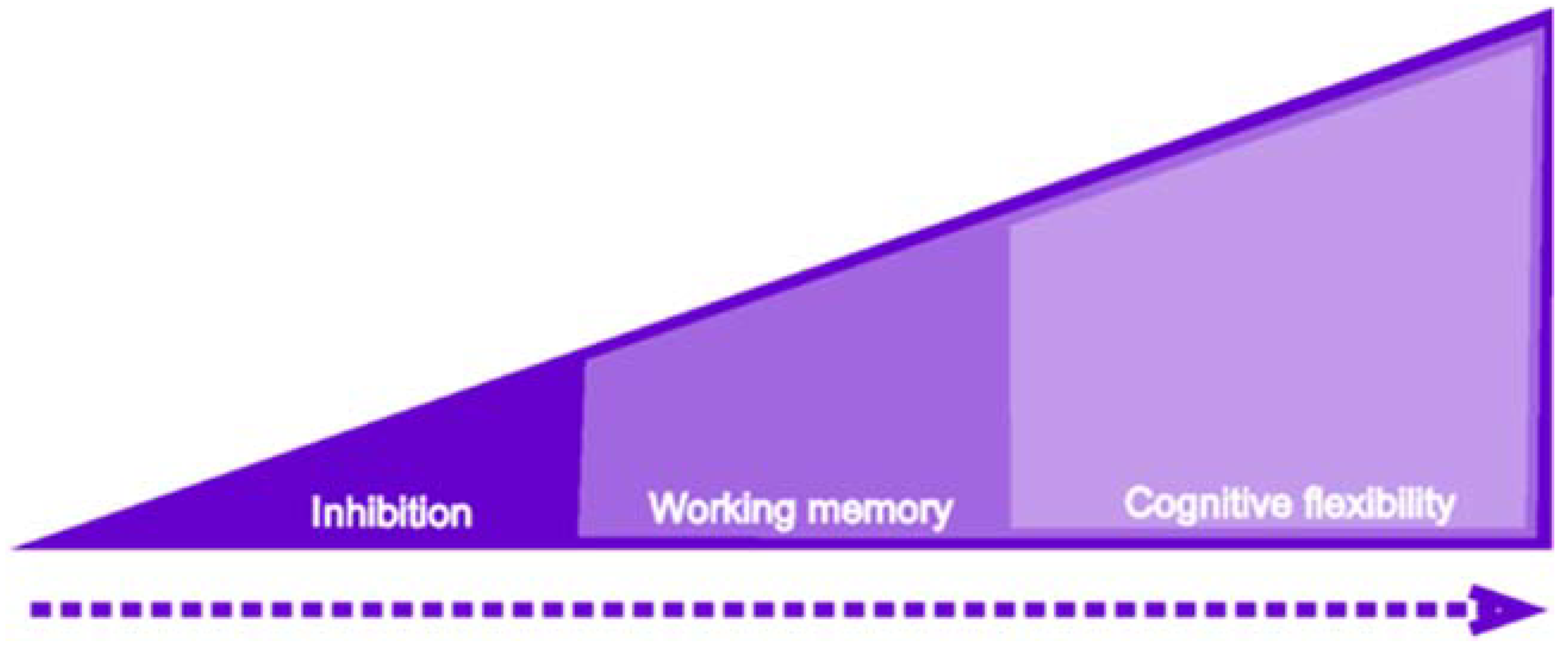

The three main components of EF, as shown in Figure 1, can be understood as successively integrated over time. The first to make its appearance is inhibitory control, followed by working memory, and finally, cognitive flexibility. Although, conceptually, the three components are present throughout development, it is clear that their nature changes from 18 months of age, which is the age at which language begins to be a fundamental part of cognitive development. Language plays a fundamental role in executive functioning since it allows for labeling internal instructions that inhibit behaviors and actions, processing problems, and seeking alternative solutions to unknown problems.

The three components of EF are also closely interrelated because of their successive integration during development. For example, solving problems in working memory requires active interference control over internal and external stimuli while the solution is being processed, and interference control is a component of inhibitory control. For this reason, many models of working memory (e.g., Kane and Engle 2003) incorporate interference control as a component of working memory, although it could properly be considered a factor of inhibitory control. As Diamond (2013) noted, some authors (e.g., Baddeley and Hitch 1994) incorporated inhibitory and flexibility factors in their working memory model. However, following Diamond (2013), we kept the three factors separate in the present work, as Miyake et al. (2000) suggested.

Likewise, cognitive flexibility requires both working memory and inhibitory control to provide the alternative solution being processed. For example, if the task is to say all the words that begin with a given letter, the person must check in working memory that each new word actually starts with that letter while also remembering the words already said and inhibiting their repetition.

An interesting issue regarding the progressive differentiation of executive functions is the unity versus diversity approach formulated by Friedman and Miyake (2017). Essentially, this approach postulates that executive functions show both unity and diversity, depending on the analysis techniques used and on the developmental level of the samples assessed. The present article, which focuses mainly on children, concentrates on the latter aspect.

Friedman and Miyake (2017) reported that, although some studies show a unity of executive functions at early ages, all studies evidence a differentiation of working memory and flexibility in older children or adults. In other words, at earlier ages, executive functions are best understood in terms of unity, while at older ages, they are best understood in terms of diversity. The evidence on this point is mixed, specifically regarding when and which components are part of the first and second factors in school-aged children. This is partly due to the variety of tests used, as the tests selected for each component of executive functions are highly heterogeneous, both in their assessment objectives and in the way the components are assessed (for a comprehensive review on this topic, see Lee et al. 2013).

1.2. Use of Technology Tools for Child Assessment

New generations grow up immersed in digital media-rich environments, and technology is integral to their lives from birth (Sweeney and Geer 2008). From a very young age, children are exposed to technological resources, which translates into an early mastery of various digital tools (Mcmanis and Gunnewig 2012).

The use of technological tools has increased both worldwide and in Chile. In the USA, in 2018, 85% of households had an internet connection (United States Census Bureau 2021). In Chile, the statistics are similar, with 87.4% of households reported to have an internet connection in 2017. Access to technological devices is equally high: 85.7% of households in which school-age children live have a smart mobile device (Subsecretaría de Telecomunicaciones de Chile 2017). According to Chaudron et al. (2018), who investigated the use of technology in children aged 0–8 years in 21 countries, the use of digital technologies starts at increasingly early ages (under two years), and tablets and smartphones are children’s preferred devices due to their multifunctionality and portability. According to these authors, children appreciate touch-screen devices especially for their ease of use, the possibility of accessing different applications, and their playful aspects.

For these reasons, the need to incorporate technology into educational systems has been raised (Sweeney and Geer 2008), considering its use both for the mediation of teaching and for the assessment of learning.

Day et al. (2019) noted that there is a need for technology- and game-based executive function assessment tools that can be used outside of the clinical or academic context, allowing for accurate, ecological, and contingent assessments.

Technology-mediated assessments have several advantages over traditional assessments. They allow for gamification of the assessment format by incorporating aspects traditionally related to video games or applications. They also allow the standardization of certain technical elements, such as instructions, examples, or forms of response, and the automation of scoring. In addition, technological instruments make it possible to assess aspects that cannot be assessed with pencil-and-paper instruments, for example, reaction times, the presentation of algorithmically programmed items, and the measurement of aspects related to behavior (Germine et al. 2019; Parsey and Schmitter-Edgecombe 2013).

Parsey and Schmitter-Edgecombe (2013) noted that the widespread access of younger generations to technology has produced a cohort effect, in which children and young people perform better on computer-based assessments than older people with less technological experience. Moreover, the use of technology in assessment contexts increases student engagement and motivation, enabling students to express their full performance potential (Perrotta et al. 2013; Rosas et al. 2015).

Germine et al. (2019) recommended focusing on four aspects when developing technology-based assessment instruments: (a) designing interfaces that are accessible and appealing to the target age group, (b) developing simple and clear instructions, (c) presenting applied test items that allow the user to interact with the test, which is more effective than reading written instructions, and (d) developing specific norms for technology-based tests rather than adapting norms from pencil-and-paper instruments.

However, it is important to consider whether technology-mediated assessments correspond to those in traditional formats. In this regard, several meta-analyses involving tests with students from kindergarten through grade 12 have shown no significant differences in the results of the two types of assessment (Kingston 2009; Wang et al. 2008), which supports the reliability of this type of instrument.

1.3. Description of Instruments and Gold Standards for EF Assessment

Multiple research fields have approached executive functions, such as neuropsychology, cognitive psychology, education, and, more recently, cognitive neurosciences. Likewise, each of these areas has developed its assessment paradigms, depending on the nature of their studies.

The first research came from neuropsychology and was based mainly on studying adults with some type of brain injury, thus establishing the relationship between executive functions and the frontal lobe. The works of Luria and his collaborators were paradigmatic. They described frontal lobe syndrome in 1964, proposing a series of tasks to evaluate the relationship between neurological disorders and performance in cognitive and motor functions (Canavan et al. 1985). In 1980, the standardized version of their procedures was published as the so-called Luria-Nebraska battery, and in 1981, the first version for children between 8 and 11 years of age was presented (Plaisted et al. 1983). According to Zelazo et al. (2016), interest in assessing executive functions in children arose only when the belief in limited frontal lobe development during childhood was dispelled in the early 1980s. In fact, the opposite has been demonstrated: the frontal lobe shows significant development during childhood.

From this new interest in the association between executive functions and frontal lobe development in children, children’s versions of instruments used to assess executive functions in adults were developed. An example is the Stroop Interference Test (Stroop 1935), one of the most widely used neuropsychological measures. The Stroop test consists of three consecutive tasks. First, a list of color words must be read aloud. Second, a series of colors presented as rectangles must be named. Third, the ink color must be named for a list of color words printed in ink of a color different from that expressed by the word, for example, “yellow” printed in red ink. According to Homack and Riccio (2004), there is consensus that this last task measures cognitive flexibility and inhibition. The original version proposed by Stroop has been interpreted in different ways. One of the most widely used versions is the one developed by Golden (1978), standardized in 2003 for adults and 2002 for children. The children’s version can be applied to children aged five to fourteen years and differs from the adult version only in its scoring norms (Moran and Yeates 2018; Rozenblatt 2018). This is an example of how the original tools were designed for application with adults. Their children’s versions are later adaptations rather than instruments created directly for these age groups, and they either do not have standardized versions or, if they do, present very poor application norms (Carlson 2005). According to Hughes and Ensor (2011), adapting adult tasks for children runs the risk of losing critical components of executive functions, for example, by oversimplifying them or by not considering other cognitive aspects that develop in parallel or later, such as the use of language, specifically vocabulary.

Since 2000, research in psychology and neuroscience has grown exponentially, generating several instruments consisting of individual behavioral tasks based on performance (for more details, see

Carlson (

2005) and

Garon et al. (

2008)), which have become more accurate thanks to their technological versions applied on PCs and, later, on tablets. One of the most widely used tests is the Hearts and Flowers test, which corresponds to a version of “Dots” originally developed by

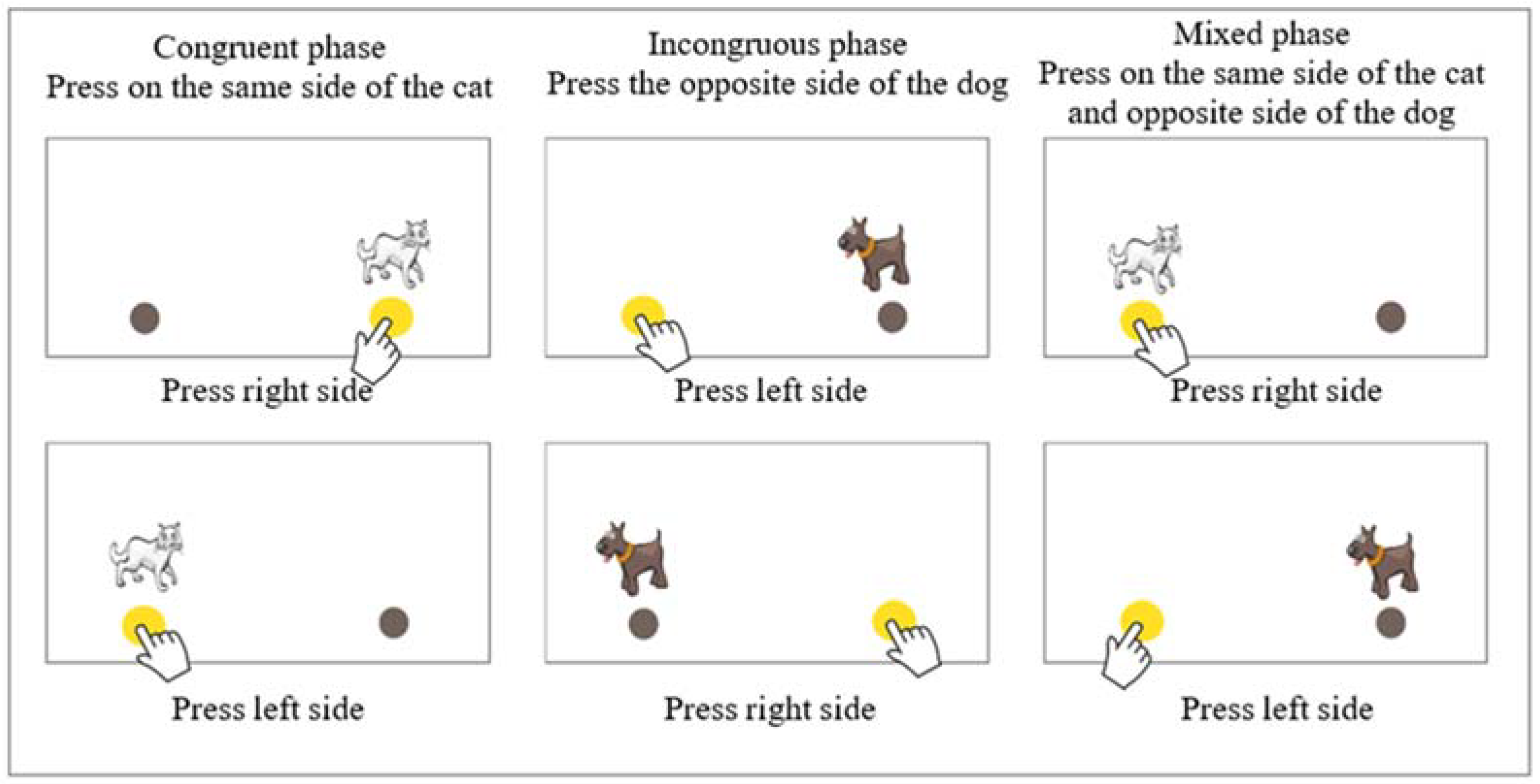

Davidson et al. (

2006). This test consists of three consecutive tasks; in the first block, the person must press a key on the same side on which a heart appears (congruent block); in the second task, he/she must press a key on the opposite side of which a flower appears (incongruent block). Finally, there is a mixed block in which hearts and flowers appear randomly. The individual must follow two rules simultaneously, depending on the stimulus that appears, forming a mixed block. Despite its wide use, Hearts and Flowers does not have norms, validity, or reliability studies (

Camerota et al. 2020).
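The response rule of the Hearts and Flowers test described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the published implementation: the function names, the `"left"`/`"right"` encoding of sides, and the example trials are assumptions introduced here.

```python
# Illustrative sketch of the Hearts and Flowers response rule.
# All names and the trial encoding are hypothetical, not from the original test.

OPPOSITE = {"left": "right", "right": "left"}


def correct_key(stimulus: str, side: str) -> str:
    """Return the side of the key that counts as a correct response."""
    if side not in OPPOSITE:
        raise ValueError(f"unknown side: {side!r}")
    if stimulus == "heart":
        # Congruent rule: press the key on the same side as the heart.
        return side
    if stimulus == "flower":
        # Incongruent rule: press the key on the side opposite the flower.
        return OPPOSITE[side]
    raise ValueError(f"unknown stimulus: {stimulus!r}")


def proportion_correct(trials, responses):
    """Proportion of responses that follow the rule for each (stimulus, side) trial."""
    if not trials or len(trials) != len(responses):
        raise ValueError("trials and responses must be non-empty and of equal length")
    hits = sum(
        correct_key(stimulus, side) == key
        for (stimulus, side), key in zip(trials, responses)
    )
    return hits / len(trials)


# A made-up mixed block: the rule to apply depends on the stimulus shown.
mixed_block = [("heart", "left"), ("flower", "left"), ("flower", "right"), ("heart", "right")]
responses = ["left", "right", "right", "right"]
print(proportion_correct(mixed_block, responses))  # 0.75
```

In the mixed block, the same function is applied to every trial, which makes explicit that the participant must switch between the two rules according to the stimulus.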

In 1996,

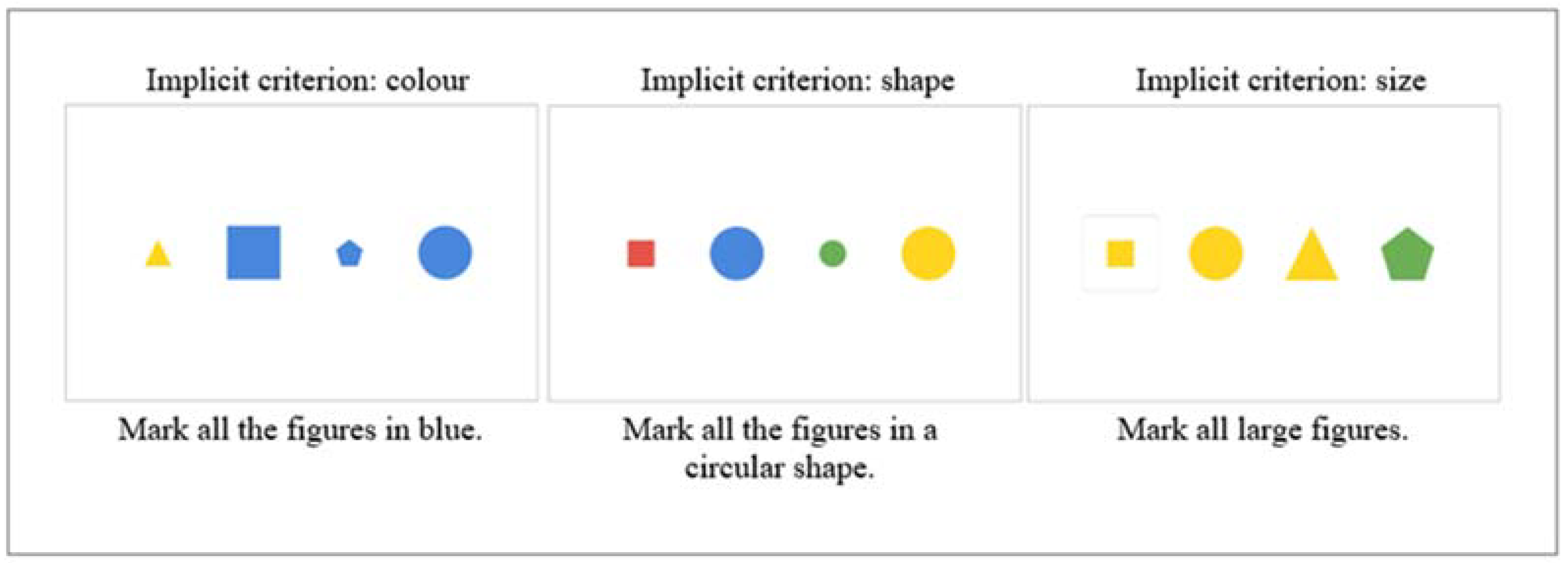

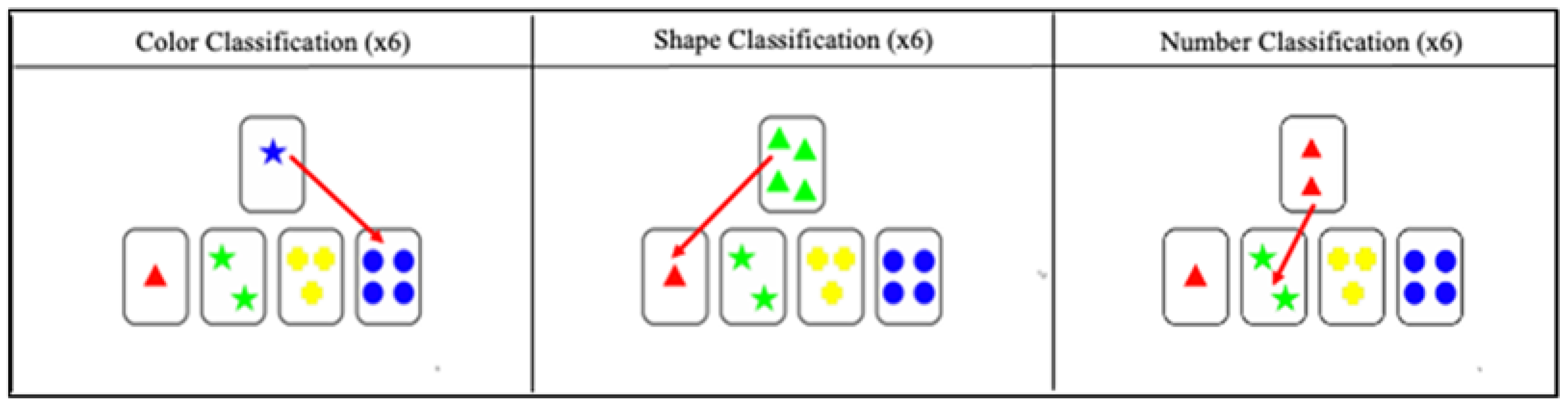

Zelazo et al. (

1996) presented the first version of the Dimensional Change Card Sort (DCCS). in which the child is asked to sort a series of drawings, first according to their shape (put a card with a rabbit on top of another rabbit card, regardless of its color) and then according to their color (put a card with a red figure on top of another red card, regardless of its shape). The child must sort 48 cards according to the instruction of the evaluator, who randomly says “shape” or “color.” The DCCS is now part of a free, validated, norm-referenced battery for the North American population aged 2.5 to 85 (

Zelazo et al. 2013). This is a digital version, whose only disadvantage is that it is only available for IOS devices. The previous tests are traditionally laboratory-based but more ecological; behavioral measures are generally related to cognitive and educational psychology. It is in these contexts where tests that have not been standardized but are widely used are also used, such as Simon says (

Strommen 1973), based on the traditional children’s game, or Head Shoulders Knees and Toes (

Cameron Ponitz et al. 2008), in which the child is progressively asked to touch parts of his body in alternating order.
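The DCCS sorting rule described in this paragraph can be illustrated with a minimal Python sketch. The `Card` type, the function name, and the specific target cards (a blue rabbit and a red boat) are assumptions chosen for illustration and are not taken from the test materials described here.

```python
# Illustrative sketch of the DCCS sorting rule; all names and cards are hypothetical.
from typing import NamedTuple, Sequence


class Card(NamedTuple):
    shape: str
    color: str


def matching_target(card: Card, targets: Sequence[Card], dimension: str) -> Card:
    """Return the target card on which `card` should be placed for the given dimension."""
    if dimension not in ("shape", "color"):
        raise ValueError(f"unknown dimension: {dimension!r}")
    # Only the instructed dimension matters; the other one must be ignored.
    matches = [t for t in targets if getattr(t, dimension) == getattr(card, dimension)]
    if len(matches) != 1:
        raise ValueError("expected exactly one matching target card")
    return matches[0]


targets = [Card("rabbit", "blue"), Card("boat", "red")]
test_card = Card("rabbit", "red")

print(matching_target(test_card, targets, "shape"))  # goes on the rabbit target
print(matching_target(test_card, targets, "color"))  # goes on the red target
```

The same test card lands on different piles depending on the instruction, which is why the task requires the child to inhibit the previously relevant dimension when the rule changes.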

Another instrument that can be used for the assessment of executive functions is the ENFEN (Portellano et al. 2011), which assesses the global maturational development of children between 6 and 12 years of age, with its main focus on executive functions. This test presents norms for the Spanish population and has an individual application format with tasks that are attractive to students, but it does not directly adopt a play format and does not use digital support. An alternative is the Psychology Experiment Building Language (PEBL) platform, which allows the free programming of digital tests. This platform includes some pre-designed traditional tests focusing on the evaluation of executive functions. Among the tests that can be selected are a version of Berg’s Card Sorting Test, similar to the Wisconsin test; Corsi’s block test; and an implementation of Eriksen’s Flanker task. However, although these tools are digital and free of charge, prior knowledge is required to select the tests to be applied, and they do not provide normative information for each population.

In educational contexts, tests that assess more general skills are used, and these sometimes include the assessment of executive functions or some of their components. This is the case for tests such as the Woodcock-Muñoz battery (Muñoz-Sandoval et al. 2005) and the WISC-V test (Rosas et al. 2022), which include specific components related to the assessment of executive functions. Finally, and especially in school contexts, some scales assess executive functions indirectly, in different contexts, and through the appraisal of people close to the children, such as teachers or relatives. Among the most widely used are the Behavior Rating Inventory of Executive Function (BRIEF, Gioia et al. 2000), the Behavior Assessment System for Children, now in its third edition (BASC, Reynolds and Kamphaus 2015), and the Conners test (Conners 2008).

However, the assessment systems mentioned above present certain limitations. First, the tests used in research assess executive functions in a general way without detailing aspects related to their components. Second, the assessment of executive functions in school contexts considers executive functions only as a minor aspect of more general skills, such as cognitive ability. Third, the scales based on third-party appraisal tend to emphasize the less cognitive aspects of executive functions, generating a biased view of their development. Finally, there are doubts about the validity of these instruments, which are discussed in the next section.

1.4. Discussion of the Importance of Direct EF Assessment over Indirect Ones

Executive functions are important for children’s behavior and learning, but what method is best for assessing these abilities? Much research shows low correlations between the results of direct and indirect assessments of executive functions. In a review of 20 studies reporting correlations between the two types of measures, Toplak et al. (2013) found that only 24% of all reported correlations were statistically significant and that the median correlation was only r = 0.19 (equating to only 3.6% common variance). It should be noted that this result cannot be attributed to a lack of reliability or validity of either method since both indirect and direct scales showed quite good psychometric properties. So, how can two types of assessment that are supposed to measure the same thing have such low correlations? A recent study sought to answer this question. Even though both forms of measurement can show good predictive abilities for academic performance (Gerst et al. 2015), the evidence seems to indicate that direct cognitive tests are more efficient and robust than indirect assessments for measuring executive functioning. The study by Soto et al. (2020), conducted with 136 children, clearly showed that executive function assessments made by teachers adequately predicted students’ academic ratings (also made by teachers) but failed to predict academic performance. Direct tests of executive functions, in contrast, predicted academic performance very well and predicted academic ratings even better than indirect assessments of executive functions.
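The common variance figure reported for the median correlation follows from squaring the correlation coefficient. The short Python sketch below reproduces that arithmetic; the function name is a hypothetical helper introduced only for illustration.

```python
# Illustrative arithmetic: shared variance implied by a correlation coefficient.
# The function name is hypothetical; the value r = 0.19 comes from the text above.

def shared_variance_pct(r: float) -> float:
    """Return the percentage of variance shared by two measures correlated at r."""
    if not -1.0 <= r <= 1.0:
        raise ValueError("a correlation coefficient must lie between -1 and 1")
    return 100.0 * r ** 2


print(f"{shared_variance_pct(0.19):.1f}%")  # 3.6%
```

With r = 0.19, the two kinds of measure share about 3.6% of their variance, so more than 96% of the variance in one is not accounted for by the other.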

This study is of particular relevance since, to date, it is the only published research with two independent and two dependent variables, covering direct and indirect methods in both cases. Moreover, this makes it possible to elucidate more precisely what both techniques measure: the indirect assessments seem to better measure school adjustment as perceived by teachers, while the direct ones are a better measure of both school adjustment and academic achievement. Thus, it would appear that direct measures are more accurate and would be a better indicator of executive functioning than indirect measures.