Analysing Complex Problem-Solving Strategies from a Cognitive Perspective: The Role of Thinking Skills

Abstract

1. Introduction

1.1. Complex Problem Solving: Definition, Assessment and Relations to Intelligence

1.2. Inductive and Combinatorial Reasoning as Component Skills of Complex Problem Solving

1.3. Behaviours and Strategies in a Complex Problem-Solving Environment

2. Research Aims and Questions

- (RQ1)

- What exploration strategy profiles characterise the various problem-solvers at the university level?

- (RQ2)

- Can developmental differences in CPS, IR and CR be detected among students with different exploration strategy profiles?

- (RQ3)

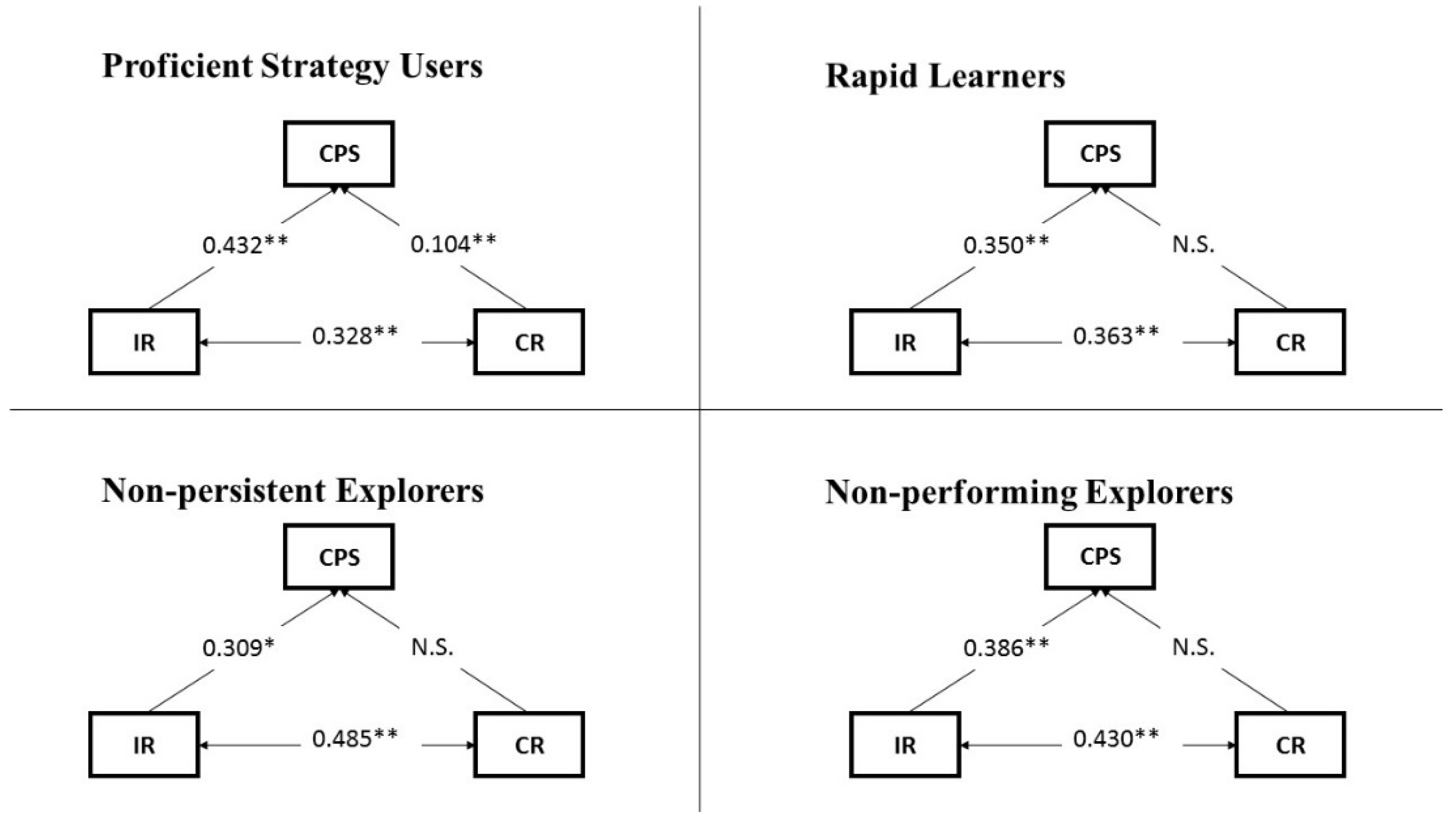

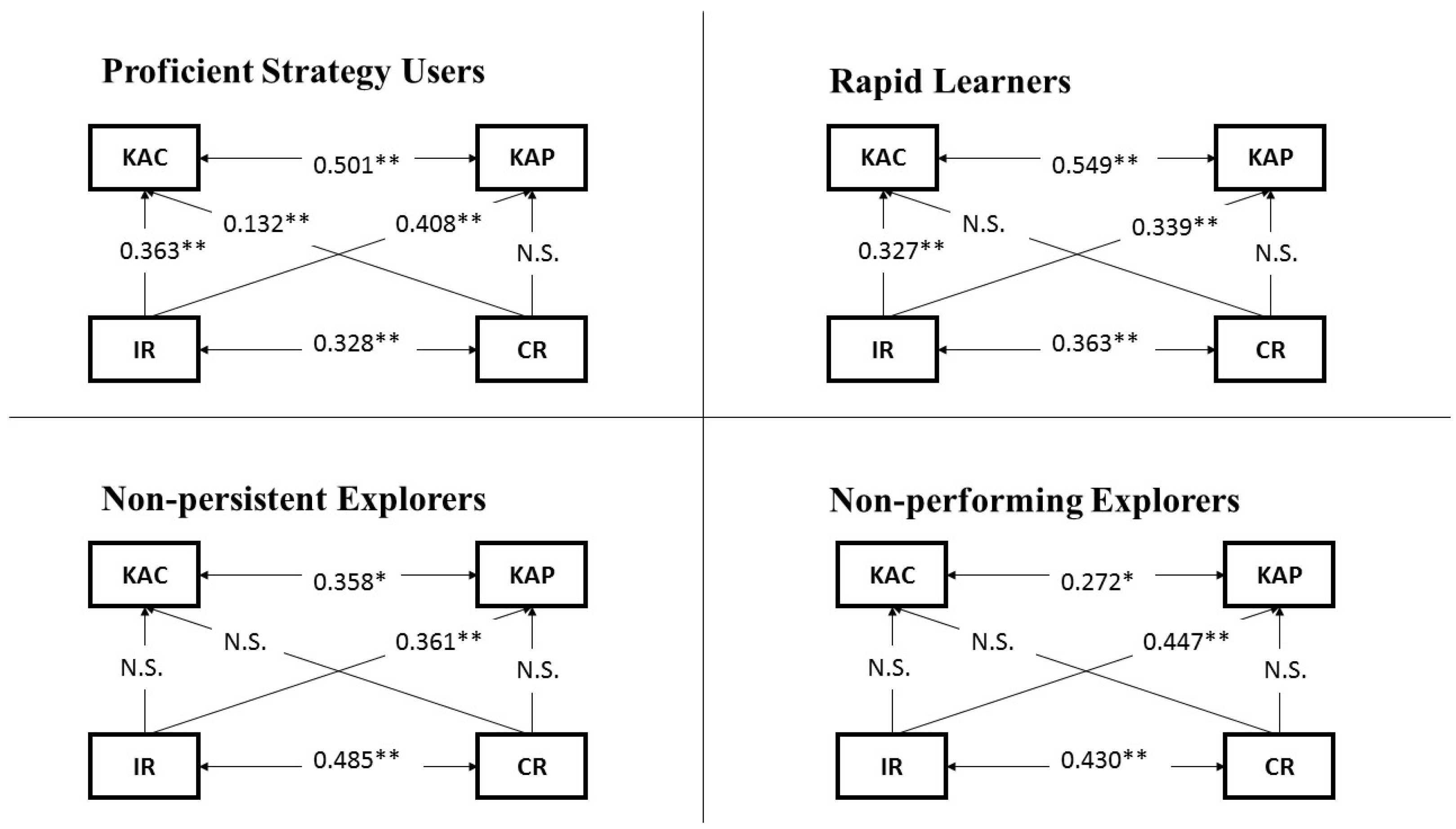

- What are the similarities and differences in the roles IR and CR play in the CPS process as well as in the two phases of CPS (i.e., KAC and KAP) among students with different exploration strategy profiles?

3. Methods

3.1. Participants and Procedure

3.2. Instruments

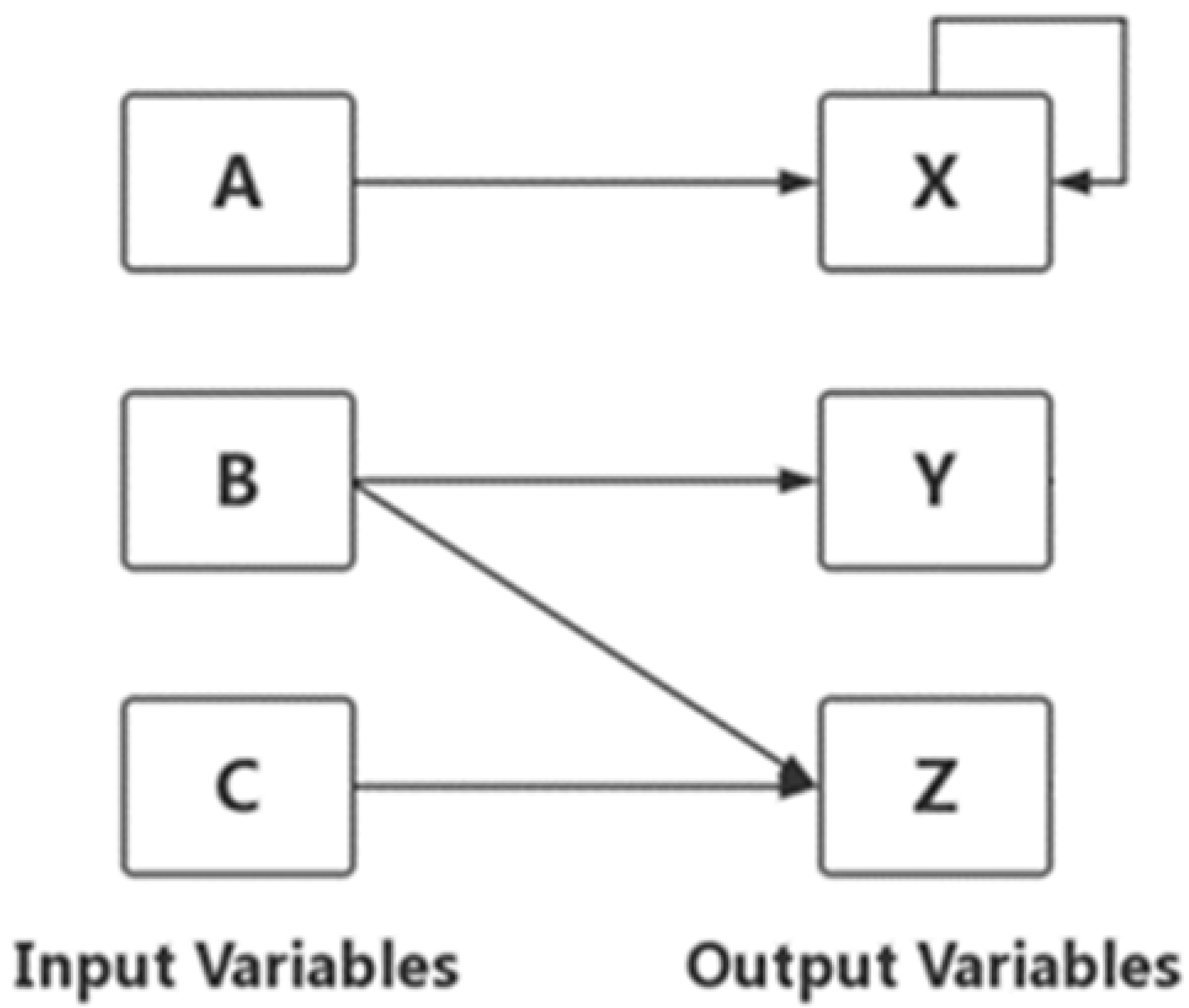

3.2.1. Complex Problem Solving (CPS)

3.2.2. Inductive Reasoning (IR)

3.2.3. Combinatorial Reasoning (CR)

3.3. Scoring

3.4. Coding and Labelling the Logfile Data

- “Only one single input variable was manipulated, whose relationship to the output variables was unknown (we considered a relationship unknown if its effect cannot be known from previous settings), while the other variables were set at a neutral value like zero […]

- One single input variable was changed, whose relationship to the output variables was unknown. The others were not at zero, but at a setting used earlier. […]

- One single input variable was changed, whose relationship to the output variables was unknown, and the others were not at zero; however, the effect of the other input variable(s) was known from earlier settings. Even so, this combination was not attempted earlier” (Molnár and Csapó 2018, p. 8)
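The three quoted criteria describe when a single trial counts as an isolated variation of an input whose effect is still unknown. One possible way to apply them to logfile records is sketched in Python below. It is not the coding script of Molnár and Csapó (2018): representing a trial as a tuple of integer input settings with 0 as the neutral value, comparing each trial only with the immediately preceding one, treating an effect as "known" once that input was the only non-neutral one in an earlier trial, and the function names `known_effects` and `classify_trial` are all hypothetical simplifications.

```python
from typing import Optional, Sequence, Set, Tuple

Trial = Tuple[int, ...]  # hypothetical: one setting per input variable, 0 = neutral


def known_effects(history: Sequence[Trial]) -> Set[int]:
    """Simplifying assumption (not from the source): an input's effect counts as
    known once it was the only non-neutral input in an earlier trial."""
    known: Set[int] = set()
    for trial in history:
        active = [i for i, v in enumerate(trial) if v != 0]
        if len(active) == 1:
            known.add(active[0])
    return known


def classify_trial(trial: Trial, history: Sequence[Trial]) -> Optional[int]:
    """Return 1, 2 or 3 for the three quoted behaviour types, or None otherwise."""
    known = known_effects(history)
    active = [i for i, v in enumerate(trial) if v != 0]

    # Type 1: a single input with unknown effect is set, all others at neutral (zero).
    if len(active) == 1 and active[0] not in known:
        return 1

    if not history:
        return None
    previous = history[-1]
    changed = [i for i, (a, b) in enumerate(zip(trial, previous)) if a != b]
    if len(changed) != 1 or changed[0] in known:
        return None  # not an isolated change of an input with unknown effect

    target = changed[0]
    others = [j for j in range(len(trial)) if j != target and trial[j] != 0]
    if not others:
        return None  # nothing else active: already handled by the type 1 check

    # Type 3: the other active inputs all have known effects and this
    # exact combination has not been tried before.
    tried = {tuple(t) for t in history}
    if all(j in known for j in others) and tuple(trial) not in tried:
        return 3
    # Type 2: the other inputs stay at the setting of the previous trial.
    return 2
```

For example, with no earlier trials, `(2, 0, 0)` is coded as type 1; after `[(2, 0, 0)]`, the trial `(2, 2, 0)` changes one input with unknown effect while the other active input already has a known effect, so it is coded as type 3.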

3.5. Data Analysis Plan

4. Results

4.1. Descriptive Results

4.2. Four Qualitatively Different Exploration Strategy Profiles Can Be Distinguished in CPS

4.3. Better Exploration Strategy Users Showed Better Performance in Reasoning Skills

4.4. The Roles of IR and CR in CPS and Its Processes Were Different for Each Type of Exploration Strategy User

5. Discussion

6. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Adey, Philip, and Benő Csapó. 2012. Developing and Assessing Scientific Reasoning. In Framework for Diagnostic Assessment of Science. Edited by Benő Csapó and Gábor Szabó. Budapest: Nemzeti Tankönyvkiadó, pp. 17–53.

- Batanero, Carmen, Virginia Navarro-Pelayo, and Juan D. Godino. 1997. Effect of the implicit combinatorial model on combinatorial reasoning in secondary school pupils. Educational Studies in Mathematics 32: 181–99.

- Beckmann, Jens F., and Jürgen Guthke. 1995. Complex problem solving, intelligence, and learning ability. In Complex Problem Solving: The European Perspective. Edited by Peter A. Frensch and Joachim Funke. Hillsdale: Erlbaum, pp. 177–200.

- Buchner, Axel. 1995. Basic topics and approaches to the study of complex problem solving. In Complex Problem Solving: The European Perspective. Edited by Peter A. Frensch and Joachim Funke. Hillsdale: Erlbaum, pp. 27–63.

- Chen, Yunxiao, Xiaoou Li, Jincheng Liu, and Zhiliang Ying. 2019. Statistical analysis of complex problem-solving process data: An event history analysis approach. Frontiers in Psychology 10: 486.

- Csapó, Benő. 1988. A kombinatív képesség struktúrája és fejlődése [The structure and development of combinative ability]. Budapest: Akadémiai Kiadó.

- Csapó, Benő. 1997. The development of inductive reasoning: Cross-sectional assessments in an educational context. International Journal of Behavioral Development 20: 609–26.

- Csapó, Benő. 1999. Improving thinking through the content of teaching. In Teaching and Learning Thinking Skills. Lisse: Swets & Zeitlinger, pp. 37–62.

- Csapó, Benő, and Gyöngyvér Molnár. 2019. Online diagnostic assessment in support of personalized teaching and learning: The eDia System. Frontiers in Psychology 10: 1522.

- Dörner, Dietrich, and Joachim Funke. 2017. Complex problem solving: What it is and what it is not. Frontiers in Psychology 8: 1153.

- English, Lyn D. 2005. Combinatorics and the development of children’s combinatorial reasoning. In Exploring Probability in School: Challenges for Teaching and Learning. Edited by Graham A. Jones. New York: Springer, pp. 121–41.

- Fischer, Andreas, Samuel Greiff, and Joachim Funke. 2012. The process of solving complex problems. Journal of Problem Solving 4: 19–42.

- Frensch, Peter A., and Joachim Funke. 1995. Complex Problem Solving: The European Perspective. New York: Psychology Press.

- Funke, Joachim. 2001. Dynamic systems as tools for analysing human judgement. Thinking and Reasoning 7: 69–89.

- Funke, Joachim. 2010. Complex problem solving: A case for complex cognition? Cognitive Processing 11: 133–42.

- Funke, Joachim. 2021. It Requires More Than Intelligence to Solve Consequential World Problems. Journal of Intelligence 9: 38.

- Funke, Joachim, Andreas Fischer, and Daniel V. Holt. 2018. Competencies for complexity: Problem solving in the twenty-first century. In Assessment and Teaching of 21st Century Skills. Edited by Esther Care, Patrick Griffin and Mark Wilson. Dordrecht: Springer, pp. 41–53.

- Gilhooly, Kenneth J. 1982. Thinking: Directed, Undirected and Creative. London: Academic Press.

- Gnaldi, Michela, Silvia Bacci, Thiemo Kunze, and Samuel Greiff. 2020. Students’ complex problem solving profiles. Psychometrika 85: 469–501.

- Greiff, Samuel, and Joachim Funke. 2009. Measuring complex problem solving: The MicroDYN approach. In The Transition to Computer-Based Assessment. Edited by Friedrich Scheuermann and Julius Björnsson. Luxembourg: Office for Official Publications of the European Communities, pp. 157–63.

- Greiff, Samuel, Daniel V. Holt, and Joachim Funke. 2013. Perspectives on problem solving in educational assessment: Analytical, interactive, and collaborative problem solving. Journal of Problem Solving 5: 71–91.

- Greiff, Samuel, Gyöngyvér Molnár, Romain Martin, Johannes Zimmermann, and Benő Csapó. 2018. Students’ exploration strategies in computer-simulated complex problem environments: A latent class approach. Computers & Education 126: 248–63.

- Greiff, Samuel, Sascha Wüstenberg, and Francesco Avvisati. 2015a. Computer-generated log-file analyses as a window into students’ minds? A showcase study based on the PISA 2012 assessment of problem solving. Computers & Education 91: 92–105.

- Greiff, Samuel, Sascha Wüstenberg, and Joachim Funke. 2012. Dynamic problem solving: A new measurement perspective. Applied Psychological Measurement 36: 189–213.

- Greiff, Samuel, Sascha Wüstenberg, Benő Csapó, Andreas Demetriou, Jarkko Hautamäki, Arthur C. Graesser, and Romain Martin. 2014. Domain-general problem solving skills and education in the 21st century. Educational Research Review 13: 74–83.

- Greiff, Samuel, Sascha Wüstenberg, Thomas Goetz, Mari-Pauliina Vainikainen, Jarkko Hautamäki, and Marc H. Bornstein. 2015b. A longitudinal study of higher-order thinking skills: Working memory and fluid reasoning in childhood enhance complex problem solving in adolescence. Frontiers in Psychology 6: 1060.

- Hołda, Małgorzata, Anna Głodek, Malwina Dankiewicz-Berger, Dagna Skrzypińska, and Barbara Szmigielska. 2020. Ill-defined problem solving does not benefit from daytime napping. Frontiers in Psychology 11: 559.

- Klauer, Karl Josef. 1990. Paradigmatic teaching of inductive thinking. Learning and Instruction 2: 23–45.

- Klauer, Karl Josef, Klaus Willmes, and Gary D. Phye. 2002. Inducing inductive reasoning: Does it transfer to fluid intelligence? Contemporary Educational Psychology 27: 1–25.

- Kuhn, Deanna. 2010. What is scientific thinking and how does it develop? In The Wiley-Blackwell Handbook of Childhood Cognitive Development. Edited by Usha Goswami. Oxford: Wiley-Blackwell, pp. 371–93.

- Kuhn, Deanna, Merce Garcia-Mila, Anat Zohar, Christopher Andersen, Sheldon H. White, David Klahr, and Sharon M. Carver. 1995. Strategies of knowledge acquisition. Monographs of the Society for Research in Child Development 60: 1–157.

- Lo, Yungtai, Nancy R. Mendell, and Donald B. Rubin. 2001. Testing the number of components in a normal mixture. Biometrika 88: 767–78.

- Lotz, Christin, Ronny Scherer, Samuel Greiff, and Jörn R. Sparfeldt. 2017. Intelligence in action—Effective strategic behaviors while solving complex problems. Intelligence 64: 98–112.

- Mayer, Richard E. 1998. Cognitive, metacognitive, and motivational aspects of problem solving. Instructional Science 26: 49–63.

- Molnár, Gyöngyvér, and Benő Csapó. 2011. Az 1–11 évfolyamot átfogó induktív gondolkodás kompetenciaskála készítése a valószínűségi tesztelmélet alkalmazásával [Constructing an inductive reasoning competence scale spanning grades 1–11 using probabilistic test theory]. Magyar Pedagógia 111: 127–40.

- Molnár, Gyöngyvér, and Benő Csapó. 2018. The efficacy and development of students’ problem-solving strategies during compulsory schooling: Logfile analyses. Frontiers in Psychology 9: 302.

- Molnár, Gyöngyvér, Saleh Ahmad Alrababah, and Samuel Greiff. 2022. How we explore, interpret, and solve complex problems: A cross-national study of problem-solving processes. Heliyon 8: e08775.

- Molnár, Gyöngyvér, Samuel Greiff, and Benő Csapó. 2013. Inductive reasoning, domain specific and complex problem solving: Relations and development. Thinking Skills and Creativity 9: 35–45.

- Mousa, Mojahed, and Gyöngyvér Molnár. 2020. Computer-based training in math improves inductive reasoning of 9- to 11-year-old children. Thinking Skills and Creativity 37: 100687.

- Mustafić, Maida, Jing Yu, Matthias Stadler, Mari-Pauliina Vainikainen, Marc H. Bornstein, Diane L. Putnick, and Samuel Greiff. 2019. Complex problem solving: Profiles and developmental paths revealed via latent transition analysis. Developmental Psychology 55: 2090–101.

- Muthén, Linda K., and Bengt O. Muthén. 2010. Mplus User’s Guide. Los Angeles: Muthén & Muthén.

- Newell, Allen. 1993. Reasoning, Problem Solving, and Decision Processes: The Problem Space as a Fundamental Category. Boston: MIT Press.

- Novick, Laura R., and Miriam Bassok. 2005. Problem solving. In The Cambridge Handbook of Thinking and Reasoning. Edited by Keith James Holyoak and Robert G. Morrison. New York: Cambridge University Press, pp. 321–49.

- OECD. 2010. PISA 2012 Field Trial Problem Solving Framework. Paris: OECD Publishing.

- OECD. 2014. Results: Creative Problem Solving—Students’ Skills in Tackling Real-Life Problems (Volume V). Paris: OECD Publishing.

- Pásztor, Attila. 2016. Technology-Based Assessment and Development of Inductive Reasoning. Ph.D. thesis, Doctoral School of Education, University of Szeged, Szeged, Hungary.

- Pásztor, Attila, and Benő Csapó. 2014. Improving Combinatorial Reasoning through Inquiry-Based Science Learning. Paper presented at the Science and Mathematics Education Conference, Dublin, Ireland, June 24–25.

- Pásztor, Attila, Sirkku Kupiainen, Risto Hotulainen, Gyöngyvér Molnár, and Benő Csapó. 2018. Comparing Finnish and Hungarian Fourth Grade Students’ Inductive Reasoning Skills. Paper presented at the EARLI SIG 1 Conference, Helsinki, Finland, August 29–31.

- Sandberg, Elisabeth Hollister, and Mary Beth McCullough. 2010. The development of reasoning skills. In A Clinician’s Guide to Normal Cognitive Development in Childhood. Edited by Elisabeth Hollister Sandberg and Becky L. Spritz. New York: Routledge, pp. 179–89.

- Schraw, Gregory, Michael E. Dunkle, and Lisa D. Bendixen. 1995. Cognitive processes in well-defined and ill-defined problem solving. Applied Cognitive Psychology 9: 523–38.

- Schweizer, Fabian, Sascha Wüstenberg, and Samuel Greiff. 2013. Validity of the MicroDYN approach: Complex problem solving predicts school grades beyond working memory capacity. Learning and Individual Differences 24: 42–52.

- Stadler, Matthias, Nicolas Becker, Markus Gödker, Detlev Leutner, and Samuel Greiff. 2015. Complex problem solving and intelligence: A meta-analysis. Intelligence 53: 92–101.

- Sternberg, Robert J. 1982. Handbook of Human Intelligence. New York: Cambridge University Press.

- Sternberg, Robert J., and Scott Barry Kaufman. 2011. The Cambridge Handbook of Intelligence. New York: Cambridge University Press.

- van de Schoot, Rens, Peter Lugtig, and Joop Hox. 2012. A checklist for testing measurement invariance. European Journal of Developmental Psychology 9: 486–92.

- Vollmeyer, Regina, Bruce D. Burns, and Keith J. Holyoak. 1996. The impact of goal specificity on strategy use and the acquisition of problem structure. Cognitive Science 20: 75–100.

- Welter, Marisete Maria, Saskia Jaarsveld, and Thomas Lachmann. 2017. Problem space matters: The development of creativity and intelligence in primary school children. Creativity Research Journal 29: 125–32.

- Wenke, Dorit, Peter A. Frensch, and Joachim Funke. 2005. Complex Problem Solving and intelligence: Empirical relation and causal direction. In Cognition and Intelligence: Identifying the Mechanisms of the Mind. Edited by Robert J. Sternberg and Jean E. Pretz. New York: Cambridge University Press, pp. 160–87.

- Wittmann, Werner W., and Keith Hattrup. 2004. The relationship between performance in dynamic systems and intelligence. Systems Research and Behavioral Science 21: 393–409.

- Wu, Hao, and Gyöngyvér Molnár. 2018. Interactive problem solving: Assessment and relations to combinatorial and inductive reasoning. Journal of Psychological and Educational Research 26: 90–105.

- Wu, Hao, and Gyöngyvér Molnár. 2021. Logfile analyses of successful and unsuccessful strategy use in complex problem-solving: A cross-national comparison study. European Journal of Psychology of Education 36: 1009–32.

- Wu, Hao, Andi Rahmat Saleh, and Gyöngyvér Molnár. 2022. Inductive and combinatorial reasoning in international educational context: Assessment, measurement invariance, and latent mean differences. Asia Pacific Education Review 23: 297–310.

- Wüstenberg, Sascha, Samuel Greiff, and Joachim Funke. 2012. Complex problem solving—More than reasoning? Intelligence 40: 1–14.

- Wüstenberg, Sascha, Samuel Greiff, Gyöngyvér Molnár, and Joachim Funke. 2014. Cross-national gender differences in complex problem solving and their determinants. Learning and Individual Differences 29: 18–29.

| | CPS: Overall | CPS: KAC | CPS: KAP | IR | CR |
|---|---|---|---|---|---|
| Mean (%) | 56.21 | 62.93 | 49.50 | 65.83 | 68.46 |
| S.D. (%) | 22.37 | 26.65 | 22.75 | 15.41 | 20.02 |
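The overall CPS mean in this table equals the unweighted average of the two phase means within rounding, (62.93 + 49.50) / 2 = 56.215, and the same relation holds for each class row of the class-profile table. This suggests, although the extract does not state it, that the overall CPS score was formed as the mean of the KAC and KAP scores. A minimal Python check under that assumption (the helper `phase_average` is a hypothetical name):

```python
def phase_average(kac: float, kap: float) -> float:
    """Unweighted mean of the two CPS phase scores (assumed scoring rule)."""
    return (kac + kap) / 2


# (reported overall CPS, KAC, KAP) means in %, copied from the two tables
ROWS = {
    "Whole sample": (56.21, 62.93, 49.50),
    "Proficient strategy users": (61.37, 69.57, 53.17),
    "Rapid learners": (35.39, 36.65, 34.14),
    "Non-persistent explorers": (27.03, 24.59, 29.47),
    "Non-performing explorers": (22.75, 19.64, 25.86),
}

for label, (overall, kac, kap) in ROWS.items():
    implied = phase_average(kac, kap)
    # Reported values have two decimals, so allow a rounding tolerance of 0.01.
    match = abs(implied - overall) < 0.01
    print(f"{label}: implied {implied:.3f}, reported {overall:.2f}, match={match}")
```

A check like this only shows consistency with the reported means; it cannot confirm how individual scores were computed.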

| Number of Latent Classes | AIC | BIC | aBIC | Entropy | L–M–R Test | p |
|---|---|---|---|---|---|---|
| 2 | 9078 | 9333 | 9177 | 0.987 | 4255 | <0.001 |
| 3 | 8520 | 8905 | 8670 | 0.939 | 604 | <0.001 |
| 4 | 8381 | 8897 | 8582 | 0.959 | 188 | <0.05 |
| 5 | 8339 | 8984 | 8591 | 0.955 | 92 | 0.93 |
| 6 | 8309 | 9084 | 8611 | 0.877 | 96 | 0.34 |
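The fit indices can be read as a class-enumeration decision. BIC and aBIC both reach their minimum at four classes, AIC keeps decreasing up to six classes, and the Lo–Mendell–Rubin (L–M–R) test (Lo, Mendell and Rubin 2001), which compares a k-class model with a (k − 1)-class model, is significant up to four classes but not for five (p = 0.93). This is consistent with retaining the four-class solution described in Section 4.2. The Python sketch below encodes these common heuristics; the helper names and the way the reported p-values ("<0.001", "<0.05") are parsed are illustrative assumptions and not part of any statistical package.

```python
from typing import List, Tuple

# (classes, AIC, BIC, aBIC, entropy, L-M-R p-value as reported in the table)
FITS: List[Tuple[int, int, int, int, float, str]] = [
    (2, 9078, 9333, 9177, 0.987, "<0.001"),
    (3, 8520, 8905, 8670, 0.939, "<0.001"),
    (4, 8381, 8897, 8582, 0.959, "<0.05"),
    (5, 8339, 8984, 8591, 0.955, "0.93"),
    (6, 8309, 9084, 8611, 0.877, "0.34"),
]
AIC, BIC, ABIC = 1, 2, 3  # column positions within each FITS row


def lmr_significant(p_text: str, alpha: float = 0.05) -> bool:
    """Treat a reported bound such as '<0.05' as significant if the bound is <= alpha."""
    if p_text.startswith("<"):
        return float(p_text[1:]) <= alpha
    return float(p_text) < alpha


def select_by_lmr(fits) -> int:
    """Add classes while the L-M-R test (k vs. k-1 classes) stays significant."""
    chosen = 1
    for row in fits:
        if not lmr_significant(row[5]):
            break
        chosen = row[0]
    return chosen


def select_by_min(fits, column: int) -> int:
    """Number of classes with the smallest value of an information criterion."""
    return min(fits, key=lambda row: row[column])[0]


print("L-M-R:", select_by_lmr(FITS))
print("BIC:", select_by_min(FITS, BIC), "aBIC:", select_by_min(FITS, ABIC))
print("AIC:", select_by_min(FITS, AIC))
```

With the reported values, the L–M–R rule, BIC and aBIC all point to four classes, whereas AIC alone would point to six.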

| Class Profiles | Statistic | CPS: Overall | CPS: KAC | CPS: KAP | IR | CR |
|---|---|---|---|---|---|---|
| Proficient strategy users | Mean (%) | 61.37 | 69.57 | 53.17 | 67.79 | 70.47 |
| | S.D. (%) | 19.67 | 22.25 | 21.90 | 14.22 | 18.96 |
| Rapid learners | Mean (%) | 35.39 | 36.65 | 34.14 | 59.23 | 62.67 |
| | S.D. (%) | 14.26 | 20.45 | 17.15 | 14.22 | 17.60 |
| Non-persistent explorers | Mean (%) | 27.03 | 24.59 | 29.47 | 57.29 | 56.11 |
| | S.D. (%) | 10.75 | 14.06 | 11.80 | 18.75 | 24.52 |
| Non-performing explorers | Mean (%) | 22.75 | 19.64 | 25.86 | 50.65 | 53.72 |
| | S.D. (%) | 12.67 | 15.30 | 16.38 | 16.55 | 23.99 |
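Section 4.3 reports that better exploration-strategy users performed better on the reasoning tests. At the level of descriptive means, the class-profile table lists the four classes in the same rank order on overall CPS, IR and CR. The Python sketch below only checks that ordering and prints the gaps between adjacent classes; it is not a significance test, and the IR and CR standard deviations (about 14 to 25 percentage points) are large relative to some adjacent-class gaps, for example 59.23% versus 57.29% on IR. The names `CLASS_MEANS` and `strictly_decreasing` are illustrative.

```python
# Class means (%) copied from the class-profile table, in the order the table lists them.
CLASS_MEANS = {
    "Proficient strategy users": {"CPS": 61.37, "IR": 67.79, "CR": 70.47},
    "Rapid learners": {"CPS": 35.39, "IR": 59.23, "CR": 62.67},
    "Non-persistent explorers": {"CPS": 27.03, "IR": 57.29, "CR": 56.11},
    "Non-performing explorers": {"CPS": 22.75, "IR": 50.65, "CR": 53.72},
}


def strictly_decreasing(values) -> bool:
    """True if every value is larger than the one that follows it."""
    return all(a > b for a, b in zip(values, values[1:]))


for measure in ("CPS", "IR", "CR"):
    # Python dicts preserve insertion order, so this follows the table's row order.
    means = [profile[measure] for profile in CLASS_MEANS.values()]
    gaps = [round(a - b, 2) for a, b in zip(means, means[1:])]
    print(f"{measure}: decreasing={strictly_decreasing(means)}, adjacent gaps={gaps}")
```

Whether such gaps are statistically meaningful depends on the class sizes and the tests reported in the Results, which this check does not reproduce.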

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, H.; Molnár, G. Analysing Complex Problem-Solving Strategies from a Cognitive Perspective: The Role of Thinking Skills. J. Intell. 2022, 10, 46. https://doi.org/10.3390/jintelligence10030046
