Abstract

We study linear-quadratic stochastic optimal control problems with bilinear state dependence where the underlying stochastic differential equation (SDE) has multiscale features. We show that, in the same way in which the underlying dynamics can be well approximated by a reduced-order dynamics in the scale separation limit (using classical homogenization results), the associated optimal expected cost converges to an effective optimal cost in the scale separation limit. This entails that we can approximate the stochastic optimal control for the whole system by a reduced-order stochastic optimal control, which is easier to compute because of the lower dimensionality of the problem. The approach uses an equivalent formulation of the Hamilton-Jacobi-Bellman (HJB) equation, in terms of forward-backward SDEs (FBSDEs). We exploit the efficient solvability of FBSDEs via a least squares Monte Carlo algorithm and show its applicability by a suitable numerical example.

1. Introduction

Stochastic optimal control is an important field of mathematics that has attracted the attention of both pure and applied mathematicians [1,2]. Stochastic control problems also appear in a variety of applications, such as statistics [3,4], financial mathematics [5,6], molecular dynamics [7,8] or materials science [9,10], to mention just a few. For some applications in science and engineering, such as molecular dynamics [8,11], the high dimensionality of the state space is an important challenge when solving optimal control problems. Another issue that arises when optimal control problems are solved by discretising the corresponding dynamic programming equations in space and time is the presence of multiscale effects, which come into play when the state space dynamics exhibits slow and fast motions.

Here we consider such systems that have slow and fast scales and that are possibly high-dimensional. Several techniques have been developed to reduce the spatial dimension of control systems (see e.g., [12,13] and the references therein), but these techniques treat the control as a possibly time-dependent parameter (“open loop control”) and do not take into account that the control may be a feedback control that depends on the state variables (“closed loop control”). Homogenisation techniques for stochastic control systems have, of course, been extensively studied by applied analysts using a variety of different mathematical tools, including viscosity solutions of the Hamilton-Jacobi-Bellman equation [14,15], backward stochastic differential equations [16,17], or occupation measures [18,19]. However, the convergence analysis of multiscale stochastic control systems is quite involved and non-constructive, in that the limiting equations of motion are not given in explicit or closed form, which makes these results of limited practical use; see [20,21] for notable exceptions, dealing mainly with the case when the dynamics is linear.

In general, the elimination of variables and solving control problems do not commute, so one of the key questions in control engineering is under which conditions it is possible to eliminate variables before solving an optimal control problem. We call this the model reduction problem. In this paper, we identify a class of stochastic feedback control problems with bilinear state dependence that admit the elimination of variables (i.e., model reduction) before solving the control problem. These systems turn out to be relevant in the control of high-dimensional transport PDEs, such as Fokker-Planck equations or the evolution equations of open quantum systems [22,23]. The possibility of applying model reduction before solving the corresponding optimal control problem means that it is possible to treat the control in the original equation simply as a parameter. This is in accordance with the general model reduction strategy in control engineering that is motivated by the fact that solving a dimension-reduced control problem, rather than the original one, is numerically much less demanding; see e.g., [12,13] and the references therein. We will show that this strategy, under certain assumptions, yields a good approximation of the high-dimensional optimal control, which implies that the reduced-order optimal control can be used to control the full system's dynamics almost optimally.

Our approach is based on a Donsker-Varadhan type duality principle between a linear Feynman-Kac PDE and the semi-linear dynamic programming PDE associated with a stochastic control problem [24]. Here we exploit the fact that the dynamic programming PDE can be recast as an uncoupled forward-backward stochastic differential equation (see e.g., [25,26]) that can be treated by model reduction techniques, such as averaging or homogenization. The relation between semilinear PDEs of Hamilton-Jacobi-Bellman type and forward-backward stochastic differential equations (FBSDEs) is a classical subject that was first studied by Pardoux and Peng [27] and has since received a lot of attention from various sides, e.g., [28,29,30,31,32,33]. The solution theory for FBSDEs has its roots in the work of Antonelli [34] and has been extended in various directions since then; see e.g., [35,36,37,38]. From a theoretical point of view, this paper goes beyond our previous works [24,39] in that we prove strong convergence of the value function and the control without relying on compactness or periodicity assumptions for the fast variables, even though we focus on bilinear systems only, which is the weakest form of nonlinearity. (Many nonlinear systems, however, can be represented as bilinear systems by a so-called Carleman linearisation.) It also goes beyond the classical works [20,21] that treat systems that are either fully linear or linear in the fast variables. We stress that we are mainly aiming at the model reduction problem, but alongside the theoretical results we discuss some ideas to discretise the corresponding FBSDE [40,41,42,43,44], since one of the main motivations for doing model reduction is to reduce the numerical complexity of solving optimal control problems.

1.1. Set-Up and Problem Statement

We briefly discuss the technical set-up of the control problem considered in this paper, namely the linear-quadratic (LQ) stochastic control problem of the following form: minimise the expected cost

over all admissible controls and subject to:

Here is a bounded stopping time (specified below), and the set of admissible controls is chosen such that (2) has a unique strong solution. The denomination linear-quadratic for (1)–(2) is due to the specific dependence of the system on the control variable u. The state vector is assumed to be high-dimensional, which is why we seek a low-dimensional approximation of (1)–(2).

Specifically, we consider the case that and are quadratic in x, a is linear and is constant, and the control term is an affine function of x, i.e.,

In this case, the system is called bilinear (including linear systems as a special case), and the aim is to replace (2) by a lower dimensional bilinear system

with states , and an associated reduced cost functional

that is solved instead of (1)–(2). Letting denote the minimizer of , we require that is a good approximation of the minimiser of the original problem where “good approximation” is understood in the sense that

It is a priori not clear how the symbol “≈” in the last equation must be interpreted, e.g., pointwise for all initial data for some , or uniformly on all compact subsets of .

One situation in which the above approximation property holds is when uniformly in t and the cost is continuous in the control, but it turns out that this requirement is overly restrictive in general. We will discuss alternative criteria in the course of this paper.

1.2. Outline

The paper is organised as follows: In Section 2 we introduce the bilinear stochastic control problem studied in this paper and derive the corresponding forward-backward stochastic differential equation (FBSDE). Section 3 contains the main result, a convergence result for the value function of a singularly perturbed control problem with bilinear state dependence, based on an FBSDE formulation. In Section 4 we present a numerical example to illustrate the theoretical findings and discuss the numerical discretisation of the FBSDE. The article concludes in Section 5 with a short summary and a discussion of future work. The proof of the main result and some technical lemmas are recorded in Appendix A.

2. Singularly Perturbed Bilinear Control Systems

We now specify the system dynamics (2) and the corresponding cost functional (1). Let with denote a decomposition of the state vector into relevant (slow) and irrelevant (fast) components. Further let denote a -valued Brownian motion on a probability space that is endowed with the filtration generated by W. For any initial condition and any -valued admissible control , with , we consider the following system of Itô stochastic differential equations

that depends parametrically on a parameter via the coefficients

where for brevity we also drop the dependence of the process on the control u, i.e., . The stiffness matrix A in (3) is assumed to be of the form

with . Control and noise coefficients are given by

and

where for all ; often we will consider either the case with , , or , with C being a multiple of the identity when . All block matrices , and are assumed to be order 1 and independent of .

The above -scaling of the coefficients is natural for a system with slow and fast degrees of freedom and arises, for example, as a result of a balancing transformation applied to a large-scale system of equations; see e.g., [22,45]. A special case of (3) is the linear system

Our goal is to control the stochastic dynamics (3)—or (7) as a special variant—so that a given cost criterion is optimised. Specifically, given two symmetric positive semidefinite matrices , we consider the quadratic cost functional

that we seek to minimise subject to the dynamics (3). Here the expectation is understood as an expectation over all realisations of starting at , and as a consequence J is a function of the initial data . The stopping time is defined as the minimum of some time and the first exit time of a domain where is an open and bounded set with smooth boundary. Specifically, we set , with

In other words, is the stopping time that is defined by the event that either or leaves the set , whichever comes first. Please note that the cost function does not explicitly depend on the fast variables . We define the corresponding value function by

Remark 1.

As a consequence of the boundedness of , we may assume that all coefficients in our control problem are bounded or Lipschitz continuous, which makes some of the proofs in the paper more transparent.

We further note that all of the following considerations trivially carry over to the case and a multi-dimensional control variable, i.e., and .

From Stochastic Control to Forward-Backward Stochastic Differential Equations

We suppose that the matrix pair satisfies the Kalman rank condition

A necessary—and in this case sufficient—condition for optimality of our control problem is that the value function (9) solves a semilinear parabolic partial differential equation of Hamilton-Jacobi-Bellman type (a.k.a. dynamic programming equation) [46]

where

and is the terminal set of the augmented process , precisely . Here is the infinitesimal generator of the control-free process,

and the nonlinearity f is given by

Please note that f is independent of y where denotes the Moore-Penrose pseudoinverse that is unambiguously defined since and , which by noting that is the orthogonal projection onto implies that

The specific semilinear form of the equation is a consequence of the control problem being linear-quadratic. As a consequence, the dynamic programming Equation (11) admits a representation in form of an uncoupled forward-backward stochastic differential equation (FBSDE). To appreciate this point, consider the control-free process with infinitesimal generator and define an adapted process by

(We abuse notation and denote both the controlled and the uncontrolled process by .) Then, by definition, . Moreover, by Itô’s formula and the dynamic programming Equation (11), the pair can be shown to solve the system of equations

with being the control variable. Here, the second equation is only meaningful if interpreted as a backward equation, since only in this case is uniquely defined. To see this, let and and note that the ansatz (14) implies that is adapted to the filtration generated by the forward process . If the second equation was just a time-reversed SDE then would be the unique solution to the SDE with terminal condition . However, such a solution would not be adapted, because for would depend on the future value of the forward process.

Remark 2.

Equation (15) is called an uncoupled FBSDE because the forward equation for is independent of or . The fact that the FBSDE is uncoupled furnishes a well-known duality relation between the value function of an LQ optimal control problem and the cumulant generating function of the cost [47,48]; specifically, in the case that , and the pair being completely controllable, it holds that

with

Here the expectation on the right hand side is taken over all realisations of the control-free process , starting at . By the Feynman-Kac theorem, the function solves the linear parabolic boundary value problem

which is equivalent to the corresponding dynamic programming Equation (11).

3. Model Reduction

The idea is to exploit the fact that (15) is uncoupled, which allows us to derive an FBSDE for the slow variables only, by standard singular perturbation methods. The reduced FBSDE as will then be of the form

where the limiting form of the backward SDE follows from the corresponding properties of the forward SDE. Specifically, assuming that the solution of the associated SDE

that is governing the fast dynamics as , is ergodic with unique Gaussian invariant measure , where is the unique solution to the Lyapunov equation

we obtain that, asymptotically as ,

As a consequence, the limiting SDE governing the evolution of the slow process —in other words: the forward part of (18)—has the coefficients

which follows from standard homogenisation arguments [49]; a formal derivation is given in the Appendix A. By a similar reasoning we find that the driver of the limiting backward SDE reads

specifically,

with

The limiting backward SDE is equipped with a terminal condition that equals , namely,

3.1. Interpretation as an Optimal Control Problem

It is possible to interpret the reduced FBSDE again as the probabilistic version of a dynamic programming equation. To this end, note that (10) implies that the matrix pair satisfies the Kalman rank condition [50]

As a consequence, the semilinear partial differential equation

with and

has a classical solution . Letting , , with initial data and , the limiting FBSDE (18) can be readily seen to be equivalent to (27). The latter is the dynamic programming equation of the following LQ optimal control problem: minimise the cost functional

subject to

where denotes standard Brownian motion in and we have introduced the new control coefficients and .

3.2. Convergence of the Control Value

Before we state our main result and discuss its implications for the model reduction of linear and bilinear systems, we recall the basic assumptions that we impose on the system dynamics. Specifically, we say that the dynamics (3) and the corresponding cost functional (8) satisfy Condition LQ if the following holds:

- is controllable, and the range of is a subspace of .

- The matrix is Hurwitz (i.e., its spectrum lies entirely in the open left complex half-plane) and the matrix pair is controllable.

- The driver of the FBSDE (15) is continuous and quadratically growing in Z.

Assumption 2 implies that the fast subsystem (19) has a unique Gaussian invariant measure with full topological support, i.e., we have . According to ([51], Prop. 3.1) and [33], existence and uniqueness of a solution to (15) is guaranteed by Assumptions 3 and 4 and the controllability of and the range condition, which imply that the transition probability densities of the (controlled or uncontrolled) forward process are smooth and strictly positive. As a consequence of the complete controllability of the original system, the reduced system (30) is completely controllable too, which guarantees existence and uniqueness of a classical solution of the limiting dynamic programming Equation (27); see, e.g., [52].
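The structural assumptions above can be checked numerically for given matrices. The following minimal Python sketch is an illustration only and not part of the method of this paper: the function names, the Kronecker-product solver and the two-dimensional test matrices (a damped oscillator with noise acting on the momentum) are assumptions made for this example. It tests the Hurwitz property, evaluates the Kalman rank, and computes the covariance S of the Gaussian invariant measure of a linear SDE dY = A Y dt + C dW as the solution of the Lyapunov equation A S + S A^T = -C C^T.

```python
import numpy as np

def is_hurwitz(A):
    """True if all eigenvalues of A have strictly negative real part."""
    return bool(np.all(np.linalg.eigvals(A).real < 0.0))

def kalman_rank(A, B):
    """Rank of the controllability matrix [B, AB, ..., A^(n-1) B]."""
    n = A.shape[0]
    blocks = [B]
    for _ in range(n - 1):
        blocks.append(A @ blocks[-1])
    return int(np.linalg.matrix_rank(np.hstack(blocks)))

def stationary_covariance(A, C):
    """Solve A S + S A^T = -C C^T by vectorisation with Kronecker products.

    The linear system is uniquely solvable when A is Hurwitz."""
    n = A.shape[0]
    I = np.eye(n)
    lhs = np.kron(A, I) + np.kron(I, A)
    rhs = -(C @ C.T).reshape(-1)
    return np.linalg.solve(lhs, rhs).reshape(n, n)

# Hypothetical test case (not taken from the paper): damped oscillator
# dq = p dt, dp = (-q - p) dt + sqrt(2) dW.
A = np.array([[0.0, 1.0], [-1.0, -1.0]])
C = np.array([[0.0], [np.sqrt(2.0)]])

print("Hurwitz:", is_hurwitz(A))
print("Kalman rank:", kalman_rank(A, C))
print("stationary covariance:\n", stationary_covariance(A, C))
```

For larger systems the dense Kronecker system becomes expensive, and a dedicated Lyapunov solver would be the more natural choice; the vectorised form is used here only to keep the sketch dependent on NumPy alone.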

Uniform convergence of the value function is now entailed by the strong convergence of the solution to the corresponding FBSDE as is expressed by the following Theorem.

Theorem 1.

The proof of the Theorem is given in Appendix A.2. For the reader’s convenience, we present a formal derivation of the limit equation in the next subsection.

3.3. Formal Derivation of the Limiting FBSDE

Our derivation of the limit FBSDE follows standard homogenisation arguments (see [49,53,54]), taking advantage of the fact that the FBSDE is uncoupled. To this end we consider the following linear evolution equation

for a function where

with

is the generator associated with the control-free forward process in (15). We follow the standard procedure of [49] and consider the perturbative expansion

that we insert into the Kolmogorov Equation (31). Equating different powers of we find a hierarchy of equations, the first three of which read

Assumption 2 of Condition LQ implies that has a one-dimensional nullspace that is spanned by functions that are constant in , and thus the first of the three equations implies that is independent of . Hence the second equation, the cell problem, reads

The last equation has a solution by the Fredholm alternative, since the right hand side averages to zero under the invariant measure of the fast dynamics that is generated by the operator , in other words, the right hand side of the linear equation is orthogonal to the nullspace of spanned by the density of . Here is the formal adjoint of the operator , defined on a suitable dense subspace of . The form of the equation suggests the general ansatz

where the function R plays no role in what follows, so we set it equal to zero. Since , the function must be of the form with a matrix . Hence

Now, solvability of the last of the three equations of (36) requires again that the right hand side averages to zero under , i.e.,

which formally yields the limiting equation for . Since is a Gaussian measure with mean 0 and covariance given by (20), the integral (38) can be explicitly computed:

where is given by (28) and the initial condition is a consequence of the fact that the initial condition in (31) is independent of . By the controllability of the pair , the limiting Equation (39) has a unique classical solution and uniform convergence is guaranteed by standard results, e.g., ([49], Thm. 20.1).

4. Numerical Studies

In this section, we present numerical results for linear and bilinear control systems and discuss the numerical discretisation of uncoupled FBSDEs associated with LQ stochastic control problems. We begin with the latter.

4.1. Numerical FBSDE Discretisation

The fact that (15) or (18) are decoupled entails that they can be discretised by an explicit time-stepping algorithm. Here we utilise a variant of the least-squares Monte Carlo algorithm proposed in [41]; see also [55]. The convergence of numerical schemes for FBSDE with quadratic nonlinearities in the driver has been analysed in [56].

The least-squares Monte Carlo scheme is based on the Euler discretisation of (15):

where denotes the numerical discretisation of the joint process , where we set for when , and is an i.i.d. sequence of normalised Gaussian random variables. Now let

be the -algebra generated by the discrete Brownian motion . By definition the joint process is adapted to the filtration generated by , therefore

where we have used that is independent of . To compute from we use the identification of with and replace in (41) by

and the parametric ansatz (44) for makes the overall scheme explicit in and .
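To make the forward part of such a time-stepping scheme concrete, the following minimal Python sketch applies the Euler (Euler-Maruyama) discretisation to a generic linear SDE dX = A X dt + C dW. It is a sketch under stated assumptions rather than the scheme used in this paper: the function name, the array layout and the omission of the stopping time and of the backward part are all choices made for this illustration.

```python
import numpy as np

def euler_maruyama_paths(A, C, x0, T, N, M, rng):
    """Simulate M paths of dX = A X dt + C dW on [0, T] with N Euler steps.

    A: (d, d) drift matrix, C: (d, m) noise matrix, x0: (d,) initial value.
    Returns an array of shape (N + 1, M, d)."""
    d, m = C.shape
    dt = T / N
    X = np.empty((N + 1, M, d))
    X[0] = x0  # broadcast the common initial value to all M paths
    for n in range(N):
        xi = rng.standard_normal((M, m))  # i.i.d. standard normal increments
        X[n + 1] = X[n] + dt * (X[n] @ A.T) + np.sqrt(dt) * (xi @ C.T)
    return X

# Hypothetical one-dimensional Ornstein-Uhlenbeck example.
rng = np.random.default_rng(0)
paths = euler_maruyama_paths(np.array([[-1.0]]), np.array([[1.0]]),
                             np.array([1.0]), T=1.0, N=200, M=1000, rng=rng)
print("sample mean at final time:", paths[-1].mean())
```

Because the forward equation does not depend on the backward variables, all forward paths can be generated once and stored before the backward iteration starts.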

4.2. Least-Squares Solution of the Backward SDE

To evaluate the conditional expectation we recall that a conditional expectation can be characterised as the solution to the following quadratic minimisation problem:

Given M independent realisations , of the forward process , this suggests the approximation scheme

where is defined by with terminal values

(Please note that is random.) For simplicity, we assume in what follows that the terminal value is zero, i.e., we set . (Recall that the existence and uniqueness result from [33] requires to be bounded.) To represent as a function we use the ansatz

with coefficients and suitable basis functions (e.g., Gaussians). Please note that the coefficients are the unknowns in the least-squares problem (43) and thus are independent of the realisation. Now the least-squares problem that has to be solved in the n-th step of the backward iteration is of the form

with coefficients

and data

Assuming that the coefficient matrix , defined by (46) has maximum rank K, then the solution to the least-squares problem (45) is given by

The thus defined scheme is strongly convergent of order 1/2 as and as has been analysed by [41]. Controlling the approximation quality for finite values , however, requires a careful adjustment of the simulation parameters and appropriate basis functions, especially with regard to the condition number of the matrix .
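The regression step at the heart of this procedure can be illustrated on a toy problem. The following Python sketch is an assumption-laden illustration, not the algorithm of [41]: it estimates a conditional expectation E[y | x] from samples by least squares over a span of Gaussian basis functions, where the target function x^2, the noise level, the number of centres and the width are all hypothetical choices. The solver `numpy.linalg.lstsq` is used instead of forming the normal equations explicitly, which is less sensitive to an ill-conditioned coefficient matrix.

```python
import numpy as np

def gaussian_basis(x, centres, width):
    """Evaluate K Gaussian bumps at M scalar points; returns an (M, K) matrix."""
    return np.exp(-0.5 * ((x[:, None] - centres[None, :]) / width) ** 2)

def regress_conditional_expectation(x, y, centres, width):
    """Least-squares coefficients alpha with sum_k alpha_k phi_k(x) ~ E[y | x]."""
    Phi = gaussian_basis(x, centres, width)
    alpha, *_ = np.linalg.lstsq(Phi, y, rcond=None)
    return alpha

# Synthetic data (hypothetical): E[y | x] = x^2 by construction.
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=20000)
y = x**2 + 0.1 * rng.standard_normal(x.size)

centres = np.linspace(-1.0, 1.0, 9)
alpha = regress_conditional_expectation(x, y, centres, width=0.3)

x_test = np.linspace(-0.8, 0.8, 5)
approx = gaussian_basis(x_test, centres, 0.3) @ alpha
print("max deviation from x^2:", np.max(np.abs(approx - x_test**2)))
```

In a backward iteration, the role of y would be played by the quantity whose conditional expectation is needed at the current time step, and the coefficients would be recomputed at every step.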

4.3. Numerical Example

Illustrating our theoretical findings of Theorem 1, we consider a linear system of form (7) where the matrices and C are given by

and

This is an instance of a controlled Langevin equation with friction and noise coefficients which are assumed to fulfil the fluctuation-dissipation relation

In the example we let and . The quadratic cost functional (8) is determined by the running cost via and we apply no terminal cost, i.e.,

We apply the previously described FBSDE scheme (40), (44)–(48), which was shown to yield good results in [57], to both the full and the reduced system, and we choose , i.e., the full system is six dimensional. To this end we choose the basis functions

where is fixed but changes in each timestep such that the basis follows the forward process. For this, we simulate K additional forward trajectories and set .
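A possible realisation of a basis that follows the forward process is sketched below in Python. The Gaussian shape, the fixed width, the dimensions and the use of random draws in place of simulated trajectories are assumptions made for this illustration; only the idea of taking the centres at a given time step from K auxiliary trajectories is taken from the description above. The sketch also reports the numerical rank and the condition number of the resulting coefficient matrix, since both matter for the least-squares step.

```python
import numpy as np

def moving_gaussian_basis(X_n, centres_n, width):
    """Gaussian basis with centres that move with the forward process.

    X_n: (M, d) positions of the M sample paths at time step n.
    centres_n: (K, d) positions of K auxiliary paths at the same step.
    Returns the (M, K) coefficient matrix."""
    diff = X_n[:, None, :] - centres_n[None, :, :]
    sq_dist = np.sum(diff**2, axis=2)
    return np.exp(-0.5 * sq_dist / width**2)

# Stand-in data (hypothetical): random draws replace simulated trajectories.
rng = np.random.default_rng(1)
M, K, d = 500, 9, 3
X_n = rng.standard_normal((M, d))
centres_n = rng.standard_normal((K, d))

Phi = moving_gaussian_basis(X_n, centres_n, width=1.0)
print("shape:", Phi.shape)
print("numerical rank:", np.linalg.matrix_rank(Phi))
print("condition number:", np.linalg.cond(Phi))
```

If two auxiliary trajectories come very close to each other, the corresponding columns become nearly identical and the condition number grows, which is one way in which rank deficiency can arise in practice.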

We choose the parameters for the numerics as follows. The number of basis functions K is given by for the reduced system and for the full system. We choose these values because the maximally observed rank of the coefficient matrices defined in (46) is 9 for the reduced system and they should not be rank deficient. For the full system we could have used a greater value for K, but we want to keep the computational effort reasonable. Further, we choose , the final time and the number of realisations

We let the whole algorithm run five times and compute the distance between the value functions of the full and reduced systems

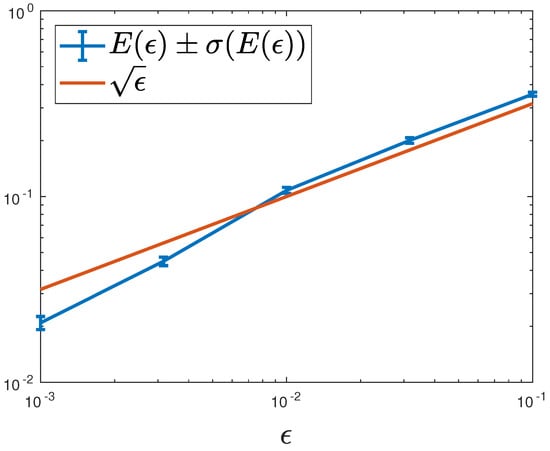

for which convergence of order was found in the proof of Theorem 1. Indeed, we observe convergence of order in our numerical example as can be seen in Figure 1 where we depict the mean and standard deviation of .

Figure 1.

Plot of the mean of its standard deviation and for comparison we plot against on a doubly logarithmic scale: we observe convergence of order as predicted by the theory.
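An empirical convergence order can be read off a doubly logarithmic plot as the slope of a straight-line fit. The following Python sketch shows one way to do this; the function name and the synthetic data are assumptions, and the exponent 0.5 used to generate the data is an arbitrary choice for illustration, not a value taken from the numerical experiment.

```python
import numpy as np

def empirical_order(eps, err):
    """Slope of the least-squares line through (log eps, log err)."""
    slope, _intercept = np.polyfit(np.log(eps), np.log(err), 1)
    return slope

# Synthetic errors following an exact power law err = 2 * eps^0.5 (hypothetical).
eps = np.array([0.1, 0.05, 0.025, 0.0125])
err = 2.0 * eps**0.5
print("estimated order:", empirical_order(eps, err))
```

With noisy Monte Carlo estimates the fit would be applied to the sample means, and the spread over repeated runs gives an indication of how reliable the estimated slope is.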

4.4. Discussion

We shall now discuss the implications of the above simple example when it comes to more complicated dynamical systems. As a general remark, the results show that it is possible to apply model reduction before solving the corresponding optimal control problem where the control variable in the original equation can simply be treated as a parameter. This is in accordance with the general model reduction strategy in control engineering; see e.g., [12,13] and the references therein. Our results not only guarantee convergence of the value function via convergence of , but they also imply strong convergence of the optimal control, by the convergence of the control process in . (See Appendix A for details.) This means that in the case of a system with time scale separation, our result is of practical value since we can resort to the reduced system for finding the optimal control, which can then be applied to the full system's dynamics.

We stress that our results carry over to fully nonlinear stochastic control problems which have a similar LQ structure [24]. Clearly, for realistic (i.e., high-dimensional or nonlinear) systems the identification of a small parameter remains challenging, and one has to resort to e.g., semi-empirical approaches, such as [58,59].

If the dynamics is linear, as is the case here, small parameters may be identified using system theoretic arguments based on balancing transformations (see, e.g., [22,45]). These approaches require that the dynamics is either linear or bilinear in the state variables, but the aforementioned duality for the quasi-linear dynamic programming equation can be used here as well in order to change the drift of the forward SDE from some nonlinear vector field b to a linear vector field, say, . Assuming that the noise coefficient C is square and invertible and ignoring and the boundary condition for the moment, it is easy to see that the dynamic programming PDE (11) can be recast as

Here

is the generator of a forward SDE with nonlinear drift b, and

is the driver of the corresponding backward SDE. Even though the change of drift is somewhat arbitrary, it shows that by changing the driver in the backward SDE it is possible to reduce the control problem to one with linear drift that falls within the category that is considered in this paper, at the expense of having a possibly non-quadratic cost functional.

Remark 3.

Changing the drift may be advantageous in connection with the numerical FBSDE solver. In the martingale basis approach of Bender and Steiner [41], the authors have suggested to use basis functions that are defined as conditional expectations of certain linearly independent candidate functions over the forward process, which makes the basis functions martingales. Computing the martingale basis, however, comes with a large computational overhead, which is why the authors consider only cases in which the conditional expectations can be computed analytically. Changing the drift of the forward SDE may thus be used to simplify the forward dynamics so that its distribution becomes analytically tractable.

5. Conclusions and Outlook

We have given a proof of concept that model reduction methods for singularly perturbed bilinear control systems can be applied to the dynamics before solving the corresponding optimal control problem. The key idea here was to exploit the equivalence between the semi-linear dynamic programming PDE corresponding to our stochastic optimal control problem and a singularly perturbed forward-backward SDE which is decoupled. Using this equivalence, we could derive a reduced-order FBSDE which was then interpreted as the representation of a reduced-order stochastic control problem. We have proved uniform convergence of the corresponding value function and, as an auxiliary result, obtained a strong convergence result for the optimal control. As we have argued, the latter implies that the optimal control computed from the reduced system can be used to control the original dynamics.

We have presented a numerical example to illustrate our findings and discussed the numerical discretisation of uncoupled FBSDEs, based on the computation of conditional expectations. For the latter, the choice of the basis functions played an essential role, and how to cleverly choose the ansatz functions, possibly exploiting that the forward SDE has an explicit solution (see e.g., [41]) is an important aspect that future research ought to address, especially with regard to high dimensional problems.

Another class of important problems not considered in this article are slow-fast systems with vanishing noise. The natural question here is how the limit equation depends on the order in which noise and time scale parameters go to zero. This question has important consequences for the associated deterministic control problem and its regularisation by noise. We leave this topic for future work.

Author Contributions

All authors have contributed equally to this work.

Funding

This research has been partially funded by Deutsche Forschungsgemeinschaft (DFG) through the Grant CRC 1114 “Scaling Cascades in Complex Systems”, Project A05 “Probing scales in equilibrated systems by optimal nonequilibrium forcing”. Omar Kebiri acknowledges funding from the EU-METALIC II Programme.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proofs and Technical Lemmas

The idea of the proof of Theorem 1 closely follows the work [60], with the main differences being (a) that we consider slow-fast systems exhibiting three time scales, in particular the slow equation contains singular terms, and (b) that the coefficients of the fast dynamics are not periodic, with the fast process being asymptotically Gaussian as ; in particular the -dimensional fast process lives on the unbounded domain .

Appendix A.1. Poisson Equation Lemma

Theorem 1 rests on the following Lemma that is similar to a result in [61].

Lemma A1.

Suppose that the assumptions of Condition LQ hold and define to be a function of the class . Further assume that h is centred with respect to the invariant measure π of the fast process. Then for every and initial conditions , , we have

Proof.

We remind the reader of the definition (33)–(35) of the differential operators and , and consider the Poisson equation

on the domain . (The variables and are considered as parameters.) Since h is centred with respect to , Equation (A2) has a solution by the Fredholm alternative. By Assumption 2 is a hypoelliptic operator in and thus by ([62], Thm. 2), the Poisson Equation (A2) has a unique solution that is smooth and bounded. Applying Itô’s formula to and introducing the shorthand yields

where and are square integrable martingales with respect to the natural filtration generated by the Brownian motion :

By the properties of the solution to (A2) the first three integrals on the right hand side are uniformly bounded in u and v, and thus

By the Itô isometry and the boundedness of the derivatives and , the martingale term can be bounded by

Hence

with a generic constant that is independent of and . ☐

Appendix A.2. Convergence of the Value Function

Lemma A2.

Proof.

The idea of the proof is to apply Itô’s formula to , where satisfies the backward SDE

where

and

with

We set for when . Then, by construction, , is centred with respect to and bounded (since the running cost is independent of ), therefore Lemma A1 implies that

The second contribution to the driver can be recast as , with and as given by (12) and (28) and thus, as ,

by the functional central limit theorem for diffusions with Lipschitz coefficients [53]; cf. also Section 3.3. As a consequence of (A6) and (A7), we have in , which, since , implies strong convergence of the solution of the corresponding backward SDE in .

Specifically, since is bounded , Itô’s formula applied to , yields after an application of Gronwall’s Lemma:

where the Lipschitz constant is independent of and finite for every compact subset by the boundedness of (since V is a classical solution and is bounded). Hence uniformly for , and by setting , we obtain

for a constant . ☐

This proves Theorem 1.

References

1. Fleming, W.H.; Soner, H.M. Controlled Markov Processes and Viscosity Solutions, 2nd ed.; Springer: New York, NY, USA, 2006.

2. Stengel, R.F. Optimal Control and Estimation; Dover Books on Advanced Mathematics; Dover Publications: New York, NY, USA, 1994.

3. Dupuis, P.; Spiliopoulos, K.; Wang, H. Importance sampling for multiscale diffusions. Multiscale Model. Simul. 2012, 10, 1–27.

4. Dupuis, P.; Wang, H. Importance sampling, large deviations, and differential games. Stoch. Stoch. Rep. 2004, 76, 481–508.

5. Davis, M.H.; Norman, A.R. Portfolio selection with transaction costs. Math. Oper. Res. 1990, 15, 676–713.

6. Pham, H. Continuous-Time Stochastic Control and Optimization with Financial Applications; Springer: Berlin/Heidelberg, Germany, 2009.

7. Hartmann, C.; Schütte, C. Efficient rare event simulation by optimal nonequilibrium forcing. J. Stat. Mech. Theor. Exp. 2012, 2012, 11004.

8. Schütte, C.; Winkelmann, S.; Hartmann, C. Optimal control of molecular dynamics using Markov state models. Math. Program. Ser. B 2012, 134, 259–282.

9. Asplund, E.; Klüner, T. Optimal control of open quantum systems applied to the photochemistry of surfaces. Phys. Rev. Lett. 2011, 106, 140404.

10. Steinbrecher, A. Optimal Control of Robot Guided Laser Material Treatment. In Progress in Industrial Mathematics at ECMI 2008; Fitt, A.D., Norbury, J., Ockendon, H., Wilson, E., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 501–511.

11. Zhang, W.; Wang, H.; Hartmann, C.; Weber, M.; Schütte, C. Applications of the cross-entropy method to importance sampling and optimal control of diffusions. SIAM J. Sci. Comput. 2014, 36, A2654–A2672.

12. Antoulas, A.C. Approximation of Large-Scale Dynamical Systems; SIAM: Philadelphia, PA, USA, 2005.

13. Baur, U.; Benner, P.; Feng, L. Model order reduction for linear and nonlinear systems: A system-theoretic perspective. Arch. Comput. Meth. Eng. 2014, 21, 331–358.

14. Bensoussan, A.; Blankenship, G. Singular perturbations in stochastic control. In Singular Perturbations and Asymptotic Analysis in Control Systems; Lecture Notes in Control and Information Sciences; Kokotovic, P.V., Bensoussan, A., Blankenship, G.L., Eds.; Springer: Berlin/Heidelberg, Germany, 1987; Volume 90, pp. 171–260.

15. Evans, L.C. The perturbed test function method for viscosity solutions of nonlinear PDE. Proc. R. Soc. Edinb. A 1989, 111, 359–375.

16. Buckdahn, R.; Hu, Y. Probabilistic approach to homogenizations of systems of quasilinear parabolic PDEs with periodic structures. Nonlinear Anal. 1998, 32, 609–619.

17. Ichihara, N. A stochastic representation for fully nonlinear PDEs and its application to homogenization. J. Math. Sci. Univ. Tokyo 2005, 12, 467–492.

18. Kushner, H.J. Weak Convergence Methods and Singularly Perturbed Stochastic Control and Filtering Problems; Birkhäuser: Boston, MA, USA, 1990.

19. Kurtz, T.; Stockbridge, R.H. Stationary solutions and forward equations for controlled and singular martingale problems. Electron. J. Probab. 2001, 6, 5.

20. Kabanov, Y.; Pergamenshchikov, S. Two-Scale Stochastic Systems: Asymptotic Analysis and Control; Springer: Berlin/Heidelberg, Germany; Paris, France, 2003.

21. Kokotovic, P.V. Applications of singular perturbation techniques to control problems. SIAM Rev. 1984, 26, 501–550.

22. Hartmann, C.; Schäfer-Bung, B.; Zueva, A. Balanced averaging of bilinear systems with applications to stochastic control. SIAM J. Control Optim. 2013, 51, 2356–2378.

23. Pardalos, P.M.; Yatsenko, V.A. Optimization and Control of Bilinear Systems: Theory, Algorithms, and Applications; Springer: New York, NY, USA, 2010.

24. Hartmann, C.; Latorre, J.; Pavliotis, G.A.; Zhang, W. Optimal control of multiscale systems using reduced-order models. J. Comput. Dyn. 2014, 1, 279–306.

25. Peng, S. Backward Stochastic Differential Equations and Applications to Optimal Control. Appl. Math. Optim. 1993, 27, 125–144.

26. Touzi, N. Optimal Stochastic Control, Stochastic Target Problem, and Backward Differential Equation; Springer: Berlin, Germany, 2013.

27. Pardoux, E.; Peng, S. Adapted solution of a backward stochastic differential equation. Syst. Control Lett. 1990, 14, 55–61.

28. Bahlali, K.; Kebiri, O.; Khelfallah, N.; Moussaoui, H. One dimensional BSDEs with logarithmic growth application to PDEs. Stochastics 2017, 89, 1061–1081.

29. Duffie, D.; Epstein, L.G. Stochastic differential utility. Econometrica 1992, 60, 353–394.

30. El Karoui, N.; Peng, S.; Quenez, M.C. Backward stochastic differential equations in finance. Math. Financ. 1997, 7, 1–71.

31. Hu, Y.; Imkeller, P.; Müller, M. Utility maximization in incomplete markets. Ann. Appl. Probab. 2005, 15, 1691–1712.

32. Hu, Y.; Peng, S. A stability theorem of backward stochastic differential equations and its application. Acad. Sci. Math. 1997, 324, 1059–1064.

33. Kobylanski, M. Backward stochastic differential equations and partial differential equations with quadratic growth. Ann. Probab. 2000, 28, 558–602.

34. Antonelli, F. Backward-forward stochastic differential equations. Ann. Appl. Probab. 1993, 3, 777–793.

35. Bahlali, K.; Gherbal, B.; Mezerdi, B. Existence of optimal controls for systems driven by FBSDEs. Syst. Control Lett. 1995, 60, 344–349.

36. Bahlali, K.; Kebiri, O.; Mtiraoui, A. Existence of an optimal Control for a system driven by a degenerate coupled Forward-Backward Stochastic Differential Equations. Comptes Rendus Math. 2017, 355, 84–89.

37. Ma, J.; Protter, P.; Yong, J. Solving Forward-Backward Stochastic Differential Equations Explicitly - A Four Step Scheme. Probab. Theory Relat. Fields 1994, 98, 339–359.

38. Zhen, W. Forward-backward stochastic differential equations, linear quadratic stochastic optimal control and nonzero sum differential games. J. Syst. Sci. Complex. 2005, 18, 179–192.

39. Hartmann, C.; Schütte, C.; Weber, M.; Zhang, W. Importance sampling in path space for diffusion processes with slow-fast variables. Probab. Theory Relat. Fields 2017, 170, 177–228.

40. Bally, V. Approximation scheme for solutions of BSDE. In Backward Stochastic Differential Equations; El Karoui, N., Mazliak, L., Eds.; Addison Wesley Longman: Boston, MA, USA, 1997; pp. 177–191.

41. Bender, C.; Steiner, J. Least-Squares Monte Carlo for BSDEs. In Numerical Methods in Finance; Springer: Berlin, Germany, 2012; pp. 257–289.

42. Bouchard, B.; Elie, R.; Touzi, N. Discrete-time approximation of BSDEs and probabilistic schemes for fully nonlinear PDEs. Comput. Appl. Math. 2009, 8, 91–124.

43. Chevance, D. Numerical methods for backward stochastic differential equations. In Numerical Methods in Finance; Publications of the Newton Institute, Cambridge University Press: Cambridge, UK, 1997; pp. 232–244.

44. Hyndman, C.B.; Ngou, P.O. A Convolution Method for Numerical Solution of Backward Stochastic Differential Equations. Methodol. Comput. Appl. Probab. 2017, 19, 1–29.

45. Hartmann, C. Balanced model reduction of partially-observed Langevin equations: An averaging principle. Math. Comput. Model. Dyn. Syst. 2011, 17, 463–490.

46. Fleming, W.H. Optimal investment models with minimum consumption criteria. Aust. Econ. Pap. 2005, 44, 307–321.

47. Budhiraja, A.; Dupuis, P. A variational representation for positive functionals of infinite dimensional Brownian motion. Probab. Math. Stat. 2000, 20, 39–61.

48. Dai Pra, P.; Meneghini, L.; Runggaldier, J.W. Connections between stochastic control and dynamic games. Math. Control Signal Syst. 1996, 9, 303–326.

49. Pavliotis, G.A.; Stuart, A.M. Multiscale Methods: Averaging and Homogenization; Springer: Berlin/Heidelberg, Germany, 2008.

50. Anderson, B.D.O.; Liu, Y. Controller reduction: Concepts and approaches. IEEE Trans. Autom. Control 1989, 34, 802–812.

51. Bensoussan, A.; Boccardo, L.; Murat, F. Homogenization of elliptic equations with principal part not in divergence form and hamiltonian with quadratic growth. Commun. Pure Appl. Math. 1986, 39, 769–805.

52. Pardoux, E.; Peng, S. Backward stochastic differential equations and quasilinear parabolic partial differential equations. In Stochastic Partial Differential Equations and Their Applications; Lecture Notes in Control and Information Sciences 176; Rozovskii, B.L., Sowers, R.B., Eds.; Springer: Berlin, Germany, 1992.

53. Freidlin, M.; Wentzell, A. Random Perturbations of Dynamical Systems; Springer: Berlin/Heidelberg, Germany, 2012.

54. Khasminskii, R. Principle of averaging for parabolic and elliptic differential equations and for Markov processes with small diffusion. Theory Probab. Appl. 1963, 8, 1–21.

55. Gobet, E.; Turkedjiev, P. Adaptive importance sampling in least-squares Monte Carlo algorithms for backward stochastic differential equations. Stoch. Proc. Appl. 2005, 127, 1171–1203.

56. Turkedjiev, P. Numerical Methods for Backward Stochastic Differential Equations of Quadratic and Locally Lipschitz Type. Ph.D. Thesis, Humboldt-Universität zu Berlin, Berlin, Germany, 2013.

57. Kebiri, O.; Neureither, L.; Hartmann, C. Adaptive importance sampling with forward-backward stochastic differential equations. arXiv, 2018; arXiv:1802.04981.

58. Franzke, C.; Majda, A.J.; Vanden-Eijnden, E. Low-order stochastic mode reduction for a realistic barotropic model climate. J. Atmos. Sci. 2005, 62, 1722–1745.

59. Lall, S.; Marsden, J.; Glavaški, S. A subspace approach to balanced truncation for model reduction of nonlinear control systems. Int. J. Robust. Nonlinear Control 2002, 12, 519–535.

60. Briand, P.; Hu, Y. Probabilistic approach to singular perturbations of semilinear and quasilinear parabolic. Nonlinear Anal. 1999, 35, 815–831.

61. Bensoussan, A.; Lions, J.L.; Papanicolaou, G. Asymptotic Analysis for Periodic Structures; American Mathematical Society: Washington, DC, USA, 1978; pp. 769–805.

62. Pardoux, E.; Veretennikov, A.Y. On the Poisson equation and diffusion approximation 3. Ann. Probab. 2005, 33, 1111–1133.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).