Beyond Traditional Classifiers: Evaluating Large Language Models for Robust Hate Speech Detection

Abstract

1. Introduction

2. Related Works

3. Methodology

3.1. Dataset Selection and Preparation

- Fine-grained HS labels (such as “racist” or “sexist”) were consolidated into broader categories. Content labeled with any form of HS was reclassified under the general label “hate”, and those labeled “neutral” or “not-hateful” were converted to “not-hate”.

- Ambiguous labels were removed by excluding any content with unclear categorization, such as “abusive”, since such content may not necessarily be considered hateful.

- Content labeled “neutral”, “not-hate”, or “not abusive” was reclassified as “not-hate”.
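The three preparation rules above can be illustrated with a short sketch. The label vocabularies below are hypothetical examples assembled from the labels named in the list; each real dataset uses its own label names, and treating unknown labels as ambiguous is an assumption of this sketch.

```python
from typing import List, Optional, Tuple

# Hypothetical label vocabularies; the real datasets use their own names.
HATE_LABELS = {"hate", "hateful", "racist", "sexist"}
NOT_HATE_LABELS = {"neutral", "not-hate", "not-hateful", "not abusive"}
AMBIGUOUS_LABELS = {"abusive"}


def consolidate_label(raw_label: str) -> Optional[str]:
    """Map a fine-grained label to "hate" or "not-hate"; None means drop."""
    label = raw_label.strip().lower()
    if label in AMBIGUOUS_LABELS:
        return None
    if label in HATE_LABELS:
        return "hate"
    if label in NOT_HATE_LABELS:
        return "not-hate"
    # Assumption: labels outside the known sets are treated as ambiguous.
    return None


def prepare(rows: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Keep only rows whose label maps cleanly onto the binary scheme."""
    prepared = []
    for text, raw_label in rows:
        label = consolidate_label(raw_label)
        if label is not None:
            prepared.append((text, label))
    return prepared
```

Calling `prepare` on a list of `(text, label)` pairs returns the same pairs with binary labels, with ambiguous rows removed.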

3.2. LLM Selection and Rationale

3.2.1. LLM Selection

- Meta-Llama-3-8B-Instruct.Q4_0 (Meta) [18]: This model is part of the Meta-Llama series, designed to excel in instruction-following tasks. With 8 billion parameters, it has been fine-tuned on diverse instructional data, making it well-suited for tasks requiring nuanced understanding and contextual interpretation.

- Phi-3-Mini-4k-instruct.Q4_0 (Phi) [19]: This model is a smaller yet efficient member of the Phi series, with 3.8 billion parameters. Despite its size, it is designed for instruction-based tasks and is optimized for quick inference, making it an effective choice for scenarios where computational resources are limited.

- Nous-Hermes-2-Mistral-7B-DPO.Q4_0 (Hermes) [20]: This model, part of the Nous-Hermes series, incorporates the Mistral architecture and has 7 billion parameters. It has been fine-tuned with a focus on dialogue and contextual understanding, which are crucial for accurately identifying hate speech in conversational contexts.

- WizardLM-13B-v1.2.Q4_0 (WizardLM) [21]: This is a large 13-billion-parameter model from the WizardLM series, designed to perform well on a variety of NLP tasks, including text generation and comprehension. Its architecture is optimized for both speed and accuracy, providing a balance of performance and resource efficiency.

3.2.2. LLM Selection Rationale

3.3. Experimental Design

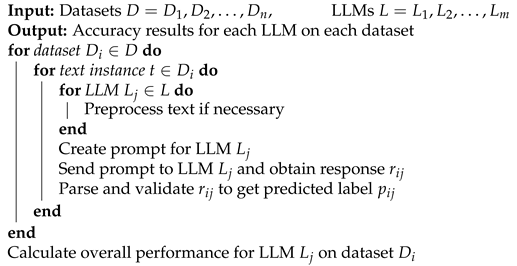

Algorithm 1: Hate speech detection methodology
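As a rough illustration of how an instruction-tuned model could be queried for binary classification, the sketch below builds a prompt, calls a model, and maps the free-form response to a label. The prompt wording, the `generate` callable, the `fake_generate` stand-in, and the parsing rule are all assumptions made for illustration; they are not the procedure specified in Algorithm 1.

```python
from typing import Callable, Iterable, List, Tuple

# Hypothetical prompt; the wording used in the study is not reproduced here.
PROMPT_TEMPLATE = (
    "Classify the following text as 'hate' or 'not-hate'. "
    "Answer with one label only.\n\nText: {text}\nLabel:"
)


def parse_label(response: str) -> str:
    """Map a free-form model response to a label; 'unknown' if unparseable."""
    answer = response.strip().lower()
    # Check the negative label first because it contains the substring "hate".
    if "not-hate" in answer or "not hate" in answer:
        return "not-hate"
    if "hate" in answer:
        return "hate"
    return "unknown"


def classify_all(
    texts: Iterable[str], generate: Callable[[str], str]
) -> List[Tuple[str, str]]:
    """Run every text through the model and collect (text, label) pairs."""
    results = []
    for text in texts:
        response = generate(PROMPT_TEMPLATE.format(text=text))
        results.append((text, parse_label(response)))
    return results


def fake_generate(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs without weights."""
    return "Not-hate." if "weather" in prompt else "I am not sure."
```

Keeping the model behind a plain callable means the same loop can be reused for each of the four quantized models, and unparseable responses are counted explicitly instead of being silently assigned to a class.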

4. Classification Results and Analysis

4.1. Binary Classification

4.2. Multi-Class Classification

5. Limitations

5.1. Technical Limitations

5.2. Ethical Risks and Societal Implications of LLM-Based Hate Speech Detection

5.2.1. Censorship and Freedom of Expression

5.2.2. Cultural Sensitivity and Representational Bias

5.2.3. Transparency and Algorithmic Accountability

5.2.4. Amplification of Societal Biases

5.2.5. Scale and Human Oversight Challenges

5.2.6. Democratic Discourse and Self-Censorship

5.2.7. Stakeholder Impact and Justice Considerations

5.2.8. Recommendations for Ethical Deployment

6. Practical Deployment and Operational Considerations

6.1. Real-World Applications and Implementation

6.2. Policy and Regulatory Implications

6.3. Industry Standards and Best Practices

6.4. Societal Impact and Digital Rights

7. Future Work

- Cross-Platform and Multimodal Extensions: A critical direction for future work could involve expanding the evaluation framework to encompass diverse social media platforms and their unique contextual characteristics. While our current study focuses on text-based detection, future research should investigate how hate speech manifestations vary across platforms like YouTube, Reddit, TikTok, and Instagram, each with distinct user demographics, communication norms, and content formats. This expansion would require developing platform-specific datasets and evaluation metrics that capture the nuanced ways hate speech adapts to different social environments. Building upon our text-based foundation, multimodal hate speech detection [23] represents a natural and necessary evolution. Future work should explore how LLMs can be integrated with computer vision and audio processing models to detect hate speech in videos, memes, and multimedia content. This multimodal approach would significantly enhance real-world deployment readiness by addressing the increasingly visual and interactive nature of online hate speech.

- Context-Aware Evaluation Frameworks: The findings highlight the need for more sophisticated evaluation frameworks that incorporate the complex dynamics of public discourse behavior. Future research should develop benchmarking methodologies (such as [4]) that account for sentiment polarity, toxicity gradients, and user engagement patterns, as they influence hate speech detection effectiveness [24]. This could involve creating sentiment-aware evaluation metrics that assess model performance across different emotional contexts and toxicity-gradient benchmarks that evaluate detection accuracy at varying levels of content harmfulness. Additionally, investigating temporal dynamics and evolving hate speech patterns would provide valuable insights into model robustness over time. Future work should examine how LLM performance degrades or adapts as hate speech evolves linguistically and contextually, potentially incorporating continual learning approaches to maintain detection effectiveness.

- Hybrid Classification Frameworks: A particularly promising direction could involve utilizing LLMs as components within broader classification frameworks rather than as standalone detection systems. Future research should explore hybrid architectures where LLMs serve specific roles such as feature extraction, contextual understanding, or ensemble voting within multi-stage classification pipelines. This approach could combine the semantic understanding capabilities of LLMs with the efficiency and specialization of traditional machine learning models, potentially achieving superior performance while maintaining computational feasibility for large-scale deployment. Such hybrid frameworks could leverage LLMs for tasks like generating contextual embeddings [25], performing semantic similarity analysis, or providing interpretable explanations for classification decisions, while relying on lighter models for initial filtering or real-time processing components.

- Robustness and Adversarial Evaluation: Future work should address the robustness of LLM-based hate speech detection against adversarial attacks and evasion techniques. This could include evaluating model performance against character-level perturbations, linguistic obfuscation, and emerging evasion strategies employed by users attempting to circumvent detection systems. Developing adversarially robust models and evaluation protocols would enhance the practical reliability of hate speech detection systems.

- Ethical and Bias Considerations: Expanding our evaluation framework to include comprehensive bias analysis represents another critical future direction [26]. This should encompass investigating demographic biases, cultural sensitivity across different communities, and fairness metrics that ensure equitable detection performance across diverse user populations. Future research should also explore how different prompting strategies and model fine-tuning approaches can mitigate inherent biases while maintaining detection effectiveness.

- Real-World Deployment Studies: Finally, bridging the gap between academic evaluation and practical deployment requires longitudinal studies of LLM-based hate speech detection systems in real-world environments. Future work should investigate how model performance translates to actual content moderation effectiveness, user satisfaction, and platform safety improvements. This could include studying the interaction between automated detection systems and human moderators, developing effective human-in-the-loop workflows, and measuring the broader societal impact of improved hate speech detection capabilities.

These future research directions collectively address the limitations identified in our current study while building upon the foundational benchmarks we have established. By pursuing these avenues, the research community can develop more comprehensive, contextually aware, and practically deployable hate speech detection systems that effectively serve the complex needs of modern digital communication platforms.
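The character-level perturbations mentioned under robustness and adversarial evaluation could be generated along the following lines. This is a minimal sketch: the substitution table, the `perturb` and `robustness_gap` helpers, and the perturbation rate are hypothetical choices, and `classify` stands for any function that maps a text to a label.

```python
import random
from typing import Callable, Sequence

# Small, hypothetical substitution table of visually similar characters.
LEET_MAP = {"a": "@", "e": "3", "i": "1", "o": "0", "s": "$"}


def perturb(text: str, rate: float = 0.3, seed: int = 0) -> str:
    """Replace a fraction of mappable characters with look-alikes."""
    rng = random.Random(seed)  # seeded so the perturbation is reproducible
    out = []
    for ch in text:
        if ch.lower() in LEET_MAP and rng.random() < rate:
            out.append(LEET_MAP[ch.lower()])
        else:
            out.append(ch)
    return "".join(out)


def robustness_gap(
    texts: Sequence[str],
    labels: Sequence[str],
    classify: Callable[[str], str],
    rate: float = 0.3,
) -> float:
    """Accuracy on clean inputs minus accuracy on perturbed inputs."""
    pairs = list(zip(texts, labels))
    clean = sum(classify(t) == y for t, y in pairs)
    noisy = sum(classify(perturb(t, rate)) == y for t, y in pairs)
    return (clean - noisy) / len(pairs)
```

A gap near zero would suggest the classifier is insensitive to this particular obfuscation; a large positive gap would indicate that simple character substitutions are enough to evade it.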

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Gröndahl, T.; Pajola, L.; Juuti, M.; Conti, M.; Asokan, N. All you need is “love” evading hate speech detection. In Proceedings of the 11th ACM Workshop on Artificial Intelligence and Security, Toronto, ON, Canada, 15–19 October 2018; pp. 2–12.

2. Wang, C.C.; Day, M.Y.; Wu, C.L. Political Hate Speech Detection and Lexicon Building: A Study in Taiwan. IEEE Access 2022, 10, 44337–44346.

3. Badjatiya, P.; Gupta, S.; Gupta, M.; Varma, V. Deep Learning for Hate Speech Detection in Tweets. In Proceedings of the 26th International Conference on World Wide Web Companion, Perth, Australia, 3–7 April 2017; WWW ’17 Companion; pp. 759–760.

4. Jaf, S.; Barakat, B. Empirical Evaluation of Public HateSpeech Datasets. arXiv 2024, arXiv:2407.12018.

5. Plaza-del Arco, F.M.; Nozza, D.; Hovy, D. Respectful or toxic? using zero-shot learning with language models to detect hate speech. In Proceedings of the 7th Workshop on Online Abuse and Harms (WOAH), Toronto, ON, Canada, 13 July 2023; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023.

6. Pan, R.; García-Díaz, J.A.; Valencia-García, R. Comparing Fine-Tuning, Zero and Few-Shot Strategies with Large Language Models in Hate Speech Detection in English. CMES-Comput. Model. Eng. Sci. 2024, 140, 2849–2868.

7. Tunstall, L.; Beeching, E.; Lambert, N.; Rajani, N.; Rasul, K.; Belkada, Y.; Huang, S.; Von Werra, L.; Fourrier, C.; Habib, N.; et al. Zephyr: Direct distillation of lm alignment. arXiv 2023, arXiv:2310.16944.

8. Saha, P.; Agrawal, A.; Jana, A.; Biemann, C.; Mukherjee, A. On Zero-Shot Counterspeech Generation by LLMs. arXiv 2024, arXiv:2403.14938.

9. Nirmal, A.; Bhattacharjee, A.; Sheth, P.; Liu, H. Towards Interpretable Hate Speech Detection using Large Language Model-extracted Rationales. arXiv 2024, arXiv:2403.12403.

10. Suryawanshi, S.; Chakravarthi, B.R.; Arcan, M.; Buitelaar, P. Multimodal meme dataset (MultiOFF) for identifying offensive content in image and text. In Proceedings of the Second Workshop on Trolling, Aggression and Cyberbullying, Marseille, France, 11–16 May 2020; pp. 32–41.

11. Salminen, J.; Almerekhi, H.; Milenković, M.; Jung, S.g.; An, J.; Kwak, H.; Jansen, B.J. Anatomy of online hate: Developing a taxonomy and machine learning models for identifying and classifying hate in online news media. In Proceedings of the Twelfth International AAAI Conference on Web and Social Media, Palo Alto, CA, USA, 25–28 June 2018.

12. Davidson, T.; Warmsley, D.; Macy, M.; Weber, I. Automated Hate Speech Detection and the Problem of Offensive Language. Proc. Int. AAAI Conf. Web Soc. Media 2017, 11, 512–515.

13. De Gibert, O.; Perez, N.; García-Pablos, A.; Cuadros, M. Hate speech dataset from a white supremacy forum. arXiv 2018, arXiv:1809.04444.

14. Waseem, Z.; Hovy, D. Hateful Symbols or Hateful People? Predictive Features for Hate Speech Detection on Twitter. In Proceedings of the NAACL Student Research Workshop, San Diego, CA, USA, 13–15 June 2016; pp. 88–93.

15. Qian, J.; Bethke, A.; Liu, Y.; Belding, E.; Wang, W.Y. A Benchmark Dataset for Learning to Intervene in Online Hate Speech. arXiv 2019, arXiv:1909.04251.

16. Vidgen, B.; Nguyen, D.; Margetts, H.; Rossini, P.; Tromble, R.; Toutanova, K.; Rumshisky, A.; Zettlemoyer, L.; Hakkani-Tur, D.; Beltagy, I.; et al. Introducing CAD: The contextual abuse dataset. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 2289–2303.

17. Kennedy, C.J.; Bacon, G.; Sahn, A.; von Vacano, C. Constructing interval variables via faceted Rasch measurement and multitask deep learning: A hate speech application. arXiv 2020, arXiv:2009.10277.

18. AI@Meta. Llama 3 Model Card 2024. Available online: https://github.com/meta-llama/llama3/blob/main/MODEL_CARD.md (accessed on 4 August 2025).

19. Microsoft. Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone. arXiv 2024, arXiv:2404.14219.

20. Teknium; Theemozilla; Karan4d; Huemin_art. Nous Hermes 2 Mistral 7B DPO. 2024. Available online: https://huggingface.co/NousResearch/Nous-Hermes-2-Mistral-7B-DPO (accessed on 4 August 2025).

21. Xu, C.; Sun, Q.; Zheng, K.; Geng, X.; Zhao, P.; Feng, J.; Tao, C.; Jiang, D. WizardLM: Empowering Large Language Models to Follow Complex Instructions. arXiv 2023, arXiv:2304.12244.

22. Song, Q.; Liao, P.; Zhao, W.; Wang, Y.; Hu, S.; Zhen, H.L.; Jiang, N.; Yuan, M. Harnessing On-Device Large Language Model: Empirical Results and Implications for AI PC. arXiv 2025, arXiv:2505.15030.

23. Kiela, D.; Firooz, H.; Mohan, A.; Goswami, V.; Singh, A.; Ringshia, P.; Testuggine, D. The hateful memes challenge: Detecting hate speech in multimodal memes. Adv. Neural Inf. Process. Syst. 2020, 33, 2611–2624.

24. Carvallo, A.; Mendoza, M.; Fernandez, M.; Ojeda, M.; Guevara, L.; Varela, D.; Borquez, M.; Buzeta, N.; Ayala, F. Hate Explained: Evaluating NER-Enriched Text in Human and Machine Moderation of Hate Speech. In Proceedings of the 9th Workshop on Online Abuse and Harms (WOAH), Vienna, Austria, 1 August 2025; pp. 458–467.

25. Tao, C.; Shen, T.; Gao, S.; Zhang, J.; Li, Z.; Tao, Z.; Ma, S. Llms are also effective embedding models: An in-depth overview. arXiv 2024, arXiv:2412.12591.

26. Lin, L.; Wang, L.; Guo, J.; Wong, K.F. Investigating bias in llm-based bias detection: Disparities between llms and human perception. arXiv 2024, arXiv:2403.14896.

| Dataset | Ref. | Dataset Size |
|---|---|---|
| Suryawanshi | [10] | 743 |
| Salminen | [11] | 3222 |
| Davidson | [12] | 2860 |
| Gibert | [13] | 10,944 |
| Waseem | [14] | 10,458 |
| Qian | [15] | 27,546 |
| Vidgen | [16] | 8186 |

| Dataset | Hate Speech Classes | Ref. |
|---|---|---|
| Vidgen | Affiliation, Person, Identity | [16] |
| Kennedy | Race, Religion, Origin, Gender, Sexuality, Age, Disability | [17] |

| Characteristic | Llama-3-8B | Phi-3-Mini-4k | Hermes-2-Mistral-7B | WizardLM-13B |
|---|---|---|---|---|
| Base Model | Llama 3 | Phi-3 | Mistral-7B | Llama 2 |
| Parameters | 8B | 3.8B | 7B | 13B |
| Quantization | Q4_0 | Q4_0 | Q4_0 | Q4_0 |
| Context Length | 8192 | 4096 | 32,768 | 4096 |
| Developer | Meta | Microsoft | Nous Research | WizardLM Team |
| Training Focus | General Instruct | Compact Efficiency | DPO Fine-Tuning | Instruction-Following |
| Specialization | Balanced | Mobile/Edge | Conversational | Complex Reasoning |
| Memory Usage | Medium | Low | Medium | High |
| Performance | High | Good | High | Very High |

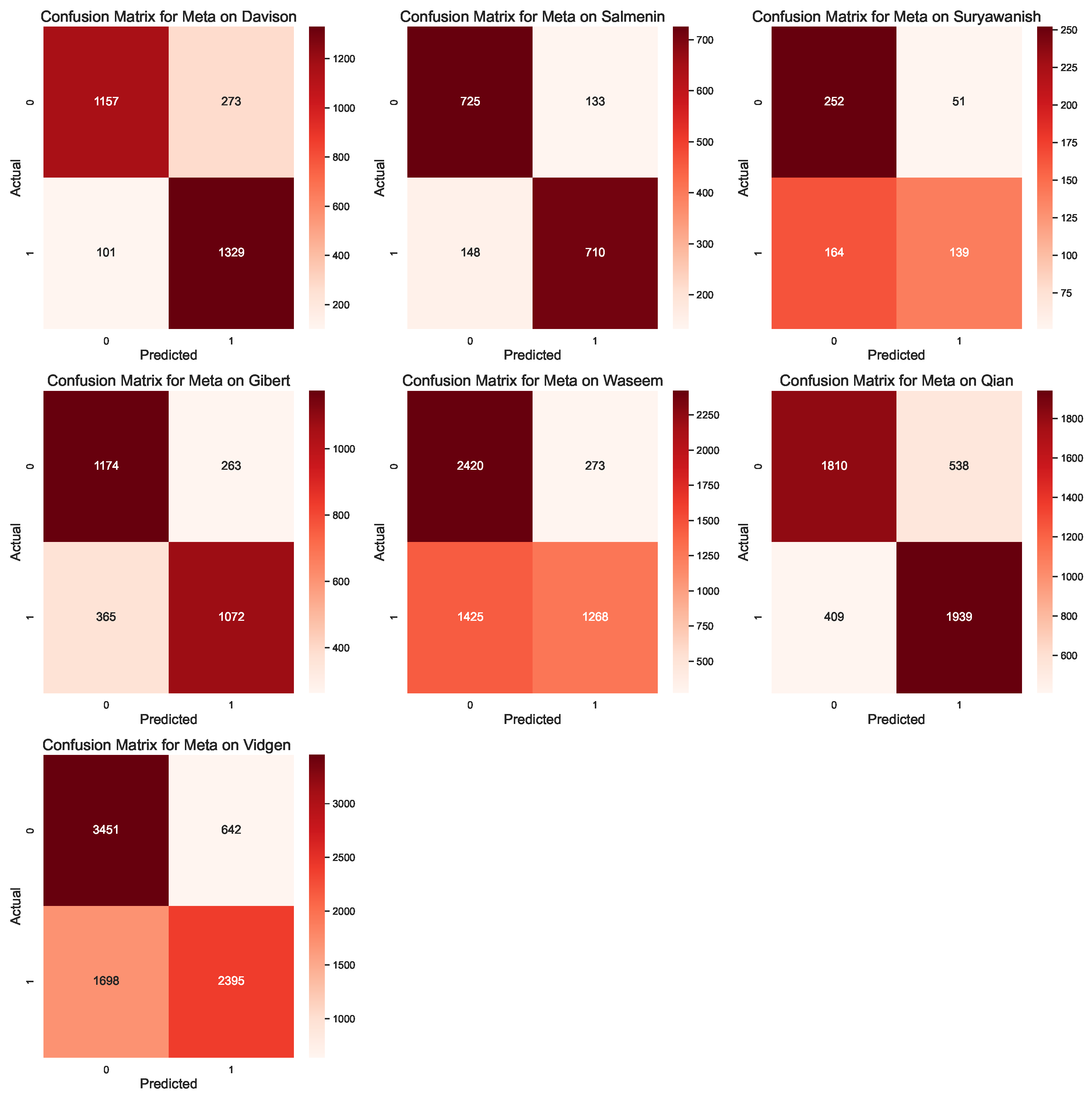

| Dataset | Model | Kappa | Acc. | Micro F1 | Macro F1 | Wtd. F1 | Recall | Prec. |
|---|---|---|---|---|---|---|---|---|
| Davidson | Phi | 0.6622 | 0.8311 | 0.8311 | 0.8301 | 0.8301 | 0.8311 | 0.8395 |
| Davidson | Meta | 0.7385 | 0.8692 | 0.8692 | 0.8688 | 0.8688 | 0.8692 | 0.8747 |
| Davidson | WizardLM | −0.0518 | 0.4741 | 0.4741 | 0.4741 | 0.4741 | 0.4741 | 0.4741 |
| Davidson | Hermes | 0.7790 | 0.8895 | 0.8895 | 0.8895 | 0.8895 | 0.8895 | 0.8895 |
| Salminen | Phi | 0.5163 | 0.7582 | 0.7582 | 0.7487 | 0.7487 | 0.7582 | 0.8039 |
| Salminen | Meta | 0.6725 | 0.8363 | 0.8363 | 0.8362 | 0.8362 | 0.8363 | 0.8364 |
| Salminen | WizardLM | 0.0221 | 0.5111 | 0.5111 | 0.4876 | 0.4876 | 0.5111 | 0.5136 |
| Salminen | Hermes | 0.5431 | 0.7716 | 0.7716 | 0.7634 | 0.7634 | 0.7716 | 0.8148 |
| Suryawanshi | Phi | 0.1617 | 0.5809 | 0.5809 | 0.5159 | 0.5159 | 0.5809 | 0.6746 |
| Suryawanshi | Meta | 0.2904 | 0.6452 | 0.6452 | 0.6324 | 0.6324 | 0.6452 | 0.6687 |
| Suryawanshi | WizardLM | 0.2178 | 0.6089 | 0.6089 | 0.6088 | 0.6088 | 0.6089 | 0.6091 |
| Suryawanshi | Hermes | 0.2112 | 0.6056 | 0.6056 | 0.5688 | 0.5688 | 0.6056 | 0.6603 |
| Gibert | Phi | 0.3591 | 0.6795 | 0.6795 | 0.6588 | 0.6588 | 0.6795 | 0.7373 |
| Gibert | Meta | 0.5630 | 0.7815 | 0.7815 | 0.7812 | 0.7812 | 0.7815 | 0.7829 |
| Gibert | WizardLM | −0.0056 | 0.4972 | 0.4972 | 0.4967 | 0.4967 | 0.4972 | 0.4972 |
| Gibert | Hermes | 0.4941 | 0.7470 | 0.7470 | 0.7419 | 0.7419 | 0.7470 | 0.7684 |
| Waseem | Phi | 0.0873 | 0.5436 | 0.5436 | 0.4677 | 0.4677 | 0.5436 | 0.6016 |
| Waseem | Meta | 0.3695 | 0.6847 | 0.6847 | 0.6696 | 0.6696 | 0.6847 | 0.7261 |
| Waseem | WizardLM | −0.0650 | 0.4675 | 0.4675 | 0.4654 | 0.4654 | 0.4675 | 0.4670 |
| Waseem | Hermes | 0.1749 | 0.5875 | 0.5875 | 0.5240 | 0.5240 | 0.5875 | 0.6872 |
| Qian | Phi | 0.4302 | 0.7151 | 0.7151 | 0.7064 | 0.7064 | 0.7151 | 0.7438 |
| Qian | Meta | 0.5967 | 0.7983 | 0.7983 | 0.7982 | 0.7982 | 0.7983 | 0.7992 |
| Qian | WizardLM | 0.0503 | 0.5251 | 0.5251 | 0.5163 | 0.5163 | 0.5251 | 0.5271 |
| Qian | Hermes | 0.4911 | 0.7455 | 0.7455 | 0.7396 | 0.7396 | 0.7455 | 0.7702 |
| Vidgen | Phi | 0.2448 | 0.6224 | 0.6224 | 0.5880 | 0.5880 | 0.6224 | 0.6838 |
| Vidgen | Meta | 0.4283 | 0.7142 | 0.7142 | 0.7093 | 0.7093 | 0.7142 | 0.7294 |
| Vidgen | WizardLM | 0.0259 | 0.5130 | 0.5130 | 0.5025 | 0.5025 | 0.5130 | 0.5141 |
| Vidgen | Hermes | 0.2800 | 0.6400 | 0.6400 | 0.6067 | 0.6067 | 0.6400 | 0.7118 |
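One pattern in the binary results is worth spelling out: in every row, Kappa equals twice the accuracy minus one, up to rounding, and Macro F1 equals Weighted F1. Both are what one would expect if the two reference classes are equally frequent in each evaluation set. That balance is an assumption here, inferred from the reported values. Under it, Cohen's kappa reduces as follows, where $p_o$ is the observed agreement (the accuracy) and $p_e$ is the agreement expected by chance:

```latex
\begin{align}
\kappa &= \frac{p_o - p_e}{1 - p_e},
  \qquad p_o = \text{Acc},
  \qquad p_e = \sum_{k} p^{\text{true}}_{k}\, p^{\text{pred}}_{k} \\
p_e &= \tfrac{1}{2}\left(p^{\text{pred}}_{\text{hate}} + p^{\text{pred}}_{\text{not-hate}}\right) = \tfrac{1}{2}
  \qquad \text{when } p^{\text{true}}_{\text{hate}} = p^{\text{true}}_{\text{not-hate}} = \tfrac{1}{2} \\
\kappa &= \frac{\text{Acc} - \tfrac{1}{2}}{\tfrac{1}{2}} = 2\,\text{Acc} - 1
\end{align}
```

For example, Hermes on the Davidson dataset has an accuracy of 0.8895, giving $2 \times 0.8895 - 1 = 0.7790$, which matches the reported Kappa. The same relation explains why an accuracy close to 0.5, as for WizardLM on several datasets, corresponds to a Kappa close to zero, that is, agreement no better than chance.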

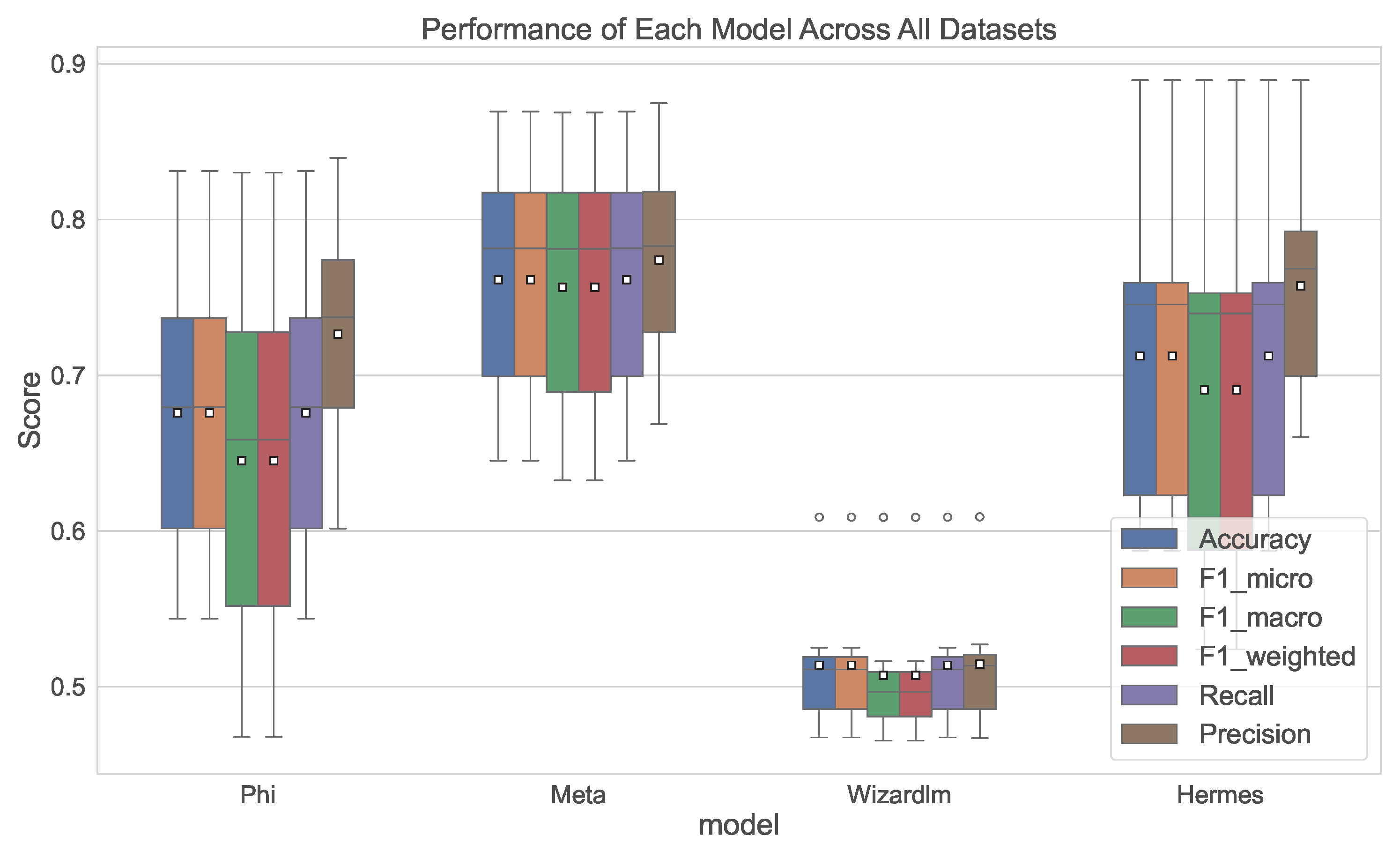

| Metric | Phi | Meta | WizardLM | Hermes |
|---|---|---|---|---|
| Kappa | 0.35165 | 0.52269 | 0.02769 | 0.42477 |
| Accuracy | 0.67583 | 0.76134 | 0.51385 | 0.71239 |
| Micro F1 | 0.67583 | 0.76134 | 0.51385 | 0.71239 |
| Macro F1 | 0.64508 | 0.75654 | 0.50734 | 0.69057 |
| Weighted F1 | 0.64508 | 0.75654 | 0.50734 | 0.69057 |
| Recall | 0.67583 | 0.76134 | 0.51385 | 0.71239 |
| Precision | 0.72635 | 0.77391 | 0.51459 | 0.75746 |

| Dataset | Model | Kappa | Accuracy | Micro F1 | Macro F1 | Weighted F1 | Recall | Precision |
|---|---|---|---|---|---|---|---|---|
| Vidgen | Phi | 0.08226 | 0.580545 | 0.580545 | 0.112344 | 0.6914 | 0.580545 | 0.889401 |
| Vidgen | Meta | 0.125767 | 0.742737 | 0.742737 | 0.248307 | 0.806462 | 0.742737 | 0.889668 |
| Vidgen | Hermes | 0.156025 | 0.833877 | 0.833877 | 0.280837 | 0.851569 | 0.833877 | 0.87374 |
| Vidgen | WizardLM | 0.145076 | 0.864848 | 0.864848 | 0.276903 | 0.861384 | 0.864848 | 0.867348 |
| Kennedy | Phi | 0.045342 | 0.210755 | 0.210755 | 0.099052 | 0.278826 | 0.210755 | 0.723553 |
| Kennedy | Meta | 0.066452 | 0.259884 | 0.259884 | 0.087799 | 0.356399 | 0.259884 | 0.651437 |
| Kennedy | Hermes | 0.08737 | 0.309698 | 0.309698 | 0.099408 | 0.423658 | 0.309698 | 0.678297 |
| Kennedy | WizardLM | 0.13222 | 0.594667 | 0.594667 | 0.13243 | 0.548781 | 0.594667 | 0.514553 |
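In the multi-class results, Micro F1 coincides with accuracy while Macro F1 is far below Weighted F1 (for example, 0.112344 versus 0.6914 for Phi on Vidgen). This is the usual signature of class imbalance in single-label classification. The sketch below computes the three averages from scratch on a made-up toy example; the label names are borrowed from the Vidgen class list, but the label counts and predictions are invented purely for illustration.

```python
from collections import Counter
from typing import Dict, Sequence


def per_class_counts(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, tuple]:
    """Return {label: (tp, fp, fn)} for every label seen in either list."""
    counts = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        counts[label] = (tp, fp, fn)
    return counts


def f1(tp: int, fp: int, fn: int) -> float:
    """F1 from raw counts; defined as 0.0 when there is nothing to score."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def summarize(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    counts = per_class_counts(y_true, y_pred)
    support = Counter(y_true)
    n = len(y_true)
    class_f1 = {label: f1(*c) for label, c in counts.items()}
    # Micro F1 pools the counts of all classes before computing F1.
    micro = f1(
        sum(c[0] for c in counts.values()),
        sum(c[1] for c in counts.values()),
        sum(c[2] for c in counts.values()),
    )
    return {
        "accuracy": sum(t == p for t, p in zip(y_true, y_pred)) / n,
        "micro_f1": micro,
        # Macro F1 gives every class equal weight, however rare it is.
        "macro_f1": sum(class_f1.values()) / len(class_f1),
        # Weighted F1 weights each class by its number of true instances.
        "weighted_f1": sum(class_f1[c] * support[c] for c in class_f1) / n,
    }


# Toy data (invented): one dominant class, always predicted.
y_true = ["identity"] * 8 + ["person"] + ["affiliation"]
y_pred = ["identity"] * 10
print(summarize(y_true, y_pred))
```

Because the toy classifier never predicts the two rare classes, their F1 scores are zero and pull the macro average down, while the weighted average stays close to the score of the dominant class.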

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Barakat, B.; Jaf, S. Beyond Traditional Classifiers: Evaluating Large Language Models for Robust Hate Speech Detection. Computation 2025, 13, 196. https://doi.org/10.3390/computation13080196
