Multi-Corpus Benchmarking of CNN and LSTM Models for Speaker Gender and Age Profiling

Abstract

1. Introduction

2. Related Work

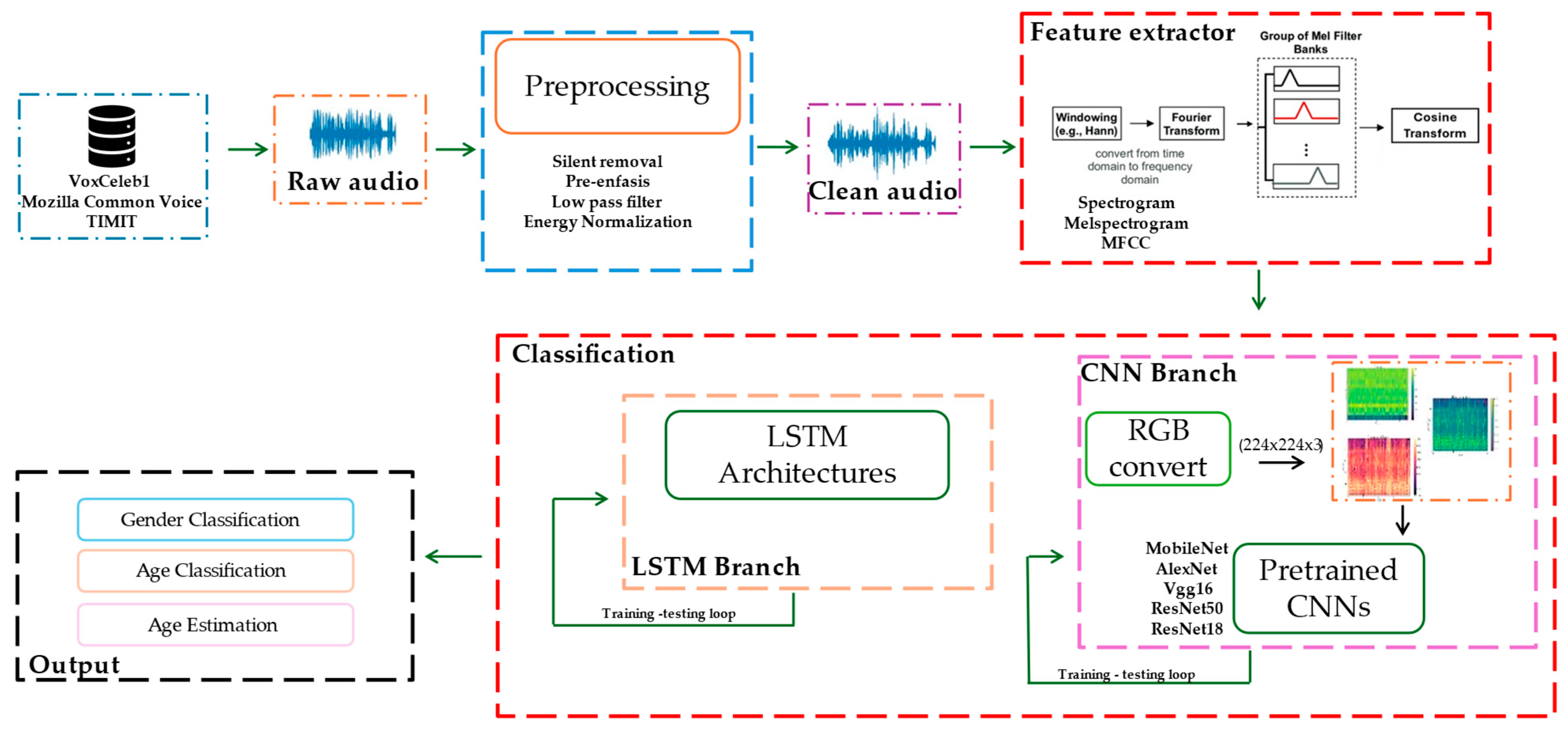

3. Proposed Method

3.1. Preprocessing

3.1.1. Silence Removal

3.1.2. Pre-Emphasis

3.1.3. Low-Pass Filtering

3.1.4. Energy Normalization

3.2. Feature Extraction

3.3. Classification

3.3.1. Feature Selection for Classifiers

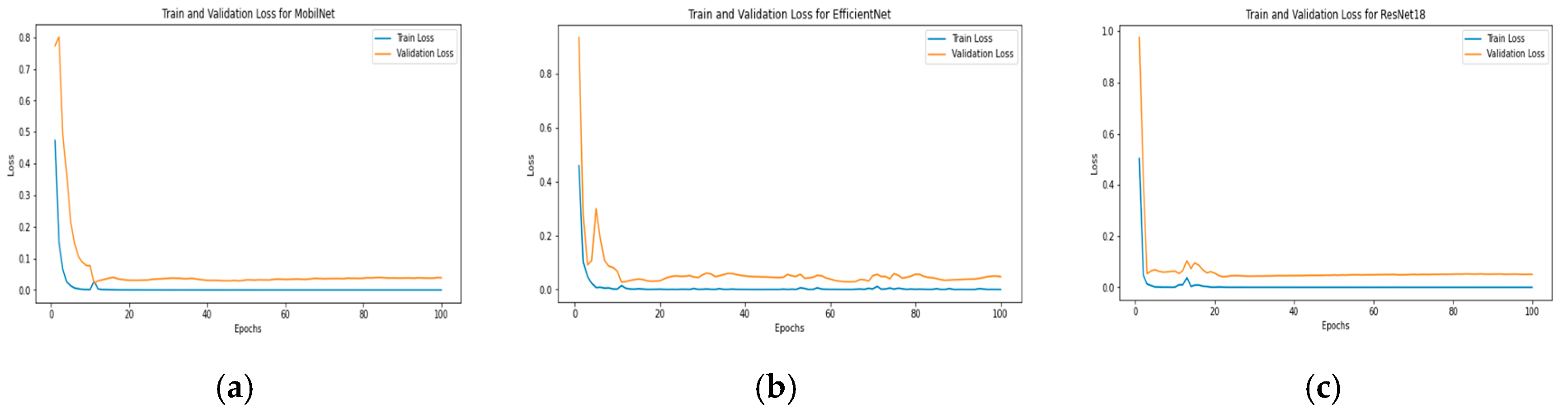

3.3.2. Training of Classifiers

- Stage 1—Model Selection: Transfer learning was applied to various pre-trained CNN models, and multiple LSTM configurations were trained on Subset 1. The top three CNN models and the best LSTM configuration were selected based on classification accuracy for gender and age classification or MAE for age estimation.

- Stage 2—Fine-Tuning and Full Training: The selected CNN models underwent fine-tuning, and the best LSTM configuration was trained on the complete datasets to optimize the performance. This two-stage approach leverages the strengths of transfer learning for CNNs and systematic hyperparameter tuning for LSTMs, ensuring robust adaptation to the gender classification and age estimation tasks.
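The Stage 1 selection rule can be sketched in a few lines. This is only an illustration: the helper name `select_top_k`, the model labels, and the scores are hypothetical and are not taken from the experiments. It assumes that each candidate is summarized by a single validation metric, where accuracy is ranked in descending order and MAE in ascending order.

```python
from typing import Dict, List


def select_top_k(results: Dict[str, float], k: int, higher_is_better: bool) -> List[str]:
    """Return the k best model names ranked by a single validation metric."""
    ranked = sorted(results, key=lambda name: results[name], reverse=higher_is_better)
    return ranked[:k]


# Hypothetical Stage 1 scores (illustrative numbers, not results from the paper).
stage1_accuracy = {"cnn_a": 0.91, "cnn_b": 0.95, "cnn_c": 0.89, "cnn_d": 0.93}
stage1_mae = {"cnn_a": 6.1, "cnn_b": 5.4, "cnn_c": 7.0, "cnn_d": 5.9}

# Classification tasks keep the highest accuracies, regression keeps the lowest MAEs.
top3_by_accuracy = select_top_k(stage1_accuracy, k=3, higher_is_better=True)
top3_by_mae = select_top_k(stage1_mae, k=3, higher_is_better=False)
```

The same helper with `k=1` would cover the selection of the single best LSTM configuration.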

First Stage

Second Stage

- Graphics Card: NVIDIA GeForce RTX 3070 Ti, NVIDIA, Santa Clara, CA, USA.

- Graphics Clock: 1770 MHz.

- Total Available Memory: 24,527 MB.

- Host: Ryzen 7 3700x 8-core CPU at 3.60 GHz, 32 GB RAM, AMD, Santa Clara, CA, USA.

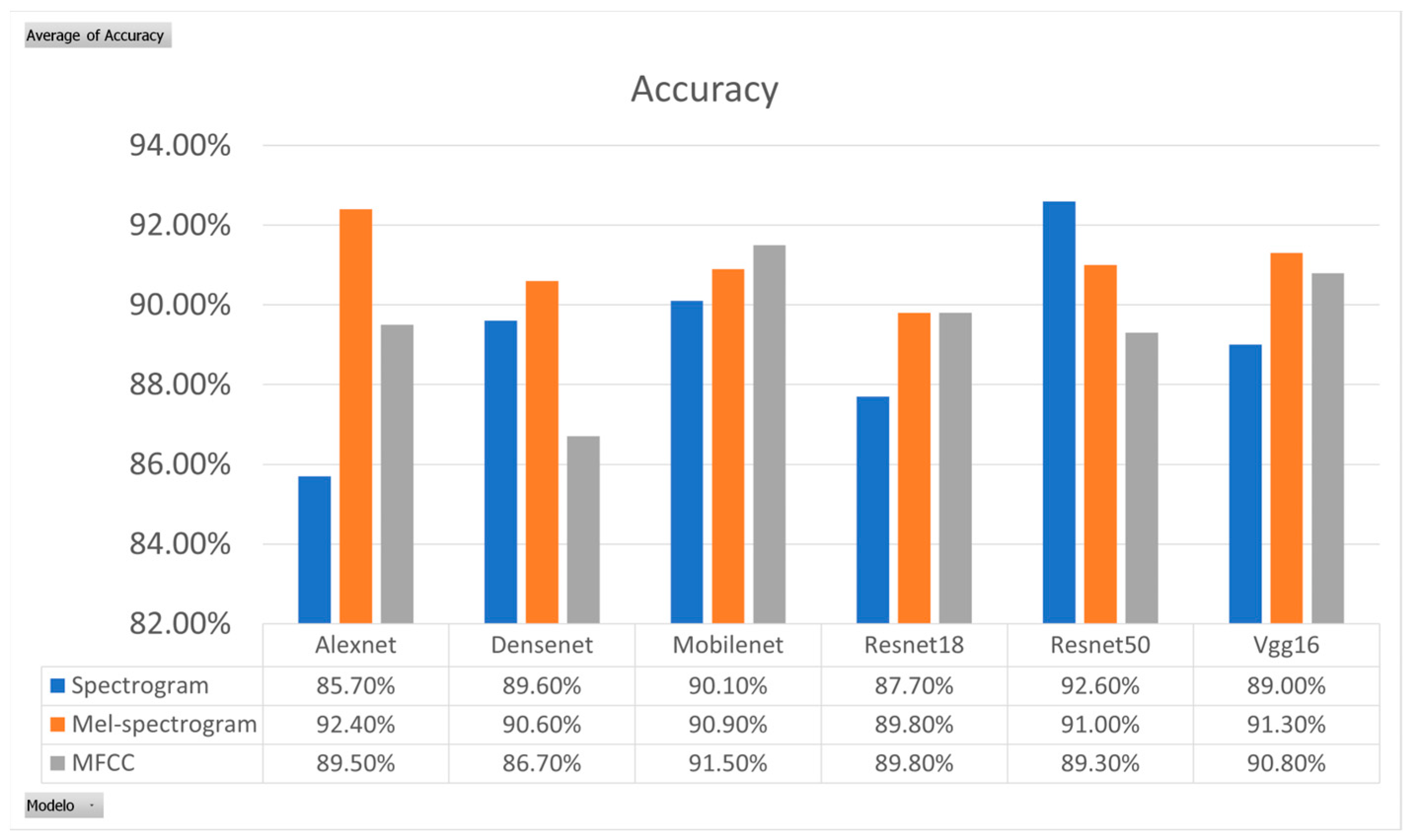

4. Results and Discussion

4.1. Datasets

4.2. Results

5. Conclusions and Future Work

- Improving results using fine preprocessing.

- CNN/LSTM pipeline generalizes across diverse corpora without hyperparameter retuning.

- Model capacity should match corpus scale.

- Front-end choice must fit the architecture.

- Performance improvements over prior art are statistically significant.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Model | Frozen Layers (Blocks) | Retrained Layers (Blocks) |
|---|---|---|
| VGG16 | Blocks 1–3 (conv1_1–conv3_3) | Blocks 4 and 5 (conv4_1–conv5_3) |
| ResNet50 | Stem conv1 + Layer1 (conv2_x) + Layer2 (conv3_x) | Layer3 (conv4_x) and Layer4 (conv5_x) |
| ResNet18 | Stem conv1 + Layer1 (conv2_x) + Layer2 (conv3_x) | Layer3 (conv4_x) and Layer4 (conv5_x) |
| MobileNetV2 | Conv2dNormActivation (block 0) + Inverted Residual blocks 1–15 | Inverted Residual blocks 16 and 17 + Classification head |
| EfficientNet-B0 | Stem conv + Stages 1–5 (MBConv1/MBConv6) | Stages 6 and 7 (MBConv6) + Head |
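As a rough illustration of how the ResNet rows of this table could translate into a freezing rule, the sketch below decides per parameter name whether it is retrained. It assumes torchvision-style module names (`conv1`, `bn1`, `layer1` to `layer4`, `fc`); that naming and the helper `trainable_flags` are assumptions, not part of the published implementation. In PyTorch, the resulting flag would typically be written to each parameter's `requires_grad` attribute.

```python
from typing import Dict, Iterable, Tuple

# Assumed mapping from the ResNet rows to torchvision-style prefixes:
# stem = conv1/bn1, conv2_x = layer1, conv3_x = layer2 (all frozen).
FROZEN_PREFIXES: Tuple[str, ...] = ("conv1.", "bn1.", "layer1.", "layer2.")


def trainable_flags(param_names: Iterable[str],
                    frozen_prefixes: Tuple[str, ...] = FROZEN_PREFIXES) -> Dict[str, bool]:
    """Map each parameter name to True (retrain) or False (keep frozen)."""
    return {name: not name.startswith(frozen_prefixes) for name in param_names}


# A few representative parameter names, listed by hand for the example.
example_names = [
    "conv1.weight", "bn1.weight",
    "layer1.0.conv1.weight", "layer2.1.conv2.weight",
    "layer3.0.conv1.weight", "layer4.1.bn2.bias",
    "fc.weight",
]
flags = trainable_flags(example_names)
```

Matching on the full prefix with a trailing dot keeps `layer1.0.conv1.weight` from being confused with the stem's `conv1.weight`.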

Appendix B

0. Constants & Conventions

    DATASETS = {
        "VoxCeleb1":   {fs_in: 16_000, fs_proc: 16_000, n_mels: 224, n_mfcc: 40},
        "CommonVoice": {fs_in: 44_100, fs_proc: 22_050, n_mels: 128, n_mfcc: 13},
        "TIMIT":       {fs_in: 16_000, fs_proc: 16_000, n_mels: 64,  n_mfcc: 13}
    }
    WINDOW_LEN  = 25 ms
    WINDOW_STEP = 10 ms
    IMG_SIZE    = 224×224×3
    OPTIM       = Adam(lr=1e-3, weight_decay=1e-4)
    SCHED       = ReduceOnPlateau(factor=0.5, patience=3, min_lr=1e-6)
    EARLY_STOP  = patience 15
    DROPOUT     = 0.5
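To make the interaction between `SCHED` and `EARLY_STOP` concrete, the following framework-free sketch replays a list of validation losses. It is a simplification under stated assumptions: the learning rate is halved once the loss has failed to improve for more than `lr_patience` consecutive epochs, training stops after `stop_patience` epochs without improvement, and cooldown and improvement thresholds are ignored. The function name and the example losses are hypothetical.

```python
from typing import List, Tuple


def simulate_schedule(val_losses: List[float], lr: float = 1e-3, factor: float = 0.5,
                      lr_patience: int = 3, min_lr: float = 1e-6,
                      stop_patience: int = 15) -> Tuple[List[float], int]:
    """Return the learning rate used at each epoch and the number of epochs run."""
    best = float("inf")
    since_best = 0    # epochs since the last improvement (drives early stopping)
    since_cut = 0     # non-improving epochs since the last learning-rate cut
    lrs: List[float] = []
    for loss in val_losses:
        lrs.append(lr)
        if loss < best:
            best, since_best, since_cut = loss, 0, 0
        else:
            since_best += 1
            since_cut += 1
            if since_cut > lr_patience:
                lr = max(lr * factor, min_lr)   # never go below min_lr
                since_cut = 0
        if since_best >= stop_patience:
            break
    return lrs, len(lrs)


# Illustrative loss curve: two improving epochs followed by a long plateau.
val_losses = [1.0, 0.8] + [0.9] * 20
lrs, epochs_run = simulate_schedule(val_losses)
```

With these settings the plateau triggers three successive halvings before the early-stopping counter ends training.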

1. Main Flow

    for corpus in DATASETS:
        train_set, test_set = stratify(corpus, split=0.8)
        cnn_models   = train_CNN_stage1(train_set, corpus)
        lstm_model   = train_LSTM_stage1(train_set, corpus)
        top_cnns     = select_top3(cnn_models, metric="accuracy-or-MAE")
        top_lstm     = select_top1(lstm_model, metric="accuracy-or-MAE")
        final_models = fine_tune_stage2(top_cnns, top_lstm, train_set, corpus)
        evaluate(final_models, test_set, corpus)
        save(final_models, path="checkpoints/"+corpus)
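The `stratify(corpus, split=0.8)` step is not specified further. One plausible reading is a per-class 80/20 split of recordings, sketched below. The function name, the fixed seed, and the toy labels are assumptions. Whether the original split is also speaker-disjoint is not stated, and this sketch does not enforce it.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def stratified_split(labels: Sequence[Hashable], train_fraction: float = 0.8,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Split sample indices so each class keeps roughly the same train/test ratio."""
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)          # fixed seed for a reproducible split
    train: List[int] = []
    test: List[int] = []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = int(round(train_fraction * len(indices)))
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return sorted(train), sorted(test)


toy_labels = ["F"] * 40 + ["M"] * 60   # toy labels, not corpus statistics
train_idx, test_idx = stratified_split(toy_labels)
```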

2. Audio Preprocessing

    def preprocess(wav, fs_in, fs_proc):
        # 2.1 Resampling
        wav = resample(wav, fs_in, fs_proc)
        # 2.2 Silence removal
        μ, σ = mean_variance(wav)
        threshold = q                      # choose q ∈ [0.05, 0.1]
        wav = discard_samples(|wav|² < threshold)
        # 2.3 Pre-emphasis
        wav = pre_emphasis_filter(wav, α=0.97)
        # 2.4 10th-order Butterworth low-pass, fc = 4 kHz
        wav = butter_lowpass(wav, order=10, fc=4_000, fs=fs_proc)
        # 2.5 Energy normalization
        wav = (wav - μ) / σ
        return wav
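Three of the steps above are simple enough to write out in plain Python. The sketch below covers silence removal, pre-emphasis, and normalization only. Resampling and the Butterworth low-pass are left out because they would normally come from a signal-processing library (for instance SciPy's `scipy.signal.butter`). Two assumptions differ from the pseudocode: silence is removed sample by sample on instantaneous power, and the mean and standard deviation are computed on the processed signal rather than before silence removal.

```python
import math
from typing import List


def remove_silence(x: List[float], threshold: float) -> List[float]:
    """Drop samples whose instantaneous power x[n]^2 is below the threshold."""
    return [s for s in x if s * s >= threshold]


def pre_emphasis(x: List[float], alpha: float = 0.97) -> List[float]:
    """First-order high-pass: y[n] = x[n] - alpha * x[n-1], with y[0] = x[0]."""
    if not x:
        return []
    return [x[0]] + [x[n] - alpha * x[n - 1] for n in range(1, len(x))]


def normalize(x: List[float]) -> List[float]:
    """Zero-mean, unit-variance scaling; a constant signal maps to zeros."""
    if not x:
        return []
    mean = sum(x) / len(x)
    std = math.sqrt(sum((s - mean) ** 2 for s in x) / len(x))
    if std == 0.0:
        return [0.0] * len(x)
    return [(s - mean) / std for s in x]


# Toy signal with two low-power samples that should be discarded.
signal = [0.0, 0.5, -0.5, 0.01, 0.8, -0.8]
voiced = remove_silence(signal, threshold=0.05)
emphasized = pre_emphasis(voiced)
normalized = normalize(emphasized)
```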

3. Feature Extraction

    def extract_features(wav, corpus):
        # 3.1 Frame segmentation + Hamming window
        frames = window(wav, WINDOW_LEN, WINDOW_STEP)
        # 3.2 Power spectrogram (STFT)
        spec = |STFT(frames)|²
        # 3.3 Mel spectrogram
        mel = apply_mel_bank(spec, n_mels=DATASETS[corpus].n_mels)
        # 3.4 MFCC
        mfcc = DCT(log(mel))[:DATASETS[corpus].n_mfcc]
        # 3.5 Prepare for CNN
        img = resize_to_RGB(mel, target=IMG_SIZE)
        return img, mfcc
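A few of the quantities in this step can be checked by hand. The sketch below converts the 25 ms / 10 ms framing into sample counts, maps Hz to mel with the common 2595·log10(1 + f/700) formula, and computes MFCC-like coefficients as an unnormalized DCT-II of log mel energies. The choice of mel formula, the DCT normalization, and the small floor added before the logarithm are assumptions, since the appendix does not state them.

```python
import math
from typing import List, Tuple


def frame_params(fs: int, window_ms: int = 25, step_ms: int = 10) -> Tuple[int, int]:
    """Window length and hop size in samples (integer truncation)."""
    return fs * window_ms // 1000, fs * step_ms // 1000


def hz_to_mel(f_hz: float) -> float:
    """Common mel-scale formula (assumed variant)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def dct_ii(values: List[float], n_out: int) -> List[float]:
    """Unnormalized DCT-II, keeping the first n_out coefficients."""
    n = len(values)
    return [
        sum(v * math.cos(math.pi * k * (2 * i + 1) / (2 * n)) for i, v in enumerate(values))
        for k in range(n_out)
    ]


def mfcc_from_mel_energies(mel_energies: List[float], n_mfcc: int) -> List[float]:
    """Log-compress one frame of mel energies and decorrelate it with the DCT."""
    log_mel = [math.log(e + 1e-10) for e in mel_energies]   # floor avoids log(0)
    return dct_ii(log_mel, n_mfcc)


frames_16k = frame_params(16_000)      # sampling rate used for VoxCeleb1 and TIMIT
frames_22k = frame_params(22_050)      # processing rate used for Common Voice
flat_frame = [2.0] * 64                # flat spectrum: only coefficient 0 is non-zero
coeffs = mfcc_from_mel_energies(flat_frame, n_mfcc=13)
```

A spectrally flat frame is a convenient sanity check because every coefficient above the zeroth should vanish.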

4. Training: Stage 1 (model selection)

    def train_CNN_stage1(data, corpus):
        SUBSET = sample(data, n_train=5_000, n_val=500)   # VoxCeleb1 "Subset 1"
        ARCHS = [AlexNet, VGG16, ResNet18, ResNet50, DenseNet, EfficientNet, MobileNet]
        results = {}
        for arch in ARCHS:
            net = load_pretrained(arch, "ImageNet")
            freeze_base(net)
            replace_head(net, task_output)                # 2-class, 6-class or 1-reg
            metrics = train(net, SUBSET.img, labels, OPTIM, SCHED, EARLY_STOP, DROPOUT)
            results[arch] = metrics
        return results

    def train_LSTM_stage1(data, corpus):
        CONFIGS = combinations(layers=[1,2,3], hidden=[128,256,512])
        results = {}
        for layers, hidden in CONFIGS:
            net = LSTM(input_dim=DATASETS[corpus].n_mfcc,
                       hidden_dim=hidden,
                       num_layers=layers, dropout=DROPOUT)
            metrics = train(net, data.mfcc, labels, OPTIM, SCHED,
                            EARLY_STOP, DROPOUT)
            results[(layers,hidden)] = metrics
        return results
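The LSTM search space is small enough to enumerate explicitly. The sketch below lists the nine (layers, hidden) combinations and estimates the recurrent parameter count of each, assuming a unidirectional LSTM with PyTorch's `nn.LSTM` parameterization (two bias vectors per layer, no projection). The helper names are hypothetical, and the classifier head is not counted.

```python
from itertools import product
from typing import Dict, List

LAYER_OPTIONS = [1, 2, 3]
HIDDEN_OPTIONS = [128, 256, 512]


def lstm_grid(n_mfcc: int) -> List[Dict[str, int]]:
    """All layer/hidden-size combinations for a given MFCC input dimension."""
    return [
        {"input_dim": n_mfcc, "num_layers": layers, "hidden_dim": hidden}
        for layers, hidden in product(LAYER_OPTIONS, HIDDEN_OPTIONS)
    ]


def lstm_param_count(input_dim: int, hidden_dim: int, num_layers: int) -> int:
    """Weights and biases of a stacked LSTM: 4 gates x (W_ih + W_hh + 2 biases)."""
    total = 0
    layer_input = input_dim
    for _ in range(num_layers):
        total += 4 * hidden_dim * (layer_input + hidden_dim + 2)
        layer_input = hidden_dim   # deeper layers read the previous hidden state
    return total


grid = lstm_grid(n_mfcc=40)
sizes = {(c["num_layers"], c["hidden_dim"]): lstm_param_count(**c) for c in grid}
```

Comparing these counts against corpus size gives a quick sense of how model capacity scales across the grid.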

5. Training: Stage 2 (fine-tuning)

    def fine_tune_stage2(cnns, lstm, data, corpus):
        final_models = {}
        for model in cnns + [lstm]:
            net = load(model)
            if net.is_CNN: unfreeze_last_block(net)
            train(net, data.features(model), labels, OPTIM, SCHED, EARLY_STOP, DROPOUT)
            final_models[model] = net      # keep the trained network so evaluate() can call it
        return final_models

6. Evaluation and Metrics

    def evaluate(models, test_set, corpus):
        for name, net in models.items():
            preds = net(test_set.features(name))
            ACC = MAE = CM = None          # metrics that do not apply to the task stay empty
            if task == "regression":
                MAE = mean_absolute_error(preds, test_set.age)
            else:
                ACC = accuracy(preds, test_set.label)
                CM = confusion_matrix(preds, test_set.label)
            print_results(name, ACC, MAE, CM)
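For reference, the three metrics used above can be written in plain Python as follows. The function signatures and the toy predictions are illustrative. The confusion matrix uses rows for true classes and columns for predicted classes, which is a convention chosen here rather than one stated in the text.

```python
from typing import Hashable, List, Sequence


def mean_absolute_error(pred: Sequence[float], true: Sequence[float]) -> float:
    """Average absolute difference, in the unit of the target (years for age)."""
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)


def accuracy(pred: Sequence[Hashable], true: Sequence[Hashable]) -> float:
    """Fraction of predictions that match the reference labels."""
    return sum(1 for p, t in zip(pred, true) if p == t) / len(true)


def confusion_matrix(pred: Sequence[Hashable], true: Sequence[Hashable],
                     classes: Sequence[Hashable]) -> List[List[int]]:
    """Counts with rows indexed by true class and columns by predicted class."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for p, t in zip(pred, true):
        matrix[index[t]][index[p]] += 1
    return matrix


# Toy predictions, not experimental outputs.
true_gender = ["F", "F", "M", "M", "M"]
pred_gender = ["F", "M", "M", "M", "F"]
acc = accuracy(pred_gender, true_gender)
cm = confusion_matrix(pred_gender, true_gender, classes=["F", "M"])
mae = mean_absolute_error([24.0, 30.0, 50.0], [20.0, 35.0, 50.0])
```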

7. Inference (production service)

    def infer(audio_path, corpus, task):
        wav = load_wav(audio_path)
        wav = preprocess(wav, fs_in=DATASETS[corpus].fs_in, fs_proc=DATASETS[corpus].fs_proc)
        img, mfcc = extract_features(wav, corpus)
        model = load_best_model(corpus, task)
        x = img if model.is_CNN else mfcc
        output = model(x)
        return output

8. Hardware & Software Requirements

- GPU: NVIDIA RTX 3070 Ti 8 GB
- CPU: Ryzen 7 3700X, 32 GB RAM
- Framework: PyTorch ≥ 1.13
- Typical time, Stage 1 (subset): ≈ 2–3 h per architecture
- Typical time, Stage 2 (full): ≈ 6–8 h per model/dataset

9. Final Notes

- Use Mel spectrograms for CNN and MFCC for LSTM; coefficient counts depend on the corpus.
- Tune silence threshold q between 0.05 and 0.1 by observing PSD.
- Enable checkpointing and early-stopping to avoid overfitting.
- Keep the provided seeds and splits to exactly reproduce the paper results.

References

1. Corkrey, R.; Parkinson, L. Interactive voice response: Review of studies 1989–2000. Behav. Res. Methods Instrum. Comput. 2002, 34, 342–353.
2. Jaid, U.H.; Hassan, A.K.A. Review of Automatic Speaker Profiling: Features, Methods, and Challenges. Iraqi J. Sci. 2023, 6548–6571.
3. Humayun, M.A.; Shuja, J.; Abas, P.E. Speaker Profiling Based on the Short-Term Acoustic Features of Vowels. Technologies 2023, 11, 119.
4. Vásquez-Correa, J.C.; Álvarez Muniain, A. Novel speech recognition systems applied to forensics within child exploitation: Wav2vec2.0 vs. Whisper. Sensors 2023, 23, 1843.
5. Kalluri, S.B.; Vijayasenan, D.; Ganapathy, S. Automatic speaker profiling from short duration speech data. Speech Commun. 2020, 121, 16–28.
6. Schuller, B.W.; Steidl, S.; Batliner, A.; Marschik, P.B.; Baumeister, H.; Dong, F.; Zafeiriou, S. The INTERSPEECH 2018 computational paralinguistics challenge: Atypical & self-assessed affect, crying & heart beats. In Proceedings of the 19th Annual Conference of the International Speech Communication Association, INTERSPEECH 2018, Hyderabad, India, 2–6 September 2018; International Speech Communication Association: Brussels, Belgium, 2018.
7. Lee, Y.; Kreiman, J. Acoustic voice variation in spontaneous speech. J. Acoust. Soc. Am. 2022, 151, 3462–3472.
8. Al-Maashani, T.; Mendonça, I.; Aritsugi, M. Age classification based on voice using Mel-spectrogram and MFCC. In Proceedings of the 2023 24th International Conference on Digital Signal Processing (DSP), Rhodes, Greece, 11–13 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.
9. Cover, T.M.; Hart, P.E. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.
10. Raghavan, U.N.; Albert, R.; Kumara, S. Near linear time algorithm to detect community structures in large-scale networks. Phys. Rev. E 2007, 76, 036106.
11. Sánchez-Hevia, H.A.; Gil-Pita, R.; Utrilla-Manso, M.; Rosa-Zurera, M. Age group classification and gender recognition from speech with temporal convolutional neural networks. Multimed. Tools Appl. 2022, 81, 3535–3552.
12. Kwasny, D.; Hemmerling, D. Gender and age estimation methods based on speech using deep neural networks. Sensors 2021, 21, 4785.
13. Alnuaim, A.A.; Zakariah, M.; Shashidhar, C.; Hatamleh, W.A.; Tarazi, H.; Shukla, P.K.; Ratna, R. Speaker gender recognition based on deep neural networks and ResNet50. Wirel. Commun. Mob. Comput. 2022, 2022, 4444388.
14. Hechmi, K.; Trong, T.N.; Hautamäki, V.; Kinnunen, T. VoxCeleb enrichment for age and gender recognition. In Proceedings of the 2021 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Cartagena, Colombia, 13–17 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 687–693.
15. Tursunov, A.; Mustaqeem; Choeh, J.Y.; Kwon, S. Age and gender recognition using a convolutional neural network with a specially designed multi-attention module through speech spectrograms. Sensors 2021, 21, 5892.
16. Zheng, W.; Yang, P.; Lai, R.; Zhu, K.; Zhang, T.; Zhang, J.; Fu, H. Exploring Multi-task Learning Based Gender Recognition and Age Estimation for Class-imbalanced Data. In Proceedings of the 23rd INTERSPEECH, Incheon, Republic of Korea, 18–22 September 2022; pp. 1983–1987.
17. Nowakowski, A.; Kasprzak, W. Automatic speaker's age classification in the Common Voice database. In Proceedings of the 2023 18th Conference on Computer Science and Intelligence Systems (FedCSIS), Warsaw, Poland, 17–20 September 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1087–1091.
18. Haluška, R.; Popovič, M.; Pleva, M.; Frohman, M. Detection of Gender and Age Category from Speech. In Proceedings of the 2023 World Symposium on Digital Intelligence for Systems and Machines (DISA), Košice, Slovakia, 21–22 September 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 72–77.
19. Yücesoy, E. Speaker age and gender recognition using 1D and 2D convolutional neural networks. Neural Comput. Appl. 2024, 36, 3065–3075.
20. Yücesoy, E. Automatic Age and Gender Recognition Using Ensemble Learning. Appl. Sci. 2024, 14, 6868.
21. Jahangir, R.; Teh, Y.W.; Nweke, H.F.; Mujtaba, G.; Al-Garadi, M.A.; Ali, I. Speaker identification through artificial intelligence techniques: A comprehensive review and research challenges. Expert Syst. Appl. 2021, 171, 114591.
22. Rabiner, L.R.; Schafer, R.W. Introduction to Digital Speech Processing; Now Publishers Inc.: Norwell, MA, USA, 2007; Volume 1, pp. 1–194.
23. Kacur, J.; Puterka, B.; Pavlovicova, J.; Oravec, M. Frequency, Time, Representation and Modeling Aspects for Major Speech and Audio Processing Applications. Sensors 2022, 22, 6304.
24. Tun, P.T.Z.; Swe, K.T. Audio signal filtering with low-pass and high-pass filters. Int. J. All Res. Writ. 2020, 2, 1–4.
25. MacCallum, J.K.; Olszewski, A.E.; Zhang, Y.; Jiang, J.J. Effects of low-pass filtering on acoustic analysis of voice. J. Voice 2011, 25, 15–20.
26. Meng, H.; Yan, T.; Yuan, F.; Wei, H. Speech emotion recognition from 3D log-mel spectrograms with deep learning network. IEEE Access 2019, 7, 125868–125881.
27. Ittichaichareon, C.; Suksri, S.; Yingthawornsuk, T. Speech recognition using MFCC. In Proceedings of the International Conference on Computer Graphics, Simulation and Modeling, Pattaya, Thailand, 28–29 July 2012; Volume 9, p. 2012.
28. Bhandari, B. Comparative study of popular deep learning models for machining roughness classification using sound and force signals. Micromachines 2021, 12, 1484.
29. Xu, H.T.; Zhang, J.; Dai, L.R. Differential Time-frequency Log-mel Spectrogram Features for Vision Transformer Based Infant Cry Recognition. Proc. Interspeech 2022, 2022, 1963–1967.
30. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015.
31. Mukherjee, K.; Khare, A.; Verma, A. A simple dynamic learning-rate tuning algorithm for automated training of DNNs. arXiv 2019, arXiv:1910.11605.
32. Prechelt, L. Early stopping - but when? In Neural Networks: Tricks of the Trade; Orr, G.B., Müller, K.-R., Eds.; Springer: Berlin/Heidelberg, Germany, 1998; pp. 55–69.
33. Krogh, A.; Hertz, J.A. A simple weight decay can improve generalization. In Proceedings of the 4th Conference on Neural Information Processing Systems (NIPS 1991), Denver, CO, USA, 2–5 December 1991; pp. 950–957.
34. Srivastava, N.; Hinton, G.E.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.
35. Shim, J.W. Enhancing cross entropy with a linearly adaptive loss function for optimized classification performance. Sci. Rep. 2024, 14, 27405.
36. Hodson, T.O. Root-mean-square error (RMSE) or mean absolute error (MAE): When to use them or not. Geosci. Model Dev. 2022, 15, 5481–5487.
37. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2009, Miami, FL, USA, 20–25 June 2009; pp. 248–255.
38. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.
39. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
40. Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning (ICML) 2019, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.
41. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.
42. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.
43. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.
44. Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.
45. Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? Adv. Neural Inf. Process. Syst. 2014, 27, 3320–3328.
46. Nagrani, A.; Chung, J.S.; Zisserman, A. VoxCeleb: A large-scale speaker identification dataset. Proc. Interspeech 2017, 2017, 2616–2620.
47. Ardila, R.; Branson, M.; Davis, K.; Henretty, M.; Kohler, M.; Meyer, J.; Morais, R.; Saunders, L.; Tyers, F.M.; Weber, G. Common Voice: A massively-multilingual speech corpus. In Proceedings of the Twelfth Language Resources and Evaluation Conference, Marseille, France, 20–25 June 2020; pp. 4218–4222.
48. Zue, V.; Seneff, S.; Glass, J. Speech database development at MIT: TIMIT and beyond. Speech Commun. 1990, 9, 351–356.
49. Mitsui, K.; Sawada, K. MSR-NV: Neural Vocoder Using Multiple Sampling Rates. In Proceedings of the INTERSPEECH 2022, Incheon, Republic of Korea, 18–22 September 2022; pp. 798–802.

| Dataset | Number of Mel Coefficients | Number of MFCC Coefficients |
|---|---|---|
| VoxCeleb1 | 224 | 40 |
| Mozilla Common Voice | 128 | 13 |
| TIMIT | 64 | 13 |

| Category | Feature | VoxCeleb1 | Mozilla Common Voice | TIMIT |
|---|---|---|---|---|
| Size and Composition | Number of recordings (F/M *) | 65,369/79,896 | 218,267/536,857 | 390/1170 |
| | Number of speakers (F/M) | 563/688 | 2953/10,107 | 192/438 |
| Audio Duration (s) | Min/Mean/Max | 4.0/8.2/145 | 0.072/4.87/79.89 | 0.92/3.07/7.79 |
| Technical Characteristics | Original sampling rate | 16 kHz | 44.1 kHz | 16 kHz |
| | Language | English | English | English |
| | Native/Non-native speakers | 1113/138 | 365,190/389,934 | 630/0 |
| Metadata and Labels | Labels | Gender | Gender and age groups | Gender and age |
| | Unique characteristics | Data collected in-the-wild | High accent variability and large dataset | Widely used in academic publications |

| Model | Accuracy (%) |
|---|---|
| MobileNet | 98.86 |
| ResNet50 | 97.73 |
| VGG16 | 97.30 |

| Hidden Units | Layers | Input Units | Accuracy (%) |
|---|---|---|---|
| 256 | 2 | 40 | 97.50 |
| 512 | 3 | 40 | 97.68 |
| 256 | 3 | 40 | 97.52 |

| Model | Classification Task | Accuracy (%) |
|---|---|---|
| ResNet18 | gender | 98.90 |
| ResNet50 | gender | 98.85 |
| EfficientNet | gender | 99.82 |
| ResNet18 | age | 99.11 |
| ResNet50 | age | 99.86 |
| EfficientNet | age | 99.37 |

| Hidden Units | Layers | Input Units | Accuracy for Age (%) | Accuracy for Gender (%) |
|---|---|---|---|---|
| 256 | 2 | 40 | 93.63 | 98.55 |
| 256 | 3 | 40 | 94.78 | 98.64 |
| 512 | 3 | 40 | 97.67 | 98.81 |

| Model | MAE for Age (Years) | Accuracy (%) |
|---|---|---|
| MobileNet | 5.35 | 99.23 |
| AlexNet | 8.89 | 98.97 |
| ResNet50 | 8.42 | 99.23 |

| Hidden Units | Layers | Input Units | MAE for Age (Years) | Accuracy for Gender (%) |
|---|---|---|---|---|
| 128 | 2 | 64 | 5.47 | 98.65 |
| 256 | 3 | 64 | 5.48 | 98.46 |
| 512 | 2 | 64 | 5.45 | 98.27 |

| Dataset | Gender Classification | Age Range Classification | Age Estimation |
|---|---|---|---|
| VoxCeleb1 | MobileNet (98.86) | - | - |
| MCV | EfficientNet (99.82) | ResNet50 (99.86) | - |
| TIMIT | LSTM 2 × 128 (98.65) | - | MobileNet (5.35) |

| Dataset | Metric | Baseline | Proposed | Δ (Proposed − Baseline) |
|---|---|---|---|---|
| VoxCeleb1 | Gender Acc (%) | 98.29 [14] | 98.86 ✓ | +0.57 |
| MCV | Gender Acc (%) | 98.57 [13] | 99.82 ✓ | +1.25 |
| MCV | Age Acc (%) | 97.00 [8] | 99.86 ✓ | +2.86 |
| TIMIT | MAE (male, years) | 5.12 [12] | 5.35 ✓ | +0.23 (worse) |
| TIMIT | MAE (female, years) | 5.269 [12] | 5.44 ✓ | +0.15 (worse) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jorrin-Coz, J.; Nakano, M.; Perez-Meana, H.; Hernandez-Gonzalez, L. Multi-Corpus Benchmarking of CNN and LSTM Models for Speaker Gender and Age Profiling. Computation 2025, 13, 177. https://doi.org/10.3390/computation13080177
