Application of AI in Date Fruit Detection—Performance Analysis of YOLO and Faster R-CNN Models

Abstract

1. Introduction

2. Materials and Methods

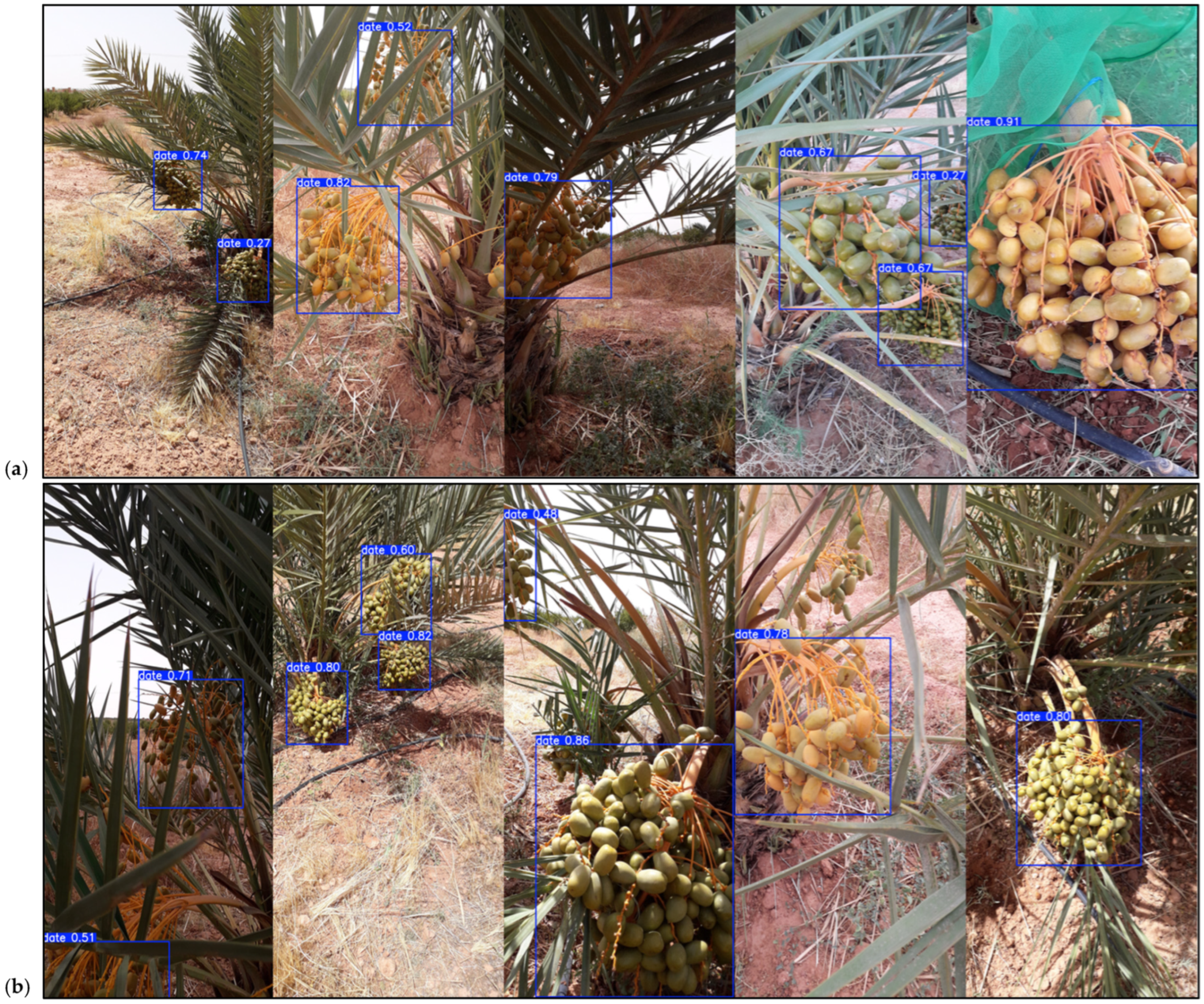

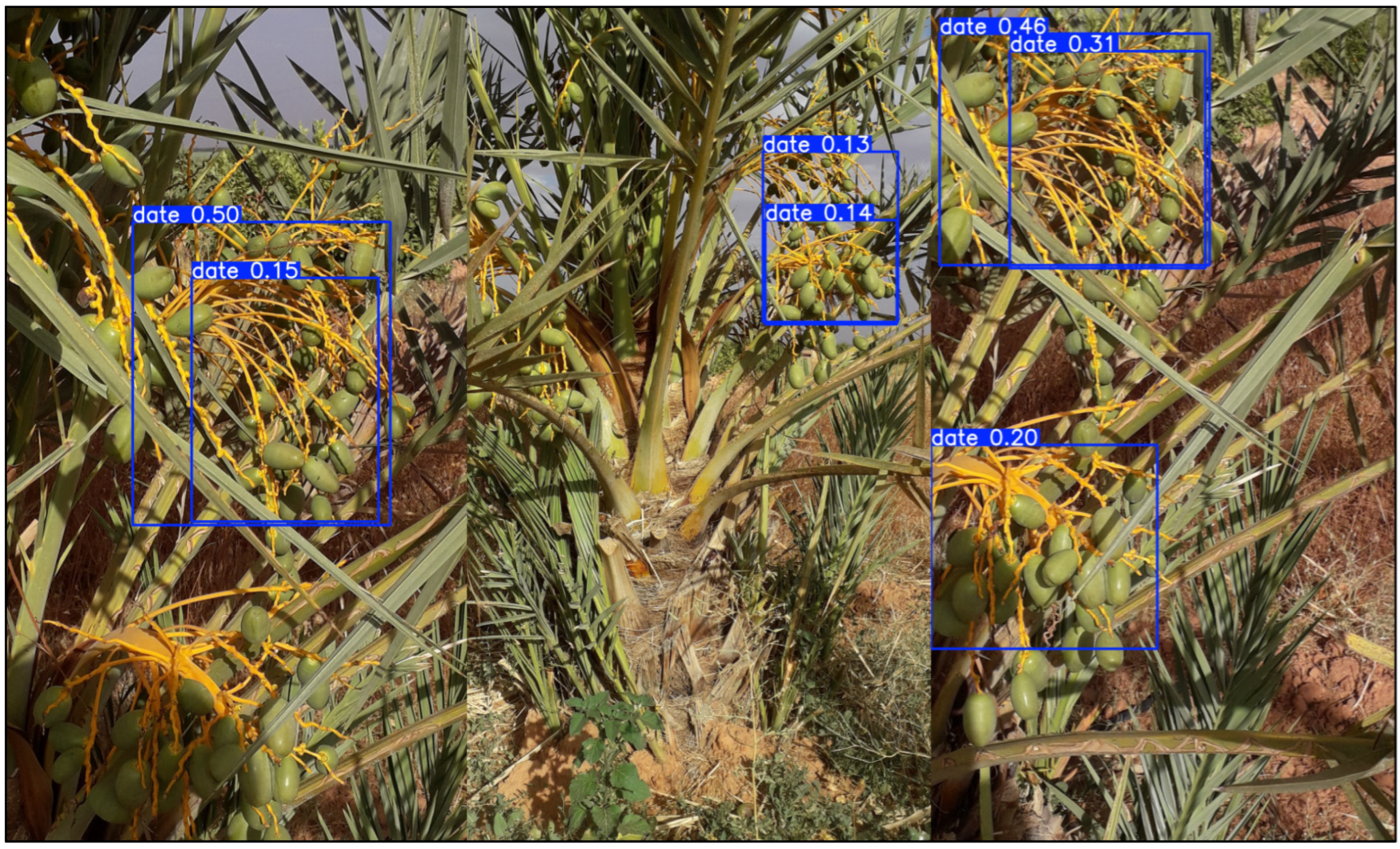

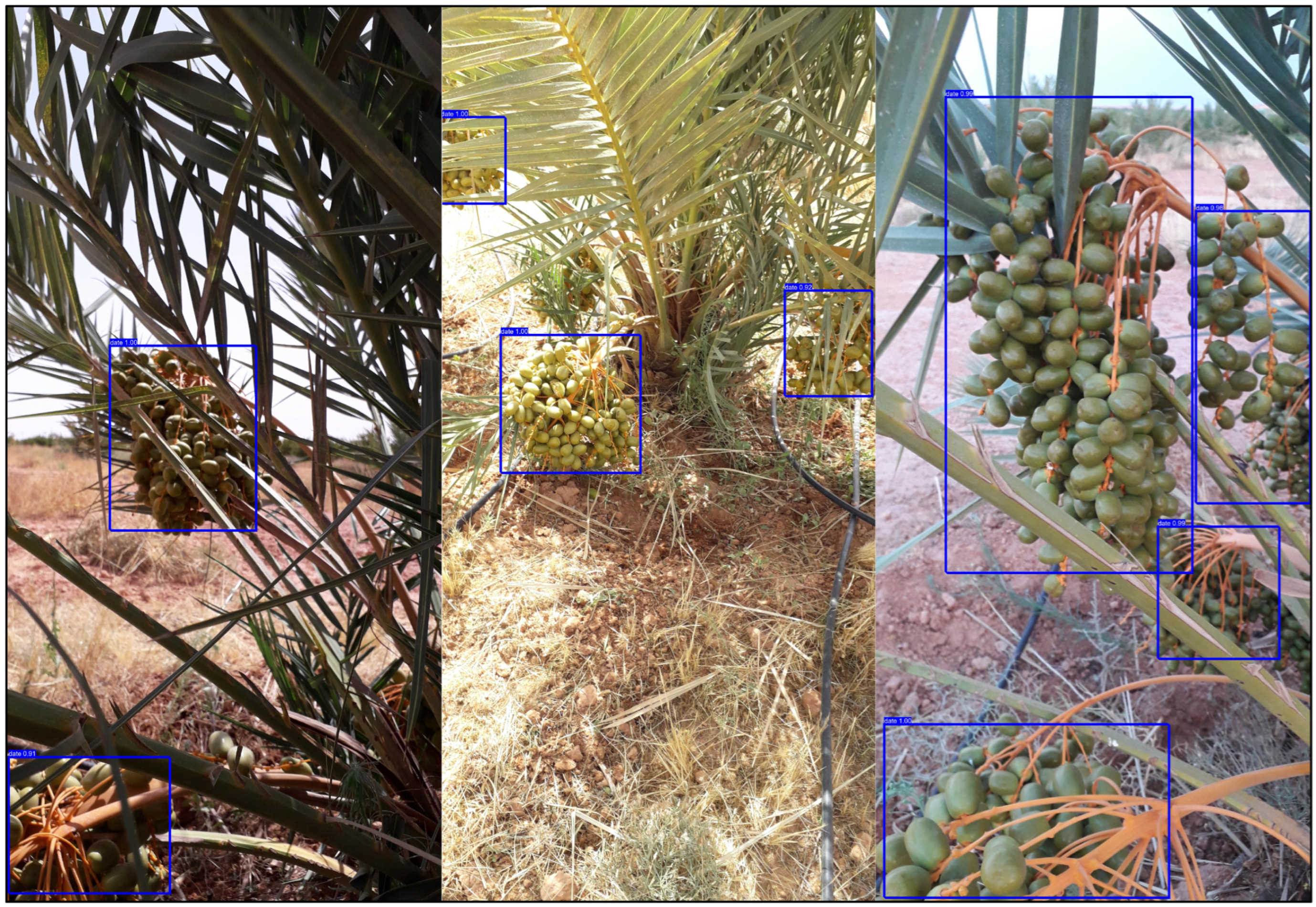

2.1. Data Source and Characteristics

- Boufagous: Immature—913, Khalal—563, Rutab—1675, Tamar—1174;

- Majhoul: Immature—304, Khalal—573, Rutab—1345, Tamar—423;

- Bouisthami: Immature—95, Khalal—137, Rutab—46, Tamar—82;

- Kholt: Immature—140, Khalal—414, Rutab—649, Tamar—559.
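The per-cultivar counts above can be aggregated to show how balanced the dataset is. The sketch below only re-tabulates the listed numbers. It treats each figure as a count of labelled samples, which is an assumption about the unit. The dictionary layout and helper names are illustrative.

```python
# Counts copied from the list above: cultivar -> ripening stage -> count.
# The unit (images vs. annotated fruits) is an assumption, not stated here.
COUNTS = {
    "Boufagous": {"Immature": 913, "Khalal": 563, "Rutab": 1675, "Tamar": 1174},
    "Majhoul": {"Immature": 304, "Khalal": 573, "Rutab": 1345, "Tamar": 423},
    "Bouisthami": {"Immature": 95, "Khalal": 137, "Rutab": 46, "Tamar": 82},
    "Kholt": {"Immature": 140, "Khalal": 414, "Rutab": 649, "Tamar": 559},
}


def totals_by_cultivar(counts):
    """Sum the four ripening stages for each cultivar."""
    return {cultivar: sum(stages.values()) for cultivar, stages in counts.items()}


def totals_by_stage(counts):
    """Sum each ripening stage across all cultivars."""
    result = {}
    for stages in counts.values():
        for stage, n in stages.items():
            result[stage] = result.get(stage, 0) + n
    return result


def imbalance_ratio(totals):
    """Largest group divided by smallest group; 1.0 means perfectly balanced."""
    return max(totals.values()) / min(totals.values())


if __name__ == "__main__":
    by_cultivar = totals_by_cultivar(COUNTS)
    grand_total = sum(by_cultivar.values())
    for name, n in by_cultivar.items():
        print(f"{name:<11} {n:>5} ({100 * n / grand_total:.1f}%)")
    print("Per stage:", totals_by_stage(COUNTS))
    print(f"Cultivar imbalance ratio: {imbalance_ratio(by_cultivar):.1f}")
```

For these figures, the sum is 9,092 samples. Boufagous contributes about 48% and Bouisthami about 4%, which is roughly a 12:1 imbalance across cultivars. Across stages, Rutab (3,715) outnumbers Immature (1,452) by about 2.6:1. Both imbalances are worth keeping in mind when splitting the data and reading per-class results.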

2.2. Hardware Specification

- Processor: AMD Ryzen 7 3800X with 8 cores and a base clock speed of 3.9 GHz;

- Graphics Card: NVIDIA GeForce RTX 2060;

- RAM: 16 GB;

- Storage: WDC WD10EZEX-00WN4A0 (1 TB HDD).

2.3. Development Environment and Libraries

- PyTorch 2.6—used for implementing and training deep learning models, including Faster R-CNN;

- YOLOv8n—used for object detection; this nano variant of the YOLOv8 architecture is designed for efficient inference on devices with limited computational resources. That makes it well suited to real-time applications on mobile, IoT, or GPU-less systems, where speed and efficiency take priority over maximum accuracy;

- scikit-learn—applied for model evaluation;

- pycocotools—used for handling COCO-format datasets and evaluating Faster R-CNN performance;

- Matplotlib 3.10.0 and Pandas 2.2.3—employed for data visualization and analysis;

- Pillow—used for image preprocessing and manipulation.
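This toolchain combines two annotation conventions. YOLOv8 reads normalised, centre-based label files, while pycocotools works with COCO-format boxes given as corner plus size in pixels. Annotations therefore usually have to be converted between the two. The helpers below are a hypothetical sketch of those conversions, and their names are illustrative rather than part of either library. A corner-format variant is also included because torchvision's detection models expect `[x_min, y_min, x_max, y_max]` boxes. This assumes Faster R-CNN is built with torchvision, which this section does not state.

```python
"""Convert bounding boxes between the YOLO, COCO and corner (xyxy) conventions.

YOLO label files : class x_center y_center width height (normalised to [0, 1])
COCO annotations : [x_min, y_min, width, height] in pixels
Corner format    : [x_min, y_min, x_max, y_max] in pixels
"""


def yolo_to_coco(box, img_w, img_h):
    """Normalised (xc, yc, w, h) -> pixel [x_min, y_min, w, h]."""
    xc, yc, w, h = box
    pw, ph = w * img_w, h * img_h
    return [xc * img_w - pw / 2, yc * img_h - ph / 2, pw, ph]


def coco_to_yolo(box, img_w, img_h):
    """Pixel [x_min, y_min, w, h] -> normalised (xc, yc, w, h)."""
    x, y, w, h = box
    return [(x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h]


def coco_to_xyxy(box):
    """Pixel [x_min, y_min, w, h] -> [x_min, y_min, x_max, y_max]."""
    x, y, w, h = box
    return [x, y, x + w, y + h]


def parse_yolo_line(line):
    """Split one line of a YOLO .txt label file into (class_id, box)."""
    parts = line.split()
    return int(parts[0]), [float(v) for v in parts[1:5]]


if __name__ == "__main__":
    cls, box = parse_yolo_line("0 0.5 0.25 0.1 0.2")
    coco = yolo_to_coco(box, img_w=640, img_h=480)
    print(cls, coco, coco_to_xyxy(coco))
```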

2.4. Preparation of Training, Validation, and Test Data

2.5. Used Metrics
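Object detectors are commonly compared using average precision (AP), which is the area under a class's precision-recall curve. The sketch below computes all-point interpolated AP in the PASCAL VOC (2010 onwards) style from ranked detections. It assumes every detection has already been labelled as a true or false positive by IoU matching against the ground truth. This section does not say which exact AP variant the authors report.

```python
"""Average precision (AP) for one class from ranked detections.

Each detection is (confidence, is_true_positive); the true/false-positive flag
is assumed to come from a prior IoU match against ground-truth boxes.
"""


def average_precision(detections, num_ground_truth):
    """All-point interpolated AP (PASCAL VOC 2010+ style)."""
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Interpolate: precision at each rank becomes the best precision at any
    # equal or higher recall, making the curve non-increasing.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


if __name__ == "__main__":
    dets = [(0.9, True), (0.8, False), (0.7, True)]
    print(average_precision(dets, num_ground_truth=2))
```

mAP@0.5 averages this quantity over classes at an IoU threshold of 0.5. The COCO evaluation implemented in pycocotools differs in two ways. It samples precision at 101 recall points instead of using all points, and its headline mAP also averages over IoU thresholds from 0.50 to 0.95 in steps of 0.05.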

3. AI Models Description

3.1. YOLO

- high inference speed;

- efficient use of computational resources;

- good performance on large and well-separated objects.

Its performance is weaker on:

- small, densely clustered objects;

- heavily occluded objects;

- fine-grained distinctions between similar classes.
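Post-processing is one mechanism behind the difficulty with densely clustered fruit. YOLOv8, like Faster R-CNN, removes duplicate predictions with non-maximum suppression (NMS). Greedy NMS cannot distinguish a duplicate box from the box of a neighbouring fruit that overlaps it strongly. The sketch below is a plain-Python illustration of standard greedy NMS, not the Ultralytics implementation, and the box coordinates are made up.

```python
"""Greedy non-maximum suppression (NMS) on [x_min, y_min, x_max, y_max] boxes."""


def iou(a, b):
    """Intersection over union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard every remaining box that overlaps the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


if __name__ == "__main__":
    # Two adjacent fruits in one bunch (IoU about 0.54) and one isolated fruit.
    a, b, c = [0, 0, 10, 10], [3, 0, 13, 10], [30, 30, 40, 40]
    print(nms([a, b, c], [0.9, 0.8, 0.7], iou_threshold=0.5))  # second fruit lost
    print(nms([a, b, c], [0.9, 0.8, 0.7], iou_threshold=0.6))  # all kept
```

Raising the IoU threshold keeps more adjacent fruit, but it also lets more genuine duplicates survive. The right setting therefore depends on how tightly the fruits are packed in the images.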

3.2. Faster R-CNN

- high detection accuracy, especially on small and occluded objects;

- flexibility in backbone selection and hyperparameter tuning;

- high performance on complex datasets.
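Faster R-CNN's first stage, the region proposal network (RPN), scores a fixed set of reference boxes called anchors that are tiled over the backbone feature map. The original formulation used three scales (128, 256 and 512 pixels) and three aspect ratios (1:2, 1:1, 2:1), giving nine anchors per location. Anchor sizes are one of the tunable hyperparameters behind the flexibility noted above. The sketch below generates such anchors in plain Python for illustration only. The exact centre offset and default sizes differ between implementations; torchvision's FPN variant, for example, assigns one anchor size per pyramid level.

```python
"""Anchor boxes for a Faster R-CNN-style region proposal network (RPN)."""
import itertools
import math


def anchors_at(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return [x_min, y_min, x_max, y_max] anchors centred on (cx, cy).

    `scale` is the square root of the anchor area; `ratio` is height / width.
    """
    boxes = []
    for s, r in itertools.product(scales, ratios):
        w = s / math.sqrt(r)
        h = s * math.sqrt(r)
        boxes.append([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2])
    return boxes


def grid_anchors(feat_w, feat_h, stride, **kwargs):
    """Anchors for every cell of a feat_w x feat_h feature map.

    Anchors are centred on each cell here; implementations differ in this offset.
    """
    out = []
    for j in range(feat_h):
        for i in range(feat_w):
            cx, cy = (i + 0.5) * stride, (j + 0.5) * stride
            out.extend(anchors_at(cx, cy, **kwargs))
    return out


if __name__ == "__main__":
    print(len(grid_anchors(4, 3, stride=16)))  # 4 * 3 cells * 9 anchors
```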

3.3. Justification for Comparative Study

- Identify the strengths and limitations of each model in the context of date fruit detection;

- Determine the most suitable model for specific agricultural use cases (e.g., real-time harvesting vs. post-harvest analysis);

- Provide insights into how model selection impacts overall system performance in real-world orchard environments.

4. Results

4.1. YOLO

- epochs—20 full passes through the training dataset;

- batch size—16 images per iteration;

- optimizer—AdamW, with a learning rate of 0.002 and momentum of 0.9 (for Adam-type optimizers, the momentum term corresponds to the first-moment coefficient β1);

- resolution—input images were resized to 256 × 256 pixels.
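The hyperparameters above map directly onto the Ultralytics training interface. The sketch below assumes training was done with the `ultralytics` package, which this section does not state. It also uses a hypothetical `dates.yaml` dataset file. It mirrors the listed values and is not the authors' script.

```python
"""Training configuration mirroring the YOLOv8n hyperparameters listed above.

Assumptions: YOLOv8 is provided by the Ultralytics package, and
"dates.yaml" is a hypothetical dataset description file.
"""

TRAIN_ARGS = {
    "data": "dates.yaml",  # hypothetical dataset YAML (paths + class names)
    "epochs": 20,          # full passes through the training set
    "batch": 16,           # images per iteration
    "imgsz": 256,          # input resolution
    "optimizer": "AdamW",  # set explicitly; "auto" would override lr0/momentum
    "lr0": 0.002,          # initial learning rate
    "momentum": 0.9,       # applied as AdamW's beta1 for Adam-type optimizers
}


def train(weights="yolov8n.pt", **overrides):
    """Launch training; Ultralytics is imported lazily so this module loads without it."""
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(**{**TRAIN_ARGS, **overrides})
```

The optimizer name is passed explicitly because Ultralytics' default, `optimizer="auto"`, chooses its own optimizer, learning rate and momentum. With that default, the `lr0` and `momentum` values would be ignored.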

4.2. Faster R-CNN

5. Discussion

Study Limitations

6. Summary and Conclusions

6.1. Advantages and Limitations of the Proposed Approaches

6.2. Potential Practical Applications

6.3. Future Development Directions

- Expanding the dataset to include, e.g., additional date cultivars and ripening stages, to enhance model robustness;

- Introducing multi-class detection (e.g., by fruit type or maturity level) for more detailed classification;

- Increasing input image resolution to improve detection accuracy (while carefully managing loss function behavior—particularly in YOLOv8).
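Multi-class detection by maturity level mainly changes the class list in the dataset description. The sketch below builds an Ultralytics-style dataset YAML from the four ripening stages listed in Section 2.1. It also shows a combined cultivar-plus-stage scheme. The directory layout is hypothetical, and the YAML is written by hand to avoid a PyYAML dependency.

```python
"""Class lists and an Ultralytics-style dataset YAML for multi-class detection."""

STAGES = ["Immature", "Khalal", "Rutab", "Tamar"]
CULTIVARS = ["Boufagous", "Majhoul", "Bouisthami", "Kholt"]


def combined_classes(cultivars, stages):
    """One class per (cultivar, stage) pair, e.g. 'Majhoul_Rutab'."""
    return [f"{c}_{s}" for c in cultivars for s in stages]


def class_ids(classes):
    """Map class name -> integer id (the first column of YOLO label files)."""
    return {name: i for i, name in enumerate(classes)}


def dataset_yaml(root, classes, train="images/train", val="images/val", test="images/test"):
    """Render the dataset description; the sub-directory names are hypothetical."""
    lines = [f"path: {root}", f"train: {train}", f"val: {val}", f"test: {test}", "names:"]
    lines += [f"  {i}: {name}" for i, name in enumerate(classes)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(dataset_yaml("datasets/dates", STAGES))
    print(len(combined_classes(CULTIVARS, STAGES)), "combined classes")
```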

6.4. Additional Observations

- GPU acceleration (e.g., using an RTX 2060) significantly reduced training time—by approximately an order of magnitude compared to CPU-only training;

- Higher input image resolution positively impacted model performance, improving detection accuracy and reliability, but only to a certain extent.
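Simple arithmetic shows why resolution helps small fruit only up to a point. YOLOv8's finest detection head operates at stride 8, so an object that shrinks to about 8 pixels after resizing covers roughly a single cell of that feature map. The sketch assumes aspect-preserving resizing of the longer image side to `imgsz`. The frame size and fruit size used are hypothetical.

```python
"""Pixel footprint of a fruit after resizing, and the cost of larger inputs."""


def resized_size(obj_px, src_long_side, imgsz):
    """Object size in pixels after scaling the image's long side to imgsz."""
    return obj_px * imgsz / src_long_side


def cells_spanned(obj_px, stride):
    """Feature-map cells an object of obj_px pixels covers at a given stride."""
    return obj_px / stride


def pixel_ratio(imgsz_a, imgsz_b):
    """How many times more pixels a square imgsz_a input has than imgsz_b."""
    return (imgsz_a / imgsz_b) ** 2


if __name__ == "__main__":
    src_long_side, fruit_px = 1920, 60  # hypothetical frame width and fruit size
    for imgsz in (256, 640, 1280):
        px = resized_size(fruit_px, src_long_side, imgsz)
        print(f"imgsz={imgsz}: ~{px:.1f} px, {cells_spanned(px, 8):.2f} cells at stride 8, "
              f"{pixel_ratio(imgsz, 256):.1f}x the pixels of 256")
```

With these assumed sizes, the fruit grows from 8 to 40 pixels between 256 and 1280, while the input grows to 25 times as many pixels. Once objects span several cells, further increases add compute much faster than they add detail.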

6.5. Summary

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Criterion | YOLO | Faster R-CNN |
|---|---|---|
| Inference speed | High: well suited for near real-time detection in date palm orchards | Lower: less suited for time-sensitive applications |
| Accuracy in simple scenes | Good performance under clear visibility and limited occlusion | High accuracy even in well-structured scenes |
| Performance in challenging conditions | May face limitations with small or partially occluded fruits (e.g., hidden by palm leaves) | More robust in detecting fruits under occlusion or varied lighting |
| Computational requirements | Relatively low: can be deployed on mobile or edge devices | High: requires more processing power and memory |
| Ease of training | Easier to implement and optimize; faster training times | More complex training process with additional tuning steps |
| Suitability for field use | Appropriate for real-time monitoring in palm groves and mobile robotics | Better suited for offline analysis and quality control tasks |
| Recommended use cases | Rapid fruit localization in open-field monitoring or autonomous systems | Detailed detection and assessment in research or post-harvest scenarios |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lipiński, S.; Sadkowski, S.; Chwietczuk, P. Application of AI in Date Fruit Detection—Performance Analysis of YOLO and Faster R-CNN Models. Computation 2025, 13, 149. https://doi.org/10.3390/computation13060149
